How to Add Oracle ZFS Storage Appliance Directories and Projects to a Cluster

Before You Begin

Perform the steps in this procedure only if the directory or project is meant to be protected by cluster fencing, which restricts nodes that leave the cluster to read-only access.

Your cluster is operating.

The Oracle ZFS Storage Appliance NAS device is properly configured.

See Requirements, Recommendations, and Restrictions for Oracle ZFS Storage Appliance NAS Devices for the details about required device configuration.

You have added the device to the cluster by performing the steps in How to Install an Oracle ZFS Storage Appliance in a Cluster.

The procedure relies on the following assumptions:

An NFS file system or directory from the Oracle ZFS Storage Appliance is already created in a project, which is itself in one of the storage pools of the device. For a directory (for example, an NFS file system) to be used by the cluster, you must perform the configuration at the project level, as described below.

This procedure provides the long forms of the Oracle Solaris Cluster commands. Most commands also have short forms. Except for the forms of the command names, the commands are identical.

To perform this procedure, become superuser or assume a role that provides solaris.cluster.read and solaris.cluster.modify RBAC authorization.

- Use the Oracle ZFS Storage Appliance GUI to identify the project associated with the NFS file systems for use by the cluster.

After you have identified the appropriate project, click Edit for that project.

- If read/write access to the project has not been configured, set up read/write access to the project for the cluster nodes.

- Access the NFS properties for the project.

In the Oracle ZFS Storage Appliance GUI, select the Protocols tab in the Edit Project page.

- Set the Share Mode for the project to None or Read only, depending on the desired access rights for nonclustered systems. Set the Share Mode to Read/Write only if the project must be world-writable. This setting is not recommended.

- Add a read/write NFS Exception for each cluster node by performing the following steps.

- Under NFS Exceptions, click +.

- Select Network as the Type.

- As the Entity, enter the public IP address that the cluster node will use to access the appliance. Use a CIDR mask of /32. For example, 192.168.254.254/32.

- Select Read/Write as the Access Mode.

- If desired, select Root Access. Root Access is required when configuring applications such as Oracle RAC or HA Oracle.

- Add exceptions for all cluster nodes.

- Click Apply after the exceptions have been added for all IP addresses.
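When a cluster has many nodes, it can help to prepare the Entity values before entering them in the GUI. The following is a minimal sketch, assuming you already know each node's public IP address. The helper name `nfs_exception_entities` and the addresses are illustrative only and are not part of the appliance or of Oracle Solaris Cluster.

```shell
#!/bin/sh
# Hypothetical helper: print one NFS Exception Entity value (address plus a
# /32 CIDR mask) for each IP address given as an argument.
nfs_exception_entities() {
    for ip in "$@"; do
        printf '%s/32\n' "$ip"
    done
}

# Placeholder addresses; substitute the public IP address of each cluster node.
nfs_exception_entities 10.111.11.111 10.111.11.112
```

Each printed value corresponds to one exception to add with the Network type and the Read/Write access mode.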

- Ensure that the directory being added is set to inherit its NFS properties from its parent project.

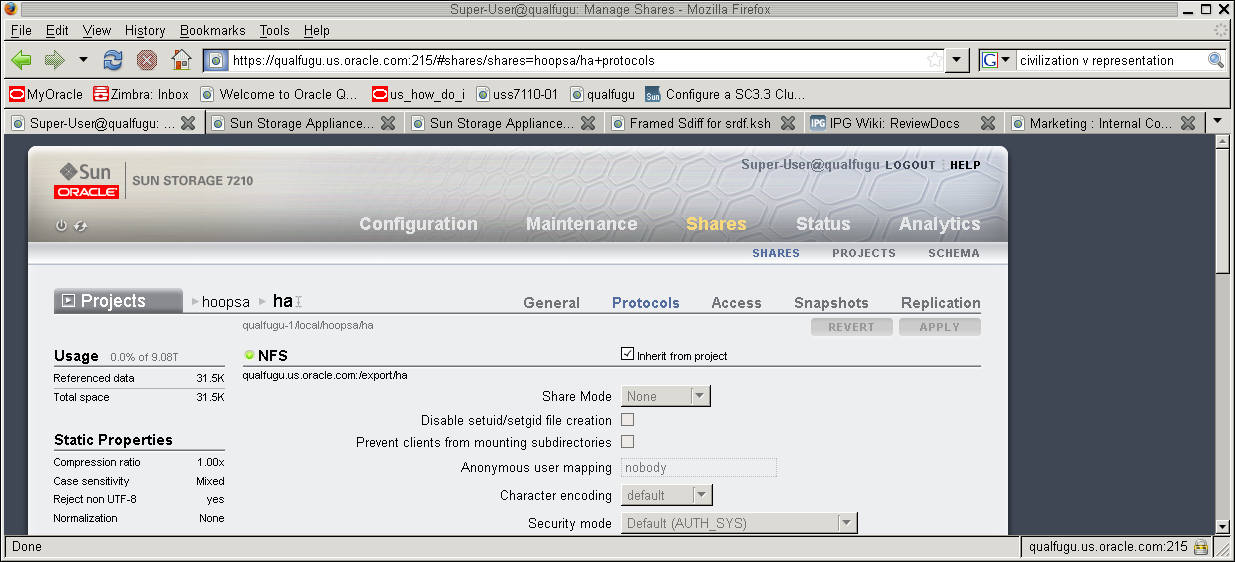

- Navigate to the Shares tab in the Oracle ZFS Storage Appliance GUI.

- Click Edit Entry to the right of the Share that will have fencing enabled.

- Navigate to the Protocols tab for that share, and ensure that the Inherit from project property is set in the NFS section.

If you are adding multiple directories within the same project, verify that each directory that needs to be protected by cluster fencing has the Inherit from project property set.

- If the project has not already been configured with the cluster, add the project to the cluster configuration.

Use the clnasdevice show -v command to determine whether the project has already been configured with the cluster.

    # clnasdevice show -v
    ===NAS Devices===
    Nas Device:        device1.us.example.com
    Type:              sun_uss
    userid:            osc_agent
    nodeIPs{node1}     10.111.11.111
    nodeIPs{node2}     10.111.11.112
    nodeIPs{node3}     10.111.11.113
    nodeIPs{node4}     10.111.11.114
    Project:           pool-0/local/projecta
    Project:           pool-0/local/projectb

- Perform this command from any cluster node:

# clnasdevice add-dir -d project1,project2 myfiler

- -d project1,project2

Enter the project or projects that you are adding.

Specify the full path name of the project, including the pool. For example, pool-0/local/projecta.

- myfiler

Enter the name of the NAS device containing the projects.

For example:

    # clnasdevice add-dir -d pool-0/local/projecta device1.us.example.com
    # clnasdevice add-dir -d pool-0/local/projectb device1.us.example.com

To list the projects on the device that are not yet configured with the cluster, along with their file systems, use the find-dir subcommand. For example:

    # clnasdevice find-dir -v
    === NAS Devices ===
    Nas Device:              device1.us.example.com
    Type:                    sun_uss
    Unconfigured Project:    pool-0/local/projecta
      File System:           /export/projecta/filesystem-1
      File System:           /export/projecta/filesystem-2
    Unconfigured Project:    pool-0/local/projectb
      File System:           /export/projectb/filesystem-1

For more information about the clnasdevice command, see the clnasdevice(1CL) man page.
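The check for an already configured project can be scripted before you run add-dir. The following is a minimal sketch, assuming that the output of `clnasdevice show -v` uses "Project:" lines as in the sample in this step. The helper name `project_is_configured` is hypothetical, and the sample text stands in for live command output.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the project named in $1 appears on a
# "Project:" line of `clnasdevice show -v` output read from standard input.
project_is_configured() {
    awk -v want="$1" '
        $1 == "Project:" && $2 == want { found = 1 }
        END { exit (found ? 0 : 1) }
    '
}

# Sample text in the format shown in this procedure, used here in place of
# live command output.
sample_output='Nas Device:   device1.us.example.com
Type:         sun_uss
Project:      pool-0/local/projecta
Project:      pool-0/local/projectb'

if printf '%s\n' "$sample_output" | project_is_configured pool-0/local/projecta; then
    echo "pool-0/local/projecta is already configured"
else
    echo "pool-0/local/projecta still needs add-dir"
fi
```

On a cluster node, you would pipe the real command output into the helper instead, for example `clnasdevice show -v | project_is_configured pool-0/local/projecta`. Lines that begin with "Unconfigured Project:" do not match, because the helper compares only the first field.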

- If you want to add the project from an Oracle ZFS Storage Appliance to a zone cluster but you need to issue the command from the global zone, use the clnasdevice command with the -Z option:

# clnasdevice add-dir -d project1,project2 -Z zcname myfiler

- zcname

Enter the name of the zone cluster where the NAS projects are being added.
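The two forms of the add-dir command differ only in the -Z option. The following is a minimal sketch of a dry-run helper that prints the command for review instead of running it. The helper name `add_dir_command` and the zone cluster name `zc1` are hypothetical; the command syntax is the one shown in this step.

```shell
#!/bin/sh
# Hypothetical dry-run helper: print (do not run) the add-dir command.
# Arguments: NAS device name, comma-separated project list, and an optional
# zone cluster name for use from the global zone.
add_dir_command() {
    device=$1
    projects=$2
    zcname=${3:-}
    if [ -n "$zcname" ]; then
        printf 'clnasdevice add-dir -d %s -Z %s %s\n' "$projects" "$zcname" "$device"
    else
        printf 'clnasdevice add-dir -d %s %s\n' "$projects" "$device"
    fi
}

# Global cluster form, then zone cluster form ("zc1" is a placeholder name).
add_dir_command device1.us.example.com pool-0/local/projecta
add_dir_command device1.us.example.com pool-0/local/projecta,pool-0/local/projectb zc1
```

Printing the command first lets you confirm the full project path, including the pool, before you change the cluster configuration.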

- Confirm that the directory and project have been configured.

- Perform this command from any cluster node:

# clnasdevice show -v -d all

For example:

    # clnasdevice show -v -d all
    ===NAS Devices===
    Nas Device:        device1.us.example.com
    Type:              sun_uss
    nodeIPs{node1}     10.111.11.111
    nodeIPs{node2}     10.111.11.112
    nodeIPs{node3}     10.111.11.113
    nodeIPs{node4}     10.111.11.114
    userid:            osc_agent
    Project:           pool-0/local/projecta
      File System:     /export/projecta/filesystem-1
      File System:     /export/projecta/filesystem-2
    Project:           pool-0/local/projectb
      File System:     /export/projectb/filesystem-1

- If you want to check the projects for a zone cluster but you need to issue the command from the global zone, use the clnasdevice command with the -Z option:

# clnasdevice show -v -Z zcname

Note - You can also perform zone cluster-related commands inside the zone cluster by omitting the -Z option. For more information about the clnasdevice command, see the clnasdevice(1CL) man page.

After you confirm that a project name is associated with the desired NFS file system, use that project name in the configuration command.
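To confirm which file systems belong to a project, you can filter the verbose output by project name. The following is a minimal sketch, assuming the "Project:" and "File System:" line format shown in the example output of this step. The helper name `filesystems_for_project` is hypothetical, and the sample text stands in for live command output.

```shell
#!/bin/sh
# Hypothetical helper: print the file systems listed under the project named
# in $1, reading `clnasdevice show -v -d all` output from standard input.
filesystems_for_project() {
    awk -v want="$1" '
        $1 == "Nas" && $2 == "Device:"  { current = "" }
        $1 == "Project:"                { current = $2 }
        $1 == "File" && $2 == "System:" && current == want { print $3 }
    '
}

# Sample text in the format shown in this procedure.
sample_output='Nas Device:     device1.us.example.com
Project:        pool-0/local/projecta
  File System:  /export/projecta/filesystem-1
  File System:  /export/projecta/filesystem-2
Project:        pool-0/local/projectb
  File System:  /export/projectb/filesystem-1'

printf '%s\n' "$sample_output" | filesystems_for_project pool-0/local/projecta
```

With the sample text, the helper prints the two file systems under pool-0/local/projecta. On a cluster node, pipe the output of `clnasdevice show -v -d all` into the helper instead.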

- If you do not use the automounter, mount the directories by performing the following steps:

- On each node in the cluster, create a mount-point directory for each Oracle ZFS Storage Appliance NAS project that you added.

# mkdir -p /path-to-mountpoint

- path-to-mountpoint

Name of the directory on which to mount the project.

- On each node in the cluster, add an entry to the /etc/vfstab file for the mount point.

If you are using your Oracle ZFS Storage Appliance NAS device for Oracle RAC or HA Oracle, consult your Oracle database guide or log in to My Oracle Support for a current list of supported files and mount options. After you log in to My Oracle Support, click the Knowledge tab and search for Bulletin 359515.1.

When mounting Oracle ZFS Storage Appliance NAS directories, select the mount options appropriate to your cluster applications. Mount the directories on each node that will access the directories. Oracle Solaris Cluster places no additional restrictions or requirements on the options that you use.
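An /etc/vfstab entry for an NFS file system has seven fields: device to mount, device to fsck, mount point, file system type, fsck pass, mount at boot, and mount options. The following is a minimal sketch that prints such an entry for review. The helper name `vfstab_entry`, the mount point `/mnt/projecta-fs1`, the mount-at-boot value `yes`, and the option `rw` are assumptions for illustration only; choose the options that your applications require.

```shell
#!/bin/sh
# Hypothetical helper: print one /etc/vfstab line for an NFS mount.
# Arguments: NAS device name, exported path, mount point, mount options.
vfstab_entry() {
    # Field order: device to mount, device to fsck, mount point, FS type,
    # fsck pass, mount at boot, mount options.
    # "yes" for mount at boot is an assumption; adjust it for your site.
    printf '%s:%s\t-\t%s\tnfs\t-\tyes\t%s\n' "$1" "$2" "$3" "$4"
}

# /mnt/projecta-fs1 and "rw" are placeholders, not recommended values.
vfstab_entry device1.us.example.com /export/projecta/filesystem-1 /mnt/projecta-fs1 rw
```

The helper only prints the line. Review it, then add it to /etc/vfstab on each node that will access the directory.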

- To enable file system monitoring, configure a resource of type SUNW.ScalMountPoint for the file systems.

For more information, see Configuring Failover and Scalable Data Services on Shared File Systems in Oracle Solaris Cluster Data Services Planning and Administration Guide.
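As a rough illustration of the monitoring step, the following sketch prints a possible command sequence for one file system. It is not taken from this procedure: the property names MountPointDir, FileSystemType, and TargetFileSystem and the command options reflect general Oracle Solaris Cluster usage and should be verified against the referenced guide and the resource type's man page. The helper name and the resource group, resource, and mount point names are hypothetical.

```shell
#!/bin/sh
# Hypothetical dry-run helper: print (do not run) a possible command sequence
# for monitoring one NAS file system with SUNW.ScalMountPoint.
# Arguments: resource group, resource name, mount point, and target file
# system in device:path form. Verify every option before use.
scal_mount_point_commands() {
    rg=$1
    rs=$2
    mountpoint=$3
    target=$4
    cat <<EOF
clresourcetype register SUNW.ScalMountPoint
clresourcegroup create -S $rg
clresource create -t SUNW.ScalMountPoint -g $rg -p MountPointDir=$mountpoint -p FileSystemType=nas -p TargetFileSystem=$target $rs
clresourcegroup online -eM $rg
EOF
}

# All names below are placeholders.
scal_mount_point_commands scal-mp-rg projecta-fs1-rs /mnt/projecta-fs1 \
    device1.us.example.com:/export/projecta/filesystem-1
```

Because the helper only prints text, it is safe to run anywhere. Treat its output as a starting point to compare with the documented procedure, not as a tested configuration.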