14 Developing WebRTC-enabled iOS Applications

This chapter shows how you can use the Oracle Communications WebRTC Session Controller iOS application programming interface (API) library to develop WebRTC-enabled iOS applications.

About the iOS SDK

The WebRTC Session Controller iOS SDK enables you to integrate your iOS applications with core WebRTC Session Controller functions. You can use the iOS SDK to implement the following features:

- Audio calls between an iOS application and any other WebRTC-enabled application, a Session Initiation Protocol (SIP) endpoint, or a Public Switched Telephone Network (PSTN) endpoint using a SIP trunk.
- Video calls between an iOS application and any other WebRTC-enabled application with suitable video conferencing support.
- Seamless upgrading of an audio call to a video call and downgrading of a video call to an audio call.
- Support for Interactive Connectivity Establishment (ICE) server configuration, including support for Trickle ICE.
- Transparent session reconnection following network connectivity interruption.

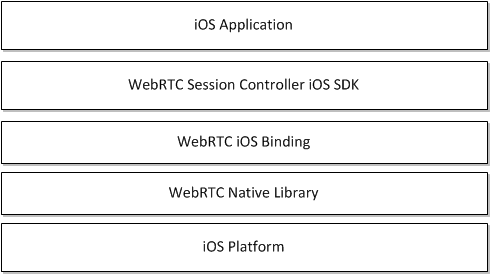

The WebRTC Session Controller iOS SDK is built upon several additional libraries and modules as shown in Figure 14-1.

The WebRTC iOS binding gives the WebRTC Session Controller iOS SDK access to the native WebRTC library, which provides the underlying WebRTC support. The SocketRocket WebSocket library provides the WebSocket access required to communicate with WebRTC Session Controller.

For additional information on any of the APIs used in this document, see Oracle WebRTC Session Controller iOS API Reference.

Supported Architectures

The WebRTC Session Controller iOS SDK supports the following architectures:

- armv7
- armv7s
- arm64
- i386

About the iOS SDK WebRTC Call Workflow

The general workflow for using the WebRTC Session Controller iOS SDK to place a call is:

- Authenticate against WebRTC Session Controller using the WSCHttpContext class. You initialize the WSCHttpContext with the necessary HTTP headers and an optional SSLContextRef as follows:

  - Send an HTTP GET request to the login URI of WebRTC Session Controller.
  - Complete the authentication process based upon your authentication scheme.
  - Proceed with the WebSocket handshake on the established authentication context.

- Establish a WebRTC Session Controller session using the WSCSession class. Two protocols must be implemented:

  - WSCSessionConnectionDelegate: A delegate that reports on the success or failure of the session creation.
  - WSCSessionObserverDelegate: A delegate that signals various session state changes, including CLOSED, CONNECTED, FAILED, and others.

- Once a session is established, obtain the WSCCallPackage object, which manages WSCCall objects in the WSCSession.
- Create a WSCCall using the WSCCallPackage createCall method with a callee ID as its argument, for example, alice@example.com.
- Implement the WSCCallObserver protocol and attach it to the WSCCall to monitor call events such as ACCEPTED, REJECTED, and RECEIVED.
- Create a new WSCCallConfig object to determine the nature of the WebRTC call: audio, video, or both, and whether each is bi-directional or one-directional.
- Configure the local media stream using the call's RTCPeerConnectionFactory, and start the WSCCall using its start method.
- When the call is complete, terminate the call using the WSCCall object's end method.

Prerequisites

Before continuing, make sure you thoroughly review and understand the JavaScript API discussed in the following chapters:

The WebRTC Session Controller iOS SDK is closely aligned in concept and functionality with the JavaScript SDK to ensure a seamless transition.

In addition to an understanding of the WebRTC Session Controller JavaScript API, you are expected to be familiar with:

- Objective-C and general object-oriented programming concepts.
- General iOS SDK programming concepts, including event handling, delegates, and views.
- The functionality and use of XCode.

For an introduction to programming iOS apps using XCode, see . For additional background on all areas of iOS app development, see: https://developer.apple.com/library/ios/referencelibrary/GettingStarted/RoadMapiOS/WhereToGoFromHere.html#/apple_ref/doc/uid/TP40011343-CH12-SW1.

iOS SDK System Requirements

To develop applications with the WebRTC Session Controller iOS SDK, you must meet the following software and hardware requirements:

- An installed and fully configured WebRTC Session Controller instance. See the Oracle Communications WebRTC Session Controller Installation Guide.
- A Macintosh computer capable of running XCode version 5.1 or later.
- A physical iOS device.

While you can test the general flow and function of your iOS WebRTC Session Controller application using the iOS simulator, a physical iOS device such as an iPhone or an iPad is required to fully utilize audio and video functionality.
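Because audio and video cannot be fully exercised in the simulator, it can help to detect a simulator build at compile time and skip camera setup there. The following is a minimal sketch that relies only on the TargetConditionals macros that Foundation pulls in on Apple platforms; the function name ExampleIsSimulatorBuild is hypothetical and not part of either SDK. Newer SDKs define TARGET_OS_SIMULATOR, and older ones define TARGET_IPHONE_SIMULATOR, so the sketch checks both.

```objectivec
#import <Foundation/Foundation.h>

// Hypothetical helper (not part of the WebRTC Session Controller or WebRTC SDKs):
// returns YES when the code is compiled for the iOS simulator.
// Newer SDKs define TARGET_OS_SIMULATOR; older SDKs define TARGET_IPHONE_SIMULATOR.
static BOOL ExampleIsSimulatorBuild(void) {
#if defined(TARGET_OS_SIMULATOR) && TARGET_OS_SIMULATOR
    return YES;
#elif defined(TARGET_IPHONE_SIMULATOR) && TARGET_IPHONE_SIMULATOR
    return YES;
#else
    return NO;
#endif
}
```

For example, an application might skip the video capture setup shown in Example 14-22 when ExampleIsSimulatorBuild() returns YES, while still exercising authentication and session setup.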

About the Examples in This Chapter

The examples and descriptions in this chapter are kept intentionally straightforward in order to illustrate the functionality of the WebRTC Session Controller iOS SDK API without obscuring it with user interface code and other abstractions and indirections. Since it is likely that use cases for production applications will take many forms, the examples assume no pre-existing interface schemas except when absolutely necessary, and then, only with the barest minimum of code. For example, if a particular method requires arguments such as a user name, a code example will show a plain string username such as "bob@example.com" being passed to the method. It is assumed that in a production application, you would interface with the iOS device's contact manager.

Installing the iOS SDK

To install the WebRTC Session Controller iOS SDK, do the following:

- Install XCode from the Apple App Store:

  https://developer.apple.com/library/ios/referencelibrary/GettingStarted/RoadMapiOS/index.html#/apple_ref/doc/uid/TP40011343-CH2-SW1

  Note: The WebRTC Session Controller iOS SDK requires XCode version 5.1 or higher.

- Create a new iOS project using XCode, adding any required targets:

  https://developer.apple.com/library/ios/referencelibrary/GettingStarted/RoadMapiOS/FirstTutorial.html#/apple_ref/doc/uid/TP40011343-CH3-SW1

  Note: iOS version 6 is the minimum required by the WebRTC Session Controller iOS SDK for full functionality.

- Download and extract the WebRTC Session Controller iOS SDK zip file. The archive contains two subfolders, include and lib:

  - The include folder contains two folders, wsc and webrtc, which hold the header files for the WebRTC Session Controller iOS SDK and the WebRTC SDK, respectively.
  - The lib folder contains two folders, debug and release, each of which contains wsc and webrtc folders with the WebRTC Session Controller iOS SDK and WebRTC SDK libraries for debug or release builds, depending upon your stage of development.

- Import the WebRTC Session Controller iOS SDK .a library files:

  - Select your application target in the XCode project navigator.
  - Click Build Phases at the top of the editor pane.
  - Expand Link Binary With Libraries.
  - Drag the .a library files from the lib folders of webrtc and wsc into the expanded panel.

  Note: The webrtc folder contains two sets of library files: one for iOS devices in the ios folder and one for the iOS simulator in the sim folder. Make sure you choose the correct library files for your target. In addition, make sure you include either the debug or the release libraries, as appropriate for your development target.

- Import any other system frameworks you require. The following additional frameworks are recommended:

  - CFNetwork.framework: Zero-configuration networking services. For more information, see https://developer.apple.com/library/ios/documentation/CFNetwork/Reference/CFNetwork_Framework/index.html
  - Security.framework: General interfaces for protecting and controlling security access. For more information, see https://developer.apple.com/library/ios/documentation/Security/Reference/SecurityFrameworkReference/index.html
  - CoreMedia.framework: Low-level data types and interfaces for processing time-based audio and video media. For more information, see https://developer.apple.com/library/mac/documentation/CoreMedia/Reference/CoreMediaFramework/index.html
  - GLKit.framework: A library that facilitates and simplifies creating shader-based iOS applications (useful for video rendering). For more information, see https://developer.apple.com/library/ios/documentation/3DDrawing/Conceptual/OpenGLES_ProgrammingGuide/DrawingWithOpenGLES/DrawingWithOpenGLES.html
  - AVFoundation.framework: A framework that facilitates managing and playing audio and video assets in iOS applications. For more information, see https://developer.apple.com/library/ios/documentation/AudioVideo/Conceptual/AVFoundationPG/Articles/00_Introduction.html#/apple_ref/doc/uid/TP40010188
  - AudioToolbox.framework: A framework containing interfaces for audio playback, recording, and media stream parsing. For more information, see https://developer.apple.com/library/ios/documentation/MusicAudio/Reference/CAAudioTooboxRef/index.html#/apple_ref/doc/uid/TP40002089
  - libicucore.dylib: A Unicode support library. For more information, see http://icu-project.org/apiref/icu4c40/
  - libsqlite3.dylib: A library providing a SQLite interface. For more information, see https://developer.apple.com/technologies/ios/data-management.html

- Import the header files from include/webrtc and include/wsc by dragging the wsc and webrtc folders directly onto your project in the XCode project navigator.
- Click Build Settings at the top of the editor pane.
- Expand Linking and set -ObjC as Other Linker Flags for both Debug and Release. For more information on setting XCode build settings, see https://developer.apple.com/library/ios/recipes/xcode_help-project_editor/Articles/EditingBuildSettings.html
- If you are targeting iOS version 8 or above, add the libstdc++.6.dylib library to prevent linking errors.

Authenticating with WebRTC Session Controller

Use the WSCHttpContext class to set up an authentication context. The authentication context contains the necessary HTTP headers and SSLContext information and is used when setting up a WSCSession.

Initialize a URL Object

Create a new NSURL object using the URL of your WebRTC Session Controller endpoint.
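A minimal sketch of this step, assuming a hypothetical endpoint of https://wsc.example.com:7001/login (substitute the login URI of your own deployment). The helper WSCExampleEndpointURL is illustrative only and not part of the SDK; it rejects strings that do not parse into an http or https URL with a host, so a mistyped endpoint fails early rather than at connection time.

```objectivec
#import <Foundation/Foundation.h>

// Hypothetical helper (not part of the WebRTC Session Controller SDK):
// returns an NSURL for the endpoint, or nil if the string does not parse
// into an http or https URL that has a host.
static NSURL *WSCExampleEndpointURL(NSString *urlString) {
    NSURL *url = [NSURL URLWithString:urlString];
    if (url == nil || url.host.length == 0) {
        return nil;
    }
    NSString *scheme = url.scheme.lowercaseString;
    if (![scheme isEqualToString:@"https"] && ![scheme isEqualToString:@"http"]) {
        return nil;
    }
    return url;
}
```

For example, `NSURL *authUrl = WSCExampleEndpointURL(@"https://wsc.example.com:7001/login");` produces the authUrl value used when building the login request in Example 14-3; check for nil before continuing.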

Configure Authorization Headers if Required

Configure authorization headers as required by your authentication scheme. The following example uses Basic authentication; OAuth and other authentication schemes are configured similarly.

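Example 14-2 Initializing Basic Authentication Headers

```objectivec
NSString *authType = @"Basic ";
NSString *username = @"username";
NSString *password = @"password";

// HTTP Basic authentication sends the "username:password" pair Base64-encoded.
// base64EncodedStringWithOptions: is available in iOS 7 and later.
NSString *credentials = [NSString stringWithFormat:@"%@:%@", username, password];
NSData *credentialData = [credentials dataUsingEncoding:NSUTF8StringEncoding];
NSString *authString = [authType stringByAppendingString:
                           [credentialData base64EncodedStringWithOptions:0]];
```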

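Note:

If you are using Guest authentication, no headers are required.

Connect to the URL

With your authentication parameters configured, you can now connect to the WebRTC Session Controller URL, either synchronously using the NSURLConnection sendSynchronousRequest:returningResponse:error: method, or asynchronously using an NSURLRequest with an NSURLConnection delegate, in which case the error and response are returned in delegate methods. Because sendSynchronousRequest:returningResponse:error: blocks the calling thread until the request completes, avoid calling it on the main thread.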

Example 14-3 Connecting to the WebRTC Session Controller URL

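```objectivec
NSURLResponse *urlResponse = nil;
NSError *error = nil;
// authUrl is the NSURL for your WebRTC Session Controller login URI;
// authString is the Authorization header value from Example 14-2.
NSMutableURLRequest *loginRequest = [NSMutableURLRequest requestWithURL:authUrl];
[loginRequest setValue:authString forHTTPHeaderField:@"Authorization"];
// Blocks the calling thread until the request completes.
[NSURLConnection sendSynchronousRequest:loginRequest returningResponse:&urlResponse error:&error];
NSHTTPURLResponse *response = (NSHTTPURLResponse *)urlResponse;
```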

Configure the SSL Context if Required

If you are using Secure Sockets Layer (SSL) and the URL connection succeeded, configure the SSL context using the SSLCreateContext function. For more information on SSLCreateContext, see https://developer.apple.com/library/mac/documentation/Security/Reference/secureTransportRef/index.html#/apple_ref/c/func/SSLCreateContext.

Example 14-4 Configuring the SSLContext

```objectivec
if (error) {
    // Handle an error...
    NSLog(@"The following error occurred: %@", error.description);
} else {
    // Create the httpContext builder...
    WSCHttpContextBuilder *builder = [WSCHttpContextBuilder create];
    // Configure the SSLContext if required (requires the Security framework)...
    SSLContextRef sslContext = SSLCreateContext(NULL, kSSLClientSide, kSSLStreamType);
    // Copy the SSLContext configuration to the httpContext builder...
    [builder withSSLContextRef:&sslContext];
    // ...
}
```

Retrieve the Response Headers from the Request

If the authentication request succeeds, retrieve the response headers from the URL response and copy the cookies to the httpContext builder.

Example 14-5 Retrieving the Response Headers from the URL Request

```objectivec
if (error) {
    // Handle an error...
    NSLog(@"The following error occurred: %@", error.description);
} else {
    // Create the httpContext builder...
    WSCHttpContextBuilder *builder = [WSCHttpContextBuilder create];
    // Configure the SSLContext if required, from Example 14-4...
    SSLContextRef sslContext = SSLCreateContext(NULL, kSSLClientSide, kSSLStreamType);
    [builder withSSLContextRef:&sslContext];
    // Retrieve all the response headers...
    NSDictionary *respHeaders = [response allHeaderFields];
    // Convert the Set-Cookie response headers into Cookie request headers
    // and copy them to the httpContext builder...
    NSArray *cookies = [NSHTTPCookie cookiesWithResponseHeaderFields:respHeaders forURL:authUrl];
    NSDictionary *cookieHeaders = [NSHTTPCookie requestHeaderFieldsWithCookies:cookies];
    for (NSString *key in cookieHeaders) {
        [builder withHeader:key value:cookieHeaders[key]];
    }
    // ...
}
```

Build the HTTP Context

If the authentication request succeeds, build the WSCHttpContext using WSCHttpContextBuilder.

Example 14-6 Building the HttpContext

```objectivec
if (error) {
    // Handle an error...
    NSLog(@"The following error occurred: %@", error.description);
} else {
    WSCHttpContextBuilder *builder = [WSCHttpContextBuilder create];
    // Configure the SSLContext if required, from Example 14-4...
    SSLContextRef sslContext = SSLCreateContext(NULL, kSSLClientSide, kSSLStreamType);
    [builder withSSLContextRef:&sslContext];
    // Copy the cookies from the response headers, from Example 14-5...
    // Build the httpContext...
    WSCHttpContext *httpContext = [builder build];
    // ...
}
```

Configure Interactive Connectivity Establishment (ICE)

If you have access to one or more STUN/TURN ICE servers, you can configure them using the WSCIceServer and WSCIceServerConfig classes. For details on ICE, see "Managing Interactive Connectivity Establishment Interval."

Example 14-7 Configuring the WSCIceServer Class

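```objectivec
WSCIceServer *iceServer1 = [[WSCIceServer alloc] initWithUrl:@"stun:stun-server:port"];
// TURN server with credentials; see the iOS API Reference for the exact initializer signature.
WSCIceServer *iceServer2 = [[WSCIceServer alloc] initWithUrl:@"turn:turn-server:port", @"admin", @"password"];
// The list of ICE servers is nil-terminated.
WSCIceServerConfig *iceServerConfig = [[WSCIceServerConfig alloc] initWithIceServers:iceServer1, iceServer2, nil];
```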
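The server strings passed to initWithUrl: take the form scheme:host:port. The following is a minimal sketch of building them, assuming only the stun, stuns, turn, and turns schemes (defined in RFC 7064 and RFC 7065) are wanted; WSCExampleIceURI is a hypothetical helper, not part of the SDK, and the host names are placeholders. Port 3478 is the default STUN/TURN port, and 5349 is the default for the TLS variants.

```objectivec
#import <Foundation/Foundation.h>

// Hypothetical helper (not part of the WebRTC Session Controller SDK):
// builds an ICE server URI such as "stun:stun.example.com:3478".
// Returns nil for unsupported schemes, an empty host, or an out-of-range port.
static NSString *WSCExampleIceURI(NSString *scheme, NSString *host, NSUInteger port) {
    NSSet *allowed = [NSSet setWithObjects:@"stun", @"stuns", @"turn", @"turns", nil];
    if (![allowed containsObject:scheme] || host.length == 0 || port == 0 || port > 65535) {
        return nil;
    }
    return [NSString stringWithFormat:@"%@:%@:%lu", scheme, host, (unsigned long)port];
}
```

For example, `[[WSCIceServer alloc] initWithUrl:WSCExampleIceURI(@"stun", @"stun.example.com", 3478)]` creates the STUN entry; TURN credentials are still supplied separately, as in Example 14-7.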

Creating a WebRTC Session Controller Session

Once you have configured your authentication method and connected to your WebRTC Session Controller endpoint, you can instantiate a WebRTC Session Controller session object.

Implement the WSCSessionConnectionDelegate Protocol

You must implement the WSCSessionConnectionDelegate protocol to handle the results of your session creation request. The WSCSessionConnectionDelegate protocol has two event handlers:

- onSuccess: Triggered upon a successful session creation.
- onFailure: Triggered when session creation fails; receives a WSCStatusCode describing the failure.

Example 14-8 Implementing the WSCSessionConnectionDelegate Protocol

```objectivec
#pragma mark WSCSessionConnectionDelegate

-(void)onSuccess {
    NSLog(@"WebRTC Session Controller session connected.");
    NSLog(@"Connection succeeded. Continuing...");
}

-(void)onFailure:(enum WSCStatusCode)code {
    switch (code) {
        case WSCStatusCodeUnauthorized:
            NSLog(@"Unable to connect. Please check your credentials.");
            break;
        case WSCStatusCodeResourceUnavailable:
            NSLog(@"Unable to connect. Please check the URL.");
            break;
        default:
            // Handle other cases as required...
            break;
    }
}
```

Implement the WSCSession Connection Observer Protocol

Implement the WSCSessionConnectionObserver protocol to monitor and respond to changes in session state.

Example 14-9 Implementing the WSCSessionConnectionObserver Protocol

```objectivec
#pragma mark WSCSessionConnectionObserver

-(void)stateChanged:(WSCSessionState)sessionState {
    switch (sessionState) {
        case WSCSessionStateConnected:
            NSLog(@"Session is connected.");
            break;
        case WSCSessionStateReconnecting:
            NSLog(@"Session is attempting reconnection.");
            break;
        case WSCSessionStateFailed:
            NSLog(@"Session connection attempt failed.");
            break;
        case WSCSessionStateClosed:
            NSLog(@"Session connection has been closed.");
            break;
        default:
            break;
    }
}
```

Build the Session Object and Open the Session Connection

With the connection delegate and connection observer configured, you now build a WebRTC Session Controller session and open a connection with the server.

Example 14-10 Building the Session Object and Opening the Session Connection

```objectivec
if (error) {
    // Handle an error...
    NSLog(@"The following error occurred: %@", error.description);
} else {
    // Configure the SSLContext if required, from Example 14-4...
    // Retrieve all the response headers, from Example 14-5...
    // Build the httpContext, from Example 14-6...
    NSString *userName = @"username";
    // self implements both the connection delegate and the session observer protocols.
    self.wscSession = [[[[[[[[WSCSessionBuilder create:urlString]
                            withConnectionDelegate:self]
                           withUserName:userName]
                          withObserverDelegate:self]
                         withPackage:[[WSCCallPackage alloc] init]]
                        withHttpContext:httpContext]
                       withIceServerConfig:iceServerConfig]
                      build];
    // Open a connection to the server...
    [self.wscSession open];
}
```

In Example 14-10, note that the withPackage method registers a new WSCCallPackage with the session; you later retrieve this package from the session to create voice or video calls.

Configure Additional WSCSession Properties

You can configure additional properties when creating a session using the WSCSessionBuilder withProperty method.

For a complete list of properties see the Oracle Communications WebRTC Session Controller iOS SDK API Reference.

Example 14-11 Configuring WSCSession Properties

```objectivec
if (error) {
    // Handle an error...
    NSLog(@"The following error occurred: %@", error.description);
} else {
    // Configure the SSLContext, response headers, and httpContext,
    // from Examples 14-4, 14-5, and 14-6...
    self.wscSession = [[[[WSCSessionBuilder create:urlString]
                         // ...other builder methods from Example 14-10...
                         withProperty:WSC_PROP_IDLE_PING_INTERVAL value:[NSNumber numberWithInt:20]]
                        withProperty:WSC_PROP_RECONNECT_INTERVAL value:[NSNumber numberWithInt:10000]]
                       build];
    [self.wscSession open];
}
```

Adding WebRTC Voice Support to your iOS Application

This section describes how you can add WebRTC voice support to your iOS application.

Initialize the CallPackage Object

When you created your session, you registered a new WSCCallPackage object using the WSCSessionBuilder withPackage method. You now retrieve that WSCCallPackage from the session.

Example 14-12 Initializing the CallPackage

```objectivec
WSCCallPackage *callPackage = (WSCCallPackage *)[wscSession getPackage:PACKAGE_TYPE_CALL];
```

Note:

Use the default PACKAGE_TYPE_CALL call type unless you have defined a custom call type.

Place a WebRTC Voice Call from Your iOS Application

Once you have configured your authentication scheme, and created a Session, you can place voice calls from your iOS application.

Add the Audio Capture Device to Your Session

To stream audio from your iOS device, initialize a capture session and add an audio capture device to it.

Example 14-13 Adding an Audio Capture Device to Your Session

```objectivec
// Requires #import <AVFoundation/AVFoundation.h>
- (instancetype)initAudioDevice
{
    self = [super init];
    if (self) {
        self.captureSession = [[AVCaptureSession alloc] init];
        [self.captureSession setSessionPreset:AVCaptureSessionPresetLow];

        // Get the audio capture device and add it to our session.
        self.audioCaptureDevice = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeAudio];
        NSError *error = nil;
        AVCaptureDeviceInput *audioInput = [AVCaptureDeviceInput
            deviceInputWithDevice:self.audioCaptureDevice error:&error];
        if (audioInput) {
            [self.captureSession addInput:audioInput];
        } else {
            NSLog(@"Unable to find audio capture device : %@", error.description);
        }
    }
    return self;
}
```

Initialize the Call Object

With the WSCCallPackage object retrieved, initialize a WSCCall object using the WSCCallPackage createCall method, passing the callee's ID as an argument.

Configure Trickle ICE

To improve ICE candidate gathering performance, you can choose to enable Trickle ICE in your application using the WSCCall object's setTrickleIceMode method. For more information see "Enabling Trickle ICE to Improve Application Performance."

Create a CallObserverDelegate Protocol

Implement the CallObserverDelegate protocol so you can respond to the following WSCCall events:

- callUpdated: Triggered on incoming and outgoing call update requests.
- mediaStateChanged: Triggered on changes to the WSCCall media state.
- stateChanged: Triggered on changes to the WSCCall state.

Example 14-16 Creating a CallObserverDelegate Protocol

```objectivec
#pragma mark CallObserverDelegate

-(void)callUpdated:(WSCCallUpdateEvent)event callConfig:(WSCCallConfig *)callConfig cause:(WSCCause *)cause {
    NSLog(@"callUpdate request with config: %@", callConfig.description);
    switch (event) {
        case WSCCallUpdateEventSent:
            break;
        case WSCCallUpdateEventReceived:
            NSLog(@"Call Update event received for config: %@", callConfig.description);
            break;
        case WSCCallUpdateEventAccepted:
            NSLog(@"Call Update accepted for config: %@", callConfig.description);
            break;
        case WSCCallUpdateEventRejected:
            NSLog(@"Call Update event rejected for config: %@", callConfig.description);
            break;
        default:
            break;
    }
}

-(void)mediaStateChanged:(WSCMediaStreamEvent)mediaStreamEvent mediaStream:(RTCMediaStream *)mediaStream {
    NSLog(@"mediaStateChanged : %ld", (long)mediaStreamEvent);
}

-(void)stateChanged:(WSCCallState)callState cause:(WSCCause *)cause {
    NSLog(@"Call State changed : %ld", (long)callState);
    switch (callState) {
        case WSCCallStateNone:
            NSLog(@"stateChanged: %@", @"WSC_CS_NONE");
            break;
        case WSCCallStateStarted:
            NSLog(@"stateChanged: %@", @"WSC_CS_STARTED");
            break;
        case WSCCallStateResponded:
            NSLog(@"stateChanged: %@", @"WSC_CS_RESPONDED");
            break;
        case WSCCallStateEstablished:
            NSLog(@"stateChanged: %@", @"WSC_CS_ESTABLISHED");
            break;
        case WSCCallStateFailed:
            NSLog(@"stateChanged: %@", @"WSC_CS_FAILED");
            break;
        case WSCCallStateRejected:
            NSLog(@"stateChanged: %@", @"WSC_CS_REJECTED");
            break;
        case WSCCallStateEnded:
            NSLog(@"stateChanged: %@", @"WSC_CS_ENDED");
            break;
        default:
            break;
    }
}
```

Register the CallObserverDelegate Protocol with the Call Object

Register the object that implements the CallObserverDelegate protocol with the WSCCall object.

Create a WSCCallConfig Object

Create a WSCCallConfig object to determine the type of call you wish to make. The WSCCallConfig constructor takes two parameters, audioMediaDirection and videoMediaDirection. The first parameter sets the direction of the audio media, and the second sets the direction of the video media.

The values for audioMediaDirection and videoMediaDirection parameters are:

- WSCMediaDirectionNone: No direction; media support disabled.
- WSCMediaDirectionRecvOnly: The media stream is receive only.
- WSCMediaDirectionSendOnly: The media stream is send only.
- WSCMediaDirectionSendRecv: The media stream is bi-directional.

Example 14-18 shows the configuration for a bi-directional, audio-only call.
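The media directions also determine which local tracks you need: a local track is only required when the direction includes sending, and remote media is only expected when it includes receiving. The sketch below illustrates this reasoning with a local enum that mirrors the four values above; ExampleMediaDirection and its helper functions are hypothetical stand-ins so the code compiles without the SDK, not WSCMediaDirection itself.

```objectivec
#import <Foundation/Foundation.h>

// Hypothetical mirror of the four media directions described above,
// defined locally so the sketch compiles without the SDK headers.
typedef NS_ENUM(NSInteger, ExampleMediaDirection) {
    ExampleMediaDirectionNone,
    ExampleMediaDirectionRecvOnly,
    ExampleMediaDirectionSendOnly,
    ExampleMediaDirectionSendRecv
};

// A local track is only needed when this side sends media.
static BOOL ExampleNeedsLocalTrack(ExampleMediaDirection direction) {
    return direction == ExampleMediaDirectionSendOnly ||
           direction == ExampleMediaDirectionSendRecv;
}

// Remote media is only expected when this side receives media.
static BOOL ExampleExpectsRemoteMedia(ExampleMediaDirection direction) {
    return direction == ExampleMediaDirectionRecvOnly ||
           direction == ExampleMediaDirectionSendRecv;
}
```

For a bi-directional, audio-only call (audio SendRecv, video None), ExampleNeedsLocalTrack yields YES for audio and NO for video; values like these can serve as the hasAudio and hasVideo arguments of a function such as getLocalMediaStreams in Example 14-26.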

Configure the Local MediaStream for Audio

With the WSCCallConfig object created, you then configure the local audio MediaStream using the WebRTC PeerConnectionFactory. For information on the WebRTC SDK API, see http://www.webrtc.org/reference/native-apis.

Example 14-19 Configuring the Local MediaStream for Audio

```objectivec
RTCPeerConnectionFactory *pcf = [call getPeerConnectionFactory];
RTCMediaStream *localMediaStream = [pcf mediaStreamWithLabel:@"ARDAMS"];
[localMediaStream addAudioTrack:[pcf audioTrackWithID:@"ARDAMSa0"]];
NSArray *streamArray = [[NSArray alloc] initWithObjects:localMediaStream, nil];
```

Receiving a WebRTC Voice Call in Your iOS Application

This section describes configuring your iOS application to receive WebRTC voice calls.

Create a CallPackageObserverDelegate

To be notified of an incoming call, create a CallPackageObserverDelegate and attach it to your WSCCallPackage.

Example 14-21 Creating a CallPackageObserver Delegate

```objectivec
#pragma mark CallPackageObserverDelegate

-(void)callArrived:(WSCCall *)call
        callConfig:(WSCCallConfig *)callConfig
        extHeaders:(NSDictionary *)extHeaders {
    NSLog(@"Registering a CallObserverDelegate...");
    // self implements the CallObserverDelegate protocol.
    [call setObserverDelegate:self];

    NSLog(@"Configuring the media streams...");
    RTCPeerConnectionFactory *pcf = [call getPeerConnectionFactory];
    RTCMediaStream *localMediaStream = [pcf mediaStreamWithLabel:@"ARDAMS"];
    [localMediaStream addAudioTrack:[pcf audioTrackWithID:@"ARDAMSa0"]];

    if (answerTheCall) {
        NSLog(@"Answering the call...");
        [call accept:self.callConfig streams:localMediaStream];
    } else {
        NSLog(@"Declining the call...");
        [call decline:WSCStatusCodeBusyHere];
    }
}
```

In Example 14-21, the callArrived event handler processes an incoming call request:

- The method registers a CallObserverDelegate for the incoming call. In this case, it uses the same CallObserverDelegate as the example in "Create a CallObserverDelegate Protocol."
- The method then configures the local media stream in the same manner as "Configure the Local MediaStream for Audio."
- The method accepts or declines the call, depending on the value of the answerTheCall boolean, using the WSCCall object's accept or decline method.

Note:

The answerTheCall boolean will most likely be set by a user interface element in your application, such as a button or link.

Adding WebRTC Video Support to your iOS Application

This section describes how you can add WebRTC video support to your iOS application. Although the methods are nearly identical to those used for voice calls, additional preparation is required.

Add the Audio and Video Capture Devices to Your Session

As with an audio call, you initialize the audio capture device as shown in "Add the Audio Capture Device to Your Session." In addition, you initialize the video capture device and add it to your session.

Example 14-22 Adding the Audio and Video Capture Devices to Your Session

```objectivec
// Requires #import <AVFoundation/AVFoundation.h>
- (instancetype)initAudioVideo
{
    self = [super init];
    if (self) {
        self.captureSession = [[AVCaptureSession alloc] init];
        [self.captureSession setSessionPreset:AVCaptureSessionPresetLow];

        // Get the audio capture device and add it to our session.
        self.audioCaptureDevice = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeAudio];
        NSError *error = nil;
        AVCaptureDeviceInput *audioInput = [AVCaptureDeviceInput
            deviceInputWithDevice:self.audioCaptureDevice error:&error];
        if (audioInput) {
            [self.captureSession addInput:audioInput];
        } else {
            NSLog(@"Unable to find audio capture device : %@", error.description);
        }

        // Get the video capture devices; add the front camera to our session
        // and keep a reference to the back camera.
        for (AVCaptureDevice *videoCaptureDevice in [AVCaptureDevice
                 devicesWithMediaType:AVMediaTypeVideo]) {
            if (videoCaptureDevice.position == AVCaptureDevicePositionFront) {
                self.frontVideCaptureDevice = videoCaptureDevice;
                AVCaptureDeviceInput *videoInput = [AVCaptureDeviceInput
                    deviceInputWithDevice:videoCaptureDevice error:&error];
                if (videoInput) {
                    [self.captureSession addInput:videoInput];
                } else {
                    NSLog(@"Unable to get front camera input : %@", error.description);
                }
            } else if (videoCaptureDevice.position == AVCaptureDevicePositionBack) {
                self.backVideCaptureDevice = videoCaptureDevice;
            }
        }
    }
    return self;
}
```

Configure a View Controller and a View Display Incoming Video

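You add a view object to a view controller to display the incoming video. In Example 14-23, the view property of the MyWebRTCApplicationViewController view controller is nil when the view controller is created; when the view is first accessed, UIKit calls the loadView method, which creates videoView and assigns it to the view property.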

Example 14-23 Creating a View to Display the Video Stream

```objectivec
@implementation MyWebRTCApplicationViewController

- (void)loadView {
    // Create the view, videoView...
    CGRect frame = [UIScreen mainScreen].bounds;
    MyWebRTCApplicationView *videoView = [[MyWebRTCApplicationView alloc] initWithFrame:frame];
    // Set videoView as the main view of the view controller...
    self.view = videoView;
}

@end
```

Next, set the view controller as the window's rootViewController. This adds videoView as a subview of the window and automatically resizes videoView to the size of the window.

Example 14-24 Setting the Root View Controller

```objectivec
#import "MyWebRTCApplicationAppDelegate.h"
#import "MyWebRTCApplicationViewController.h"

@implementation MyWebRTCApplicationAppDelegate

- (BOOL)application:(UIApplication *)application didFinishLaunchingWithOptions:(NSDictionary *)launchOptions {
    self.window = [[UIWindow alloc] initWithFrame:[[UIScreen mainScreen] bounds]];
    MyWebRTCApplicationViewController *myvc = [[MyWebRTCApplicationViewController alloc] init];
    self.window.rootViewController = myvc;
    self.window.backgroundColor = [UIColor grayColor];
    // Show the window; without this call it is never displayed.
    [self.window makeKeyAndVisible];
    return YES;
}

@end
```

Placing a WebRTC Video Call from Your iOS Application

To place a video call from your iOS application, complete the coding tasks contained in the following sections:

- Configure Interactive Connectivity Establishment (ICE) (if required)

In addition, complete the coding tasks for an audio call contained in the following sections:

Note:

Audio and video call workflows are identical with the exception of media directions, local media stream configuration, and the additional considerations described earlier in this section.

Create a WSCCallConfig Object

You create a WSCCallConfig object as described in "Create a WSCCallConfig Object," in the audio call section, setting both arguments to WSCMediaDirectionSendRecv.

Configure the Local WSCMediaStream for Audio and Video

With the WSCCallConfig object created, you then configure the local video and audio MediaStream objects using the WebRTC PeerConnectionFactory. In Example 14-26, the PeerConnectionFactory first creates a video source and track from the front camera using optional constraints and mandatory constraints (as defined in the getMandatoryConstraints method); the video track is then added to localMediaStream using its addVideoTrack method. Two boolean arguments, hasAudio and hasVideo, let the calling function specify whether audio or video streams are supported in the current call. The audio track is added as well, and localMediaStream is returned to the calling function.

For information on the WebRTC PeerConnectionFactory and mandatory and optional constraints, see http://www.webrtc.org/reference/native-apis.

Example 14-26 Configuring the Local MediaStream for Audio and Video

-(RTCMediaStream *)getLocalMediaStreams:(RTCPeerConnectionFactory *)pcf

enableAudio:(BOOL)hasAudio enableVideo:(BOOL)hasVideo {

NSLog(@"Getting local media streams");

if (!localMediaStream) {

NSLog("PeerConnectionFactory: createLocalMediaStream() with pcf : %@", pcf);

localMediaStream = [pcf mediaStreamWithLabel:@"ALICE"];

NSLog(@"MediaStream1 = %@", localMediaStream);

}

if (hasVideo && (localMediaStream.videoTracks.count <= 0)) {

RTCVideoCapturer *capturer = [RTCVideoCapturer

capturerWithDeviceName:[avManager.frontVideCaptureDevice localizedName]];

// Optional constraint: request DTLS-SRTP key agreement

RTCPair *dtlsSrtpKeyAgreement = [[RTCPair alloc] initWithKey:@"DtlsSrtpKeyAgreement"

value:@"true"];

NSArray *optionalConstraints = @[dtlsSrtpKeyAgreement];

// Mandatory constraints: resolution and frame-rate bounds

NSArray *mandatoryConstraints = [self getMandatoryConstraints];

RTCMediaConstraints *videoConstraints = [[RTCMediaConstraints alloc]

initWithMandatoryConstraints:mandatoryConstraints

optionalConstraints:optionalConstraints];

RTCVideoSource *videoSource = [pcf videoSourceWithCapturer:capturer

constraints:videoConstraints];

RTCVideoTrack *videoTrack = [pcf videoTrackWithID:@"ALICEv0" source:videoSource];

if (videoTrack) {

[localMediaStream addVideoTrack:videoTrack];

}

}

if (localMediaStream.audioTracks.count <= 0 && hasAudio) {

[localMediaStream addAudioTrack:[pcf audioTrackWithID:@"ALICEa0"]];

}

if (!hasVideo && localMediaStream.videoTracks.count > 0) {

// Enumerate a copy so that removing tracks does not mutate the collection being enumerated

for (RTCVideoTrack *videoTrack in [localMediaStream.videoTracks copy]) {

[localMediaStream removeVideoTrack:videoTrack];

}

}

if (!hasAudio && localMediaStream.audioTracks.count > 0) {

// Enumerate a copy so that removing tracks does not mutate the collection being enumerated

for (RTCAudioTrack *audioTrack in [localMediaStream.audioTracks copy]) {

[localMediaStream removeAudioTrack:audioTrack];

}

}

NSLog(@"MediaStream = %@", localMediaStream);

return localMediaStream;

}

-(NSArray *)getMandatoryConstraints {

RTCPair *localVideoMaxWidth = [[RTCPair alloc] initWithKey:@"maxWidth" value:@"640"];

RTCPair *localVideoMinWidth = [[RTCPair alloc] initWithKey:@"minWidth" value:@"192"];

RTCPair *localVideoMaxHeight = [[RTCPair alloc] initWithKey:@"maxHeight" value:@"480"];

RTCPair *localVideoMinHeight = [[RTCPair alloc] initWithKey:@"minHeight" value:@"144"];

RTCPair *localVideoMaxFrameRate = [[RTCPair alloc] initWithKey:@"maxFrameRate" value:@"30"];

RTCPair *localVideoMinFrameRate = [[RTCPair alloc] initWithKey:@"minFrameRate" value:@"5"];

RTCPair *localVideoGoogLeakyBucket = [[RTCPair alloc]

initWithKey:@"googLeakyBucket" value:@"true"];

return @[localVideoMaxHeight,

localVideoMaxWidth,

localVideoMinHeight,

localVideoMinWidth,

localVideoMinFrameRate,

localVideoMaxFrameRate,

localVideoGoogLeakyBucket];

}
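The mandatory constraints returned by getMandatoryConstraints bound the local video to between 192x144 and 640x480 pixels and to between 5 and 30 frames per second. The following minimal sketch, written in plain C (which compiles unchanged in an Objective-C file), shows the inclusive range check these bounds imply for a candidate capture format. The capture_format struct and the satisfies_mandatory_constraints function are hypothetical and are not part of the WebRTC or WebRTC Session Controller APIs; the native WebRTC library applies the real constraints when it creates the video source.

```c
#include <stdbool.h>

/* Hypothetical description of a capture format offered by a camera. */
typedef struct {
    int width;
    int height;
    int frame_rate;
} capture_format;

/* Bounds copied from getMandatoryConstraints in Example 14-26. */
enum {
    MIN_WIDTH = 192,     MAX_WIDTH = 640,
    MIN_HEIGHT = 144,    MAX_HEIGHT = 480,
    MIN_FRAME_RATE = 5,  MAX_FRAME_RATE = 30
};

/* Returns true only when width, height, and frame rate each fall
   within their inclusive [min, max] range. */
static bool satisfies_mandatory_constraints(capture_format f) {
    return f.width >= MIN_WIDTH && f.width <= MAX_WIDTH &&
           f.height >= MIN_HEIGHT && f.height <= MAX_HEIGHT &&
           f.frame_rate >= MIN_FRAME_RATE && f.frame_rate <= MAX_FRAME_RATE;
}
```

For example, a 640x480 format at 30 fps passes every check, while a 1280x720 format is rejected because it exceeds both maxWidth and maxHeight.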

Bind the Video Track to the View Controller

Finally, you bind the video track to the view controller you created in "Configure a View Controller and a View to Display Incoming Video."

Receiving a WebRTC Video Call in Your iOS Application

Receiving a video call is identical to receiving an audio call, as described in "Receiving a WebRTC Voice Call in Your iOS Application." The only difference is the configuration of the WSCMediaStream object, as described in "Configure the Local WSCMediaStream for Audio and Video."

Upgrading and Downgrading Calls

This section describes how to handle upgrading an audio call to an audio and video call, and downgrading a video call to an audio-only call, in your iOS application.

Handle Upgrade and Downgrade Requests from Your Application

To upgrade a voice call to a video call (or to downgrade it) at your application's request, bind a user interface element, such as a button, to an action method that contains the WSCCall update logic, using the addTarget:action:forControlEvents: method:

[requestUpgradeButton addTarget:self action:@selector(videoUpgrade) forControlEvents:UIControlEventTouchUpInside];

[requestDowngradeButton addTarget:self action:@selector(videoDowngrade) forControlEvents:UIControlEventTouchUpInside];

You handle the upgrade or downgrade workflow in the videoUpgrade and videoDowngrade event handlers for each button instance.

Example 14-30 Sending Upgrade/Downgrade Requests from Your Application

- (void)videoUpgrade {
    // Set the criteria for the upgraded call: audio and video
    self.hasVideo = YES;
    self.hasAudio = YES;
    // Fetch local streams using the getLocalMediaStreams method from Example 14-26
    [self getLocalMediaStreams:[self.call getPeerConnectionFactory]
                   enableAudio:self.hasAudio
                   enableVideo:self.hasVideo];
    // Bind the video stream to the view controller as in Example 14-27
    if (localMediaStream.videoTracks.count > 0) {
        [MyWebRTCApplicationViewController localVideoConnected:localMediaStream.videoTracks[0]];
    }
    // Audio -> Video upgrade
    WSCCallConfig *newConfig = [[WSCCallConfig alloc]
        initWithAudioVideoDirection:WSCMediaDirectionSendRecv
                              video:WSCMediaDirectionSendRecv];
    [self.call update:newConfig headers:nil streams:@[localMediaStream]];
}

- (void)videoDowngrade {
    // Set the criteria for the downgraded call: audio only
    self.hasVideo = NO;
    self.hasAudio = YES;
    // Fetch local streams; with hasVideo set to NO, any local video track is removed
    [self getLocalMediaStreams:[self.call getPeerConnectionFactory]
                   enableAudio:self.hasAudio
                   enableVideo:self.hasVideo];
    // Video -> Audio downgrade
    WSCCallConfig *newConfig = [[WSCCallConfig alloc]
        initWithAudioVideoDirection:WSCMediaDirectionSendRecv
                              video:WSCMediaDirectionNone];
    [self.call update:newConfig headers:nil streams:@[localMediaStream]];
}
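The two action methods in Example 14-30 differ only in the video direction they request and in whether a local video track is needed, and the two must agree. The following sketch, in plain C (which compiles unchanged as Objective-C), records that decision as data: each request maps to the directions passed to WSCCallConfig and the hasAudio/hasVideo flags passed to getLocalMediaStreams. The media_direction enum is a hypothetical stand-in for WSCMediaDirection, and update_plan and plan_for are illustrative names, not SDK APIs.

```c
#include <stdbool.h>

/* Hypothetical stand-in for the SDK's WSCMediaDirection values. */
typedef enum {
    DIR_NONE,
    DIR_SEND_ONLY,
    DIR_RECV_ONLY,
    DIR_SEND_RECV
} media_direction;

typedef enum { REQUEST_UPGRADE, REQUEST_DOWNGRADE } update_request;

/* Directions for the new call configuration, plus the local tracks
   that getLocalMediaStreams must provide for that configuration. */
typedef struct {
    media_direction audio;
    media_direction video;
    bool has_audio;
    bool has_video;
} update_plan;

static update_plan plan_for(update_request request) {
    update_plan plan;
    plan.audio = DIR_SEND_RECV;      /* audio is kept in both cases */
    plan.has_audio = true;
    if (request == REQUEST_UPGRADE) {
        plan.video = DIR_SEND_RECV;  /* audio only -> audio and video */
        plan.has_video = true;
    } else {
        plan.video = DIR_NONE;       /* audio and video -> audio only */
        plan.has_video = false;
    }
    return plan;
}
```

Keeping each direction next to its matching flag makes it harder to set them inconsistently, for example requesting WSCMediaDirectionSendRecv video while passing NO for hasVideo.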

Handle Incoming Upgrade Requests

You configure the callUpdated:callConfig:cause: method of your CallObserverDelegate class to handle incoming upgrade requests, which arrive as a WSCCallUpdateEventReceived event.
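The handler in Example 14-31 derives the hasAudio and hasVideo flags from the incoming configuration before it rebuilds the local stream. Because the handler only ever clears these flags, both need a defined starting value. The following sketch, in plain C (which compiles unchanged as Objective-C), isolates that derivation so it can be checked on its own. The media_direction enum is a hypothetical stand-in for WSCMediaDirection, and flags_for_update is an illustrative name, not part of the WebRTC Session Controller iOS SDK.

```c
#include <stdbool.h>

/* Hypothetical stand-in for the SDK's WSCMediaDirection values. */
typedef enum {
    DIR_NONE,
    DIR_SEND_ONLY,
    DIR_RECV_ONLY,
    DIR_SEND_RECV
} media_direction;

typedef struct {
    bool has_audio;
    bool has_video;
} local_media_flags;

/* Mirrors the checks in Example 14-31: each flag starts as true and is
   cleared only when the incoming configuration disables that media type. */
static local_media_flags flags_for_update(media_direction audio,
                                          media_direction video) {
    local_media_flags flags = { true, true };
    if (audio == DIR_NONE) {
        flags.has_audio = false;
    }
    if (video == DIR_NONE) {
        flags.has_video = false;
    }
    return flags;
}
```

For example, an incoming configuration with audio set to send-receive and video set to none yields has_audio true and has_video false, which getLocalMediaStreams turns into an audio-only local stream.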

Note:

The declineUpgrade Boolean must be set by some other part of your application's user interface.

Example 14-31 Handling an Incoming Upgrade Request

- (void)callUpdated:(WSCCallUpdateEvent)event

callConfig:(WSCCallConfig *)updateConfig

cause:(WSCCause *)cause

{

NSLog(@"callUpdate request with config: %@", updateConfig.description);

switch(event){

case WSCCallUpdateEventSent:

break;

case WSCCallUpdateEventReceived:

if(declineUpgrade) {

NSLog(@"Declining upgrade.");

[self.call decline:WSCStatusCodeDeclined];

} else {

NSLog(@"Accepting upgrade.");

NSLog(@"Call config: %@", updateConfig.description);

// Start from YES; the checks below clear a flag only if that media type is disabled

BOOL hasAudio = YES;

BOOL hasVideo = YES;

if (updateConfig.audioConfig == WSCMediaDirectionNone) {

hasAudio = NO;

}

if (updateConfig.videoConfig == WSCMediaDirectionNone) {

hasVideo = NO;

}

self.callConfig = updateConfig;

[self getLocalMediaStreams:[self.call getPeerConnectionFactory] enableAudio:hasAudio

enableVideo:hasVideo];

[self.call accept:updateConfig streams:@[localMediaStream]];

[callViewController updateView:self.callConfig];

}

break;

case WSCCallUpdateEventAccepted:

break;

case WSCCallUpdateEventRejected:

break;

default:

break;

}