Oracle Solaris Cluster System Hardware and Software Components

This information is directed primarily to hardware service providers. These concepts can help service providers understand the relationships between the hardware components before they install, configure, or service cluster hardware. Cluster system administrators might also find this information useful as background to installing, configuring, and administering cluster software.

A cluster is composed of several hardware components, including the following:

-

Cluster nodes with local disks (unshared)

-

Multihost storage (disks/LUNs are shared between cluster nodes)

-

Removable media (tapes and CD-ROMs)

-

Cluster interconnect

-

Public network interfaces

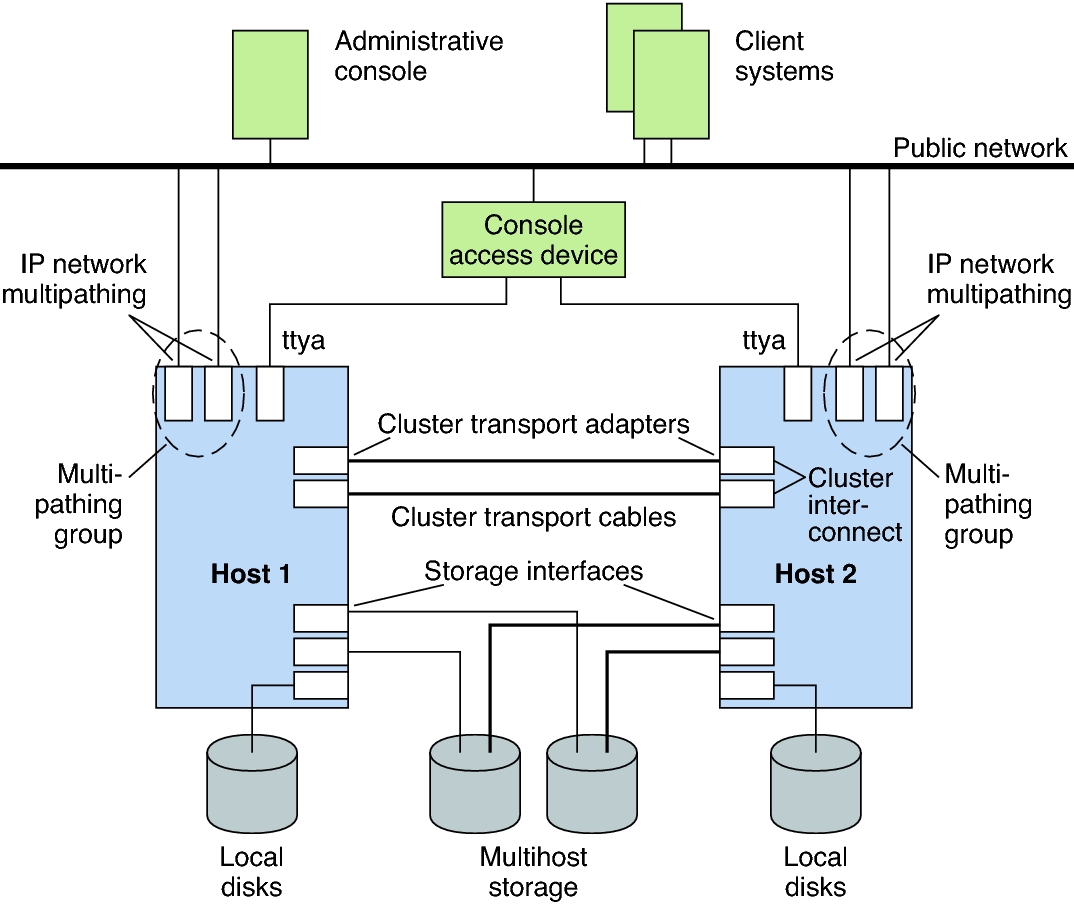

Oracle Solaris Cluster Hardware Components illustrates how the hardware components work with each other.

Figure 1 Oracle Solaris Cluster Hardware Components

The administrative console and console access devices are used to reach the cluster nodes or the terminal concentrator as needed. The Oracle Solaris Cluster software enables you to combine the hardware components into a variety of configurations. The following sections describe these configurations.

For a list of supported hardware and software configurations, see Cluster Nodes

Cluster Nodes

An Oracle Solaris host (or simply cluster node) is one of the following hardware or software configurations that runs the Oracle Solaris OS and its own processes:

-

A physical machine that is not configured with a virtual machine or as a hardware domain

-

Oracle VM Server for SPARC guest domain

-

Oracle VM Server for SPARC I/O domain

-

A hardware domain

Supported Configurations

Depending on your platform, Oracle Solaris Cluster software supports the following configurations:

-

SPARC: Oracle Solaris Cluster software supports from one to 16 cluster nodes in a cluster. Different hardware configurations impose additional limits on the maximum number of nodes that you can configure in a cluster composed of SPARC-based systems. See Oracle Solaris Cluster Topologies for the supported configurations.

-

x86: Oracle Solaris Cluster software supports from one to eight cluster nodes in a cluster. Different hardware configurations impose additional limits on the maximum number of nodes that you can configure in a cluster composed of x86-based systems. See Oracle Solaris Cluster Topologies for the supported configurations.

All nodes in the cluster must have the same architecture and must run the same version of the Oracle Solaris OS. Nodes in the same cluster should also have similar processing, memory, and I/O capability so that failover can occur without significant degradation in performance. Because of the possibility of failover, every node must have enough excess capacity to support the workload of all nodes for which it is a backup or secondary.
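The node-count limits and homogeneity rules above can be expressed as a simple pre-installation check. The following Python sketch is illustrative only: the `Node` fields, the abstract `capacity` and `load` units, and the `backups` mapping are hypothetical inputs, and no Oracle Solaris Cluster tool is implied to work this way. It encodes only the limits stated in this section; specific hardware configurations can impose lower limits.

```python
from dataclasses import dataclass

# Upper bounds on cluster size stated in this section. Specific hardware
# configurations can impose lower limits (see Oracle Solaris Cluster Topologies).
MAX_NODES = {"sparc": 16, "x86": 8}


@dataclass
class Node:
    name: str
    arch: str          # "sparc" or "x86"
    os_version: str    # hypothetical version string, for example "11.3"
    capacity: float    # hypothetical abstract capacity units
    load: float        # workload this node normally runs, in the same units


def check_cluster(nodes: list[Node], backups: dict[str, list[str]]) -> list[str]:
    """Return a list of rule violations; an empty list means none were found.

    backups maps a node name to the names of the nodes whose workload it
    must absorb on failover (hypothetical input format).
    """
    if not nodes:
        return ["cluster has no nodes"]
    problems = []
    archs = {n.arch for n in nodes}
    if len(archs) > 1:
        problems.append(f"mixed architectures: {sorted(archs)}")
    else:
        arch = next(iter(archs))
        limit = MAX_NODES.get(arch)
        if limit is None:
            problems.append(f"unknown architecture: {arch}")
        elif len(nodes) > limit:
            problems.append(f"{len(nodes)} nodes exceeds the {arch} limit of {limit}")
    versions = {n.os_version for n in nodes}
    if len(versions) > 1:
        problems.append(f"mixed Oracle Solaris versions: {sorted(versions)}")
    by_name = {n.name: n for n in nodes}
    for name, covered in backups.items():
        node = by_name[name]
        # A backup must carry its own load plus the load of every node it covers.
        needed = node.load + sum(by_name[c].load for c in covered)
        if needed > node.capacity:
            problems.append(f"{name} lacks capacity to absorb {covered}")
    return problems


nodes = [
    Node("phys-a", "sparc", "11.3", capacity=100, load=40),
    Node("phys-b", "sparc", "11.3", capacity=100, load=50),
]
print(check_cluster(nodes, {"phys-a": ["phys-b"], "phys-b": ["phys-a"]}))
```

In this example each node can absorb the other's load (40 + 50 = 90 units, within 100), so the check prints an empty list.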

Cluster nodes are generally attached to one or more multihost storage devices. Nodes that are not attached to multihost devices can use a cluster file system to access the data on multihost devices. For example, one scalable services configuration enables nodes to service requests without being directly attached to multihost devices.

In addition, nodes in parallel database configurations share concurrent access to all the disks.

-

See Multihost Devices for information about concurrent access to disks.

-

See Clustered Pair Topology for more information about parallel database configurations and scalable topology.

Public network adapters attach nodes to the public networks, providing client access to the cluster.

Cluster members communicate with the other nodes in the cluster through one or more physically independent networks. This set of physically independent networks is referred to as the cluster interconnect.

Every node in the cluster is aware when another node joins or leaves the cluster. Additionally, every node in the cluster is aware of the resources that are running locally as well as the resources that are running on the other cluster nodes.

Software Components for Cluster Hardware Members

To function as a cluster member, a cluster node must have the following software installed:

-

Oracle Solaris Operating System

-

Oracle Solaris Cluster software

-

Data service applications

-

Optional: Volume management (Solaris Volume Manager)

Additional information is available:

-

See the Oracle Solaris Cluster 4.3 Software Installation Guide for information about how to install the Oracle Solaris Operating System, Oracle Solaris Cluster, and volume management software.

-

See the Oracle Solaris Cluster 4.3 Data Services Planning and Administration Guide for information about how to install and configure data services.

-

See Chapter 3, Key Concepts for System Administrators and Application Developers for conceptual information about the preceding software components.

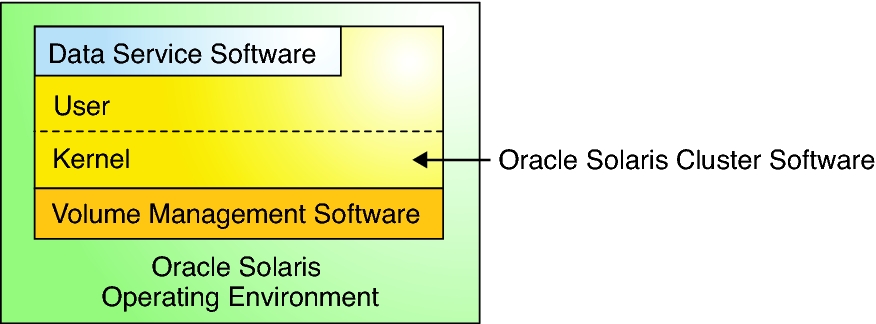

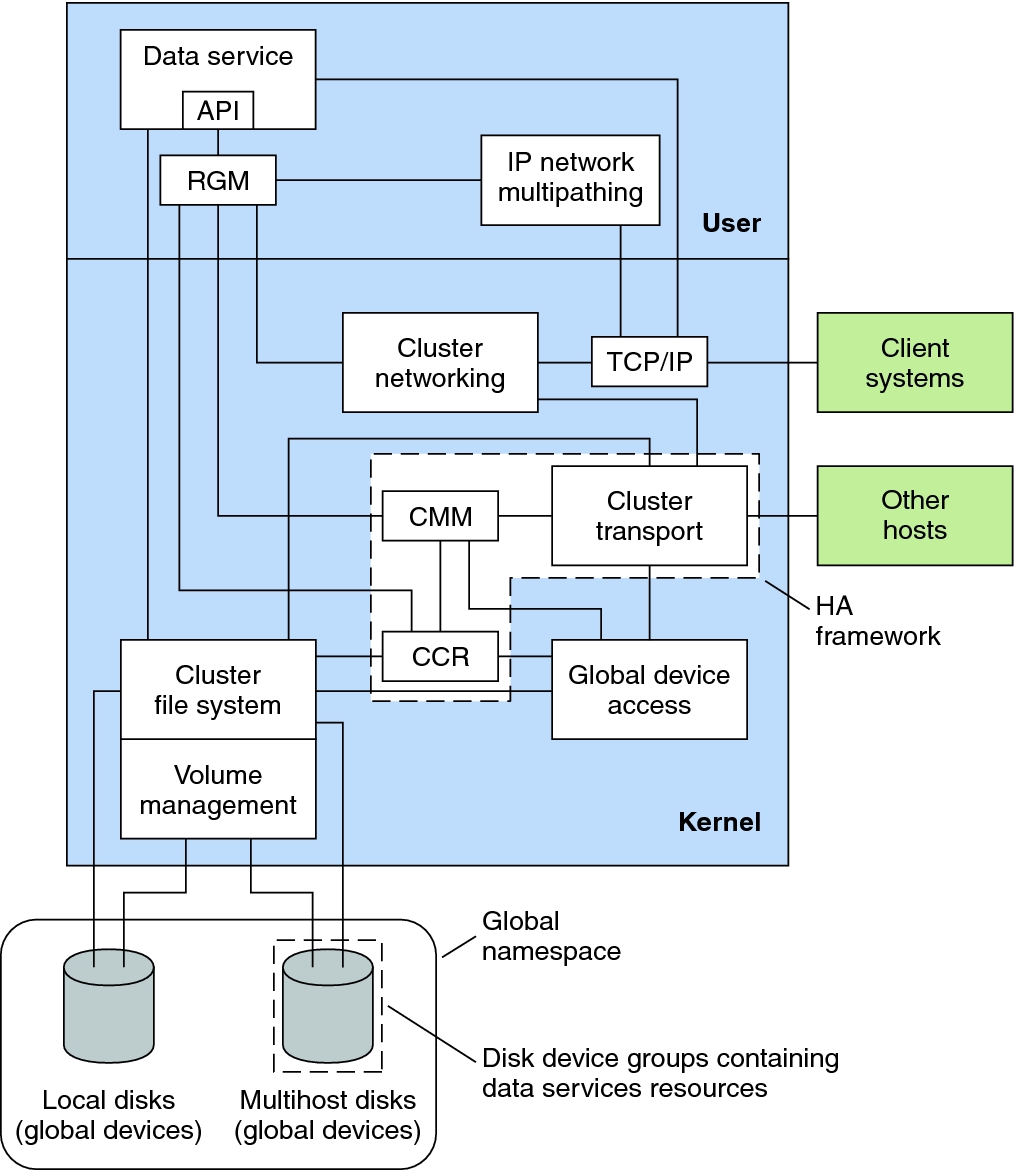

The following figure provides a high-level view of the software components that work together to create the Oracle Solaris Cluster environment.

Figure 2 High-Level Relationship of Oracle Solaris Cluster Components

Oracle Solaris Cluster Software Architecture shows a high-level view of the software components that work together to create the Oracle Solaris Cluster software environment.

Figure 3 Oracle Solaris Cluster Software Architecture

Multihost Devices

LUNs that can be connected to more than one cluster node at a time are multihost devices. A quorum device is a shared storage device or quorum server that is shared by two or more nodes and that contributes votes that are used to establish a quorum. The cluster can operate only when a quorum of votes is available. Clusters with more than two nodes do not require quorum devices. For more information about quorum and quorum devices, see Quorum and Quorum Devices.
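The majority rule can be illustrated with a little arithmetic. The Python sketch below assumes the following vote-counting rules, which are summarized here for illustration only (Quorum and Quorum Devices is the authoritative description): each node contributes one vote, and a quorum device contributes one vote fewer than the number of nodes attached to it. It does not describe the cluster's internal implementation.

```python
def configured_votes(num_nodes: int, quorum_device_ports: list[int]) -> int:
    """Total configured votes (assumed rule: one per node, N-1 per quorum device).

    quorum_device_ports lists, for each quorum device, how many nodes are
    attached to it.
    """
    return num_nodes + sum(max(ports - 1, 0) for ports in quorum_device_ports)


def has_quorum(present_votes: int, total_votes: int) -> bool:
    """A partition may operate only if it holds a strict majority of the votes."""
    return 2 * present_votes > total_votes


# Two-node cluster with one quorum device attached to both nodes: 2 + 1 = 3 votes.
total = configured_votes(2, [2])
# If one node fails, the survivor (1 vote) plus the quorum device (1 vote) = 2 of 3.
print(total, has_quorum(2, total))             # 3 True
# Without a quorum device, a lone survivor holds 1 of 2 votes: no majority.
print(has_quorum(1, configured_votes(2, [])))  # False
```

In this model, the second case shows why a two-node cluster needs an extra vote, whereas a three-node cluster that loses one node still holds 2 of 3 node votes.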

Multihost devices have the following characteristics:

-

Ability to store application data, application binaries, and configuration files.

-

Protection against host failures. If clients request the data through one node and the node fails, the I/O requests are handled by the surviving node.

A volume manager can provide software RAID protection for the data residing on the multihost devices.

Combining multihost devices with disk mirroring protects against individual disk failure.
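The effect of disk mirroring can be shown with a toy RAID-1 model in Python. This is a simplified sketch, not how Solaris Volume Manager is implemented: the `Disk` and `Mirror` classes and the disk names are hypothetical, and resynchronization of a repaired submirror is not modeled.

```python
class Disk:
    def __init__(self, name: str):
        self.name = name
        self.failed = False
        self.blocks: dict[int, bytes] = {}


class Mirror:
    """Toy RAID-1 volume: every write goes to all healthy submirrors."""

    def __init__(self, *disks: Disk):
        self.disks = list(disks)

    def _healthy(self) -> list[Disk]:
        healthy = [d for d in self.disks if not d.failed]
        if not healthy:
            raise OSError("all submirrors have failed")
        return healthy

    def write(self, block: int, data: bytes) -> None:
        # Failed disks are skipped, so they become stale (no resync is modeled).
        for disk in self._healthy():
            disk.blocks[block] = data

    def read(self, block: int) -> bytes:
        # Every healthy submirror holds a full copy, so the first one suffices.
        return self._healthy()[0].blocks[block]


m = Mirror(Disk("d1"), Disk("d2"))
m.write(0, b"config")
m.disks[0].failed = True   # simulate failure of one disk
print(m.read(0))           # still served from d2
```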

Local Disks

Local disks are the disks that are only connected to a single cluster node. Local disks are therefore not protected against node failure (they are not highly available). However, all disks, including local disks, are included in the global namespace and are configured as global devices. Therefore, the disks themselves are visible from all cluster nodes.

See the section Global Devices for more information about global devices.

Removable Media

Removable media such as tape drives and CD-ROM drives are supported in a cluster. In general, you install, configure, and service these devices in the same way as in a nonclustered environment. Refer to Oracle Solaris Cluster Hardware Administration Manual for information about installing and configuring removable media.

See the section Global Devices for more information about global devices.

Cluster Interconnect

The cluster interconnect is the physical configuration of devices that is used to transfer cluster-private communications and data service communications between cluster nodes in the cluster.

Only nodes in the cluster can be connected to the cluster interconnect. The Oracle Solaris Cluster security model assumes that only cluster nodes have physical access to the cluster interconnect.

You can set up from one to six cluster interconnects in a cluster. A single cluster interconnect reduces the number of adapter ports that are used for the private interconnect, but it provides no redundancy and therefore less availability: if that single interconnect fails, the cluster is at a higher risk of having to perform automatic recovery. Whenever possible, install two or more cluster interconnects to avoid a single point of failure, which provides redundancy and scalability and therefore higher availability.
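The trade-off between one and several interconnects can be sketched as a small Python function that reports how much redundancy remains after a given set of failures. The interconnect names and the three status labels are hypothetical, chosen for this illustration; only the one-to-six limit comes from this section.

```python
MAX_INTERCONNECTS = 6  # limit stated in this section


def interconnect_status(interconnects: list[str], failed: set[str]) -> str:
    """Classify private-network redundancy after the given failures."""
    if not 1 <= len(interconnects) <= MAX_INTERCONNECTS:
        raise ValueError("a cluster supports from one to six interconnects")
    surviving = [ic for ic in interconnects if ic not in failed]
    if not surviving:
        return "down"          # no private path remains between the nodes
    if len(surviving) == 1:
        return "single-path"   # still working, but a single point of failure
    return "redundant"


print(interconnect_status(["ic0"], {"ic0"}))                # down
print(interconnect_status(["ic0", "ic1"], {"ic0"}))         # single-path
print(interconnect_status(["ic0", "ic1", "ic2"], {"ic0"}))  # redundant
```

Note that a healthy cluster with only one interconnect is already reported as "single-path", which is exactly the exposure the paragraph above warns about.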

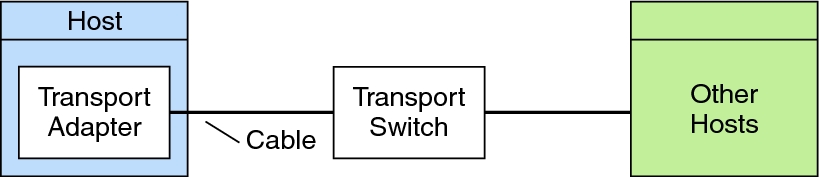

The cluster interconnect consists of three hardware components: adapters, junctions, and cables. The following list describes each of these hardware components.

-

Adapters – The network interface cards that are located in each cluster node. Their names are constructed from a driver name immediately followed by a physical-unit number (for examplenet 2). Some adapters have only one physical network connection, but others, like the net card, have multiple physical connections. Some adapters combine both the functions of a NIC and an HBA.

A network adapter with multiple interfaces could become a single point of failure if the entire adapter fails. For maximum availability, plan your cluster so that the paths between two nodes does not depend on a single network adapter. On Oracle Solaris 11, this name is visible through the use of the dladm show-physcommand. For more information, see the dladm (1M) man page.

-

Junctions – The switches that are located outside of the cluster nodes. In a two-node cluster, junctions are not mandatory. In that case, the nodes can be connected to each other through back-to-back network cable connections. Greater than two-node configurations generally require junctions.

-

Cables – The physical connections that you install either between two network adapters or between an adapter and a junction.

Cluster Interconnect shows how the two nodes are connected by a transport adapter, cables, and a transport switch.

Figure 4 Cluster Interconnect
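Two of the adapter planning rules above, the driver-plus-unit naming and avoiding paths that depend on a single physical card, can be checked mechanically. The Python sketch below assumes that you have already recorded, by hand or from dladm show-phys output, which physical card carries each interconnect port; the `card_of` mapping, node names, and card names are hypothetical, and the sketch does not call dladm.

```python
import re

# Adapter name: a driver name immediately followed by a physical-unit number.
ADAPTER_NAME = re.compile(r"^([A-Za-z_][A-Za-z0-9_]*?)(\d+)$")


def parse_adapter(name: str) -> tuple[str, int]:
    """Split an adapter name such as "net2" into ("net", 2)."""
    match = ADAPTER_NAME.match(name)
    if match is None:
        raise ValueError(f"not a driver-plus-unit adapter name: {name!r}")
    return match.group(1), int(match.group(2))


def single_card_nodes(ports: dict[str, list[str]],
                      card_of: dict[tuple[str, str], str]) -> list[str]:
    """Return nodes whose interconnect ports all sit on one physical card.

    ports maps a node to its interconnect adapter ports; card_of maps
    (node, port) to the physical card that carries that port.
    """
    risky = []
    for node, node_ports in ports.items():
        cards = {card_of[(node, port)] for port in node_ports}
        if len(cards) < 2:
            risky.append(node)  # losing that card cuts the node off the interconnect
    return sorted(risky)


def needs_junctions(num_nodes: int) -> bool:
    # Two nodes can be cabled back to back; larger clusters generally use switches.
    return num_nodes > 2


ports = {"phys-a": ["net2", "net3"], "phys-b": ["net2", "net3"]}
card_of = {("phys-a", "net2"): "card0", ("phys-a", "net3"): "card0",
           ("phys-b", "net2"): "card0", ("phys-b", "net3"): "card1"}
print(parse_adapter("net2"))                    # ('net', 2)
print(single_card_nodes(ports, card_of))        # ['phys-a']
print(needs_junctions(2), needs_junctions(3))   # False True
```

Here phys-a runs both interconnect ports through card0, so the whole card is a single point of failure for that node even though two paths exist.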

Public Network Interfaces

Clients connect to the cluster through the public network interfaces.

You can set up cluster nodes in the cluster to include multiple public network interface cards that perform the following functions:

-

Allow a cluster node to be connected to multiple subnets

-

Provide public network availability by having interfaces or links act as backups for one another through public network management (PNM) objects. PNM objects include Internet Protocol network multipathing (IPMP) groups, trunk and datalink multipathing (DLMP) link aggregations, and VNICs that are directly backed by link aggregations.

If one of the adapters fails, IPMP software or link aggregation software is called to fail over the defective interface to another underlying adapter in the PNM object. For more information about IPMP, see Chapter 2, About IPMP Administration, in Administering TCP/IP Networks, IPMP, and IP Tunnels in Oracle Solaris 11.3. For more information about link aggregations, see Chapter 2, Configuring High Availability by Using Link Aggregations, in Managing Network Datalinks in Oracle Solaris 11.3.
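The failover behavior of a PNM object can be modeled in a few lines of Python. This is a deliberately simplified active/standby sketch, not the IPMP or link aggregation implementation (real IPMP groups, for example, can use several interfaces actively at once); the `PNMObject` class and the group and interface names are hypothetical.

```python
class PNMObject:
    """Toy active/standby model of a PNM object such as an IPMP group."""

    def __init__(self, name: str, interfaces: list[str]):
        if not interfaces:
            raise ValueError("a PNM object needs at least one interface")
        self.name = name
        self.interfaces = list(interfaces)
        self.failed: set[str] = set()
        self.active = self.interfaces[0]

    def fail(self, interface: str) -> str:
        """Mark an interface as failed and return the interface now in use."""
        self.failed.add(interface)
        if self.active in self.failed:
            healthy = [i for i in self.interfaces if i not in self.failed]
            if not healthy:
                raise RuntimeError(f"{self.name}: no healthy interface remains")
            self.active = healthy[0]  # fail over to the next healthy member
        return self.active


group = PNMObject("ipmp0", ["net0", "net1"])
print(group.fail("net0"))  # net1
```

A PNM object with a single interface still works in this model, but its first failure leaves nothing to fail over to, which is why multiple public network interface cards are recommended.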

No special hardware considerations relate to clustering for the public network interfaces.

Logging Into the Cluster Remotely

You must have console access to all cluster nodes in the cluster. You can use the Parallel Console Access (pconsole) utility from the command line to log in to the cluster remotely. The pconsole utility is part of the Oracle Solaris terminal/pconsole package; install the package by running pkg install terminal/pconsole. The pconsole utility creates a host terminal window for each remote host that you specify on the command line. The utility also opens a central, or master, console window that propagates what you type there to each of the connections that you open.

The pconsole utility can be run from within the X Window System or in console mode. Install pconsole on the machine that you will use as the administrative console for the cluster. If you have a terminal server connected to your cluster nodes' serial ports (serial consoles), you can access a serial console port by specifying the IP address of the terminal server and the relevant terminal server port (terminal-server-IP:port-number).

See the pconsole(1) man page for more information.
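If you script your administrative console setup, the command line described above can be assembled programmatically. The Python sketch below only builds and prints the command: it passes host names and terminal-server IP:port targets as plain arguments, which is the only usage described here, and it assumes IPv4 addresses. The `pconsole_argv` helper, node names, and addresses are hypothetical, and the pconsole(1) man page remains the authority on options.

```python
import shlex


def pconsole_argv(targets: list[str]) -> list[str]:
    """Build a pconsole argument vector: one window per host or IP:port target."""
    if not targets:
        raise ValueError("specify at least one remote host")
    for target in targets:
        if ":" in target:
            # terminal-server-IP:port-number form for serial console access
            _host, port = target.rsplit(":", 1)
            if not port.isdigit():
                raise ValueError(f"bad terminal server port in {target!r}")
    return ["pconsole", *targets]


argv = pconsole_argv(["phys-a", "phys-b", "192.0.2.10:5002"])
print(shlex.join(argv))  # pconsole phys-a phys-b 192.0.2.10:5002
# On the administrative console, subprocess.run(argv) would start the session.
```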

Administrative Console

You can use a dedicated workstation or administrative console to reach the cluster nodes or the terminal concentrator as needed to administer the active cluster. For more information, see Chapter 1, Introduction to Administering Oracle Solaris Cluster, in Oracle Solaris Cluster 4.3 System Administration Guide.

You use the administrative console for remote access to the cluster nodes, either over the public network, or optionally through a network-based terminal concentrator.

Oracle Solaris Cluster does not require a dedicated administrative console, but using one provides these benefits:

-

Enables centralized cluster management by grouping console and management tools on the same machine

-

Provides potentially quicker problem resolution by your hardware service provider