5 Storage Configuration

This chapter describes the storage configuration for the Oracle Exalytics In-Memory Machine.

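It contains the following topics:

- Overview of Disk Partitions
- Connecting Exalytics to a Storage Area Network
- For Additional Help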

5.1 Overview of Disk Partitions

Table 5-1 lists the disk partitions configured on the Oracle Exalytics In-Memory Machine.

| Partition | Capacity | Hardware RAID | Mount Point | Description |
|---|---|---|---|---|
| boot | 99 MB | RAID1 | | Boot partition |
| base operating system, logs, and temp space | X5-4: 670 GB; X4-4: 670 GB; X3-4: 670 GB; X2-4: 447 GB | RAID1 | | Partition for the base operating system, logs, and temp space |
| Software, data files, and so on | X5-4: 2.4 TB; X4-4: 2.4 TB; X3-4: 2.4 TB; X2-4: 1.797 TB | RAID5 | | Data partition |

Note:

In addition to the hard-disk storage described in Table 5-1, X3-4 machines have six 400 GB Sun FlashAccelerator F40 PCIe cards (total memory: 2.4 TB RAW) and X4-4 machines have three 800 GB Sun FlashAccelerator F80 PCIe cards (total memory: 2.4 TB RAW), mounted on `/u02`.

For information about configuring the Flash cards, refer to the "Configuring Flash and Replacing a Defective Flash Card" topic in the Oracle Exalytics In-Memory Machine Installation and Administration Guide for Linux.

5.2 Connecting Exalytics to a Storage Area Network

Starting with Oracle Exalytics Release 1 Patchset 1 (1.0.0.1), the Storage Area Network (SAN) feature is supported. This section describes the procedure for connecting Exalytics to a Storage Area Network.

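Connecting Exalytics to a Storage Area Network involves the following steps:

- Before You Begin
- Preparing for Storage Area Network (SAN) Configuration
- Connecting the Optical Cable
- Installing Diagnostic Support for the Red Hat/SUSE Linux OS (Optional)
- Verifying the Connectivity
- Completing the Setup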

5.2.1 Before You Begin

Before connecting the Oracle Exalytics In-Memory Machine to your data center's existing network, ensure that the following prerequisites are satisfied:

5.2.1.1 Cable Requirements

Fiber-optic media, short-wave, multimode fiber (400-M5-SN-S)

5.2.1.2 Host Bus Adapter Features and Specifications

For more information, see "HBA Features and Specifications" in the StorageTek 8 Gb FC PCI-Express HBA Installation Guide For HBA Models SG-XPCIE1FC-QF8-Z, SG-PCIE1FC-QF8-Z, SG-XPCIE1FC-QF8-N, SG-PCIE1FC-QF8-N and SG-XPCIE2-QF8-Z, SG-PCIE2FC-QF8-Z, SG-XPCIE2-QF8-N, SG-PCIE2FC-QF8-N.

5.2.2 Preparing for Storage Area Network (SAN) Configuration

To prepare Oracle Exalytics In-Memory Machine for SAN Configuration, complete the following steps:

- Configure the system to load the following kernel modules at boot:

  - `qla2xxx.ko`
  - `dm-multipath.ko`

- Initialize the `/etc/multipath.conf` file by running the following command:

      /sbin/mpathconf

- Enable multipath startup during operating system boot by running the following commands as the `root` user:

      chkconfig multipathd on
      chkconfig --level 345 multipathd on
      service multipathd start
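Once the preparation steps are complete, it can be useful to confirm that both kernel modules are actually loaded before cabling the HBA. The following is a minimal sketch and not part of the Oracle procedure. The function names `module_loaded` and `check_san_modules` are hypothetical, and the sketch assumes that loaded modules are listed in `/proc/modules`, where the kernel reports hyphens in module names as underscores (so `dm-multipath` appears as `dm_multipath`).

```shell
#!/bin/sh
# Hypothetical helper: succeed if a kernel module appears in a modules list.
# $1 = module name (for example dm-multipath)
# $2 = list file to read (defaults to /proc/modules)
module_loaded() {
    # The kernel reports module names with underscores, so normalize hyphens.
    name=$(printf '%s' "$1" | sed 's/-/_/g')
    # The first field of each /proc/modules line is the module name.
    awk -v m="$name" '$1 == m { found = 1 } END { exit !found }' "${2:-/proc/modules}"
}

# Report on the two modules named in the preparation steps.
check_san_modules() {
    for mod in qla2xxx dm-multipath; do
        if module_loaded "$mod" "${1:-/proc/modules}"; then
            echo "$mod: loaded"
        else
            echo "$mod: NOT loaded"
        fi
    done
}
```

Calling `check_san_modules` with no argument reads the live module list. Passing a file path instead lets you try the check against a saved copy of the list.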

5.2.3 Connecting the Optical Cable

To connect the optical cable, complete the following steps:

Note:

The HBA does not allow normal data transmission on an optical link unless it is connected to another similar or compatible Fibre Channel product (that is, multimode to multimode).

- Connect the fiber-optic cable to an LC connector on the HBA.

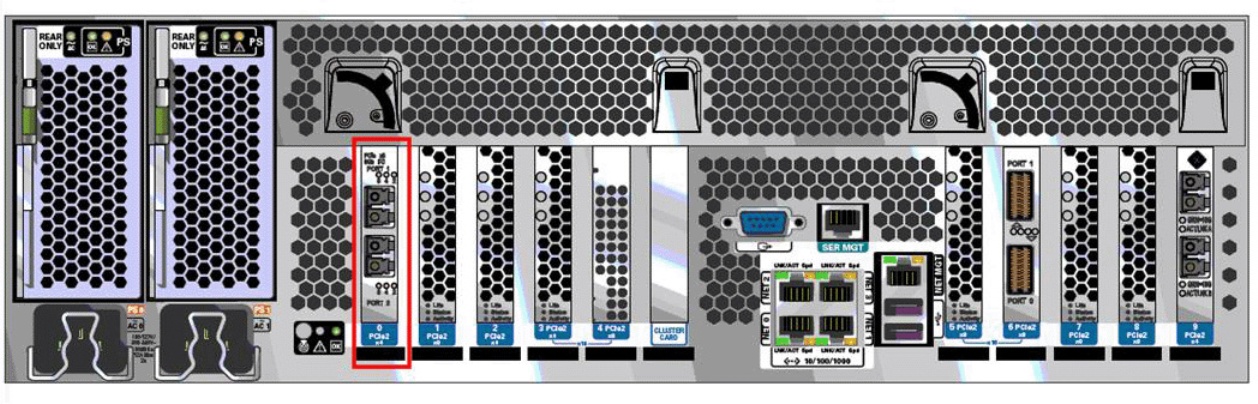

Figure 5-1 Connecting the Optical Cable: Dual Port HBA in Oracle Exalytics In-Memory Machine X2-4

Figure 5-2 Connecting the Optical Cable: Dual Port HBA in Oracle Exalytics In-Memory Machine X3-4

- Connect the other end of the cable to the FC device.

After the optical cable is connected to the HBA, you can power on the system.

5.2.4 Installing Diagnostic Support for the Red Hat/SUSE Linux OS (Optional)

For more information, see "Diagnostic Support for the Red Hat/SUSE OS" in the StorageTek 8 Gb FC PCI-Express HBA Installation Guide For HBA Models SG-XPCIE1FC-QF8-Z, SG-PCIE1FC-QF8-Z, SG-XPCIE1FC-QF8-N, SG-PCIE1FC-QF8-N and SG-XPCIE2-QF8-Z, SG-PCIE2FC-QF8-Z, SG-XPCIE2-QF8-N, SG-PCIE2FC-QF8-N.

5.2.5 Verifying the Connectivity

To verify the connectivity, complete the following steps:

- Check the status of the multipath daemon (`multipathd`) as follows:

      [root@node1 ~]# service multipathd status
      multipathd (pid xxxx) is running...

- Run the `multipath -ll` command to view detailed information about the multipath devices, as shown in the following example:

      # multipath -ll
      mpath2 (3600144f0d153d65600004f90462c0003) dm-3 SUN,Sun Storage 7410
      [size=100G][features=0][hwhandler=0][rw]
      \_ round-robin 0 [prio=1][active]
       \_ 7:0:0:0 sdc 8:32 [active][ready]
      \_ round-robin 0 [prio=1][enabled]
       \_ 8:0:0:0 sde 8:64 [active][ready]
      mpath3 (3600144f0d153d65600004f90464f0004) dm-4 SUN,Sun Storage 7410
      [size=200G][features=0][hwhandler=0][rw]
      \_ round-robin 0 [prio=1][active]
       \_ 7:0:0:2 sdd 8:48 [active][ready]
      \_ round-robin 0 [prio=1][enabled]
       \_ 8:0:0:2 sdf 8:80 [active][ready]

  In the above example, `mpath2` and `mpath3` are the two LUNs configured in the storage device.
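The `multipath -ll` listing can also be checked by script, for example to confirm that every LUN has all of its paths in the `[active][ready]` state. The following is a minimal sketch that assumes the output layout shown in the example above (other versions of the multipath tools print a different layout, which this sketch does not handle). The function name `summarize_multipath` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: summarize `multipath -ll` output read from stdin.
# Prints one line per multipath device: "<name> <ready paths>/<total paths>".
summarize_multipath() {
    awk '
        # A device header looks like: mpath2 (<WWID>) dm-3 <vendor>,<product>
        $2 ~ /^\(.*\)$/ && $3 ~ /^dm-/ {
            if (name != "") print name, ready "/" total
            name = $1; ready = 0; total = 0
            next
        }
        # A path line carries a host:channel:target:lun tuple such as 7:0:0:0
        {
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^[0-9]+:[0-9]+:[0-9]+:[0-9]+$/) {
                    total++
                    if ($0 ~ /\[active\]\[ready\]/) ready++
                    break
                }
            }
        }
        END { if (name != "") print name, ready "/" total }
    '
}
```

A typical invocation is `multipath -ll | summarize_multipath`, run as the `root` user. Because the function reads standard input, it can also be run against saved output from an earlier session.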

5.2.6 Completing the Setup

Complete the following steps:

- Mount the LUNs to a mount point:

      # mount /dev/mapper/mpathXX <mount_point>

  Note: The multipath LUNs are available as block devices at `/dev/mapper/mpathXX`.

- Add the mount point to `/etc/fstab` so that it is mounted automatically during operating system boot.
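The last step can be sketched as a small function that appends an entry only when the mount point is not already listed, so that running it twice does not create duplicates. This is an illustration under stated assumptions, not the documented procedure: the function name `add_fstab_entry` is hypothetical, the `defaults 0 0` options are placeholders, and the filesystem type must match the filesystem you created on the LUN. The file path is a parameter so that the function can be tried on a copy of `/etc/fstab` first.

```shell
#!/bin/sh
# Hypothetical helper: append a mount entry to an fstab-style file, once.
# $1 = fstab file, $2 = device, $3 = mount point, $4 = filesystem type
add_fstab_entry() {
    fstab=$1; device=$2; mount_point=$3; fstype=$4
    # Skip if a non-comment line already uses this mount point (field 2).
    if awk -v mp="$mount_point" '$1 !~ /^#/ && $2 == mp { found = 1 } END { exit !found }' "$fstab"; then
        echo "already present: $mount_point"
        return 0
    fi
    # Fields: device, mount point, type, options, dump flag, fsck pass.
    # "defaults 0 0" is an assumption; choose options that suit your storage.
    printf '%s\t%s\t%s\tdefaults\t0 0\n' "$device" "$mount_point" "$fstype" >> "$fstab"
    echo "added: $mount_point"
}
```

For example, `add_fstab_entry /tmp/fstab.copy /dev/mapper/mpath2 /san1 ext3` appends an entry for the `mpath2` LUN. The mount point `/san1` and the `ext3` type are illustrative values only.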

5.3 For Additional Help

For additional help or information about the storage configuration, contact Oracle Support.