7 ACSLS HA 8.4 Installation and Startup

The SUNWscacsls package contains ACSLS agent software that communicates with Oracle Solaris Cluster. It includes special configuration files and patches that ensure proper operation between ACSLS and Solaris Cluster.

Basic Installation Procedure

1. Unzip the downloaded SUNWscacsls.zip file in /opt.

       # cd /opt
       # unzip SUNWscacsls.zip

2. Install the SUNWscacsls package.

       # pkgadd -d .

3. Repeat steps 1 and 2 on the adjacent node.

4. Verify that the acslspool remains mounted on one of the two nodes.

       # zpool status acslspool

   If the acslspool is not mounted, check the other node.

   If the acslspool is not mounted to either node, then import it to the current node as follows:

       # zpool import -f acslspool

   Verify with zpool status.

5. Go into the /opt/ACSLSHA/util directory on the node that owns the acslspool and run the copyUtils.sh script. This operation updates or copies essential files to appropriate locations on both nodes. There is no need to repeat this operation on the adjacent node.

       # cd /opt/ACSLSHA/util
       # ./copyUtils.sh

6. On the node where the acslspool is active, as user acsss, start the ACSLS application (acsss enable) and verify that it is operational. Resolve any issues encountered. Major issues may be resolved by removing and reinstalling the STKacsls package on the node.

   If the STKacsls package must be reinstalled, run the /opt/ACSLSHA/util/copyUtils.sh script after installing the package.

7. Shut down acsls.

       # su - acsss
       $ acsss shutdown
       $ exit
       #

8. Export the acslspool from the active node.

       # zpool export acslspool

   Note: This operation fails if user acsss is logged in, if a user shell is active anywhere in the acslspool, or if any acsss service remains enabled.

9. Import the acslspool from the adjacent node.

       # zpool import acslspool

10. Start up the ACSLS application on this node and verify successful library operation. Resolve any issues encountered. Major issues may be resolved by removing and reinstalling the STKacsls package on the node.

    If the STKacsls package must be reinstalled, run the /opt/ACSLSHA/util/copyUtils.sh script after installing the package.
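Several steps in this procedure depend on knowing whether the acslspool is currently imported on the node you are logged in to. The following is a minimal sketch of that check, not part of the ACSLS HA package. The function name pool_in_list is hypothetical, and the sketch assumes that pool names arrive one per line, as printed by zpool list -H -o name.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with ACSLS HA): succeeds if the pool
# named in $1 appears in a list of pool names read from standard input,
# one name per line.
pool_in_list() {
    while read -r name; do
        [ "$name" = "$1" ] && return 0
    done
    return 1
}

# Intended use on a cluster node (shown as comments, not executed here):
#   if zpool list -H -o name | pool_in_list acslspool; then
#       echo "acslspool is imported on this node"
#   else
#       echo "acslspool is not imported here; check the adjacent node"
#   fi
```

As in step 4, a forced import (zpool import -f) is appropriate only after confirming that the pool is not imported on either node.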

Configuring ACSLS HA

This step creates three ACSLS resources that are managed and controlled by Solaris Cluster:

- acsls-rs is the ACSLS application itself.

- acsls-storage is the ZFS file system on which ACSLS resides.

- <logical host> is the virtual IP (the network identity that is common to both nodes). See "Configuring /etc/hosts".

Once these resource handles are created, they are assigned to a common resource group under the name acsls-rg.

To configure these resources, first verify that the acslspool is mounted (zpool list), then go to the /opt/ACSLSHA/util directory and run acsAgt configure:

# cd /opt/ACSLSHA/util
# ./acsAgt configure

This utility prompts for the logical host name. Ensure that the logical host is defined in the /etc/hosts file, and that the corresponding IP address maps to the IPMP group defined in the chapter, "Configuring the Solaris System for ACSLS HA". Before running acsAgt configure, also use zpool list to confirm that the acslspool is mounted on the node you are currently logged in to.

This configuration step may take a minute or more to complete. Once the resource handles have been created, the operation attempts to start the ACSLS application.
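Because acsAgt configure prompts for the logical host name, it can help to confirm beforehand that the name is present in /etc/hosts. The following is a minimal sketch of such a pre-check. The function name host_defined is hypothetical and is not part of acsAgt; it only looks for the name on non-comment lines and does not verify the IPMP mapping.

```shell
#!/bin/sh
# Hypothetical pre-check (not part of acsAgt): succeeds if the host name
# in $1 appears as a name or alias on a non-comment line of a hosts file.
# The file defaults to /etc/hosts; a second argument overrides it.
host_defined() {
    host=$1
    file=${2:-/etc/hosts}
    while read -r addr names; do
        case $addr in
            ''|'#'*) continue ;;        # skip blank lines and comments
        esac
        for n in ${names%%#*}; do        # ignore any trailing comment
            [ "$n" = "$host" ] && return 0
        done
    done < "$file"
    return 1
}

# Intended use (acsls_logical_host stands in for your logical host name):
#   host_defined acsls_logical_host || echo "add the logical host to /etc/hosts"
```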

Monitoring ACSLS Cluster Operation

There are multiple vantage points from which to view the operation of the ACSLS Cluster. Solaris Cluster probes the ACSLS application once each minute, and it is helpful to view the results of these probes as they happen. When a probe returns a status that triggers a node switchover event, it is helpful to view the shutdown activity on one node and the start-up activity on the adjacent node. It is also generally helpful to have a view into the operational health of the ACSLS application over time.

The primary operational vantage point is from the perspective of ACSLS. The tail of the acsss_event.log can provide the best indication of overall system health from moment to moment.

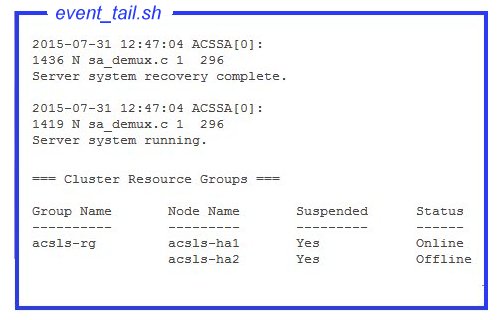

The tool event_tail.sh in the /opt/ACSLSHA/util/ directory provides direct access to the acsss_event.log from either node. The view provided by this tool remains active even as control switches from one node to the other. In addition to normal ACSLS activity, this tool dynamically tracks each status change of the ACSLS Cluster resource group (acsls-rg), allowing a real-time view when one node is going offline and the other is coming online. Run this tool from the shell as follows:

# /opt/ACSLSHA/util/event_tail.sh

To view start and stop activity from the perspective of a single node, view the start_stop_log from that node as follows:

# tail -f /opt/ACSLSHA/log/start_stop_log

To view the results of each periodic probe on the active node:

# tail -f /opt/ACSLSHA/log/probe_log

Solaris Cluster and the ACSLS Cluster agent send details of significant events to the Solaris system log (/var/adm/messages). To view the system log on a given node, use the link provided in the /opt/ACSLSHA/log directory:

# tail -f /opt/ACSLSHA/log/messages

The ha_console.sh Utility

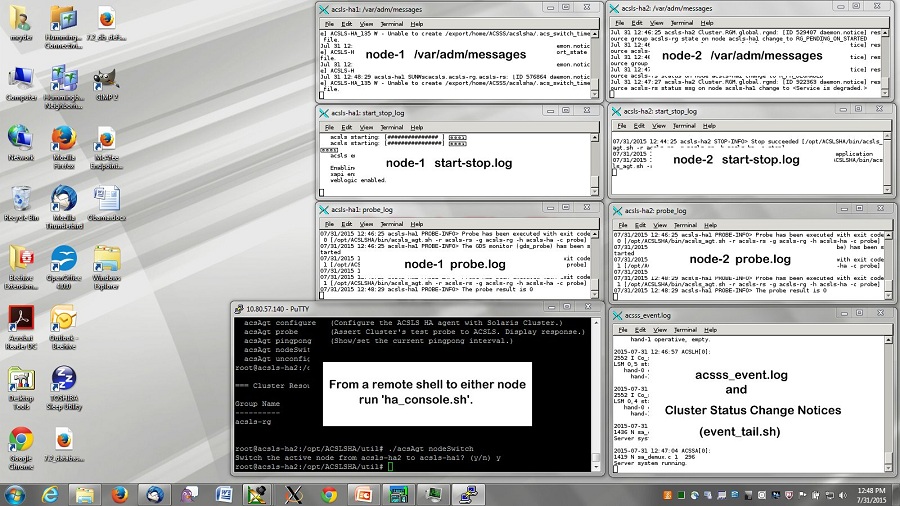

With multiple vantage points in a clustered configuration, and with migration of cluster control from one node to the other over time, it can be a challenge to follow the operational activity of the system from moment to moment from a single perspective. The ha_console.sh utility makes it easier to provide a comprehensive view.

Log in to either node on the ACSLS HA system from the remote desktop and run ha_console.sh. The utility uses the login identity (who am i) to determine where to send the DISPLAY, so log in to the HA node directly from the local console or desktop system on which you want to see the display. If problems are encountered, look for messages in gnome-terminal.log in the /opt/ACSLSHA/log directory.

# /opt/ACSLSHA/util/ha_console.sh

This utility monitors all of the logs mentioned in this section from both nodes. It launches seven gnome-terminal windows on the local console screen. It can be helpful to organize the windows on the screen in the following manner:

From a single terminal display, a comprehensive view of the entire ACSLS cluster complex is displayed.

Because the remote system sends display data to the local screen, you must open X-11 access on your local system. On UNIX systems, the command to do this is xhost +. On a Windows system, X-11 server software such as Xming or Exceed must be installed.

If you have difficulty using ha_console.sh, multiple login sessions can be opened from the local system to each node to view the various logs mentioned in this section.
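To see why a direct login matters, it helps to look at what who am i reports: the originating host appears in parentheses at the end of the line. The sketch below shows one way a DISPLAY value could be derived from that output. It is an illustration only, not the actual logic of ha_console.sh; the function name display_from_who is hypothetical, and display number 0 is an assumption.

```shell
#!/bin/sh
# Illustration only (not the code used by ha_console.sh): read one line of
# "who am i" output on standard input and print a DISPLAY value built from
# the host shown in parentheses. Display number 0 is assumed.
display_from_who() {
    sed -n 's/.*(\(.*\)).*/\1:0/p'
}

# Intended use on an HA node (shown as comments, not executed here):
#   DISPLAY=$(who am i | display_from_who)
#   export DISPLAY
```

If the login passes through an intermediate host, the name in parentheses is that host rather than your desktop, and the windows would be sent to the wrong place.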

Verifying Cluster Operation

1. Once acslsha has started and is registered with Solaris Cluster, use cluster commands to check the status of the ACSLS resource group and its associated resources.

       # clrg status

       === Cluster Resource Groups ===

       Group Name    Node Name    Suspended    Status
       ----------    ---------    ---------    ------
       acsls-rg      node1        No           Online
                     node2        No           Offline

       # clrs status

       === Cluster Resources ===

       Resource Name     Node Name    State      Status Message
       -------------     ---------    -----      --------------
       acsls-rs          node1        Online     Online
                         node2        Offline    Offline

       acsls-storage     node1        Online     Online
                         node2        Offline    Offline

       <logical host>    node1        Online     Online
                         node2        Offline    Offline

2. Temporarily suspend cluster failover readiness to facilitate initial testing.

       # clrg suspend acsls-rg
       # clrg status

3. Test cluster switch operation from the active node to the standby.

       # cd /opt/ACSLSHA/util
       # ./acsAgt nodeSwitch

   Switchover activity can be monitored from multiple perspectives using the procedures described in the previous section.

4. Verify network connectivity from an ACSLS client system using the logical hostname of the ACSLS server.

       # ping acsls_logical_host
       # ssh root@acsls_logical_host hostname
       passwd:

   This operation should return the hostname of the active node.

5. Verify ACSLS operation.

       # su acsss
       $ acsss status

6. Repeat steps 3, 4, and 5 from the opposite node.

7. Resume cluster failover readiness.

       # clrg resume acsls-rg
       # clrg status

8. The following series of tests verifies node failover behavior.

   To perform multiple failover scenarios in sequence, it is handy for testing purposes to lower the default pingpong interval from twenty minutes to five minutes. (See the chapter, "Fine Tuning ACSLS HA" for details.)

   To change the pingpong interval, go to the /opt/ACSLSHA/util directory and run acsAgt pingpong.

       # ./acsAgt pingpong
       Pingpong_interval
          current value: 1200 seconds.
          desired value: [1200] 300
       Pingpong_interval : 300 seconds

9. Reboot the active node and monitor the operation from the two system consoles and from the viewpoints suggested in "Monitoring ACSLS Cluster Operation". Verify automatic failover operation to the standby node.

10. Verify network access to the logical host from a client system as suggested in step 4.

11. Once ACSLS operation is active on the new node, reboot this node and observe failover action to the opposite node.

    If you are monitoring the operation using ha_console.sh, the windows associated with the rebooting node disappear. Once that node is up again, run ha_console.sh once more on either node to restore the windows from the newly rebooted node.

12. Repeat network verification as suggested in step 4.
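Much of this procedure comes down to confirming which node holds acsls-rg before and after each switch or reboot. The following is a minimal sketch that extracts the online node from clrg status output. It assumes the column layout shown in step 1 (node name, suspended flag, and status as the last three fields of each row); the function name online_node is hypothetical and is not part of Solaris Cluster or ACSLS HA.

```shell
#!/bin/sh
# Hypothetical helper: read "clrg status" output on standard input and
# print the name of each node whose status column is exactly "Online".
# Assumes the layout shown in step 1, where the last three fields of a
# data row are: node name, suspended flag, status.
online_node() {
    awk '$NF == "Online" { print $(NF-2) }'
}

# Intended use on a cluster node (shown as comments, not executed here):
#   before=$(clrg status acsls-rg | online_node)
#   ./acsAgt nodeSwitch
#   after=$(clrg status acsls-rg | online_node)
#   [ "$before" != "$after" ] && echo "acsls-rg moved from $before to $after"
```

Rows with any other status text are ignored, so an empty result means that no node currently reports the group as Online.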

"ACSLS Cluster Operation" provides a complete set of failover scenarios. Any number of these scenarios can be tested before placing the ACSLS HA system into production. Before returning the system to production, restore the recommended pingpong interval setting to avoid constant failover repetition.