2 Cluster Concepts

Oracle Fail Safe high-availability solutions use Microsoft cluster hardware and Microsoft Windows Failover Clusters software.

- A Microsoft cluster is a configuration of two or more independent computing systems (called nodes) that are connected to the same disk subsystem.

- Microsoft Windows Failover Clusters software, included with Microsoft Windows software, enables you to configure, monitor, and control applications and hardware components (called resources) that are deployed on a Windows cluster.

To take advantage of the high-availability options that Oracle Fail Safe offers, you must understand Microsoft Windows Failover Clusters concepts.

Cluster Technology

The Windows systems that are members of a cluster are called cluster nodes. The cluster nodes are joined together through a public shared storage interconnect as well as a private internode network connection.

The internode network connection, sometimes referred to as a heartbeat connection, allows one node to detect the availability of another node. Typically, a private interconnect (that is distinct from the public network connection used for user and client application access) is used for this communication. If one node fails, then the cluster software immediately fails over the workload of the unavailable node to an available node, and remounts on the available node any cluster resources that were owned by the failed node. Clients continue to access cluster resources without any changes.

Figure 2-1 shows the network connections in a two-node Microsoft cluster configuration.

About Clusters Providing High Availability

Until cluster technology became available, reliability for PC systems was attained by hardware redundancy such as RAID and mirrored drives, and dual power supplies. Although disk redundancy is important in creating a highly available system, this method alone cannot ensure the availability of your system and its applications.

By connecting servers in a Windows cluster with Microsoft Windows Failover Clusters software, you provide server redundancy, with each server (node) having exclusive access to a subset of the cluster disks during normal operations. A cluster is far more effective than independent standalone systems, because each node can perform useful work, yet can still take over the workload and disk resources of a failed cluster node.

By design, a cluster provides high availability by managing component failures and supporting the addition and subtraction of components in a way that is transparent to users. Additional benefits include providing services such as failure detection, recovery, and the ability to manage the cluster nodes as a single system.

About System-Level Configuration

There are different ways to set up and use a cluster configuration. Oracle Fail Safe supports the following configurations:

- Active/passive configurations

- Active/active configurations

See Designing an Oracle Fail Safe Solution for information about these configurations.

About Disk-Level Configuration

When a Windows Failover cluster is recovering from a failure, a surviving node gains access to the failed node's disk data through a shared-nothing configuration.

In a shared-nothing configuration, all nodes are cabled physically to the same disks, but only one node can access a given disk at a time. Even though all nodes are physically connected to the disks, only the node that owns the disks can access them.

Figure 2-2 shows that if a node in a two-node cluster becomes unavailable, then the other cluster node can assume ownership of the disks and application workloads that were owned by the failed node and continue processing operations for both nodes.
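The ownership rule of a shared-nothing configuration can be illustrated with a minimal Python sketch. The class, node, and disk names are hypothetical and are not part of any Oracle Fail Safe or Microsoft API; the sketch only models which node is allowed to access a disk before and after a failover.

```python
class SharedNothingCluster:
    """Toy model: every disk is cabled to all nodes but owned by exactly one."""

    def __init__(self, disk_owners):
        # disk_owners maps a (hypothetical) disk name to its current owner node.
        self.disk_owners = dict(disk_owners)

    def can_access(self, node, disk):
        # Only the owning node may access a disk, even though all nodes are cabled to it.
        return self.disk_owners.get(disk) == node

    def fail_over(self, failed_node, surviving_node):
        # The surviving node assumes ownership of every disk the failed node owned.
        for disk, owner in self.disk_owners.items():
            if owner == failed_node:
                self.disk_owners[disk] = surviving_node


cluster = SharedNothingCluster({"disk1": "NodeA", "disk2": "NodeB"})
before = cluster.can_access("NodeB", "disk1")  # NodeA still owns disk1
cluster.fail_over("NodeA", "NodeB")
after = cluster.can_access("NodeB", "disk1")   # NodeB now owns disk1
```

After the simulated failover, the surviving node owns both disks and continues processing for both nodes, which mirrors the two-node scenario described above.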

Cluster Shared Volumes allow multiple nodes to concurrently access the same disk.

Resources, Groups, and High Availability

When a server node becomes unavailable, its cluster resources (for example, disks, Oracle Databases and applications, and IP addresses) that are configured for high availability are moved to an available node in units called groups. A group is sometimes referred to as a "service or application" or "clustered role". The following sections describe resources and groups, and how they are configured for high availability.

About Resources

A cluster resource is any physical or logical component that is available to a computing system and has the following characteristics:

- It can be brought online and taken offline.

- It can be managed in a cluster.

- It can be hosted by only one node in a cluster at a given time, but can be potentially owned by another cluster node. (For example, a resource is owned by a given node. After a failover, that resource is owned by another cluster node. However, at any given time only one of the cluster nodes can access the resource.)

About Groups

A group is a logical collection of cluster resources that forms a minimal unit of failover. A group is sometimes referred to as a "service or application" or "clustered role". During a failover, the group of resources is moved to another cluster node. A group is owned by only one cluster node at a time. All resources required for a given workload (database, disks, and other applications) must reside in the same group.

For example, a service or application created to configure an Oracle Database for high availability by using Oracle Fail Safe may include the following resources:

- An Oracle Database instance

- One or more network names, each one consisting of:

  - An IP address

- An Oracle Net network listener that listens for connection requests to databases in the group

Note that when you add a resource to a group, the disks it uses are also included in the group. For this reason, if two resources use the same disk, then they cannot be placed in different groups. If both resources are to be fail-safe, then both must be placed in the same group.
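The shared-disk rule can be checked mechanically. The following Python sketch is an illustration only: the function, resource, disk, and group names are hypothetical, and it does not call any Oracle Fail Safe interface. It reports every disk that is used by resources placed in more than one group.

```python
from collections import defaultdict


def find_disk_conflicts(resource_disks, resource_group):
    """Return {disk: groups} for each disk used by resources in different groups."""
    groups_by_disk = defaultdict(set)
    for resource, disks in resource_disks.items():
        for disk in disks:
            groups_by_disk[disk].add(resource_group[resource])
    # A disk is in conflict when more than one group needs it.
    return {disk: groups for disk, groups in groups_by_disk.items() if len(groups) > 1}


# Hypothetical layout: both databases keep files on "Disk F".
resource_disks = {"SalesDB": ["Disk E", "Disk F"], "ReportsDB": ["Disk F"]}
resource_group = {"SalesDB": "Group 1", "ReportsDB": "Group 2"}
conflicts = find_disk_conflicts(resource_disks, resource_group)
```

In this layout the check flags Disk F, so both databases would have to be placed in the same group to be made fail-safe.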

Microsoft Windows Failover Cluster Manager helps to create groups and add the resources needed to run applications.

About Resource Dependencies

Figure 2-3 shows a group created to make a Sales database highly available. When you add a resource to a group, Oracle Fail Safe Manager automatically adds the other resources upon which the resource you added depends; these relationships are called resource dependencies. For example, when you add a single-instance database to a group, Oracle Fail Safe adds the shared-nothing disks used by the database instance and configures Oracle Net files to work with each group. Oracle Fail Safe also tests the ability of each group to fail over on each node.

Each node in the cluster can own one or more groups. Each group is composed of an independent set of related resources. The dependencies among resources in a group define the order in which the cluster software brings the resources online and offline. For example, a failure causes the Oracle application or database (and Oracle Net listener) to be brought offline first, followed by the physical disks, network name, and IP address. On the failover node, the order is reversed; Windows Failover Cluster brings the IP address online first, then the network name, then the physical disks, and finally the Oracle Database and Oracle Net listener or application.
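The relationship between dependencies and online/offline order amounts to a topological sort. The sketch below uses Python's standard `graphlib` module (Python 3.9 or later). The dependency map is an illustrative assumption chosen to reproduce the order described above; it is not the dependency tree that Oracle Fail Safe actually builds.

```python
from graphlib import TopologicalSorter

# Each resource maps to the set of resources it depends on (assumed for illustration).
depends_on = {
    "IP address": set(),
    "Network name": {"IP address"},
    "Physical disks": {"Network name"},
    "Oracle Database": {"Physical disks", "Network name"},
    "Oracle Net listener": {"Network name"},
}

# A resource comes online only after everything it depends on is online.
online_order = list(TopologicalSorter(depends_on).static_order())

# Taking the group offline reverses that order.
offline_order = list(reversed(online_order))
```

Resources with no dependency on each other (here, the listener and the disks) may appear in either relative order; only the dependency constraints are guaranteed.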

About Resource Types

Each resource type (such as a generic service, physical disk, Oracle Database, and so on) is associated with a resource dynamic-link library (DLL) and is managed in the cluster environment by using this resource DLL. There are standard Microsoft Windows Failover Clusters resource DLLs as well as custom Oracle resource DLLs. The same resource DLL may support several different resource types.

Microsoft Windows Failover Clusters provides resource DLLs for the resource types that it supports, such as IP addresses, physical disks, generic services, and many others. (A generic service resource is a Windows service that is supported by a resource DLL provided in Microsoft Windows Failover Clusters.)

Oracle Fail Safe uses many of the Windows Failover Cluster resource DLLs to monitor resource types for which Oracle Fail Safe provides custom support, such as generic services.

Oracle provides a custom DLL for the Oracle Database resource type. Windows Failover Cluster uses the Oracle resource DLL to manage the Oracle Database resources (bring online and take offline) and to monitor the resources for availability.

Oracle Fail Safe provides a DLL file to enable Microsoft Windows Failover Clusters to communicate with and monitor Oracle Database resources. FsResOdbs.dll provides functions that enable Microsoft Windows Failover Clusters to bring an Oracle Database and its listener online or offline and check its status through Is Alive polling.

When you use Oracle Fail Safe Manager to add an Oracle Database to a group, Oracle Fail Safe creates the database resource and an Oracle listener resource.

Because Oracle Fail Safe has more information than Microsoft Windows Failover Clusters about Oracle cluster resources, Oracle recommends that you use Oracle Fail Safe Manager (or the Oracle Fail Safe PowerShell cmdlets) to configure and administer Oracle Databases and applications.

Groups, Network Names, and Virtual Servers

A network name is a network address at which resources in a group can be accessed, regardless of the cluster node hosting those resources. A network name provides a constant node-independent network location that allows clients easy access to resources without the need to know which physical cluster node is hosting those resources.

Because groups move from an unavailable node to an available node during a failure, a client cannot connect to an application that uses an address that is identified with only one node. To identify a network name for a group in Oracle Fail Safe Manager, add a unique network name and IP address to a group.

To add a network name resource to a group, use the Microsoft Windows Failover Cluster Manager.

Once you add a network name to a group, the group becomes a virtual server. Although at least one network name is required for each group for client access, you can assign multiple network names to a group. You may assign multiple network names to provide increased bandwidth or to segment security for the resources in a group.

Each group appears to users and client applications as a highly available virtual server, independent of the physical identity of one particular node. To access the resources in a group, clients always connect to the network name of the group. To the client, the virtual server is the interface to the cluster resources and looks like a physical node.

Figure 2-4 shows a two-node cluster with a group configured on each node. Clients access these groups through Virtual Servers A and B. By accessing the cluster resources through the network name of a group, as opposed to the physical address of an individual node, you ensure successful remote connection regardless of which cluster node is hosting the group.

Figure 2-4 Accessing Cluster Resources Through a Virtual Server

Allocating IP Addresses for Network Names

Allocate the following IP addresses for a cluster:

- One IP address for each cluster node

- One IP address for the cluster alias (described in Cluster Group and Cluster Alias)

- One IP address for each group

For example, the configuration in Figure 2-4 requires five IP addresses: one for each of the two cluster nodes, one for the cluster alias, and one for each of the two groups.
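The count can be expressed as a one-line formula. This Python sketch uses a hypothetical helper name and assumes each group has exactly one network name; groups with additional network names would need additional addresses.

```python
def required_ip_addresses(node_count: int, group_count: int) -> int:
    """Minimum IP addresses: one per node, one for the cluster alias, one per group."""
    cluster_alias = 1
    return node_count + cluster_alias + group_count


# Two nodes and two groups, as in the two-node example above.
total = required_ip_addresses(node_count=2, group_count=2)
```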

Note:

You can specify multiple network names for a group. See Configurations Using Multiple Network Names for details.

Cluster Group and Cluster Alias

The cluster alias is a node-independent network name that identifies a cluster and is used for cluster-related system management. Microsoft Windows Failover Clusters creates a group called the Cluster Group, and the cluster alias is the network name of this group. Oracle Fail Safe is a resource in the Cluster Group, making it highly available and ensuring that Oracle Fail Safe is always available to coordinate Oracle Fail Safe processing on all cluster nodes.

In an Oracle Fail Safe environment, the cluster alias is used only for system management. Oracle Fail Safe Manager interacts with the cluster components and Microsoft Windows Failover Clusters using the cluster alias.

To add a cluster to Oracle Fail Safe Manager cluster list, perform the following steps:

1. Select Add Cluster from the Actions menu in the right pane of the Oracle Fail Safe Manager page.

2. Enter the network name of the cluster in the Cluster Alias field as shown in Figure 2-5.

3. Optionally, select Connect using different credentials.

   By default, Oracle Fail Safe Manager connects to the cluster using the credentials for your current Windows login session. To use another user's credentials, perform the following steps:

   1. Select the Connect using different credentials option.

   2. A Windows Security Cluster Credentials dialog box opens, in which you can enter new credentials for administering the cluster. Enter the user name and password in the fields provided to continue.

   3. To save the credentials, select the Remember my credentials option and click OK. The credentials are saved in the Windows credentials cache. The next time you connect to the cluster, Oracle Fail Safe Manager checks for saved credentials for that cluster and uses them to connect.

See Also:

Oracle Fail Safe Tutorial for step-by-step instructions on adding a cluster to the Oracle Fail Safe Manager cluster list and connecting to a cluster

Figure 2-5 Cluster Alias in Add Cluster to Tree Dialog Box

Client applications do not use the cluster alias when communicating with a cluster resource. Rather, clients use one of the network names of the group that contains that resource.

About Failover

The process of taking a group offline on one node and bringing it back online on another node is called failover. After a failover occurs, resources in the group are accessible as long as one of the cluster nodes that is configured to run those resources is available. Windows Failover Cluster continually monitors the state of the cluster nodes and the resources in the cluster.

A failover can be unplanned or planned:

- An unplanned failover occurs automatically when the cluster software detects a node or resource failure.

- A planned failover is a manual operation that you use when you must perform such functions as load balancing or software upgrades.

The following sections describe these types of failover in more detail.

Unplanned Failover

There are two types of unplanned group failovers, which can occur due to one of the following:

- Failure of a resource configured for high availability

- Failure or unavailability of a cluster node

Unplanned Failover Due to a Resource Failure

An unplanned failover due to a resource failure is detected and performed as follows:

-

The cluster software detects that a resource has failed.

To detect a resource failure, the cluster software periodically queries the resource (through the resource DLL) to see if it is up and running. See Detecting a Resource Failure for more information.

-

The cluster software implements the resource restart policy. The restart policy states whether or not the cluster software must attempt to restart the resource on the current node, and if so, how many attempts within a given time period must be made to restart it. For example, the resource restart policy may specify that Oracle Fail Safe must attempt to restart the resource three times in 900 seconds.

If the resource is restarted, then the cluster software resumes monitoring the software (Step 1) and failover is avoided.

-

If the resource is not, or cannot be, restarted on the current node, then the cluster software applies the resource failover policy.

The resource failover policy determines whether or not the resource failure must result in a group failover. If the resource failover policy states that the group must not fail over, then the resource is left in the failed state and failover does not occur.

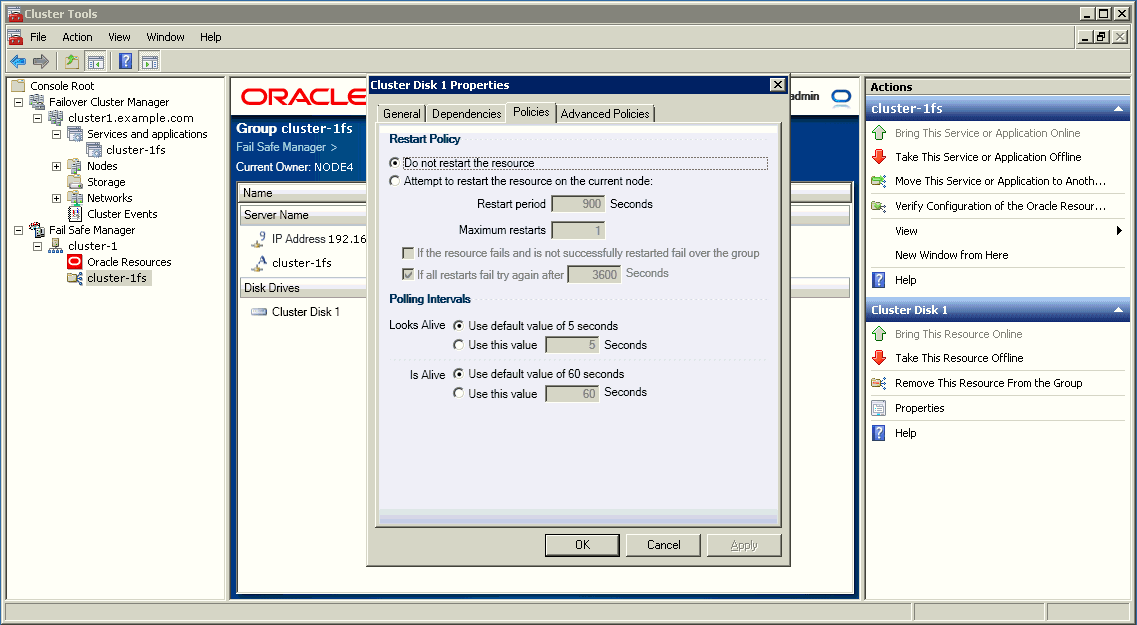

Figure 2-6 shows the property page on which you can view or modify the resource restart and failover policies.

If the resource failover policy states that the group must fail over if a resource is not (or cannot be) restarted, then the group fails over to another node. The node to which the group fails over is determined by which nodes are running, the resource's possible owner nodes list, and the group's preferred owner nodes list. See Resource Possible Owner Nodes List for more information about the resource possible owner nodes list, and see About Group Failover and the Preferred Owner Nodes List for more information about the group preferred owner nodes list.

-

Once a group has failed over, the group failover policy is applied. The group failover policy specifies the number of times during a given time period that the cluster software must allow the group to fail over before that group is taken offline. The group failover policy lets you prevent a group from repeatedly failing over. See About Group Failover Policy for more information about the group failover policy.

-

The failback policy determines if the resources and the group to which they belong are returned to a given node if that node is taken offline (either due to a failure or an intentional restart) and then placed back online. See About Failback for information about failback.

In Figure 2-6, Virtual Server A is failing over to Node B due to a failure of one of the resources in Group 1.

Unplanned Failover Due to Node Failure or Unavailability

An unplanned failover that occurs because a cluster node becomes unavailable is performed as described in the following list:

1. The cluster software detects that a cluster node is no longer available.

   To detect node failure or unavailability, the cluster software periodically queries the nodes in the cluster (using the private interconnect).

2. The groups on the failed or unavailable node fail over to one or more other nodes as determined by the available nodes in the cluster, each group's preferred owner nodes list, and the possible owner nodes list of the resources in each group. See Resource Possible Owner Nodes List for more information about the resource possible owner nodes list, and see About Group Failover and the Preferred Owner Nodes List for more information about the group preferred owner nodes list.

3. Once a group has failed over, the group failover policy is applied. The group failover policy specifies the number of times during a given time period that the cluster software must allow the group to fail over before that group is taken offline. See About Group Failover Policy for more information about the group failover policy.

4. The failback policy determines if the resources and the groups to which they belong are moved to a node when it becomes available once more. See About Failback for information about failback.

Figure 2-7 shows Group 1 failing over when Node A fails. Client applications (connected to the failed server) must reconnect to the server after failover occurs. If the application is performing updates to an Oracle Database and uncommitted database transactions are in progress when a failure occurs, the transactions are rolled back.

Note:

Steps 3 and 4 in this section are the same as steps 4 and 5 in Unplanned Failover Due to a Resource Failure. Once a failover begins, the process is the same, regardless of whether the failover was caused by a failed resource or a failed node.

Planned Group Failover

A planned group failover is the process of intentionally taking client applications and cluster resources offline on one node and bringing them back online on another node. This lets administrators perform routine maintenance tasks (such as hardware and software upgrades) on one cluster node while users continue to work on another node. Besides performing maintenance tasks, planned failover helps to balance the load across the nodes in the cluster. In other words, use planned failover to move a group from one node to another. In fact, to implement a planned failover, perform a move group operation in Oracle Fail Safe Manager (see the online help in Oracle Fail Safe Manager for instructions).

During a planned failover, Oracle Fail Safe works with Microsoft Windows Failover Clusters to efficiently move the group from one node to another. Client connections are lost and clients must manually reconnect at the virtual server address of the application, unless you have configured transparent application failover (see Configuring Transparent Application Failover (TAF) for information about transparent application failover). After the group has moved, you can perform the upgrade at your own pace, because clients continue to work on another cluster node while the original node is offline. (If a group contains an Oracle Database, then the database is checkpointed prior to any planned failover to ensure rapid database recovery on the new node.)

Group and Resource Policies That Affect Failover

Values for the various resource and group failover policies are set to default values when you create a group or add an Oracle resource to a group using Oracle Fail Safe Manager and Microsoft Windows Failover Cluster Manager. However, you can reset the values in these policies with the Oracle Resource Properties page of Oracle Fail Safe Manager and the Group Failover Properties page of Microsoft Windows Failover Cluster Manager. You can set values for the group failback policy at group creation time or later, using the Group Failover Properties page and selecting the Allow Failback option.

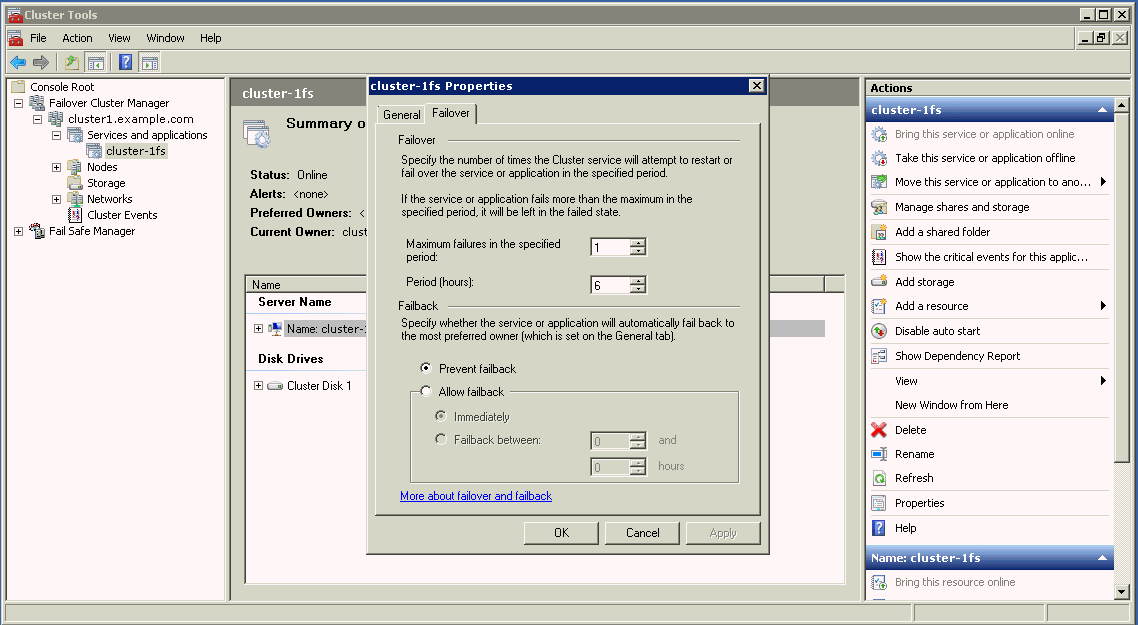

Figure 2-8 shows the page for setting group failover policies. To access this page, select the group of interest in Microsoft Windows Failover Cluster Manager tree view, then select the Properties action from the Actions menu on the right pane of the screen. Then select the Failover tab in the Properties page.

Figure 2-9 shows the page for setting Oracle resource policies. To access this page, either select the Properties action menu from the right pane of the screen or select Properties from the Action item in the menu bar. Then select the Policies tab in the Oracle resource Properties page.

Figure 2-9 Resource Policies Property Page

Detecting a Resource Failure

All resources that have been configured for high availability are monitored for their status by the cluster software. Resource failure is detected based on three values:

- Pending timeout value

  The pending timeout value specifies how long the cluster software must wait for a resource in a pending state to come online (or offline) before considering that resource to have failed. By default, this value is 180 seconds.

- Is Alive interval

  The Is Alive interval specifies how frequently the cluster software must check the state of the resource. Either use the default value for the resource type or specify a number (in seconds). This check is more thorough, but uses more system resources than the check performed during a Looks Alive interval.

- Looks Alive interval

  The Looks Alive interval specifies how frequently the cluster software must check the registered state of the resource to determine if the resource appears to be active. Either use the default value for the resource type or specify a number (in seconds). This check is less thorough, but also uses fewer system resources, than the check performed during an Is Alive interval.
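To show how the two polling intervals interleave, the following Python sketch builds a check schedule. The function name and the interval values are hypothetical, and the rule that a thorough Is Alive check replaces a Looks Alive check falling at the same instant is a simplifying assumption, not documented cluster behavior.

```python
def check_schedule(duration, looks_alive_interval, is_alive_interval):
    """List (second, check_kind) pairs for the first `duration` seconds."""
    schedule = []
    for second in range(1, duration + 1):
        if second % is_alive_interval == 0:
            # Thorough check; assumed to supersede a coinciding Looks Alive check.
            schedule.append((second, "is_alive"))
        elif second % looks_alive_interval == 0:
            # Cheap check of the registered state of the resource.
            schedule.append((second, "looks_alive"))
    return schedule


# Hypothetical intervals: Looks Alive every 2 seconds, Is Alive every 6 seconds.
schedule = check_schedule(duration=12, looks_alive_interval=2, is_alive_interval=6)
```

A shorter Looks Alive interval gives frequent, inexpensive checks, while the longer Is Alive interval limits how often the costlier check runs.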

About Resource Restart Policy

Once it is determined that a resource has failed, the cluster software applies the restart policy for the resource. The resource restart policy provides two options, as shown in Figure 2-9:

- The cluster software must not attempt to restart the resource on the current node. Instead, it must immediately apply the resource failover policy.

- The cluster software must attempt to restart the resource on the current node a specified number of times within a given time period. If the resource cannot be restarted, then the cluster software must apply the resource failover policy.

About Resource Failover Policy

The resource failover policy determines whether or not the group containing the resource must fail over if the resource is not (or cannot be) restarted on the current node. If the policy states that the group containing the failed resource must not fail over, then the resource is left in the failed state on the current node. (The group may eventually fail over anyway; if another resource in the group has a policy that states that the group containing the failed resource must fail over, then it will.) If the policy states that the group containing the failed resource must fail over, then the group containing the failed resource fails over to another cluster node as determined by the group preferred owner nodes list. See About Group Failover and the Preferred Owner Nodes List and Group Failback and the Preferred Owner Nodes List for a description of the preferred owner nodes list.
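The way the restart policy and the resource failover policy combine can be sketched as a small decision function. This Python example is a simplified model: the names are hypothetical, the time window for restart attempts is ignored, and the `try_restart` callback stands in for whatever the resource DLL actually does.

```python
from enum import Enum
from typing import Callable


class Outcome(Enum):
    RESTARTED = "restarted on current node"
    LEFT_FAILED = "left in failed state"
    GROUP_FAILOVER = "group fails over"


def handle_resource_failure(
    attempt_restart: bool,
    max_restarts: int,
    try_restart: Callable[[], bool],
    failover_group: bool,
) -> Outcome:
    # Restart policy: optionally retry on the current node a limited number of times.
    if attempt_restart:
        for _ in range(max_restarts):
            if try_restart():
                return Outcome.RESTARTED
    # Resource failover policy: either fail the group over or leave the resource failed.
    return Outcome.GROUP_FAILOVER if failover_group else Outcome.LEFT_FAILED


attempts = []


def always_fails() -> bool:
    attempts.append(1)  # record each restart attempt
    return False


outcome = handle_resource_failure(True, 3, always_fails, failover_group=True)
```

With three failed restart attempts and a failover policy that allows failover, the function reports that the group fails over; the choice of target node is a separate decision governed by the owner node lists.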

Resource Possible Owner Nodes List

The possible owner nodes list consists of all nodes on which a given resource is permitted to run. Some of the options are:

- The Advanced Policies property tab of the resource displays the possible owner nodes.

- To change the possible owner nodes, use Microsoft Windows Failover Cluster Manager.

- Run the Validate group action after changing the possible owner nodes.

Figure 2-10, Figure 2-11, and Figure 2-12 are examples of the Group property pages in Microsoft Windows Failover Cluster Manager.

Note:

If you select the Validate group action, then Oracle Fail Safe checks that the resources in the specified group are configured to run on each node that is a possible owner for the group. If it finds a possible owner node where the resources in the group are not configured to run, then Oracle Fail Safe configures them for you.

Therefore, Oracle strongly recommends you select the Validate group command for each group for which the new node is listed as a possible owner. Validating the Configuration of Oracle Resources describes the Validate group action.

Figure 2-10 Preferred Owner Nodes Property Page

Figure 2-11 Preferred Owner Nodes Property Page

Figure 2-12 Possible Owner Nodes Property Page

About Group Failover Policy

If the resource failover policy states that the group containing the resource must fail over if the resource cannot be restarted on the current node, then the group fails over and the group failover policy is applied. Similarly, if a node becomes unavailable, then the groups on that node fail over and the group failover policy is applied.

The group failover policy specifies the number of times during a given time period that the cluster software must allow the group to fail over before that group is taken offline. The failover policy provides a means to prevent a group from failing over repeatedly.

The group failover policy consists of a failover threshold and a failover period:

- The failover threshold specifies the maximum number of times failover can occur (during the failover period) before the cluster software stops attempting to fail over the group.

- The failover period is the time during which the cluster software counts the number of times a failover occurs. If the frequency of failover is greater than that specified for the failover threshold during the period specified for the failover period, then the cluster software stops attempting to fail over the group.

For example, if the failover threshold is 3 and the failover period is 5, then the cluster software allows the group to fail over 3 times within 5 hours before discontinuing failovers for that group.

When the first failover occurs, a timer to measure the failover period is set to 0 and a counter to measure the number of failovers is set to 1. The timer is not reset to 0 when the failover period expires. Instead, the timer is reset to 0 when the first failover occurs after the failover period has expired.

For example, assume again that the failover period is 5 hours and the failover threshold is 3. As shown in Figure 2-13, when the first group failover occurs at point A, the timer is set to 0. Assume a second group failover occurs 4.5 hours later at point B, and the third group failover occurs at point C. Because the failover period has been exceeded when the third group failover occurs (at point C), group failovers are allowed to continue, the timer is reset to 0, and the failover counter is reset to 1.

Assume that another failover occurs at point D (after 7 total hours have elapsed since point A, and 2.5 hours have elapsed since point B). You may expect that failovers will be discontinued. The failovers at points B, C, and D have occurred within a 5-hour timeframe. However, because the timer for measuring the failover period was reset to 0 at point C, the failover threshold has not been exceeded, and the cluster software allows the group to fail over.

Assume that another failover occurs at point E. When a problem that ordinarily results in a failover occurs at point F, the cluster software does not fail over the group. Three failovers have occurred during the 5-hour period that has passed since the timer was reset to 0 at point C. The cluster software leaves the group on the current node in a failed state.

Figure 2-13 Failover Threshold and Failover Period Timeline

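The timer and counter behavior in this example can be reproduced with a short simulation. The Python sketch below is a model of the rules as described in this section, not the cluster software's implementation. The class name is hypothetical, and the times for points C, E, and F (6, 8, and 9 hours) are assumed values chosen to be consistent with the narrative; only the times for A, B, and D are given in the text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GroupFailoverPolicy:
    threshold: int                        # maximum failovers allowed per period
    period_hours: float                   # length of the failover period
    window_start: Optional[float] = None  # time at which the timer was last set to 0
    count: int = 0                        # failovers counted since the timer was set

    def request_failover(self, now_hours: float) -> bool:
        """Return True if the group may fail over at time `now_hours`."""
        expired = (
            self.window_start is None
            or now_hours - self.window_start >= self.period_hours
        )
        if expired:
            # First failover ever, or first failover after the period expired:
            # the timer restarts and the counter is set to 1.
            self.window_start = now_hours
            self.count = 1
            return True
        if self.count >= self.threshold:
            # Threshold reached within the period: the group stays in a failed state.
            return False
        self.count += 1
        return True


policy = GroupFailoverPolicy(threshold=3, period_hours=5)
events = {"A": 0.0, "B": 4.5, "C": 6.0, "D": 7.0, "E": 8.0, "F": 9.0}
results = {point: policy.request_failover(hours) for point, hours in events.items()}
```

In this run, the failovers at points A through E are allowed and the request at point F is refused, because three failovers (C, D, and E) have already occurred since the timer was reset at point C.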

Effect of Resource Restart Policy and Group Failover Policy on Failover

Both the resource restart policy and the failover policy of the group containing the resource affect the failover behavior of a group.

For example, suppose the Northeast database is in a group called Customers, and you specify the following:

- On the Policies property page for the Northeast database:

  - Attempt to restart the database on the current node 3 times within 600 seconds (10 minutes)

  - If the resource fails and cannot be restarted, fail over the group

- On the Failover property page for the Customers group:

  - The failover threshold for the group containing the resource is 20

  - The failover period for the group containing the resource is 1 hour

Assume a database failure occurs. Oracle Fail Safe attempts to restart the database instance on the current node. The attempt to restart the database instance fails three times within a 10-minute period. Therefore, the Customers group fails over to another node.

On that node, Oracle Fail Safe attempts to restart the database instance and fails three times within a 10-minute period, so the Customers group fails over again. Oracle Fail Safe continues its attempts to restart the database instance and the Customers group continues to fail over until the database instance restarts or the group has failed over 20 times within a 1-hour period. If the database instance cannot be restarted, and the group fails over fewer than 20 times within a 1-hour time period, then the Customers group fails over repeatedly. In such a case, consider reducing the failover threshold to limit repeated failovers.

About Group Failover and the Preferred Owner Nodes List

When you create a group, you can create a preferred owner nodes list for both group failover and failback. (When the cluster contains only two nodes, you specify this list for failback only.) Create an ordered list of nodes to indicate the preference you have for each node in the list to own that group.

For example, in a four-node cluster, you may specify the following preferred owner nodes list for a group containing a database:

-

Node 1

-

Node 4

-

Node 3

This list indicates that when all four nodes are running, you prefer that the group run on Node 1. If Node 1 is not available, then your second choice is Node 4. If neither Node 1 nor Node 4 is available, then your next choice is Node 3. Node 2 has been omitted from the preferred owner nodes list. However, if it is the only choice available to the cluster software (because Node 1, Node 4, and Node 3 have all failed), then the group fails over to Node 2. (This happens even if Node 2 is not a possible owner for all resources in the group. In such a case, the group fails over, but remains in a failed state.)

When a failover occurs, the cluster software uses the preferred owner nodes list to determine the node to which it must fail over the group. The cluster software fails over the group to the top-most node in the list that is up and running and is a possible owner node for the group. Determining the Failover Node for a Group describes in more detail how the cluster software determines the node to which a group fails over.

See Group Failback and the Preferred Owner Nodes List for information about how the group preferred owner nodes list affects failback.

Determining the Failover Node for a Group

The node to which a group fails over is determined by the following three lists:

-

List of available cluster nodes

The list of available cluster nodes consists of all nodes that are running when a group failover occurs. For example, suppose you have a four-node cluster. If one node is down when a group fails over, then the list of available cluster nodes is reduced to three.

-

List of possible owner nodes for each resource in the group (See Resource Possible Owner Nodes List.)

-

List of preferred owner nodes for the group containing the resources (See About Group Failover and the Preferred Owner Nodes List.)

The cluster software determines the nodes to which your group can possibly fail over by finding the intersection of the available cluster nodes and the common set of possible owners of all resources in the group. For example, assume you have a four-node cluster and a group on Node 3 called Test_Group. You have specified the possible owners for the resources in Test_Group as shown in Table 2-1.

Table 2-1 Example of Possible Owners for Resources in Group Test_Group

| Node | Possible Owners for Resource 1 | Possible Owners for Resource 2 | Possible Owners for Resource 3 |
|---|---|---|---|
| Node 1 | Yes | Yes | Yes |
| Node 2 | Yes | No | Yes |
| Node 3 | Yes | Yes | Yes |
| Node 4 | Yes | Yes | Yes |

By reviewing Table 2-1, you see that the intersection of possible owners for all three resources is:

-

Node 1

-

Node 3

-

Node 4

Assume that Node 3 (where Test_Group currently resides) fails. The available nodes list is now:

-

Node 1

-

Node 2

-

Node 4

To determine the nodes to which Test_Group can fail over, the cluster software finds the intersection of the possible owner nodes list for all resources in the group and the available nodes list. In this example, the intersection of these two lists is Node 1 and Node 4.

To determine the node to which it must fail over Test_Group, the cluster software uses the preferred owner nodes list of the group. Assume that you have set the preferred owner nodes list for Test_Group to be:

-

Node 3

-

Node 4

-

Node 1

Because Node 3 has failed, the cluster software fails over Test_Group to Node 4. If neither Node 3 nor Node 4 is available, then the cluster software fails over Test_Group to Node 1. If Nodes 1, 3, and 4 are not available, then the group fails over to Node 2. However, because Node 2 is not a possible owner for all of the resources in Test_Group, the group remains in a failed state on Node 2.
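
The selection described in this section can be sketched with set operations. This is a minimal illustration under stated assumptions, not the cluster software's actual algorithm: the function name and data layout are hypothetical, and the tie-breaking order used when no preferred node qualifies (lowest node number first) is an assumption made only so that the sketch is deterministic.

```python
def choose_failover_node(available, possible_owners, preferred):
    """Return (node, can_run) for a group failover, or (None, False).

    available       -- set of nodes that are currently running
    possible_owners -- dict mapping each resource to its set of possible owners
    preferred       -- ordered preferred owner nodes list for the group
    """
    owner_sets = [set(owners) for owners in possible_owners.values()]
    # Nodes that are possible owners of every resource in the group.
    common = set.intersection(*owner_sets) if owner_sets else set(available)
    candidates = common & set(available)

    # Take the top-most preferred node that is also a candidate.
    for node in preferred:
        if node in candidates:
            return node, True
    # No preferred node qualifies: fall back to any other candidate
    # (ordering here is an assumption, not documented behavior).
    if candidates:
        return min(candidates), True
    # Only nodes that cannot own every resource remain: the group
    # fails over but stays in a failed state.
    if available:
        return min(available), False
    return None, False


# Table 2-1 and the Test_Group preferred owner nodes list.
test_group_owners = {
    "Resource 1": {1, 2, 3, 4},
    "Resource 2": {1, 3, 4},
    "Resource 3": {1, 2, 3, 4},
}
test_group_preferred = [3, 4, 1]
```

For example, `choose_failover_node({1, 2, 4}, test_group_owners, test_group_preferred)` models the failure of Node 3, and passing `{2}` as the available set models the case in which only Node 2 is still running.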

About Failback

Failback is the process of automatically returning a group of cluster resources from the failover node to a preferred owner node after the preferred owner node returns to operational status. A preferred owner node is a node on which you want a group to reside whenever that node is available.

You can set a failback policy that specifies whether and when groups fail back to a preferred owner node from the failover node. For example, you can set a group to fail back immediately or between specific hours that you choose. Alternatively, you can set the failback policy so that a group does not fail back, but continues to run on the node where it currently resides. Figure 2-14 shows the property page for setting the failback policy for a group.

Figure 2-14 Group Failback Policy Property Page
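
The three failback choices described above (immediately, between specific hours, or never) can be sketched as a simple check. This is an illustration only: the policy names "prevent", "immediate", and "window" are hypothetical labels, hours are assumed to be whole numbers from 0 to 23, and treating both ends of the window as inclusive is an assumption rather than documented behavior.

```python
def failback_allowed(policy, hour, window=None):
    """Return True if a group may fail back at the given hour (0-23)."""
    if policy == "prevent":
        return False              # group stays where it currently resides
    if policy == "immediate":
        return True               # fail back as soon as the node returns
    if policy == "window":
        start, end = window
        if start <= end:
            return start <= hour <= end
        # Window wraps past midnight, for example 22 to 4.
        return hour >= start or hour <= end
    raise ValueError(f"unknown policy: {policy}")
```

A window that wraps past midnight, such as `(22, 4)`, is handled by the final comparison, which is convenient when failback is restricted to overnight hours.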

Group Failback and the Preferred Owner Nodes List

When you create a group on a cluster, you can create a preferred owner nodes list for group failover and failback. When the cluster contains only two nodes, you specify this list for failback only. You create an ordered list of nodes that indicates the nodes where you prefer a group to run. When a previously unavailable node comes back online, the cluster software reads the preferred owner nodes list for each group on the cluster to determine whether the node that just came online is a preferred owner node for any group on the cluster. If the node that just came online is higher on the preferred owner nodes list than the node on which the group currently resides, then the group fails back to the node that just came back online.

For example, in a four-node cluster, you may specify the following preferred owner nodes list for the group called My_Group:

-

Node 1

-

Node 4

-

Node 3

Assume that My_Group has failed over to, and is currently running on, Node 4 because Node 1 had been taken offline. Now Node 1 is back online. The cluster software reads the preferred owner nodes list for My_Group (and all other groups on the cluster); it finds that the preferred owner node for My_Group is Node 1. It fails back My_Group to Node 1, if failback is enabled.

If My_Group is currently running on Node 3 (because neither Node 1 nor Node 4 is available) and Node 4 comes back online, then My_Group fails back to Node 4 if failback is enabled. Later, when Node 1 becomes available, My_Group fails back once more, this time to Node 1. When you specify a preferred owner nodes list, be careful not to create a situation where failback happens frequently and unnecessarily. For most applications, two nodes in the preferred owner nodes list are sufficient.

Failback can produce unexpected results when a group has been manually moved to a node. Assume all nodes are available and My_Group is currently running on Node 3 (because you moved it there with a move group operation). If Node 4 is restarted, then My_Group fails back to Node 4, even though Node 1 (the highest node in the preferred owner nodes list of My_Group) is also running.

When a node comes back online, the cluster software checks to see if the node that just came back online is higher on the preferred owner nodes list than the node where each group currently resides. If so, all such groups are moved to the node that just came back online.

See About Group Failover and the Preferred Owner Nodes List for information about how the group preferred owner nodes list affects failover.
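
The failback rule in this section compares only two positions in the preferred owner nodes list: that of the node that just came back online and that of the node where the group currently resides. A minimal sketch follows. The function name and parameters are hypothetical, and the handling of a group that currently resides on a node absent from the list is an assumption.

```python
def should_fail_back(preferred, current_node, returning_node,
                     failback_enabled=True):
    """Return True if the group moves to the node that just came online."""
    if not failback_enabled or returning_node not in preferred:
        return False
    if current_node not in preferred:
        # Assumption: a node missing from the list ranks below every listed node.
        return True
    # A lower index means a higher position in the ordered list.
    return preferred.index(returning_node) < preferred.index(current_node)


my_group_preferred = [1, 4, 3]
```

Because the check ignores every other running node, `should_fail_back(my_group_preferred, current_node=3, returning_node=4)` is true even when Node 1 is also running, which reproduces the manual-move scenario described above.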

Client Reconnection After Failover

Node failures affect only those users and applications:

-

That are directly connected to applications hosted by the failed node

-

Whose transactions were being handled when the node failed

Typically, users and applications connected to the failed node lose the connection and must reconnect to the failover node (through the node-independent network name) to continue processing. With a database, any transactions that were in progress and uncommitted at the time of the failure are rolled back. Client applications that are configured for transparent application failover experience only a brief interruption in service; to the client applications, it appears that the node was quickly restarted. The service is automatically restarted on the failover node, without operator intervention.

See Configuring Transparent Application Failover (TAF) for information about transparent application failover.
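
For clients that are not configured for transparent application failover, the reconnect-and-retry behavior described above can be sketched as follows. This is a generic illustration, not an Oracle client API: `connect` and `transaction` are hypothetical callables supplied by the application, `connect` is assumed to use the node-independent network name and to raise `ConnectionError` while the group is still failing over, and the transaction is assumed to be safe to run again from the beginning.

```python
import time


def run_transaction(connect, transaction, max_attempts=5,
                    delay_seconds=1.0, sleep=time.sleep):
    """Run transaction(conn), reconnecting and retrying if the connection drops."""
    for attempt in range(1, max_attempts + 1):
        try:
            # Always connect through the same node-independent name;
            # the client does not need to know which node owns the group.
            conn = connect()
            # Uncommitted work was rolled back, so rerun the whole transaction.
            return transaction(conn)
        except ConnectionError:
            if attempt == max_attempts:
                raise
            # Give the cluster time to bring the group online elsewhere.
            sleep(delay_seconds)
```

The `sleep` parameter exists only so that the delay can be replaced in tests. Suitable values for `max_attempts` and `delay_seconds` depend on how long a failover takes in your environment.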