13 Developing WebRTC-Enabled iOS Applications

This chapter shows how you can use the Oracle Communications WebRTC Session Controller iOS application programming interface (API) library to develop WebRTC-enabled iOS applications. The library is delivered in a WebRTC Session Controller SDK framework.

About the iOS SDK

The WebRTC Session Controller iOS SDK enables you to integrate your iOS applications with core WebRTC Session Controller functions. You can use the iOS SDK to implement the following features:

-

Audio calls between an iOS application and any other WebRTC-enabled application, a Session Initiation Protocol (SIP) endpoint, or a public switched telephone network endpoint using a SIP trunk.

-

Video calls between an iOS application and any other WebRTC-enabled application, with suitable video conferencing support.

-

Seamless upgrading of an audio call to a video call and downgrading of a video call to an audio call.

-

Peer-to-peer data transfers between an iOS application and any other WebRTC-enabled application.

-

Support for client notifications when the device goes into hibernation. When the server sends a notification to wake up the client, the application can reestablish the connection and rehydrate the session.

-

Support for Interactive Connectivity Establishment (ICE) server configuration, including support for Trickle ICE.

-

Transparent session reconnection following network connectivity interruption.

-

Session rehydration following a device handover.

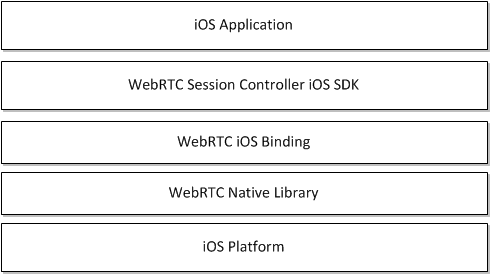

The WebRTC Session Controller iOS SDK is built upon several additional libraries and modules as shown in Figure 13-1.

The WebRTC iOS binding gives the WebRTC Session Controller iOS SDK access to the native WebRTC library, which itself provides WebRTC support. The SocketRocket WebSocket library provides the WebSocket access required to communicate with WebRTC Session Controller.

For additional information about any of the APIs used in this document, see Oracle Communications WebRTC Session Controller iOS API Reference.

Supported Architectures

The WebRTC Session Controller iOS SDK is compliant with the iOS 9 (arm64) mobile operating system.

About the iOS SDK WebRTC Call Workflow

The general workflow for using the WebRTC Session Controller iOS SDK to place a call is:

-

Authenticate against WebRTC Session Controller using the WSCHttpContext class. You initialize the WSCHttpContext with necessary HTTP headers and optional SSLContextRef in the following manner:

-

Send an HTTP GET request to the login URI of WebRTC Session Controller.

-

Complete the authentication process based on your authentication scheme.

-

Proceed with the WebSocket handshake on the established authentication context.

-

Establish a WebRTC Session Controller session using the WSCSession class.

Two protocols must be implemented:

-

WSCSessionConnectionDelegate: A delegate that reports on the success or failure of the session creation.

-

WSCSessionObserverDelegate: A delegate that signals on various session state changes, including CLOSED, CONNECTED, FAILED, and others.

-

Once a session is established, create a WSCCallPackage class which manages WSCCall objects in the WSCSession.

-

Create a WSCCall using the WSCCallPackage createCall method with a callee ID as its argument, for example, alice@example.com.

-

To monitor call events such as ACCEPTED, REJECTED, RECEIVED, implement a WSCCallObserver protocol which attaches to the WSCCall.

-

To define the nature of the WebRTC call, create a WSCCallConfig object with one of the following settings:

-

Bi-directional or mono-directional audio or video.

-

Bi-directional or mono-directional audio and video.

-

Message exchanges containing raw data.

-

Create and configure an RTCPeerConnectionFactory object and start the WSCCall using the start method.

-

When the call is complete, terminate the call using the end method of the WSCCall object.

Prerequisites

Before continuing, make sure you thoroughly review and understand the JavaScript API discussed in the JavaScript chapters of this guide.

The WebRTC Session Controller iOS SDK is closely aligned in concept and functionality with the JavaScript SDK to ensure a seamless transition.

In addition to an understanding of the WebRTC Session Controller JavaScript API, you are expected to be familiar with:

-

Objective-C and general object-oriented programming concepts.

-

General iOS SDK programming concepts including event handling, delegates, and views.

-

The functionality and use of Xcode.

For an introduction to programming iOS applications using Xcode and for more background on all areas of iOS application development, see:

https://developer.apple.com/library/ios/referencelibrary/GettingStarted/RoadMapiOS/WhereToGoFromHere.html#/apple_ref/doc/uid/TP40011343-CH12-SW1

iOS SDK System Requirements

To develop applications with the WebRTC Session Controller SDK, you must meet the following software and hardware requirements:

-

An installed and fully configured WebRTC Session Controller installation. See the Oracle Communications WebRTC Session Controller Installation Guide.

-

A Macintosh computer capable of running Xcode version 5.1 or later.

-

An actual iOS hardware device.

You can test the general flow and function of your iOS WebRTC Session Controller application using the iOS simulator. To utilize audio and video functionality fully, a physical iOS device such as an iPhone or an iPad is required.

About the Examples in This Chapter

In order to illustrate the functionality of the WebRTC Session Controller iOS SDK API, the examples and descriptions in this chapter are kept intentionally straightforward. The examples assume no pre-existing interface schemas except when necessary, and then, only with the barest minimum of code. For example, if a particular method requires arguments such as a user name, the code examples show a plain string userName such as "bob@example.com" being passed to the method. It is assumed that in a production application, you would interface with the contact manager of the iOS device.

Installing the iOS SDK

To install the WebRTC Session Controller iOS SDK, do the following:

-

Install Xcode from the Apple App Store:

https://developer.apple.com/library/ios/referencelibrary/GettingStarted/RoadMapiOS/index.html#/apple_ref/doc/uid/TP40011343-CH2-SW1

Note:

The WebRTC Session Controller iOS SDK requires Xcode version 6 or higher.

-

Create an iOS project using Xcode, adding any required targets:

https://developer.apple.com/library/ios/referencelibrary/GettingStarted/RoadMapiOS/FirstTutorial.html#/apple_ref/doc/uid/TP40011343-CH3-SW1

Note:

iOS version 7 is the minimum required by the WebRTC Session Controller iOS SDK for full functionality.

-

Download and extract the WebRTC Session Controller iOS SDK compressed (.zip) file. The archive contains three subfolders: debug, docs, and release.

-

The debug folder contains the debug frameworks.

-

The docs folder contains the iOS API Reference.

-

The release folder contains the release frameworks.

-

Add the WebRTC Session Controller SDK frameworks to your project.

-

Select your application target in the Xcode project navigator.

-

Select the Build Phases tab at the top of the editor pane.

-

Expand Link Binary With Libraries.

-

Depending on your requirements, drag the framework files from either release or debug to Link Binary with Libraries.

Note:

The webrtc folder contains a single library that supports iOS devices and the iOS simulator.

-

Import any other system frameworks you require. The following frameworks are recommended:

-

CFNetwork.framework: Zero-configuration networking services. For more information, see:

https://developer.apple.com/library/ios/documentation/CFNetwork/Reference/CFNetwork_Framework/index.html

-

Security.framework: General interfaces for protecting and controlling security access. For more information, see:

https://developer.apple.com/library/ios/documentation/Security/Reference/SecurityFrameworkReference/index.html

-

CoreMedia.framework: Interfaces for playing audio and video assets in an iOS application. For more information, see:

https://developer.apple.com/library/mac/documentation/CoreMedia/Reference/CoreMediaFramework/index.html

-

GLKit.framework: Library that facilitates and simplifies creating shader-based iOS applications (useful for video rendering). For more information, see:

https://developer.apple.com/library/ios/documentation/3DDrawing/Conceptual/OpenGLES_ProgrammingGuide/DrawingWithOpenGLES/DrawingWithOpenGLES.html

-

AVFoundation.framework: A framework that facilitates managing and playing audio and video assets in iOS applications. For more information, see:

https://developer.apple.com/library/ios/documentation/AudioVideo/Conceptual/AVFoundationPG/Articles/00_Introduction.html#/apple_ref/doc/uid/TP40010188

-

AudioToolbox.framework: A framework containing interfaces for audio playback, recording, and media stream parsing. For more information, see:

https://developer.apple.com/library/ios/documentation/MusicAudio/Reference/CAAudioTooboxRef/index.html#/apple_ref/doc/uid/TP40002089

-

libicucore.dylib: A Unicode support library.

-

libsqlite3.dylib: A library providing a SQLite interface. For more information, see:

https://developer.apple.com/technologies/ios/data-management.html

-

If you are targeting iOS version 8 or above, add the libstdc++.6.dylib.a framework to prevent linking errors.

Authenticating with WebRTC Session Controller

You use the WSCHttpContext class to set up an authentication context. The authentication context contains the necessary HTTP headers and SSLContext information, and is used when setting up a WSCSession.

Initialize a URL Object

Create an NSURL object using the URL of your WebRTC Session Controller endpoint.

Configure Authorization Headers

Configure authorization headers as required by your authentication scheme. The following example uses Basic authentication; OAuth and other authentication schemes are similarly configured.

Example 13-2 Initializing Basic Authentication Headers

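NSString *authType = @"Basic ";
NSString *username = @"username";
NSString *password = @"password";
// Basic authentication sends "username:password" Base64-encoded.
NSString *credentials = [NSString stringWithFormat:@"%@:%@", username, password];
NSData *credentialData = [credentials dataUsingEncoding:NSUTF8StringEncoding];
NSString *authString = [authType stringByAppendingString:
    [credentialData base64EncodedStringWithOptions:0]];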

Note:

If you are using Guest authentication, no headers are required.

Connect to the URL

With your authentication parameters configured, you can now connect to the WebRTC Session Controller URL, either synchronously using sendSynchronousRequest or asynchronously using NSURLRequest and NSURLConnection, in which case the error and response are returned in delegate methods.

Example 13-3 Connecting to the WebRTC Session Controller URL

NSHTTPURLResponse *response;
NSError *error;
NSMutableURLRequest *loginRequest = [NSMutableURLRequest requestWithURL:authUrl];
[loginRequest setValue:authString forHTTPHeaderField:@"Authorization"];
[NSURLConnection sendSynchronousRequest:loginRequest returningResponse:&response error:&error];
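The synchronous call blocks the calling thread, and NSURLConnection's sendSynchronousRequest has been deprecated since iOS 9. As a minimal sketch of an alternative, the same login request could be sent with NSURLSession. The helper names WSCSampleLoginRequest and WSCSampleSendLogin are assumptions made for illustration and are not part of the WebRTC Session Controller SDK.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: builds the GET request for the login URI and
// attaches the Authorization header value prepared earlier.
static NSMutableURLRequest *WSCSampleLoginRequest(NSURL *authUrl, NSString *authString) {
    NSMutableURLRequest *loginRequest = [NSMutableURLRequest requestWithURL:authUrl];
    [loginRequest setHTTPMethod:@"GET"];
    if (authString != nil) {
        // Guest authentication needs no header, so nil is allowed here.
        [loginRequest setValue:authString forHTTPHeaderField:@"Authorization"];
    }
    return loginRequest;
}

// Hypothetical helper: sends the request asynchronously and hands the
// HTTP response (or the error) to the caller's completion block.
static void WSCSampleSendLogin(NSURLRequest *loginRequest,
                               void (^completion)(NSHTTPURLResponse *response, NSError *error)) {
    NSURLSessionDataTask *task =
        [[NSURLSession sharedSession] dataTaskWithRequest:loginRequest
                                        completionHandler:^(NSData *data,
                                                            NSURLResponse *response,
                                                            NSError *error) {
            NSHTTPURLResponse *httpResponse = nil;
            if ([response isKindOfClass:[NSHTTPURLResponse class]]) {
                httpResponse = (NSHTTPURLResponse *)response;
            }
            completion(httpResponse, error);
        }];
    [task resume];
}
```

The completion block runs on a background queue, so any user interface updates made from it would need to be dispatched to the main queue.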

Configure the SSL Context

If you are using Secure Sockets Layer (SSL), configure the SSL context, using the SSLCreateContext method, depending upon whether the URL connection was successful. For more information about SSLCreateContext, see the following Apple developer content:

https://developer.apple.com/library/mac/documentation/Security/Reference/secureTransportRef/index.html#/apple_ref/c/func/SSLCreateContext

Example 13-4 Configuring the SSLContext

if (error) {
    // Handle an error..
    NSLog(@"The following error occurred: %@", error.description);
} else {
    // Create the httpContext builder...
    WSCHttpContextBuilder *builder = [WSCHttpContextBuilder create];
    // Configure the SSLContext if necessary...
    SSLContextRef sslContext = SSLCreateContext(NULL, kSSLClientSide, kSSLStreamType);
    // Copy the SSLContext configuration to the httpContext builder...
    [builder withSSLContextRef:&sslContext];
    ...
}

Retrieve the Response Headers from the Request

Depending upon the results of the authentication request, you retrieve the response headers from the URL request and copy the cookies to the httpContext builder.

Example 13-5 Retrieving the Response Headers from the URL Request

if (error) {
    // Handle an error..
    NSLog(@"The following error occurred: %@", error.description);
} else {
    // Create the httpContext builder before it is first used...
    WSCHttpContextBuilder *builder = [WSCHttpContextBuilder create];
    // Configure the SSLContext if necessary, from Example 13-4...
    SSLContextRef sslContext = SSLCreateContext(NULL, kSSLClientSide, kSSLStreamType);
    // Copy the SSLContext configuration to the httpContext builder...
    [builder withSSLContextRef:&sslContext];
    // Retrieve all the response headers...
    NSDictionary *respHeaders = [response allHeaderFields];
    // Copy all cookies from respHeaders to the httpContext builder.
    // Here key and headerValue stand for each cookie header's name and value...
    [builder withHeader:key value:headerValue];
    ...
}
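One possible way to obtain the cookie header name and value is Foundation's NSHTTPCookie class, which can parse the Set-Cookie response headers and produce the matching request header fields. The following is a minimal sketch under that assumption; the helper name WSCSampleCookieHeaders is hypothetical and not part of the SDK.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: extracts the cookies set by the login response and
// returns them as request header fields (normally a single "Cookie" entry).
static NSDictionary<NSString *, NSString *> *WSCSampleCookieHeaders(NSDictionary *respHeaders,
                                                                    NSURL *authUrl) {
    NSArray<NSHTTPCookie *> *cookies =
        [NSHTTPCookie cookiesWithResponseHeaderFields:respHeaders forURL:authUrl];
    return [NSHTTPCookie requestHeaderFieldsWithCookies:cookies];
}
```

Each key and value in the returned dictionary could then be passed to the builder's withHeader:value: method.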

Build the HTTP Context

Depending upon the results of the authentication request, you then build the WSCHttpContext using WSCHttpContextBuilder.

Example 13-6 Building the HttpContext

if (error) {
    // Handle an error..
    NSLog(@"The following error occurred: %@", error.description);
} else {
    // Create the httpContext builder...
    WSCHttpContextBuilder *builder = [WSCHttpContextBuilder create];
    // Configure the SSLContext if necessary, from Example 13-4...
    SSLContextRef sslContext = SSLCreateContext(NULL, kSSLClientSide, kSSLStreamType);
    // Copy the SSLContext configuration to the httpContext builder...
    [builder withSSLContextRef:&sslContext];
    // Retrieve all the response headers from Example 13-5...
    // Build the httpContext...
    WSCHttpContext *httpContext = [builder build];
    ...
}

Configure Interactive Connectivity Establishment (ICE)

If you have access to one or more STUN/TURN ICE servers, you can initialize the WSCIceServer class. For details on ICE, see "Managing Interactive Connectivity Establishment Interval".

Example 13-7 Configuring the WSCIceServer Class

WSCIceServer *iceServer1 = [[WSCIceServer alloc] initWithUrl:@"stun:stun-server:port"];
WSCIceServer *iceServer2 = [[WSCIceServer alloc] initWithUrl:@"turn:turn-server:port", @"admin", @"password"];
WSCIceServerConfig *iceServerConfig = [[WSCIceServerConfig alloc] initWithIceServers: iceServer1, iceServer2, nil];

Configuring Support for Notifications

Set up client notifications to enable your applications to operate without unduly affecting the battery life and data consumption of the associated mobile devices.

Whenever a user (for example, Bob) is not actively using your application, your application can hibernate the client session after informing the WebRTC Session Controller server. The WebSocket connecting your application to the WebRTC Session Controller server closes. During that hibernation period, if Bob needs to be alerted of an event (such as a call from Alice on the Call feature of your iOS application), the WebRTC Session Controller server sends a message (about the call invite) to the cloud messaging server.

The cloud messaging server uses a push notification, a short message that it delivers to the specific device (such as a mobile phone). This message contains the registration ID for the application and the payload. On being woken up on that device, your application reconnects with the server, uses the saved session ID to resurrect the session data, and handles the incoming event.

If no event occurs during the specified hibernation period and the period expires, there are no notifications to process and the WebRTC Session Controller server cleans up the session.

The preliminary configurations and registration actions that you perform to support client notifications in your applications provide the WebRTC Session Controller server and the cloud messaging provider the necessary information about the device, the APIs, the application, and so on. The client application running on the mobile device or browser retrieves a registration ID from its notification provider.

About the WebRTC Session Controller Notification Service

The WebRTC Session Controller Notification Service manages the external connectivity with the respective notification providers. It implements the Cloud Messaging Provider specific protocol such as Apple Push Notification service (APNs). The WebRTC Session Controller Notification Service ensures that all notification messages are transported to the appropriate notification providers.

The WebRTC Session Controller server constructs the payload in the push notification it sends by combining the message payload received from your application with the payload configured in the application settings or the application provider settings you provide to WebRTC Session Controller.

If you plan to use the WebRTC Session Controller server to communicate with the APNs system, then you must register it with Apple. See "The Notification Process Workflow for Your iOS Application".

About Employing Your Current Notification System

At this point, verify whether your current installation has an existing notification server that talks to the cloud messaging system and whether the installation supports applications for your users through this server.

If you currently have such a notification server successfully associated with a cloud messaging system, you can use the pre-existing notification system to send notifications using the REST interface. For more information, see the Oracle Communications WebRTC Session Controller Extension Developer's Guide.

How the Notification Process Works

In its simplest form, the notification process works in this manner:

-

Bob, a customer, accesses your application on his mobile device. For example, assume this is your iOS Audio Call application.

-

The client application running on the device/browser fetches a device token from its notification provider.

-

WebRTC Session Controller iOS SDK sends the information about the client device and the application settings to the WebRTC Session Controller server.

A WebSocket connection is opened.

-

When there is no activity on the part of the customer (Bob), your application goes into the background. Your application sends a message to the WebRTC Session Controller server informing the server of its intent to hibernate and specifies a time duration for the hibernation.

The WebSocket connection closes.

-

During the hibernation period an event occurs. For example, Alice makes a call to Bob on your iOS Audio Call application.

-

WebRTC Session Controller server receives this call request from Alice and checks the session state. Since the call invite request came during the time interval set as the hibernation period for that session, the WebRTC Session Controller server uses its notification service to send a notification to the APNs server.

-

The APNs server delivers the notification to your iOS Audio Call application on the mobile device.

-

On receiving this notification,

-

Your iOS application reconnects with the notification service using the last session-id and receives the incoming call.

-

WebRTC Session Controller iOS SDK once again establishes the connection to the WebRTC Session Controller server.

-

WebRTC Session Controller sends the appropriate notification to your application. The user interface logic in your application informs Bob appropriately.

-

Bob accepts the call.

-

Your application logic manages the call to its completion.

Note:

If the hibernation period ends with no event (such as the call from Alice to Bob), the WebRTC Session Controller server closes the session. The session ID and its data are destroyed. Your application cannot use that session ID to restore the data and must create another session.

Handling Multiple Sessions

If you have defined multiple applications in WebRTC Session Controller, your customer may have accessed more than one such application. As a result, there may be multiple WebRTC Session Controller sessions associated with the application.

In such a scenario where data for more than one session is involved, all of the associated session data is stored appropriately and can be retrieved by your application instances.

The Notification Process Workflow for Your iOS Application

The process workflow to support notifications in your iOS application is:

-

The prerequisites to using the notification service are complete. See "About the General Requirements to Provide Notifications".

-

Your application on the iOS device sends the registration_Id to the WebRTC Session Controller iOS SDK, which then sends it to the WebRTC Session Controller server and saves it locally for future use.

-

When a notification is to be sent, the WebRTC Session Controller server sends a message with the deviceToken to the appropriate notification provider (APNs).

Internally, the WebRTC Session Controller iOS SDK passes the device and operating system information about the client to the server to determine what features the client is able to support.

-

The notification provider (APNs) forwards the notification to the device.

-

When the notification is tapped on the device, your application is awakened. It re-establishes communication with the WebRTC Session Controller server and handles the event.

Note:

Apple allows a payload of up to 256 bytes on systems earlier than iOS 8 and up to 2 kilobytes on iOS 8 and later.

Additionally, the notifications can do one of the following:

-

Display a short text message

-

Play a brief sound

-

Display a number in a badge on the application icon

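Because the payload limit depends on the iOS version, it can be useful to check the encoded size of a payload before it is configured. The following is a minimal sketch; the helper name WSCSamplePayloadFits and the constants are hypothetical, and the sketch assumes that 2 kilobytes means 2048 bytes and that the limit applies to the JSON-encoded payload.

```objc
#import <Foundation/Foundation.h>

// Payload limits quoted in the note above, in bytes.
// Assumption: "2 kilobytes" is taken to mean 2048 bytes.
static const NSUInteger kSampleLegacyPayloadLimit = 256;   // before iOS 8
static const NSUInteger kSamplePayloadLimit = 2048;        // iOS 8 and later

// Hypothetical helper: serializes the payload to JSON and reports whether
// its encoded size is within the limit for the target system.
static BOOL WSCSamplePayloadFits(NSDictionary *payload, BOOL targetsIOS8OrLater) {
    NSError *error = nil;
    NSData *json = [NSJSONSerialization dataWithJSONObject:payload options:0 error:&error];
    if (json == nil) {
        return NO; // The payload could not be serialized.
    }
    NSUInteger limit = targetsIOS8OrLater ? kSamplePayloadLimit : kSampleLegacyPayloadLimit;
    return json.length <= limit;
}
```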

About the WebRTC Session Controller APIs for Client Notifications

The WebRTC Session Controller iOS API objects that enable your applications to handle notifications related to session hibernation are:

-

hibernate

The hibernate method of the WSCSession object sends a hibernate request to the WebRTC Session Controller server.

-

WSCSessionStateHibernated

The enum value of the WSCSessionState object indicating that the session is in hibernation.

-

WSCHibernateParams

The WSCHibernateParams object stores the parameters for the hibernating session.

-

withDeviceToken method of WSCSessionBuilder

When you call the withDeviceToken method, the session is built with the device token obtained from APNs.

-

withHibernationHandler method of WSCSessionBuilder

When you call the withHibernationHandler method, the session is built to handle hibernation.

-

withSessionId method for the WSCSessionBuilder object

Used for rehydration. When you call the withSessionId method, the session is built with the input session ID.

-

WSCHibernationHandler.h, a new protocol file

For more on these and other WebRTC Session Controller iOS API classes, see AllClasses at Oracle Communications WebRTC Session Controller iOS API Reference.

About the General Requirements to Provide Notifications

Complete the following tasks as required for your application. Some are performed outside of your application:

-

Enabling Your Applications to Use the WebRTC Session Controller Notification Service

-

Informing the Device to Deliver Push Notifications to Your Application

-

All actions with respect to changes in the activity life cycle, creating, updating, and removing notifications and so on.

Registering with Apple Push Notification Service

Register your WebRTC Session Controller installation with the Apple Push Notification service to set up the following:

-

SSL certificate to communicate with the APN service.

-

Provisioning profile for the application.

For information about completing these tasks, refer to the Local and Remote Notification Programming Guide in the iOS Developer Library.

Obtaining the Device Token

The device token is similar to a phone number and is used in the push notification. The Apple Push Notification service uses this token to locate the specific device on which your iOS application is installed.

Your application should register with Apple Push Notification service to obtain a deviceToken.

For information about how to register with the notification service and obtain a device token, refer to the Local and Remote Notification Programming Guide in the iOS Developer Library documentation.

Enabling Your Applications to Use the WebRTC Session Controller Notification Service

Access the Notification Service tab in the WebRTC Session Controller Administration Console and enter the information about each application that uses the WebRTC Session Controller Notification Service. For each application, enter the application settings such as the application ID, the API key, and the cloud provider for the API service. For more information about completing this task, see "Creating Applications for the Notification Service" in WebRTC Session Controller System Administrator's Guide.

Informing the Device to Deliver Push Notifications to Your Application

Ensure that, after your application launches successfully, your application informs the device that it requires push notifications.

In Example 13-8, the application registers for push notifications.

Example 13-8 Registering for Push Notifications

- (BOOL)application:(UIApplication *)application didFinishLaunchingWithOptions:(NSDictionary *)launchOptions

{

// Application lets the device know it wants to receive push notifications

[[UIApplication sharedApplication] registerForRemoteNotificationTypes:

(UIRemoteNotificationTypeBadge | UIRemoteNotificationTypeSound | UIRemoteNotificationTypeAlert)];

NSDictionary *appDefaults = [NSDictionary

dictionaryWithObject:[NSNumber numberWithBool:YES]

forKey:@"CacheDataAgressively"];

[[NSUserDefaults standardUserDefaults] registerDefaults:appDefaults];

[self registerDefaultsFromSettingsBundle];

if (launchOptions != nil)

{

NSDictionary *dictionary = [launchOptions objectForKey:UIApplicationLaunchOptionsRemoteNotificationKey];

if (dictionary != nil)

{

NSLog(@"Launched from push notification: %@", dictionary);

//[self addMessageFromRemoteNotification:dictionary updateUI:NO];

}

}

return YES;

}

Note:

iOS 7.0 and later versions support silent remote notifications, where the notification wakes up the application in the background so that it can get new data from the server. If you remove Sound, Badge, and Alert from the registerForRemoteNotificationTypes call by passing in an empty set, the push service should send your notifications silently.

Storing the Device Token

After you have successfully registered with Apple Push Notification service, store the device token in your application.

The Example 13-9 code excerpt follows from Example 13-8 and shows how an application stores the device token and reports any failure to do so.

Example 13-9 Storing the Device Token

- (BOOL)application:(UIApplication *)application didFinishLaunchingWithOptions:(NSDictionary *)launchOptions

{

// Let the device know we want to receive push notifications

...

return YES;

}

...

-(void)application:(UIApplication*)application didRegisterForRemoteNotificationsWithDeviceToken:(NSData*)deviceToken {

DLog(@"My token is: %@", deviceToken);

[SampleIOSUtils setDeviceToken:deviceToken];

}

- (void)application:(UIApplication*)application didFailToRegisterForRemoteNotificationsWithError:(NSError*)error {

NSLog(@"Failed to get token, error: %@", error);

}

The following excerpt from an application shows the logic to get and set device tokens.

...

static NSString * const DEVICE_TOKEN_KEY = @"DeviceToken";

static NSData *deviceToken;

...

+(NSData *)getDeviceToken {

return [[NSUserDefaults standardUserDefaults] objectForKey:DEVICE_TOKEN_KEY];

}

+(void)setDeviceToken:(NSData *)token {

[[NSUserDefaults standardUserDefaults] setObject:token forKey:DEVICE_TOKEN_KEY];

[[NSUserDefaults standardUserDefaults] synchronize];

}
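The textual form that the %@ format specifier produces for an NSData object is not a stable format, so an application that needs to log or display the token may prefer an explicit hexadecimal rendering. A minimal sketch follows; the helper name WSCSampleHexStringFromToken is hypothetical and not part of the SDK.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: renders the raw token bytes as lowercase hex.
static NSString *WSCSampleHexStringFromToken(NSData *deviceToken) {
    const unsigned char *bytes = (const unsigned char *)deviceToken.bytes;
    NSMutableString *hex = [NSMutableString stringWithCapacity:deviceToken.length * 2];
    for (NSUInteger i = 0; i < deviceToken.length; i++) {
        // Two hex digits per byte, zero-padded.
        [hex appendFormat:@"%02x", bytes[i]];
    }
    return hex;
}
```

For example, the result could be logged from didRegisterForRemoteNotificationsWithDeviceToken in place of the raw NSData object.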

Storing the Session ID

Your application should use the various standard storage mechanisms offered by the iOS platforms to persist the session ID. Your application can use this session ID to immediately present "Bob" (the customer) with the last current state of Bob's session with your application.

The getSessionId() method of WSCSession object returns the session identifier as an NSString object. For more information, see the description of WSCSession in Oracle Communications WebRTC Session Controller iOS API Reference.

Implement Session Rehydration

To implement session rehydration in your application:

-

Persist Session Ids

To provide your customers with a seamless user experience, persist the session ID value in your application. Use the various standard storage mechanisms offered by the iOS platform to store user credentials in your iOS applications.

-

Use the appropriate Session ID

Provide the same session ID that the client last successfully connected with when it hibernated. The WebRTC Session Controller iOS SDK uses the session ID to rehydrate the session. It uses stored credentials to authenticate the client session.

-

Provide the ability to trigger rehydration for more than one session object.

This scenario occurs when you have multiple applications defined in WebRTC Session Controller and your customer creates a session with more than one of the WebRTC Session Controller applications in their mobile application. In such a scenario, the client application is using more than one WSCSession object, each with its own session ID.
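As a minimal sketch of persisting session IDs for more than one session, the IDs could be kept in NSUserDefaults in a dictionary keyed by application name. The helper names, the storage key, and the choice of NSUserDefaults are assumptions made for illustration; the value stored would be the string returned by the getSessionId method of WSCSession.

```objc
#import <Foundation/Foundation.h>

// Hypothetical key under which the session IDs are stored.
static NSString * const kSampleSessionIdsKey = @"SampleSessionIds";

// Saves the session ID last used by the named application.
static void WSCSampleSaveSessionId(NSString *sessionId, NSString *appName) {
    NSUserDefaults *defaults = [NSUserDefaults standardUserDefaults];
    NSMutableDictionary *ids =
        [([defaults dictionaryForKey:kSampleSessionIdsKey] ?: @{}) mutableCopy];
    ids[appName] = sessionId;
    [defaults setObject:ids forKey:kSampleSessionIdsKey];
}

// Returns the stored session ID for the named application, or nil.
static NSString *WSCSampleLoadSessionId(NSString *appName) {
    NSDictionary *ids =
        [[NSUserDefaults standardUserDefaults] dictionaryForKey:kSampleSessionIdsKey];
    return ids[appName];
}
```

On rehydration, the loaded value would be supplied to the session builder through its withSessionId method. Credentials, unlike session IDs, are usually better kept in the Keychain than in NSUserDefaults.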

Handling Hibernation Requests from the Server

At times your application receives a request to hibernate from the WebRTC Session Controller server. To respond to such a request, provide the necessary logic to handle the user interface and other elements in your application.

See "Responding to Hibernation Requests from the Server" for information on how to set up the callbacks to the specific WebRTC Session Controller iOS SDK event handlers.

Tasks that Use WebRTC Session Controller iOS APIs

Use the WebRTC Session Controller iOS APIs to complete the tasks described in the following sections.

For information about the WebRTC Session Controller iOS APIs used in the following sections, see Oracle Communications WebRTC Session Controller iOS API Reference.

Associate the Device Token when Building the WebRTC Session

Associate the device token when you build a WebRTC Session Controller session. The withDeviceToken method returns a WSCSessionBuilder object with a device token configuration:

-(WSCSessionBuilder *)withDeviceToken:(NSData *)token;

Pass the stored device token when you initialize the session, as shown in Example 13-17.

In the following sample code excerpt, the application provides a stored device token that it retrieves from its SampleIOSUtils interface.

...

self.wscSession = [[[[[[[[[[[[[[[WSCSessionBuilder create:url]

...

withDeviceToken:[SampleIOSUtils getDeviceToken]

...

For information about WSCSessionBuilder, see Oracle Communications WebRTC Session Controller iOS API Reference.

Associate the Hibernation Handler for the Session

Set up the hibernation handling function when you build a WebRTC Session Controller session. The withHibernationHandler method returns a WSCSessionBuilder object with a hibernation handler configuration:

- (WSCSessionBuilder *)withHibernationsHandler:(id<WSCHibernationHandler>)handler

See Example 13-17.

In the following sample code excerpt, the application allocates and initializes a hibernation handler:

...

self.wscSession = [[[[[[[[[[[[[[[WSCSessionBuilder create:url]

...

withHibernationsHandler:[[WSCHibernationHandler alloc] init]

...

Implement the HibernationHandler Interface

Implement the HibernationHandler interface to handle the hibernation requests that originate from the server or the client. The HibernationHandler interface has the following event handlers:

-

onFailure: Called when a hibernate request from the client fails.

-

onSuccess: Called when a hibernate request from the client succeeds.

-

onRequest: Called when there is a request from the server to hibernate the client. Returns an instance of WSCHibernateParams.

-

onRequestCompleted: Called when the request from the server end completes. This event handler uses a WSCStatusCode enum value as input parameter.

Example 13-10 Sample HibernationHandler Implementation

-(void)onSuccess {

// Hibernate success. Store the recent sessionId

DLog(@"Success Hibernating");

WSCSession *session = [SampleIOSUtils getActiveSession];

[SampleIOSUtils setLastSessionId:[session getSessionId]];

[SampleIOSUtils logout];

}

-(void)onFailure:(WSCStatusCode)code {

// Hibernate failed. Issue alert

DLog(@"Error Hibernating, status code: %ld",(long)code);

//[SampleIOSUtils showAlertBox];

[SampleIOSUtils logout];

}

// Return the timetolive param set for the hibernation

-(WSCHibernateParams *)onRequest {

WSCHibernateParams *params = [[WSCHibernateParams alloc] initWithTTL:12000];

return params;

}

-(void)onRequestCompleted:(WSCStatusCode)code {

if(code == WSCStatusCodeOk ){

// WebRTC Session Controller Status 200.. Store the recent sessionId

WSCSession *session = [SampleIOSUtils getActiveSession];

[SampleIOSUtils setLastSessionId:[session getSessionId]];

DLog(@"Success Hibernating, status code:%ld",(long)code);

} else {

// WebRTC Session Controller Not OK.. Show Alert

DLog(@"Error Hibernating, status code: %ld",(long)code);

//[SampleIOSUtils showAlertBox];

}

[SampleIOSUtils logout];

}

For information about WSCHibernationHandler and WSCStatusCode, see Oracle Communications WebRTC Session Controller iOS API Reference.

Implement Session Hibernation

When your iOS application moves to the background, it must send a request to WebRTC Session Controller stating that it wants to hibernate the session.

Use the appropriate method to release shared resources, invalidate timers, and store the state information necessary to restore your application to its current state, in case it is terminated later. For information about handling the application's life cycle, see the UIApplicationDelegate reference section of the iOS Developer Library.

The WebRTC Session Controller iOS SDK provides the following method in the WSCSession object.

-(void)hibernate:(WSCHibernateParams *) params;

Provide the hibernation period with the WSCHibernateParams object, which holds the time-to-live value for the hibernated session (Example 13-10 creates one with initWithTTL:). For information about the hibernate method, see Oracle Communications WebRTC Session Controller iOS API Reference.

Example 13-11 illustrates how to initiate a hibernate request to the WebRTC Session Controller server.

Example 13-11 Hibernating the Session

-(void)applicationDidEnterBackground:(UIApplication *)application

{

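WSCSession *session = [SampleIOSUtils getActiveSession];

// Hibernate the session, requesting the same time to live as in Example 13-10

WSCHibernateParams *params = [[WSCHibernateParams alloc] initWithTTL:12000];

[session hibernate:params];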

}

The WebRTC Session Controller server identifies the client device (going into hibernation) by the deviceToken you provided when building the session object (Example 13-17).

When you implement the hibernate method, provide the maximum period for which the client session should be kept alive on the server. All notifications received within this period are sent to the client device. The WebRTC Session Controller server maintains a maximum interval depending on the policy set for each type of client device. If your application sets an interval greater than this period, the server uses the policy-supported maximum interval.
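The interval rule described above can be summarized in a short sketch. The function below is purely illustrative: the name is hypothetical, the policy maximum is known only to the server, and the SDK does not expose such a call. It only shows that the effective interval is the smaller of the requested value and the policy maximum.

```objc
#import <Foundation/Foundation.h>

// Illustrative only: models how the server chooses the effective interval.
// Both values are assumed to use the same time unit.
static NSUInteger SampleEffectiveHibernationInterval(NSUInteger requested,
                                                     NSUInteger policyMaximum) {
    return MIN(requested, policyMaximum);
}
```

For example, if the application requests 12000 and the policy maximum for the device type is 3600, the server keeps the session for 3600.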

When the hibernate method completes, the WSCSessionState for the session is WSCSessionStateHibernated, and the session with the WebRTC Session Controller server closes. While the session is hibernated, your application cannot take actions on it, such as making a call request.

For information about the hibernate method, see the description of WSCSession in Oracle Communications WebRTC Session Controller iOS API Reference.

Send Notifications to the Callee when the Client Session is in Hibernated State

If the client session for the callee is in a hibernated state, any incoming event for that client session may require some time for the call setup so that the callee can accept the call. In your iOS application, add the logic to the callback function to handle an incoming call event when the session for the callee is in a hibernated state.

Note:

This section describes how to use the WebRTC Session Controller notification API to send the notification. If your application connects to a notification system that exposes a REST API, you can use REST API Callouts instead.

Set up a function to handle the onWSHibernated method provided by the Groovy Script library. This method takes a NotificationContext object as a parameter.

The NotificationContext object serves as a cache and a pathway for notifications and allows notifications to be marked for consumption after the render life cycle has completed. It allows equal access to notifications across multiple interfaces on a page. Use this object to retrieve the following:

-

Information about the triggering message (such as the initiator, the target, and the package type)

-

Information about the application (ID, version, platform, and platform version)

-

The device token

-

The incoming message that triggered this notification, as a normalized message

-

The REST client instance for submitting outbound REST requests (synchronized callouts only)

You can also use this object to dispatch the messages through the internal notification service, if configured.

For more information about NotificationContext, see All Classes in Oracle Communications WebRTC Session Controller Configuration API Reference.

Example 13-12 shows a sample code excerpt that creates the JSON message in the msg_payload object. It uses the context.dispatch method to dispatch the message payload through the local notification service.

Example 13-12 Using Groovy Method to Define the Notification Payload

/**

* This function gets invoked when the client end-point is in a hibernated state when an incoming event arrives for it.

* A typical action would be to send some trigger/Notification to wake up the client.

*

* @param context the notification context

*/

void onWSHibernated(NotificationContext context) {

// Define the notification payload.

def msg_payload = "{\"data\" : {\"wsc_event\": \"Incoming " + context.getPackageType() +

"\", \"wsc_from\": \"" + context.getInitiator() + "\"}}"

if (Log.debugEnabled) {

Log.debug("Notification Payload: " + msg_payload)

}

// Using local notification gateway

context.dispatch(msg_payload)

}
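On the client side, the application has to read the fields that the Groovy function placed in the payload. The following is a minimal sketch, assuming the payload reaches the application as a JSON string with the same shape that Example 13-12 builds (a data object containing wsc_event and wsc_from). The function name is hypothetical, and how the payload is delivered to the application depends on your notification service.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: extracts the "data" object from a notification payload
// shaped like the one built in Example 13-12. Returns nil if the payload is
// not valid JSON or does not have the expected structure.
static NSDictionary *SampleParseNotificationPayload(NSString *payload) {
    NSData *bytes = [payload dataUsingEncoding:NSUTF8StringEncoding];
    if (!bytes) {
        return nil;
    }
    NSError *error = nil;
    id root = [NSJSONSerialization JSONObjectWithData:bytes options:0 error:&error];
    if (![root isKindOfClass:[NSDictionary class]]) {
        return nil;
    }
    id data = ((NSDictionary *)root)[@"data"];
    if (![data isKindOfClass:[NSDictionary class]]) {
        return nil;
    }
    return data;
}
```

The returned dictionary can then be queried for wsc_event and wsc_from, for example to show the caller in an alert before the session is rehydrated.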

Provide the Session ID to Rehydrate the Session

To rehydrate an existing session, use the stored session ID. The withSessionId method, which configures the session builder with a stored session ID (value), has the following signature:

-(WSCSessionBuilder *)withSessionId:(NSString *)value;

Important:

Invoke this method only when attempting to rehydrate an existing session.

As shown in Example 13-13, you can set up a listener for push notifications in your application. Pass the session ID received in the push notification into the session builder. The session builder rehydrates the session by retrieving the hibernated session out of persisted storage using the passed sessionId as the key.

Example 13-13 Rehydrating an Existing Session

-(void)application:(UIApplication*)application didReceiveRemoteNotification:(NSDictionary*)userInfo

{

NSLog(@"Received notification: %@", userInfo);

NSString *sessionId = //Parse sessionID from userInfo object

...

// Build a new session object containing the sessionId pulled from userInfo object

WSCSessionBuilder *builder = [WSCSessionBuilder create:webSocketURL];

[[[[[builder withHibernationsHandler:hibernationHandler]

...

withSessionId: sessionId];

...

// Build the new session object

WSCSession *session = [builder build];

}
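Example 13-13 leaves the parsing of the session ID open. The following is a minimal sketch of that step, assuming the session ID is delivered as a top-level string in the userInfo dictionary. The key name wsc_session_id and the function name are hypothetical; use whatever key your notification payload actually defines.

```objc
#import <Foundation/Foundation.h>

// Hypothetical key; the real key depends on how your notification payload is defined.
static NSString *const kSampleSessionIdKey = @"wsc_session_id";

// Returns the session ID carried in the push notification, or nil if the
// entry is missing, empty, or not a string.
static NSString *SampleSessionIdFromUserInfo(NSDictionary *userInfo) {
    id value = userInfo[kSampleSessionIdKey];
    if ([value isKindOfClass:[NSString class]] && [(NSString *)value length] > 0) {
        return value;
    }
    return nil;
}
```

Checking the type before use avoids passing an unexpected object to withSessionId when the payload is malformed.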

Responding to Hibernation Requests from the Server

When the server has to force your application to hibernate, it calls the onRequest method in the WSCHibernationHandler. When the hibernation request from the server completes, it calls the onRequestCompleted method in that WSCHibernationHandler.

Provide the necessary logic in your implementation of WSCHibernationHandler to handle the user interface and other elements in your application, as shown in Example 13-14.

Creating a WebRTC Session Controller Session

Once you have configured your authentication method and connected to your WebRTC Session Controller endpoint, you can instantiate a WebRTC Session Controller session object.

Implement the WSCSessionConnectionDelegate Protocol

You must implement the WSCSessionConnectionDelegate protocol to handle the results of your session creation request. See Example 13-15. The WSCSessionConnectionDelegate protocol has two event handlers:

-

onSuccess: Triggered upon a successful session creation.

-

onFailure: Triggered when session creation fails. Receives a failure status code.

Example 13-15 Implementing the WSCSessionConnectionDelegate Protocol

#pragma mark WSCSessionConnectionDelegate

-(void)onSuccess {

NSLog(@"WebRTC Session Controller session connected.");

NSLog(@"Connection succeeded. Continuing...");

}

-(void)onFailure:(enum WSCStatusCode)code {

switch (code) {

case WSCStatusCodeUnauthorized:

NSLog(@"Unable to connect. Please check your credentials.");

break;

case WSCStatusCodeResourceUnavailable:

NSLog(@"Unable to connect. Please check the URL.");

break;

default:

// Handle other cases as required...

break;

}

}

Implement the WSCSession Connection Observer Protocol

Implement the WSCSessionConnectionObserver protocol to monitor and respond to changes in session state, as shown in Example 13-16.

Example 13-16 Implementing the WSCSessionConnectionObserver Protocol

#pragma mark WSCSessionConnectionObserver

-(void)stateChanged:(WSCSessionState) sessionState {

switch (sessionState) {

case WSCSessionStateConnected:

NSLog(@"Session is connected.");

break;

case WSCSessionStateReconnecting:

NSLog(@"Session is attempting reconnection.");

break;

case WSCSessionStateFailed:

NSLog(@"Session connection attempt failed.");

break;

case WSCSessionStateClosed:

NSLog(@"Session connection has been closed.");

break;

default:

break;

}

}

Build the Session Object and Open the Session Connection

With the connection delegate and connection observer configured, you now build a WebRTC Session Controller session and open a connection with the server, as shown in Example 13-17.

Example 13-17 Building the Session Object and Opening the Session Connection

if (error) {

// Handle an error..

NSLog(@"The following error occurred: %@", error.description);

} else {

// Configure the SSLContext if necessary, from Example 13-4...

...

// Retrieve all the response headers from Example 13-5...

...

// Build the httpContext from Example 13-6...

...

NSString *userName = @"username";

// This excerpt assumes that the current object implements both delegate protocols.

self.wscSession = [[[[[[[[[[WSCSessionBuilder create:urlString]

withConnectionDelegate:self]

withUserName:userName]

withObserverDelegate:self]

withPackage:[[WSCCallPackage alloc] init]]

withHttpContext:httpContext]

withIceServerConfig:iceServerConfig]

withHibernationsHandler:hibernationHandler]

withDeviceToken:[SampleIOSUtils getDeviceToken]]

build];

// Open a connection to the server...

[self.wscSession open];

}

In Example 13-17, note that the withPackage method registers a new WSCCallPackage with the session that will be instantiated when creating voice or video calls.

Configure Additional WSCSession Properties

You can configure additional properties when creating a session using the WSCSessionBuilder withProperty method, as shown in Example 13-18.

Example 13-18 Configuring WSCSession Properties

if (error) {

// Handle an error..

NSLog(@"The following error occurred: %@", error.description);

} else {

// Configure the SSLContext if necessary, from Example 13-4...

...

// Retrieve all the response headers from Example 13-5...

...

// Build the httpContext from Example 13-6...

...

self.wscSession = [[[[[[[[[[[[WSCSessionBuilder create:urlString]

...

withProperty:WSC_PROP_IDLE_PING_INTERVAL value:[NSNumber numberWithInt: 20]]

withProperty:WSC_PROP_RECONNECT_INTERVAL value:[NSNumber numberWithInt:10000]]

...

build];

[self.wscSession open];

}

For a complete list of properties, see the Oracle Communications WebRTC Session Controller iOS SDK API Reference.

Adding WebRTC Voice Support to your iOS Application

This section describes how you can add WebRTC voice support to your iOS application.

Initialize the CallPackage Object

When you created your session, you registered a new WSCCallPackage object using the withPackage method of the WSCSessionBuilder object. You now retrieve that WSCCallPackage from the session, as shown in Example 13-19.

Example 13-19 Initializing the CallPackage

WSCCallPackage *callPackage = (WSCCallPackage*)[wscSession getPackage:PACKAGE_TYPE_CALL];

Note:

Use the default PACKAGE_TYPE_CALL call type unless you have defined a custom call type.

Place a WebRTC Voice Call from Your iOS Application

Once you have configured your authentication scheme, and created a Session, you can place voice calls from your iOS application.

Add the Audio Capture Device to Your Session

To stream audio from your iOS device, first initialize a capture session and add an audio capture device, as shown in Example 13-20.

Example 13-20 Adding an Audio Capture Device to Your Session

- (instancetype)initAudioDevice

{

self = [super init];

if (self) {

self.captureSession = [[AVCaptureSession alloc] init];

[self.captureSession setSessionPreset:AVCaptureSessionPresetLow];

// Get the audio capture device and add to our session.

self.audioCaptureDevice = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeAudio];

NSError *error = nil;

AVCaptureDeviceInput *audioInput = [AVCaptureDeviceInput

deviceInputWithDevice:self.audioCaptureDevice error:&error];

if (audioInput) {

[self.captureSession addInput:audioInput];

}

else {

NSLog(@"Unable to find audio capture device : %@", error.description);

}

}

return self;

}

Initialize the Call Object

With the WSCCallPackage object retrieved, initialize a WSCCall object, passing the callee ID as an argument, as shown in Example 13-21.

Configure Trickle ICE

To improve ICE candidate gathering performance, you can choose to enable Trickle ICE in your application using the setTrickleIceMode method of the WSCCall object, as shown in Example 13-22.

Example 13-22 Configuring Trickle ICE

NSLog(@"Configure Trickle ICE options, WSCTrickleIceModeOFF, WSCTrickleIceModeHalf, or WSCTrickleIceModeFull...");

[call setTrickleIceMode: WSCTrickleIceModeFull];

For more information, see "Enabling Trickle ICE to Improve Application Performance".

Create a WSCCallObserverDelegate Protocol

Implement the WSCCallObserverDelegate protocol, as shown in Example 13-23, so that you can respond to the following WSCCall events:

-

callUpdated: Triggered on incoming and outgoing call update requests.

-

mediaStateChanged: Triggered on changes to the WSCCall media state.

-

stateChanged: Triggered on changes to the WSCCall state.

-

onDataTransfer: Triggered when a WSCDataTransfer object is created.

Example 13-23 Creating a WSCCallObserverDelegate Protocol

#pragma mark WSCCallObserverDelegate

-(void)callUpdated:(WSCCallUpdateEvent)event

callConfig:(WSCCallConfig *)callConfig

cause:(WSCCause *)cause {

NSLog(@"callUpdate request with config: %@", callConfig.description);

switch (event) {

case WSCCallUpdateEventSent:

break;

case WSCCallUpdateEventReceived:

NSLog(@"Call Update event received for config: %@", callConfig.description);

break;

case WSCCallUpdateEventAccepted:

NSLog(@"Call Update accepted for config: %@", callConfig.description);

break;

case WSCCallUpdateEventRejected:

NSLog(@"Call Update event rejected for config: %@", callConfig.description);

break;

default:

break;

}

}

-(void)mediaStateChanged:(WSCMediaStreamEvent)mediaStreamEvent

mediaStream:(RTCMediaStream *)mediaStream {

NSLog(@"mediaStateChanged : %u", mediaStreamEvent);

}

-(void)stateChanged:(WSCCallState)callState cause:(WSCCause *)cause {

NSLog(@"Call State changed : %u", callState);

switch (callState) {

case WSCCallStateNone:

NSLog(@"stateChanged: %@", @"WSC_CS_NONE");

break;

case WSCCallStateStarted:

NSLog(@"stateChanged: %@", @"WSC_CS_STARTED");

break;

case WSCCallStateResponded:

NSLog(@"stateChanged: %@", @"WSC_CS_RESPONDED");

break;

case WSCCallStateEstablished:

NSLog(@"stateChanged: %@", @"WSC_CS_ESTABLISHED");

break;

case WSCCallStateFailed:

NSLog(@"stateChanged: %@", @"WSC_CS_FAILED");

break;

case WSCCallStateRejected:

NSLog(@"stateChanged: %@", @"WSC_CS_REJECTED");

break;

case WSCCallStateEnded:

NSLog(@"stateChanged: %@", @"WSC_CS_ENDED");

break;

default:

break;

}

}

Register the WSCCallObserverDelegate Protocol with the Call Object

You register the WSCCallObserverDelegate protocol with the WSCCall object, as shown in Example 13-24.

Create a WSCCallConfig Object

You create a WSCCallConfig object to determine the type of call you wish to make. The WSCCallConfig constructor takes two parameters, audioMediaDirection and videoMediaDirection. The first parameter configures an audio call while the second configures a video call.

The values for audioMediaDirection and videoMediaDirection parameters are:

-

WSCMediaDirectionNone: No direction; media support disabled.

-

WSCMediaDirectionRecvOnly: The media stream is receive only.

-

WSCMediaDirectionSendOnly: The media stream is send only.

-

WSCMediaDirectionSendRecv: The media stream is bi-directional.

Example 13-25 shows the configuration for a bi-directional, audio-only call.

Configure the Local MediaStream for Audio

With the WSCCallConfig object created, you then configure the local audio MediaStream using the WebRTC PeerConnectionFactory, as shown in Example 13-26.

Example 13-26 Configuring the Local MediaStream for Audio

RTCPeerConnectionFactory *pcf = [call getPeerConnectionFactory];

RTCMediaStream *localMediaStream = [pcf mediaStreamWithLabel:@"ARDAMS"];

[localMediaStream addAudioTrack:[pcf audioTrackWithID:@"ARDAMSa0"]];

// Wrap the stream in an array to pass when starting the call.

NSArray *streamArray = [[NSArray alloc] initWithObjects:localMediaStream, nil];

For information about the WebRTC SDK API, see https://webrtc.org/native-code/native-apis/.

Start the Audio Call

Finally, you start the audio call using the start method of the WSCCall object and passing it the WSCCallConfig object and the streamArray, as shown in Example 13-27.

Receiving a WebRTC Voice Call in Your iOS Application

This section describes configuring your iOS application to receive WebRTC voice calls.

Create a WSCCallPackageObserverDelegate

To be notified of an incoming call, create a WSCCallPackageObserverDelegate and attach it to your WSCCallPackage, as shown in Example 13-28.

Example 13-28 Creating a CallPackageObserver Delegate

#pragma mark WSCCallPackageObserverDelegate

-(void)callArrived:(WSCCall *)call

callConfig:(WSCCallConfig *)callConfig

extHeaders:(NSDictionary *)extHeaders {

NSLog(@"Registering a WSCCallObserverDelegate...");

// Assumes that this class also implements the WSCCallObserverDelegate protocol.

[call setObserverDelegate:self];

NSLog(@"Configuring the media streams...");

RTCPeerConnectionFactory *pcf = [call getPeerConnectionFactory];

RTCMediaStream* localMediaStream = [pcf mediaStreamWithLabel:@"ARDAMS"];

[localMediaStream addAudioTrack:[pcf audioTrackWithID:@"ARDAMSa0"]];

if (answerTheCall) {

NSLog(@"Answering the call...");

[call accept:self.callConfig streams:localMediaStream];

} else {

NSLog(@"Declining the call...");

[call decline:WSCStatusCodeBusyHere];

}

}

In Example 13-28, the callArrived event handler processes an incoming call request:

-

The method registers a WSCCallObserverDelegate for the incoming call. In this case, it uses the same WSCCallObserverDelegate, from the example in "Create a WSCCallObserverDelegate Protocol".

-

The method then configures the local media stream, in the same manner as "Configure the Local MediaStream for Audio".

-

The method determines whether to accept or reject the call based on the value of the answerTheCall boolean using the accept or decline methods of the WSCCall object.

Note:

The answerTheCall boolean will most likely be set by a user interface element in your application such as a button or link.

Adding WebRTC Video Support to your iOS Application

This section describes how you can add WebRTC video support to your iOS application. While the methods are nearly identical to those used to add voice call support, additional preparation is required.

Add the Audio and Video Capture Devices to Your Session

As with an audio call, you initialize the audio capture device as shown in Example 13-20. In addition, you initialize the video capture device and add it to your session, as shown below in Example 13-29.

Example 13-29 Adding the Audio and Video Capture Devices to Your Session

- (instancetype)initAudioVideo

{

self = [super init];

if (self) {

self.captureSession = [[AVCaptureSession alloc] init];

[self.captureSession setSessionPreset:AVCaptureSessionPresetLow];

// Get the audio capture device and add to our session.

self.audioCaptureDevice = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeAudio];

NSError *error = nil;

AVCaptureDeviceInput *audioInput = [AVCaptureDeviceInput

deviceInputWithDevice:self.audioCaptureDevice error:&error];

if (audioInput) {

[self.captureSession addInput:audioInput];

}

else {

NSLog(@"Unable to find audio capture device : %@", error.description);

}

// Get the video capture devices and add to our session.

for (AVCaptureDevice* videoCaptureDevice in [AVCaptureDevice

devicesWithMediaType:AVMediaTypeVideo]) {

if (videoCaptureDevice.position == AVCaptureDevicePositionFront) {

self.frontVideCaptureDevice = videoCaptureDevice;

AVCaptureDeviceInput *videoInput = [AVCaptureDeviceInput

deviceInputWithDevice:videoCaptureDevice error:&error];

if (videoInput) {

[self.captureSession addInput:videoInput];

} else {

NSLog(@"Unable to get front camera input : %@", error.description);

}

} else if (videoCaptureDevice.position == AVCaptureDevicePositionBack) {

self.backVideCaptureDevice = videoCaptureDevice;

}

}

}

return self;

}

Configure a View Controller to Display Incoming Video

You add a view object to a view controller to display the incoming video. In Example 13-30, when the MyWebRTCApplicationViewController view controller is created, its view property is nil, which triggers the loadView method.

Example 13-30 Creating a View to Display the Video Stream

@implementation MyWebRTCApplicationViewController

- (void)loadView {

// Create the view, videoView...

CGRect frame = [UIScreen mainScreen].bounds;

MyWebRTCApplicationView *videoView = [[MyWebRTCApplicationView alloc] initWithFrame:frame];

// Set videoView as the main view of the view controller...

self.view = videoView;

}

@end

Next you set the view controller as the rootViewController, which adds videoView as a subview of the window, and automatically resizes videoView to be the same size as the window, as shown in Example 13-31.

Example 13-31 Setting the Root View Controller

#import "MyWebRTCApplicationAppDelegate.h"

#import "MyWebRTCApplicationViewController.h"

@implementation MyWebRTCApplicationAppDelegate

- (BOOL)application:(UIApplication *)application

didFinishLaunchingWithOptions:(NSDictionary *)launchOptions {

self.window = [[UIWindow alloc] initWithFrame:[[UIScreen mainScreen] bounds]];

MyWebRTCApplicationViewController *myvc = [[MyWebRTCApplicationViewController alloc] init];

self.window.rootViewController = myvc;

self.window.backgroundColor = [UIColor grayColor];

// Make the window visible.

[self.window makeKeyAndVisible];

return YES;

}

@end

Placing a WebRTC Video Call from Your iOS Application

To place a video call from your iOS application, complete the coding tasks contained in the following sections:

-

Configure Interactive Connectivity Establishment (ICE) (if necessary)

In addition, complete the coding tasks for an audio call contained in the following sections:

-

Configure Trickle ICE (if necessary)

-

Register the WSCCallObserverDelegate Protocol with the Call Object

Note:

Audio and video call workflows are identical with the exception of media directions, local media stream configuration, and the additional considerations described earlier in this section.

Create a WSCCallConfig Object

You create a WSCCallConfig object as described in "Create a WSCCallConfig Object", in the audio call section. Set both arguments to WSCMediaDirectionSendRecv, as shown in Example 13-32.

Configure the Local WSCMediaStream for Audio and Video

With the WSCCallConfig object created, you then configure the local video and audio MediaStream objects using the WebRTC PeerConnectionFactory. In Example 13-33, the PeerConnectionFactory first creates a video track using optional constraints and mandatory constraints (as defined in the getMandatoryConstraints method). The video track is then added to the localMediaStream using its addVideoTrack method. Two boolean arguments, hasAudio and hasVideo, enable the calling function to specify whether audio or video streams are supported in the current call. The audio track is added as well, and the localMediaStream is returned to the calling function.

For information about the WebRTC PeerConnectionFactory and mandatory and optional constraints, see https://webrtc.org/native-code/native-apis/.

Example 13-33 Configuring the Local MediaStream for Audio and Video

-(RTCMediaStream *)getLocalMediaStreams:(RTCPeerConnectionFactory *)pcf

enableAudio:(BOOL)hasAudio enableVideo:(BOOL)hasVideo {

NSLog(@"Getting local media streams");

if (!localMediaStream) {

NSLog(@"PeerConnectionFactory: createLocalMediaStream() with pcf : %@", pcf);

localMediaStream = [pcf mediaStreamWithLabel:@"ALICE"];

NSLog(@"MediaStream1 = %@", localMediaStream);

}

if(hasVideo && (localMediaStream.videoTracks.count <= 0)){

if (hasVideo) {

RTCVideoCapturer* capturer = [RTCVideoCapturer

capturerWithDeviceName:[avManager.frontVideCaptureDevice localizedName]];

RTCPair *dtlsSrtpKeyAgreement = [[RTCPair alloc] initWithKey:@"DtlsSrtpKeyAgreement"

value:@"true"];

NSArray * optionalConstraints = @[dtlsSrtpKeyAgreement];

NSArray *mandatoryConstraints = [self getMandatoryConstraints];

RTCMediaConstraints *videoConstraints = [[RTCMediaConstraints alloc]

initWithMandatoryConstraints:mandatoryConstraints

optionalConstraints:optionalConstraints];

RTCVideoSource *videoSource = [pcf videoSourceWithCapturer:capturer

constraints:videoConstraints];

RTCVideoTrack *videoTrack = [pcf videoTrackWithID:@"ALICEv0" source:videoSource];

if (videoTrack) {

[localMediaStream addVideoTrack:videoTrack];

}

}

}

if (localMediaStream.audioTracks.count <= 0 && hasAudio) {

[localMediaStream addAudioTrack:[pcf audioTrackWithID:@"ALICEa0"]];

}

if (!hasVideo && localMediaStream.videoTracks.count > 0) {

// Iterate over a copy so that tracks can be removed safely during enumeration.

for (RTCVideoTrack *videoTrack in [localMediaStream.videoTracks copy]) {

[localMediaStream removeVideoTrack:videoTrack];

}

}

if (!hasAudio && localMediaStream.audioTracks.count > 0) {

// Iterate over a copy so that tracks can be removed safely during enumeration.

for (RTCAudioTrack *audioTrack in [localMediaStream.audioTracks copy]) {

[localMediaStream removeAudioTrack:audioTrack];

}

}

NSLog(@"MediaStream = %@", localMediaStream);

return localMediaStream;

}

-(NSArray *)getMandatoryConstraints {

RTCPair *localVideoMaxWidth = [[RTCPair alloc] initWithKey:@"maxWidth" value:@"640"];

RTCPair *localVideoMinWidth = [[RTCPair alloc] initWithKey:@"minWidth" value:@"192"];

RTCPair *localVideoMaxHeight = [[RTCPair alloc] initWithKey:@"maxHeight" value:@"480"];

RTCPair *localVideoMinHeight = [[RTCPair alloc] initWithKey:@"minHeight" value:@"144"];

RTCPair *localVideoMaxFrameRate = [[RTCPair alloc] initWithKey:@"maxFrameRate" value:@"30"];

RTCPair *localVideoMinFrameRate = [[RTCPair alloc] initWithKey:@"minFrameRate" value:@"5"];

RTCPair *localVideoGoogLeakyBucket = [[RTCPair alloc]

initWithKey:@"googLeakyBucket" value:@"true"];

return @[localVideoMaxHeight,

localVideoMaxWidth,

localVideoMinHeight,

localVideoMinWidth,

localVideoMinFrameRate,

localVideoMaxFrameRate,

localVideoGoogLeakyBucket];

}
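Because the mandatory constraints come in minimum and maximum pairs, it can help to check that each range is consistent before building the RTCPair objects. The following is a minimal sketch under that assumption. The function name is hypothetical, it works on a plain dictionary of the same keys and string values used in getMandatoryConstraints, and it does not call the WebRTC library.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: returns YES when every "minX"/"maxX" pair in the
// dictionary satisfies min <= max. Keys without a matching "max" entry,
// and keys that do not start with "min", are ignored.
static BOOL SampleConstraintRangesAreValid(NSDictionary<NSString *, NSString *> *constraints) {
    for (NSString *key in constraints) {
        if (![key hasPrefix:@"min"]) {
            continue;
        }
        NSString *maxKey = [@"max" stringByAppendingString:[key substringFromIndex:3]];
        NSString *maxValue = constraints[maxKey];
        if (maxValue && constraints[key].integerValue > maxValue.integerValue) {
            return NO;
        }
    }
    return YES;
}
```

With the values from Example 13-33 (for instance minWidth 192 and maxWidth 640), the check passes. Swapping a minimum and maximum value makes it fail.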

Bind the Video Track to the View Controller

As shown in Example 13-34, bind the video track to the view controller you created in "Configure a View Controller to Display Incoming Video".

Receiving a WebRTC Video Call in Your iOS Application

Receiving a video call is identical to receiving an audio call, as described in "Receiving a WebRTC Voice Call in Your iOS Application". The only difference is the configuration of the WSCMediaStream object, as described in "Configure the Local WSCMediaStream for Audio and Video".

Supporting SIP-based Messaging in Your iOS Application

You can design your iOS application to send and receive SIP-based messages using the messaging package in WebRTC Session Controller iOS SDK.

To support messaging, define the logic for the following in your application:

-

Setup and management of the various activities associated with the states of the various objects, such as the session and the message transfer.

-

Enabling users to send or receive messages

-

Handling the incoming and outgoing message data

-

Managing the required user interface elements to display the message content throughout the call session.

About the Major Classes Used to Support SIP-based Messaging

The following major classes and protocols of the WebRTC Session Controller iOS SDK enable you to provide SIP-based messaging support in your iOS application:

-

WSCMessagingPackage

This package handler enables messaging applications. You can send SIP-based messages to any logged-in user with an object of the WSCMessagingPackage class. This object also dispatches received messages to the registered delegate.

-

WSCMessagingDelegate

This class acts as a listener for incoming messages and their acknowledgements. It holds the following event handlers:

-

onNewMessage

This event handler is called when your application receives a new SIP-based message.

-

onSuccessResponse

This event handler is called when your application receives an accept/positive acknowledgment for a sent message.

-

onErrorResponse

This event handler is called when your application receives a reject/negative acknowledgment for a sent message.

-

WSCMessagingMessage

This class is used to hold the payload for SIP-based messaging.

-

withPackage

This method belongs to the WSCSessionBuilder class. It is used to build a session that supports a package, such as the messaging package.

For more on these and other WebRTC Session Controller iOS API classes, see All Classes in Oracle Communications WebRTC Session Controller iOS API Reference.

Setting up the SIP-based Messaging Support in Your iOS Application

Complete the following tasks to set up SIP-based messaging support in your iOS applications:

Enabling SIP-based Messaging

To enable SIP-based messaging in your iOS application, create and assign an instance of a messaging package.

When you build the WSCSession object, pass this messaging package to the withPackage method of the WSCSessionBuilder class, as shown in Example 13-37.

Example 13-37 Building a Session with a Messaging Package

#import "WSCMessagingPackage.h"

...

WSCSession *wscSession = [[[[[[[[WSCSessionBuilder create: wsUrl]

withConnectionDelegate: self]

withUserName: userName]

withObserverDelegate: self]

withPackage: [[WSCCallPackage alloc] init]]

withPackage: [[WSCMessagingPackage alloc] init]]

withHttpContext: httpContext]

build];

Ensure that your application implements the onSuccess and onFailure event handlers of the WSCSessionConnectionDelegate protocol. The WebRTC Session Controller iOS SDK calls these event handlers asynchronously, depending on whether it succeeds or fails in building the session.

Sending SIP-based Messages

To send a SIP-based message, invoke the send method of the WSCMessagingPackage object. The signature of the send method is:

-(NSString *)send:(NSString *)textMessage target:(NSString *)target extHeaders:(NSDictionary *)extHeaders;

In Example 13-38, the destination is "bob@example.com" and the text is "Hi There." Bob sees the message from the sending party.

Handling Incoming SIP-based Messages

Set up your application to handle incoming messages and acknowledgements. Register a WSCMessagingDelegate to be notified when a new message is received. To do so, set the observerDelegate property of the WSCMessagingPackage object, as shown in Example 13-39:

Example 13-39 Registering the Observer for the Message Package

...

WSCSession *session;

// Register an observer for listening to incoming messaging events.

WSCMessagingPackage *msgPackage = (WSCMessagingPackage *)[self.wscSession getPackage:PACKAGE_TYPE_MESSAGING];

[msgPackage setObserverDelegate:self];

...

When a new message comes in, the onNewMessage event handler of the WSCMessagingDelegate object is called. In the callback function you implement for the onNewMessage event handler, accept or reject the message received using the appropriate APIs.

Set up the logic to handle the acknowledgements appropriately:

-

The accept method of the WSCMessagingPackage object. When the receiver accepts the message, the onSuccessResponse event is triggered on the side of the sender that originated the message.

-

The reject method of the WSCMessagingPackage object. When the receiver rejects the message, the onErrorResponse event is triggered on the side of the sender that originated the message.

Example 13-40 Example of a Delegate Set up for a Message Package

// Class that observes for incoming messages from Messaging.

#pragma mark WSCMessagingDelegate

- (void) onNewMessage:(WSCMessagingMessage *)message {

NSLog(@"Got Messaging: onNewMessage with content:%@", message.content);

// Show message content in some UITextView

// Accept message as received

[self.wscMsging accept:message];

}

-(void) onSuccessResponse:(WSCMessagingMessage *)message{

NSLog(@"Messaging: onSuccessResponse");

}

-(void) onErrorResponse:(WSCMessagingMessage *)message cause:(WSCCause *)cause reason:(NSString *)reason {

NSLog(@"Messaging: onErrorResponse");

}

Adding WebRTC Data Channel Support to Your iOS Application

This section describes how you can add WebRTC data channel support to the calls you enable in your iOS application. For information about adding voice call support to an iOS application, see "Adding WebRTC Voice Support to your iOS Application".

To support calls with data channels, define the logic for the following in your application:

-

Setting up and managing the activities associated with the states of objects such as the session and the data transfer.

-

Enabling users to make or receive calls with data channels, with or without audio and video streams.

-

Handling the incoming and outgoing data.

-

Managing the required user interface elements to display the data content throughout the call session.

About the Major Classes and Protocols Used to Support Data Channels

The following major classes and protocols enable you to provide data channel support in your iOS application:

-

WSCCall

This object represents a call with any combination of audio, video, and data channel capabilities. If the call has data channel capability, the object creates a data channel and initializes the WSCDataTransfer object for that channel when the call starts or when the call is accepted.

-

WSCCallConfig

The WSCCallConfig object represents a call configuration. It describes the audio, video, or data channel capabilities of a call.

-

WSCDataChannelOption

The WSCDataChannelOption object describes the configuration items of a data channel in a call, such as whether ordered delivery is required, the stream ID, and the maximum number of retransmissions.

-

WSCDataChannelConfig

The WSCDataChannelConfig object describes the data channel of a call, including its label and WSCDataChannelOption.

-

WSCDataTransfer

The WSCDataTransfer object manages the data channel. If the WSCCallConfig object includes the data channel, the WSCCall object creates an instance of the WSCDataTransfer object.

-

WSCDataSender

The WSCDataSender object exposes the capability of a WSCDataTransfer to send raw data over a data channel. The instance is created by WSCDataTransfer.

-

WSCDataReceiver

The WSCDataReceiver object exposes the capability of a WSCDataTransfer to receive raw data over the established data channel. The instance is created by WSCDataTransfer.

-

onDataTransfer

The onDataTransfer method of the WSCCallObserverDelegate protocol is called to indicate that a WSCDataTransfer object has been created.

-

WSCDataTransferObserverDelegate

The WSCDataTransferObserverDelegate acts as an observer protocol for WSCDataTransfer.

Your application can implement this protocol to be informed of changes in WSCDataTransfer.

-

WSCDataReceiverObserverDelegate

The WSCDataReceiverObserverDelegate acts as an observer protocol for WSCDataReceiver, the receiver of the data transfer.

For more information on these and other WebRTC Session Controller iOS API classes, see AllClasses at Oracle Communications WebRTC Session Controller iOS API Reference.

About the Sample Code Excerpts in This Section

The sample code excerpts shown in this section are taken from a sample iOS application that supports data channels. The sample interface implements the WSCDataTransferObserverDelegate and WSCDataReceiverObserverDelegate protocols in addition to the existing WSCCallObserverDelegate protocol. Each excerpt is kept simple and illustrates only the functionality under discussion.

About the Data Transfers and Data Channels

If the data channel is enabled, then, for both incoming and outgoing calls, a WSCDataTransfer object is created and passed back to your iOS application in the callback for the onDataTransfer method of the WSCCallObserverDelegate protocol.

Setting Up DataTransferObserverDelegate Protocol to Handle Data Transfers

When the onDataTransfer method of the WSCCallObserverDelegate protocol object is called, set the data transfer observer delegate to be informed of changes in the data transfer.

-(void)onDataTransfer:(WSCDataTransfer *)dataTransfer {

// register delegate to listen to the state change

dataTransfer.observerDelegate = self;

// keep this data transfer object for later use

_dataTransfer = dataTransfer;

}

In order for your application to respond to the various states of the data channel, implement the following methods of the WSCDataTransferObserverDelegate protocol:

-

onOpen

- (void)onOpen:(WSCDataTransfer *)dataTransfer

This method is called when the data channel of the WSCDataTransfer object is open. The state of the WSCDataTransfer object is denoted by the enum value WSCDataTransferOpen.

Your application can send and receive messages, as appropriate.

-

onClose

This method is called when the data channel of the WSCDataTransfer object is closed. The state of the WSCDataTransfer object is denoted by the enum value WSCDataTransferClosed.

-

onError

- (void)onError:(WSCDataTransfer *)dataTransfer

This method is called when the data channel of the WSCDataTransfer object encounters an error. The state of the WSCDataTransfer object is denoted by the enum value WSCDataTransferError.
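The three callbacks described above might be implemented along the following lines. This is a hedged sketch rather than the sample application's code: the onOpen and onError signatures are the ones given in this section, the onClose signature is assumed by analogy with them, and _dataTransfer is the instance variable saved in the onDataTransfer callback. Releasing that reference on close is an illustrative choice, not an SDK requirement.

```objc
#pragma mark WSCDataTransferObserverDelegate

// Called when the data channel is open (state WSCDataTransferOpen).
// The application can start sending and receiving data from this point.
- (void)onOpen:(WSCDataTransfer *)dataTransfer {
    NSLog(@"Data channel is open");
}

// Called when the data channel is closed (state WSCDataTransferClosed).
// NOTE: this signature is assumed by analogy with onOpen and onError.
- (void)onClose:(WSCDataTransfer *)dataTransfer {
    NSLog(@"Data channel is closed");
    // Drop the saved reference so that no further sends are attempted.
    if (_dataTransfer == dataTransfer) {
        _dataTransfer = nil;
    }
}

// Called when the data channel encounters an error (state WSCDataTransferError).
- (void)onError:(WSCDataTransfer *)dataTransfer {
    NSLog(@"Data channel encountered an error");
}
```

Keeping each handler limited to logging and simple state updates makes it easier to attach user interface changes later, such as enabling a send control only after onOpen is called.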

Initialize the CallPackage Object

When you built your session, you registered a new WSCCallPackage object using the withPackage method of the WSCSessionBuilder class. You now retrieve that WSCCallPackage object from the session, as shown in Example 13-41.

Example 13-41 Initializing the CallPackage

WSCCallPackage *callPackage = (WSCCallPackage*)[wscSession getPackage:PACKAGE_TYPE_CALL];

Use the default PACKAGE_TYPE_CALL call type unless you have defined a custom call type. For more information, see "Initialize the CallPackage Object" under the audio call section of this chapter.

Sending Data from Your iOS Application

To send data from your iOS application, complete the coding tasks contained in the following sections.

-

Configure Interactive Connectivity Establishment (ICE) (if necessary)

Complete the coding tasks for an audio call contained in the following sections:

-

Configure Trickle ICE (if necessary)

-

Register the WSCCallObserverDelegate Protocol with the Call Object

Configure the Data Channel for the Data Transfers

Configure the data channel with WSCDataChannelConfig before you set up the WSCCallConfig object. The WebRTC Session Controller client iOS SDK supports multiple data channels in a call.

If you create one WSCDataChannelConfig object for a call, then one instance of the WSCDataTransfer object is created to support the call. Assign a label to the data channel configuration object so that your application can access the corresponding WSCDataTransfer object by this label.

Example 13-42 shows one data channel that is assigned the label "sample".

Example 13-42 Configuring a Single Data Channel for the Call

...

// Set up WSCDataChannelOption

WSCDataChannelOption *option = [[WSCDataChannelOption alloc] init];

// Set various options on WSCDataChannelOption

// For example, option.maxRetransmits = 5;

// Create WSCDataChannelConfig

WSCDataChannelConfig *dcConfig = [[WSCDataChannelConfig alloc] initWithLabel:@"sample" withOption:option];

NSArray *dcConfigs = [[NSArray alloc] initWithObjects:dcConfig, nil];

...

You can create multiple WSCDataChannelConfig objects. When you do so, the WebRTC Session Controller iOS SDK creates the required number of WSCDataTransfer objects to support your requirement.

Example 13-43 defines two data channels, each with its own label, and places them in the myDataChannelConfigs array, which is then passed to the WSCCallConfig object.

Example 13-43 Configuring Multiple Data Channels for the Call

// This code sample sets up 2 different data channels both using default values.

WSCDataChannelOption *firstDcOption = [[WSCDataChannelOption alloc] init];

WSCDataChannelConfig *firstDcConfig = [[WSCDataChannelConfig alloc] initWithLabel:@"firstDataChannel" withOption:firstDcOption];

WSCDataChannelOption *secondDcOption = [[WSCDataChannelOption alloc] init];

WSCDataChannelConfig *secondDcConfig = [[WSCDataChannelConfig alloc] initWithLabel:@"secondDataChannel" withOption:secondDcOption];

// Create an array containing both data channel definitions.

NSArray *myDataChannelConfigs = [[NSArray alloc] initWithObjects:firstDcConfig, secondDcConfig, nil];

// Finally initialise the call configuration by including both data channels.

WSCCallConfig* myCallConfig = [[WSCCallConfig alloc] initWithAudioDirection:audioMediaDirection

withVideoDirection:videoMediaDirection

withDataChannel:myDataChannelConfigs];

Handling the Data Channel States

Implement the onOpen, onClose, and onError methods of the WSCDataTransferObserverDelegate protocol so that your application can respond to changes in the state of the data channel.

For a description of the methods, see "Setting Up DataTransferObserverDelegate Protocol to Handle Data Transfers".

Create a WSCCallConfig Object with Data Channel Option

Having defined the data channel setup for the call, you can now create a WSCCallConfig object to determine the type of call you wish to make.