9 Managing Storage

Expand storage capacity and replace disks in Oracle Database Appliance.

- About Managing Storage

You can add storage at any time without shutting down your databases or applications.

- Storage on Single Node Platforms

Review the storage and memory options for Oracle Database Appliance X7-2S and X7-2M single node platforms.

- Storage on Multi Node Platforms

Review the storage and memory options for Oracle Database Appliance X7-2-HA multi node platforms.

About Managing Storage

You can add storage at any time without shutting down your databases or applications.

Oracle Database Appliance uses raw storage to protect data in the following ways:

- Fast Recovery Area (FRA) backup. FRA is a storage area (a directory on disk or an Oracle ASM disk group) that contains redo logs, control files, archived logs, backup pieces and copies, and flashback logs.

- Mirroring. Double or triple mirroring provides protection against mechanical issues.

The amount of available storage depends on the location of the FRA backup (external or internal) and on whether double or triple mirroring is used. External NFS storage is supported for online backups, data staging, or additional database files.
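As a rough illustration of how mirroring affects available space, the following Python sketch divides raw capacity by the number of mirrored copies and then splits the result between DATA and RECO. The function name and the `reco_fraction` parameter are hypothetical; the actual DATA/RECO split depends on where you place the FRA backup, and the sketch ignores Oracle ASM metadata and reserve overhead.

```python
# Minimal sketch: the helper name and reco_fraction are hypothetical,
# not part of any Oracle Database Appliance tool.

# Number of copies Oracle ASM keeps of each extent at each mirroring level.
MIRROR_COPIES = {"double": 2, "triple": 3}


def estimate_usable_tb(raw_tb: float, mirroring: str,
                       reco_fraction: float = 0.0) -> tuple[float, float]:
    """Return an approximate (DATA, RECO) split of usable capacity in TB.

    reco_fraction is an assumed share of usable space set aside for RECO,
    for example when the FRA backup is kept on internal storage.
    ASM metadata and rebalance headroom are ignored.
    """
    if mirroring not in MIRROR_COPIES:
        raise ValueError(f"unknown mirroring level: {mirroring!r}")
    if not 0.0 <= reco_fraction < 1.0:
        raise ValueError("reco_fraction must be in [0, 1)")
    usable = raw_tb / MIRROR_COPIES[mirroring]
    reco = usable * reco_fraction
    return usable - reco, reco


if __name__ == "__main__":
    # 16 TB raw SSD, compared under double and triple mirroring.
    print(estimate_usable_tb(16, "double"))
    print(estimate_usable_tb(16, "triple"))
```

Dividing by the copy count is why triple mirroring leaves roughly one third of raw capacity usable, compared with one half for double mirroring.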

Oracle Database Appliance X7-2M and X7-2-HA models provide storage expansion options from the base configuration. In addition, Oracle Database Appliance X7-2-HA multi-node platforms have an optional storage expansion shelf.

When you add storage, Oracle Automatic Storage Management (Oracle ASM) automatically rebalances the data across all of the storage including the new drives. Rebalancing a disk group moves data between disks to ensure that every file is evenly spread across all of the disks in a disk group and all of the disks are evenly filled to the same percentage. Oracle ASM automatically initiates a rebalance after storage configuration changes, such as when you add disks.
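To make the "evenly filled to the same percentage" goal concrete, this Python sketch computes the common fill level and the per-disk target that a rebalance aims for after disks are added. It models only the end state; it is not Oracle ASM's rebalance algorithm, and the function name is hypothetical.

```python
# Hypothetical helper modeling only the end state of a rebalance.
def rebalance_targets(used_tb: float,
                      disk_sizes_tb: list[float]) -> tuple[float, list[float]]:
    """Return the common fill fraction and the target used space per disk.

    After a rebalance, every disk holds the same percentage of its own
    capacity, so each disk's share is proportional to its size.
    """
    total = sum(disk_sizes_tb)
    if total <= 0:
        raise ValueError("disk group has no capacity")
    if used_tb > total:
        raise ValueError("used space exceeds disk group capacity")
    fill = used_tb / total
    return fill, [size * fill for size in disk_sizes_tb]


if __name__ == "__main__":
    five_disks = [3.2] * 5
    ten_disks = [3.2] * 10
    # The same 8 TB of data before and after adding five more 3.2 TB disks.
    print(rebalance_targets(8.0, five_disks))  # fill is about 50%
    print(rebalance_targets(8.0, ten_disks))   # fill is about 25%
```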

The redundancy level for FLASH is based on the redundancy level you select for DATA and RECO. If you choose High redundancy (triple mirroring), then FLASH also uses High redundancy.

WARNING:

Pulling a drive before powering it off will crash the kernel, which can lead to data corruption. Do not pull the drive while its LED is amber or green. To replace an NVMe drive, use the software to power off the drive before pulling it from the slot. If you have more than one disk to replace, complete the replacement of one disk before starting the replacement of the next.

See “Adding Optional Oracle Database Appliance X7-2-HA Storage Shelf Drives (CRU)” in the Oracle Database Appliance Service Manual for disk placement.

Storage on Single Node Platforms

Review the storage and memory options for Oracle Database Appliance X7-2S and X7-2M single node platforms.

- Memory and Storage Options for Single Node Systems

Oracle Database Appliance X7-2S and X7-2M have NVMe storage configurations with expansion memory and storage options.


Memory and Storage Options for Single Node Systems

Oracle Database Appliance X7-2S and X7-2M have NVMe storage configurations with expansion memory and storage options.

Table 9-1 Storage Options for Oracle Database Appliance X7-2S and X7-2M

| Configuration | Oracle Database Appliance X7-2S | Oracle Database Appliance X7-2M |
|---|---|---|
| Base Configuration | 1 x 10 CPU, 192 GB memory, 2 x 6.4 TB NVMe | 2 x 18 CPU, 384 GB memory, 2 x 6.4 TB NVMe |
| Expansion Options | 192 GB memory (part number 7117433) | Options: |


Storage on Multi Node Platforms

Review the storage and memory options for Oracle Database Appliance X7-2-HA multi node platforms.

- About Expanding Storage on Multi-Node Systems

Oracle Database Appliance X7-2-HA platforms have options for high performance and high capacity storage configurations.

- Preparing for a Storage Upgrade for a Virtualized Platform

Review and perform these best practices before adding storage to the base shelf or adding the expansion shelf.


About Expanding Storage on Multi-Node Systems

Oracle Database Appliance X7-2-HA platforms have options for high performance and high capacity storage configurations.

The base configuration has 16 TB SSD raw storage for DATA and 3.2 TB SSD raw storage for REDO, leaving 15 available slots to expand the storage. If you choose to expand the storage, you can fill the 15 slots with either SSD or HDD drives. For even more storage, you can add a storage expansion shelf to double the storage capacity of your appliance.

In all configurations, the base storage shelf and the storage expansion shelf each have four (4) 800 GB SSDs for the REDO disk group and five (5) 3.2 TB SSDs (used for DATA/RECO in the SSD option or for FLASH in the HDD option).
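The base shelf figures above follow directly from the drive counts. This Python sketch reproduces the arithmetic in GB, treating 1 TB as 1,000 GB (as the 3.2 TB = 4 x 800 GB figure implies). The 24-slot total is derived here from the 9 fixed drives plus the 15 open slots; it is not stated explicitly in this section.

```python
# Drive counts and sizes from the text; capacities are raw (before mirroring).
REDO_SSD_COUNT, REDO_SSD_GB = 4, 800
DATA_SSD_COUNT, DATA_SSD_GB = 5, 3_200
AVAILABLE_SLOTS = 15


def shelf_summary() -> dict[str, int]:
    """Summarize the fixed drives on one storage shelf (raw GB and slot counts)."""
    return {
        "redo_raw_gb": REDO_SSD_COUNT * REDO_SSD_GB,  # 4 x 800 GB
        "ssd_raw_gb": DATA_SSD_COUNT * DATA_SSD_GB,   # 5 x 3.2 TB
        "occupied_slots": REDO_SSD_COUNT + DATA_SSD_COUNT,
        "total_slots": REDO_SSD_COUNT + DATA_SSD_COUNT + AVAILABLE_SLOTS,
    }


if __name__ == "__main__":
    for key, value in shelf_summary().items():
        print(f"{key}: {value}")
```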

Note:

The base storage shelf must be fully populated before you can add an expansion shelf and the expansion shelf must have the same storage configuration as the base shelf. Once you select a base configuration, you cannot change the type of storage expansion.
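The rules in this note amount to a precondition check. The `Shelf` class and `can_add_expansion_shelf` function in this Python sketch are hypothetical and for illustration only; the 24-slot figure is an assumption derived from the 9 fixed SSDs plus the 15 open slots per shelf.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical model for illustration only; not an Oracle Database Appliance API.
SHELF_SLOTS = 24  # assumed: 9 fixed SSDs + 15 expansion slots per shelf


@dataclass
class Shelf:
    populated_slots: int
    expansion_drive_type: Optional[str]  # "SSD", "HDD", or None if no expansion drives


def can_add_expansion_shelf(base: Shelf, expansion: Shelf) -> tuple[bool, str]:
    """Apply the two rules from the note: full base shelf, matching configuration."""
    if base.populated_slots < SHELF_SLOTS:
        return False, "base shelf must be fully populated first"
    if expansion.expansion_drive_type != base.expansion_drive_type:
        return False, "expansion shelf must match the base shelf configuration"
    return True, "ok"


if __name__ == "__main__":
    print(can_add_expansion_shelf(Shelf(24, "SSD"), Shelf(24, "SSD")))
    print(can_add_expansion_shelf(Shelf(19, "SSD"), Shelf(24, "SSD")))
    print(can_add_expansion_shelf(Shelf(24, "SSD"), Shelf(24, "HDD")))
```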

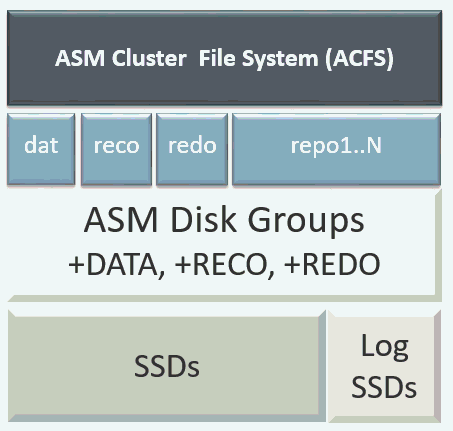

High Performance

A high performance configuration uses solid state drives (SSDs) for DATA and REDO storage. The base configuration has 16 TB SSD raw storage for DATA and 3.2 TB SSD raw storage for REDO.

You can add up to three (3) 5-packs of SSDs to the base configuration, for a total of 64 TB SSD raw storage. If you need more storage, you can double the capacity by adding an expansion shelf of SSD drives. The expansion shelf provides an additional 64 TB SSD raw storage for DATA, 3.2 TB SSD raw storage for REDO, and 16 TB SSD raw storage for FLASH.

Adding an expansion shelf requires that the base storage shelf and expansion shelf are fully populated with SSD drives. When you expand the storage using only SSD, there is no downtime.
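The high performance expansion steps add DATA capacity in 16 TB increments (one 5-pack of 3.2 TB SSDs). This Python sketch reproduces that arithmetic in raw GB for DATA only, excluding REDO and FLASH. The 128 TB two-shelf total is derived from 64 TB on the base shelf plus the additional 64 TB on the expansion shelf; the function name is hypothetical.

```python
# Sketch of the high performance (SSD only) expansion arithmetic.
# Sizes are raw GB from the text; 1 TB is treated as 1,000 GB.
PACK_RAW_GB = 5 * 3_200         # one 5-pack of 3.2 TB SSDs = 16 TB raw
BASE_DATA_RAW_GB = PACK_RAW_GB  # base configuration: 16 TB SSD for DATA
MAX_PACKS_PER_SHELF = 3


def ssd_data_raw_gb(packs_added: int, shelves: int = 1) -> int:
    """Raw SSD DATA capacity (GB) for a high performance configuration."""
    if not 0 <= packs_added <= MAX_PACKS_PER_SHELF:
        raise ValueError("add between 0 and 3 5-packs per shelf")
    if shelves not in (1, 2):
        raise ValueError("use one base shelf and at most one expansion shelf")
    if shelves == 2 and packs_added != MAX_PACKS_PER_SHELF:
        # An expansion shelf requires both shelves to be fully populated.
        raise ValueError("both shelves must be fully populated")
    return shelves * (BASE_DATA_RAW_GB + packs_added * PACK_RAW_GB)


if __name__ == "__main__":
    for packs in range(MAX_PACKS_PER_SHELF + 1):
        print(f"base shelf + {packs} 5-pack(s): {ssd_data_raw_gb(packs) // 1000} TB")
    print(f"with expansion shelf: {ssd_data_raw_gb(3, shelves=2) // 1000} TB")
```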

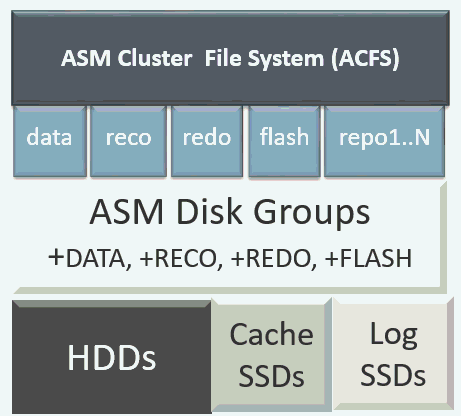

High Capacity

A high capacity configuration uses a combination of SSD and HDD drives.

The base configuration has 16 TB SSD raw storage for DATA and 3.2 TB SSD raw storage for REDO.

The following expansion options are available:

- Base shelf: an additional 150 TB HDD raw storage for DATA (a 15-pack of 10 TB HDDs).

- HDD expansion shelf: an additional 150 TB HDD raw storage for DATA, 3.2 TB SSD raw storage for REDO, and 16 TB SSD raw storage for FLASH.

Note:

When you expand storage to include HDD, you must reposition the drives to the correct slots and redeploy the appliance after adding the HDD drives.

A system fully configured for high capacity has 300 TB HDD raw storage for DATA, 6.4 TB SSD raw storage for REDO, and 32 TB SSD raw storage for FLASH.
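The fully configured totals are the per-shelf figures multiplied by two shelves. This Python sketch shows that arithmetic in raw GB, treating 1 TB as 1,000 GB; the dictionary and function names are illustrative only.

```python
# Raw capacity per shelf in GB for the high capacity (SSD + HDD) option.
# 1 TB is treated as 1,000 GB.
PER_SHELF_RAW_GB = {
    "DATA (HDD)": 15 * 10_000,  # 15-pack of 10 TB HDDs
    "REDO (SSD)": 4 * 800,      # four 800 GB SSDs
    "FLASH (SSD)": 5 * 3_200,   # five 3.2 TB SSDs
}


def high_capacity_raw_gb(shelves: int) -> dict[str, int]:
    """Total raw GB per tier: fully populated base shelf (1) or base + expansion (2)."""
    if shelves not in (1, 2):
        raise ValueError("use one base shelf and at most one expansion shelf")
    return {tier: gb * shelves for tier, gb in PER_SHELF_RAW_GB.items()}


if __name__ == "__main__":
    for tier, gb in high_capacity_raw_gb(2).items():
        print(f"{tier}: {gb / 1000:g} TB")
```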

Table 9-2 Storage Options for Oracle Database Appliance X7-2-HA

| Configuration | Oracle Database Appliance X7-2-HA Base Configuration | Oracle Database Appliance X7-2-HA SSD Only Configuration for High Performance | Oracle Database Appliance X7-2-HA SSD and HDD Configuration for High Capacity |
|---|---|---|---|
| Base Configuration | 2 servers, each with:<br>JBOD: | 2 servers, each with:<br>JBOD: | 2 servers, each with:<br>JBOD: |
| Expansion Options | Options: | Options: | Options: |


Preparing for a Storage Upgrade for a Virtualized Platform

Review and perform these best practices before adding storage to the base shelf or adding the expansion shelf.
