Every Oracle Private Cloud at Customer rack is shipped with all the required network equipment and cables to support the Oracle Private Cloud at Customer environment. Depending on your particular deployment, this includes InfiniBand and Ethernet cabling between the base rack and the Oracle ZFS Storage Appliance ZS7-2, as well as additional Oracle Private Cloud switches and hardware running the Oracle Advanced Support Gateway and Oracle Cloud Control Plane. Every base rack also contains pre-installed cables for all rack units where additional compute nodes can be installed during a future expansion of the environment.

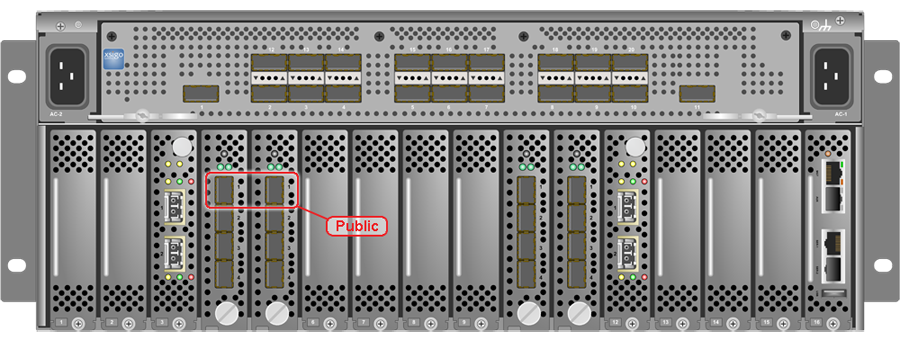

Before the Oracle Private Cloud at Customer system is powered on for the first time, the Fabric Interconnects must be properly connected to the next-level data center switches. You must connect two 10 Gigabit Ethernet (GbE) IO module ports labeled “Public” on each Fabric Interconnect to the data center public Ethernet network.

Figure 4.1 shows the location of the 10 GbE Public IO module ports on the Oracle Fabric Interconnect F1-15.

It is critical that each of the two Fabric Interconnects has two 10 GbE connections to a pair of next-level data center switches. This configuration, with four cable connections in total, provides redundancy and load splitting at the level of the Fabric Interconnects, the 10 GbE ports, and the data center switches. Do not cross or mesh this outbound cabling, because the internal connections to the pair of Fabric Interconnects are already configured that way. The cabling pattern plays a key role in maintaining service during failover scenarios involving the outage of a Fabric Interconnect or another component.
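The counts in this requirement can be checked against a written cabling plan before any cables are run. The following Python sketch is illustrative only and is not an Oracle tool: the `Uplink` record, the `check_uplink_plan` function, and all labels are hypothetical. It verifies only the numbers stated above (two Fabric Interconnects, two uplinks each, a pair of data center switches) and deliberately does not encode the rule against crossed or meshed cabling, which must still be confirmed against Oracle's cabling guidance.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Uplink:
    """One cable from a Fabric Interconnect "Public" port to a data center switch."""
    fabric_interconnect: str  # hypothetical label, for example "FI-A"
    public_port: str          # hypothetical label for a 10 GbE Public IO module port
    dc_switch: str            # hypothetical label for a next-level data center switch


def check_uplink_plan(uplinks):
    """Return a list of problems; an empty list means the counts match the text."""
    problems = []

    # Both Fabric Interconnects must be present, with two uplinks each.
    per_fi = Counter(u.fabric_interconnect for u in uplinks)
    if len(per_fi) != 2:
        problems.append(f"expected 2 Fabric Interconnects, found {len(per_fi)}")
    for fi, count in sorted(per_fi.items()):
        if count != 2:
            problems.append(f"{fi}: expected 2 uplinks, found {count}")

    # The uplinks must land on a pair of data center switches.
    switches = {u.dc_switch for u in uplinks}
    if len(switches) != 2:
        problems.append(
            f"expected a pair of data center switches, found {len(switches)}"
        )

    # The same physical port cannot carry two cables.
    port_use = Counter((u.fabric_interconnect, u.public_port) for u in uplinks)
    for (fi, port), count in sorted(port_use.items()):
        if count > 1:
            problems.append(f"{fi} port {port} is listed {count} times")

    return problems
```

Calling `check_uplink_plan` with a list of four `Uplink` records returns an empty list when the counts are correct, and one message per violated count otherwise.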

The IO modules support only 10 GbE transport and cannot be connected to Gigabit Ethernet (1 GbE) switches. The Oracle Private Cloud at Customer system must be connected externally to 10 GbE optical switch ports.

It is not possible to configure any type of link aggregation group (LAG) across the 10 GbE ports: LACP, network or interface bonding, and similar methods of combining multiple network connections are not supported.
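The port-level constraints (10 GbE only, optical ports, no link aggregation) can be expressed as a simple pre-installation check on the data center switch ports reserved for the system. This Python sketch is a minimal illustration under stated assumptions: the `SwitchPort` record, its fields, and `check_switch_port` are hypothetical names, and the values would have to be filled in by hand from your own switch inventory rather than read from any Oracle or switch-vendor API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SwitchPort:
    """A data center switch port reserved for a Fabric Interconnect uplink."""
    name: str                 # hypothetical port label on the data center switch
    speed_gbps: int           # configured port speed in Gbit/s
    media: str                # "optical" or "copper"
    lag_member: bool = False  # True if the port belongs to a LAG, LACP group, or bond


def check_switch_port(port):
    """Return a list of problems; an empty list means the port meets the constraints."""
    problems = []
    if port.speed_gbps != 10:
        problems.append(f"{port.name}: {port.speed_gbps} GbE port, 10 GbE required")
    if port.media != "optical":
        problems.append(f"{port.name}: {port.media} port, optical port required")
    if port.lag_member:
        problems.append(f"{port.name}: link aggregation is not supported")
    return problems
```

Running the check over every reserved port and collecting the messages gives a short list of items to correct on the data center side before the system is powered on.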

To provide additional bandwidth to the environment hosted on Oracle Private Cloud at Customer, additional custom networks can be configured. Please contact your Oracle representative for more information.