After you prepare the data in the required .csv templates, use the Pre-Authenticated Request (PAR) URL shown for the Object Storage to access the bucket. To upload data files into the Object Storage, supply the .csv file path, the PAR URL, and the .csv file name to an HTTP utility such as cURL. The PAR URL is refreshed every seven days. Multiple users can load data into the Object Storage concurrently from different locations. If the data files require corrections, modify the .csv files and upload them again using the same PAR URL. The modified data files overwrite the previously loaded data files in the Object Storage.

NOTE:

· You cannot download or delete data files after you upload them to the Object Storage.

· The maximum size for an uploaded object (data file) is 10 TiB.

· Object parts must be no larger than 50 GiB.

If there are any issues with the file upload, you must contact My Oracle Support.
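The size limits in the note can be checked locally before an upload is attempted. The following is a minimal sketch and not part of the product: `size_within_limit` and `file_size_bytes` are hypothetical helper names introduced here for illustration, and the file path in the usage comment is only an example.

```shell
#!/bin/sh
# Limits quoted in the note above, expressed in bytes.
MAX_OBJECT_BYTES=$((10 * 1024 * 1024 * 1024 * 1024))   # 10 TiB
MAX_PART_BYTES=$((50 * 1024 * 1024 * 1024))            # 50 GiB

# size_within_limit <size-in-bytes> <limit-in-bytes>   (hypothetical helper)
# Succeeds (exit status 0) when the size does not exceed the limit.
size_within_limit() {
    [ "$(( $1 <= $2 ))" -eq 1 ]
}

# file_size_bytes <path>   (hypothetical helper)
# Prints the size of a file in bytes.
file_size_bytes() {
    wc -c < "$1" | tr -d ' '
}

# Example usage (illustrative path):
#   size=$(file_size_bytes /filepath/20201218_STG_CASA_TXNS_1.csv)
#   size_within_limit "$size" "$MAX_OBJECT_BYTES" || echo "File exceeds 10 TiB" >&2
```

A check like this only guards against the documented limits. It does not replace the status check on the upload response.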

For every instance of OFS FCCM provisioned, two buckets are created: a Standard Storage Bucket and an Archive Storage Bucket.

· Standard Storage Bucket: This storage bucket is accessed daily to load data. This bucket stores data for seven days. After seven days, data files are archived into the Archive Storage Bucket. This bucket is also used to process data from the Object Storage to the staging tables.

· Archive Storage Bucket: This storage bucket holds data that is accessed rarely, for example, weekly or monthly. You cannot load data files into this bucket directly. Data files are archived into this bucket from the Standard Storage Bucket after seven days. An archived data file is preserved for one year. After one year, the archived data files are deleted from this bucket.

Use this section to access the Standard Storage Bucket using the Pre-Authenticated URL.

To access the Object Storage Pre-Authenticated URL, follow these steps:

1. In the browser, enter the application URL provided by your Administrator. The Oracle Cloud Account Sign In page is displayed.

2. Enter the User Name or Email and Password provided by the Administrator.

3. Click Sign In. The Home page is displayed.

4. From the Home page, click the Admin Console icon. The Admin Console page is displayed.

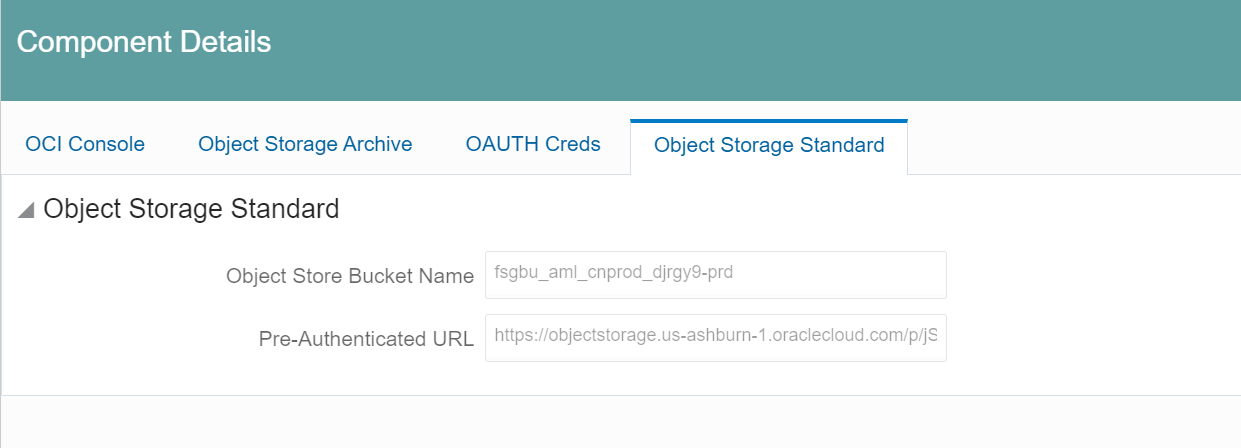

5. Click the Component Details tile. The Component Details window is displayed.

6. Click the Object Storage Standard tab.

The Object Storage Standard pane is displayed with two fields:

§ Object Store Bucket Name: Provides the details of the bucket name where you are loading the data files. For example, fsgbu_aml_cndevcorp_qufspr.

§ Object Store PAR URL: This URL gives you access to the Object Store Bucket so that you can load data files into the Object Storage.

7. Copy the Object Store PAR URL. For example, https://objectstorage.us-phoenix-1.oraclecloud.com/p/cYMpe4ovWjPN0vF_VS1b4STTTRkCsVtcNMIAxnC7pJM/n/oraclegbudevcorp/b/fsgbu_aml_cndevcorp_uklfff/o/
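Judging from the example above, the PAR URL carries a namespace after `/n/` and the bucket name after `/b/`, and it ends in `/o/` so that a file name can be appended directly. The sketch below, written under that assumption, checks a copied URL and extracts the bucket name so it can be compared with the Object Store Bucket Name field. The helper names `par_url_is_well_formed` and `par_url_field` are hypothetical.

```shell
#!/bin/sh
# par_url_is_well_formed <PAR URL>   (hypothetical helper)
# Succeeds when the URL has the /p/.../n/.../b/.../o/ shape seen in the example.
par_url_is_well_formed() {
    case "$1" in
        https://*/p/*/n/*/b/*/o/) return 0 ;;
        *) return 1 ;;
    esac
}

# par_url_field <PAR URL> <marker>   (hypothetical helper)
# Prints the path segment that follows /<marker>/ (use n for the namespace,
# b for the bucket name).
par_url_field() {
    printf '%s\n' "$1" | sed -n "s#.*/$2/\([^/]*\)/.*#\1#p"
}

# Example usage with the URL from step 7:
#   url='https://objectstorage.us-phoenix-1.oraclecloud.com/p/cYMpe4ovWjPN0vF_VS1b4STTTRkCsVtcNMIAxnC7pJM/n/oraclegbudevcorp/b/fsgbu_aml_cndevcorp_uklfff/o/'
#   par_url_is_well_formed "$url" && par_url_field "$url" b
```

A URL that was copied without its trailing slash fails the shape check, which helps catch a file name being fused onto the `/o` segment.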

To upload the data into the Object Storage, follow these steps:

1. Open the command prompt and enter the following cURL command to upload the data.

curl -v -X PUT --data-binary '@<full file path>' <PAR URL><file name>

Table 3 describes the placeholders of the cURL command.

| Placeholder | Description |
| --- | --- |
| <full file path> | Enter the path of the file. For example, /filepath/20201218_STG_CASA_TXNS_1.csv |
| <PAR URL> | Paste the copied PAR URL. For example, https://objectstorage.us-phoenix-1.oraclecloud.com/p/IWWPtdM1MNr_VG-I2p5YJldIxnNgAwbMHdrTfnqr3rM/n/oraclegbudevcorp/b/fsgbu_aml_cndevcorp_qufspr/o/ |
| <file name> | Enter the file name. For non-split files, use the format YYYYMMDD_Tablename.CSV. For split files, use the format YYYYMMDD_Tablename_#.CSV. NOTE: For information about configuring Multiple Data Origin, see Multiple Data Origin Support. |

For example:

curl -v -X PUT --data-binary @/filepath/20201218_STG_CASA_TXNS_1.csv https://objectstorage.us-phoenix-1.oraclecloud.com/p/IWWPtdM1MNr_VG-I2p5YJldIxnNgAwbMHdrTfnqr3rM/n/oraclegbudevcorp/b/fsgbu_aml_cndevcorp_qufspr/o/20201218_STG_CASA_TXNS_1.csv

2. Press Enter. When the upload succeeds, the data is pushed into the Object Storage Standard Bucket.

NOTE:

· The response status line in the cURL output must be: < HTTP/1.1 200 OK

· If there is any error message, you must provide the correct details and try again. If this issue persists, contact My Oracle Support.

· So that all required data files are processed, you must also upload the File Watcher file, named in the yyyymmdd_filewatcher.txt format, to the Object Storage. The data loading process is not initiated until this file is available in the Object Storage.

· If the data loading batch is initiated but the File Watcher file is not present in the Object Storage, the batch waits for the file to be uploaded. The batch waits for the File Watcher file for up to five hours.
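The upload and the status check from the notes above can be combined in a small wrapper. This is a sketch under stated assumptions, not an official script: `build_object_url`, `filewatcher_name`, and `upload_file` are hypothetical names, and the wrapper uses cURL's `-w '%{http_code}'` option to read the status code instead of scanning the verbose output. The required content of the File Watcher file is not described here, so the sketch only derives its name.

```shell
#!/bin/sh
# build_object_url <PAR URL> <file name>   (hypothetical helper)
# The PAR URL ends in /o/, so the file name is appended directly.
build_object_url() {
    printf '%s%s\n' "$1" "$2"
}

# filewatcher_name <YYYYMMDD>   (hypothetical helper)
# Prints the File Watcher file name for the given date.
filewatcher_name() {
    printf '%s_filewatcher.txt\n' "$1"
}

# upload_file <full file path> <PAR URL>   (hypothetical helper)
# Uploads one file with the same PUT request as the documented command and
# succeeds only when the response status code is 200.
upload_file() {
    file_path=$1
    par_url=$2
    file_name=$(basename "$file_path")
    status=$(curl -s -o /dev/null -w '%{http_code}' -X PUT \
        --data-binary "@${file_path}" \
        "$(build_object_url "$par_url" "$file_name")")
    if [ "$status" = "200" ]; then
        echo "Uploaded ${file_name}"
    else
        echo "Upload of ${file_name} failed with HTTP status ${status}" >&2
        return 1
    fi
}

# Example usage (PAR_URL holds the copied PAR URL; paths are illustrative):
#   upload_file /filepath/20201218_STG_CASA_TXNS_1.csv "$PAR_URL"
#   upload_file "/filepath/$(filewatcher_name 20201218)" "$PAR_URL"
```

Uploading the File Watcher file last, after every data file has returned 200, keeps the batch from starting on an incomplete set of files.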

To load data files from the Object Storage Standard Bucket to the application staging table, see Load Data Files.

The data-loading service supports files from multiple data origins, so that different batches can load data with different Data Origins into the staging tables.

· For Non-Split Format: YYYYMMDD_Tablename_DataOrigin.CSV

For example:

20201218_STG_CASA_TXNS_MAN.csv

20201218_STG_CASA_TXNS_UK.csv

· For Split Format: YYYYMMDD_Tablename_DataOrigin_#.CSV

For example:

20201218_STG_CASA_TXNS_MAN_1.csv

20201218_STG_CASA_TXNS_MAN_2.csv

20230727_STG_CASA_TXNS_UK_1.csv

20230727_STG_CASA_TXNS_UK_2.csv
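The naming formats above can be generated rather than typed by hand. The following sketch assembles a file name from its parts. `data_file_name` is a hypothetical helper, and it emits the lowercase `.csv` extension used in the examples.

```shell
#!/bin/sh
# data_file_name <YYYYMMDD> <table name> [data origin] [split number]
# (hypothetical helper) Pass an empty string as the data origin to build a
# name without a Data Origin.
data_file_name() {
    name="$1_$2"
    if [ -n "${3:-}" ]; then
        name="${name}_$3"
    fi
    if [ -n "${4:-}" ]; then
        name="${name}_$4"
    fi
    printf '%s.csv\n' "$name"
}

# Example usage:
#   data_file_name 20201218 STG_CASA_TXNS MAN      (non-split, origin MAN)
#   data_file_name 20201218 STG_CASA_TXNS MAN 2    (split 2, origin MAN)
#   data_file_name 20201218 STG_CASA_TXNS "" 1     (split 1, no Data Origin)
```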

To execute batches using multiple data origin, update the Schedule Batch parameters as follows:

· $DATAORIGIN$: Set this to the Data Origin name used in the file name. For example, MAN or UK.

· $F_DATAORIGIN$: Set this to True.

§ If the value of $F_DATAORIGIN$ is False, multiple data origins are not considered. The batch picks the CSV files that do not have the Data Origin name in the file name.

§ If the value of $F_DATAORIGIN$ is True, multiple data origins are considered. The batch picks the CSV files that have the Data Origin name in the file name.

NOTE:

There are no changes for existing or single data origin customers.
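To check which of your local files a batch would be expected to pick up, the rule above can be modelled as a small predicate. This illustrates the described behavior only. It is not the service's implementation. The function name `matches_batch` is hypothetical, and the sketch assumes a lowercase `.csv` extension.

```shell
#!/bin/sh
# matches_batch <file name> <YYYYMMDD> <table name> <data origin> <F_DATAORIGIN>
# (hypothetical helper) Succeeds when the file name fits the batch parameters:
#   F_DATAORIGIN=True  -> the name must contain the Data Origin
#   otherwise          -> the name must not contain a Data Origin
matches_batch() {
    file=$1
    prefix="$2_$3"
    if [ "$5" = "True" ]; then
        prefix="${prefix}_$4"
    fi
    rest=${file#"$prefix"}                 # what follows the expected prefix
    [ "$rest" != "$file" ] || return 1     # the prefix did not match
    case "$rest" in
        .csv) return 0 ;;                  # non-split file
        _*.csv)
            split=${rest#_}
            split=${split%.csv}
            case "$split" in
                ''|*[!0-9]*) return 1 ;;   # suffix is not a split number
                *) return 0 ;;             # split file
            esac ;;
        *) return 1 ;;
    esac
}

# Example usage:
#   matches_batch 20201218_STG_CASA_TXNS_MAN_1.csv 20201218 STG_CASA_TXNS MAN True
```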

AES 256 CBC encryption is a symmetric encryption algorithm that uses a 256-bit key to encrypt and decrypt data.

To encrypt a CSV file using AES-256-CBC encryption, follow these general steps:

1. Generate the 256-bit Hex key using the following command:

openssl rand -hex 32 > keyfile.key

2. Save the Master Encryption Key in the ADMIN-CONSOLE UI by navigating to the Configurations tile and selecting the Master Encryption Key tab.

3. Encrypt the data with the AES-256-CBC encryption algorithm, using the encryption key generated above.

AES-256-CBC using OpenSSL:

openssl enc -aes-256-cbc -K b9ffef696fed55193f9aed357ed2481c4d5f1b84a6ac88c8386932dddb3ae120 -iv 00000000000000000000000000000000 -nosalt -p -in /<RelativePath>/20230425_STG_ACCOUNT_ADDRESS.csv -out /<RelativePath>/20230425_STG_ACCOUNT_ADDRESS.csv

-aes-256-cbc: the cipher name (symmetric cipher: AES; mode of operation: CBC, cipher block chaining)

-K: the encryption key, in hexadecimal

-iv: the initialization vector, in hexadecimal

-nosalt: do not add the default salt

-p: print the salt, key, and IV used

-in file: the absolute path of the input file

-out file: the absolute path of the output file

4. Upload the encrypted files to the Object Store as described in Uploading Data into Object Storage.

NOTE:

If files are uploaded without encryption, remove the key (if one exists) from the ADMIN-CONSOLE UI by leaving the Master Encryption Key field blank.
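The encryption step can be wrapped in a helper and verified locally with a round trip before anything is uploaded. This is a minimal sketch under stated assumptions: `encrypt_csv` and `decrypt_csv` are hypothetical names, the all-zero IV mirrors the documented command, and `-p` is omitted so that the key is not printed to the terminal. The sketch writes to a separate output path, because reading and writing the same path in a single `openssl enc` call is likely to truncate the input before it is read.

```shell
#!/bin/sh
# encrypt_csv <hex key> <input file> <output file>   (hypothetical helper)
# Encrypts with AES-256-CBC using the same key, IV, and salt options as the
# documented command.
encrypt_csv() {
    openssl enc -aes-256-cbc -K "$1" \
        -iv 00000000000000000000000000000000 -nosalt \
        -in "$2" -out "$3"
}

# decrypt_csv <hex key> <input file> <output file>   (hypothetical helper)
# Reverses encrypt_csv. Intended only for a local check of the result.
decrypt_csv() {
    openssl enc -d -aes-256-cbc -K "$1" \
        -iv 00000000000000000000000000000000 -nosalt \
        -in "$2" -out "$3"
}

# Example usage (directory names are illustrative; the file name is kept so
# that the encrypted file still follows the naming convention):
#   key=$(cat keyfile.key)
#   encrypt_csv "$key" plain/20230425_STG_ACCOUNT_ADDRESS.csv \
#       encrypted/20230425_STG_ACCOUNT_ADDRESS.csv
```

Decrypting the output into a scratch file and comparing it with the original confirms that the key saved in the Admin Console is the one that was actually used.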