This chapter provides an understanding of the data flow. It explains what happens within data flow and how various processing aspects are integrated with the overall data flow.

Topics:

· Overview of OFS AGILE RP User Interface

· Adjustment Feature for Template-based Reports

· Mapping of Results to Line Items in Reporting

This section explains the input data preparation from OFSAA.

Topics:

· Assumptions for Data Preparation

· Run or Execution Expectations

· Data Flow from Source Systems to Staging Area

· Data Flow from Staging to Results Area

The following are the assumptions for data preparation:

1. RRS is a reporting solution, which uses data from underlying fact tables directly for reporting. The end-user is expected to prepare the load for the required data in the reporting area accordingly. Although this has a thin processing layer to reclassify to regulatory dimensions and bands, all the processing measures are expected to be from respective applications and provide as required.

2. It is integrated with the results area of the respective processing application, and any change in the underlying processing can disturb the RRS data sourcing.

3. Baseline and stress data must be populated with appropriate codes. Inaccurate mappings may lead to inaccurate results.

4. For usage of consolidation dimension (which has values like Actual, Budget, Forecast, and so on), all historical data is expected to be tagged as actual to report vintage data, as per report requirements. For projection data, for a given run and Projection Period (quarter or year), only one set of data is expected to be stored.

Oracle Financial Services Regulatory Reporting for Office of Superintendent of Financial Institutes Canada (OFS REG REP OSFI ) provides the OSFI RUN Chart listing the tasks required for the population of data for OSFI Reports. This covers the following tasks:

· Run chart

· Data Load

· Dimension Data Population

· FSDF Source Run PMF

· OSFI REG RUN PMF

Download the OFS REG REP OSFI 8.1.1.0.0 Run Chart from the MOS.

Run refers to execution. It is assumed that at different periods, a different combination of parameters, and different data require different executions. From a reporting perspective, as required by regulators, data is required for the following executions:

1. Current Data or Execution

a. Reporting month-end data

b. Projection Data

2. Historical (trend or vintage) Data

a. Yearly

b. Quarterly

3. Stressed Data

NOTE:

· For Movement measures data is not carried from one reporting period to another. For example, Profit or Loss. Where General ledger balances such as loan outstanding are carried forward from one year to another, profit and loss are period specific.

· Therefore, unlike End of Period (EoP) balance, movement values for quarter actuals must be derived for reporting. For historical data, net sales for quarter 3 is the difference between the sales figure as of the end of quarters 2 and 3. You are not required to provide this difference as a download. Movement data for actual is identified through different runs and the respective values are summed up.

· Only those records, whose corresponding runs fall between the fiscal month start date and end date of the reporting quarter are selected for summation. Each Run has an associated date and runs can be performed daily. Assuming that runs are performed daily in a given quarter (90 days), RRS sums up data points across all 90 days to arrive at a quarter-end movement figure.

· However, when the projection of net sales for quarter 2 next year is to be performed, no derivation is required. Projections data for the said quarter can be directly downloaded in the respective Fact table (or tables) for reporting.

The staging area is populated with data from various data sources, such as GL data, Account data, Customer data, Trading data, Currency data, and Master data. See the Data Integration Hub (DIH) User Guide in OHC Documentation Library for details. DIH enables to load the data from the source systems to the OFSAA staging tables, through logical interfaces, known as Application Data Interfaces (ADI). DIH provides a set of User Interfaces (UI), which is used to define and maintain External Data Descriptor (EDD), Application Data Interfaces, and map the EDDs and ADIs through connectors.

This section details the pass-through data, transformed data, and classification.

Topics:

· Reclassified to Regulatory Classifications

Pass through data refers to the static data that is pre-processed and flows to the results area directly. The Common Staging Area (CSA) model represents the data entry point into the FSDF. CSA provides a simplified, unified data sourcing area for inputs required by analytical applications and engines. It consists of over 400 tables and nearly 9000 columns organized into distinct subjects.

The staging area is a physical data model, which is deployed using the Analytical Application Infrastructure, which manages it. The design of the staging area data model is to allow efficient data loading for analytics. It thus has crucial differences from a general-purpose repository of operational or transactional data across a bank.

The staging area acts as the single source of data and contains unified data requirements for various banking areas such as Loans and Losses, Off-balance Sheet products, Securities, Derivatives, Capital Data, Management Ledger and General Ledger. A common example of this category includes various monetary amounts, dates, and so on.

After transformation, the regulatory data is reclassified as follows.

Table 3: Data Reclassification Example 1

Source |

Attribute |

Interim Target |

Target |

|---|---|---|---|

DIM PRODUCT |

Withdrawable Reserve |

DIM STANDARD PRODUCT |

DIM REG PRODUCT |

Checking Accounts |

= N |

CASA |

Current Accounts |

Table 4: Data Reclassification Example 2

FCT REG ACCOUNT SUMMARY |

|||

|---|---|---|---|

Account Number |

REG PROD Classification |

Remaining Maturity Band |

Delinquency Band |

1 |

OTHER TERM LOAN |

1 |

3 |

The additional transformations that are performed are:

· Remaining Time to Maturity Band

· Regulatory Delinquency Band

Within reclassification rules, few rules where the source is customer-specific values. In such cases, these rules must be validated and updated as required by you because the ready-to-use rule can differ from what you have. Such rules are very few and restricted to the following:

1. Standard Product Type Reclassification

2. Standard Party Type Reclassification

3. Standard Mitigant Type Reclassification

4. Regulatory Industry Reclassification

5. Regulatory Credit Status Reclassification

6. Regulatory Loan Purpose Reclassification

See Business Metadata for details on these reclassifications.

Regulatory reports make use of data available across several fact tables in the OFSAA data foundation model and these result tables are either loaded from the raw data sourced from source systems via out of the box T2Ts.

· Consistent Usage of Run Identifier

Most of the fact tables used in regulatory reporting are run enabled and have a composite primary key inclusive of run identifier that enables the same snapshot of data to be loaded multiple times into the target fact table for any given execution date. All the out of the box processes that impact data used in regulatory reports are executed as part of an integrated run to ensure that run identifier is consistent across fact tables. Since the reporting is done on an integrated schema, the customs data flow design must keep this integrity intact. This essentially means that the custom ETL processes designed to load the data directly into the fact tables must be able to leverage the run identifier generated by the run engine during execution. Run Identifier information is available in the DIM_RUN table.

· Correct Dimensional Lookup Configuration

Dimensional identifiers are typically part of referential integrity constraints with the fact table so the custom ETL processes must ensure that lookups retrieve a valid surrogate key for a given value of the business key. The intermediate staging structure must ensure all the business keys are persisted correctly and the lookup condition is designed on the correct dimension table.

From the OFSAA technical infrastructure standpoint, the mentioned options are available to the customer to design and implement the custom ETL process explained above. OFSAA strongly recommends the following options to maintain consistency in terms of data lineage in Metadata browser as the configured metadata can be made available in the meta-model through MDB publish:

1. Data Integration Hub (DIH) Connectors

2. Data Mapping (T2T) option in Application Infrastructure

3. Data File Mapping (F2T) option in Application Infrastructure

Topics:

§ Data File Mapping (Flat File to RDBMS Target - F2T)

If you have a licensed DIH to source the data from the external systems into OFSAA, a DIH connector is the recommended approach to load the data into results. The Source data could either reside in a relational structure or a file structure. The mappings maintained in DIH are logical and they abstract the physical references including the Dimensional lookups seamlessly without the need for any additional join or configuration.

See the Data Integration Hub (DIH) User Guide, for more information about loading the data into a result area table.

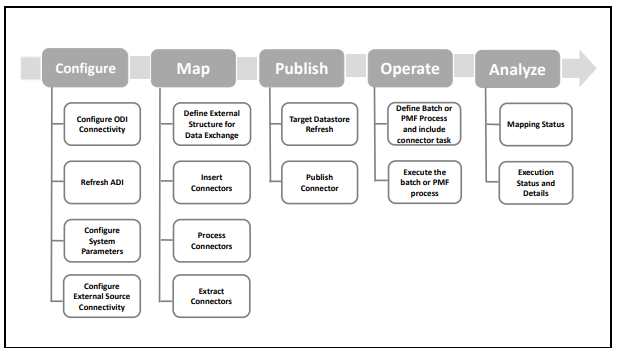

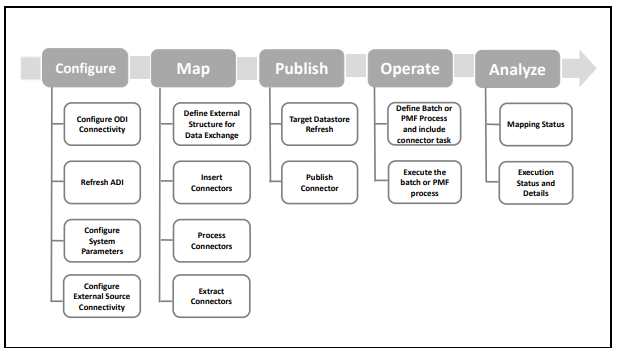

Figure 26: DIH Connectors

Data Mapping refers to the process of retrieving unstructured data from data sources for further data processing, storage, or migration. This feature is commonly known as RDBMS source to RDBMS target (T2T) framework in the OFSAA world and can be leveraged when source data is available in the Oracle database. Dimensional lookups must be handled via the T2T’s join condition and expressions. See the OFSAAI User Guide for more details on configuring a T2T.

If the source data is available in file structures, the OFSAA F2T component can be used to bring the data in the OFSAA ecosystem. As lookups cannot be configured in an F2T, this component must be used in conjunction with the T2T component, that is, data is first loaded from the file to an interim staging structure using the F2T component followed by data load to the target result area table using the T2T component. This is the least recommended approach as there is a need for interim table structure in the data model and involves multiple data hops that add to the overhead.

See the OFSAAI User Guide, for more details on configuring an F2T.

The FSDF entity information is given in the Dimension Tables and Data Elements documents available on the MOS page.

OFS Regulatory Reporting for Office of Superintendent of Financial Institutes Canada - Dimension Tables <release version>

OFS Regulatory Reporting for Office of Superintendent of Financial Institutes Canada - Data Elements <release version>

For all tables with data flow type tagged as a Processing, it is recommended that you map data directly to the result area if the processing application is not part of the OFSAA product suite. For example, Basel computations, RWA Numbers, and Capital Ratio are taken from the processing area which is populated by OFSAA or other Basel applications.

For processed tables, you can look for the following options:

· OFSAA Data Integration Hub (DIH) product

· Flat File

· Table-to-Table Transformation with the source being processing application

The list of processing output tables is available in the OFS Regulatory Reporting for Office of Superintendent of Financial Institutes Canada - Data Elements <release version> document in the MOS page.

This section provides details to log in to the OFSAA application, view report summary, view schedule summary, view cells, and map data schedules. It includes:

· Logging in to OFS REG REP OSFI UI

After the applications are installed and configured, to access the OFS REG REP OSFI UI you must log in to the OFSAAI environment using the OFSAAI login page.

NOTE:

The built-in security system ensures that you are permitted to access the window and actions based on the authorization only.

To access the OFS REG REP OSFI UI, follow these steps:

1. Enter the OFSAAI URL in your browser. The OFSAAI login page is displayed.

Figure 27: OFSAAI Log In

2. Select the desired language from the Language drop-down list.

3. Enter your User ID and Password. When you log into OFSAAI, the OFSAA Applications page is displayed.

Figure 28: OFSAA Applications Screen

4. Select the Financial Services Data Foundation. The FSDF landing page is displayed.

Figure 29: Financial Services Data Foundation Landing Page

5. Or select the Regulatory Reporting for Office of Superintendent of Financial Institutes Canada. The Regulatory Reporting for Office of Superintendent of Financial Institutes Canada landing page is displayed.

Figure 30: Regulatory Reporting for Office of Superintendent of Financial Institutes Canada Page

6. Select the Navigation Menu  in the OFS REG REP OSFI UI to access the following windows:

in the OFS REG REP OSFI UI to access the following windows:

a. Home

b. Data Elements

c. Administration

i. Map Maintenance

ii. Save Metadata

d. Metadata Management

i. Dataset

ii. Build Hierarchy

iii. Measure

iv. Business Processor

v. Derived Entity

vi. Reports

e. Process Modelling Framework

i. Process Modeller

ii. Process Monitor

iii. Process Execution Summary

f. Operations

i. Batch Maintenance

ii. Batch Execution

iii. Batch Monitor

g. Regulatory Data Extract

h. Metadata Browser

i. Report Statistics

The Report Summary data comes pre-seeded based on the applications that are installed. The Report Summary enables you to view all the configured reports for the jurisdiction.

After logging into the OFS REG REP OSFI UI, navigate to Metadata Management and select Reports to view the Reports Summary window.

Figure 31: Report Summary Screen

NOTE:

You can view the summary of all the configured reports in the Tile view  or List view

or List view .

.

The Search Bar helps you to find the required information from the database. You can enter the nearest matching keywords to search and filter the results by entering information on the search box. You can search for a Report using either the name or description.

Figure 32: Report Summary Search Bar

The Paging option at the bottom right corner allows you to see more reports than the ones currently displayed on the window.

Figure 33: Report Summary Paging Option

Each tile or list on the Report Summary window corresponds to one report. For each report, you can view the report code, report description, number of schedules within the report, the number of configured non-derived cells, and count of utilized derived entities.

For example, the E2 report in the tile or list view is displayed as follows:

Figure 34: Report in Tile View

Figure 35: Report in List View

Select the Report Code to navigate to the Schedule Summary window.

Figure 36: Report Information

The Schedule Summary window provides the component schedules for the corresponding report. Select the Report Code in the Report Summary window to navigate to the Schedule Summary window (as shown in Figure 37).

For example, the Schedule Summary window for the E2 report is displayed as follows.

Figure 37: Schedule Summary Screen

NOTE:

You can view the summary of all the configured reports in the Tile view  or List view

or List view .

.

The Search Bar helps you to find the required information from the database. You can enter the nearest matching keywords to search and filter the results by entering information on the search box. You can search for a Schedule using either the name or description.

The Paging option (Figure 38) at the bottom right corner allows you to see more reports than the ones currently displayed on the window.

NOTE:

Select the  icon on the top right corner to return to the Report Summary window.

icon on the top right corner to return to the Report Summary window.

Each tile or list on the Schedule Summary window corresponds to one schedule under the report. For each schedule, you can view the schedule code and the description, the number of configured non-derived cells for the schedule and the count of utilized derived entities.

For example, the Schedule Page3 tile is displayed as follows. Select the Schedule Code to navigate to the Cell Information window.

Figure 38: Schedule Information

The Cell Summary window provides the non-derived cells or MDRMs configured as a part of the solution for the corresponding schedule under a report. Select the Schedule Code in the Schedule Summary window to navigate to the Cell Summary window (as shown in Figure 39).

For example, the Cells for Schedule E2MSA summary window under the E2 report is displayed as follows.

Figure 39: Cell Summary Window

NOTE:

You can view the summary of all the configured reports in the Tile view  or List view

or List view .

.

The Search Bar helps you to find the required information from the database. You can enter the nearest matching keywords to search and filter the results by entering information on the search box. You can search for a Cell using either the name or description.

The Paging option (Figure 39) at the bottom right corner allows you to see more reports than the ones currently displayed on the window.

NOTE:

Select the  icon on the top right corner to return to the Report Summary window.

icon on the top right corner to return to the Report Summary window.

Topics:

· Measure

· Filters

Each tile or list on the Cell Summary window corresponds to one cell or MDRM under the schedule. For each cell, you can view the MDRM name, count of utilized derived entities, count of utilized OFSAA hierarchies, and measures for that cell.

For example, the cell E2_2349 tile is displayed as follows. Select the cell or MDRM Code to navigate to the Cell Information window.

Figure 40: Cell Information

The Cell Information window is displayed as follows.

Figure 41: Cell Information Window

Each section in the Cell Information window displays the relevant OFSAA Metadata and filters used for the cell.

This displays the name of the OFSAA Materialized View or View that contributes to the Cell.

This displays the name of the OFSAA Measure that is reported for the particular Cell.

The Filter conditions are as follows:

1. All filters that are applied to the cell are displayed under the filter section. It displays all the applied filters as their OFSAA description.

2. On selection, the filter is marked by a  sign on the top left corner of the selected filter.

sign on the top left corner of the selected filter.

3. The section that follows displays the entity or table on top of which the filter is based and the OFSAA Level Description for the selected filter.

4. All filter values that apply to the particular MDRM are available as a ribbon. Each filter value is in a separate box.

For example, in the previous case for MDRM E2_2349, the applied filters are Consolidation Code and Reporting Line Code. Currently, the Consolidation Code filter is selected and the required filter values for the same are 100.

In the case of multiple values, the filters are displayed as follows with an arrow mark.

Figure 42: Multiple Filter Values

The filters in case of not in condition are highlighted in red are displayed as follows.

Figure 43: Not in Condition Filters

The adjustments feature is a new enhancement to adjust the differing values of the report systems. The Adjustments Derived Entity derives its values from the Adjustments Fact table (FCT_REG_REPORT_ADJUSTMENTS) that specifies the adjustment value and the seeded table (DIM_REG_REPORT_CELL) that specifies the Cell ID or MDRM Code and the Report Code to which the MDRM belongs to. This ensures that there can be direct adjustments made to MDRM(s) such that the values from both the derived entities are traceable and efficiently reported.

To implement the Adjustment feature, identify the Cell ID for the report and the line item where adjustment must be implemented.

For example: E2 Report

NOTE:

The Adjustment feature works only for fixed table cells (Open Y cells are not supported).

The report currently displays a Total value = 3,33,161 for the identified cell as shown in the following figure.

Figure 44: Adjustment Feature

Now, the requirement is to adjust this amount to 15,00,000+3,33,161=18,33,161

NOTE:

The Adjustment feature works only for fixed table cells (Open Y cells are not supported).

Topics:

· Refreshing Adjustment Derived Entity

FCT_REG_REPORT_ADJUSTMENTS: This table must be populated with the requisite Adjustment Amount and other related columns.

For example:

N_ADJUSTED_AMT = 5000000000

The corresponding N_CELL_SKEY value must be picked from DIM_REG_REPORT_CELL for the respective CELL_ID. The DIM_REG_REPORT_CELL table is pre-seeded with cell IDs for reports supported for this feature.

The following columns must also be updated accordingly:

1. N_ENTITY_SKEY

2. N_RUN_SKEY

3. N_MIS_DATE_SKEY

NOTE:

V_PAGE_INSTANCE_VALUE shall be populated with appropriate instance value for reports with page instance filters. The page instance filter value shall match the values as populated in the DE hierarchy for page instance. The adjustment value shall be provided for all the page instance values that require adjustment including the “Total” instance. This attribute can be ignored for the reports without page instance.

Execute the resave batch for Adjustments (<<INFODOM>> LIQUIDITY_REPORTS_ACC_RESAVEDE ), to save the Adjustment derived entity - DEADJ001.

This ensures that the adjustment amount is reflected in the adjustment derived entity DEADJ001.

Post adjustments, the retrieved report should reflect the amount that is coming from the sourced systems and the adjusted amount.

The retrieved report should reflect the amount after adjustments as shown in the following figure.

(2,06,24,54,006 +5000000000) = 5002062.454006

Figure 45: Lombard Adjustment Verification

NOTE:

The Adjustment amount can be negative to achieve a subtracted amount.

Figure 46 explains the flow of data between OFSAA and AgileREPORTER.

Figure 46: Data Flow between OFSAA and AgileREPORTER

OFSAA provides the data to AgileREPORTER in the form of derived entities. The derived entity is an existing OFSAA higher-order metadata object and can be physicalized as a materialized view in the database. Derived entities store aggregated data from base fact entities specified in the dataset and have the necessary dimensions and measures.

Dimensional and measure combination stored within the derived entity is mapped to cells within the report. This mapping is maintained within the Dimensional Mapping template.

Decision Process within AgileREPORTER reads the derived entities and dimension mapping information to derive the data for reporting. Derived entities are created based on measures, hierarchies, and datasets.

Figure 47: Decision Process in AgileREPORTER

Some cells in the schedule can be derived as per the logic provided by the regulator. Derivation can be an expression built using values from other cells. Examples of derivation are ratio, node-level rollup, a direct reference to cells in other schedules within the report. These derivations are performed within the AgileREPORTER. OFSAA provides data only for the cells that are not derived.

NOTE:

Metadata for data transformation is available as part of the data warehouse configuration pack provided ready-to-use or preconfigured from OFSAA. You need not perform any mapping for the reports. However, this information can be useful for maintenance or extensions when a ready-to-use pack is not available.

The AgileREPORTER is a web-based regulatory reporting tool provided by Lombard Risk. It provides necessary features to address e-filing workflow, validation, and submission process and supports reports (called as forms or returns) for various jurisdictions. AgileREPORTER provides a reliable and efficient infrastructure to compile, generate, and submit regulatory reports.