4 Disaster Recovery Procedure

Call My Oracle Support (MOS) prior to running the procedure to ensure that the proper recovery planning is performed.

Before disaster recovery, users must appropriately evaluate the outage scenario. This check ensures the correct procedures are run for the recovery.

Note:

Disaster recovery requires collaboration among multiple groups and is expected to be coordinated by the Oracle Support prime. Based on the Oracle Support assessment of the disaster, it may be necessary to deviate from the documented process.

4.1 Recovering and Restoring System Configuration

Disaster recovery requires configuring the system as it was before the disaster and restoring operational information. There are 8 distinct procedures to choose from, depending on the type of recovery needed. Follow only one of them.

WARNING:

- Whenever the database backup must be restored for NOAM and SOAM servers in any of the recovery scenarios below, the backup directory may not exist on the system because the system has been disaster recovered.

- In this case, refer to Workarounds for Issues not fixed in 8.6.0.0.0 Release, which provides steps to check for and create the backup directory.

- The following is the standard file name format to be used for recovery:

Backup.DSR.HPC02-NO2.FullDBParts.NETWORK_OAMP.20140524_223507.UPG.tar.bz2
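The file name above reads as a dot-separated sequence of fields. The following Python sketch splits such a name so that the host and timestamp of a candidate backup can be checked before upload. The field labels (application, hostname, parts, node type, tag) are assumptions inferred from this single example, not an official specification of the format.

```python
from datetime import datetime
from typing import NamedTuple


class BackupName(NamedTuple):
    application: str    # e.g. "DSR" (label is an assumption)
    hostname: str       # e.g. "HPC02-NO2"
    parts: str          # e.g. "FullDBParts"
    node_type: str      # e.g. "NETWORK_OAMP"
    timestamp: datetime
    tag: str            # e.g. "UPG"


def parse_backup_name(filename: str) -> BackupName:
    """Split a backup file name of the form shown above into labeled fields."""
    suffix = ".tar.bz2"
    if not filename.startswith("Backup.") or not filename.endswith(suffix):
        raise ValueError(f"unexpected backup file name: {filename}")
    fields = filename[: -len(suffix)].split(".")
    if len(fields) != 7:
        raise ValueError(f"expected 7 dot-separated fields, got {len(fields)}")
    _, app, host, parts, node_type, stamp, tag = fields
    # The timestamp field is assumed to be YYYYMMDD_HHMMSS.
    when = datetime.strptime(stamp, "%Y%m%d_%H%M%S")
    return BackupName(app, host, parts, node_type, when, tag)


example = parse_backup_name(
    "Backup.DSR.HPC02-NO2.FullDBParts.NETWORK_OAMP.20140524_223507.UPG.tar.bz2"
)
print(example.hostname, example.timestamp.isoformat())
# prints: HPC02-NO2 2014-05-24T22:35:07
```

A name that does not fit this shape raises `ValueError`, which is a convenient point to stop and confirm the file with My Oracle Support before restoring it.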

4.1.1 Recovery Scenario 1 (Complete Server Outage)

For a complete server outage, NOAM servers are recovered using recovery procedures for software and then running a database restore to the active NOAM server. All other servers are recovered using recovery procedures for software.

Database replication from the active NOAM server will recover the database on these servers. The major activities are summarized in the list below. Use this list to understand the recovery procedure; do not use it to run the procedure.

- Recover the Standby NOAM server by recovering base software. For a Non-HA deployment, this can be skipped.

- Reconfigure the DSR Application.

- Recover all SOAM and MP servers by recovering software. In a Non-HA deployment, the standby or spare SOAM servers can be skipped.

- Recover the SOAM database.

- Reconfigure the DSR Application.

- Reconfigure the signaling interface and routes on the MPs. The DSR software will automatically reconfigure the signaling interface from the recovered database.

- Restart process and re-enable provisioning replication.

Note:

DR recovery actions for any other applications (SDS and IDIH) may occur in parallel. Work these actions simultaneously; doing so allows faster recovery of the complete solution (for example, a stale DB on DP servers will not receive updates until the SDS-SOAM servers are recovered).

This procedure performs recovery if both NOAM servers have failed and all SOAM servers have failed. This procedure also covers C-Level server failure.

Note:

If this procedure fails, contact My Oracle Support (MOS) and ask for assistance.

- Refer to Workarounds for Issues not fixed in 8.6.0.0.0 Release to understand or apply any workarounds required during this procedure.

- Gather the documents and required materials listed in the Required Materials section.

- Recover the failed software:

- For VMware based deployments:

- For NOAMs, run the following procedures:

- Import DSR OVA.

Note:

If the OVA is already imported and present in the Infrastructure Manager, skip this import procedure.

- Configure NOAM guests based on resource profile.

- For SOAMs, run the following procedures:

- Import DSR OVA.

Note:

If the OVA is already imported and present in the Infrastructure Manager, skip this import procedure.

- Configure remaining DSR guests based on resource profile.

- For failed MPs, run the following procedures:

- Import DSR OVA.

Note:

If the OVA is already imported and present in the Infrastructure Manager, skip this import procedure.

- Configure remaining DSR guests based on resource profile.

- For KVM or OpenStack based deployments:

- Import DSR OVA.

Note:

If the OVA is already imported and present in the Infrastructure Manager, skip this import procedure.

- Configure NOAM guests based on resource profile.

- For SOAMs, run the following procedures:

- Import DSR OVA.

Note:

If the OVA is already imported and present in the Infrastructure Manager, skip this import procedure.

- Configure remaining DSR guests based on resource profile.

- For OVM-S/OVM-M based deployments, run the following procedures:

- Import DSR OVA and prepare for VM creation.

- Configure each DSR VM.

Note:

While running procedure 8, configure only the required failed VMs (NOAMs/SOAMs/MPs). For more information, see Oracle Communications Diameter Signaling Router Cloud Installation Guide.

- Obtain the most recent database backup file from external backup sources (for example, file servers) or tape backup sources.

- From the Required Materials list, use the site survey documents and the Network Element report (if available) to determine the network configuration data.

- Run DSR installation procedure for the first NOAM:

- Verify the networking data for Network Elements.

Note:

Use the backup copy of network configuration data and site surveys.

- Run installation procedures for the first NOAM server:

- Configure the First NOAM NE and Server.

- Configure the NOAM Server Group.

- Log in to the NOAM GUI as the guiadmin user.

- Navigate to Main Menu, Status & Manage, and then Files.

- Select the Active NOAM server and click Upload.

- Select the "NO Provisioning and Configuration" file that was backed up after initial installation and provisioning.

- Click Browse and locate the backup file.

- Check the This is a backup file box and click Upload.

The file takes a few seconds to upload, depending on the size of the backup data. The file is visible in the list of entries after the upload is complete.

- To disable provisioning:

- Navigate to Main Menu, Status & Manage, and then Database.

- Click Disable Provisioning. A confirmation window appears; click OK to disable provisioning.

Note:

The message "warning Code 002" will appear.

- Verify the archive contents and database compatibility:

- Select the Active NOAM server and click Compare.

- Click the restored database file that was uploaded as a part of step 13 of this procedure.

- Verify that the output window matches the database.

Note:

- As expected, the user will see a database mismatch with respect to the VMs' NodeIDs. Proceed if this is the only difference; if not, contact My Oracle Support (MOS).

- The archive contents and database compatibility must be as follows:

- Archive Contents: Configuration data

- Database Compatibility: The databases are compatible.

- When restoring from an existing backup database to a database using a single NOAM, the expected outcome for the topology compatibility check is as follows:

- Topology Compatibility: The topology must be compatible minus the NODEID.

- This step restores a backed-up database onto an empty NOAM database, so this text is expected under Topology Compatibility.

If the verification is successful, click Back and continue to the next step of this procedure.

- Restore the database:

- Navigate to Main Menu, Status & Manage, and then Database.

- Select the Active NOAM server and click Restore. Select the appropriate backup provisioning and configuration file and click OK.

Note:

As expected, the user will see a database mismatch with respect to the VMs' NodeIDs. Proceed if this is the only difference; if not, contact My Oracle Support (MOS).

- Select the Force checkbox and click OK to proceed with the database restore.

Note:

After the restore has started, the user will be logged out of the XMI NO GUI because the restored topology is old data.

- Establish a GUI session on the NOAM server by using the VIP IP address of the NOAM server. Open the web browser and enter the following URL:

http://<Primary_NOAM_VIP_IP_Address>

- Monitor and confirm database restoral. Wait for 5 to 10 minutes for the system to stabilize with the new topology.

Monitor the Info tab for Success. This indicates that the restore of the backup is complete and the system is stabilized.

Ignore the following alarms for NOAM and MP servers until all the servers are configured:

- Alarms with the Type column as "REPL", "COLL", "HA" (with mate NOAM), or "DB" (about Provisioning Manually Disabled).

Note:

- Do not pay attention to alarms until all the servers in the system are completely restored.

- The maintenance and configuration data will be in the same state as when it was first backed up.

- Log in to the recovered Active NOAM through an SSH terminal as the admusr user.

- Recover Standby NOAM:

- Install the second NOAM server by running the following procedures:

- "Configure the Second NOAM server" steps 1, 3, and 7.

- "Complete Configuring the NOAM Server Group" step 4.

- Correct the recognized authority table:

- Establish an SSH session to the active NOAM and log in as admusr.

- Run the following command:

$ sudo top.setPrimary
- Using my cluster: A1789
- New Primary Timestamp: 11/09/15 20:21:43.418
- Updating A1789.022: <DSR_NOAM_B_hostname>
- Updating A1789.144: <DSR_NOAM_A_hostname>

- Restart DSR application:

- Navigate to Main Menu, Status & Manage, and then Server.

- Select the recovered standby NOAM server and click Restart.

- Set HA on Standby NOAM:

- Navigate to Main Menu, Status & Manage, and then HA.

- Click Edit, select the standby NOAM server, set it to Active, and click OK.

- Perform key exchange with Export Server:

- Navigate to Main Menu, Administration, Remote Servers and then Data Export.

- Click SSH Key Exchange, enter the password and click OK.

- Replication will wipe out the databases on the C-Level servers when the Active SOAM is recovered; therefore, prevent replication to the operational C-Level servers that are part of the same site as the failed SOAM servers.

WARNING:

- If the spare SOAM is also present in the site and lost: Run Inhibit A and B level replication on C-Level servers (when Active, Standby, and Spare SOAMs are lost).

- If the spare SOAM is not deployed in the site: Run Inhibit A and B level replication on C-Level servers.

- Run steps 1 and 3 to 7 of "Configure the SOAM Servers" to install the SOAM servers.

Note:

Before continuing to the next step, wait for the server to restart.

- Restart DSR application on recovered Active SOAM Server:

- Navigate to Main Menu, Status & Manage, and then Server.

- Select the recovered server and click Restart.

- Upload the backed up SOAM database file:

- Navigate to Main Menu, Status & Manage, and then Files.

- Select the Active SOAM server. Click Upload and select the file "SOAM Provisioning and Configuration" backed up after initial installation and provisioning.

- Click Browse and locate the backup file.

- Check This is a backup file box and click Open.

- Click Upload.

The file will take a few seconds to upload depending on the size of the backup data. The file will be visible on the list of entries after the upload is complete.

- Establish a GUI session on the recovered SOAM server. Open the web browser and enter the following URL:

http://<Recovered_SOAM_IP_Address>

- In the recovered SOAM GUI, verify the archive contents and database compatibility:

- Navigate to Main Menu, Status & Manage, and then Database.

- Select the Active SOAM server and click Compare.

- Click the restored database file that was uploaded as a part of step 13 of this procedure. Verify that the output window matches the database archive.

Note:

- As expected, the user will see a database mismatch with respect to the VMs' NodeIDs. Proceed if this is the only difference; if not, contact My Oracle Support (MOS).

- The archive contents and database compatibility must be as follows:

- Archive Contents: Configuration data

- Database Compatibility: The databases are compatible.

- When restoring from an existing backup database to a database using a single NOAM, the expected outcome for the topology compatibility check is as follows:

- Topology Compatibility: The topology must be compatible minus the NODEID.

- This step restores a backed-up database onto an empty NOAM database, so this text is expected under Topology Compatibility.

If the verification is successful, click Back and continue to the next step of this procedure.

- Restore the Database:

- Navigate to Main Menu, Status & Manage, and then Database.

- Select the Active NOAM server and click Restore. Select the appropriate backup provisioning and configuration file and click OK.

Note:

As expected, the user will see a database mismatch with respect to the VMs' NodeIDs. Proceed if this is the only difference; if not, contact My Oracle Support (MOS).

- Select the Force checkbox and click OK to proceed with the database restore.

Note:

After the restore has started, the user will be logged out of the XMI NO GUI because the restored topology is old data.

- Monitor and confirm database restoral. Wait for 5 to 10 minutes for the system to stabilize with the new topology.

Monitor the Info tab for "Success". This indicates that the restore of the backup is complete and the system is stabilized.

Note:

- Do not pay attention to alarms until all the servers in the system are completely restored.

- The maintenance and configuration data will be in the same state as when it was first backed up.

- Establish a GUI session on the NOAM server by using the VIP IP address of the NOAM server. Open the web browser and enter the following URL:

http://<Primary_NOAM_VIP_IP_Address>

- Recover remaining SOAM Server:

- Install the SOAM servers by running the following procedure:

- Run steps 1 and 3 to 6 of "Configure the SOAM Servers" to install the SOAM servers.

Note:

Before continuing to the next step, wait for the server to reboot.

- Restart DSR application on remaining SOAM Server(s):

- Navigate to Main Menu, Status & Manage, and then Server.

- Select the recovered server and click Restart.

- Set HA on recovered standby SOAM server:

Note:

For Non-HA sites, skip this step.

- Navigate to Main Menu, Status & Manage, and then HA.

- Click Edit, set "Max Allowed HA Role" to Active, and click OK.

- Start replication on working C-Level servers:

Un-inhibit (start) replication to the working C-Level servers that belong to the same site as the failed SOAM servers.

If the spare SOAM is also present in the site and lost: Run un-inhibit A and B level replication on C-Level servers (when Active, Standby, and Spare SOAMs are lost).

If the spare SOAM is not deployed in the site: Run un-inhibit A and B level replication on C-Level servers.

Navigate to Main Menu, Status & Manage, and then Database.

If the Repl Status is set to Inhibited, click Allow Replication in the following order. If none of the servers are inhibited, skip this step and continue with the next step.

- Active NOAM Server

- Standby NOAM Server

- Active SOAM Server

- Standby SOAM Server

- Spare SOAM Server (if applicable)

- MP/IPFE Servers

- SBRs (if SBR servers are configured, start with the active SBR, then standby, then spare)

Verify that the replication on all the working servers is allowed.
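The click order above can be prepared as a checklist before working through the Database screen. The following Python sketch sorts an inventory of servers into that order and keeps only the inhibited ones. The tuple shape, the host names, and the "Allowed" status value are hypothetical stand-ins for what the GUI shows; only the role order comes from the list above.

```python
# Documented click order for Allow Replication (taken from the list above).
ALLOW_ORDER = [
    "Active NOAM", "Standby NOAM",
    "Active SOAM", "Standby SOAM", "Spare SOAM",
    "MP/IPFE",
    "Active SBR", "Standby SBR", "Spare SBR",
]
RANK = {role: position for position, role in enumerate(ALLOW_ORDER)}


def allow_replication_order(servers):
    """Return hostnames of inhibited servers in the documented order.

    `servers` is a list of (hostname, role, repl_status) tuples; this shape
    is a hypothetical stand-in for the rows of the Database screen.
    """
    inhibited = [s for s in servers if s[2] == "Inhibited"]
    ordered = sorted(inhibited, key=lambda s: RANK[s[1]])
    return [host for host, _role, _status in ordered]


# Hypothetical inventory for illustration only.
inventory = [
    ("mp-1", "MP/IPFE", "Inhibited"),
    ("soam-a", "Active SOAM", "Inhibited"),
    ("noam-b", "Standby NOAM", "Allowed"),
    ("noam-a", "Active NOAM", "Inhibited"),
]
print(allow_replication_order(inventory))
# prints: ['noam-a', 'soam-a', 'mp-1']
```

An empty result corresponds to the case where no server is inhibited and the step can be skipped.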

- Establish an SSH session to the C-Level server being recovered and log in as admusr.

- Run the following command to set shared memory to unlimited:

$ sudo shl.set -m 0

- Run the following step from each server that has been recovered:

- Run steps 1 and 11 to 14 (and step 15 if required) of "Configure the MP Virtual Machines".

- Restart DSR application for recovered C-Level server:

- Navigate to Main Menu, Status & Manage, and then Server.

- Select the recovered server and click Restart.

- Start replication on all C-Level servers:

- Navigate to Main Menu, Status & Manage, and then Database.

- If the Repl Status is set to Inhibited, click Allow Replication in the following order:

- Active NOAM Server

- Standby NOAM Server

- Active SOAM Server

- Standby SOAM Server

- Spare SOAM Server (if applicable)

- MP/IPFE Servers

- Verify that the replication on all the working servers is allowed. This can be done by examining the Repl Status.

- Navigate to Main Menu, Status & Manage, and then HA.

- Click Edit. For each server whose "Max Allowed HA Role" is set to OOS, set it to Active and click OK.

- Perform key exchange between the Active NOAM and recovered servers:

- Establish an SSH session to the Active NOAM and log in as admusr.

- Run the following command to perform a key exchange from the Active NOAM to each recovered server:

$ keyexchange admusr@<Recovered Server Hostname>

Note:

Perform this step if an export server is configured.
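Because the key exchange command is run once per recovered server, it can help to generate the full list of command lines up front. The following Python sketch only builds the strings in the form shown above and does not execute anything; the host names are hypothetical.

```python
import shlex


def keyexchange_commands(hostnames, user="admusr"):
    """Build one 'keyexchange user@host' command line per recovered server."""
    commands = []
    for host in hostnames:
        target = f"{user}@{host}"
        # shlex.quote leaves ordinary host names untouched and protects odd ones.
        commands.append(f"keyexchange {shlex.quote(target)}")
    return commands


# Hypothetical recovered servers, for illustration only.
for line in keyexchange_commands(["Oahu-SOAM-1", "Oahu-DAMP-1"]):
    print(line)
# prints:
# keyexchange admusr@Oahu-SOAM-1
# keyexchange admusr@Oahu-DAMP-1
```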

- Activate optional features:

- Establish an SSH session to the Active NOAM and log in as admusr.

Note:

- If PCA is installed in the system that is being recovered, perform the following procedures on all the active applications to re-activate PCA:

- PCA Activation on Standby NOAM, on the recovered Standby NOAM server

- PCA Activation on Active SOAM, on the recovered Active SOAM server

For more information about these procedures, see Diameter Signaling Router Policy and Charging Application Feature Activation Guide.

- Refer to Optional Features to activate any features that were previously activated.

Note:

- While running the activation script, the following error message (and corresponding messages) may appear in the output. The error can be ignored:

iload#31000{S/W Fault}

- If any of the MPs failed and were recovered, restart these MP servers after activation of the feature.

- Fetch and save the database report for the newly restored data:

- Navigate to Main Menu, Status & Manage, and then Database.

- Select the Active NOAM server and click Report.

- Click Save and save the report to the local machine.

- Verify replication between servers:

- Log in to the Active NOAM through an SSH terminal as the admusr user.

- Run the following command:

$ sudo irepstat -m

Output similar to the following is generated:

-- Policy 0 ActStb [DbReplication] ---------------------
Oahu-DAMP-1 -- Active
  BC From Oahu-SOAM-2 Active 0 0.50 ^0.15%cpu 25B/s A=me
  CC To Oahu-DAMP-2 Active 0 0.10 0.14%cpu 25B/s A=me
Oahu-DAMP-2 -- Stby
  BC From Oahu-SOAM-2 Active 0 0.50 ^0.11%cpu 31B/s A=C3642.212
  CC From Oahu-DAMP-1 Active 0 0.10 ^0.14 1.16%cpu 31B/s A=C3642.212
Oahu-IPFE-1 -- Active
  BC From Oahu-SOAM-2 Active 0 0.50 ^0.03%cpu 24B/s A=C3642.212
Oahu-IPFE-2 -- Active
  BC From Oahu-SOAM-2 Active 0 0.50 ^0.03%cpu 28B/s A=C3642.212
Oahu-NOAM-1 -- Stby
  AA From Oahu-NOAM-2 Active 0 0.25 ^0.03%cpu 23B/s
Oahu-NOAM-2 -- Active
  AA To Oahu-NOAM-1 Active 0 0.25 1%R 0.04%cpu 61B/s
  AB To Oahu-SOAM-2 Active 0 0.50 1%R 0.05%cpu 75B/s
Oahu-SOAM-1 -- Stby
  BB From Oahu-SOAM-2 Active 0 0.50 ^0.03%cpu 27B/s
Oahu-SOAM-2 -- Active
  AB From Oahu-NOAM-2 Active 0 0.50 ^0.03%cpu 24B/s
  BB To Oahu-SOAM-1 Active 0 0.50 1%R 0.04%cpu 32B/s
  BC To Oahu-IPFE-1 Active 0 0.50 1%R 0.04%cpu 21B/s
irepstat ( 40 lines) (h)elp (m)erged
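With many servers, scanning this output by eye is error-prone. The following Python sketch reads saved irepstat text, one entry per line, and lists every replication link whose state column is not Active. The line layout (a "host -- role" header followed by "link direction peer state ..." rows) is inferred from the sample output in this section and is an assumption, as is treating any non-Active state as worth investigating. The state name `SomeOtherState` in the sample is a made-up placeholder, not a real irepstat value.

```python
import re

# Server header, e.g. "Oahu-DAMP-1 -- Active"
HOST_RE = re.compile(r"^(\S+)\s+--\s+(\S+)\s*$")
# Link row, e.g. "BC From Oahu-SOAM-2 Active 0 0.50 ^0.15%cpu 25B/s A=me"
LINK_RE = re.compile(r"^\s*([A-C]{2})\s+(From|To)\s+(\S+)\s+(\S+)")


def inactive_links(irepstat_text):
    """Return (host, link, direction, peer, state) for links not in state Active."""
    problems = []
    host = None
    for line in irepstat_text.splitlines():
        header = HOST_RE.match(line)
        if header:
            host = header.group(1)
            continue
        link = LINK_RE.match(line)
        if link and link.group(4) != "Active":
            problems.append((host, *link.groups()))
    return problems


# Sample adapted from the output above; "SomeOtherState" is a placeholder.
SAMPLE = """\
-- Policy 0 ActStb [DbReplication] ---------------------
Oahu-NOAM-1 -- Stby
  AA From Oahu-NOAM-2 SomeOtherState 0 0.25 ^0.03%cpu 23B/s
Oahu-NOAM-2 -- Active
  AA To Oahu-NOAM-1 Active 0 0.25 1%R 0.04%cpu 61B/s
  AB To Oahu-SOAM-2 Active 0 0.50 1%R 0.05%cpu 75B/s
irepstat ( 40 lines) (h)elp (m)erged
"""

for problem in inactive_links(SAMPLE):
    print(problem)
# prints: ('Oahu-NOAM-1', 'AA', 'From', 'Oahu-NOAM-2', 'SomeOtherState')
```

An empty list means every parsed link reported Active; it does not replace reviewing the full output.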

- Verify the database states:

- Navigate to Main Menu, Status & Manage, and then Database.

- Verify that the "OAM Max HA Role" is either Active or Standby for NOAM and SOAM and "Application Max HA Role" for MPs is Active, and that the status is Normal.

- Verify the HA Status:

- Navigate to Main Menu, Status & Manage, and then HA.

- Select the row for each server and verify that the HA role is either Active or Standby.
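The HA check above reduces to a simple rule: every server must report Active or Standby. The following Python sketch applies that rule to a mapping of host name to HA role. The mapping and the host names are hypothetical; in practice the values would be read from the HA screen.

```python
ALLOWED_ROLES = {"Active", "Standby"}


def servers_with_unexpected_ha_role(ha_status):
    """Return the sorted host names whose HA role is neither Active nor Standby.

    `ha_status` maps host name -> HA role (hypothetical shape).
    """
    return sorted(host for host, role in ha_status.items()
                  if role not in ALLOWED_ROLES)


# Hypothetical snapshot for illustration only.
snapshot = {
    "Oahu-NOAM-1": "Standby",
    "Oahu-NOAM-2": "Active",
    "Oahu-SOAM-1": "OOS",
}
print(servers_with_unexpected_ha_role(snapshot))
# prints: ['Oahu-SOAM-1']
```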

- Enable Provisioning:

- Navigate to Main Menu, Status & Manage, and then Database.

- Click Enable Provisioning. A confirmation window will appear, click OK to enable Provisioning.

- Verify the local node info:

- Navigate to Main Menu, Diameter, Configuration, and then Local Nodes.

- Verify that all the local nodes are shown.

- Verify the peer node info:

- Navigate to Main Menu, Diameter, Configuration, and then Peer Nodes.

- Verify that all the peer nodes are shown.

- Verify the connections info:

- Navigate to Main Menu, Diameter, Configuration, and then Connections.

- Verify that all the connections are shown.

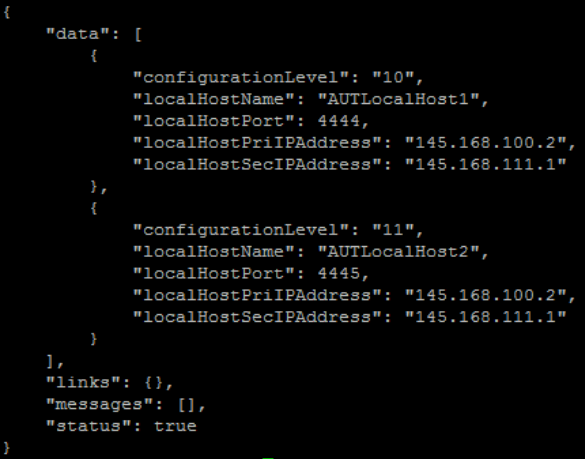

- To verify the vSTP MP local nodes info:

- Log in to the SOAM VIP server console as admusr.

- Run the following command

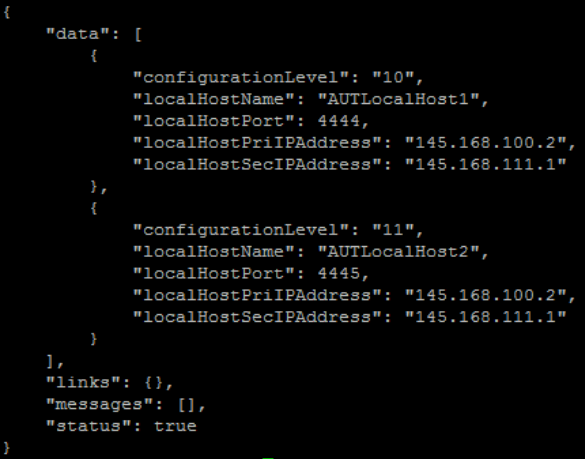

[admusr@SOAM1 ~]$ mmiclient.py /vstp/localhosts - Verify if the output is similar to the following

output.

Figure 4-1 Output

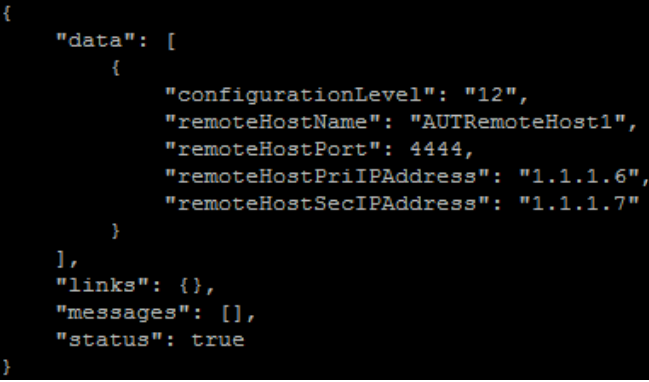

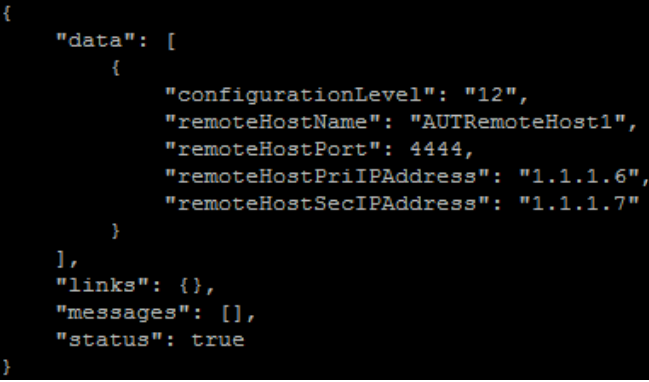

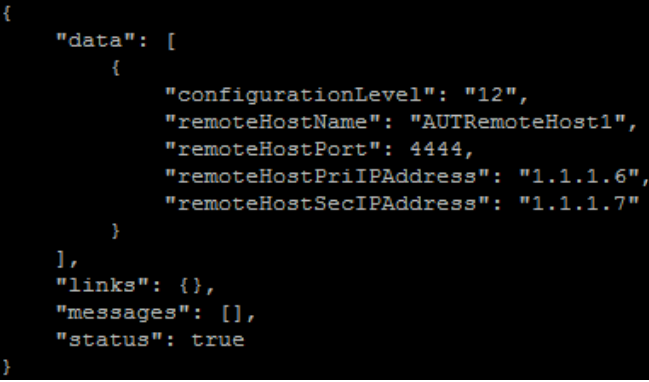

- To verify the vSTP MP remote nodes info:

- Log in to the SOAM VIP server console as admusr.

- Run the following command:

[admusr@SOAM1 ~]$ mmiclient.py /vstp/remotehosts

- Verify that the output is similar to the following output:

Figure 4-2 Output

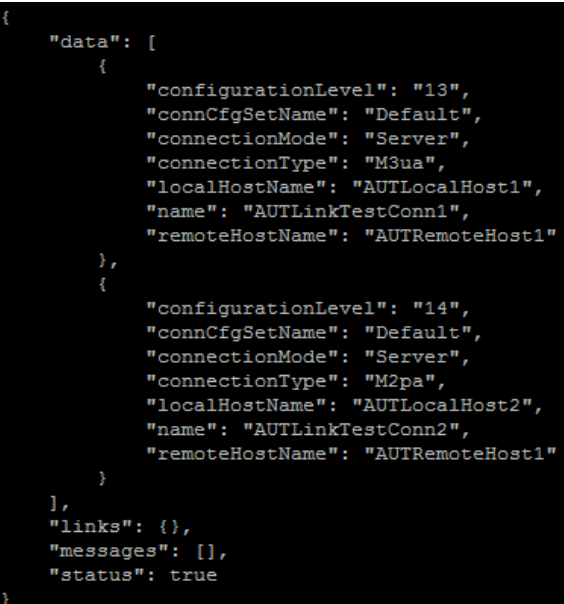

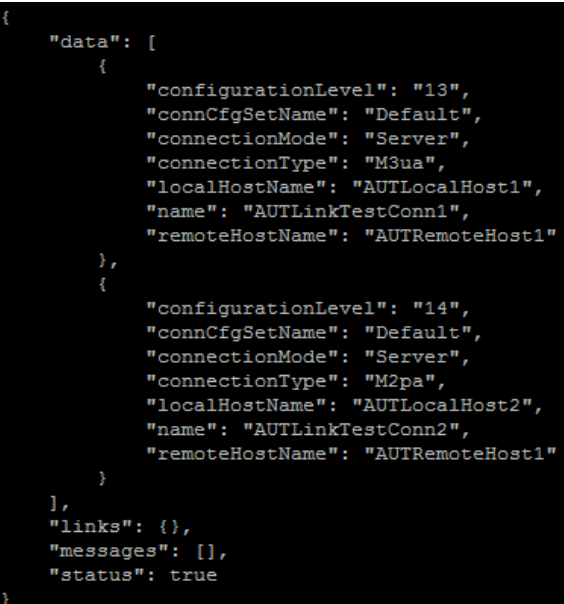

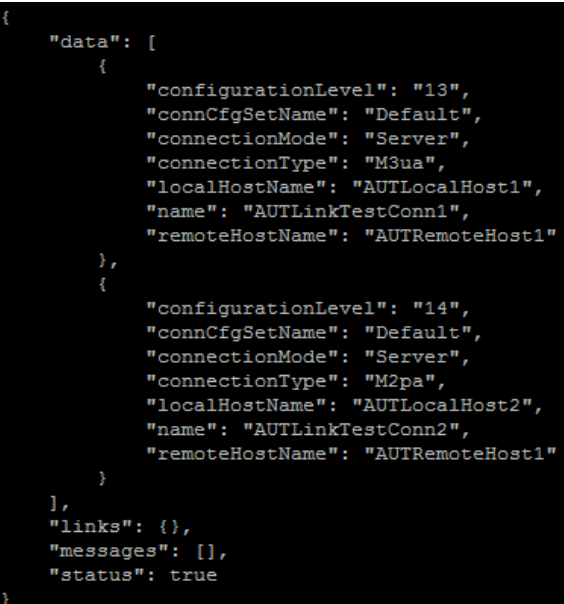

- To verify the vSTP MP connections info:

- Log in to the SOAM VIP server console as admusr.

- Run the following command:

[admusr@SOAM1 ~]$ mmiclient.py /vstp/connections

- Verify that the output is similar to the following output:

Figure 4-3 Output

- Disable SCTP auth flag:

- For SCTP connections without DTLS enabled, refer to Enable/Disable DTLS Appendix.

- Run this procedure on all Failed MP Servers.

- Enable connections if needed:

- Navigate to Main Menu, Diameter, Maintenance, and then Connections.

- Select each connection and click Enable. Alternatively, enable all the connections by clicking EnableAll.

- Verify that the "Operational State" is Available.

Note:

If a disaster recovery was performed on an IPFE server, it may be necessary to disable and re-enable the connections to ensure proper link distribution.

- Enable Optional Features:

- Navigate to Main Menu, Diameter, Maintenance, and then Applications.

- Select the optional feature application configured before and click Enable.

- If required, re-enable transport:

- Navigate to Main Menu, Transport Manager, Maintenance, and then Transport.

- Select each transport and click Enable.

- Verify that the operational status for each transport is up.

- Re-enable MAPIWF application if needed:

- Navigate to Main Menu, Sigtran, Maintenance, and then Local SCCP Users.

- Click Enable corresponding to MAPIWF application Name.

- Verify that the SSN status is Enabled.

- Re-enable links if needed.

- Navigate to Main Menu, Sigtran, Maintenance, and then Links.

- Click Enable for each link.

- Verify that the operational status for each link is up.

- Examine All Alarms:

- Navigate to Main Menu, Alarms & Events, and then View Active.

- Examine all active alarms and refer to the on-line help on how to address them. For any queries contact My Oracle Support (MOS).

- Restore GUI usernames and passwords:

- If applicable, run steps in section 6.0 to recover the user and group information restored.

- Backup and archive all the databases from the recovered system:

- Run DSR database backup to back up the configuration databases.

4.1.2 Recovery Scenario 2 (Partial Server Outage with One NOAM Server Intact and Both SOAMs Failed)

For a partial server outage with a NOAM server intact and available, SOAM servers are recovered using recovery procedures for software and then running a database restore to the Active SOAM server using a database backup file obtained from the SOAM servers. All other servers are recovered using recovery procedures for software. Database replication from the active NOAM server will recover the database on these servers. The major activities are summarized in the list below. Use this list to understand the recovery procedure; do not use it to run the procedure.

- Recover the Standby NOAM server (if needed) by recovering software and the database.

- Recover the Database.

- Recover the software.

- The database has already been restored at the Active SOAM server and does not require restoration at the SO and MP servers.

Note:

If this procedure fails, contact My Oracle Support (MOS).

- Refer to Workarounds for Issues not fixed in 8.6.0.0.0 Release to understand or apply any workarounds required during this procedure.

- Gather the documents and required materials listed in the Required Materials section.

- Establish a GUI session on the NOAM server by using the VIP IP address of the NOAM server. Open the web browser and enter the following URL:

http://<Primary_NOAM_VIP_IP_Address>

- Set failed servers to OOS:

- Navigate to Main Menu, Status & Manage, and then HA.

- Click Edit, set "Max Allowed HA Role" to OOS for the failed servers, and click OK.

- Create VMs and recover the failed software:

- For VMware based deployments:

- For NOAMs, run the following procedures:

- Import DSR OVA.

Note:

If the OVA is already imported and present in the Infrastructure Manager, skip this import procedure.

- Configure NOAM guests based on resource profile.

- For SOAMs, run the following procedures:

- Import DSR OVA.

Note:

If the OVA is already imported and present in the Infrastructure Manager, skip this import procedure.

- Configure remaining DSR guests based on resource profile.

- For failed MPs, run the following procedures:

- Import DSR OVA.

Note:

If the OVA is already imported and present in the Infrastructure Manager, skip this import procedure.

- Configure remaining DSR guests based on resource profile.

- For KVM or OpenStack based deployments:

- For NOAMs, run the following procedures:

- Import DSR OVA.

Note:

If the OVA is already imported and present in the Infrastructure Manager, skip this import procedure.

- Configure NOAM guests based on resource profile.

- For SOAMs, run the following procedures:

- Import DSR OVA.

Note:

If the OVA is already imported and present in the Infrastructure Manager, skip this import procedure.

- Configure remaining DSR guests based on resource profile.

- For OVM-S/OVM-M based deployments, run the following procedures:

- Import DSR OVA and prepare for VM creation.

- Configure each DSR VM.

Note:

While running procedure 8, configure only the required failed VMs (NOAMs/SOAMs/MPs). For more information, see Diameter Signaling Router Cloud Installation Guide.

- If required, repeat step 5 for all remaining failed servers.

- Establish a GUI session on the NOAM server by using the VIP IP address of the NOAM server. Open the web browser and enter the following URL:

http://<Primary_NOAM_VIP_IP_Address>

- Install the second NOAM server by running the following procedures:

- Run steps 1 and 3 to 7 of "Configure the Second NOAM server".

- Run step 4 of "Complete Configuring the NOAM server".

Note:

If topology or NODEID alarms persist after the database restore, refer to Workarounds for Issues not fixed in 8.6.0.0.0 Release or the next step.

- Restart DSR application:

- Navigate to Main Menu, Status & Manage, and then Server.

- Select the recovered standby NOAM server and click Restart.

- Navigate to Main Menu, Status & Manage, and then HA.

- Click Edit, select the standby NOAM server, set it to Active, and click OK.

- Replication will wipe out the databases on the C-Level servers when the Active SOAM is recovered; therefore, prevent replication to the operational C-Level servers that are part of the same site as the failed SOAM servers.

WARNING:

- If the spare SOAM is also present in the site and lost: Run Inhibit A and B level replication on C-Level servers (when Active, Standby, and Spare SOAMs are lost).

- If the spare SOAM is not deployed in the site: Run Inhibit A and B level replication on C-Level servers.

- Install the SOAM servers by running the following procedure:

- Run steps 1 and 3 to 7 to configure the SOAM servers.

Note:

Before continuing to the next step, wait for the server to reboot.

- Navigate to Main Menu, Status & Manage, and then HA.

- Click Edit, select the standby NOAM server, set it to Active, and click OK.

- Navigate to Main Menu, Status & Manage, and then Server.

- Select the recovered Active SOAM server and click Restart.

- Upload the backed up SOAM Database file:

- Navigate to Main Menu, Status & Manage, and then Files.

- Select the Active SOAM server and click Upload.

- Select the "NO Provisioning and Configuration" file that was backed up after initial installation and provisioning.

- Click Browse and locate the backup file.

- Check the This is a backup file box.

- Click Open and then click Upload.

The file takes a few seconds to upload, depending on the size of the backup data. The file is visible in the list of entries after the upload is complete.

- Establish a GUI session on the recovered SOAM server. Open the web browser and enter the following URL:

http://<Recovered_SOAM_IP_Address>

- In the recovered SOAM GUI, verify the archive contents and database compatibility:

- Navigate to Main Menu, Status & Manage, and then Database.

- Select the Active SOAM server and click Compare.

- Click the restored database file that was uploaded as a part of step 14 of this procedure. Verify that the output window matches the database archive.

Note:

- As expected, the user will see a database mismatch with respect to the VMs' NodeIDs. Proceed if this is the only difference; if not, contact My Oracle Support (MOS).

- The archive contents and database compatibility must be as follows:

- Archive Contents: Configuration data

- Database Compatibility: The databases are compatible.

- When restoring from an existing backup database to a database using a single NOAM, the expected outcome for the topology compatibility check is as follows:

- Topology Compatibility: The topology must be compatible minus the NODEID.

- This step restores a backed-up database onto an empty NOAM database, so this text is expected under Topology Compatibility.

If the verification is successful, click Back and continue to the next step of this procedure.

- Restore the Database:

- Navigate to Main Menu, Status & Manage, and then Database.

- Select the Active NOAM server and click Restore. Select the appropriate backup provisioning and configuration file and click OK.

Note:

As expected, the user will see a database mismatch with respect to the VMs' NodeIDs. Proceed if this is the only difference; if not, contact My Oracle Support (MOS).

- Select the Force checkbox and click OK to proceed with the database restore.

Note:

After the restore has started, the user will be logged out of the XMI NO GUI because the restored topology is old data. Provisioning will be disabled after this step.

- Monitor and confirm database restoral. Wait for 5 to 10 minutes for the system to stabilize with the new topology.

Monitor the Info tab for "Success". This indicates that the restore of the backup is complete and the system is stabilized.

Note:

- Do not pay attention to alarms until all the servers in the system are completely restored.

- The maintenance and configuration data will be in the same state as when it was first backed up.

- Install the SOAM servers by running the following procedure:

- Run steps 1 and 3 to 6 of "Configure the SOAM Servers" to install the SOAM servers.

Note:

Before continuing to next step, wait for the server to reboot.

- Un-Inhibit (Start) replication to the recovered SOAM servers:

- Navigate to Main Menu, and then Status & Manage, and then Database.

- Click Allow Replication on the recovered SOAM servers.

- Verify that replication is allowed on all SOAM servers. This can be done by checking the "Repl" status column of the respective server.

- Navigate to Main Menu, Status & Manage, and then Server.

- When prompted "Are you sure you wish to Force an NTP Sync on the following server(s)? SOAM2", click OK.

- Navigate to Main Menu, Status & Manage, and then HA.

- Click Edit. For each SOAM server whose "Max Allowed HA" role is set to Standby, set it to Active.

- Navigate to Main Menu, Status & Manage, and then Server. Select the recovered server and click Restart.

- Enable Provisioning:

- Navigate to Main Menu, Status & Manage, and then Database.

- Click Enable Site Provisioning. A confirmation window appears; click OK to enable provisioning.

- Un-Inhibit (Start) replication to the working C-Level servers that belong to the same site as the failed SOAM servers.

If the spare SOAM is also present in the site and lost: run un-inhibit A and B level replication on C-Level servers (when Active, Standby and Spare SOAMs are lost).

If the spare SOAM is not deployed in the site: run un-inhibit A and B level replication on C-Level servers.

Navigate to Main Menu, Status & Manage, and then Database.

If the "Repl Status" of any server is set to Inhibited, click Allow Replication for the servers in the following order. If none of the servers are inhibited, skip this step and continue with the next step.

- Active NOAM Server

- Standby NOAM Server

- Active SOAM Server

- Standby SOAM Server

- Spare SOAM Server (if applicable)

- MP/IPFE Servers

- SBRs (if SBR servers are configured, start with the active SBR, then standby, then spare).

Verify that the replication on all the working servers is allowed. This can be done by examining the Repl Status table.
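The ordering rule above can be expressed as a simple ranking. The sketch below is illustrative only: the tuple layout and the role labels are assumptions chosen to mirror the list in this step, and the server data would have to be transcribed from the Database screen by the operator.

```python
# Order in which Allow Replication is clicked, as listed in this step.
ALLOW_REPLICATION_ORDER = [
    "Active NOAM",
    "Standby NOAM",
    "Active SOAM",
    "Standby SOAM",
    "Spare SOAM",
    "MP/IPFE",
    "Active SBR",
    "Standby SBR",
    "Spare SBR",
]


def allow_replication_sequence(servers):
    """Return hostnames of inhibited servers in the documented order.

    servers is a list of (hostname, role, repl_status) tuples; this layout
    is a hypothetical one used only for this sketch.
    """
    rank = {role: index for index, role in enumerate(ALLOW_REPLICATION_ORDER)}
    inhibited = [s for s in servers if s[2] == "Inhibited"]
    unknown = [s[0] for s in inhibited if s[1] not in rank]
    if unknown:
        raise ValueError(f"servers with unrecognized role: {unknown}")
    # sorted() is stable, so servers sharing a role keep their input order.
    ordered = sorted(inhibited, key=lambda s: rank[s[1]])
    return [hostname for hostname, _role, _status in ordered]
```

An empty result corresponds to the case where no server is inhibited and the step can be skipped.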

- Establish an SSH session to the C-Level server being recovered and log in as admusr.

- Run the following command to set shared memory to unlimited:

$ sudo shl.set -m 0

- Run the following procedures for each server that has been recovered:

- Run steps 1 and 8 to 14 (and step 15, if required) of "Configure the MP Virtual Machines".

- Start replication on all C-Level servers:

- Navigate to Main Menu, and then Status & Manage, and then Database.

- If the "Repl" status is set to Inhibited, click Allow Replication in the following order:

- Active NOAM Server

- Standby NOAM Server

- Active SOAM Server

- Standby SOAM Server

- Spare SOAM Server (if applicable)

- MP/IPFE Servers

- Verify that the replication on all the working servers is allowed. This can be done by examining the Repl Status table.

- Perform key exchange between the Active NOAM and recovered servers:

- Establish an SSH session to the Active NOAM and log in as admusr.

- Run the following command to perform a key exchange from the Active NOAM to each recovered server:

$ keyexchange admusr@<Recovered Server Hostname>

Note:

Perform this step if an export server is configured.
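When several servers have been recovered, it can help to prepare the command lines in advance. The following sketch only builds the `keyexchange admusr@<hostname>` strings shown above; it does not run them, and the hostnames in the usage note are placeholders rather than values from a real deployment.

```python
import shlex


def keyexchange_commands(hostnames, user="admusr"):
    """Build one keyexchange command line per recovered server hostname.

    Blank entries and repeated hostnames are skipped. The commands are
    returned as strings for review; nothing is executed here.
    """
    commands = []
    seen = set()
    for name in hostnames:
        host = name.strip()
        if not host or host in seen:
            continue
        seen.add(host)
        # shlex.quote leaves ordinary hostnames unchanged and quotes anything unusual.
        commands.append("keyexchange " + shlex.quote(f"{user}@{host}"))
    return commands
```

For example, `keyexchange_commands(["SOAM-A", "MP-1"])` yields two command lines that can be pasted into the SSH session on the Active NOAM one at a time.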

- Activate optional features:

- Establish an SSH session to the active NOAM and log in as admusr.

Note:

- If PCA is installed in the system that is being recovered, perform the following procedures on all the active applications:

- PCA Activation on Standby NOAM on recovered Standby NOAM server

- PCA Activation on Active SOAM on recovered Active SOAM server to re-activate PCA

For more information about the above mentioned procedures, see Diameter Signaling Router Policy and Charging Application Feature Activation Guide.

Refer to Optional Features to activate any features that were previously activated.

Note:

- While running the activation script, the following error message (and corresponding messages) may be seen in the output; the error can be ignored:

iload#31000{S/W Fault}.

- If any of the MPs are failed and recovered, then these MP servers should be restarted after activation of the feature.

- Fetch and store the database report for the newly restored data:

- Navigate to Main Menu, Status & Manage, and then Database.

- Select the Active NOAM server and click Report.

- Click Save and save the report to the local machine.

- Verify replication between servers:

- Log in to the Active NOAM through SSH terminal as admusr user.

- Run the following command:

$ sudo irepstat -m

Output similar to the following is generated:

-- Policy 0 ActStb [DbReplication] ---------------------
Oahu-DAMP-1 -- Active
BC From Oahu-SOAM-2 Active 0 0.50 ^0.15%cpu 25B/s A=me
CC To Oahu-DAMP-2 Active 0 0.10 0.14%cpu 25B/s A=me
Oahu-DAMP-2 -- Stby
BC From Oahu-SOAM-2 Active 0 0.50 ^0.11%cpu 31B/s A=C3642.212
CC From Oahu-DAMP-1 Active 0 0.10 ^0.14 1.16%cpu 31B/s A=C3642.212
Oahu-IPFE-1 -- Active
BC From Oahu-SOAM-2 Active 0 0.50 ^0.03%cpu 24B/s A=C3642.212
Oahu-IPFE-2 -- Active
BC From Oahu-SOAM-2 Active 0 0.50 ^0.03%cpu 28B/s A=C3642.212
Oahu-NOAM-1 -- Stby
AA From Oahu-NOAM-2 Active 0 0.25 ^0.03%cpu 23B/s
Oahu-NOAM-2 -- Active
AA To Oahu-NOAM-1 Active 0 0.25 1%R 0.04%cpu 61B/s
AB To Oahu-SOAM-2 Active 0 0.50 1%R 0.05%cpu 75B/s
Oahu-SOAM-1 -- Stby
BB From Oahu-SOAM-2 Active 0 0.50 ^0.03%cpu 27B/s
Oahu-SOAM-2 -- Active
AB From Oahu-NOAM-2 Active 0 0.50 ^0.03%cpu 24B/s
BB To Oahu-SOAM-1 Active 0 0.50 1%R 0.04%cpu 32B/s
BC To Oahu-IPFE-1 Active 0 0.50 1%R 0.04%cpu 21B/s
irepstat ( 40 lines) (h)elp (m)erged
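On a large system the replication report can be long, so a small script can help pick out links whose state is not Active. The sketch below assumes the layout of the sample output above (a `<server> -- <role>` line followed by link lines of the form `<level> <From|To> <peer> <state> ...`); the real tool may print other line types, which this parser simply skips. It works on captured text saved from the terminal and does not invoke `irepstat` itself.

```python
import re

# "<server> -- <HA role>", for example "Oahu-NOAM-2 -- Active".
SERVER_RE = re.compile(r"^(\S+)\s+--\s+(\S+)$")
# "<level> <From|To> <peer> <state> ...", for example "AB To Oahu-SOAM-2 Active ...".
LINK_RE = re.compile(r"^([A-C]{2})\s+(From|To)\s+(\S+)\s+(\S+)")


def parse_irepstat(text):
    """Parse captured irepstat output into a list of link dictionaries."""
    links = []
    server = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        server_match = SERVER_RE.match(line)
        if server_match:
            server = server_match.group(1)
            continue
        link_match = LINK_RE.match(line)
        if link_match and server is not None:
            links.append(
                {
                    "server": server,
                    "level": link_match.group(1),
                    "direction": link_match.group(2),
                    "peer": link_match.group(3),
                    "state": link_match.group(4),
                }
            )
    return links


def links_not_active(links):
    """Return the links whose reported state is anything other than Active."""
    return [link for link in links if link["state"] != "Active"]
```

An empty list from `links_not_active` matches the sample shown above, where every link reports Active. Any other result is a prompt for a manual look at the full report, not a diagnosis.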

- Verify the database states:

- Navigate to Main Menu, Status & Manage, and then Database.

- Verify that the "OAM Max HA Role" is either Active or Standby for NOAM and SOAM and "Application Max HA Role" for MPs is Active, and that the status is Normal.
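The check in this step can be written down as a rule over the rows of the Database screen. The sketch below is a hypothetical helper: the dictionary keys (`hostname`, `server_type`, `oam_max_ha_role`, `app_max_ha_role`, `status`) are names invented for illustration, and the rows would have to be transcribed or exported by the operator.

```python
def ha_role_problems(rows):
    """Return human-readable problems found in the database status rows.

    Each row is a dict with the hypothetical keys hostname, server_type
    ("NOAM", "SOAM" or "MP"), oam_max_ha_role, app_max_ha_role and status.
    """
    problems = []
    for row in rows:
        host = row["hostname"]
        server_type = row["server_type"]
        if server_type in ("NOAM", "SOAM"):
            oam_role = row["oam_max_ha_role"]
            # NOAM and SOAM servers must be Active or Standby.
            if oam_role not in ("Active", "Standby"):
                problems.append(f"{host}: OAM Max HA Role is {oam_role}")
        elif server_type == "MP":
            app_role = row["app_max_ha_role"]
            # MPs must be Active.
            if app_role != "Active":
                problems.append(f"{host}: Application Max HA Role is {app_role}")
        status = row["status"]
        if status != "Normal":
            problems.append(f"{host}: status is {status}")
    return problems
```

An empty list means every row satisfies the conditions stated in this step.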

- Verify the HA Status:

- Navigate to Main Menu, and then Status & Manage, and then HA.

- Select the row for each server and verify that the HA role is either Active or Standby.

- Verify the local Node Info:

- Navigate to Main Menu, Diameter, Configuration, and then Local Node.

- Verify that all the local nodes are shown.

- Verify the Peer Node Info:

- Navigate to Main Menu, Diameter, Configuration, and then Peer Nodes.

- Verify that all the peer nodes are shown.

- Verify the Connections Info:

- Navigate to Main Menu, Diameter, Configuration, and then Connections.

- Verify that all the connections are shown.

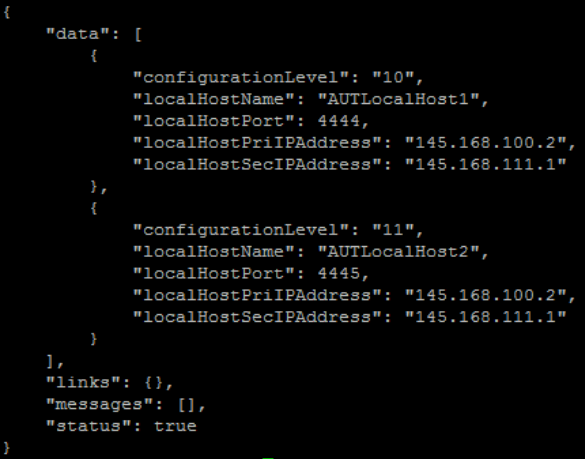

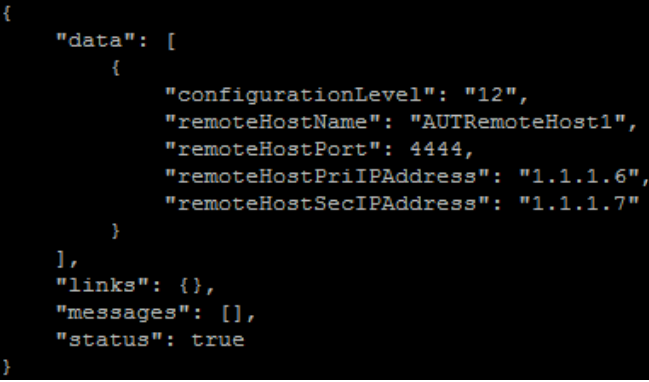

- To verify the vSTP MP Local nodes info:

- Log in to the SOAM VIP Server console as admusr.

- Run the following command:

[admusr@SOAM1 ~]$ mmiclient.py /vstp/localhosts

- Verify that the output is similar to the following output.

Figure 4-4 Output

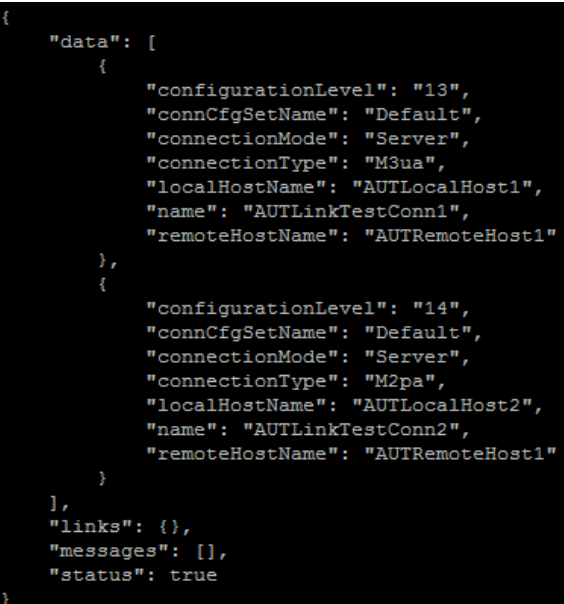

- To verify the vSTP MP remote nodes info:

- Log in to the SOAM VIP server console as admusr.

- Run the following command:

[admusr@SOAM1 ~]$ mmiclient.py /vstp/remotehosts

- Verify that the output is similar to the following output:

Figure 4-5 Output

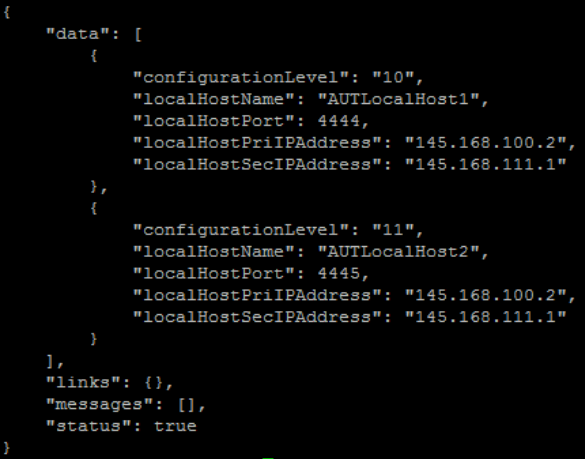

- To verify the vSTP MP Connections info:

- Log in to the SOAM VIP server console as admusr.

- Run the following command:

[admusr@SOAM1 ~]$ mmiclient.py /vstp/connections

- Verify that the output is similar to the following output:

Figure 4-6 Output

- Disable SCTP Auth Flag:

- For SCTP connections without DTLS enabled, refer to Enable/Disable DTLS.

- Run this procedure on all failed MP Servers.

- Enable Connections if needed:

- Navigate to Main Menu, Diameter, Maintenance, and then Connections.

- Select each connection and click Enable. Alternatively you can enable all the connections by selecting EnableAll.

- Verify that the "Operational State" is Available.

- Enable Optional Features:

- Navigate to Main Menu, Diameter, Maintenance, and then Applications.

- Select the optional feature application configured in step 29 and click Enable.

- If required, re-enable transport:

- Navigate to Main Menu, Transport Manager, Maintenance, and then Transport.

- Select each transport and click Enable.

- Verify that the operational status for each transport is up.

- Re-enable MAPIWF application if needed:

- Navigate to Main Menu, Sigtran, Maintenance, and then Local SCCP Users.

- Click Enable corresponding to MAPIWF application Name.

- Verify that the SSN status is Enabled.

- Re-enable links if needed.

- Navigate to Main Menu, Sigtran, Maintenance, and then Links.

- Click Enable for each link.

- Verify that the operational status for each link is up.

- Examine All Alarms:

- Navigate to Main Menu, Alarms & Events, and then View Active.

- Examine all active alarms and refer to the on-line help on how to address them. For any queries contact My Oracle Support (MOS).

- Backup and archive all the databases from the recovered system:

- Run DSR database backup to back up the configuration databases.

4.1.3 Recovery Scenario 3 (Partial Server Outage with all NOAM servers failed and one SOAM server intact)

For a partial server outage with an SOAM server intact and available, NOAM servers are recovered using recovery procedures for software and then running a database restore to the active NOAM server using a NOAM database backup file obtained from external backup sources such as customer servers. All other servers are recovered using recovery procedures for software. Database replication from the Active NOAM or Active SOAM server will recover the database on these servers. The major activities are summarized in the list below. Use this list to understand the recovery procedure summary; do not use it to run the procedure.

Recover Active NOAM server by recovering software and the database.

- Recover the software.

- Recover the database

Recover standby NOAM servers by recovering software.

- Recover the software.

Recover any failed SOAM and MP servers by recovering software.

- Recover the software.

- Database is already intact at one SOAM server and does not require restoration at the other SOAM and MP servers.

Note:

If this procedure fails, contact My Oracle Support (MOS), and ask for assistance.

- Refer to Workarounds for Issues not fixed in 8.6.0.0.0 Release to understand or apply any workarounds required during this procedure.

- Gather the documents and required materials listed in the Required Materials section.

- VMware based deployments:

- For NOAMs run the following procedures:

- Import DSR OVA

Note:

If OVA is already imported and present in the Infrastructure Manager, skip this procedure of importing OVA.

- Configure NOAM guests based on resource profile.

- For SOAM run the following procedures:

- Import DSR OVA

Note:

If OVA is already imported and present in the Infrastructure Manager, skip this procedure of importing OVA.

- Configure remaining DSR guests based on resource profile.

- For failed MPs run the following procedures:

- Import DSR OVA.

Note:

If OVA is already imported and present in the Infrastructure Manager, skip this procedure of importing OVA.

- Configure remaining DSR guests based on resource profile.

- For NOAMs run the following procedures:

- Import DSR OVA.

Note:

If OVA is already imported and present in the Infrastructure Manager, skip this procedure of importing OVA.

- Configure NOAM guests based on resource profile.

- For SOAM run the following procedures:

- Import DSR OVA.

Note:

If OVA is already imported and present in the Infrastructure Manager, skip this procedure of importing OVA.

- Configure remaining DSR guests based on resource profile.

- For OVM-S/OVM-M based deployments, run the following procedures:

- Import DSR OVA and prepare for VM creation.

- Configure each DSR VM.

Note:

While running procedure 8, configure the required failed VMs only (NOAMs/SOAMs/MPs). For more information, see Diameter Signaling Router Cloud Installation Guide.

- Obtain the most recent database backup file from external backup sources (for example, file servers) or tape backup sources.

- From Required Materials list, use site survey documents and Network Element report (if available), to determine network configuration data.

- Run DSR installation procedure for the first NOAM:

- Verify the networking data for Network Elements.

Note:

Use the backup copy of network configuration data and site surveys.

- Run installation procedures for the first NOAM server:

- Configure the first NOAM NE and Server.

- Configure the NOAM Server Group.

- Log in to the NOAM GUI as the guiadmin user.

- Upload the backed up SOAM database file:

- Navigate to Main Menu, Status & Manage, and then Files.

- Select the Active SOAM server. Click Upload and select the file "SO Provisioning and Configuration" backed up after initial installation and provisioning.

- Click Browse and locate the backup file.

- Select the This is a backup file checkbox and click Open.

- Click Upload.

The file will take a few seconds to upload depending on the size of the backup data. The file will be visible on the list of entries after the upload is complete.
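Before uploading, it can be useful to confirm that the file name follows the standard backup file format quoted at the start of this chapter (`Backup.DSR.HPC02-NO2.FullDBParts.NETWORK_OAMP.20140524_223507.UPG.tar.bz2`). The following sketch checks a name against that pattern. The field names (`application`, `hostname`, `contents`, `role`, `tag`) are guesses inferred from that single example and are not an official specification of the format.

```python
import re
from datetime import datetime

# Pattern inferred from the single example file name given in this chapter.
BACKUP_NAME_RE = re.compile(
    r"^Backup\.(?P<application>[^.]+)\.(?P<hostname>[^.]+)\.(?P<contents>[^.]+)"
    r"\.(?P<role>[^.]+)\.(?P<stamp>\d{8}_\d{6})\.(?P<tag>[^.]+)\.tar\.bz2$"
)


def parse_backup_name(filename):
    """Return the parsed fields of a backup file name, or None if it does not match."""
    match = BACKUP_NAME_RE.match(filename)
    if match is None:
        return None
    fields = match.groupdict()
    try:
        # The stamp is read as YYYYMMDD_HHMMSS (an assumption based on the example).
        fields["timestamp"] = datetime.strptime(fields.pop("stamp"), "%Y%m%d_%H%M%S")
    except ValueError:
        return None
    return fields
```

A `None` result suggests the file was renamed or is not a backup archive in the expected form, which is worth checking before it is used for a restore.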

- To disable provisioning:

- Navigate to Main Menu, Status & Manage, and then Database.

- Click Disable Provisioning. A confirmation window will appear; click OK to disable.

Note:

The message "warning Code 002" will appear.

- Verify the archive contents and database compatibility:

- Select the Active NOAM server and click Compare.

Click the restored database file that was uploaded as a part of step 13 of this procedure.

- Verify that the output window matches the database.

Note:

- As expected, the user will see a database mismatch with respect to the VMs' NodeIDs. Proceed if this is the only difference; if not, contact My Oracle Support (MOS).

- Archive content and database compatibility must be as follows:

- Archive Contents: Configuration data

- Database Compatibility: The databases are compatible.

- When restoring from an existing backup database to a database using a single NOAM, the expected outcome for the topology compatibility check is as follows:

- Topology compatibility: Topology must be compatible minus the NODEID.

- Because a backed-up database is being restored onto an empty NOAM database, this text is expected in the Topology Compatibility check.

If the verification is successful, click Back and continue to the next step of this procedure.

- Restore the database:

- Navigate to Main Menu, Status & Manage, and then Database.

- Select the Active NOAM server and click Restore. Select the appropriate backup provisioning and configuration file and click OK.

Note:

As expected, the user will see a database mismatch with respect to the VMs' NodeIDs. Proceed if this is the only difference; if not, contact My Oracle Support (MOS).

- Select the Force checkbox and click OK to proceed with the database restore.

Note:

After the restore has started, the user will be logged out of XMI NO GUI since the restored Topology is old data.

- Establish a GUI session on the NOAM server by using the VIP IP address of the NOAM server. Open the web browser and enter the following URL:

http://<Primary_NOAM_VIP_IP_Address>

Log in as the guiadmin user.

- Monitor and confirm the database restore. Wait 5 to 10 minutes for the system to stabilize with the new topology.

Monitor the Info tab for Success. This indicates that the restore is complete and the system is stabilized.

The following alarms must be ignored for NOAM and MP servers until all the servers are configured:

- Alarms with Type column as "REPL", "COLL", "HA" (with mate NOAM), or "DB" (about Provisioning Manually Disabled).

Note:

- Do not pay attention to alarms until all the servers in the system are completely restored.

- The maintenance and configuration data will be in the same state as when it was first backed up.

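While the remaining servers are still being configured, the alarm list can be sorted into alarms that the guidance above says to ignore and alarms that still need attention. The sketch below is a hypothetical triage helper: the `type` key and the three bucket names are assumptions, and because the "HA" and "DB" types are only ignorable under the stated qualifiers (mate NOAM, Provisioning Manually Disabled), they are placed in a separate bucket for a manual check and are not ignored outright.

```python
# Types the procedure lists as ignorable without a further qualifier.
IGNORE_TYPES = {"REPL", "COLL"}
# Types that are ignorable only under the qualifier given in the procedure.
QUALIFIED_TYPES = {"HA", "DB"}


def triage_alarms(alarms):
    """Split alarm records into ignore / check_qualifier / review buckets.

    Each alarm is a dict with at least a "type" key; this layout is a
    hypothetical one chosen for the sketch.
    """
    buckets = {"ignore": [], "check_qualifier": [], "review": []}
    for alarm in alarms:
        alarm_type = alarm["type"].strip().upper()
        if alarm_type in IGNORE_TYPES:
            buckets["ignore"].append(alarm)
        elif alarm_type in QUALIFIED_TYPES:
            buckets["check_qualifier"].append(alarm)
        else:
            buckets["review"].append(alarm)
    return buckets
```

This grouping applies only during the recovery window; once all servers are restored, every remaining alarm should be examined as described later in this procedure.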

- Log in to the recovered Active NOAM through SSH terminal as admusr user.

- Re-enable provisioning:

- Navigate to Main Menu, Status & Manage, and then Database.

- Click Enable Provisioning. A pop-up window will appear to confirm; click OK.

- Install the second NOAM server by running the following procedure:

- Run steps 1 and 3 to 7 of Configure the Second NOAM Server.

- Navigate to Main Menu, Status & Manage, and then Server.

- Click Restart and click Ok on the confirmation screen.

Note:

If topology or NODEID alarms are persistent after the database restore, refer to Workarounds for Issues not fixed in 8.6.0.0.0 Release or the next step.

- Recover the remaining SOAM servers (standby, spare) by repeating the following steps for each SOAM server:

- Install the remaining SOAM servers by running steps 1 and 3 to 7 of Configure the SOAM Servers.

Note:

Before continuing, wait for server to reboot.

- Restart DSR application:

- Navigate to Main Menu, Status & Manage, and then Server.

- Select the recovered server and click Restart.

- Navigate to Main Menu, Status & Manage, and then HA.

- Click Edit. For each server whose "Max Allowed HA role" is not Active, set it to Active and click OK.

- Restart DSR application:

- Navigate to Main Menu, Status & Manage, and then Server.

- Select each recovered server and click Restart.

- Activate optional features:

- Establish an SSH session to the active NOAM and log in as admusr.

Note:

- If PCA is installed in the system that is being recovered, perform the following procedures on all the active applications:

- PCA Activation on Standby NOAM on recovered Standby NOAM server

- PCA Activation on Active SOAM on recovered Active SOAM server to re-activate PCA

For more information about the above mentioned procedures, see Diameter Signaling Router Policy and Charging Application Feature Activation Guide.

Refer to Optional Features to activate any features that were previously activated.

Note:

- While running the activation script, the following error message (and corresponding messages) may be seen in the output; the error can be ignored:

iload#31000{S/W Fault}.

- If any of the MPs are failed and recovered, then these MP servers should be restarted after activation of the feature.

- Fetch and store the database report for the newly restored data:

- Navigate to Main Menu, Status & Manage, and then Database.

- Select the Active NOAM server and click Report.

- Click Save and save the report to the local machine.

- Verify replication between servers:

- Log in to the Active NOAM through SSH terminal as admusr user.

- Run the following command:

$ sudo irepstat -m

Output similar to the following is generated:

-- Policy 0 ActStb [DbReplication] -----------------------------------
RDU06-MP1 -- Stby
BC From RDU06-SO1 Active 0 0.50 ^0.17%cpu 42B/s A=none
CC From RDU06-MP2 Active 0 0.10 ^0.17 0.88%cpu 32B/s A=none
RDU06-MP2 -- Active
BC From RDU06-SO1 Active 0 0.50 ^0.10%cpu 33B/s A=none
CC To RDU06-MP1 Active 0 0.10 0.08%cpu 20B/s A=none
RDU06-NO1 -- Active
AB To RDU06-SO1 Active 0 0.50 1%R 0.03%cpu 21B/s
RDU06-SO1 -- Active
AB From RDU06-NO1 Active 0 0.50 ^0.04%cpu 24B/s
BC To RDU06-MP1 Active 0 0.50 1%R 0.04%cpu 21B/s
BC To RDU06-MP2 Active 0 0.50 1%R 0.07%cpu 21B/s

- Verify the database states:

- Navigate to Main Menu, Status & Manage, and then Database.

- Verify that the "OAM Max HA Role" is either Active or Standby for NOAM and SOAM and "Application Max HA Role" for MPs is Active, and that the status is Normal.

- Verify the HA Status:

- Navigate to Main Menu, Status & Manage, and then HA.

- Select the row for each server and verify that the HA role is either Active or Standby.

- Verify the local Node Info:

- Navigate to Main Menu, Diameter, Configuration, and then Local Node.

- Verify that all the local nodes are shown.

- Verify the Peer Node Info:

- Navigate to Main Menu, Diameter, Configuration, and then Peer Node.

- Verify that all the peer nodes are shown.

- Verify the Connections Info:

- Navigate to Main Menu, Diameter, Configuration, and then Connections.

- Verify that all the connections are shown.

- To verify the vSTP MP local nodes info:

- Log in to the SOAM VIP Server console as admusr.

- Run the following command:

[admusr@SOAM1 ~]$ mmiclient.py /vstp/localhosts

- Verify that the output is similar to the following output.

Figure 4-7 Output

- To verify the vSTP MP remote nodes info:

- Log in to the SOAM VIP server console as admusr.

- Run the following command:

[admusr@SOAM1 ~]$ mmiclient.py /vstp/remotehosts

- Verify that the output is similar to the following output:

Figure 4-8 Output

- To verify the vSTP MP Connections info:

- Log in to the SOAM VIP server console as admusr.

- Run the following command:

[admusr@SOAM1 ~]$ mmiclient.py /vstp/connections

- Verify that the output is similar to the following output:

Figure 4-9 Output

- Enable Connections if needed:

- Navigate to Main Menu, Diameter, Maintenance, and then Connections.

- Select each connection and click Enable. Alternatively you can enable all the connections by selecting EnableAll.

- Verify that the "Operational State" is Available.

- Enable optional features:

- Navigate to Main Menu, Diameter, Maintenance, and then Applications.

- Select the optional feature application configured before and click Enable.

- If required, re-enable transport:

- Navigate to Main Menu, Transport Manager, Maintenance, and then Transport.

- Select each transport and click Enable.

- Verify that the operational status for each transport is Up.

- Re-enable MAPIWF application if needed:

- Navigate to Main Menu, Sigtran, Maintenance, and then Local SCCP Users.

- Click Enable corresponding to MAPIWF application Name.

- Verify that the SSN status is Enabled.

- Re-enable links if needed.

- Navigate to Main Menu, Sigtran, Maintenance, and then Links.

- Click Enable for each link.

- Verify that the operational status for each link is up.

- Examine All Alarms:

- Navigate to Main Menu, Alarms & Events, and then View Active.

- Examine all active alarms and refer to the on-line help on how to address them. For any queries contact My Oracle Support (MOS).

- Perform key exchange with Export Server:

- Navigate to Main Menu, Administration, Remote Servers and then Data Export.

- Click Key Exchange, enter the password and click OK.

- Examine All Alarms:

- Navigate to Main Menu, Alarms & Events, and then View Active.

- Examine all active alarms and refer to the on-line help on how to address them. For any queries contact My Oracle Support (MOS).

- Restore GUI usernames and passwords:

- If applicable, run steps in Section 6.0 to recover the user and group information restored.

- Backup and archive all the databases from the recovered system:

- Run DSR database backup to back up the Configuration databases.

4.1.4 Recovery Scenario 4 (Partial Server Outage with one NOAM server and one SOAM server intact)

For a partial outage with an NOAM server and an SOAM server intact and available, only base recovery of software is needed. The intact NOAM and SOAM servers are capable of restoring the database via replication to all servers. The major activities are summarized in the list below. Use this list to understand the recovery procedure summary; do not use it to run the procedure.

Recover Standby NOAM server by recovering software.

The database is intact at the active NOAM server and does not require restoration at the standby NOAM server.

- Recover any failed SO and MP servers by recovering software.

- Recover the software.

The database is intact at the active NOAM server and does not require restoration at the SOAM and MP servers.

- Re-apply signaling networks configuration if the failed VM is an MP.
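The scenario descriptions in this chapter can be read as a decision rule on which servers survived the outage. The sketch below covers only the scenarios whose conditions are stated in the surrounding text (Scenario 1, Scenario 3, and Scenario 4) and returns None for anything else. Reading "one SOAM server intact" as "at least one" is an assumption of this sketch, and the function does not replace the assessment performed with My Oracle Support.

```python
from typing import Optional


def recovery_scenario(noam_intact: int, soam_intact: int, other_intact: int) -> Optional[int]:
    """Suggest a recovery scenario number from the count of intact servers.

    Returns 1, 3 or 4 for the cases described in this chapter's text, or
    None when the situation is covered by a scenario not modeled here.
    """
    if min(noam_intact, soam_intact, other_intact) < 0:
        raise ValueError("server counts must not be negative")
    if noam_intact == 0 and soam_intact == 0 and other_intact == 0:
        return 1  # Complete server outage.
    if noam_intact == 0 and soam_intact >= 1:
        return 3  # All NOAM servers failed, an SOAM server intact.
    if noam_intact >= 1 and soam_intact >= 1:
        return 4  # An NOAM server and an SOAM server intact.
    return None
```

For example, `recovery_scenario(1, 1, 2)` returns 4, matching the case in which only base recovery of software is needed.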

Note:

If this procedure fails, contact My Oracle Support (MOS).

- Refer to Workarounds for Issues not fixed in 8.6.0.0.0 Release to understand or apply any workarounds required during this procedure.

- Gather the documents and required materials listed in the Required Materials section.

- Establish a GUI session on the NOAM server by using the VIP IP address of the NOAM server. Open the web browser and enter the following URL:

http://<Primary_NOAM_VIP_IP_Address>

Log in as the guiadmin user.

- Navigate to Main Menu, Status & Manage, and then HA.

- Click Edit and set "Max Allowed HA Role" to OOS and click OK.

- VMware based deployments:

- For NOAMs run the following procedures:

- Import DSR OVA

Note:

If OVA is already imported and present in the Infrastructure Manager, skip this procedure of importing OVA.

- Configure NOAM guests based on resource profile.

- For SOAMs run the following procedures:

- Import DSR OVA

Note:

If OVA is already imported and present in the Infrastructure Manager, skip this procedure of importing OVA.

- Configure remaining DSR guests based on resource profile.

- For failed MPs run the following procedures:

- Import DSR OVA.

Note:

If OVA is already imported and present in the Infrastructure Manager, skip this procedure of importing OVA.

- Configure remaining DSR guests based on resource profile.

- For NOAMs run the following procedures:

- Import DSR OVA.

Note:

If OVA is already imported and present in the Infrastructure Manager, skip this procedure of importing OVA.

- Configure NOAM guests based on resource profile.

- For SOAMs run the following procedures:

- Import DSR OVA.

Note:

If OVA is already imported and present in the Infrastructure Manager, skip this procedure of importing OVA.

- Configure remaining DSR guests based on resource profile.

- For OVM-S/OVM-M based deployments, run the following procedures:

- Import DSR OVA and prepare for VM creation.

- Configure each DSR VM.

Note:

While running procedure 8, configure the required failed VMs only (NOAMs/SOAMs/MPs). For more information, see Diameter Signaling Router Cloud Installation Guide.

- If required, repeat step 5 for all remaining failed servers.

- Establish a GUI session on the NOAM server by using the VIP IP address of the NOAM server. Open the web browser and enter the following URL:

http://<Primary_NOAM_VIP_IP_Address>

Log in as the guiadmin user.

- Install the second NOAM server by running the following procedures:

- Configure the Second NOAM Server, steps 1 and 3 to 7.

- Configuring the NOAM Server Group, step 4

Note:

If topology or nodeId alarms are persistent after the database restore, refer to Workarounds for Issues not fixed in 8.6.0.0.0 Release or continue with the next step.

- If the failed server is an SOAM, recover the remaining SOAM servers (standby,

spare) by repeating the following steps for each SOAM server:

- Install the remaining SOAM servers by running steps 1 and 3 to 7 of Configure the SOAM Servers.

Note:

Before continuing to next step, wait for the server to restart.

- Navigate to Main Menu, Status & Manage, and then HA.

- Click Edit. For each server whose "Max Allowed HA Role" is set to Standby, set it to Active and click OK.

- Navigate to Main Menu, Status & Manage, and then Server. Select the recovered server and click Restart.

- Establish an SSH session to the C-Level server being recovered and log in as admusr.

- Run the following procedure for each server that has been recovered.

- Configure the MP Virtual Machines, steps 1 and 8 to 14 (and step 15, if required).

- Navigate to Main Menu, Status & Manage, and then HA.

- Click Edit. For each server whose "Max Allowed HA Role" is set to Standby, set it to Active and click OK.

- Navigate to Main Menu, Status & Manage, and then Server. Select the recovered servers and click Restart.

- Log in to the recovered Active NOAM through SSH terminal as admusr user.

- Perform key exchange between the Active NOAM and recovered servers:

- Establish an SSH session to the Active NOAM, log in as admusr.

- Run the following command to perform a key exchange from the active NOAM to each recovered server:

$ keyexchange admusr@<Recovered Server Hostname>

- Activate optional features:

- Establish an SSH session to the active NOAM and log in as admusr.

Note:

- If PCA is installed in the system that is being recovered, perform the following procedures on all the active applications:

- PCA Activation on Standby NOAM on recovered Standby NOAM server

- PCA Activation on Active SOAM on recovered Active SOAM server to re-activate PCA

For more information about the above mentioned procedures, see Diameter Signaling Router Policy and Charging Application Feature Activation Guide.

- Refer to Optional Features to activate any features that were previously activated.

Note:

- While running the activation script, the following error message (and corresponding messages) may be seen in the output; it can be ignored:

iload#31000{S/W Fault}.

- If any of the MPs are failed and recovered, then these MP servers should be restarted after activation of the feature.

- Navigate to Main Menu, Status & Manage, and then Database.

- Select the Active NOAM server and click Report.

- Click Save to save the report to the local machine.

- Log in to the Active NOAM through SSH terminal as admusr user. Run the following command:

$ sudo irepstat -m

Output similar to the following is generated:

-- Policy 0 ActStb [DbReplication] -----------------------------------
RDU06-MP1 -- Stby
BC From RDU06-SO1 Active 0 0.50 ^0.17%cpu 42B/s A=none
CC From RDU06-MP2 Active 0 0.10 ^0.17 0.88%cpu 32B/s A=none
RDU06-MP2 -- Active
BC From RDU06-SO1 Active 0 0.50 ^0.10%cpu 33B/s A=none
CC To RDU06-MP1 Active 0 0.10 0.08%cpu 20B/s A=none
RDU06-NO1 -- Active
AB To RDU06-SO1 Active 0 0.50 1%R 0.03%cpu 21B/s
RDU06-SO1 -- Active
AB From RDU06-NO1 Active 0 0.50 ^0.04%cpu 24B/s
BC To RDU06-MP1 Active 0 0.50 1%R 0.04%cpu 21B/s
BC To RDU06-MP2 Active 0 0.50 1%R 0.07%cpu 21B/s

- Navigate to Main Menu, Status & Manage, and then Database.

- Verify that the "OAM Max HA Role" is either "Active" or "Standby" for NOAM and SOAM and "Application Max HA Role" for MPs is Active, and the status is Normal.

- Navigate to Main Menu, Status & Manage, and then HA.

- Select the row for all the servers. Verify that the HA Role is either Active or Standby.

- Navigate to Main Menu, Diameter, Configuration, and then Local Node. Verify that all the local nodes are shown.

- Navigate to Main Menu, Diameter, Configuration and then Connections. Verify that all the connections are shown.

- To verify the vSTP MP local nodes info:

- Log in to the SOAM VIP Server console as admusr.

- Run the following command:

[admusr@SOAM1 ~]$ mmiclient.py /vstp/localhosts

- Verify that the output is similar to the following:

Figure 4-10 Output

- To verify the vSTP MP Remote nodes info:

- Log in to the SOAM VIP server console as admusr.

- Run the following command:

[admusr@SOAM1 ~]$ mmiclient.py /vstp/remotehosts

- Verify that the output is similar to the following:

Figure 4-11 Output

- To verify the vSTP MP Connections info:

- Log in to the SOAM VIP server console as admusr.

- Run the following command:

[admusr@SOAM1 ~]$ mmiclient.py /vstp/connections

- Verify that the output is similar to the following:

Figure 4-12 Output

- Disable SCTP Auth Flag:

- For SCTP connections without DTLS enabled, refer to Enable/Disable DTLS Appendix.

- Run this procedure on all failed MP servers.

- Navigate to Main Menu, Diameter, and then Connections.

- Select each connection and click Enable.

- Alternatively, enable all the connections by selecting EnableAll.

- Verify that the operational state is Available.

- Navigate to Main Menu, Diameter, Maintenance and then Applications.

- Select the optional feature application and click Enable.

- Navigate to Main Menu, Transport Manager, Maintenance and then Transport.

- Select each transport and click Enable.

- Verify that the operational status for each transport is Up.

- Navigate to Main Menu, Sigtran, Maintenance and then Local SCCP Users.

- Click Enable for the MAPIWF application name.

- Verify that the SSN Status is Enabled.

- Navigate to Main Menu, Sigtran, Maintenance and then Links.

- Click Enable for each link.

- Verify that the operational status for each link is Up.

- Examine All Alarms:

- Navigate to Main Menu, Alarms & Events, and then View Active.

- Examine all active alarms and refer to the online help on how to address them.

- If required, contact My Oracle Support (MOS).

- Navigate to Main Menu, Alarms & Events, and then View Active.

- If required, restart oampAgent.

Note:

If alarm 10012 (the responder for a monitored table failed to respond to a table change) is raised, oampAgent needs to be restarted.

- Establish an SSH session to each server that has the alarm. Log in as admusr.

- Run the following commands:

$ sudo pm.set off oampAgent
$ sudo pm.set on oampAgent
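When several servers raise alarm 10012, it can help to list the restart commands per server before working through them. The Python sketch below only builds command strings; the helper name `restart_commands` is hypothetical, and wrapping the commands in a non-interactive `ssh` call is an assumption, since the procedure itself runs them inside an interactive SSH session and `sudo` may require a terminal on the target.

```python
import shlex


def restart_commands(hosts, user="admusr"):
    """Build the off/on oampAgent command pair for each affected host."""
    commands = []
    for host in hosts:
        # Stop first, then start, in the order given by the procedure.
        for action in ("off", "on"):
            remote = f"sudo pm.set {action} oampAgent"
            commands.append(shlex.join(["ssh", f"{user}@{host}", remote]))
    return commands


if __name__ == "__main__":
    # Host name taken from the sample irepstat output; replace as needed.
    for line in restart_commands(["RDU06-SO1"]):
        print(line)
```

The generated lines are meant for review or copy and paste; nothing is executed by the sketch.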

- Run DSR database backup to back up the configuration databases.

4.1.5 Recovery Scenario 5 (Partial server outage with both NOAM servers failed with DR-NOAM Available)

For a partial outage with both NOAM servers failed but a DR NOAM available, the DR NOAM is switched from secondary to primary, and the failed NOAM servers are then recovered. The major activities are summarized in the list below. Use this list to understand the recovery procedure summary; do not use it to run the procedure.

Switch DR NOAM from secondary to primary

Recover the failed NOAM servers by recovering base hardware and software.

- Recover the base hardware.

- Recover the software.

- The database is intact at the newly active NOAM server and does not require restoration.

If applicable, recover any failed SOAM and MP servers by recovering base hardware and software.

- Recover the base hardware.

- Recover the software.

- The database is intact at the Active NOAM server and does not require restoration at the SOAM and MP servers.

Note:

If this procedure fails, contact My Oracle Support (MOS).

- Refer to Workarounds for Issues not fixed in 8.6.0.0.0 Release to understand or apply any workarounds required during this procedure.

- Gather the documents and required materials listed in the Required Materials section.

- Refer to the Diameter Signaling Router SDS NOAM Failover User Guide.

- For VMware based deployments:

- For NOAMs run the following procedures:

- Import DSR OVA.

Note:

If the OVA is already imported and present in the Infrastructure Manager, skip this procedure of importing OVA.

- Configure NOAM guests based on resource profile.

- For SOAMs run the following procedures:

- Import DSR OVA.

Note:

If the OVA is already imported and present in the Infrastructure Manager, skip this procedure of importing OVA.

- Configure remaining DSR guests based on resource profile.

- For failed MPs run the following procedures:

- Import DSR OVA.

Note:

If the OVA is already imported and present in the Infrastructure Manager, skip this procedure of importing OVA.

- Configure remaining DSR guests based on resource profile.

- For KVM or OpenStack based deployments:

- For NOAMs run the following procedures:

- Import DSR OVA.

Note:

If the OVA is already imported and present in the Infrastructure Manager, skip this procedure of importing OVA.

- Configure NOAM guests based on resource profile.

- For SOAMs run the following procedures:

- Import DSR OVA.

Note:

If the OVA is already imported and present in the Infrastructure Manager, skip this procedure of importing OVA.

- Configure remaining DSR guests based on resource profile.

- For OVM-S/OVM-M based deployments run the following procedures:

- Import DSR OVA and prepare for VM creation.

- Configure each DSR VM.

Note:

Configure the required failed VMs only (NOAMs/SOAMs/MPs).

- If all SOAM servers have failed, run Partial Server Outage with One NOAM Server Intact and both SOAMs Failed.

- Establish a GUI session on the DR-NOAM server by using the VIP IP address of the DR-NOAM server. Open the web browser and enter the following URL:

http://<Primary_DR-NOAM_VIP_IP_Address>

Log in as the guiadmin user.

- Navigate to Main Menu, click Status & Manage, and then HA.

- Click Edit, select Standby for the failed NOAM servers, and click OK.

- Navigate to Main Menu, Configuration, and then Servers.

From the GUI screen, select the failed NOAM server and then select Export to generate the initial configuration data for that server.

- Obtain a terminal session to the DR-NOAM VIP and log in as the admusr user. Run the following command to configure the failed NOAM server:

$ sudo scp -r /var/TKLC/db/filemgmt/TKLCConfigData.<Failed_NOAM_Hostname>.sh admusr@<Failed_NOAM_xmi_IP_address>:/var/tmp/TKLCConfigData.sh

- Establish an SSH session to the recovered NOAM server (Recovered_NOAM_xmi_IP_address).

- Log in as the admusr user.

The automatic configuration daemon looks for the file named "TKLCConfigData.sh" in the /var/tmp directory, implements the configuration in the file, and then prompts the user to reboot the server.

- Verify that awpushcfg was called by checking the following file:

$ sudo cat /var/TKLC/appw/logs/Process/install.log

Verify that the following message is displayed:

[SUCCESS] script completed successfully!

- Run the following command to reboot the server:

$ sudo init 6

Wait for the server to restart.
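The install.log check described above, which should be completed before the reboot, can be expressed as a small text test once the file contents are available as a string. This Python sketch assumes only that the success message appears verbatim on a line of the log; the function name `config_applied` is hypothetical.

```python
# Message quoted in this procedure as the success indicator.
SUCCESS_MARKER = "[SUCCESS] script completed successfully!"


def config_applied(log_text):
    """Return True when the success marker appears on any line of the log."""
    return any(SUCCESS_MARKER in line for line in log_text.splitlines())


if __name__ == "__main__":
    import sys

    # Example: sudo cat /var/TKLC/appw/logs/Process/install.log | python3 check.py
    # (check.py is a placeholder file name for this sketch.)
    ok = config_applied(sys.stdin.read())
    print("configuration applied" if ok else "success message not found")
```

If the marker is missing, the safer reading is that the configuration was not applied and the server should not yet be rebooted.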

- Log in as the admusr user.

- Run the following command on the failed NOAM server and ensure that no errors are returned:

$ sudo syscheck
Running modules in class hardware...OK
Running modules in class disk...OK
Running modules in class net...OK
Running modules in class system...OK
Running modules in class proc...OK
LOG LOCATION: /var/TKLC/log/syscheck/fail_log

- Repeat steps 8 to 11 for the 2nd failed NOAM server.
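Because the syscheck step is repeated on each recovered NOAM server, a captured copy of its output can be checked mechanically. The Python sketch below assumes the "Running modules in class <name>...<result>" line layout shown in the sample output; the function name `failed_classes` is hypothetical, and any result other than OK is simply treated as a failure because the exact failure wording is not given in this procedure.

```python
import re

# Sample text copied from the expected syscheck output in this procedure.
SAMPLE = """\
Running modules in class hardware...OK
Running modules in class disk...OK
Running modules in class net...OK
Running modules in class system...OK
Running modules in class proc...OK
LOG LOCATION: /var/TKLC/log/syscheck/fail_log
"""

CLASS_LINE = re.compile(r"^Running modules in class (\w+)\.\.\.\s*(.*)$")


def failed_classes(text):
    """Return the module classes whose result is anything other than OK."""
    failed = []
    for line in text.splitlines():
        match = CLASS_LINE.match(line.strip())
        if match and match.group(2).strip() != "OK":
            failed.append(match.group(1))
    return failed
```

An empty list corresponds to the "no errors are returned" condition in the step; otherwise the listed classes point to where the log at the reported location should be examined.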

- Perform a keyexchange between the newly active NOAM and the recovered NOAM servers:

From a terminal window connection on the active NOAM as the admusr user, exchange SSH keys for admusr between the active NOAM and the recovered NOAM servers using the keyexchange utility and the host names of the recovered NOAMs.

When prompted for the password, enter the password for the admusr user of the recovered NOAM servers.

$ keyexchange admusr@<Recovered_NOAM Hostname>

- Navigate to Main Menu, Configuration, and then HA.

- Click Edit. For each NOAM server whose "Max Allowed HA" role is set to Standby, set it to Active and click OK.

- Navigate to Main Menu, click Status & Manage, and then Server.

Select each recovered NOAM server and click Restart.

- Activate the features Map-Diameter Interworking (MAP-IWF) and Policy and Charging Application (PCA) as follows:

- For PCA: Establish SSH sessions to all the recovered NOAM servers and log in as admusr. Refer to and run the procedure "PCA Activation on Standby NOAM" on all recovered NOAM servers to re-activate PCA.

- For MAP-IWF: Establish an SSH session to the recovered active NOAM and log in as admusr. Refer to Activate Map-Diameter Interworking (MAP-IWF).

Note:

- While running the activation script, the following error message (and corresponding messages) may be seen in the output and can be ignored: iload#31000{S/W Fault}.

- If any of the MPs failed and were recovered, restart these MP servers after activation of the feature.

- Switch the DR NOAM back to secondary after the system has been recovered. Refer to the Diameter Signaling Router SDS NOAM Failover Users Guide.

- Navigate to Main Menu, Administration, Remote Servers and then Data Export.

- Click Key Exchange. Enter the password and click OK.

- Navigate to Main Menu, Alarms & Events, and then View Active.

- Verify that the recovered servers are not contributing to any active alarms (replication, topology misconfiguration, database impairments, NTP, and so on).