2.4 Configuring Flash and Replacing a Defective Flash Card on a Non-Virtual Exalytics Machine

This section contains the following topics:

2.4.1 License to Receive Open Source Code

The license for each component is located in the documentation, which may be delivered with the Oracle Linux programs or accessed online at http://oss.oracle.com/linux/legal/oracle-list.html and/or in the component's source code.

For technology in binary form that is licensed under an open source license that gives you the right to receive the source code for that binary, you may be able to obtain a copy of the applicable source code at these site(s):

https://edelivery.oracle.com/linux

If the source code for such technology was not provided to you with the binary, you can also receive a copy of the source code on physical media by submitting a written request to:

Oracle America, Inc.

Attn: Oracle Linux Source Code Requests

Development and Engineering Legal

500 Oracle Parkway, 10th Floor

Redwood Shores, CA 94065

Your request should include:

-

The name of the component or binary file(s) for which you are requesting the source code

-

The name and version number of the Oracle Software

-

The date you received the Oracle Software

-

Your name

-

Your company name (if applicable)

-

Your return mailing address and email

-

A telephone number in the event we need to reach you.

We may charge you a fee to cover the cost of physical media and processing. Your request must be sent (i) within three (3) years of the date you received technology that included the component or binary file(s) that are the subject of your request, or (ii) in the case of code licensed under the GPL v3, for as long as Oracle offers spare parts or customer support for that Software model.

2.4.2 Prerequisites for Configuring Flash

The following prerequisites must be met before configuring Flash on the Exalytics Machine:

-

You are running Exalytics Base Image 2.0.0.2.el6.

-

Oracle Field Services engineers have installed and configured six Flash cards (on an X2-4 or X3-4 Exalytics Machine) or have configured three Flash cards (on an X4-4, X5-4, or X6-4 Exalytics Machine).

2.4.3 Configuring Flash

When you upgrade to Oracle Exalytics Release 2.2, Flash drivers are included in the Oracle Exalytics Base Image 2.0.0.2.el6. To improve the performance and storage capacity of the Exalytics Machine, you must configure Flash.

Note:

The installed Flash drives use software RAID.

Oracle supports two types of RAID configurations:

-

RAID10: Usually referred to as a stripe of mirrors, this is the most common RAID configuration. RAID10 mirrors the data on each drive to a second drive and stripes data across the mirrored pairs, so that if either drive in a pair fails, no data is lost. This is useful when reliability is more important than data storage capacity.

-

RAID05: In RAID05, all drives are combined to work as a single drive. Data is striped across the drives together with distributed parity and is transferred to the disks by independent read and write operations. You need at least three disks for a RAID05 array.
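The capacity trade-off between the two RAID types can be estimated with simple arithmetic. The following is a minimal sketch, not part of the Exalytics tooling: the function name usable_gb is hypothetical, the RAID05 branch assumes a single array across all drives (this guide does not state how the configuration script lays out RAID05), and metadata and file system overhead are ignored.

```shell
# usable_gb RAID_LEVEL DRIVE_COUNT DRIVE_SIZE_GB
# Prints the nominal usable capacity in GB.
# Hypothetical helper for illustration; ignores metadata and file system overhead.
usable_gb() {
  local level="$1" count="$2" size_gb="$3"
  case "$level" in
    # RAID10: half of the drives hold mirror copies.
    RAID10) echo $(( count / 2 * size_gb )) ;;
    # RAID05: one drive's worth of space is consumed by parity
    # (assumes one array spanning all drives).
    RAID05) echo $(( (count - 1) * size_gb )) ;;
    *) echo "unknown RAID level: $level" >&2; return 1 ;;
  esac
}
```

For example, usable_gb RAID10 24 100 prints 1200 for the 24 drives of 100 GB on an X2-4 or X3-4 Exalytics Machine. 1200 GB is approximately 1.1 TiB, which is consistent with the 1.1T size that df -h reports for /dev/md0 in the sample output in this section.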

This section consists of the following topics:

2.4.3.1 Configuring Flash on an X2-4 or X3-4 Exalytics Machine

Note:

This section is for users who want to configure new Flash or reconfigure an existing Flash configuration. If you do not want to reconfigure an existing Flash configuration, you can skip this section. Note that reconfiguring Flash deletes all existing data on the Flash cards.

An X2-4 or X3-4 Exalytics Machine is configured with six Flash cards. Each Flash card has four 100 GB drives.

Depending on your requirements, you can configure Flash for the following combinations:

-

RAID10

Note:

Oracle recommends that you do not configure RAID05 on an X2-4 or X3-4 Exalytics Machine.

-

EXT3 or EXT4 file system

Note:

Oracle recommends you configure Flash on the EXT4 file system.
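Because the configuration script erases the Flash drives, it can help to validate the chosen combination before running it. The following sketch only prints the command line and does not execute it. The function name flash_config_cmd and its model argument are hypothetical; the script path and the --RAID10, --RAID05, --EXT3, and --EXT4 options are the ones used in this guide.

```shell
# flash_config_cmd MODEL RAID_TYPE FS_TYPE
# Prints the configure_flash.sh command line for a supported combination.
# Hypothetical helper: it validates the arguments but never runs the script.
flash_config_cmd() {
  local model="$1" raid="$2" fs="$3"
  case "$raid" in
    RAID10|RAID05) ;;
    *) echo "unsupported RAID type: $raid" >&2; return 1 ;;
  esac
  case "$fs" in
    EXT3|EXT4) ;;
    *) echo "unsupported file system: $fs" >&2; return 1 ;;
  esac
  # Oracle recommends against RAID05 on X2-4 and X3-4 machines.
  if [ "$raid" = RAID05 ]; then
    case "$model" in
      X2-4|X3-4) echo "RAID05 is not recommended on $model" >&2; return 1 ;;
    esac
  fi
  echo "/opt/exalytics/bin/configure_flash.sh --$raid --$fs"
}
```

For example, flash_config_cmd X3-4 RAID10 EXT4 prints the RAID10 command used in the procedure that follows, whereas flash_config_cmd X3-4 RAID05 EXT4 returns an error.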

To configure Flash:

-

Restart the Exalytics Machine using ILOM.

-

Log on to the ILOM web-based interface.

-

In the left pane of the ILOM, expand Host Management, then click Power Control. In the Select Action list, select Power Cycle, then click Save.

The Exalytics Machine restarts.

-

-

Enter the following command as a root user:

# /opt/exalytics/bin/configure_flash.sh --<RAID_TYPE> --<FS_TYPE>

For example:

-

To configure Flash for a RAID10 configuration, enter the following command:

# /opt/exalytics/bin/configure_flash.sh --RAID10 --EXT4

Note:

The following procedure assumes configuring Flash for a RAID10 configuration.

The following warning, applicable only for software RAID, is displayed: "This flash configuration script will remove any existing RAID arrays, as well as remove any partitions on any flash drives and will create a new RAID array across all these flash drives. This will result in ALL DATA BEING LOST from these drives. Do you still want to proceed with this flash configuration script? (yes/no)"

-

-

Enter Yes at the prompt to continue running the script.

The script performs the following tasks:

-

Erases all existing RAID configuration on the Flash cards.

-

Distributes multiple RAID1 disks so that two parts of the RAID belong to different cards.

-

Stripes all Flash RAID1 drives into a single RAID0.

-

-

To verify that Flash has been configured correctly, enter the following command:

# df -h

The output should look similar to the following:

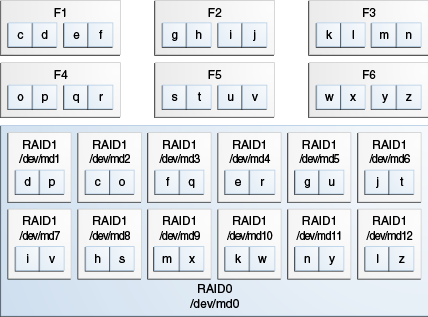

Filesystem                       Size  Used Avail Use% Mounted on
/dev/mapper/VolGroup00-LogVol00  718G  4.4G  677G   1% /
/dev/sda1                         99M   15M   79M  16% /boot
tmpfs                           1010G     0 1010G   0% /dev/shm
/dev/md0                         1.1T  199M  1.1T   1% /u02

Flash is configured as shown in Figure 2-1.

-

Each Flash card (F1, F2, F3, and so on) has four 100 GB drives. Each drive maps to a device, such as /dev/sdg, /dev/sdh, /dev/sdi, /dev/sdj, and so on.

-

Two Flash drives are configured on RAID1 /dev/md1, two Flash drives are configured on RAID1 /dev/md2, two Flash drives are configured on RAID1 /dev/md3, and so on, for a total of twelve RAID1s.

-

The twelve RAID1s are configured on the parent RAID0 /dev/md0.

Figure 2-1 Flash Configuration on RAID10 for an X2-4 and X3-4 Exalytics Machine


-

-

To verify the status of Flash cards, enter the following command:

# /opt/exalytics/bin/exalytics_CheckFlash.sh

The output for all six Flash cards should look similar to the following:

Fetching some info on installed flash drives ....
Driver version : 01.250.41.04 (2012.06.04)

Supported number of flash drives detected (6)

Flash card 1 :
Overall health status : GOOD
Size (in MB) : 381468
Capacity (in bytes) : 400000000000
Firmware Version : 108.05.00.00
Devices: /dev/sdd /dev/sdc /dev/sdf /dev/sde

Flash card 2 :
Overall health status : GOOD
Size (in MB) : 381468
Capacity (in bytes) : 400000000000
Firmware Version : 108.05.00.00
Devices: /dev/sdg /dev/sdj /dev/sdi /dev/sdh

Flash card 3 :
Overall health status : GOOD
Size (in MB) : 381468
Capacity (in bytes) : 400000000000
Firmware Version : 108.05.00.00
Devices: /dev/sdm /dev/sdk /dev/sdn /dev/sdl

Flash card 4 :
Overall health status : GOOD
Size (in MB) : 381468
Capacity (in bytes) : 400000000000
Firmware Version : 108.05.00.00
Devices: /dev/sdp /dev/sdo /dev/sdq /dev/sdr

Flash card 5 :
Overall health status : GOOD
Size (in MB) : 381468
Capacity (in bytes) : 400000000000
Firmware Version : 108.05.00.00
Devices: /dev/sdu /dev/sdt /dev/sdv /dev/sds

Flash card 6 :
Overall health status : GOOD
Size (in MB) : 381468
Capacity (in bytes) : 400000000000
Firmware Version : 108.05.00.00
Devices: /dev/sdx /dev/sdw /dev/sdy /dev/sdz
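The health check above can also be evaluated by a script rather than by reading the output. The following is a rough sketch that counts the "Overall health status" entries in the output of exalytics_CheckFlash.sh. The function names are hypothetical, and the parsing assumes the output wording shown in the sample above.

```shell
# count_cards_by_health STATUS
# Reads exalytics_CheckFlash.sh output on stdin and prints how many cards
# report the given status (for example GOOD or ERROR).
count_cards_by_health() {
  grep -o 'Overall health status *: *[A-Z]*' |
    awk -v want="$1" '$NF == want { n++ } END { print n + 0 }'
}

# flash_cards_ok EXPECTED_COUNT
# Succeeds only if exactly EXPECTED_COUNT cards report GOOD.
flash_cards_ok() {
  local expected="$1" good
  good=$(count_cards_by_health GOOD)
  [ "$good" -eq "$expected" ]
}
```

A possible invocation on an X2-4 or X3-4 Exalytics Machine, which has six Flash cards, is /opt/exalytics/bin/exalytics_CheckFlash.sh | flash_cards_ok 6, followed by a test of the exit status.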


2.4.3.2 Configuring Flash on an X4-4 Exalytics Machine

An X4-4 Exalytics Machine is configured with three Flash cards. Each Flash card has four 200 GB drives.

Depending on your requirements, you can configure Flash for the following combinations:

-

RAID10 or RAID05

-

EXT3 or EXT4 file system

Note:

Oracle recommends you configure Flash on the EXT4 file system.

To configure Flash:

-

Restart the Exalytics Machine using ILOM.

-

Log on to the ILOM web-based interface.

-

In the left pane of the ILOM, expand Host Management, then click Power Control. In the Select Action list, select Power Cycle, then click Save.

The Exalytics Machine restarts.

-

-

Enter the following command as a root user:

# /opt/exalytics/bin/configure_flash.sh --<RAID_TYPE> --<FS_TYPE>

For example:

-

To configure Flash for a RAID10 configuration, enter the following command:

# /opt/exalytics/bin/configure_flash.sh --RAID10 --EXT4

-

To configure Flash for a RAID05 configuration, enter the following command:

# /opt/exalytics/bin/configure_flash.sh --RAID05 --EXT4

Flash is configured for a RAID05 configuration as shown in Figure 2-3.

Note:

The following procedure assumes configuring Flash for a RAID10 configuration on an X4-4 Exalytics Machine.

The following warning, applicable only for software RAID, is displayed: "This flash configuration script will remove any existing RAID arrays, as well as remove any partitions on any flash drives and will create a new RAID array across all these flash drives. This will result in ALL DATA BEING LOST from these drives. Do you still want to proceed with this flash configuration script? (yes/no)"

-

-

Enter Yes at the prompt to continue running the script.

The script performs the following tasks:

-

Erases all existing RAID configuration on the Flash cards.

-

Distributes multiple RAID1 disks so that two parts of the RAID belong to different cards.

-

Stripes all Flash RAID1 drives into a single RAID0.

-

-

To verify that Flash has been configured correctly, enter the following command:

# df -h

The output should look similar to the following:

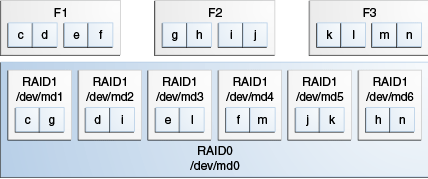

Filesystem                       Size  Used Avail Use% Mounted on
/dev/mapper/VolGroup00-LogVol00  989G   23G  915G   3% /
/dev/mapper/VolGroup01-LogVol00  3.2T   13G  3.0T   1% /u01
/dev/sda1                         99M   25M   70M  27% /boot
tmpfs                           1010G     0 1010G   0% /dev/shm
/dev/md0                         1.1T  199M  1.1T   1% /u02

Flash is configured as shown in Figure 2-2.

-

Each Flash card (F1, F2, and F3) has four 200 GB drives. Each drive maps to a device, such as /dev/sdg, /dev/sdh, /dev/sdi, /dev/sdj, and so on.

-

Two Flash drives are configured on RAID1 /dev/md1, two Flash drives are configured on RAID1 /dev/md2, two Flash drives are configured on RAID1 /dev/md3, and so on, for a total of six RAID1s.

-

The six RAID1s are configured on the parent RAID0 /dev/md0.

Figure 2-2 Flash Configuration on RAID10 for an X4-4 Exalytics Machine


-

-

To verify the status of Flash cards, enter the following command:

# /opt/exalytics/bin/exalytics_CheckFlash.sh

The output for all three Flash cards should look similar to the following:

Checking Exalytics Flash Drive Status

Fetching some info on installed flash drives ....
Driver version : 01.250.41.04 (2012.06.04)

Supported number of flash drives detected (3)

Flash card 1 :
Overall health status : GOOD
Size (in MB) : 762936
Capacity (in bytes) : 800000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdc /dev/sdd /dev/sde /dev/sdf

Flash card 2 :
Overall health status : GOOD
Size (in MB) : 762936
Capacity (in bytes) : 800000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdj /dev/sdh /dev/sdg /dev/sdi

Flash card 3 :
Overall health status : GOOD
Size (in MB) : 762936
Capacity (in bytes) : 800000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdl /dev/sdm /dev/sdk /dev/sdn

Summary:
Healthy flash drives : 3
Broken flash drives : 0
Pass : Flash card health check passed

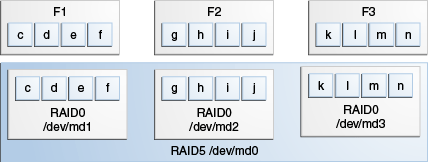

You can similarly configure Flash on RAID05. Flash is configured on RAID05 as shown in Figure 2-3.

Figure 2-3 Flash Configuration on RAID05 for an X4-4 Exalytics Machine
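For either RAID type, the df verification used in the procedure above can be scripted. The following sketch reports which device backs a given mount point. The function name mount_source is hypothetical. It reads the output of df -P rather than df -h, because the POSIX format keeps each file system on a single line, and it assumes that the mount point contains no spaces.

```shell
# mount_source MOUNT_POINT
# Reads `df -P` output on stdin and prints the device mounted at MOUNT_POINT.
# Prints nothing if the mount point is not listed.
mount_source() {
  awk -v mp="$1" 'NR > 1 && $NF == mp { print $1 }'
}
```

In the sample output in this section, the Flash array /dev/md0 is mounted on /u02, so df -P | mount_source /u02 would be expected to print /dev/md0 on a machine that is configured the same way.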



2.4.3.3 Configuring Flash on an X6-4 and X5-4 Exalytics Machine

An X6-4 Exalytics Machine is configured with either three 3.2TB F320 Flash cards for a total of 9.6TB or three 6.4TB F640 Flash cards for a total of 19.2TB. An X5-4 Exalytics Machine is configured with three 1.6TB Flash cards for a total of 4.8TB.

Depending on your requirements, you can configure Flash for the following combinations:

-

RAID10 or RAID05

-

EXT3 or EXT4 file system

Note:

Oracle recommends you configure Flash on the EXT4 file system.

To configure Flash:


2.4.4 Replacing a Defective Flash Card

This section consists of the following topics:

2.4.4.1 Replacing a Defective Flash Card on an X2-4 or X3-4 Exalytics Machine

An X2-4 or X3-4 Exalytics Machine is configured with six Flash cards. Each Flash card contains four devices. When one device on a Flash card fails, the entire Flash card becomes defective and has to be replaced. The following procedure assumes that a defective device (/dev/sdb) is mapped to a Flash card that is configured on the parent RAID0 /dev/md0 (see Figure 2-1).

To replace a failed or defective Flash card on an X2-4 or X3-4 Exalytics Machine:

-

To verify the status of Flash cards, enter the following command:

# /opt/exalytics/bin/exalytics_CheckFlash.sh

If a defective Flash card is detected, the output displays an "Overall health status : ERROR" message, and the summary indicates the defective card and the location of the Peripheral Component Interconnect Express (PCIe) card.

The output should look similar to the following:

Checking Exalytics Flash Drive Status

Fetching some info on installed flash drives ....
Driver version : 01.250.41.04 (2012.06.04)

Supported number of flash drives detected (6)

Flash card 1 :
Slot number: 1
Overall health status : ERROR. Use --detail for more info
Size (in MB) : 286101
Capacity (in bytes) : 300000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdc /dev/sdd /dev/sda

Flash card 2 :
Slot number: 2
Overall health status : GOOD
Size (in MB) : 381468
Capacity (in bytes) : 400000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdh /dev/sdg /dev/sdf /dev/sde

Flash card 3 :
Slot number: 3
Overall health status : GOOD
Size (in MB) : 381468
Capacity (in bytes) : 400000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdl /dev/sdk /dev/sdj /dev/sdi

Flash card 4 :
Slot number: 5
Overall health status : GOOD
Size (in MB) : 381468
Capacity (in bytes) : 400000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdp /dev/sdn /dev/sdo /dev/sdm

Flash card 5 :
Slot number: 7
Overall health status : GOOD
Size (in MB) : 381468
Capacity (in bytes) : 400000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdt /dev/sds /dev/sdq /dev/sdr

Flash card 6 :
Slot number: 8
Overall health status : GOOD
Size (in MB) : 381468
Capacity (in bytes) : 400000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdu /dev/sdx /dev/sdv /dev/sdw

Raid Array Info (/dev/md0):
/dev/md0: raid0 12 devices, 0 spares. Use mdadm --detail for more detail.

Summary:
Healthy flash drives : 5
Broken flash drives : 1
Fail : Flash card health check failed. See above for more details.

Note the following:

-

Device /dev/sdb has failed and is no longer seen by Flash card 1. Make a note of the devices assigned to this card so you can check them later against the devices assigned to the replaced Flash card.

-

Slot number is the location of the PCIe card.

Note:

A defective Flash card can also be identified by an amber or red LED. Check the back panel of the Exalytics Machine to confirm the location of the defective Flash card.

-

-

Shut down and unplug the Exalytics Machine.

-

Replace the defective Flash card.

-

Restart the Exalytics Machine.

Note:

You can also start the Exalytics Machine using Integrated Lights Out Manager (ILOM).

-

To confirm that all Flash cards are now working and to confirm the RAID configuration, enter the following command:

# /opt/exalytics/bin/exalytics_CheckFlash.sh

The output should look similar to the following:

Checking Exalytics Flash Drive Status

Fetching some info on installed flash drives ....
Driver version : 01.250.41.04 (2012.06.04)

Supported number of flash drives detected (6)

Flash card 1 :
Slot number: 1
Overall health status : GOOD
Size (in MB) : 381468
Capacity (in bytes) : 400000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdd /dev/sda /dev/sdb /dev/sdc

Flash card 2 :
Slot number: 2
Overall health status : GOOD
Size (in MB) : 381468
Capacity (in bytes) : 400000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdh /dev/sdg /dev/sdf /dev/sde

Flash card 3 :
Slot number: 3
Overall health status : GOOD
Size (in MB) : 381468
Capacity (in bytes) : 400000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdi /dev/sdk /dev/sdl /dev/sdj

Flash card 4 :
Slot number: 5
Overall health status : GOOD
Size (in MB) : 381468
Capacity (in bytes) : 400000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdm /dev/sdo /dev/sdp /dev/sdn

Flash card 5 :
Slot number: 7
Overall health status : GOOD
Size (in MB) : 381468
Capacity (in bytes) : 400000000000
Firmware Version : 109.05.26.00
Devices: /dev/sds /dev/sdt /dev/sdr /dev/sdq

Flash card 6 :
Slot number: 8
Overall health status : GOOD
Size (in MB) : 381468
Capacity (in bytes) : 400000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdw /dev/sdx /dev/sdv /dev/sdu

Raid Array Info (/dev/md0):
/dev/md0: 1116.08GiB raid0 12 devices, 0 spares. Use mdadm --detail for more detail.

Summary:
Healthy flash drives : 6
Broken flash drives : 0
Pass : Flash card health check passed

Note the following:

-

New devices mapped to Flash card 1 are displayed.

-

In this example, the same devices (/dev/sdd, /dev/sda, /dev/sdb, /dev/sdc) are mapped to Flash card 1. If different devices are mapped to Flash card 1, you must use the new devices when re-assembling RAID1.

-

Since the replaced Flash card contains four new flash devices, the RAID configuration shows four missing RAID1 devices (one for each flash device on the replaced Flash Card). You must re-assemble RAID1 to enable the RAID1 configuration to recognize the new devices on the replaced Flash card.

Note:

A working Flash card can also be identified by the green LED. Check the back panel of the Exalytics Machine to confirm that the LED of the replaced Flash card is green.

-

-

Re-assemble RAID1, by performing the following tasks:

-

To display all devices on the RAID1 configuration, enter the following command:

# cat /proc/mdstat

The output should look similar to the following:

Personalities : [raid1] [raid0]
md0 : active raid0 md12[11] md7[6] md1[0] md8[7] md5[4] md11[10] md10[9] md9[8] md3[2] md6[5] md2[1] md4[3]
      1170296832 blocks super 1.2 512k chunks

md1 : active raid1 sdm[1]
      97590656 blocks super 1.2 [2/1] [_U]

md2 : active raid1 sdo[1]
      97590656 blocks super 1.2 [2/1] [_U]

md4 : active raid1 sdp[1]
      97590656 blocks super 1.2 [2/1] [_U]

md3 : active raid1 sdn[1]
      97590656 blocks super 1.2 [2/1] [_U]

md8 : active raid1 sdq[1] sdh[0]
      97590656 blocks super 1.2 [2/2] [UU]

md12 : active raid1 sdw[1] sdi[0]
      97590656 blocks super 1.2 [2/2] [UU]

md6 : active raid1 sdt[1] sdg[0]
      97590656 blocks super 1.2 [2/2] [UU]

md9 : active raid1 sdx[1] sdk[0]
      97590656 blocks super 1.2 [2/2] [UU]

md10 : active raid1 sdv[1] sdl[0]
      97590656 blocks super 1.2 [2/2] [UU]

md5 : active raid1 sdr[1] sdf[0]
      97590656 blocks super 1.2 [2/2] [UU]

md11 : active raid1 sdu[1] sdj[0]
      97590656 blocks super 1.2 [2/2] [UU]

md7 : active raid1 sds[1] sde[0]
      97590656 blocks super 1.2 [2/2] [UU]

unused devices: <none>

Note the following:

-

Four md devices (md1, md2, md3, and md4) have a single sd device assigned.

-

The second line for these devices ends with a notation similar to [2/1] [_U].

-

-

To check the correct configuration of the md devices, enter the following command:

# cat /etc/mdadm.conf

The output should look similar to the following:

ARRAY /dev/md1 level=raid1 num-devices=2 metadata=1.2 name=localhost.localdomain:1 UUID=d1e5378a:80eb2f97:2e7dd315:c08fc304 devices=/dev/sdc,/dev/sdm
ARRAY /dev/md2 level=raid1 num-devices=2 metadata=1.2 name=localhost.localdomain:2 UUID=8226f5c2:e79dbd57:1976a29b:46a7dce1 devices=/dev/sdb,/dev/sdo
ARRAY /dev/md3 level=raid1 num-devices=2 metadata=1.2 name=localhost.localdomain:3 UUID=79c16c90:eb67f53b:a1bdc83c:b717ebb7 devices=/dev/sda,/dev/sdn
ARRAY /dev/md4 level=raid1 num-devices=2 metadata=1.2 name=localhost.localdomain:4 UUID=2b1d05bf:41447b90:40c84863:99c5b4c6 devices=/dev/sdd,/dev/sdp
ARRAY /dev/md5 level=raid1 num-devices=2 metadata=1.2 name=localhost.localdomain:5 UUID=bbf1ce46:6e494aaa:eebae242:c0a56944 devices=/dev/sdf,/dev/sdr
ARRAY /dev/md6 level=raid1 num-devices=2 metadata=1.2 name=localhost.localdomain:6 UUID=76257b58:ff74573a:a1ffef2b:12976a80 devices=/dev/sdg,/dev/sdt
ARRAY /dev/md7 level=raid1 num-devices=2 metadata=1.2 name=localhost.localdomain:7 UUID=fde5cab2:e6ea51bd:2d2bd481:aa834fb9 devices=/dev/sde,/dev/sds
ARRAY /dev/md8 level=raid1 num-devices=2 metadata=1.2 name=localhost.localdomain:8 UUID=9ed9b987:b8f52cc0:580485fb:105bf381 devices=/dev/sdh,/dev/sdq
ARRAY /dev/md9 level=raid1 num-devices=2 metadata=1.2 name=localhost.localdomain:9 UUID=2e6ec418:ab1d29f6:4434431d:01ac464f devices=/dev/sdk,/dev/sdx
ARRAY /dev/md10 level=raid1 num-devices=2 metadata=1.2 name=localhost.localdomain:10 UUID=01584764:aa8ea502:fd00307a:c897162e devices=/dev/sdl,/dev/sdv
ARRAY /dev/md11 level=raid1 num-devices=2 metadata=1.2 name=localhost.localdomain:11 UUID=67fd1bb3:348fd8ce:1a512065:906bc583 devices=/dev/sdj,/dev/sdu
ARRAY /dev/md12 level=raid1 num-devices=2 metadata=1.2 name=localhost.localdomain:12 UUID=6bb498e0:26aca961:78029fe9:e3c8a546 devices=/dev/sdi,/dev/sdw
ARRAY /dev/md0 level=raid0 num-devices=12 metadata=1.2 name=localhost.localdomain:0 UUID=7edc1371:e8355571:c7c2f504:8a94a70f
   devices=/dev/md1,/dev/md10,/dev/md11,/dev/md12,/dev/md2,/dev/md3,/dev/md4,/dev/md5,/dev/md6,/dev/md7,/dev/md8,/dev/md9

Note the correct configuration of the following devices:

-

md1 device contains the /dev/sdc and /dev/sdm sd devices

-

md2 device contains the /dev/sdb and /dev/sdo sd devices

-

md3 device contains the /dev/sda and /dev/sdn sd devices

-

md4 device contains the /dev/sdd and /dev/sdp sd devices

-

-

To add the missing sd devices (/dev/sdc, /dev/sdb, /dev/sda, and /dev/sdd) to the four md devices (md1, md2, md3, and md4 respectively), enter the following commands:

# mdadm /dev/md1 --add /dev/sdc

The sd device /dev/sdc is added to the md1 device. The output should look similar to the following:

mdadm: added /dev/sdc

# mdadm /dev/md2 --add /dev/sdb

The sd device /dev/sdb is added to the md2 device. The output should look similar to the following:

mdadm: added /dev/sdb

# mdadm /dev/md3 --add /dev/sda

The sd device /dev/sda is added to the md3 device. The output should look similar to the following:

mdadm: added /dev/sda

# mdadm /dev/md4 --add /dev/sdd

The sd device /dev/sdd is added to the md4 device. The output should look similar to the following:

mdadm: added /dev/sdd

RAID1 starts rebuilding automatically.
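The comparison between /etc/mdadm.conf and /proc/mdstat that leads to the commands above can be sketched as a script. The function name missing_member_cmds is hypothetical. The sketch only prints candidate mdadm --add commands and does not run them. It assumes that the devices= entries in mdadm.conf still name the correct devices. If different devices are mapped to the replaced Flash card, the printed commands must be adjusted to use the new devices.

```shell
# missing_member_cmds MDADM_CONF MDSTAT
# Prints one "mdadm /dev/mdN --add /dev/sdX" line for each RAID1 member that
# is listed in MDADM_CONF but is not active in MDSTAT.
# Hypothetical helper: review the printed commands before running any of them.
missing_member_cmds() {
  local conf="$1" mdstat="$2"
  awk '
    # First file (mdstat): remember the active members of each md array.
    FNR == NR {
      if ($1 ~ /^md[0-9]+$/ && $2 == ":") {
        for (i = 3; i <= NF; i++) {
          dev = $i
          sub(/\[.*/, "", dev)          # sdm[1] -> sdm
          active["/dev/" $1, "/dev/" dev] = 1
        }
      }
      next
    }
    # Second file (mdadm.conf): a new ARRAY entry resets the current level.
    $1 == "ARRAY" { array = $2; level = "" }
    {
      for (i = 1; i <= NF; i++) {
        if ($i ~ /^level=/) level = substr($i, 7)
        if ($i ~ /^devices=/ && level == "raid1") {
          n = split(substr($i, 9), devs, ",")
          for (j = 1; j <= n; j++)
            if (!((array, devs[j]) in active))
              print "mdadm " array " --add " devs[j]
        }
      }
    }
  ' "$mdstat" "$conf"
}
```

A possible invocation is missing_member_cmds /etc/mdadm.conf /proc/mdstat. With the sample files shown in this procedure, the output would be expected to consist of the four mdadm commands listed above.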

-

To check the recovery progress, enter the following command:

# cat /proc/mdstat

Monitor the output and confirm that the process completes. The output should look similar to the following:

Personalities : [raid1] [raid0]
md0 : active raid0 md12[11] md7[6] md1[0] md8[7] md5[4] md11[10] md10[9] md9[8] md3[2] md6[5] md2[1] md4[3]
      1170296832 blocks super 1.2 512k chunks

md1 : active raid1 sdc[2] sdm[1]
      97590656 blocks super 1.2 [2/1] [_U]
      [==>..................] recovery = 10.4% (10244736/97590656) finish=7.0min speed=206250K/sec

md2 : active raid1 sdb[2] sdo[1]
      97590656 blocks super 1.2 [2/1] [_U]
      [=>...................] recovery = 8.1% (8000000/97590656) finish=7.4min speed=200000K/sec

md4 : active raid1 sdd[2] sdp[1]
      97590656 blocks super 1.2 [2/1] [_U]
      [>....................] recovery = 2.7% (2665728/97590656) finish=7.7min speed=205056K/sec

md3 : active raid1 sda[2] sdn[1]
      97590656 blocks super 1.2 [2/1] [_U]
      [=>...................] recovery = 6.3% (6200064/97590656) finish=7.3min speed=206668K/sec

md8 : active raid1 sdq[1] sdh[0]
      97590656 blocks super 1.2 [2/2] [UU]

md12 : active raid1 sdw[1] sdi[0]
      97590656 blocks super 1.2 [2/2] [UU]

md6 : active raid1 sdt[1] sdg[0]
      97590656 blocks super 1.2 [2/2] [UU]

md9 : active raid1 sdx[1] sdk[0]
      97590656 blocks super 1.2 [2/2] [UU]

md10 : active raid1 sdv[1] sdl[0]
      97590656 blocks super 1.2 [2/2] [UU]

md5 : active raid1 sdr[1] sdf[0]
      97590656 blocks super 1.2 [2/2] [UU]

md11 : active raid1 sdu[1] sdj[0]
      97590656 blocks super 1.2 [2/2] [UU]

md7 : active raid1 sds[1] sde[0]
      97590656 blocks super 1.2 [2/2] [UU]

unused devices: <none>
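Instead of re-running cat /proc/mdstat by hand, the recovery can be polled by a script. The following is a minimal sketch with hypothetical function names. It treats an array as degraded when its status field contains an underscore, such as [_U], and it assumes the usual /proc/mdstat layout in which the array name and the status field appear on separate lines.

```shell
# degraded_arrays
# Reads /proc/mdstat content on stdin and prints the name of every md array
# whose member status field contains "_" (for example [_U]).
degraded_arrays() {
  awk '
    /^md[0-9]+ :/ { name = $1 }
    /blocks/ && /\[[U_]*_[U_]*\]/ { print name }
  '
}

# wait_for_rebuild [MDSTAT_FILE] [INTERVAL_SECONDS]
# Polls until no array is degraded. An array that is degraded but not
# rebuilding keeps this loop running, so interrupt it if no progress is shown.
wait_for_rebuild() {
  local mdstat="${1:-/proc/mdstat}" interval="${2:-30}"
  while [ -n "$(degraded_arrays < "$mdstat")" ]; do
    echo "still degraded: $(degraded_arrays < "$mdstat" | tr '\n' ' ')"
    sleep "$interval"
  done
}
```

Running wait_for_rebuild with no arguments after the mdadm --add commands returns once every RAID1 array reports [UU].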

-


2.4.4.2 Replacing a Defective Flash Card on an X4-4 Exalytics Machine

Each X4-4 Exalytics Machine is configured with three Flash cards. Each Flash card contains four devices. When one device on a Flash card fails, the entire Flash card becomes defective and has to be replaced. Depending on the RAID configuration, you can replace a defective Flash card on a RAID10 or RAID05 configuration.

This section consists of the following topics:

- Replacing a Defective Flash Card on a RAID10 Configuration

- Replacing a Defective Flash Card on a RAID05 Configuration


2.4.4.2.1 Replacing a Defective Flash Card on a RAID10 Configuration

For a RAID10 configuration, the following procedure assumes that a defective device (/dev/sdb) is mapped to a Flash card that is configured on the parent RAID0 /dev/md0 (see Figure 2-2).

To replace a failed or defective Flash card on a RAID10 configuration:

-

To verify the status of Flash cards, enter the following command:

Output for X2-4, X3-4 and X4-4

# /opt/exalytics/bin/exalytics_CheckFlash.sh

If a defective Flash card is detected, the output displays an "Overall health status : ERROR" message, and the summary indicates the defective card and the location of the Peripheral Component Interconnect Express (PCIe) card.

The output should look similar to the following:

Checking Exalytics Flash Drive Status

Fetching some info on installed flash drives ....
Driver version: 01.250.41.04 (2012.06.04)

Supported number of flash drives detected (3)

Flash card 1 :
Slot number: 6
Overall health status : ERROR. Use --detail for more info
Size (in MB) : 572202
Capacity (in bytes) : 600000000000
Firmware Version : 109.05.26.00
Devices: /dev/sde /dev/sdc /dev/sdf

Flash card 2 :
Slot number: 7
Overall health status : GOOD
Size (in MB) : 762936
Capacity (in bytes) : 800000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdh /dev/sdj /dev/sdg /dev/sdi

Flash card 3 :
Slot number: 10
Overall health status : GOOD
Size (in MB) : 762936
Capacity (in bytes) : 800000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdn /dev/sdl /dev/sdk /dev/sdm

Raid Array Info (/dev/md0):
/dev/md0: 1116.08GiB raid0 6 devices, 0 spares. Use mdadm --detail for more detail.

Summary:
Healthy flash drives : 2
Broken flash drives : 1
Fail : Flash card health check failed. See above for more details.

Output for X5-4 and X6-4

# /opt/exalytics/bin/exalytics_CheckFlash.sh

If a defective Flash card is detected, the output displays an "Overall health status : ERROR" message, and the summary indicates the defective card and the location of the Peripheral Component Interconnect Express (PCIe) card.

The output should look similar to the following:

Checking Exalytics Flash Drive Status
cardindex=0 cIndex=0
Driver version not available
Supported number of flash drives detected (3)

Flash card 1 :
Slot number: 0
Overall health status : GOOD
Capacity (in bytes) : 819364513185792
Firmware Version : SUNW-NVME-1
PCI Vendor ID: 8086
Serial Number: CVMD421500421P6DGN
Model Number: INTEL SSDPEDME016T4
Firmware Revision: 8DV10054
Number of Namespaces: 1
cardindex=1 cIndex=1
*********************************************************************
nvme0n1p1
*********************************************************************
Devices: /dev/nvme0n1p1 /dev/nvme0n1p2 /dev/nvme0n1p3 /dev/nvme0n1p4

Flash card 2 :
Slot number: 0
Overall health status : GOOD
Capacity (in bytes) : 819364513185792
Firmware Version : SUNW-NVME-2
PCI Vendor ID: 8086
Serial Number: CVMD421600081P6DGN
Model Number: INTEL SSDPEDME016T4
Firmware Revision: 8DV10054
Number of Namespaces: 1
cardindex=2 cIndex=2
*********************************************************************
nvme1n1p1
*********************************************************************
Devices: /dev/nvme1n1p1 /dev/nvme1n1p2 /dev/nvme1n1p3 /dev/nvme1n1p4

Flash card 3 :
Slot number: 0
Overall health status : GOOD
Capacity (in bytes) : 819364513185792
Firmware Version : SUNW-NVME-3
PCI Vendor ID: 8086
Serial Number: CVMD421600021P6DGN
Model Number: INTEL SSDPEDME016T4
Firmware Revision: 8DV10054
Number of Namespaces: 1
cardindex=3 cIndex=3
*********************************************************************
nvme2n1p1
*********************************************************************
Devices: /dev/nvme2n1p1 /dev/nvme2n1p2 /dev/nvme2n1p3 /dev/nvme2n1p4

Raid Array Info (/dev/md0):
mdadm: cannot open /dev/md0: No such file or directory

Summary:
Healthy flash drives : 3
Broken flash drives : 0
Pass : Flash card health check passed.

Note the following:

-

Device /dev/sdb has failed and is no longer seen by Flash card 1. Make note of the devices assigned to this card so you can check them later against the devices assigned to the replaced Flash card.

-

Slot number is the location of the PCIe card.

Note:

A defective Flash card can also be identified by an amber or red LED. Check the back panel of the Exalytics Machine to confirm the location of the defective Flash card.

-

-

Shut down and unplug the Exalytics Machine.

-

Replace the defective Flash card.

-

Restart the Exalytics Machine.

Note:

You can also start the Exalytics Machine using Integrated Lights Out Manager (ILOM).

-

To confirm that all Flash cards are now working and to confirm the RAID configuration, enter the following command:

Output for X2-4, X3-4 and X4-4

# /opt/exalytics/bin/exalytics_CheckFlash.sh

The output should look similar to the following:

Checking Exalytics Flash Drive Status

Fetching some info on installed flash drives ....
Driver version: 01.250.41.04 (2012.06.04)

Supported number of flash drives detected (3)

Flash card 1 :
Slot number: 6
Overall health status : GOOD
Size (in MB) : 762936
Capacity (in bytes) : 800000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdd /dev/sdf /dev/sdc /dev/sde

Flash card 2 :
Slot number: 7
Overall health status : GOOD
Size (in MB) : 762936
Capacity (in bytes) : 800000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdi /dev/sdj /dev/sdh /dev/sdg

Flash card 3 :
Slot number: 10
Overall health status : GOOD
Size (in MB) : 762936
Capacity (in bytes) : 800000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdn /dev/sdm /dev/sdl /dev/sdk

Raid Array Info (/dev/md0):
/dev/md0: raid0 6 devices, 0 spares. Use mdadm --detail for more detail.

Summary:
Healthy flash drives : 3
Broken flash drives : 0
Pass : Flash card health check passed

Output for X5-4 and X6-4

# /opt/exalytics/bin/exalytics_CheckFlash.sh

The output should look similar to the following:

Checking Exalytics Flash Drive Status
cardindex=0 cIndex=0
Fetching some info on installed flash drives ....
Driver version not available
Supported number of flash drives detected (3)

Flash card 1 :
Slot number: 0
Overall health status : GOOD
Capacity (in bytes) : 819364513185792
Firmware Version : SUNW-NVME-1
PCI Vendor ID: 8086
Serial Number: CVMD421500421P6DGN
Model Number: INTEL SSDPEDME016T4
Firmware Revision: 8DV10054
Number of Namespaces: 1
cardindex=1 cIndex=1
*********************************************************************
nvme0n1p1
*********************************************************************
Devices: /dev/nvme0n1p1 /dev/nvme0n1p2 /dev/nvme0n1p3 /dev/nvme0n1p4

Flash card 2 :
Slot number: 0
Overall health status : GOOD
Capacity (in bytes) : 819364513185792
Firmware Version : SUNW-NVME-2
PCI Vendor ID: 8086
Serial Number: CVMD421600081P6DGN
Model Number: INTEL SSDPEDME016T4
Firmware Revision: 8DV10054
Number of Namespaces: 1
cardindex=2 cIndex=2
*********************************************************************
nvme1n1p1
*********************************************************************
Devices: /dev/nvme1n1p1 /dev/nvme1n1p2 /dev/nvme1n1p3 /dev/nvme1n1p4

Flash card 3 :
Slot number: 0
Overall health status : GOOD
Capacity (in bytes) : 819364513185792
Firmware Version : SUNW-NVME-3
PCI Vendor ID: 8086
Serial Number: CVMD421600021P6DGN
Model Number: INTEL SSDPEDME016T4
Firmware Revision: 8DV10054
Number of Namespaces: 1
cardindex=3 cIndex=3
*********************************************************************
nvme2n1p1
*********************************************************************
Devices: /dev/nvme2n1p1 /dev/nvme2n1p2 /dev/nvme2n1p3 /dev/nvme2n1p4

Raid Array Info (/dev/md0):
mdadm: cannot open /dev/md0: No such file or directory.

Summary:
Healthy flash drives : 3
Broken flash drives : 0
Pass : Flash card health check passed

Note the following:

-

New devices mapped to Flash card 1 are displayed.

-

The RAID10 configuration shows /dev/md0 as a RAID0 with six devices. See Figure 2-2.

-

In this example, new devices /dev/sdd, /dev/sdf, /dev/sdc, and /dev/sde are mapped to Flash card 1.

-

Since the replaced Flash card contains four new flash devices, the RAID configuration shows four missing devices (one for each flash device on the replaced Flash Card). You must re-assemble RAID1 to enable the RAID configuration to recognize the new devices on the replaced Flash card.

Note:

A working Flash card can also be identified by its green LED. Check the back panel of the Exalytics Machine to confirm that the LED of the replaced Flash card is green.

-

-

Re-assemble RAID1 by performing the following tasks:

-

To display all devices on the RAID1 configuration, enter the following command:

# cat /proc/mdstat

The output should look similar to the following:

Personalities : [raid1] [raid0]
md3 : active (auto-read-only) raid1 sdm[1]
      195181376 blocks super 1.2 [2/1] [_U]
      bitmap: 0/2 pages [0KB], 65536KB chunk

md4 : active (auto-read-only) raid1 sdk[1]
      195181376 blocks super 1.2 [2/1] [_U]
      bitmap: 0/2 pages [0KB], 65536KB chunk

md2 : active (auto-read-only) raid1 sdi[1]
      195181376 blocks super 1.2 [2/1] [_U]
      bitmap: 0/2 pages [0KB], 65536KB chunk

md6 : active raid1 sdn[1] sdj[0]
      195181376 blocks super 1.2 [2/2] [UU]
      bitmap: 0/2 pages [0KB], 65536KB chunk

md0 : inactive md6[5] md5[4] md1[0]
      585149952 blocks super 1.2

md1 : active raid1 sdh[1]
      195181376 blocks super 1.2 [2/1] [_U]
      bitmap: 1/2 pages [4KB], 65536KB chunk

md5 : active raid1 sdl[1] sdg[0]
      195181376 blocks super 1.2 [2/2] [UU]
      bitmap: 0/2 pages [0KB], 65536KB chunk

unused devices: <none>

Note the following:

-

Four md devices (md3, md4, md2, and md1) have a single sd device assigned.

-

The second line for these devices ends with a notation similar to [2/1] [_U].
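The [2/1] [_U] notation can also be checked mechanically. The following is a minimal sketch, not part of the Exalytics tooling: the function name degraded_arrays is hypothetical, and the sketch assumes the usual multi-line /proc/mdstat layout in which the status field appears on the line after the md device name.

```shell
# List md arrays whose member-status field (for example [_U]) contains "_",
# meaning at least one member device is missing.
# Reads mdstat-style text from the file given as $1 (default: /proc/mdstat).
degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1 }                           # remember the current array
        /\[[0-9]+\/[0-9]+\] \[[U_]*_[U_]*\]/ { print name }   # status shows a missing member
    ' "${1:-/proc/mdstat}"
}
```

Running degraded_arrays with no argument reads the live /proc/mdstat; for the example output above it would be expected to list md3, md4, md2, and md1.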

-

-

To check the correct configuration of the md devices, enter the following command:

# cat /etc/mdadm.conf

The output should look similar to the following:

ARRAY /dev/md1 level=raid1 num-devices=2 metadata=1.2 name=localhost.localdomain:1 UUID=2022e872:f5beb736:5a2f10a7:27b209fc
   devices=/dev/sdd,/dev/sdh
ARRAY /dev/md2 level=raid1 num-devices=2 metadata=1.2 name=localhost.localdomain:2 UUID=b4aff5bf:18d7ab45:3a9ba04e:a8a56288
   devices=/dev/sde,/dev/sdi
ARRAY /dev/md3 level=raid1 num-devices=2 metadata=1.2 name=localhost.localdomain:3 UUID=4e54d5d1:51314c65:81c00e25:030edd38
   devices=/dev/sdc,/dev/sdm
ARRAY /dev/md4 level=raid1 num-devices=2 metadata=1.2 name=localhost.localdomain:4 UUID=f8a533ed:e195f83a:9dcc86eb:0427a897
   devices=/dev/sdf,/dev/sdk
ARRAY /dev/md5 level=raid1 num-devices=2 metadata=1.2 name=localhost.localdomain:5 UUID=ec7eb621:35b9233f:049a5e1b:987e7d72
   devices=/dev/sdg,/dev/sdl
ARRAY /dev/md6 level=raid1 num-devices=2 metadata=1.2 n

Note the correct configuration of the following devices:

-

md1 device contains the /dev/sdd and /dev/sdh sd devices

-

md2 device contains the /dev/sde and /dev/sdi sd devices

-

md3 device contains the /dev/sdc and /dev/sdm sd devices

-

md4 device contains the /dev/sdf and /dev/sdk sd devices
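The mapping between md devices and sd devices can be read out of the configuration file rather than by eye. The sketch below is illustrative only: array_members and readd_command are hypothetical helper names, and the code assumes the ARRAY/devices= layout shown in the example output. It prints the mdadm command instead of running it, so that you can review it first.

```shell
# Print "<array> <device>" pairs found in an mdadm.conf-style file
# ($1, default: /etc/mdadm.conf).
array_members() {
    awk '
        {
            for (i = 1; i <= NF; i++) {
                if ($i == "ARRAY") {
                    array = $(i + 1)                       # e.g. /dev/md1
                } else if ($i ~ /^devices=/) {
                    n = split(substr($i, 9), devs, ",")    # strip "devices="
                    for (j = 1; j <= n; j++) print array, devs[j]
                }
            }
        }
    ' "${1:-/etc/mdadm.conf}"
}

# Print (do not run) the command that would add device $1 back to the
# array that lists it in the configuration file $2.
readd_command() {
    array_members "${2:-/etc/mdadm.conf}" |
        awk -v dev="$1" '$2 == dev { print "mdadm " $1 " --add " $2 }'
}
```

For example, with the configuration shown above, readd_command /dev/sdd would be expected to print the same mdadm /dev/md1 --add /dev/sdd command that this procedure uses.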

-

-

To add the missing sd devices (/dev/sdd, /dev/sde, /dev/sdc, and /dev/sdf) to each of the four md devices (md1, md2, md3, and md4 respectively), enter the following commands:

# mdadm /dev/md1 --add /dev/sdd

The sd device /dev/sdd is added to the md1 device. The output should look similar to the following:

mdadm: added /dev/sdd

# mdadm /dev/md2 --add /dev/sde

The sd device /dev/sde is added to the md2 device. The output should look similar to the following:

mdadm: added /dev/sde

# mdadm /dev/md3 --add /dev/sdc

The sd device /dev/sdc is added to the md3 device. The output should look similar to the following:

mdadm: added /dev/sdc

# mdadm /dev/md4 --add /dev/sdf

The sd device /dev/sdf is added to the md4 device. The output should look similar to the following:

mdadm: added /dev/sdf

RAID1 starts rebuilding automatically.

-

To check the recovery progress, enter the following command:

# cat /proc/mdstat

Monitor the output and confirm that the process completes. The output should look similar to the following:

Personalities : [raid1] [raid0]
md3 : active raid1 sdc[2] sdm[1]
      195181376 blocks super 1.2 [2/1] [_U]
      [>....................] recovery = 1.8% (3656256/195181376) finish=15.7min speed=203125K/sec
      bitmap: 0/2 pages [0KB], 65536KB chunk

md4 : active raid1 sdf[2] sdk[1]
      195181376 blocks super 1.2 [2/1] [_U]
      [>....................] recovery = 1.2% (2400000/195181376) finish=16.0min speed=200000K/sec
      bitmap: 0/2 pages [0KB], 65536KB chunk

md2 : active raid1 sde[2] sdi[1]
      195181376 blocks super 1.2 [2/1] [_U]
      [>....................] recovery = 3.4% (6796736/195181376) finish=15.2min speed=205961K/sec
      bitmap: 0/2 pages [0KB], 65536KB chunk

md6 : active raid1 sdn[1] sdj[0]
      195181376 blocks super 1.2 [2/2] [UU]
      bitmap: 0/2 pages [0KB], 65536KB chunk

md0 : inactive md6[5] md5[4] md1[0]
      585149952 blocks super 1.2

md1 : active raid1 sdd[2] sdh[1]
      195181376 blocks super 1.2 [2/1] [_U]
      [>....................] recovery = 4.4% (8739456/195181376) finish=15.0min speed=206062K/sec
      bitmap: 1/2 pages [4KB], 65536KB chunk

md5 : active raid1 sdl[1] sdg[0]
      195181376 blocks super 1.2 [2/2] [UU]
      bitmap: 0/2 pages [0KB], 65536KB chunk

unused devices: <none>
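When several arrays rebuild at once, it can help to reduce the output to one line per array. The following sketch assumes the multi-line /proc/mdstat layout, with the recovery line following the md device line; the function name recovery_progress is hypothetical.

```shell
# Print "<array> <percent>" for every array that reports a recovery in progress.
# Reads mdstat-style text from the file given as $1 (default: /proc/mdstat).
recovery_progress() {
    awk '
        /^md[0-9]+ :/ { name = $1 }            # remember the current array
        /recovery =/ {
            for (i = 2; i < NF; i++)           # the percentage follows "recovery ="
                if ($i == "=" && $(i - 1) == "recovery") print name, $(i + 1)
        }
    ' "${1:-/proc/mdstat}"
}
```

An empty result means that no array is reporting a recovery; confirm with cat /proc/mdstat that every RAID1 device shows [2/2] [UU] before treating the rebuild as complete.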

-

2.4.4.2.2 Replacing a Defective Flash Card on a RAID05 Configuration

For a RAID05 configuration, the following procedure assumes that a defective device (/dev/sdb) is mapped to a Flash card installed on the parent RAID5 /dev/md0. See Figure 2-3.

-

To verify the status of Flash cards, enter the following command:

# /opt/exalytics/bin/exalytics_CheckFlash.sh

If a defective Flash card is detected, the output displays an "Overall health status : ERROR" message for that card, and the summary indicates the defective card and the location of the Peripheral Component Interconnect Express (PCIe) card.

The output should look similar to the following:

Checking Exalytics Flash Drive Status
Fetching some info on installed flash drives ....
Driver version : 01.250.41.04 (2012.06.04)
Supported number of flash drives detected (3)

Flash card 1 :
Slot number: 6
Overall health status : ERROR. Use --detail for more info
Size (in MB) : 572202
Capacity (in bytes) : 600000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdf /dev/sdc /dev/sde

Flash card 2 :
Slot number: 7
Overall health status : GOOD
Size (in MB) : 762936
Capacity (in bytes) : 800000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdh /dev/sdj /dev/sdi /dev/sdg

Flash card 3 :
Slot number: 10
Overall health status : GOOD
Size (in MB) : 762936
Capacity (in bytes) : 800000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdn /dev/sdk /dev/sdm /dev/sdl

Raid Array Info (/dev/md0):
/dev/md0: 1488.86GiB raid5 3 devices, 0 spares. Use mdadm --detail for more detail.

Summary:
Healthy flash drives : 2
Broken flash drives : 1
Fail : Flash card health check failed. See above for more details.

Note the following:

-

Device /dev/sdb has failed and is no longer seen by Flash card 1. Make note of the devices assigned to this card so you can check them later against the devices assigned to the replaced Flash card.

-

Slot number is the location of the PCIe card.

Note:

A defective Flash card can also be identified by an amber or red LED. Check the back panel of the Exalytics Machine to confirm the location of the defective Flash card.
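The card and slot to replace can be pulled out of the health-check output with a small filter. This is a sketch under stated assumptions: failed_cards is a hypothetical helper, and it assumes that the script prints the "Flash card N :", "Slot number:", and "Overall health status" fields on separate lines, in that order, as in the example output.

```shell
# Read exalytics_CheckFlash.sh output on stdin and print the card number and
# PCIe slot of every card whose overall health status is ERROR.
failed_cards() {
    awk '
        /Flash card [0-9]+ :/ { card = $3 }    # "Flash card 1 :"  -> 1
        /Slot number:/        { slot = $3 }    # "Slot number: 6"  -> 6
        /Overall health status/ && /ERROR/ { print "card " card " slot " slot }
    '
}
```

A typical invocation would be /opt/exalytics/bin/exalytics_CheckFlash.sh | failed_cards. Always cross-check the result against the LED on the back panel before removing a card.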

-

-

Shut down and unplug the Exalytics Machine.

-

Replace the defective Flash card.

-

Restart the Exalytics Machine.

Note:

You can also start the Exalytics Machine using Integrated Lights Out Manager (ILOM).

-

To confirm that all Flash cards are now working and to confirm the RAID configuration, enter the following command:

# /opt/exalytics/bin/exalytics_CheckFlash.sh

The output should look similar to the following:

Checking Exalytics Flash Drive Status
Fetching some info on installed flash drives ....
Driver version : 01.250.41.04 (2012.06.04)
Supported number of flash drives detected (3)

Flash card 1 :
Slot number: 6
Overall health status : GOOD
Size (in MB) : 762936
Capacity (in bytes) : 800000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdd /dev/sdf /dev/sdc /dev/sde

Flash card 2 :
Slot number: 7
Overall health status : GOOD
Size (in MB) : 762936
Capacity (in bytes) : 800000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdg /dev/sdi /dev/sdh /dev/sdj

Flash card 3 :
Slot number: 10
Overall health status : GOOD
Size (in MB) : 762936
Capacity (in bytes) : 800000000000
Firmware Version : 109.05.26.00
Devices: /dev/sdl /dev/sdk /dev/sdn /dev/sdm

Raid Array Info (/dev/md0):
/dev/md0: 1488.86GiB raid5 3 devices, 1 spare. Use mdadm --detail for more detail.

Summary:
Healthy flash drives : 3
Broken flash drives : 0
Pass : Flash card health check passed

Note the following:

-

New devices mapped to Flash card 1 are displayed.

-

The RAID05 configuration shows /dev/md0 as a RAID5 with 3 devices. See Figure 2-3.

-

In this example, new devices /dev/sdd, /dev/sdf, /dev/sdc, and /dev/sde are mapped to Flash card 1.

-

Since the replaced Flash card contains four new flash devices, the RAID configuration shows four missing devices (one for each flash device on the replaced Flash Card). You must re-assemble RAID05 to enable the RAID configuration to recognize the new devices on the replaced Flash card.

Note:

A working Flash card can also be identified by its green LED. Check the back panel of the Exalytics Machine to confirm that the LED of the replaced Flash card is green.

-

-

Re-assemble RAID05 by performing the following steps:

-

To check the RAID05 configuration, enter the following command:

# cat /proc/mdstat

The output should look similar to the following:

Personalities : [raid0] [raid6] [raid5] [raid4]
md3 : active raid0 sdn[2] sdk[1] sdm[3] sdl[0]
      780724224 blocks super 1.2 512k chunks

md0 : active raid5 md3[3] md2[1]
      1561186304 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [_UU]
      bitmap: 6/6 pages [24KB], 65536KB chunk

md2 : active raid0 sdi[1] sdh[2] sdj[3] sdg[0]
      780724224 blocks super 1.2 512k chunks

unused devices: <none>

Note the following:

-

Since the replaced Flash card contains four new flash devices on RAID0 /dev/md1, the configuration shows RAID5 /dev/md0 as missing md1.

-

The second line of the RAID5 configuration ends with a notation similar to [3/2] [_UU].

-

To enable RAID5 to recognize md1, you need to recreate md1.
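To see at a glance which child arrays the parent RAID5 currently holds, the member list on the md0 line can be extracted. The sketch below is illustrative; array_components is a hypothetical name, and the code assumes the /proc/mdstat layout shown in the example output.

```shell
# Print the member devices of one array, one per line.
#   $1 = array name as shown in mdstat (for example md0)
#   $2 = mdstat-style file (default: /proc/mdstat)
array_components() {
    awk -v target="$1" '
        $1 == target && $2 == ":" {
            for (i = 3; i <= NF; i++)
                if ($i ~ /\[[0-9]+\]/) {       # members look like md3[3]
                    sub(/\[.*/, "", $i)        # drop the [n] role suffix
                    print $i
                }
        }
    ' "${2:-/proc/mdstat}"
}
```

For the example output above, array_components md0 would be expected to print md3 and md2, which makes the absence of md1 easy to spot.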

-

-

To recreate md1, enter the following command:

# mdadm /dev/md1 --create --raid-devices=4 --level=0 /dev/sdf /dev/sde /dev/sdc /dev/sdd

The output should look similar to the following:

mdadm: array /dev/md1 started.

-

After confirming that md1 was created successfully, add the newly created md1 to RAID5.

-

To add md1 to RAID5, enter the following command:

# mdadm /dev/md0 --add /dev/md1

The output should look similar to the following:

mdadm: added /dev/md1

RAID5 starts rebuilding automatically.

-

To check the recovery progress, enter the following command:

# cat /proc/mdstat

Monitor the output and confirm that the process completes. The output should look similar to the following:

Personalities : [raid0] [raid6] [raid5] [raid4]
md1 : active raid0 sdd[3] sdc[2] sde[1] sdf[0]
      780724224 blocks super 1.2 512k chunks

md3 : active raid0 sdn[2] sdk[1] sdm[3] sdl[0]
      780724224 blocks super 1.2 512k chunks

md0 : active raid5 md1[4] md3[3] md2[1]
      1561186304 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [_UU]
      [>....................] recovery = 0.5% (4200064/780593152) finish=64.6min speed=200003K/sec
      bitmap: 6/6 pages [24KB], 65536KB chunk

md2 : active raid0 sdi[1] sdh[2] sdj[3] sdg[0]
      780724224 blocks super 1.2 512k chunks

unused devices: <none>

-

To recreate the mdadm.conf configuration file, which ensures that RAID5 details are maintained when you restart the Exalytics Machine, enter the following command:

# mdadm --detail --scan --verbose > /etc/mdadm.conf

To confirm that the file is recreated correctly, enter the following command:

# cat /etc/mdadm.conf

The output should look similar to the following:

ARRAY /dev/md2 level=raid0 num-devices=4 metadata=1.2 name=localhost.localdomain:2 UUID=d7a213a4:d3eab6de:b62bb539:7f8025f4
   devices=/dev/sdg,/dev/sdh,/dev/sdi,/dev/sdj
ARRAY /dev/md3 level=raid0 num-devices=4 metadata=1.2 name=localhost.localdomain:3 UUID=b6e6a4d9:fb740b73:370eb061:bb9ded07
   devices=/dev/sdk,/dev/sdl,/dev/sdm,/dev/sdn
ARRAY /dev/md1 level=raid0 num-devices=4 metadata=1.2 name=localhost.localdomain:1 UUID=185b52fc:ca539982:3c4af30c:5578cdfe
   devices=/dev/sdc,/dev/sdd,/dev/sde,/dev/sdf
ARRAY /dev/md0 level=raid5 num-devices=3 metadata=1.2 spares=1 name=localhost.localdomain:0 UUID=655b4442:94160b2f:0d40b860:e9eef70f
   devices=/dev/md1,/dev/md2,/dev/md3

Note:

If you have other customizations in the mdadm.conf configuration file (such as updating the list of devices that are mapped to each RAID), you must manually edit the file.
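Because the redirection overwrites /etc/mdadm.conf, keeping a copy of the previous file makes it easier to carry customizations across by hand. The helper below is a hypothetical convenience, not something this guide prescribes; the name backup_file and the .bak.<timestamp> suffix are assumptions.

```shell
# Copy a file to "<file>.bak.<timestamp>" and print the name of the copy.
backup_file() {
    src=$1
    dest="$src.bak.$(date +%Y%m%d%H%M%S)"
    cp -p "$src" "$dest" && echo "$dest"       # -p keeps mode and timestamps
}
```

For example, run backup_file /etc/mdadm.conf before recreating the file, then compare the two versions with diff to find any customizations that need to be re-entered.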

-

2.4.4.3 Replacing a Defective Flash Card on an X5-4 or X6-4 Exalytics Machine

Each X5-4 or X6-4 Exalytics Machine is configured with three Flash cards.

On an X5-4 Exalytics Machine, each Flash card contains four partitions configured in a single 1.6 TB device.

On an X6-4 Exalytics Machine, each F320 Flash card contains four partitions configured in a single 3.2 TB device, and each F640 Flash card contains four partitions configured in two 3.2 TB devices.

When one device on a Flash card fails, the entire Flash card becomes defective and has to be replaced. Depending on the RAID configuration, you can replace a defective Flash card on a RAID10 or RAID5 configuration.

This section consists of the following topics:

- Replacing a Defective Flash Card on a RAID10 Configuration

- Replacing a Defective Flash Card on a RAID5 Configuration


2.4.4.3.1 Replacing a Defective Flash Card on a RAID10 Configuration

For a RAID10 configuration, the following procedure assumes that a defective device (/dev/nvme0n1) is mapped to a Flash card installed on the parent RAID0 /dev/md0. See Figure 2-2.

To replace a failed or defective Flash card on a RAID10 configuration:

-

To verify the status of Flash cards, enter the following command:

# /opt/exalytics/bin/exalytics_CheckFlash.sh

If a defective Flash card is detected, the output displays an "Overall health status : ERROR" message for that card, and the summary indicates the defective card and the location of the Peripheral Component Interconnect Express (PCIe) card.

The output should look similar to the following:

Checking Exalytics Flash Drive Status
Fetching some info on installed flash drives ....
Driver version: 01.250.41.04 (2012.06.04)
Supported number of flash drives detected (3)

Flash card 1 :
Slot number: 6
Overall health status : ERROR. Use --detail for more info
Capacity (in bytes) : 1638723477307392
Firmware Version : SUNW-NVME-1
PCI Vendor ID: 144d
Serial Number: S2T7NAAH200021
Model Number: MS1PC5ED3ORA3.2T
Firmware Revision: KPYA8R3Q
Number of Namespaces: 1
cardindex=1 cIndex=1
*********************************************************************
nvme0n1p1
*********************************************************************
Devices: /dev/nvme0n1p1 /dev/nvme0n1p2 /dev/nvme0n1p3 /dev/nvme0n1p4

Flash card 2 :
Slot number: 7
Overall health status : GOOD
Capacity (in bytes) : 1638723477307392
Firmware Version : SUNW-NVME-2
PCI Vendor ID: 144d
Serial Number: S2T7NAAH200042
Model Number: MS1PC5ED3ORA3.2T
Firmware Revision: KPYA7R3Q
Number of Namespaces: 1
cardindex=2 cIndex=2
*********************************************************************
nvme1n1p1
*********************************************************************
Devices: /dev/nvme1n1p1 /dev/nvme1n1p2 /dev/nvme1n1p3 /dev/nvme1n1p4

Flash card 3 :
Slot number: 10
Overall health status : GOOD
Capacity (in bytes) : 1638723477307392
Firmware Version : SUNW-NVME-3
PCI Vendor ID: 144d
Serial Number: S2T7NAAH200019
Model Number: MS1PC5ED3ORA3.2T
Firmware Revision: KPYA7R3Q
Number of Namespaces: 1
cardindex=3 cIndex=3
*********************************************************************
nvme2n1p1
*********************************************************************
Devices: /dev/nvme2n1p1 /dev/nvme2n1p2 /dev/nvme2n1p3 /dev/nvme2n1p4

Raid Array Info (/dev/md0):
/dev/md0: 4469.73GiB raid0 6 devices, 0 spares. Use mdadm --detail for more detail.

Summary:
Healthy flash drives : 2
Broken flash drives : 1
Fail : Flash card health check failed. See above for more details.

Note the following:

-

Device /dev/nvme0n1 has failed and is no longer seen by Flash card 1. Make note of the devices assigned to this card so you can check them later against the devices assigned to the replaced Flash card.

-

Slot number is the location of the PCIe card.

Note:

A defective Flash card can also be identified by an amber or red LED. Check the back panel of the Exalytics Machine to confirm the location of the defective Flash card.

-

-

Shut down and unplug the Exalytics Machine.

-

Replace the defective Flash card.

-

Restart the Exalytics Machine.

Note:

You can also start the Exalytics Machine using Integrated Lights Out Manager (ILOM).

-

To confirm that all Flash cards are now working and to confirm the RAID configuration, enter the following command:

# /opt/exalytics/bin/exalytics_CheckFlash.sh

The output should look similar to the following:

Checking Exalytics Flash Drive Status
Fetching some info on installed flash drives ....
Driver version: 01.250.41.04 (2012.06.04)
Supported number of flash drives detected (3)

Flash card 1 :
Slot number: 6
Overall health status : GOOD
Capacity (in bytes) : 1638723477307392
Firmware Version : SUNW-NVME-1
PCI Vendor ID: 144d
Serial Number: S2T7NAAH200021
Model Number: MS1PC5ED3ORA3.2T
Firmware Revision: KPYA8R3Q
Number of Namespaces: 1
cardindex=1 cIndex=1
*********************************************************************
nvme0n1p1
*********************************************************************
Devices: /dev/nvme0n1p1 /dev/nvme0n1p2 /dev/nvme0n1p3 /dev/nvme0n1p4

Flash card 2 :
Slot number: 7
Overall health status : GOOD
Capacity (in bytes) : 1638723477307392
Firmware Version : SUNW-NVME-2
PCI Vendor ID: 144d
Serial Number: S2T7NAAH200042
Model Number: MS1PC5ED3ORA3.2T
Firmware Revision: KPYA7R3Q
Number of Namespaces: 1
cardindex=2 cIndex=2
*********************************************************************
nvme1n1p1
*********************************************************************
Devices: /dev/nvme1n1p1 /dev/nvme1n1p2 /dev/nvme1n1p3 /dev/nvme1n1p4

Flash card 3 :
Slot number: 10
Overall health status : GOOD
Capacity (in bytes) : 1638723477307392
Firmware Version : SUNW-NVME-3
PCI Vendor ID: 144d
Serial Number: S2T7NAAH200019
Model Number: MS1PC5ED3ORA3.2T
Firmware Revision: KPYA7R3Q
Number of Namespaces: 1
cardindex=3 cIndex=3
*********************************************************************
nvme2n1p1
*********************************************************************
Devices: /dev/nvme2n1p1 /dev/nvme2n1p2 /dev/nvme2n1p3 /dev/nvme2n1p4

Raid Array Info (/dev/md0):
/dev/md0: 4469.73GiB raid0 6 devices, 0 spares. Use mdadm --detail for more detail.

Summary:
Healthy flash drives : 3
Broken flash drives : 0
Pass : Flash card health check passed

Note the following:

-

New devices mapped to Flash card 1 are displayed.

-

The RAID10 configuration shows /dev/md0 as a RAID0 with six devices. See Figure 2-2.

-

In this example, new devices /dev/nvme0n1p1, /dev/nvme0n1p2, /dev/nvme0n1p3, and /dev/nvme0n1p4 are mapped to Flash card 1.

-

Since the replaced Flash card contains four new flash devices, the RAID configuration shows four missing devices (one for each flash device on the replaced Flash Card). You must re-assemble RAID1 to enable the RAID configuration to recognize the new devices on the replaced Flash card.

Note:

A working Flash card can also be identified by its green LED. Check the back panel of the Exalytics Machine to confirm that the LED of the replaced Flash card is green.
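The summary at the end of the health-check output lends itself to a pass/fail test in a script. The following is a sketch only: flash_health_ok is a hypothetical name, and the code assumes that the summary contains a "Broken flash drives : N" line as in the example output.

```shell
# Read exalytics_CheckFlash.sh output on stdin; succeed (exit status 0) only
# if a "Broken flash drives" summary line is present and reports 0.
flash_health_ok() {
    awk '
        /Broken flash drives/ && $NF ~ /^[0-9]+$/ { broken = $NF + 0; seen = 1 }
        END { exit !(seen && broken == 0) }
    '
}
```

A possible use is /opt/exalytics/bin/exalytics_CheckFlash.sh | flash_health_ok && echo "all cards healthy". The function fails when the summary line is absent, so a truncated or unexpected output is not mistaken for a healthy machine.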

-

-

Re-assemble RAID1 by performing the following tasks:

-

Create four partitions on the replaced Flash card (that is, on /dev/nvme0n1) by entering the following parted commands:

# parted -a optimal /dev/nvme0n1 mkpart primary 0% 25%
# parted -a optimal /dev/nvme0n1 mkpart primary 25% 50%
# parted -a optimal /dev/nvme0n1 mkpart primary 50% 75%
# parted -a optimal /dev/nvme0n1 mkpart primary 75% 100%

-
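The four partitions are equal quarters of the device, so their boundaries are 0%, 25%, 50%, 75%, and 100%. The sketch below generates the corresponding parted commands in a loop and prints them for review rather than running them; quarter_partition_commands is a hypothetical helper name.

```shell
# Print the four parted commands that split device $1 into equal quarters.
quarter_partition_commands() {
    dev=$1
    i=0
    while [ "$i" -lt 4 ]; do
        start=$((i * 25))                      # 0, 25, 50, 75
        end=$((start + 25))                    # 25, 50, 75, 100
        echo "parted -a optimal $dev mkpart primary ${start}% ${end}%"
        i=$((i + 1))
    done
}
```

Each partition starts where the previous one ends, so the third command runs from 50% to 75%. Run quarter_partition_commands /dev/nvme0n1 and check the printed commands before entering them as root.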

To display all devices on the RAID1 configuration, enter the following command:

# cat /proc/mdstat

The output should look similar to the following:

Personalities : [raid1] [raid0]
md0 : inactive md5[4] md6[5]
      780880896 blocks super 1.2

md4 : active (auto-read-only) raid1 nvme2n1p2[1]
      390572032 blocks super 1.2 [2/1] [_U]
      bitmap: 0/3 pages [0KB], 65536KB chunk

md6 : active raid1 nvme1n1p2[0] nvme2n1p4[1]
      390572032 blocks super 1.2 [2/2] [UU]
      bitmap: 0/3 pages [0KB], 65536KB chunk

md2 : active (auto-read-only) raid1 nvme1n1p4[1]
      390572032 blocks super 1.2 [2/1] [_U]
      bitmap: 0/3 pages [0KB], 65536KB chunk

md3 : active (auto-read-only) raid1 nvme2n1p1[1]
      390571008 blocks super 1.2 [2/1] [_U]
      bitmap: 0/3 pages [0KB], 65536KB chunk

md1 : active (auto-read-only) raid1 nvme1n1p3[1]
      390571008 blocks super 1.2 [2/1] [_U]
      bitmap: 0/3 pages [0KB], 65536KB chunk

md5 : active raid1 nvme2n1p3[1] nvme1n1p1[0]
      390571008 blocks super 1.2 [2/2] [UU]
      bitmap: 0/3 pages [0KB], 65536KB chunk

unused devices: <none>

Note the following:

-

Four md devices (md3, md4, md2, and md1) each have only one NVMe partition assigned, because the partitions on the replaced Flash card (nvme0n1) are missing.

-

The second line for these devices ends with a notation similar to [2/1] [_U].

-

-

To check the correct configuration of the md devices, enter the following command:

# cat /etc/mdadm.conf

The output should look similar to the following:

ARRAY /dev/md1 level=raid1 num-devices=2 metadata=1.2 name=sca05-0a81e7a5:1 UUID=0e733ea5:fd42b3f9:81f5c5b2:84bf781c
   devices=/dev/nvme0n1p1,/dev/nvme1n1p3
ARRAY /dev/md2 level=raid1 num-devices=2 metadata=1.2 name=sca05-0a81e7a5:2 UUID=547ff945:8c53fc0a:fbeca454:adeba61d
   devices=/dev/nvme0n1p2,/dev/nvme1n1p4
ARRAY /dev/md3 level=raid1 num-devices=2 metadata=1.2 name=sca05-0a81e7a5:3 UUID=7c4e6ed9:71776997:10158365:ad43017f
   devices=/dev/nvme0n1p3,/dev/nvme2n1p1
ARRAY /dev/md4 level=raid1 num-devices=2 metadata=1.2 name=sca05-0a81e7a5:4 UUID=76b1d6e1:4347d7fa:f2f48b72:551d447a
   devices=/dev/nvme0n1p4,/dev/nvme2n1p2
ARRAY /dev/md5 level=raid1 num-devices=2 metadata=1.2 name=sca05-0a81e7a5:5 UUID=781576fe:31c22712:d018f992:03b1e7a0
   devices=/dev/nvme1n1p1,/dev/nvme2n1p3
ARRAY /dev/md6 level=raid1 num-devices=2 metadata=1.2 name=sca05-0a81e7a5:6 UUID=92d57799:89ee990d:01f1a9ed:4a3eeb8c
   devices=/dev/nvme1n1p2,/dev/nvme2n1p4
ARRAY /dev/md0 level=raid0 num-devices=6 metadata=1.2 name=sca05-0a81e7a5:0 UUID=3033354a:07ad233f:3d3846ef:836bb07c
   devices=/dev/md1,/dev/md2,/dev/md3,/dev/md4,/dev/md5,/dev/md
ARRAY /dev/md6 level=raid1 num-devices=2 metadata=1.2 n

Note the correct configuration of the following devices:

-

md1 device contains the /dev/nvme0n1p1 and /dev/nvme1n1p3 nvme flash partitions

-

md2 device contains the /dev/nvme0n1p2 and /dev/nvme1n1p4 nvme flash partitions

-

md3 device contains the /dev/nvme0n1p3 and /dev/nvme2n1p1 nvme flash partitions

-

md4 device contains the /dev/nvme0n1p4 and /dev/nvme2n1p2 nvme flash partitions

-

-

To add the missing nvme partitions (/dev/nvme0n1p1, /dev/nvme0n1p2, /dev/nvme0n1p3, and /dev/nvme0n1p4) to each of the four md devices (md1, md2, md3, and md4 respectively), enter the following commands:

# mdadm /dev/md1 --add /dev/nvme0n1p1
mdadm: added /dev/nvme0n1p1
# mdadm /dev/md2 --add /dev/nvme0n1p2
mdadm: added /dev/nvme0n1p2
# mdadm /dev/md3 --add /dev/nvme0n1p3
mdadm: added /dev/nvme0n1p3
# mdadm /dev/md4 --add /dev/nvme0n1p4
mdadm: added /dev/nvme0n1p4

RAID1 starts rebuilding automatically.
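In this example, partition N of the replaced device belongs to md device N, so the four commands follow one pattern. The sketch below prints them for a given device; nvme_readd_commands is a hypothetical name, and the one-to-one mapping is an assumption taken from the example configuration, so verify it against /etc/mdadm.conf on your machine first.

```shell
# Print the mdadm commands that add partitions p1..p4 of device $1 to
# /dev/md1../dev/md4, following the mapping shown in the example mdadm.conf.
nvme_readd_commands() {
    dev=$1
    n=1
    while [ "$n" -le 4 ]; do
        echo "mdadm /dev/md$n --add ${dev}p$n"
        n=$((n + 1))
    done
}
```

Running nvme_readd_commands /dev/nvme0n1 prints the same four commands listed in this step, which you can then enter as root.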

-

To check the recovery progress, enter the following command:

# cat /proc/mdstat

Monitor the output and confirm that the process completes. The output should look similar to the following:

Personalities : [raid1] [raid0]
md0 : inactive md5[4] md6[5]
      780880896 blocks super 1.2

md4 : active raid1 nvme0n1p4[0] nvme2n1p2[1]
      390572032 blocks super 1.2 [2/1] [_U]
      resync=DELAYED
      bitmap: 0/3 pages [0KB], 65536KB chunk

md6 : active raid1 nvme1n1p2[0] nvme2n1p4[1]
      390572032 blocks super 1.2 [2/2] [UU]
      bitmap: 0/3 pages [0KB], 65536KB chunk

md2 : active (auto-read-only) raid1 nvme1n1p4[1]
      390572032 blocks super 1.2 [2/1] [_U]
      bitmap: 0/3 pages [0KB], 65536KB chunk

md3 : active raid1 nvme0n1p3[0] nvme2n1p1[1]
      390571008 blocks super 1.2 [2/1] [_U]
      resync=DELAYED
      bitmap: 0/3 pages [0KB], 65536KB chunk

md1 : active raid1 nvme0n1p1[2] nvme1n1p3[1]
      390571008 blocks super 1.2 [2/1] [_U]
      [=>...................] recovery = 8.1% (31800064/390571008) finish=29.0min speed=206060K/sec
      bitmap: 0/3 pages [0KB], 65536KB chunk

md5 : active raid1 nvme2n1p3[1] nvme1n1p1[0]
      390571008 blocks super 1.2 [2/2] [UU]
      bitmap: 0/3 pages [0KB], 65536KB chunk

unused devices: <none>

-

2.4.4.3.2 Replacing a Defective Flash Card on a RAID5 Configuration

For a RAID5 configuration, the following procedure assumes that a defective device (/dev/nvme0n1) is mapped to a Flash card installed on the parent RAID5 /dev/md0. See Figure 2-3.

-

To verify the status of Flash cards, enter the following command:

# /opt/exalytics/bin/exalytics_CheckFlash.sh

If a defective Flash card is detected, the output displays an "Overall health status : ERROR" message for that card, and the summary indicates the defective card and the location of the Peripheral Component Interconnect Express (PCIe) card.

The output should look similar to the following:

Checking Exalytics Flash Drive Status
Fetching some info on installed flash drives ....
Supported number of flash drives detected (3)

Flash card 1 :
Slot number: 6
Overall health status : ERROR. Use --detail for more info
Capacity (in bytes) : 1638723477307392
Firmware Version : SUNW-NVME-1
PCI Vendor ID: 144d
Serial Number: S2T7NAAH200021
Model Number: MS1PC5ED3ORA3.2T
Firmware Revision: KPYA8R3Q
Number of Namespaces: 1
cardindex=1 cIndex=1
*********************************************************************
nvme0n1p1
*********************************************************************
Devices: /dev/nvme0n1p1 /dev/nvme0n1p2 /dev/nvme0n1p3 /dev/nvme0n1p4

Flash card 2 :
Slot number: 7
Overall health status : GOOD
Capacity (in bytes) : 1638723477307392
Firmware Version : SUNW-NVME-2
PCI Vendor ID: 144d
Serial Number: S2T7NAAH200042
Model Number: MS1PC5ED3ORA3.2T
Firmware Revision: KPYA7R3Q
Number of Namespaces: 1
cardindex=2 cIndex=2
*********************************************************************
nvme1n1p1
*********************************************************************
Devices: /dev/nvme1n1p1 /dev/nvme1n1p2 /dev/nvme1n1p3 /dev/nvme1n1p4

Flash card 3 :
Slot number: 10
Overall health status : GOOD
Capacity (in bytes) : 1638723477307392
Firmware Version : SUNW-NVME-3
PCI Vendor ID: 144d
Serial Number: S2T7NAAH200019
Model Number: MS1PC5ED3ORA3.2T
Firmware Revision: KPYA7R3Q
Number of Namespaces: 1
cardindex=3 cIndex=3
*********************************************************************
nvme2n1p1
*********************************************************************
Devices: /dev/nvme2n1p1 /dev/nvme2n1p2 /dev/nvme2n1p3 /dev/nvme2n1p4

Raid Array Info (/dev/md0):
/dev/md0: 4469.73GiB raid0 6 devices, 0 spares. Use mdadm --detail for more detail.

Summary:
Healthy flash drives : 2
Broken flash drives : 1
Fail : Flash card health check failed. See above for more details.

Note the following:

-

Device /dev/nvme0n1 has failed and is no longer seen by Flash card 1. Make note of the devices assigned to this card so you can check them later against the devices assigned to the replaced Flash card.

-

Slot number is the location of the PCIe card.

Note:

A defective Flash card can also be identified by an amber or red LED. Check the back panel of the Exalytics Machine to confirm the location of the defective Flash card.

-

-

Shut down and unplug the Exalytics Machine.

-

Replace the defective Flash card.

-

Restart the Exalytics Machine.

Note:

You can also start the Exalytics Machine using Integrated Lights Out Manager (ILOM).

-

To confirm that all Flash cards are now working and to confirm the RAID configuration, enter the following command:

# /opt/exalytics/bin/exalytics_CheckFlash.sh

The output should look similar to the following:

Checking Exalytics Flash Drive Status
Fetching some info on installed flash drives ....
Driver version : 01.250.41.04 (2012.06.04)
Supported number of flash drives detected (3)

Flash card 1 :
Slot number: 6
Overall health status : GOOD
Capacity (in bytes) : 1638723477307392
Firmware Version : SUNW-NVME-1
PCI Vendor ID: 144d
Serial Number: S2T7NAAH200021
Model Number: MS1PC5ED3ORA3.2T
Firmware Revision: KPYA8R3Q
Number of Namespaces: 1
cardindex=1 cIndex=1
*********************************************************************
nvme0n1p1
*********************************************************************
Devices: /dev/nvme0n1p1 /dev/nvme0n1p2 /dev/nvme0n1p3 /dev/nvme0n1p4

Flash card 2 :
Slot number: 7
Overall health status : GOOD
Capacity (in bytes) : 1638723477307392
Firmware Version : SUNW-NVME-2
PCI Vendor ID: 144d
Serial Number: S2T7NAAH200042
Model Number: MS1PC5ED3ORA3.2T
Firmware Revision: KPYA7R3Q
Number of Namespaces: 1
cardindex=2 cIndex=2
*********************************************************************
nvme1n1p1
*********************************************************************
Devices: /dev/nvme1n1p1 /dev/nvme1n1p2 /dev/nvme1n1p3 /dev/nvme1n1p4

Flash card 3 :
Slot number: 10
Overall health status : GOOD
Capacity (in bytes) : 1638723477307392
Firmware Version : SUNW-NVME-3
PCI Vendor ID: 144d
Serial Number: S2T7NAAH200019
Model Number: MS1PC5ED3ORA3.2T
Firmware Revision: KPYA7R3Q
Number of Namespaces: 1
cardindex=3 cIndex=3
*********************************************************************
nvme2n1p1
*********************************************************************
Devices: /dev/nvme2n1p1 /dev/nvme2n1p2 /dev/nvme2n1p3 /dev/nvme2n1p4

Raid Array Info (/dev/md0):
/dev/md0: 4469.73GiB raid0 6 devices, 0 spares. Use mdadm --detail for more detail.

Summary:
Healthy flash drives : 3
Broken flash drives : 0
Pass : Flash card health check passed

Note the following:

-

New devices mapped to Flash card 1 are displayed.

-

The RAID5 configuration shows /dev/md0 as a RAID5 with three devices. See Figure 2-3.

-

In this example, partitions /dev/nvme0n1p1, /dev/nvme0n1p2, /dev/nvme0n1p3, and /dev/nvme0n1p4 are in /dev/nvme0n1.

-

Recreate the partitions by entering the following commands:

# parted -a optimal /dev/nvme0n1 mkpart primary 0% 25%
# parted -a optimal /dev/nvme0n1 mkpart primary 25% 50%
# parted -a optimal /dev/nvme0n1 mkpart primary 50% 75%
# parted -a optimal /dev/nvme0n1 mkpart primary 75% 100%

-

Since the replaced Flash card contains four new flash devices, the RAID configuration shows four missing devices (one for each flash device on the replaced Flash Card). You must re-assemble RAID5 to enable the RAID configuration to recognize the new devices on the replaced Flash card.

Note:

A working Flash card can also be identified by its green LED. Check the back panel of the Exalytics Machine to confirm that the LED of the replaced Flash card is green.

-

-

Re-assemble RAID5 by performing the following steps:

-

To check the RAID5 configuration, enter the following command:

# cat /proc/mdstat

The output should look similar to the following:

Personalities : [raid0] [raid6] [raid5] [raid4]
md3 : active raid0 nvme2n1p3[2] nvme2n1p4[3] nvme2n1p2[1] nvme2n1p1[0]
      1562288128 blocks super 1.2 512k chunks

md0 : active raid5 md3[3] md2[1]
      3124314112 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [_UU]
      bitmap: 1/12 pages [4KB], 65536KB chunk

md2 : active raid0 nvme1n1p2[1] nvme1n1p3[2] nvme1n1p4[3] nvme1n1p1[0]
      1562288128 blocks super 1.2 512k chunks

unused devices: <none>

Note the following:

-

Since the replaced Flash card contains four new flash devices on RAID0 /dev/md1, the configuration shows RAID5 /dev/md0 as missing md1.

-

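The second line of the RAID5 configuration ends with a notation similar to [3/2] [_UU]. Here, [3/2] means that only two of the three member devices are active, and the underscore in [_UU] marks the position of the missing device.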

-

To enable RAID5 to recognize md1, you need to recreate md1.
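The degraded state described above can also be detected with a script. The following is a minimal sketch, not part of the Exalytics tooling: the function name degraded_arrays is hypothetical, and the sketch assumes that the input follows the /proc/mdstat layout shown in this section, where each array name line is followed by a line that carries the [_UU] style status field.

```shell
# Hypothetical helper (not an Oracle-supplied tool).
# Reads mdstat-format text on standard input and prints the name of
# every array whose status field (for example [_UU]) contains an
# underscore, which marks a missing member device.
degraded_arrays() {
    awk '
        # An array header line such as "md0 : active raid5 md3[3] md2[1]"
        /^md[0-9]+ :/ { name = $1; next }

        # The first following line that carries a [UU_]-style field
        name != "" && match($0, /\[[U_]+\]/) {
            if (index(substr($0, RSTART, RLENGTH), "_") > 0)
                print name
            name = ""
        }
    '
}
```

On a live system the function would typically be fed from the kernel status file, for example degraded_arrays < /proc/mdstat. For the sample output in this step, it would print md0.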

-

-

To recreate md1, enter the following command:

# mdadm /dev/md1 --create --raid-devices=4 --level=0 /dev/nvme0n1p1 /dev/nvme0n1p2 /dev/nvme0n1p3 /dev/nvme0n1p4

The output should look similar to the following:

mdadm: array /dev/md1 started.
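Because the create command above names four partitions explicitly, it can be useful to confirm beforehand that each one exists as a block device. The following is a minimal sketch under that assumption. The function name missing_block_devices is hypothetical and is not part of mdadm or the Exalytics scripts.

```shell
# Hypothetical pre-check helper (not an Oracle-supplied tool).
# Prints every argument that is not a block device and returns a
# non-zero status if at least one is missing.
missing_block_devices() {
    mbd_status=0
    for mbd_dev in "$@"; do
        if [ ! -b "$mbd_dev" ]; then
            echo "$mbd_dev"
            mbd_status=1
        fi
    done
    return "$mbd_status"
}
```

For the example in this section, the check could be run as missing_block_devices /dev/nvme0n1p1 /dev/nvme0n1p2 /dev/nvme0n1p3 /dev/nvme0n1p4. No output and a zero exit status indicate that all four partitions are present.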

-

After confirming that md1 was created successfully, add the newly created md1 to RAID5.

-

To add md1 to RAID5, enter the following command:

# mdadm /dev/md0 --add /dev/md1

The output should look similar to the following:

mdadm: added /dev/md1

RAID5 starts rebuilding automatically.
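While the rebuild runs, /proc/mdstat reports progress on a line of the form "recovery = 0.5% (...)". The following is a minimal sketch for extracting that percentage. The function name recovery_percent is hypothetical, and the sketch assumes the recovery line format shown in this section.

```shell
# Hypothetical helper (not an Oracle-supplied tool).
# Reads mdstat-format text on standard input and prints the recovery
# percentage (without the % sign) for each array that is rebuilding.
# Prints nothing when no recovery is in progress.
recovery_percent() {
    sed -n 's/.*recovery *= *\([0-9.]*\)%.*/\1/p'
}
```

On a live system this could be called as recovery_percent < /proc/mdstat. Empty output would suggest that no rebuild is currently running, either because it has finished or because it never started, so the full /proc/mdstat output should still be checked.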

-

To check the recovery progress, enter the following command:

# cat /proc/mdstat

Monitor the output and confirm that the process completes. The output should look similar to the following:

Personalities : [raid0] [raid6] [raid5] [raid4]
md1 : active raid0 nvme0n1p4[3] nvme0n1p1[1] nvme0n1p3[2] nvme0n1p2[0]
      1562288128 blocks super 1.2 512k chunks
md3 : active raid0 nvme2n1p2[1] nvme2n1p3[2] nvme2n1p4[3] nvme2n1p1[0]
      1562288128 blocks super 1.2 512k chunks
md0 : active raid5 md1[0] md2[1] md3[3]
      3124314112 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [_UU]
      [>....................] recovery = 0.5% (4200064/780593152) finish=64.6min speed=200003K/sec
      bitmap: 0/12 pages [0KB], 65536KB chunk
md2 : active raid0 nvme1n1p1[0] nvme1n1p4[3] nvme1n1p3[2] nvme1n1p2[1]
      1562288128 blocks super 1.2 512k chunks
unused devices: <none>

-

To recreate the mdadm.conf configuration file, which ensures that RAID5 details are maintained when you restart the Exalytics Machine, enter the following command:

# mdadm --detail --scan --verbose > /etc/mdadm.conf

To confirm that the file is recreated correctly, enter the following command:

# cat /etc/mdadm.conf

The output should look similar to the following:

ARRAY /dev/md3 level=raid0 num-devices=4 metadata=1.2 name=sca05-0a81e7a5:3 UUID=eb329367:1d1b1208:1f42ee01:e30c8000
   devices=/dev/nvme2n1p1,/dev/nvme2n1p2,/dev/nvme2n1p3,/dev/nvme2n1p4
ARRAY /dev/md1 level=raid0 num-devices=4 metadata=1.2 name=sca05-0a81e7a5:1 UUID=4073b225:4c719547:8c1d90ad:a727e725
   devices=/dev/nvme0n1p1,/dev/nvme0n1p2,/dev/nvme0n1p3,/dev/nvme0n1p4
ARRAY /dev/md2 level=raid0 num-devices=4 metadata=1.2 name=sca05-0a81e7a5:2 UUID=a163bb3a:ee143609:9cded167:6cb2c64f
   devices=/dev/nvme1n1p1,/dev/nvme1n1p2,/dev/nvme1n1p3,/dev/nvme1n1p4
ARRAY /dev/md0 level=raid5 num-devices=3 metadata=1.2 name=sca05-0a81e7a5:0 UUID=56171748:6afe951c:41c0fd2e:a0527961
   devices=/dev/md1,/dev/md2,/dev/md3

Note:

If you have other customizations in the mdadm.conf configuration file (such as updating the list of devices that are mapped to each RAID), you must manually edit the file.
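As a quick check of the regenerated file, the arrays it declares can be listed together with their RAID levels and compared with what is expected (three RAID0 arrays and one RAID5 array in this example). The following is a minimal sketch. The function name list_conf_arrays is hypothetical, and the sketch assumes that each ARRAY line carries a level= field, as in the output above.

```shell
# Hypothetical helper (not an Oracle-supplied tool).
# Reads mdadm.conf-format text on standard input and prints one line
# per ARRAY entry in the form "<device> <level>".
list_conf_arrays() {
    awk '$1 == "ARRAY" {
        level = ""
        for (i = 3; i <= NF; i++)
            if ($i ~ /^level=/)
                level = substr($i, 7)   # text after "level="
        print $2, level
    }'
}
```

On a live system this could be called as list_conf_arrays < /etc/mdadm.conf. If the file held manual customizations, keeping a copy of the previous version before regenerating it would make those edits easier to reapply.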

-