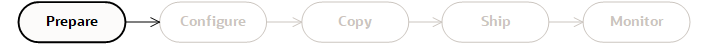

Preparing for Disk Data Transfers

Learn how to prepare for a disk-based data import job.

This topic describes the tasks associated with preparing for the Disk-Based Data Import. The Project Sponsor role typically performs these tasks. See Roles and Responsibilities.

Import Disk Requirements

You're responsible for performing the following tasks in order:

- Obtaining the required number of hard drives to migrate the data to Oracle Cloud Infrastructure. Use USB 2.0/3.0 external hard disk drives (HDD) with a single partitioned file system containing the data.

  Note: Oracle doesn't certify or test disks you intend to use for disk import jobs. Calculate the disk capacity requirements and disk I/O to decide what USB 2.0/3.0 disk works best for the data transfer needs.

- Copying the data to the HDDs following the procedures described in this import disk documentation.

- Shipping the disks to the specified Oracle data transfer site.

After the data is copied successfully to an Oracle Cloud Infrastructure Object Storage bucket, the hard drives are sanitized to remove the data before they're shipped back to you.
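The capacity calculation mentioned in the note above can be sketched in a few lines of Python. This is only an illustrative estimate: the function names, the 7% file system overhead, and the 100 MB/s sustained throughput are assumptions chosen for the example, not values from Oracle.

```python
TB = 10**12  # decimal terabyte, as used on drive labels


def disks_needed(data_bytes: int, usable_bytes_per_disk: int) -> int:
    """Return the number of disks needed to hold data_bytes (rounded up)."""
    if data_bytes < 0 or usable_bytes_per_disk <= 0:
        raise ValueError("sizes must be positive")
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-data_bytes // usable_bytes_per_disk)


def copy_hours(data_bytes: int, throughput_bytes_per_s: int) -> float:
    """Return the estimated copy time in hours at a sustained throughput."""
    return data_bytes / throughput_bytes_per_s / 3600


# Hypothetical job: 18 TB of data, 4 TB disks, 7% lost to file system overhead.
usable_per_disk = 4 * TB * 93 // 100
print("disks:", disks_needed(18 * TB, usable_per_disk))
# Hypothetical sustained write speed of 100 MB/s.
print("hours:", copy_hours(18 * TB, 100 * 10**6))
```

Measure the real throughput of your own disks and host before relying on an estimate like this.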

Installing the Data Transfer Utility

Learn about the installation of the Data Transfer Utility for running disk-based data import jobs.

This topic describes how to install and configure the Data Transfer Utility for use in disk-based data transfers. In addition, this topic describes the syntax for the Data Transfer Utility commands.

With this release, the Data Transfer Utility only supports disk-based data transfers. Use of the Data Transfer Utility for appliance-based transfers has been replaced with the Oracle Cloud Infrastructure command line interface (CLI).

The Data Transfer Utility is licensed under the Universal Permissive License 1.0 and the Apache License 2.0. Third-party content is separately licensed as described in the code.

The Data Transfer Utility must be run as the root user.

Prerequisites

Learn about prerequisites for installing the Data Transfer Utility.

To install and use the Data Transfer Utility, obtain the following:

- An Oracle Cloud Infrastructure account.

- The required Oracle Cloud Infrastructure users and groups with the required IAM policies.

  See Creating the Required IAM Users, Groups, and Policies for details.

- A Data Host machine with the following installed:

  - Oracle Linux 6 or greater, Ubuntu 14.04 or greater, or SUSE 11 or greater. All Linux operating systems must have the ability to create an EXT file system.

    Note: Windows-based machines are not supported in disk-based transfer jobs.

  - Java 1.8 or Java 1.11

  - hdparm 9.0 or later

  - Cryptsetup 1.2.0 or greater

- Firewall access: If you have a restrictive firewall in the environment where you are using the Data Transfer Utility, you may need to open your firewall configuration to allow access to the Data Transfer service as well as Object Storage. See Configuring Firewall Settings for the specific IP address ranges required for your region.
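Before installing, it can help to confirm that the tools listed above are present on the Data Host. The following Python sketch only checks that the executables are on the PATH; it does not verify the minimum versions. The function name and the injectable `which` parameter are illustrative assumptions.

```python
import shutil

# Executable names for the software prerequisites listed above.
REQUIRED_TOOLS = ("java", "hdparm", "cryptsetup")


def missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the tools that cannot be found on the PATH."""
    return [tool for tool in tools if which(tool) is None]


missing = missing_tools()
if missing:
    print("Missing prerequisites:", ", ".join(missing))
else:
    print("All prerequisite executables were found.")
```

Check the versions separately, for example with each tool's own version option.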

Installation

Download and install the Data Transfer Utility installer that corresponds to your Data Host's operating system.

Installing on Debian or Ubuntu

- Download the installation .deb file.

- Issue the apt install command as the root user that has write permissions to the /opt directory.

  sudo apt install ./dts-X.Y.Z.x86_64.deb

  X.Y.Z represents the version numbers that match the installer you downloaded.

- Confirm that the Data Transfer Utility installed successfully.

  sudo dts --version

  Your Data Transfer Utility version number is returned.

Installing on Oracle Linux or Red Hat Linux

- Download the installation .rpm file.

- Issue the yum localinstall command as the root user that has write permissions to the /opt directory.

  sudo yum localinstall ./dts-X.Y.Z.x86_64.rpm

  X.Y.Z represents the version numbers that match the installer you downloaded.

- Confirm that the Data Transfer Utility installed successfully.

  sudo dts --version

  Your Data Transfer Utility version number is returned.

Configuration

Before using the Data Transfer Utility, you must create a base Oracle Cloud Infrastructure directory and two configuration files with the required credentials. One configuration file is for the data transfer administrator, the IAM user with the authorization and permissions to create and manage transfer jobs. The other configuration file is for the data transfer upload user, the temporary IAM user that Oracle uses to upload your data on your behalf.

Base Data Transfer Directory

Create a base Oracle Cloud Infrastructure directory:

mkdir /root/.oci/

Configuration File for the Data Transfer Administrator

Create a data transfer administrator configuration file /root/.oci/config with the following structure:

[DEFAULT]

user=<The OCID for the data transfer administrator>

fingerprint=<The fingerprint of the above user's public key>

key_file=<The _absolute_ path to the above user's private key file on the host machine>

tenancy=<The OCID for the tenancy that owns the data transfer job and bucket>

region=<The region where the transfer job and bucket should exist. Valid values are: us-ashburn-1, us-phoenix-1, eu-frankfurt-1, and uk-london-1.>

For example:

[DEFAULT]

user=ocid1.user.oc1..unique_ID

fingerprint=4c:1a:6f:a1:5b:9e:58:45:f7:53:43:1f:51:0f:d8:45

key_file=/home/user/ocid1.user.oc1..unique_ID.pem

tenancy=ocid1.tenancy.oc1..unique_ID

region=us-phoenix-1

For the data transfer administrator, you can create a single configuration file that contains different profile sections with the credentials for multiple users. Then use the --profile option to specify which profile to use in the command. Here is an example of a data transfer administrator configuration file with different profile sections:

[DEFAULT]

user=ocid1.user.oc1..unique_ID

fingerprint=4c:1a:6f:a1:5b:9e:58:45:f7:53:43:1f:51:0f:d8:45

key_file=/home/user/ocid1.user.oc1..unique_ID.pem

tenancy=ocid1.tenancy.oc1..unique_ID

region=us-phoenix-1

[PROFILE1]

user=ocid1.user.oc1..unique_ID

fingerprint=4c:1a:6f:a1:5b:9e:58:45:f7:53:43:1f:51:0f:d8:45

key_file=/home/user/ocid1.user.oc1..unique_ID.pem

tenancy=ocid1.tenancy.oc1..unique_ID

region=us-ashburn-1

By default, the DEFAULT profile is used for all Data Transfer Utility commands. For example:

dts job create --compartment-id compartment_id --bucket bucket_name --display-name display_name --device-type disk

Instead, you can issue any Data Transfer Utility command with the --profile option to specify a different data transfer administrator profile. For example:

dts job create --compartment-id compartment_id --bucket bucket_name --display-name display_name --device-type disk --profile profile_name

Using the example configuration file above, the profile_name would be PROFILE1, matching the [PROFILE1] section header.
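To see how profile sections are laid out, the following Python sketch reads a configuration file with the standard `configparser` module and returns the entries for one profile. It is an illustration of the file format only, not a description of how the Data Transfer Utility itself resolves profiles. Note that `configparser` lets other sections inherit values from `[DEFAULT]`, which may differ from the utility's behavior. The `load_profile` name and the sample text are assumptions.

```python
import configparser

SAMPLE_CONFIG = """\
[DEFAULT]
user=ocid1.user.oc1..unique_ID
fingerprint=4c:1a:6f:a1:5b:9e:58:45:f7:53:43:1f:51:0f:d8:45
key_file=/home/user/ocid1.user.oc1..unique_ID.pem
tenancy=ocid1.tenancy.oc1..unique_ID
region=us-phoenix-1

[PROFILE1]
user=ocid1.user.oc1..unique_ID
fingerprint=4c:1a:6f:a1:5b:9e:58:45:f7:53:43:1f:51:0f:d8:45
key_file=/home/user/ocid1.user.oc1..unique_ID.pem
tenancy=ocid1.tenancy.oc1..unique_ID
region=us-ashburn-1
"""


def load_profile(text: str, profile: str = "DEFAULT") -> dict:
    """Return the key/value entries of one profile section."""
    # Only "=" is a delimiter, so colons inside fingerprints stay in the value.
    parser = configparser.ConfigParser(delimiters=("=",), interpolation=None)
    parser.read_string(text)
    if profile == "DEFAULT":
        return dict(parser.defaults())
    if not parser.has_section(profile):
        raise KeyError(f"profile not found: {profile}")
    return dict(parser[profile])


print(load_profile(SAMPLE_CONFIG)["region"])
print(load_profile(SAMPLE_CONFIG, "PROFILE1")["region"])
```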

Configuration File for the Data Transfer Upload User

Create the data transfer upload user configuration file /root/.oci/config_upload_user with the following structure:

[DEFAULT]

user=<The OCID for the data transfer upload user>

fingerprint=<The fingerprint of the above user's public key>

key_file=<The _absolute_ path to the above user's private key file on the host machine>

tenancy=<The OCID for the tenancy that owns the data transfer job and bucket>

region=<The region where the transfer job and bucket should exist. Valid values are: us-ashburn-1, us-phoenix-1, eu-frankfurt-1, and uk-london-1.>

For example:

[DEFAULT]

user=ocid1.user.oc1..unique_ID

fingerprint=4c:1a:6f:a1:5b:9e:58:45:f7:53:43:1f:51:0f:d8:45

key_file=/home/user/ocid1.user.oc1..unique_ID.pem

tenancy=ocid1.tenancy.oc1..unique_ID

region=us-phoenix-1

Creating an upload user configuration file with multiple profiles is not supported.

Configuration File Entries

The following table lists the basic entries that are required for each configuration file and where to get the information for each entry.

Data Transfer Service does not support passphrases on the key files for either the data transfer administrator or the data transfer upload user.

| Entry | Description and Where to Get the Value | Required? |
|---|---|---|
| user | OCID of the data transfer administrator or the data transfer upload user, depending on which profile you are creating. To get the value, see Required Keys and OCIDs. | Yes |
| fingerprint | Fingerprint for the key pair being used. To get the value, see Required Keys and OCIDs. | Yes |
| key_file | Full path and filename of the private key. Important: The key pair must be in PEM format. For instructions on generating a key pair in PEM format, see Required Keys and OCIDs. | Yes |
| tenancy | OCID of your tenancy. To get the value, see Required Keys and OCIDs. | Yes |
| region | An Oracle Cloud Infrastructure region. See Regions and Availability Domains. Data transfer is supported in the regions listed as valid values in the configuration file structure above. | Yes |

You can verify the data transfer upload user credentials using the following command:

dts job verify-upload-user-credentials --bucket bucket_name

Configuration File Location

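The configuration files are located in the /root/.oci/ directory: /root/.oci/config for the data transfer administrator and /root/.oci/config_upload_user for the data transfer upload user.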
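The required entries described above can be checked before running any command. The Python sketch below is a hypothetical pre-flight check, not part of the Data Transfer Utility. It assumes the fingerprint has the 16 colon-separated hex pairs shown in the examples, and it treats key_file as a Linux path.

```python
import posixpath
import re

REQUIRED_KEYS = ("user", "fingerprint", "key_file", "tenancy", "region")
# Assumption: fingerprints look like the examples, 16 hex pairs joined by colons.
FINGERPRINT_RE = re.compile(r"^([0-9a-f]{2}:){15}[0-9a-f]{2}$")


def validate_entries(entries: dict) -> list:
    """Return a list of human-readable problems; empty means no issue found."""
    problems = []
    for key in REQUIRED_KEYS:
        if not entries.get(key):
            problems.append(f"missing entry: {key}")
    fingerprint = entries.get("fingerprint", "")
    if fingerprint and not FINGERPRINT_RE.match(fingerprint.lower()):
        problems.append("fingerprint is not 16 colon-separated hex pairs")
    key_file = entries.get("key_file", "")
    if key_file and not posixpath.isabs(key_file):
        problems.append("key_file is not an absolute path")
    return problems


example = {
    "user": "ocid1.user.oc1..unique_ID",
    "fingerprint": "4c:1a:6f:a1:5b:9e:58:45:f7:53:43:1f:51:0f:d8:45",
    "key_file": "/home/user/ocid1.user.oc1..unique_ID.pem",
    "tenancy": "ocid1.tenancy.oc1..unique_ID",
    "region": "us-phoenix-1",
}
print(validate_entries(example))
```

A check like this catches typing mistakes only. The dts job verify-upload-user-credentials command remains the way to confirm that the credentials actually work.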

Using the Data Transfer Utility

This section provides an overview of the syntax for the Data Transfer Utility.

The Data Transfer Utility must be run as the root user.

You can specify Data Transfer Utility command options using either of the following forms:

--option value

--option=value

Syntax

The basic Data Transfer Utility syntax is:

dts resource action [options]

This syntax is applied to the following:

- dts is the shortened utility command name
- job is an example of a <resource>
- create is an example of an <action>
- Other utility strings are [options]

The following examples show typical Data Transfer Utility commands to create a transfer job.

dts job create --compartment-id ocid.compartment.oc1..exampleuniqueID --display-name "mycompany transfer1" --bucket mybucket --device-type disk

Or:

dts job create --compartment-id=ocid.compartment.oc1..exampleuniqueID --display-name="mycompany transfer1" --bucket=mybucket --device-type=disk

In the previous examples, provide a friendly name for the transfer job using the --display-name option.
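When commands are assembled by a script, the two option forms can be produced from the same data. The following Python sketch builds the argument list for the syntax described above. The `build_dts_command` helper is a hypothetical name introduced for illustration, and the sketch only prints the command rather than running it.

```python
import shlex


def build_dts_command(resource, action, options, use_equals=False):
    """Build an argument list of the form: dts resource action [options]."""
    argv = ["dts", resource, action]
    for name, value in options.items():
        if use_equals:
            argv.append(f"--{name}={value}")          # --option=value form
        else:
            argv.extend([f"--{name}", str(value)])    # --option value form
    return argv


options = {
    "compartment-id": "ocid.compartment.oc1..exampleuniqueID",
    "display-name": "mycompany transfer1",
    "bucket": "mybucket",
    "device-type": "disk",
}
# shlex.join quotes values that contain spaces, such as the display name.
print(shlex.join(build_dts_command("job", "create", options)))
print(shlex.join(build_dts_command("job", "create", options, use_equals=True)))
```

Passing the list directly to a process launcher, rather than a joined string, avoids quoting problems with display names that contain spaces.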

Finding Out the Installed Version of the Data Transfer Utility

You can get the installed version of the Data Transfer Utility using --version or -v. For example:

dts --version

0.6.183

Accessing Data Transfer Utility Help

All Data Transfer Utility commands have an associated help component you can access from the command line. To view the help, enter any command followed by the --help or -h option. For example:

dts job --help

Usage: job [COMMAND]

Transfer disk or appliance job operations - {job action [options]}

Commands:

create Creates a new transfer disk or appliance job.

show Shows the transfer disk or appliance job details.

update Updates the transfer disk or appliance job details.

delete Deletes the transfer disk or appliance job.

close Closes the transfer disk or appliance job.

list Lists all transfer disk or appliance jobs.

verify-upload-user-credentials Verifies the transfer disk or appliance upload user credentials.

When you run the help option (--help or -h) for a specified command, all the subordinate commands and options for that level of the Data Transfer Utility are displayed. If you want to access the Data Transfer Utility help for a specific subordinate command, include it in the Data Transfer Utility string, for example:

dts job create --help

Usage: job create --bucket=<bucket> --compartment-id=<compartmentId>

[--defined-tags=<definedTags>] --device-type=<deviceType>

--display-name=<displayName>

[--freeform-tags=<freeformTags>] [--profile=<profile>]

Creates a new transfer disk or appliance job.

--bucket=<bucket> Upload bucket for the job.

--compartment-id=<compartmentId> Compartment OCID.

--defined-tags=<definedTags> Defined tags for the new transfer job in JSON format.

--device-type=<deviceType> Device type for the job: DISK or APPLIANCE.

--display-name=<displayName> Display name for the job.

--freeform-tags=<freeformTags> Free-form tags for the new transfer job in JSON format.

--profile=<profile> Profile.

Creating the Required IAM Users, Groups, and Policies

Each service in Oracle Cloud Infrastructure integrates with IAM for authentication and authorization.

To use Oracle Cloud Infrastructure, you must be given the required type of access in a policy written by an administrator, whether you're using the Console or the REST API with an SDK, CLI, or other tool. If you try to perform an action and get a message that you don't have permission or are unauthorized, confirm with your administrator the type of access you've been granted and which compartment you should work in.

Access to resources is provided to groups using policies and then inherited by the users that are assigned to those groups. Data transfer requires the creation of two distinct groups:

-

Data transfer administrators who can create and manage transfer jobs.

-

Data transfer upload users who can upload data to Object Storage. For your data security, the permissions for upload users allow Oracle personnel to upload standard and multi-part objects on your behalf and inspect bucket and object metadata. The permissions do not allow Oracle personnel to inspect the actual data.

The Data Administrator is responsible for generating the required RSA keys needed for the temporary upload users. These keys should never be shared between users.

For details on creating groups, see Managing Groups.

An administrator creates these groups with the following policies:

- The data transfer administrator group requires an authorization policy that includes the following:

  Allow group group_name to manage data-transfer-jobs in compartment compartment_name

  Allow group group_name to manage objects in compartment compartment_name

  Allow group group_name to manage buckets in compartment compartment_name

  Alternatively, you can consolidate the manage buckets and manage objects policies into the following:

  Allow group group_name to manage object-family in compartment compartment_name

- The data transfer upload user group requires an authorization policy that includes the following:

  Allow group group_name to manage buckets in compartment compartment_name where all { request.permission='BUCKET_READ', target.bucket.name='<bucket_name>' }

  Allow group group_name to manage objects in compartment compartment_name where all { target.bucket.name='<bucket_name>', any { request.permission='OBJECT_CREATE', request.permission='OBJECT_OVERWRITE', request.permission='OBJECT_INSPECT' }}
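Because the policy statements above differ only in the group, compartment, and bucket names, they can be generated from a template. The Python sketch below fills in the statements exactly as written above. The function names are hypothetical, and the output is meant to be pasted into a policy, not applied automatically.

```python
def admin_policies(group: str, compartment: str, consolidate: bool = False) -> list:
    """Return the policy statements for the data transfer administrator group."""
    prefix = f"Allow group {group} to manage"
    suffix = f"in compartment {compartment}"
    statements = [f"{prefix} data-transfer-jobs {suffix}"]
    if consolidate:
        # object-family replaces the separate objects and buckets statements.
        statements.append(f"{prefix} object-family {suffix}")
    else:
        statements.append(f"{prefix} objects {suffix}")
        statements.append(f"{prefix} buckets {suffix}")
    return statements


def upload_user_policies(group: str, compartment: str, bucket: str) -> list:
    """Return the policy statements for the data transfer upload user group."""
    base = f"Allow group {group} to manage"
    scope = f"in compartment {compartment} where all"
    return [
        f"{base} buckets {scope} {{ request.permission='BUCKET_READ', "
        f"target.bucket.name='{bucket}' }}",
        f"{base} objects {scope} {{ target.bucket.name='{bucket}', any {{ "
        "request.permission='OBJECT_CREATE', request.permission='OBJECT_OVERWRITE', "
        "request.permission='OBJECT_INSPECT' }}",
    ]


for statement in admin_policies("group_name", "compartment_name"):
    print(statement)
for statement in upload_user_policies("group_name", "compartment_name", "bucket_name"):
    print(statement)
```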

To enable notifications, add the following policies:

Allow group group_name to manage ons-topics in tenancy

Allow group group_name to manage ons-subscriptions in tenancy

Allow group group_name to manage cloudevents-rules in tenancy

Allow group group_name to inspect compartments in tenancy

See Notifications and Overview of Events for more information.

The Oracle Cloud Infrastructure administrator then adds a user to each of the data transfer groups created. For details on creating users, see Managing Users.

For security reasons, we recommend that you create a unique IAM data transfer upload user for each transfer job and then delete that user once your data is uploaded to Oracle Cloud Infrastructure.

Creating Object Storage Buckets

The Object Storage service is used to upload your data to Oracle Cloud Infrastructure. Object Storage stores objects in a container called a bucket within a compartment in your tenancy. For details on creating the bucket to store uploaded data, see Object Storage Buckets.

Configuring Firewall Settings

The firewall port number is 443 for all data transfer methods.

Ensure that your local environment's firewall can communicate with the Data Transfer Service running on the IP address ranges for your Oracle Cloud Infrastructure region based on the following table. Also ensure that open access exists to the Object Storage IP address range. You only need to configure this IP access for the region where your data transfer job is associated.

| Region | Data Transfer | Object Storage |
|---|---|---|
| US East (Ashburn) | 140.91.0.0/16 | 134.70.24.0/21 |
| US West (Phoenix) | 129.146.0.0/16 | 134.70.8.0/21 |
| US Gov East (Ashburn) | splat-api.us-langley-1.oraclegovcloud.com | objectstorage.us-gov-ashburn-1.oraclegovcloud.com |
| US Gov West (Phoenix) | splat-api.us-luke-1.oraclegovcloud.com | objectstorage.us-luke-1.oraclegovcloud.com |
| US DoD East (Ashburn) | splat-api.us-gov-ashburn-1.oraclegovcloud.com | objectstorage.us-gov-ashburn-1.oraclegovcloud.com |
| US DoD West (Phoenix) | splat-api.us-gov-phoenix-1.oraclegovcloud.com | objectstorage.us-gov-phoenix-1.oraclegovcloud.com |
| Brazil East (Sao Paulo) | 140.204.0.0/16 | 134.70.84.0/22 |
| Canada Southeast (Toronto) | 140.204.0.0/16 | 134.70.116.0/22 |
| Germany Central (Frankfurt) | 130.61.0.0/16 | 134.70.40.0/21 |
| India West (Mumbai) | 140.204.0.0/16 | 134.70.76.0/22 |
| Japan Central (Osaka) | 140.204.0.0/16 | 134.70.112.0/22 |
| Japan East (Tokyo) | 140.204.0.0/16 | 134.70.80.0/22 |
| South Korea Central (Seoul) | 140.204.0.0/16 | 134.70.96.0/22 |
| UK South (London) | 132.145.0.0/16 | 134.70.56.0/21 |
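When reviewing firewall logs, it can be useful to check whether a destination address falls inside one of the ranges in the table. The Python sketch below uses the standard `ipaddress` module with a few of the CIDR ranges copied from the table. The `service_for_address` helper and the sample addresses are illustrative assumptions.

```python
import ipaddress

# (Data Transfer range, Object Storage range) per region, copied from the table.
REGION_RANGES = {
    "US East (Ashburn)": ("140.91.0.0/16", "134.70.24.0/21"),
    "US West (Phoenix)": ("129.146.0.0/16", "134.70.8.0/21"),
    "Germany Central (Frankfurt)": ("130.61.0.0/16", "134.70.40.0/21"),
    "UK South (London)": ("132.145.0.0/16", "134.70.56.0/21"),
}


def service_for_address(region: str, address: str):
    """Return which service range contains the address, or None if neither."""
    data_transfer, object_storage = REGION_RANGES[region]
    ip = ipaddress.ip_address(address)
    if ip in ipaddress.ip_network(data_transfer):
        return "Data Transfer"
    if ip in ipaddress.ip_network(object_storage):
        return "Object Storage"
    return None


# Hypothetical destination addresses taken from a firewall log.
for candidate in ("140.91.10.5", "134.70.31.255", "134.70.32.1"):
    print(candidate, "->", service_for_address("US East (Ashburn)", candidate))
```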

Creating Transfer Jobs

This section describes how to create a disk-based transfer job as part of the preparation for the data transfer. See Disk Import Transfer Jobs for complete details on all tasks related to transfer jobs.

You can use the Console or the Data Transfer Utility to create a transfer job.

A disk-based transfer job represents the collection of files that you want to transfer and signals the intention to upload those files to Oracle Cloud Infrastructure. A disk-based transfer job combines at least one transfer disk with a transfer package. Identify the compartment and Object Storage bucket to which Oracle uploads your data. Create the disk-based transfer job in the same compartment as the upload bucket and supply a human-readable name for the transfer job.

We recommend that you create a compartment for each transfer job to minimize the required access to your tenancy.

Creating a transfer job returns a job ID that you specify in other transfer tasks. For example:

ocid1.datatransferjob.region1.phx..exampleuniqueID

Using the Console

- Open the navigation menu and click Migration & Disaster Recovery. Under Data Transfer, click Imports. The Transfer Jobs page appears.

- Choose a Compartment you have permission to work in under List scope. All transfer jobs in that compartment are listed in tabular form.

- Click Create Transfer Job. The Create Transfer Job dialog box appears.

- Complete the following:

  - Job Name: Enter a name for the transfer job.

  - Bucket: Select the bucket that contains the transfer data from the list. All available buckets for the selected compartment are listed. If you want to select a bucket in a different compartment, click Change Compartment and select the compartment that contains the bucket you want.

  - Transfer Type Device: Select the Disk option.

  - (Optional) Complete the tagging settings:

    - Tag namespace: Select a namespace from the list.

    - Tag key: Enter a tagging key.

    - Tag value: Enter a value for the tagging key.

    See Overview of Tagging for more information.

- Click Create Transfer Job.

Using the Data Transfer Utility

Use the dts job create command and required parameters to create a transfer job.

dts job create --bucket bucket --compartment-id compartment_id --display-name display_name

display_name is the name of the transfer job.

Run dts job create --help to view the complete list of flags and variable options.

For example:

dts job create --bucket MyBucket1 --compartment-id ocid.compartment.oc1..exampleuniqueID --display-name MyDiskImportJob

Transfer Job :

ID : ocid1.datatransferjob.oc1..exampleuniqueID

CompartmentId : ocid.compartment.oc1..exampleuniqueID

UploadBucket : MyBucket1

Name : MyDiskImportJob

Label : JZM9PAVWH

CreationDate : 2019/06/04 17:07:05 EDT

Status : PREPARING

freeformTags : *** none ***

definedTags : *** none ***

Packages :

[1] :

Label : PBNZOX9RU

TransferSiteShippingAddress : Oracle Data Transfer Service; Job:JZM9PAVWH Package:PBNZOX9RU ; 21111 Ridgetop Circle; Dock B; Sterling, VA 20166; USA

DeliveryVendor : FedEx

DeliveryTrackingNumber : *** none ***

ReturnDeliveryTrackingNumber : *** none ***

Status : PREPARING

Devices : [*** none ***]

UnattachedDevices : [*** none ***]

Appliances : [*** none ***]

When you use the dts job show command to display the details of a job, tagging details are also included in the output if you specified tags.

Optionally, you can specify one or more defined or free-form tags when you create a transfer job. For more information about tagging, see Resource Tags.

Defined Tags

To specify defined tags when creating a job:

dts job create --bucket bucket --compartment-id compartment_id --display-name display_name --defined-tags '{ "tag_namespace": { "tag_key":"value" }}'

For example:

dts job create --bucket MyBucket1 --compartment-id ocid.compartment.oc1..exampleuniqueID --display-name MyDiskImportJob --defined-tags '{"Operations": {"CostCenter": "01"}}'

Transfer Job :

ID : ocid1.datatransferjob.oc1..exampleuniqueID

CompartmentId : ocid.compartment.oc1..exampleuniqueID

UploadBucket : MyBucket1

Name : MyDiskImportJob

Label : JZM9PAVWH

CreationDate : 2019/06/04 17:07:05 EDT

Status : PREPARING

freeformTags : *** none ***

definedTags :

Operations :

CostCenter : 01

Packages :

[1] :

Label : PBNZOX9RU

TransferSiteShippingAddress : Oracle Data Transfer Service; Job:JZM9PAVWH Package:PBNZOX9RU ; 21111 Ridgetop Circle; Dock B; Sterling, VA 20166; USA

DeliveryVendor : FedEx

DeliveryTrackingNumber : *** none ***

ReturnDeliveryTrackingNumber : *** none ***

Status : PREPARING

Devices : [*** none ***]

UnattachedDevices : [*** none ***]

Appliances : [*** none ***]

When you use the dts job show command to display the details of a job, tagging details are also included in the output if you specified tags.

Users create tag namespaces and tag keys with the required permissions. These items must exist before you can specify them when creating a job. See Tags and Tag Namespace Concepts for details.
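The tag options take JSON strings, which are easy to get wrong when typed by hand. The following Python sketch produces the JSON for the --defined-tags and --freeform-tags options with the standard `json` module. The helper names are hypothetical, and the tag values are the ones used in this topic's examples.

```python
import json
import shlex


def defined_tags_arg(namespace: str, tags: dict) -> str:
    """Return JSON of the form {"tag_namespace": {"tag_key": "value"}}."""
    return json.dumps({namespace: tags})


def freeform_tags_arg(tags: dict) -> str:
    """Return JSON of the form {"tag_key": "value"}."""
    return json.dumps(tags)


argv = [
    "dts", "job", "create",
    "--bucket", "MyBucket1",
    "--compartment-id", "ocid.compartment.oc1..exampleuniqueID",
    "--display-name", "MyDiskImportJob",
    "--defined-tags", defined_tags_arg("Operations", {"CostCenter": "01"}),
]
# shlex.join wraps the JSON in single quotes so the shell passes it unchanged.
print(shlex.join(argv))
print(freeform_tags_arg({"Pittsburg_Team": "brochures"}))
```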

To specify freeform tags when creating a job:

dts job create --bucket bucket --compartment-id compartment_id --display-name display_name --freeform-tags '{ "tag_key":"value" }'

For example:

dts job create --bucket MyBucket1 --compartment-id ocid.compartment.oc1..exampleuniqueID --display-name MyDiskImportJob --freeform-tags '{"Pittsburg_Team":"brochures"}'

Transfer Job :

ID : ocid1.datatransferjob.oc1..exampleuniqueID

CompartmentId : ocid.compartment.oc1..exampleuniqueID

UploadBucket : MyBucket1

Name : MyDiskImportJob

Label : JZM9PAVWH

CreationDate : 2019/06/04 17:07:05 EDT

Status : PREPARING

freeformTags :

Pittsburg_Team : brochures

definedTags : *** none ***

Packages :

[1] :

Label : PBNZOX9RU

TransferSiteShippingAddress : Oracle Data Transfer Service; Job:JZM9PAVWH Package:PBNZOX9RU ; 21111 Ridgetop Circle; Dock B; Sterling, VA 20166; USA

DeliveryVendor : FedEx

DeliveryTrackingNumber : *** none ***

ReturnDeliveryTrackingNumber : *** none ***

Status : PREPARING

Devices : [*** none ***]

UnattachedDevices : [*** none ***]

Appliances : [*** none ***]

When you use the dts job show command to display the details of a job, tagging details are also included in the output if you specified tags.

To specify multiple tags, comma separate the JSON-formatted key/value pairs:

dts job create --bucket bucket --compartment-id compartment_id --display-name display_name --device-type disk --freeform-tags '{ "tag_key":"value" }', '{ "tag_key":"value" }'

Getting a Transfer Job's OCID

Each transfer job you create has a unique OCID within Oracle Cloud Infrastructure. For example:

ocid1.datatransferjob.region1.phx..unique_ID

You will need to forward this transfer job OCID to the Data Administrator.

Using the Console

- Open the navigation menu and click Migration & Disaster Recovery. Under Data Transfer, click Imports. The Transfer Jobs page appears.

- Choose a Compartment you have permission to work in under List scope. All transfer jobs in that compartment are listed in tabular form.

- Click the transfer job whose OCID you want to get. The transfer job's Details page appears.

- Find the OCID field and click Show to display it or Copy to copy it to your computer.

Using the Data Transfer Utility

Use the dts job list command and required parameters to list the transfer jobs in your compartment. Here you can view the job OCID.

dts job list --compartment-id compartment_id

Run dts job list --help to view the complete list of flags and variable options.

For example:

dts job list --compartment-id ocid.compartment.oc1..exampleuniqueID

Transfer Job List :

[1] :

ID : ocid1.datatransferjob.oc1..exampleuniqueID

Name : MyDiskImportJob

Label : JVWK5YWPU

BucketName : MyBucket1

CreationDate : 2020/06/01 17:33:16 EDT

Status : INITIATED

FreeformTags : *** none ***

DefinedTags :

Financials :

key1 : nondefault

The ID for each transfer job is returned:

ID : ocid1.datatransferjob.oc1..exampleuniqueID

When you create a transfer job using the dts job create command, the transfer job ID is displayed in the command's output.

Creating Upload Configuration Files

The Project Sponsor is responsible for creating or obtaining configuration files that allow the uploading of user data to the transfer appliance. Send these configuration files to the Data Administrator where they can be placed in the Data Host. The config file is for the data transfer administrator, the IAM user with the authorization and permissions to create and manage transfer jobs. The config_upload_user file is for the data transfer upload user, the temporary IAM user that Oracle uses to upload your data on your behalf.

Create a base Oracle Cloud Infrastructure directory and two configuration files with the required credentials.

Creating the Data Transfer Directory

Create an Oracle Cloud Infrastructure directory (.oci) on the same Data Host where the CLI is installed. For example:

mkdir /root/.oci/

The two configuration files (config and config_upload_user) are placed in this directory.

Creating the Data Transfer Administrator Configuration File

Create the data transfer administrator configuration file /root/.oci/config with the following structure:

[DEFAULT]

user=<The OCID for the data transfer administrator>

fingerprint=<The fingerprint of the above user's public key>

key_file=<The _absolute_ path to the above user's private key file on the host machine>

tenancy=<The OCID for the tenancy that owns the data transfer job and bucket>

region=<The region where the transfer job and bucket should exist. Valid values are: supported regions>

where supported regions are the regions listed in Data Transfer Supported Regions.

For example:

[DEFAULT]

user=ocid1.user.oc1..exampleuniqueID

fingerprint=4c:1a:6f:a1:5b:9e:58:45:f7:53:43:1f:51:0f:d8:45

key_file=/home/user/ocid1.user.oc1..exampleuniqueID.pem

tenancy=ocid1.tenancy.oc1..exampleuniqueID

region=us-phoenix-1

For the data transfer administrator, you can create a single configuration file that contains different profile sections with the credentials for multiple users. Then use the --profile option to specify which profile to use in the command.

Here is an example of a data transfer administrator configuration file with different profile sections:

[DEFAULT]

user=ocid1.user.oc1..exampleuniqueID

fingerprint=4c:1a:6f:a1:5b:9e:58:45:f7:53:43:1f:51:0f:d8:45

key_file=/home/user/ocid1.user.oc1..exampleuniqueID.pem

tenancy=ocid1.tenancy.oc1..exampleuniqueID

region=us-phoenix-1

[PROFILE1]

user=ocid1.user.oc1..exampleuniqueID

fingerprint=4c:1a:6f:a1:5b:9e:58:45:f7:53:43:1f:51:0f:d8:45

key_file=/home/user/ocid1.user.oc1..exampleuniqueID.pem

tenancy=ocid1.tenancy.oc1..exampleuniqueID

region=us-ashburn-1

By default, the DEFAULT profile is used for all CLI commands. For example:

oci dts job create --compartment-id ocid.compartment.oc1..exampleuniqueID --bucket MyBucket --display-name MyDisplay --device-type disk

Instead, you can issue any CLI command with the --profile option to specify a different data transfer administrator profile. For example:

oci dts job create --compartment-id ocid.compartment.oc1..exampleuniqueID --bucket MyBucket --display-name MyDisplay --device-type disk --profile MyProfile

Using the example configuration file above, the profile name would be PROFILE1, matching the [PROFILE1] section header.

If you created two separate configuration files, use the following command to specify the configuration file to use:

oci dts job create --compartment-id compartment_id --bucket bucket_name --display-name display_name

Creating the Data Transfer Upload User Configuration File

The config_upload_user configuration file is for the data transfer upload user, the temporary IAM user that Oracle uses to upload your data on your behalf. Create this configuration file with the following structure:

[DEFAULT]

user=<The OCID for the data transfer upload user>

fingerprint=<The fingerprint of the above user's public key>

key_file=<The _absolute_ path to the above user's private key file on the host machine>

tenancy=<The OCID for the tenancy that owns the data transfer job and bucket>

region=<The region where the transfer job and bucket should exist. Valid values are: supported regions>

where supported regions are the regions listed in Data Transfer Supported Regions.

Configuration File Entries

The following table lists the basic entries that are required for each configuration file and where to get the information for each entry.

Data Transfer Service does not support passphrases on the key files for either the data transfer administrator or the data transfer upload user.

| Entry | Description and Where to Get the Value | Required? |
|---|---|---|
| user | OCID of the data transfer administrator or the data transfer upload user, depending on which profile you are creating. To get the value, see Required Keys and OCIDs. | Yes |
| fingerprint | Fingerprint for the key pair being used. To get the value, see Required Keys and OCIDs. | Yes |
| key_file | Full path and filename of the private key. Important: The key pair must be in PEM format. For instructions on generating a key pair in PEM format, see Required Keys and OCIDs. | Yes |
| tenancy | OCID of your tenancy. To get the value, see Required Keys and OCIDs. | Yes |
| region | An Oracle Cloud Infrastructure region. See Regions and Availability Domains. Data transfer is supported in the regions listed in Data Transfer Supported Regions. | Yes |

You can verify the data transfer upload user credentials using the following command:

dts job verify-upload-user-credentials --bucket bucket_name

Creating Transfer Packages

A transfer package is the virtual representation of the physical disk package that you are shipping to Oracle for upload to Oracle Cloud Infrastructure. See Transfer Packages for complete details on all tasks related to transfer packages.

Creating a transfer package requires the job ID returned when you created the transfer job. For example:

ocid1.datatransferjob.region1.phx..exampleuniqueID

Using the Console

- Open the navigation menu and click Migration & Disaster Recovery. Under Data Transfer, click Imports. The Transfer Jobs page appears.

- Choose a Compartment you have permission to work in under List scope. All transfer jobs in that compartment are listed in tabular form.

- Click the transfer job for which you want to create a transfer package. The transfer job's Details page appears.

- Click Transfer Packages under Resources. The Transfer Packages page appears. All transfer packages are listed in tabular form.

- Click Create Transfer Package. The Create Transfer Package dialog box appears.

- Select a shipping vendor from the Shipping Carrier list.

- Click Create Transfer Package.

The Transfer Packages page displays the transfer package you just created.

Using the Data Transfer Utility

Use the dts package create command and required parameters to create a transfer package.

dts package create --job-id job_id

Run dts package create --help to view the complete list of flags and variable options.

For example:

dts package create --job-id ocid1.datatransferjob.oc1..exampleuniqueID

Transfer Package :

Label :

TransferSiteShippingAddress :

DeliveryVendor :

DeliveryTrackingNumber :

ReturnDeliveryTrackingNumber :

Status :

Devices :

Getting Transfer Package Labels

Using the Console

-

Open the navigation menu and click Migration & Disaster Recovery. Under Data Transfer, click Imports. The Transfer Jobs page appears.

-

Choose a Compartment you have permission to work in under List scope. All transfer jobs in that compartment are listed in tabular form.

-

Click the transfer job for which you want to get a transfer package's label. The transfer job's Details page appears.

-

Click Transfer Packages under Resources. The Transfer Packages page appears. All transfer packages are listed in tabular form.

-

View the Labels column for a list of transfer package labels.

Using the Data Transfer Utility

Use the dts job show command and required parameters to display the details of a transfer job in your compartment.

dts job show --job-id job_id

Run dts job show --help to view the complete list of flags and variable options.

For example:

dts job show --job-id ocid1.datatransferjob.oc1..exampleuniqueID

Transfer Job :

ID : ocid1.datatransferjob.oc1..exampleuniqueID

CompartmentId : ocid1.compartment.oc1..exampleuniqueID

UploadBucket : MyBucket1

Name : MyDiskImportJob

Label : JZM9PAVWH

CreationDate : 2019/06/04 17:07:05 EDT

Status : PREPARING

freeformTags : *** none ***

definedTags : *** none ***

Packages :

[1] :

Label : PBNZOX9RU

TransferSiteShippingAddress : Oracle Data Transfer Service; Job:JZM9PAVWH Package:PBNZOX9RU ; 21111 Ridgetop Circle; Dock B; Sterling, VA 20166; USA

DeliveryVendor : FedEx

DeliveryTrackingNumber : *** none ***

ReturnDeliveryTrackingNumber : *** none ***

Status : PREPARING

Devices : [*** none ***]

UnattachedDevices : [*** none ***]

Appliances : [*** none ***]

The transfer package label is displayed as part of the job details.
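If you need the package labels in a script, one possible approach is to filter the text that dts job show prints. The following is a minimal sketch, not part of the Data Transfer Utility: package_labels is a hypothetical helper name, and the filter assumes the output has the same shape as the sample above (a "Packages :" line followed by one "Label : value" line per package). If your version of the utility prints a different layout, adjust the patterns accordingly.

```shell
#!/bin/sh
# Print the label of every transfer package found in `dts job show` output.
# Assumption: the output matches the sample in this topic, where package
# entries follow a "Packages :" line and each has a "Label : VALUE" line.
# The job's own "Label :" line appears before "Packages :" and is skipped.
package_labels() {
  awk '
    /^[ \t]*Packages[ \t]*:/ { in_packages = 1; next }
    in_packages && /^[ \t]*Label[ \t]*:/ {
      sub(/^[^:]*:[ \t]*/, "")   # drop the "Label :" field name
      sub(/[ \t]+$/, "")         # drop trailing whitespace
      print
    }
  '
}

# Hypothetical usage (job_id is a placeholder for your transfer job OCID):
#   dts job show --job-id job_id | package_labels
```

The helper reads from standard input, so you can also run it against output that you saved to a file earlier.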

Getting Shipping Labels

You can find the shipping address in the transfer package details. Use this information to get a shipping label for the transfer package that is used to send the disk to Oracle.

After getting the shipping address from the Console or Data Transfer Utility, go to the supported carrier you are using (UPS, FedEx, or DHL) and manually create both the SHIP TO ORACLE and RETURN TO CUSTOMER labels. See Shipping Import Disks and Monitoring the Import Disk Shipment and Data Transfer for more information.
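When filling in a carrier's label form, it can help to see the shipping address one component per line. The following is a rough sketch under stated assumptions: shipping_address_lines is a hypothetical helper name, not a Data Transfer Utility command, and it assumes the package details contain a TransferSiteShippingAddress line whose parts are separated by semicolons, as in the sample dts package show output in this topic.

```shell
#!/bin/sh
# Print the transfer site shipping address, one component per line.
# Assumption: the package details contain a line of the form
#   TransferSiteShippingAddress : part1; part2; ...
# as in the sample output in this topic.
shipping_address_lines() {
  awk '
    /^[ \t]*TransferSiteShippingAddress[ \t]*:/ {
      sub(/^[^:]*:[ \t]*/, "")                 # drop the field name
      n = split($0, parts, /[ \t]*;[ \t]*/)    # split on ";" and trim spaces
      for (i = 1; i <= n; i++)
        if (parts[i] != "") print parts[i]
    }
  '
}

# Hypothetical usage (job_id and package_label are placeholders):
#   dts package show --job-id job_id --package-label package_label | shipping_address_lines
```

Keep the "Job:" and "Package:" component on the label exactly as the utility prints it, because it carries the job and package labels for the shipment.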

Using the Console

-

Open the navigation menu and click Migration & Disaster Recovery. Under Data Transfer, click Imports. The Transfer Jobs page appears.

-

Choose a Compartment you have permission to work in under List scope. All transfer jobs in that compartment are listed in tabular form.

-

Click the transfer job for which you want to see the details. The transfer job's Details page appears.

-

Click Transfer Packages under Resources. The Transfer Packages page appears. All transfer packages are listed in tabular form.

-

Click the transfer package whose details you want to get. The transfer package's Details page appears.

-

Get the shipping label.

Using the Data Transfer Utility

Use the dts package show command and required parameters to get the shipping label for a transfer package.

dts package show --job-id job_id --package-label package_label

Run dts package show --help to view the complete list of flags and variable options.

For example:

dts package show --job-id ocid1.datatransferjob.oc1..exampleuniqueID --package-label PWA8O67MI

Transfer Package :

Label : PWA8O67MI

TransferSiteShippingAddress : Oracle Data Transfer Service; Job:JZM9PAVWH Package:PWA8O67MI ; 21111 Ridgetop Circle; Dock B; Sterling, VA 20166; USA

DeliveryVendor : *** none ***

DeliveryTrackingNumber : *** none ***

ReturnDeliveryTrackingNumber : *** none ***

Status : PREPARING

Devices : [*** none ***]

Notifying the Data Administrator

When you have completed all the tasks in this topic, provide the Data Administrator with the following:

-

IAM login credentials

-

Data Transfer Utility configuration files

-

Transfer job ID

-

Package label

What's Next

You are now ready to configure your system for the data transfer. See Configuring Import Disk Data Transfers.