Query Apache Iceberg Tables

Autonomous AI Database supports querying Apache Iceberg tables.

- About Querying Apache Iceberg Tables

Autonomous AI Database supports querying of Apache Iceberg tables. - Concepts Related to Querying Apache Iceberg Tables

An understanding of the following concepts is helpful for querying Apache Iceberg tables. - Setup Requirements for Iceberg

This topic outlines the setup requirements for using Iceberg with Oracle Autonomous AI Database. - Typical Workflow of Querying Apache Iceberg Tables

Before you begin querying Apache Iceberg Tables, you should be familiar with it's workflow. This section explains how to set up external tables to access data presented as an end-to-end setup workflow with five main steps. - Provider Quickstarts

This chapter describes the process for setting up external data access with different cloud data providers. - References

This section provides a list of references for links cited throughout this chapter:

Parent topic: Query External Data with Autonomous AI Database

About Querying Apache Iceberg Tables

Autonomous AI Database supports querying of Apache Iceberg tables.

Supported Configurations

Here is the compatibility matrix of supported configuration:

| Catalog | Object store(s) | Catalog Auth (REST) | Storage auth | Notes |

|---|---|---|---|---|

| Unity (Databricks) | Amazon S3, Azure ADLS Gen2 | OAuth2 service principal (/oidc/v1/token) - recommended; PAT - quick tests | S3 access/secret key; ADLS Gen2 SAS key |

|

| Polaris (Snowflake) | Amazon S3, Azure ADLS Gen2 | OAuth2 (client credentials) or Polaris-supported token | S3 access/secret key; ADLS Gen2 SAS key | Object store credential vending is not supported. |

| AWS Glue | Amazon S3 | N/A (uses AWS account auth) | S3 access/secret key; | Object store credential vending is not supported. Same credentials must be used for S3 and Glue. S3 and Glue must be in the same AWS region. |

| JSON metadata (non-catalog option) | Amazon S3, Azure ADLS Gen2, OCI Object Store | N/A (no REST) | S3 access/secret key; ADLS Gen2 SAS key, OCI Native Credentials | Point ADB to the table’s metadata.json (root manifest). Point-in-time snapshot; recreate external table after schema change or new snapshot.

|

| Hadoop (non-Catalog) | OCI Object Storage | N/A (no REST) | OCI native credentials | Points to a lakehouse folder that contains data and metadata files. |

Delta Lake UniForm Tables

Autonomous AI Database Serverless can also query Delta Lake tables that have UniForm enabled (formerly known as Delta Lake Universal Format). UniForm writes Apache Iceberg metadata for the Delta table, so you can create an external table in Autonomous Database against that Iceberg metadata (via an Iceberg catalog or the Iceberg root metadata file) and query it like any other Iceberg table. The same Iceberg limitations on this page still apply. See the Delta Lake Documentation for enabling UniForm.

- Restrictions of Querying Apache Iceberg Tables

This chapter lists restrictions of querying Apache Iceberg tables.

Parent topic: Query Apache Iceberg Tables

Restrictions of Querying Apache Iceberg Tables

This chapter lists restrictions of querying Apache Iceberg tables.

-

Unity native Iceberg (REST): Not supported.

Workaround: Use Delta + UniForm to publish an Iceberg-readable view via Unity Catalog’s Iceberg REST endpoint.

- Certified REST catalogs: ADB is certified with Snowflake Polaris and Databricks Unity Catalog (UniForm only) for Iceberg read access.

-

Catalog credential vending: Not supported.

Cloud-native role-based vending, such as automatic role assumption or temporary credentials issued by STS, is not supported. Use explicit access/secret keys or static tokens.)

- AWS ARN credentials: Not supported. IAM role ARNs and AssumeRole via ARN are not accepted.

- Partitioned Iceberg tables are not supported; only non-partitioned tables are allowed.

- Row-level updates (merge-on-read): Not supported. If Iceberg metadata references delete files, queries will fail.

- The schema of a fixed external table is determined at creation and must align with the Iceberg schema version in the metadata. If the Iceberg schema is updated, the external table must be recreated.

- No query-time time travel: Querying by snapshot, version, or timestamp is not supported.

- Non_catalog only: New snapshots are not picked up automatically. To read a specific snapshot, target that snapshot’s metadata.json and recreate the external table.

- Credential alignment: The same credentials must be used for AWS S3 and AWS Glue.

- Region co-location: S3 buckets and the AWS Glue catalog must be in the same AWS region.

Parent topic: About Querying Apache Iceberg Tables

Concepts Related to Querying Apache Iceberg Tables

An understanding of the following concepts is helpful for querying Apache Iceberg tables.

Iceberg Catalog

The Iceberg catalog is a service that manages table metadata, such table snapshots, the table schema and partitioning information. In order to query the latest snapshot for an Iceberg table, query engines must first access the catalog and obtain the location of the most recent metadata file. There are already a number of available catalog implementations, including AWS Glue, Hive, Nessie, and Hadoop. Autonomous AI Database supports the AWS Glue catalog and the HadoopCatalog implementation used by Spark.

For more information, see Optimistic Concurrency.

Metadata Files

The metadata file is a JSON document that keeps track of the table snapshots, partitioning scheme and schema information. The metadata file is the entry point to a hierarchy of manifest lists and manifest files. The manifests track the table's data files along with information including partitioning and column statistics. See the Iceberg Table Specification, for more information.

Transactions

Iceberg supports row-level updates to tables using either copy-on-write or merge-on-read. Copy-on-write generates new data files that reflect the updated rows, while merge-on-read generates new "delete files" that must be merged with the data files during reading. Oracle supports copy-on-write. Queries on iceberg tables fail if they encounter a delete file. For more information, see RowLevelOperationMode.

Schema Evolution

Iceberg supports schema evolution. Schema changes are reflected in the Iceberg metadata using a schema ID. Note that Oracle external tables have a fixed schema, determined by the most current schema version at table creation time. Iceberg queries fail when the queried metadata points to a different schema version compared to the one used at table creation time. For more information, see Schema Evolution.

Partitioning

Iceberg supports advanced partitioning options such as hidden partitioning and partition evolution that rely on processing/altering the table's metadata without costly data layout changes.

Parent topic: Query Apache Iceberg Tables

Setup Requirements for Iceberg

This topic outlines the setup requirements for using Iceberg with Oracle Autonomous AI Database.

Topics

- Credentials for Iceberg: REST Catalog vs. Object Store

This topic explains how Apache Iceberg manages and accesses data through the two credentials: REST Catalog and Object Store. You can also refer to two different ways of managing table information in data lake table formats like Apache Iceberg. - Prerequisite: Grant Network Access Control Lists (ACLs) for Iceberg Operations

This topic explains how to grant network Access Control Lists (ACL) privileges to the database schema that runs Apache Iceberg statements. - Grant Schema Roles for Iceberg Operations

TheDWROLEshould be granted to any schema that creates or manages Iceberg external tables.

Parent topic: Query Apache Iceberg Tables

Credentials for Iceberg: REST Catalog vs. Object Store

This topic explains how Apache Iceberg manages and accesses data through the two credentials: REST Catalog and Object Store. You can also refer to two different ways of managing table information in data lake table formats like Apache Iceberg.

Catalog-Managed vs Direct-Metadata External Tables

The following section compares catalog-managed external tables with direct-metadata external tables highlighting their key differences.

-

Catalog-Managed (Unity / Polaris / AWS Glue)

What it is: Metadata, schema, and “current” snapshot resolved via a REST catalog.

Behavior: Automatically reflects the catalog’s latest snapshot; centralized permissions, tags, and lineage.

Best for: Enterprise data products, cross-engine sharing, consistent governance, discoverability (the catalog is the single point of truth).

-

-

Direct-Metadata (Filesystem via

metadata.json)What it is: External table points directly to a specific

metadata.json.Behavior: Fixed, reproducible snapshot; no automatic advances; governance limited to object-store ACLs.

Best for: Experiments, tests, audits.

-

REST vs. Object Store Credentials

REST Catalog Credentials

REST credentials are required when connecting to an Apache Iceberg REST catalog. The REST catalog manages metadata for Iceberg tables by exposing RESTful endpoints. To authenticate, REST credentials are often OAuth-based, requiring you to obtain a bearer token from a token endpoint using a client ID and secret.

-

rest_auth_cred: authenticates with the catalog service (e.g., Unity or Polaris). credential_name: authenticates to the object store where the Iceberg data and metadata reside.

Vending credentials are not supported at this time. Vending credentials refers to the controlled process of distributing or fetching access credentials (like usernames and passwords, API keys, or tokens) when they are needed, often automatically or on-demand, rather than storing them statically in configuration files or scripts.

Object Store Credentials

Object store credentials are used when Apache Iceberg tables are stored directly on cloud object storage like Oracle Cloud Infrastructure (OCI) Object Storage or Amazon S3.

The Credentials allow the Autonomous AI Database to access and read files (such as Parquet data and metadata manifests) directly from the cloud object store.

Use object store credentials when defining external tables that directly point to Parquet/metadata files in OCI/S3 buckets.

Parent topic: Setup Requirements for Iceberg

Prerequisite: Grant Network Access Control Lists (ACLs) for Iceberg Operations

This topic explains how to grant network Access Control Lists (ACL) privileges to the database schema that runs Apache Iceberg statements.

Before querying Apache Iceberg tables in Oracle Database 23ai, you must configure network access privileges. Iceberg operations communicate with external services over HTTPS, including system HTTP proxies, catalog service endpoints, and Object Storage repositories such as Amazon S3 or OCI Object Storage.

To allow these connections, grant network Access Control Lists (ACLs) to the database schema that runs Iceberg queries or Data Definition Language (DDL) statements. If the required ACLs are not granted, operations fail with the error such as, ORA-20000: Failed to generate column list, ORA-24247: network access denied by access control list (ACL).

- The schema that creates and owns the Iceberg external table must have outbound HTTPS access to the required host names.

- Users who only need to run

SELECTstatements on an existing Iceberg external table do not require these ACLs; they only need standard privileges such asDWROLEandSELECTon the table.

BEGIN

DBMS_NETWORK_ACL_ADMIN.APPEND_HOST_ACE(

host => 'objectstorage.<region>.oraclecloud.com',

lower_port => 443,

upper_port => 443,

ace => xs$ace_type(

privilege_list => xs$name_list('http'),

principal_name => '<schema>',

principal_type => xs_acl.ptype_db

)

);

END;

/ Provide appropriate values for the <schema> name and <region> with your OCI region.

This example grants an Oracle database schema permission to make outbound HTTPS requests to an OCI Object Storage endpoint.

The DBMS_NETWORK_ACL_ADMIN.APPEND_HOST_ACE procedure adds an Access Control Entry (ACE) that allows the specified schema to use the http privilege (which includes HTTPS) when connecting to the OCI Object Storage regional endpoint on port 443. This access is required for reading Iceberg table metadata and retrieving data files stored in OCI Object Storage.

See DBMS_NETWORK_ACL_ADMIN for more information.

Host Names by Configuration

DBMS_CLOUD.CREATE_EXTERNAL_TABLE:

| Storage | Host names |

|---|---|

|

OCI Object Storage (no external catalog or HadoopCatalog) |

|

|

AWS S3 (no external catalog) |

|

|

AWS Glue Catalog + S3 |

|

|

Databricks Unity Catalog + Azure (ADLS Gen2) |

|

|

Databricks Unity Catalog + S3 |

|

|

Snowflake Polaris + S3 |

|

|

Snowflake Polaris + Azure (ADLS Gen2) |

|

If you use an HTTPS proxy, you must configure ACLs for the proxy host as well. See Call Web Services from Autonomous AI Database for more information.

Parent topic: Setup Requirements for Iceberg

Grant Schema Roles for Iceberg Operations

The DWROLE should be granted to any schema that creates or manages Iceberg external tables.

In Autonomous AI Database, DWROLE is the standard privilege set for executing data-loading and related operations using the DBMS_CLOUD package. Assigning this role ensures that the schema has the required privileges to access cloud storage and to create, query, and manage Iceberg external tables.

Parent topic: Setup Requirements for Iceberg

Typical Workflow of Querying Apache Iceberg Tables

Before you begin querying Apache Iceberg Tables, you should be familiar with it's workflow. This section explains how to set up external tables to access data presented as an end-to-end setup workflow with five main steps.

- Decide Your Access Model:

- Catalog-Managed: Use this model when you want a continuously updated, governed catalog to serve as the single source of truth for data metadata. This central catalog helps maintain consistency and governance over your data.

- Direct-Metadata: Use this model when you work with a fixed snapshot of metadata (via metadata.json). This model is simpler but static, not recommended for production since it lacks automatic updates and governance.

- Gather What You Need:

- Catalog-Managed: You must have access to the catalog endpoint (if applicable), the exact table path, and the object store location where the actual data files reside.

- Direct-Metadata: You only need the URI pointing to the root

metadata.jsonfile plus the object store location of these data files.

- Prepare Credentials:

- For Catalog-Managed setups, acquire credentials to access the catalog.

- Object store credentials are always necessary regardless of model to read both data and metadata files.

Note

Automated credential vending and AWS AssumeRole for catalog access are unsupported.

- Create the External Table:

- In Catalog-Managed, the table queries data through the catalog and accesses files in the object store.

- In Direct-Metadata, the table points directly to the specific

metadata.jsonfile without catalog involvement.

-

Quick Check & Expectations:

Run a simple query such as

COUNT(*)to verify your table setup and ensure it can access data correctly.

Parent topic: Query Apache Iceberg Tables

Provider Quickstarts

This chapter describes the process for setting up external data access with different cloud data providers.

Topics:

- Databricks Unity Catalog

This section explains the workflow that links Databricks with open table formats through UniForm, making it easier to access Delta Lake data in environments that support Iceberg. - Snowflake Polaris

This topic describes Snowflake Polaris (REST catalog) allowing secure access to Apache Polaris Iceberg tables via a REST API using OAuth2 authentication. - AWS Glue Catalog

This topic describes how to access Amazon S3 data via Glue Data Catalog with registered Iceberg tables using AWS credentials. - Hadoop/Filesystem (direct metadata file)

This topic explains how to create a storage credential for accessing an Iceberg table’s metadata file directly from object stores like ADLS, S3, or OCI. It explains categorizing the types of direct metadata management for Iceberg tables stored directly in the filesystem (usually Hadoop compatible file systems) without using a catalog service.

Parent topic: Query Apache Iceberg Tables

Databricks Unity Catalog

This section explains the workflow that links Databricks with open table formats through UniForm, making it easier to access Delta Lake data in environments that support Iceberg.

Databricks Unity Catalog (UniForm path)

-

A Delta table created with UniForm so Iceberg clients can read it.

-

Table files in Azure ADLS Gen2 or AWS S3.

-

Unity Catalog privileges for external access (e.g., External data access enabled); grant

EXTERNAL USE SCHEMAto your principal). -

Auth: OAuth2 (recommended) or Personal Access Token (for quick tests).

-

Configure network ACLs: Before you create an external table that uses Databricks Unity Catalog with data stored in Azure Blob Storage, you must grant the Oracle schema permission to make outbound HTTPS connections to the required Azure services. Because Oracle Database blocks network access by default, so you must update the Network Access Control List (ACL) to permit the schema to reach:

-

The Databricks workspace, which hosts Unity Catalog APIs and token/authentication endpoints.

-

The Azure Storage account, which stores the Iceberg table’s metadata and data files.

Example to configure network ACLsBEGIN -- Databricks workspace (Unity Catalog and token endpoint) DBMS_NETWORK_ACL_ADMIN.APPEND_HOST_ACE( host => '<workspace>.azuredatabricks.net', lower_port => 443, upper_port => 443, ace => xs$ace_type( privilege_list => xs$name_list('http'), principal_name => '<schema>', principal_type => xs_acl.ptype_db ) ); -- Azure Storage (metadata and data files) DBMS_NETWORK_ACL_ADMIN.APPEND_HOST_ACE( host => '<storage-account>.blob.core.windows.net', lower_port => 443, upper_port => 443, ace => xs$ace_type( privilege_list => xs$name_list('http'), principal_name => '<schema>', principal_type => xs_acl.ptype_db ) ); END; /Replace the following placeholders:

<workspace>: The Databricks workspace name used in your Azure Databricks URL.<storage-account>: The Azure Storage account name that contains Iceberg metadata and data files.<schema>: The Oracle schema that will runDBMS_CLOUD.CREATE_EXTERNAL_TABLEand requires HTTPS access.

-

Native Iceberg via Iceberg REST is not supported by our integration yet. Use Delta with UniForm (Iceberg-readable) and expose it via Unity Iceberg REST: https://<workspace-host>/api/2.1/unity-catalog/iceberg.

Create a UniForm (Iceberg-readable) table in Databricks:

customers_iceberg in Databricks within the specified Unity Catalog catalog and schema:USE CATALOG <your_catalog>;

USE SCHEMA <your_schema>;

CREATE TABLE customers_iceberg (

id INT,

name STRING

)

TBLPROPERTIES(

'delta.columnMapping.mode'='name',

'delta.enableIcebergCompatV2'='true',

'delta.universalFormat.enabledFormats'='iceberg'

);

INSERT INTO customers_iceberg (id, name) VALUES

(1,'Alice'), (2,'Bob'), (3,'Carol');Object store credential (ADLS Gen2)

BEGIN

BEGIN DBMS_CLOUD.DROP_CREDENTIAL('AZURE_BLOB_CRED'); EXCEPTION WHEN OTHERS THEN NULL; END;

DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => 'AZURE_BLOB_CRED',

username => '<storage-account-or-sas-username>',

password => '<storage-key-or-sas-token>'

);

END;

/Create REST Catalog Credentials with OAuth2

-- Databricks service principal (client_id / client_secret)

BEGIN

BEGIN DBMS_CLOUD.DROP_CREDENTIAL('UNITY_OAUTH'); EXCEPTION WHEN OTHERS THEN NULL; END;

DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => 'UNITY_OAUTH',

username => '<client_id>',

password => '<client_secret>'

);

END;

/

BEGIN

DBMS_CLOUD.CREATE_EXTERNAL_TABLE(

table_name => 'CUSTOMERS_ICEBERG',

credential_name => 'AZURE_BLOB_CRED',

format => '{

"access_protocol": {

"protocol_type": "iceberg-rest",

"protocol_config": {

"iceberg_catalog_type": "unity",

"rest_catalog_endpoint": "https://<workspace-host>/api/2.1/unity-catalog/iceberg",

"rest_authentication": {

"rest_auth_cred": "UNITY_OAUTH",

"rest_auth_endpoint": "https://<workspace-host>/oidc/v1/token"

},

"table_path": ["<your_catalog>","<your_schema>","customers_iceberg"]

}

}

}'

);

END;

/

SELECT COUNT(*) FROM CUSTOMERS_ICEBERG;Create REST Catalog Credentials with Personal Access Token (PAT)

BEGIN

BEGIN DBMS_CLOUD.DROP_CREDENTIAL('UNITY_PAT'); EXCEPTION WHEN OTHERS THEN NULL; END;

DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => 'UNITY_PAT',

username => 'token',

password => '<dapiXXXXXXXXXXXXXXXXXXXXXXXX>'

);

END;

/

BEGIN

DBMS_CLOUD.CREATE_EXTERNAL_TABLE(

table_name => 'CUSTOMERS_ICEBERG',

credential_name => 'AZURE_BLOB_CRED',

format => '{

"access_protocol": {

"protocol_type": "iceberg-rest",

"protocol_config": {

"iceberg_catalog_type": "unity",

"rest_catalog_endpoint": "https://<workspace-host>/api/2.1/unity-catalog/iceberg",

"rest_authentication": { "rest_auth_cred": "UNITY_PAT" },

"table_path": ["<your_catalog>","<your_schema>","customers_iceberg"]

}

}

}'

);

END;

/

SELECT COUNT(*) FROM CUSTOMERS_ICEBERG;

Parent topic: Provider Quickstarts

Snowflake Polaris

This topic describes Snowflake Polaris (REST catalog) allowing secure access to Apache Polaris Iceberg tables via a REST API using OAuth2 authentication.

Snowflake Polaris (REST catalog)

-

Polaris Iceberg catalog and endpoint available to your account.

-

Table files accessible in your object store (S3/ADLS as applicable).

-

Auth: OAuth2 recommended (client credentials) or another Polaris-supported token mechanism.

-

Before you create an external table that accesses an Iceberg catalog stored in Snowflake and Amazon S3, the database schema must be allowed to make outbound HTTPS connections to those services. Oracle Database restricts network access by default, so you must update the Network Access Control List (ACL) to permit the schema to reach:

-

Your Snowflake account endpoints, used for catalog access, authentication, and token exchange

-

The Amazon S3 regional endpoint where Iceberg metadata and data files are stored

-

(Optional) The non-regional S3 endpoint, required by some AWS APIs and region lookup operations

Example to configure network ACLsBEGIN -- Allow HTTPS access to your Snowflake account (Polaris and token endpoints) DBMS_NETWORK_ACL_ADMIN.APPEND_HOST_ACE( host => '<account>.snowflakecomputing.com', lower_port => 443, upper_port => 443, ace => xs$ace_type( privilege_list => xs$name_list('http'), principal_name => '<schema>', principal_type => xs_acl.ptype_db ) ); -- Allow HTTPS access to the S3 bucket (regional endpoint for data/metadata) DBMS_NETWORK_ACL_ADMIN.APPEND_HOST_ACE( host => '<bucket>.s3.<region>.amazonaws.com', lower_port => 443, upper_port => 443, ace => xs$ace_type( privilege_list => xs$name_list('http'), principal_name => '<schema>', principal_type => xs_acl.ptype_db ) ); -- Optional: non-regional S3 endpoint (used for some APIs/region lookup) DBMS_NETWORK_ACL_ADMIN.APPEND_HOST_ACE( host => '<bucket>.s3.amazonaws.com', lower_port => 443, upper_port => 443, ace => xs$ace_type( privilege_list => xs$name_list('http'), principal_name => '<schema>', principal_type => xs_acl.ptype_db ) ); END; /Replace the following placeholders:

-

<account>: Your Snowflake account identifier. -

<schema>: The schema that needs outbound HTTPS access. -

<bucket>: The name of the Amazon S3 bucket containing the Iceberg data and metadata. -

<region>: The AWS region where the S3 bucket is hosted.

-

Create an OAuth2 Credential:

The following procedure creates an OAuth2 credential with the name POLARIS_OAUTH for authenticating access to an Apache Polaris Iceberg catalog.

BEGIN

BEGIN DBMS_CLOUD.DROP_CREDENTIAL('POLARIS_OAUTH'); EXCEPTION WHEN OTHERS THEN NULL; END;

DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => 'POLARIS_OAUTH',

username => '<client_id>',

password => '<client_secret>'

);

END;

/

Create Storage Credential

The following procedure creates a storage credential named S3_CRED for accessing object storage (example: Amazon S3) with an AWS access key ID and secret access key.

-- Storage credential for your object store (example: S3)

BEGIN

BEGIN DBMS_CLOUD.DROP_CREDENTIAL('S3_CRED'); EXCEPTION WHEN OTHERS THEN NULL; END;

DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => 'S3_CRED',

username => '<aws_access_key_id>',

password => '<aws_secret_access_key>'

);

END;

/Create External Table

<external_table_name> in Autonomous AI Database that accesses data stored using Iceberg format managed by Polaris catalog.BEGIN

DBMS_CLOUD.CREATE_EXTERNAL_TABLE(

table_name => '<external_table_name>',

credential_name => 'S3_CRED',

format => q'[

{

"access_protocol": {

"protocol_type": "iceberg",

"protocol_config": {

"iceberg_catalog_type": "polaris",

"rest_catalog_prefix": "<polaris_catalog_name>",

"rest_catalog_endpoint": "https://<account>.snowflakecomputing.com/polaris/api/catalog",

"rest_authentication": {

"rest_auth_cred": "POLARIS_OAUTH",

"rest_auth_endpoint": "https://<account>.snowflakecomputing.com/polaris/api/catalog/v1/oauth/tokens"

},

"table_path": ["<my_namespace>", "<my_table>"]

}

}

}

]',

);

END;

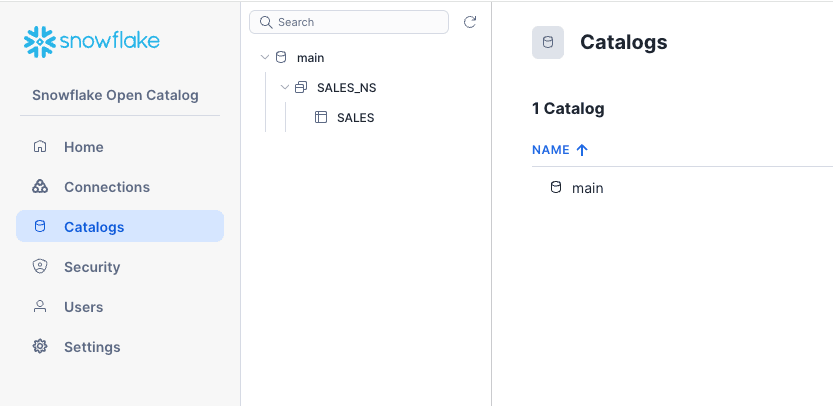

/Snowflake Polaris Open Catalog UI example: This shows the Snowflake Polaris Open Catalog UI; the main catalog, which contains the namespace SALES_NS and the table SALES.

Description of the illustration adbs_polaris_main_ui.png

Example: This example creates the SALES_POLARIS_EXT external table in Autonomous AI Database, that connects directly to the SALES table in the SALES_NS namespace of the main catalog in Polaris, as shown in the screenshot abovr. This allows you to access the Polaris table as an external resource.

BEGIN

DBMS_CLOUD.CREATE_EXTERNAL_TABLE(

table_name => 'SALES_POLARIS_EXT',

credential_name => 'S3_CRED',

format => q'[

{

"access_protocol": {

"protocol_type": "iceberg",

"protocol_config": {

"iceberg_catalog_type": "polaris",

"rest_catalog_prefix": "main",

"rest_catalog_endpoint": "https://<account>.snowflakecomputing.com/polaris/api/catalog",

"rest_authentication": {

"rest_auth_cred": "POLARIS_OAUTH",

"rest_auth_endpoint": "https://<account>.snowflakecomputing.com/polaris/api/catalog/v1/oauth/tokens"

},

"table_path": ["SALES_NS", "SALES"]

}

}

}

]'

);

END;

/Quick Functionality Check

SELECT COUNT(*) FROM SALES_POLARIS;Keep endpoint and token URL placeholders as they vary by Polaris configuration.

Parent topic: Provider Quickstarts

AWS Glue Catalog

This topic describes how to access Amazon S3 data via Glue Data Catalog with registered Iceberg tables using AWS credentials.

-

Glue Data Catalog with the Iceberg table registered (S3 objects accessible).

Region name for Glue (e.g.,

us-east-1). -

Auth: Access key/secret for S3; Glue access via catalog configuration.

-

Credential vending via AWS ARN is unsupported. Explicit credentials must be supplied.

-

Before you create an external table that uses AWS Glue as the Iceberg catalog and Amazon S3 as the storage layer, the Oracle schema must be granted permission to make outbound HTTPS requests to these AWS services. Oracle Database restricts network access by default, so you must update the Network Access Control List (ACL) to permit the schema to reach:

-

AWS Glue, which provides the Iceberg catalog API

-

The S3 regional endpoint, where Iceberg metadata and data files are stored

-

The non-regional S3 endpoint, which is required for certain AWS APIs and region lookup operations

Example to configure network ACLsBEGIN -- AWS Glue (catalog API) DBMS_NETWORK_ACL_ADMIN.APPEND_HOST_ACE( host => 'glue.<region>.amazonaws.com', lower_port => 443, upper_port => 443, ace => xs$ace_type( privilege_list => xs$name_list('http'), principal_name => '<schema>', principal_type => xs_acl.ptype_db ) ); -- S3 bucket (regional endpoint for data and metadata files) DBMS_NETWORK_ACL_ADMIN.APPEND_HOST_ACE( host => '<bucket>.s3.<region>.amazonaws.com', lower_port => 443, upper_port => 443, ace => xs$ace_type( privilege_list => xs$name_list('http'), principal_name => '<schema>', principal_type => xs_acl.ptype_db ) ); -- Optional: non-regional S3 endpoint (used for some APIs or region lookup) DBMS_NETWORK_ACL_ADMIN.APPEND_HOST_ACE( host => '<bucket>.s3.amazonaws.com', lower_port => 443, upper_port => 443, ace => xs$ace_type( privilege_list => xs$name_list('http'), principal_name => '<schema>', principal_type => xs_acl.ptype_db ) ); END; /Replace the following placeholders:

-

<region>: The AWS region where Glue and the S3 bucket are located. -

<bucket>: The name of the S3 bucket that stores Iceberg data and metadata files. -

<schema>: The Oracle schema that runsDBMS_CLOUD.CREATE_EXTERNAL_TABLEand requires HTTPS access.

-

Create a storage credential

The following procedure creates a storage credential named S3_CRED in Databricks to enable access to data stored in an Amazon S3 bucket.

-- S3 credential

BEGIN

BEGIN DBMS_CLOUD.DROP_CREDENTIAL('S3_CRED'); EXCEPTION WHEN OTHERS THEN NULL; END;

DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => 'S3_CRED',

username => '<aws_access_key_id>',

password => '<aws_secret_access_key>'

);

END;

/Create an external Iceberg table

ORDERS_GLUE in Databricks.BEGIN

DBMS_CLOUD.CREATE_EXTERNAL_TABLE(

table_name => 'ORDERS_GLUE',

credential_name => 'S3_CRED',

format => '{

"access_protocol": {

"protocol_type": "iceberg",

"protocol_config": {

"iceberg_catalog_type": "aws_glue",

"iceberg_glue_region": "us-east-1",

"iceberg_table_path": ["<database>.<table>"]

}

}

}'

);

END;

/Quick Functionality Check

ORDERS_GLUE verifying the connection and data accessibility.SELECT COUNT(*) FROM ORDERS_GLUE;Parent topic: Provider Quickstarts

Hadoop/Filesystem (direct metadata file)

This topic explains how to create a storage credential for accessing an Iceberg table’s metadata file directly from object stores like ADLS, S3, or OCI. It explains categorizing the types of direct metadata management for Iceberg tables stored directly in the filesystem (usually Hadoop compatible file systems) without using a catalog service.

Example: Query an Iceberg table using JSON Metadata

- You can access the table’s Iceberg root manifest (

metadata.json) in your object store (ADLS/S3/OCI). - This path is point-in-time. To follow new snapshots, recreate the external table.

Create a Storage Credential

This procedure first tries to drop an existing credential called STORE_CRED if it exists (ignoring any errors). Then, it creates a new credential named STORE_CRED.

-- Storage credential for wherever the metadata.json lives

BEGIN

BEGIN DBMS_CLOUD.DROP_CREDENTIAL('STORE_CRED'); EXCEPTION WHEN OTHERS THEN NULL; END;

DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => 'STORE_CRED',

username => '<user-or-key>',

password => '<secret-or-token>'

);

END;

/Create an External Table

CUSTOMERS_META.BEGIN

DBMS_CLOUD.CREATE_EXTERNAL_TABLE(

table_name => 'CUSTOMERS_META',

credential_name => 'STORE_CRED',

file_uri_list => 'https://<bucket-or-container>/<path>/metadata.json',

format => '{"access_protocol":{"protocol_type":"iceberg"}}'

);

END;

/Quick Functionality Check

The following procedure runs a query to count all rows in the external table.

SELECT COUNT(*) FROM CUSTOMERS_META;Example: Query an Iceberg table using Hadoop Catalog on OCI

In this example, we query the Iceberg table db.icebergTablePy ,

created using OCI Data Flow, where Spark uses the HadoopCatalog implementation for the

Iceberg catalog. HadoopCatalog uses a lakehouse folder iceberg in bucket

my-iceberg-bucket and puts the Iceberg metadata in a

$database_name/$table_name subfolder under this directory. It also uses a

version-hint.text file that contains the version number for the most

recent metadata file version.

db.icebergTablePy as follows:BEGIN

DBMS_CLOUD.CREATE_EXTERNAL_TABLE (

table_name => 'iceberg_parquet_time_dim3',

credential_name => 'OCI_CRED',

format => '{

"access_protocol": {

"protocol_type": "iceberg",

"protocol_config": {

"iceberg_catalog_type": "hadoop",

"iceberg_lakehouse": "https://objectstorage.uk-cardiff-1.oraclecloud.com/n/my-tenancy/b/my-iceberg-bucket/o/iceberg",

"iceberg_table_path": "db.icebergTablePy"

}

}

}'

);

END;

/Parent topic: Provider Quickstarts

References

This section provides a list of references for links cited throughout this chapter:

Parent topic: Query Apache Iceberg Tables