About Data Flows

A data flow defines how the data is moved and transformed between different systems.

When you run a data flow, Data Transforms uses the joins, filters, mappings, and constraints to transform source data and load it to target tables. Note that you can run only one execution flow at a time. You cannot put multiple flows on a Data Flow and a flow cannot diverge into multiple flows.

Topics

- Create a Data Flow

Create data flows to load data from a source connection, run transformations, and move the data to a target database. - View and Manage Data Flows

The Data Flows page displays the list of data flows. - About Data Flow Editor

The Data flow editor is divided into five parts, the Data Entity Panel, the Database Functions Toolbar, the Design Canvas, the Properties Panel, and the Status Panel. - Supported Database Functions

Oracle Data Transforms supports various database functions that you can drag and drop on the Design Canvas to connect components within a data flow. - Add Components

Add the data entities and database functions to the Design Canvas, and connect them in a logical order to complete your data flows. - Use Text Embedding Vector in a Data Flow

Data Transforms supports the use of vector datatype and embedding vectors in a data flow. Currently, Data Transforms integrates with OCI Generative AI service to convert input text into vector embeddings that you can use for data analysis and searches. - Use Auto-join to Map Associated Tables in a Data Flow

You can use the auto-join feature to establish join conditions between tables based on predefined relationships, such as primary key/foreign key relationships or common column names. - Generate Data Flow From SQL Statements

You can use SQL statements to generate data flows using the SQL parser feature of Data Transforms. - Create and Use a Materialized View in a Data Flow

A materialized view is a database object that contains the results of a query. To use materialized views in Data Transforms you need to first build the materialized view data entity using the Data Flow editor, and then use the data entity in a data flow as source. - Component Properties

The Properties Panel displays various settings for components selected in the Design Canvas. - Map Data Columns

When you connect the source data entity with the target data entity, the column names are automatically mapped by the column names. You have a choice to map the columns by Position or by Name or map the columns manually using the Expression Editor. - Validate and Execute a Data Flow

After your mappings are ready, you can proceed to validate and execute the data flow.

Parent topic: The Data Transforms Page

Create a Data Flow

Create data flows to load data from a source connection, run transformations, and move the data to a target database.

You can create data flows in any of the following ways:

The newly created data flow is displayed in the Data Flows page of the

associated project. Click the Actions icon (![]() ) next to the selected data flow to edit, rename, copy, change folder,

start, export, or delete it. See View and Manage Data Flows.

) next to the selected data flow to edit, rename, copy, change folder,

start, export, or delete it. See View and Manage Data Flows.

From the Projects page

To create a data flow from the Projects page,

- On the Projects page, click Create Data Flow.

Create Data Flow page appears:

- In the Name field, enter a name for the new data flow.

- Select Create New Project, if you wish to create new project folder for the newly created data flow.

- Else, click Add to Existing Projects, if you wish to add the newly created data flow to an existing project folder.

- If you have selected Create New Project for the previous option, in the Project Name field, enter the name of the newly created project.

- Else, if you have selected Add to Existing Projects for the previous option, select the required project from the Project Name drop-down arrow.

- In the Description field, enter a description for the newly created data flow.

- Click Create.

From the Data Flows page within a project

To create a data flow from the Data Flows page within a project,

- On the Projects page click the project tile you wish to create a new data flow for. The Project Details page appears.

- In the Data Flows page, click Create Data Flow.

- Provide the Name and Description of the new data flow.

- Click Next.

- To define your source connection, from the Connection drop-down, select the required connection from which you wish to add the data entities.

- In the Schema drop-down, all schema corresponding to the selected connection are listed in two groups:

- Existing Schema (ones that you've imported into Oracle Data Transforms) and

- New Database Schema (ones that you've not yet imported).

Select the schema that you want to use from the drop down. For Oracle Object Storage connections, the Schema drop-down lists the name of the bucket that you specified in the URL when you created the connection.

- Click Save.

The Data Flow Editor appears that allows you to create a new data flow.

From the Home page

To create a data flow from the Home page,

- On the Home page, click Transform Data. The Create Data Flow page appears.

- Provide the Name and Description of the new data flow.

- Select a project name from the drop-down. Alternatively, click the + icon to create a project.

- Click Next.

- From the Connection drop-down, select the required connection from which you wish to add the data entities. Alternatively, click the + icon to create a new connection.

- In the Schema drop-down, all schema corresponding to the selected connection are listed in two groups:

- Existing Schema (ones that you've imported into Oracle Data Transforms) and

- New Database Schema (ones that you've not yet imported).

Select the schema that you want to use from the drop down.

- Click Save.

Parent topic: About Data Flows

View and Manage Data Flows

The Data Flows page displays the list of data flows.

You can search for the required data flow to know its details based on the following filters:

- Name of the data flow.

- Folder associated with the data flow.

Click the Actions icon (![]() ) next to the selected data flow to perform the following

operations:

) next to the selected data flow to perform the following

operations:

- Select Edit to go to the Data Flow Details page where you can make changes to and run the data flow.

- Select Rename to rename the data flow.

- Select Copy to create a copy of the selected data flow.

- Select Change Folder to change the folder where the data flow is located in.

- Click Export to export the data flow. See Export Objects.

- Click Start to run the data flow.

- Click View Dependencies to check the dependent workflows, if any, that are associated with the selected data flow. The dependencies are grouped by project.

- You can delete a single or multiple data flows. To

delete data flows:

- Select the data flows.

- Click the Delete button. The first confirmation screen appears listing the selected data flows.

- Click Delete. Another confirmation screen appears listing the workflows that will be affected as part of the deletion. For data flows that have no dependencies, the confirmation screen displays the status as "Deleted".

- Select one or more data flows, as required, and click Delete. A confirmation screen appears displaying the status of the data flows as "Deleted".

The data flow and any workflow steps that reference this data flow are deleted.

Parent topic: About Data Flows

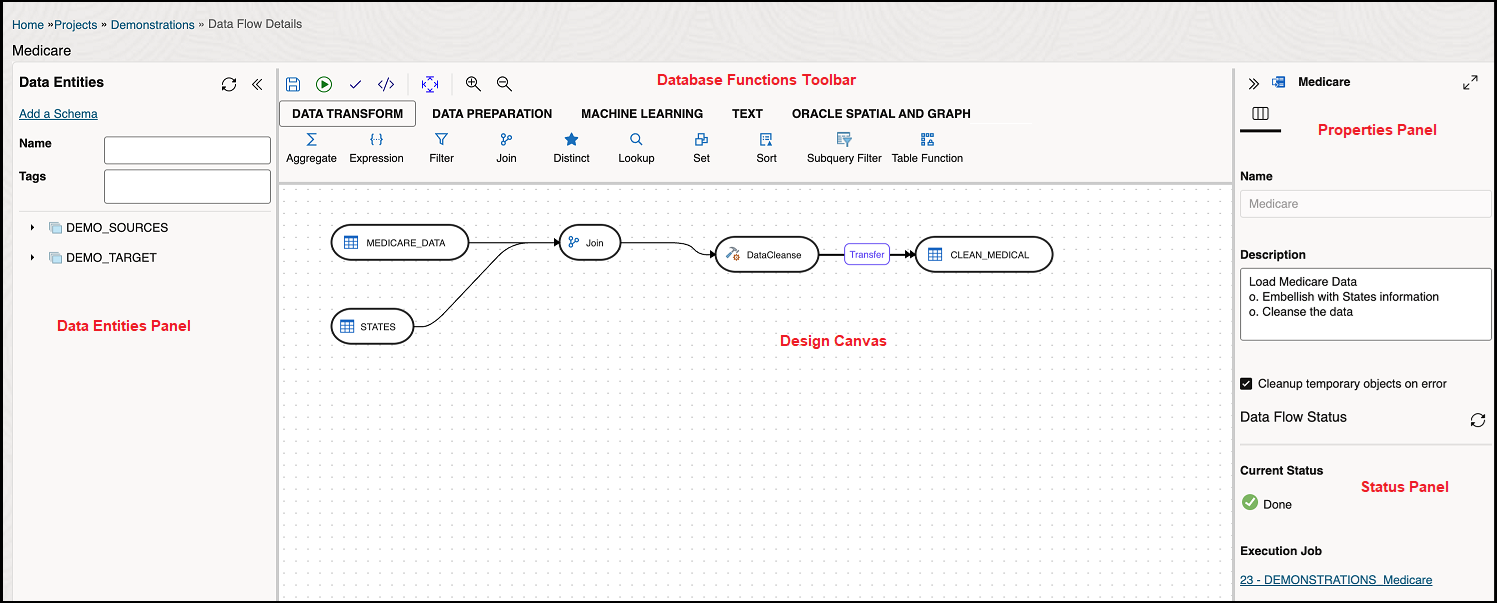

About Data Flow Editor

The Data flow editor is divided into five parts, the Data Entity Panel, the Database Functions Toolbar, the Design Canvas, the Properties Panel, and the Status Panel.

- Data Entities Panel: The data entity panel displays the Data Entities that

are available to use in your Data flows. The displayed list can be filtered using

the Name and Tags fields. The panel includes options that let you add schemas,

import data entities, remove any of the schemas that are associated with the data

flow, and refresh data entities. See Add Components for information about how to use these options.

This panel also includes the following controls:

: Saves the data flow.

: Saves the data flow.

-

: Executes the data flow.

: Executes the data flow.

: Allows you to schedule the data flow to run at a specified time

interval.

: Allows you to schedule the data flow to run at a specified time

interval.

: Validates the data flow.

: Validates the data flow.

: Allows you to check the code that will run when you execute the

data flow job.

: Allows you to check the code that will run when you execute the

data flow job.

-

: Aligns the nodes of the data flow.

: Aligns the nodes of the data flow.

-

: Maximizes or minimizes the data flow diagram in the design

canvas.

: Maximizes or minimizes the data flow diagram in the design

canvas.

: Allows you to use SQL queries to create a data flow. See Generate Data Flow From SQL Statements.

: Allows you to use SQL queries to create a data flow. See Generate Data Flow From SQL Statements.

: Synchronizes any updates made to the data entities within the data

flow.

: Synchronizes any updates made to the data entities within the data

flow.

- Database Functions Toolbar: The Database Functions toolbar display the database functions that can be used in your data flows. Just like Data Entities, you can drag and drop the Database tools you want to use on the design canvas. See Supported Database Functions for more information.

- Design Canvas: The design canvas is where you build your transformation logic. After adding the Data Entities and Database Functions to the design canvas, you can connect them in a logical order to complete your data flows.

- Properties Panel: The properties panel displays the properties of the selected object on the design canvas. The Properties Panel is grouped into four Tabs. General, Attributes, Preview Data, Column Mapping, and Options. Not all tabs are available as they vary based on the selected object. See Component Properties to know more about these options.

- Status Panel: When you run a data flow, the Status Panel shows the status of the job that is running in the background to complete the request. You can see the status of the job that is currently running or the status of the last job. For more information about the Status panel, see Monitor Status of Data Loads, Data Flows, and Workflows.

Parent topic: About Data Flows

Supported Database Functions

Oracle Data Transforms supports various database functions that you can drag and drop on the Design Canvas to connect components within a data flow.

The Database Functions toolbar in the Data Flow editor includes the following database functions that can be used in your data flows. See Oracle Database SQL Language Reference for information about the database functions.

- Data Transformation

It contains the following components:

- Aggregate

- Expression

- Filter

- Join

- Distinct

- Lookup

- Set

- Sort

- Subquery Filter

- Table Function

- Data Preparation

It contains the following components:

- Data Cleanse

- Substitution

- Equi_Width Binning

- Quantile Binning

- Lead

- Lag

- Replace

- Machine Learning

It contains the following components:

- Prediction

- Prediction Model

- Outlier Detection

- Text Embedding Vector

- Text

It contains the following components:

- REGEXP COUNT

- REGEXP INSTR

- REGEXP SUBSTR

- REGEXP REPLACE

- Edit Distance Similarity

- Contains

- Oracle Spatial and Graph

It contains the following components:

- Buffer Dim

- Buffer Tol

- Distance Dim

- Distance Tol

- Nearest

- Simplify

- Point

- Geocode Tools:

Note

The following Geocode Tools work only in non-Autonomous Database environment.- Geocode As Geometry

- Geocode

- Geocode Addr

- Geocode All

- Geocode Addr All

- Reverse Geocode

Note

The following Geocode Tool works only in an Autonomous Database environment.- Geocode Cloud

- Spatial Join

Parent topic: About Data Flows

Add Components

Add the data entities and database functions to the Design Canvas, and connect them in a logical order to complete your data flows.

- In the Data Entities panel, click Add a Schema to add schemas that contain the data entities that you want to use in the data flow.

- In the Add a Schema page, select the connection and schema name.

- Click Import.

- In the Import Data Entities page, select the Type of Objects you want to import. Choose a Mask/filter if you don't want to import every object in the schema and click Start.

- The Data Entities panel lists the imported data entities. The panel includes various options that let you do the following:

- Refresh Data Entities – Click the Refresh icon

to refresh the displayed list.

to refresh the displayed list.

- Name - Search for data entities by name.

- Tags - Filter the data entities by the name of the tag used.

- Import Data Entities - Right-click the schema to see this option. Use this option to import the data entities.

- Remove Schema - Right-click the data entity to see this option. Use this option to remove the schema from the list. Note that this option does not delete the schema, it only removes the association of the schema with this data flow.

- Refresh Data Entities – Click the Refresh icon

- Similarly add more schemas to the Data Flow, if required.

- Drag the required Data Entities that you want to use in the data flow and drop them on the design canvas.

- From the Database Functions toolbar, drag the transformation component that you want to use in the data flow and drop them on the design canvas. You can use variables in the data flow. See Use Variables in a Data Flow for more information.

- Select an object on the design canvas, and drag the Connector icon (

) next to it to connect the components.

) next to it to connect the components.

- After saving the data flow, there may be a Transfer icon overlaid on one or more of the component connections. This indicates that ODI has detected an additional step and it is required to move the data between data servers. You can click on this Icon to view properties associated with this step.

Parent topic: About Data Flows

Use Text Embedding Vector in a Data Flow

Data Transforms supports the use of vector datatype and embedding vectors in a data flow. Currently, Data Transforms integrates with OCI Generative AI service to convert input text into vector embeddings that you can use for data analysis and searches.

For text embedding Data Transforms supports both the text stored in a column and from the http links stored in a column. Before you use embedding vectors in a data flow, you need to do the following:

- Create an Oracle AI Database 26ai connection. See Work with Connections for generic instructions on how to create a connection in Data Transforms.

- Create an Oracle Cloud Infrastructure (OCI) Generative AI connection. See Create and use an Oracle Cloud Infrastructure Generative AI Connection.

To use vector embeddings in a data flow:

- Follow the instructions in Create a Data Flow to create a new data flow.

- In the Data Flow Editor click Add a Schema to define your source connection. From the Connection drop-down, select the Oracle AI Database 26ai connection and the schema that you want to use from the drop down. Click OK.

- Drag the tables that you want to use as a source in the data flow and drop them on the design canvas.

- From the Database Functions toolbar, click Machine Learning and drag the Text Embedding Vector transformation component drop it on the design canvas.

- Click the Text Embedding Vector transformation component to view its properties.

- In the General tab, specify the following:

- AI Service - Select OCI Generative AI from the drop-down.

- Connection - The drop-down lists all the available connections for the selected AI Service. Select the connection that you want to use.

- AI Model - The drop-down lists all the available models for the

selected AI Service and Connection. The following models are listed:

- "cohere.embed-english-light-v2.0"

- "cohere.embed-english-light-v3.0"

- "cohere.embed-english-v3.0"

- "cohere.embed-multilingual-light-v3.0"

- "cohere.embed-multilingual-v3.0"

- In the Column Mapping tab, map the source column that you want

to embed to the INPUT attribute of the operator. The only column available in the

column mappings is

input_text. Drag a text column from the available columns to the Expression column. This is the data that the vectors will be built on. - Drag the table that you want to use as a target in the data flow and drop it on the design canvas.

- Save and execute the data flow.

Data Transforms will build vectors for each of the rows in the source table and write that to the target table.

Parent topic: About Data Flows

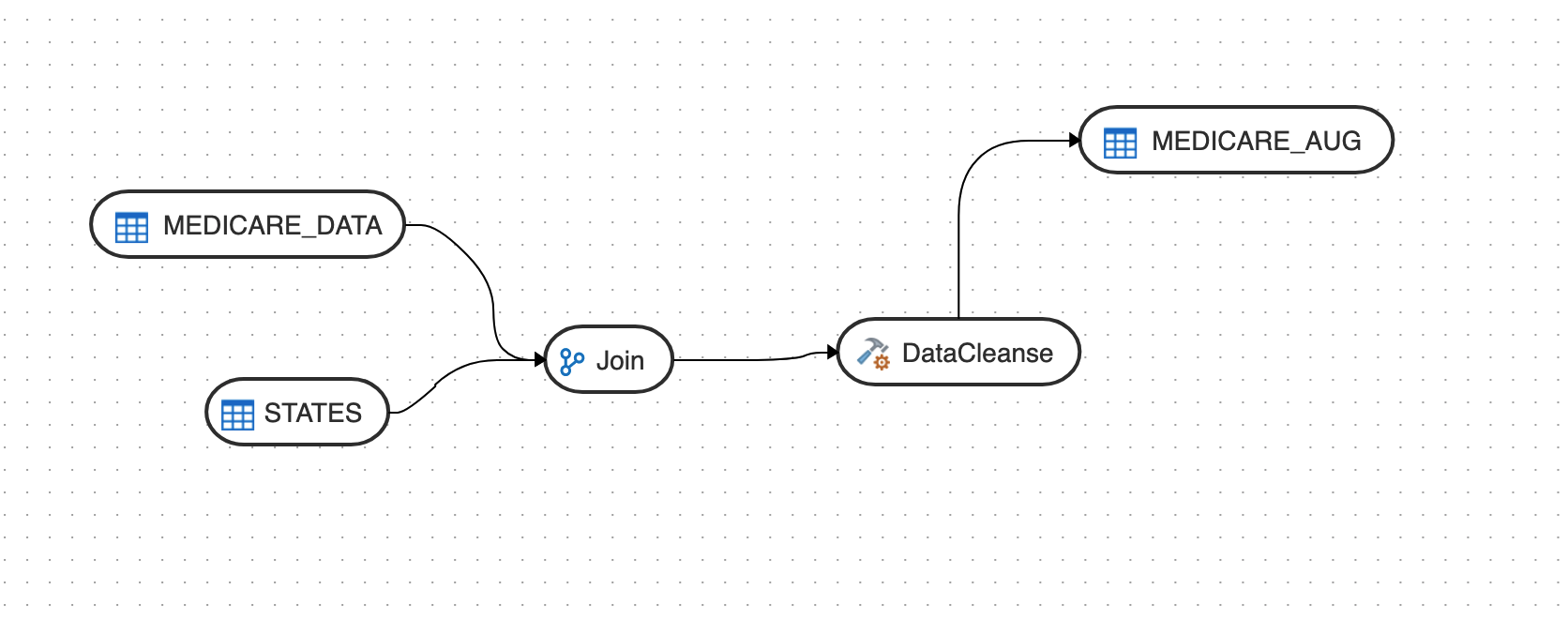

Use Auto-join to Map Associated Tables in a Data Flow

You can use the auto-join feature to establish join conditions between tables based on predefined relationships, such as primary key/foreign key relationships or common column names.

To use auto-joins in a data flow:

- Follow the instructions in Create a Data Flow to create a new data flow.

- In the Data Flow Editor click Add a Schema to define your source connection.

- Drag the table that has the foreign key and drop it on the design canvas.

- Select the object on the design canvas, and click the

icon.

icon.

The Join Data Entity page appears listing the tables where the foreign key of this table references the primary key of another table.

- Select the table(s) you want to use and click Save. This will automatically create the join condition.

- Save and execute the data flow.

Data Transforms will join the tables and insert the matching records into the target table.

Parent topic: About Data Flows

Generate Data Flow From SQL Statements

You can use SQL statements to generate data flows using the SQL parser feature of Data Transforms.

You can provide a representation of a data flow that has target data entity and source data entity by having both sections insertion and selection in the SQL statement. The Data Transforms SQL parser feature will analyze the SQL statement, convert it to a data flow, and display it on the Design Canvas.

The SQL parser supports the following operators:

- Data entities: Data entities are generated based on where they are referenced within the SQL statements received as input by the SQL Parser.

- Expression: Expression components are generated when a function is identified in the SQL statement. However, this type of component is not generated if the function is an aggregation. In such cases, it is replaced by an Aggregation component. Expression components are also generated if the SQL Parser component finds arithmetic or logic expressions within the statement.

- Aggregation: Aggregation components are generated exclusively when aggregation functions are detected in the SQL statement. These components can be created regardless of whether they include other aggregation operators, such as GROUP BY and HAVING.

- Filter: Filter components are generated whenever the SQL Parser component encounters the WHERE keyword, and it is capable of handling any type of condition.

- Join: Join components are generated for every type of join supported by Oracle, and they can handle any joining condition specified in the SQL statement.

- Set: Set components are generated for all types of set operations - such as UNION, INTERSECT, and EXCEPT - when they are present in the SQL statement.

To generate a data flow using SQL statements:

- Follow the instructions in Create a Data Flow to create a new data flow.

- In the Data Flow Editor, click the

icon.

icon.

- On the Generate Data Flow From SQL page, insert your SQL statement in the Enter SQL Query window. See Example.

- Click Generate.

The SQL Parser feature generates the data flow, and displays a preview.

- Click Apply to load the data flow on to the Design Canvas.

- Save the data flow.

- Drag and drop more elements on to the Design Canvas, if required.

- Save and execute the data flow.

You cannot use the SQL parser feature to add to an existing data flow. A data flow added using the SQL parser will overwrite any existing data flow that is on the Design Canvas.

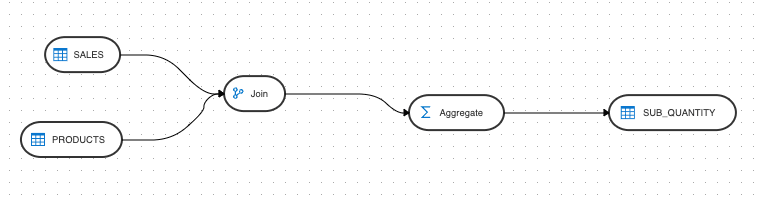

Example

The following example shows the use of an aggregation component in a SQL statement to generate a data flow:

create table DEMO_TARGET.SUB_QUANTITY

(

QUANTITY_SOLD NUMBER(10,2),

PROD_SUBCATEGORY VARCHAR2(50 CHAR)

)

INSERT

/*+ APPEND PARALLEL */

INTO DEMO_TARGET.SUB_QUANTITY

(

QUANTITY_SOLD ,

PROD_SUBCATEGORY

)

SELECT

(SUM(SALES.QUANTITY_SOLD)) ,

PRODUCTS.PROD_SUBCATEGORY

FROM

SH.SALES SALES INNER JOIN SH.PRODUCTS PRODUCTS

ON SALES.PROD_ID=PRODUCTS.PROD_ID

GROUP BY

PRODUCTS.PROD_SUBCATEGORY The generated data flow will look like this:

Parent topic: About Data Flows

Create and Use a Materialized View in a Data Flow

A materialized view is a database object that contains the results of a query. To use materialized views in Data Transforms you need to first build the materialized view data entity using the Data Flow editor, and then use the data entity in a data flow as source.

To use a materialized view data entity in the Data Flow editor,

- Drag the data entity that you want to build the materialized view on onto the Design Canvas.

- Select the component and click the Add Data Entity icon

present on the top right corner of the component.

present on the top right corner of the component.

- The Add Data Entity page appears allowing you to configure the following

details of the target component:

General tab

- In the Name text box, enter the name of the newly created Data Entity.

- From the Entity Type drop-down, select

Materialized View as the data entity type.

When you select this entity type the Connection Type drop-down only lists Oracle as the option, and the Connection drop-down is populated with the same connection as the source data entity. Both options are grayed out.

- In the Schema drop-down, all schema corresponding to

the selected connection are listed in two groups.

- New Database Schema (ones that you've not imported from before) and

- Existing Database Schema (ones that you've imported from before and are potentially replacing data entities).

- In the Tags text box, enter a tag of your choice. You can use tags to filter the Data Entities displayed in the Data Entity Page.

- If you want to mark this data entity as a feature group, expand Advanced Options and click the Treat as Feature Group check box.

- Click Next.

Columns tab

- Click the

Add Columns icon, to add new columns to the newly created Data

Entity.

Add Columns icon, to add new columns to the newly created Data

Entity.

A new column is added to the displayed table.

- The table displays the following columns:

- Name

- Data Type - Click the cell to configure the required Data Type.

- Scale

- Length

- Actions - Click the cross icon to delete the created column.

- To delete the columns in bulk, select the columns and click

the

Delete icon.

Delete icon.

- To search for the required column details, in the Search text box enter the required column name and click enter. The details of the required column are displayed.

- Click Next.

Preview Data Entity tab

This tab displays a preview of all the created columns and their configured details.

- Click Save to save the configuration and exit the wizard. The materialized view data entity is added as a component on the Design Canvas.

- Click the materialized view data entity and in the Properties pane

to the right, click Options (

).

).

Set the following options to define the refresh settings:

- Recreate materialized view: This option allows you to

drop the existing materialized view and create a new one if the

definition changes. Set this to

trueto recreate the materialized view to match the query from the updated data flow. This only needs to be done once. Default value isfalse. - Refresh with: This option allows you to specify whether the incremental refresh should be done using PRIMARY KEY or the internal ROWID. Default value is ROWID.

- Recreate materialized view: This option allows you to

drop the existing materialized view and create a new one if the

definition changes. Set this to

- Save and execute the data flow. The left panel of the Data flow Details page lists the materialized view data entities that you can use as a source component in a data flow.

- Drag and drop the materialized view data entity onto the Design Canvas. Drag the required Data Entities that you want to use in the data flow and drop them on the design canvas.

- Save and execute the data flow. On data flow execution, the materialized view will refresh according to the configured settings.

Parent topic: About Data Flows

Component Properties

The Properties Panel displays various settings for components selected in the Design Canvas.

Depending on the component selected, you may see any of the following icons:

- General (

) - Displays the name of the component along with its connection and schema details. You can edit some of these properties.

) - Displays the name of the component along with its connection and schema details. You can edit some of these properties.

- Attributes (

) - Displays the details of all the attributes associated with the component.

) - Displays the details of all the attributes associated with the component.

- Column Mapping (

) - Allows you to map all the columns automatically. See Map Data Columns for more information.

) - Allows you to map all the columns automatically. See Map Data Columns for more information.

- Preview (

) - Displays a preview of the component. For Oracle tables, you can also view the statistics of the selected data entity. See View Statistics of Data Entities for details about the statistical information available.

) - Displays a preview of the component. For Oracle tables, you can also view the statistics of the selected data entity. See View Statistics of Data Entities for details about the statistical information available.

- Options (

) - Displays options such as

) - Displays options such as

- Truncate Table - Replaces any existing target table content with new data.

- Append - Inserts records from the flow into the target. Existing records are not updated.

- Incremental - Integrates data in the target table by comparing the records of the flow with existing records and updating the records when their associated data is not the same. Those that don't yet exist in the target are inserted.

The option includes an Auto compression feature that is set to

Trueby default. For data flow jobs that use the Incremental Update mode to load data onto a compressed Oracle target partition, the Auto compression feature recompresses the modified target partitions after the load completes successfully. For table partitions that are not originally compressed, the compression is skipped irrespective of whether Auto compression is set to true.Note

The Auto compression option is available to the ADMIN user or to a user with the DWROLE role. For data flows that have schema users other than ADMIN you need to either assign the DWROLE to the user or disable Auto compression to avoid execution errors.

Parent topic: About Data Flows

Map Data Columns

When you connect the source data entity with the target data entity, the column names are automatically mapped by the column names. You have a choice to map the columns by Position or by Name or map the columns manually using the Expression Editor.

To map columns by Position or by Name:

- Select the target Data Entity.

- Click the arrow icon present on the top right corner to expand the Properties Panel. This will give you more space to work with.

- In the Properties Panel, click the Column Mapping icon (

).

).

- To map the columns by Position or by Name, from the Auto Map drop-down menu, select By Position or By Name.

To map the columns manually:

- From the Auto Map drop-down menu, select Clear to clear the existing mappings.

- Drag and drop the attributes from the tree on the left to map with the Expression column.

- To edit an expression, click the Edit icon of the respective column. The Expression Editor appears allowing you to perform the required changes (for example, you can just add an expression-"UPPER" or open the Expression Editor to edit the expression).

Note

Use the expression editor only if you have complex expressions for a particular column. - Click OK.

Parent topic: About Data Flows

Validate and Execute a Data Flow

After your mappings are ready, you can proceed to validate and execute the data flow.

- Click Save.

After saving, if data needs to be staged before transforming, Transfer button is added to one or more links. You can click these buttons to set more options, if available.

- Click the Code Simulation icon (

) if you want to check the code that will run to complete the tasks that

are performed when you execute the data flow job. The source and target details

are displayed in different colors for ease of reference. This is handy if you

want to check if the mapping is correct before you run the job or if the job

fails. Note that the code cannot be used for debugging. For detailed information

about the job, see the Job Details page.

) if you want to check the code that will run to complete the tasks that

are performed when you execute the data flow job. The source and target details

are displayed in different colors for ease of reference. This is handy if you

want to check if the mapping is correct before you run the job or if the job

fails. Note that the code cannot be used for debugging. For detailed information

about the job, see the Job Details page.

- Click the Validate icon (

) in the toolbar above the design canvas to validate the data flow.

) in the toolbar above the design canvas to validate the data flow.

- After a successful validation, click the Execute icon (

) next to the Validate icon to execute the data flow.

) next to the Validate icon to execute the data flow.

If you have added variables to the data flow, the Variable values page appears that displays the list of variables that you have added to the data flow. You can choose to use the current value, the default value, or set a custom value for each variable. Note that the custom value is applied only to the current run of the data flow. The custom value does not persist for any subsequent sessions.

A message appears that displays the execution Job ID and name. To check the status of the data flow, see the Status panel on the right below the Properties Panel. For details about the Status panel, see Monitor Status of Data Loads, Data Flows, and Workflows. This panel also shows the link to the Job ID that you can click to monitor the progress on the Jobs page. For more information, see Create and Manage Jobs.

For data flows created using Oracle Object Storage connections, the data from the source CSV file is loaded into the target Oracle Autonomous Database. You can also export data from an Oracle Autonomous Database table to a CSV file in Oracle Object Storage.

Parent topic: About Data Flows