4 Customizing the Oracle Business Analytics Warehouse

You can customize the ETL functionality in Oracle Business Intelligence Applications (Oracle BI Applications).

What Is Customization in Oracle BI Applications?

In Oracle BI Applications, customization allows you to change the preconfigured behavior and analyze new information in your business intelligence dashboards.

For example, you might want to add a column to a dashboard by extracting data from the field HZ_CUST_ACCOUNTS.ATTRIBUTE1 and storing it in the Oracle Business Analytics Warehouse in the X_ACCOUNT_LOG field.

The type of data source that you have determines the types of customization available for you. Data sources are one of the following types:

-

Packaged applications (for example, Oracle Fusion or Oracle E-Business Suite), which use prepackaged adapters.

-

Non-packaged data sources, which use the Universal adapter.

The figure summarizes the category of customization that you can perform for each type of data source and type of modification.

Customizations are grouped into the following categories:

-

Category 1. In a Category 1 customization, you add additional columns from the source systems that have pre-packaged adapters and load the data into the existing Oracle Business Analytics Warehouse tables. See Category 1 Customizations: Adding Columns to Existing Fact or Dimension Tables.

-

Category 2. In a Category 2 customization, you use pre-packaged adapters to add new fact or dimension tables to the Oracle Business Analytics Warehouse. Category 2 customizations normally require that you build new Source Dependent Extract (SDE) and Source Independent Load (SIL) mappings. See Category 2 Customizations: Adding Additional Tables.

-

Category 3. In a Category 3 customization, you use the Universal adapter to load data from sources that do not have pre-packaged adapters. See Category 3 Customizations: Adding New Data.

When you customize ETL Packages and Interfaces, you usually work in the \Oracle BI Applications\Mappings folder in the Projects view in the Designer of Oracle Data Integrator (ODI).

Note:

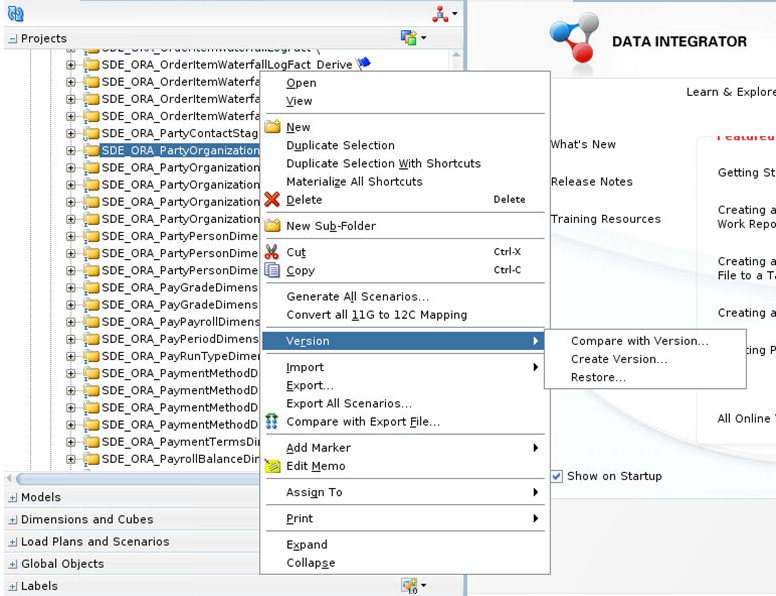

The customization methodology is to make a copy of the ETL task and version both the original and copy while a datastore is simply versioned. These versions allow you to revert functionality if required as well as identify changes that have been introduced through customization, patches, or upgrades.Note:

You must not rename, delete, or move packaged objects in the ODI repository unless you are directed to do so by Oracle Support. For example, you must not move a datastore in the Fact Staging folder to the Dimension Staging folder, or must not rename a packaged index named W_EXAMPLE_F_U1 to W_EXAMPLE_F_U2, unless directed by Oracle Support. You can make copies of packaged ODI objects and place them in custom folders, but do not delete, rename, or move the original objects.About the Customization Process

You can customize your ETL functionality after you have performed a Business Analysis and Technical Analysis.

However, this information does not cover the other typical tasks that you must perform:

-

Business Analysis — before you start customization, you typically analyze your current BI dashboards to determine the changes you need to support your business or organization.

-

Technical Analysis — when you have identified your business requirements, you must determine the technical changes you need to make by identifying source tables, staging tables, target tables, and ODI Packages and Interfaces that you must modify.

-

Repository (RPD) Modification — having made the customizations in the ETL functionality, you must modify your repository to expose the new data in your dashboards.

Patching Customized Objects

This section explains how to re-apply a customization to an object that has been patched. For example, if you install an Oracle BI Applications patch that modifies the Supply Chain and Order Management application, you must manually re-apply customizations that you have made to the Supply Chain and Order Management application.

A patch only installs changed repository objects, not the whole Work Repository. Therefore, you must re-apply customizations to mappings that have been changed by the patch. For example, if a patch only modifies the Supply Chain and Order Management application, you must manually re-apply customizations that you have made to the Supply Chain and Order Management application. The patch does not affect customizations in other applications.

As part of customizing an ETL task (including interfaces and package under a specific task folder), you copy the task folder to be customized to a Custom folder, version the copy once to freeze the original state, and version the copy again to capture the customized state. To modify and version ETL customizations, see Extending Mappings in Oracle Business Analytics Warehouse.

You can customize and patch different ODI objects. Depending on the object, you must follow different steps to merge the patch and customizations.

If an object is customized, but is not being patched, this section does not apply. Similarly, if an object is patched but is not customized, you don’t need to follow these steps.

Note:

All customization steps have you create a Custom adaptor folder where customized ETL tasks are stored. This is not required, but is considered a best practice to make identifying customized content easier.Patching Customized ETL Tasks

If you have customized the ETL task to be patched, follow these steps. Note that there are two use cases where an ETL task might have been customized, either at the direction of Oracle to quickly apply a fix before a patch becomes available or as the result of normal implementation activities to enhance Oracle BI Applications with additional functionality.

Customization Performed at Direction of Oracle

If you have customized at the direction of Oracle as a means to manually implement a fix, you must have a separate copy of the original object in a separate Custom or Patch folder. The ETL task has a scenario associated with it whose version is set to a value higher than 001. To apply a patch, simply delete this scenario. The patched out-of-the-box scenario is automatically used the next time when the load plan runs.

Customization Performed as an Extension of Functionality

If you have customized the object being patched to introduce new behavior, merge the fix and the customizations into a single ETL task. When you first customize the task, the customization methodology requires that you create a copy of the ETL task and version it, for example to version 001. This version represents the original state of the ETL task. Prior to customization, you version the ETL task again, for example to version 002. You then apply your customizations to the new version. You can use the version compare utility of ODI to note the changes that have been introduced.

Prior to patching the out-of-the-box ETL task, you must version the out-of-the-box ETL task, to version 001 for example, then version it again, for example to 002. You then apply the patch. The 001 version represents the original state of the ETL task and 002 now represents the patched state. You can compare the out-of-the-box 002 version to the out-of-the-box 001 version to determine the changes introduced by the patch.

Note that you now have two original, or 001, versions, both representing the original state of the ETL task. You also now have two 002 versions, one a custom version and the other a patched version.

To get the fixes from the patch to appear in the custom folder, one approach is to manually apply the changes noted in the patched 002 version directly in the customized 002 version and then regenerate the scenario. This is the simplest approach and is recommended when a patch fix is relatively simple to determine and deploy.

If the patch fix is relatively complex while the customizations are relatively simple, an alternate approach is to re-apply the customizations to a new copy of the patched version. In this case, you rename the 002 custom version of the ETL task, for example to include Old or Prior as a suffix, copy the 002 patched version to the Custom adaptor, version it, version it again, then re-apply your customizations to the latest version. Generate the scenario with a larger number. If the original ETL task uses 001 and your prior customized ETL task uses 002, then you use 003 with this latest copy.

Patching Customized Datastores

As part of the customization methodology, datastores that are customized are first versioned to represent the original out-of-the-box state and versioned again to represent the customized state. If you have customized a datastore and want to patch the datastore, the patching process merges the datastore to reflect the custom and patch changes in the same datastore. You might want to create another version of the datastore prior to applying the patch, which represents the patched and customized state.

Patching Other Objects

Oracle does not recommend directly customizing commonly used objects such as Knowledge Modules, Variables, and User Defined Functions. If you need to customize such an object to manually apply a fix at Oracle’s direction or to enhance out-of-the-box functionality, make a copy of the object, version to freeze the original state, and then version again to reflect the customized state. At this point, ETL tasks follow the same customization steps and are modified to use the custom copies of these objects. Follow the same steps as patching customized ETL tasks.

Category 1 Customizations: Adding Columns to Existing Fact or Dimension Tables

Category 1 customizations add columns from the source systems that have pre-packaged adapters and load the data into the existing Oracle Business Analytics Warehouse tables.

Category 1 customizations involve extracting additional columns from the source systems for which pre-packaged adapters are included (for example, Oracle E-Business Suite) and loading the data into the existing Oracle Business Analytics Warehouse tables. For Category 1 customizations, data can also come from non-packaged sources, but this section assumes that you have already mapped the sources with a Universal adapter and you need to extend to capture additional columns. The initial mapping of a Universal adapter is considered a Category 3 customization. For information, see Category 3 Customizations: Adding New Data.

To see additional columns in the Oracle Business Analytics Warehouse, you must pass the columns first through the ETL process. The existing mappings and tables are extensible. Oracle BI Applications provides a methodology to extend preconfigured mappings to include these additional columns and load the data into the existing tables.

Oracle BI Applications recognizes two types of customization: extension and modification. The supported extension logic allows you to add to the existing objects. For example, you can extract additional columns from a source, pass them through the existing mappings, and populate new columns added to an existing table. Generally, Oracle BI Applications does not allow you to modify the existing logic or columns. You should not change the existing calculations to use different columns, and you should not remap the existing columns to be loaded from different sources.

For example, if you want to calculate revenue differently from the existing logic, create a new column (for example, X_REVENUE) and populate it with a custom mapping expression. You can then remap the Oracle Business Intelligence repository to point to the new X_REVENUE column.

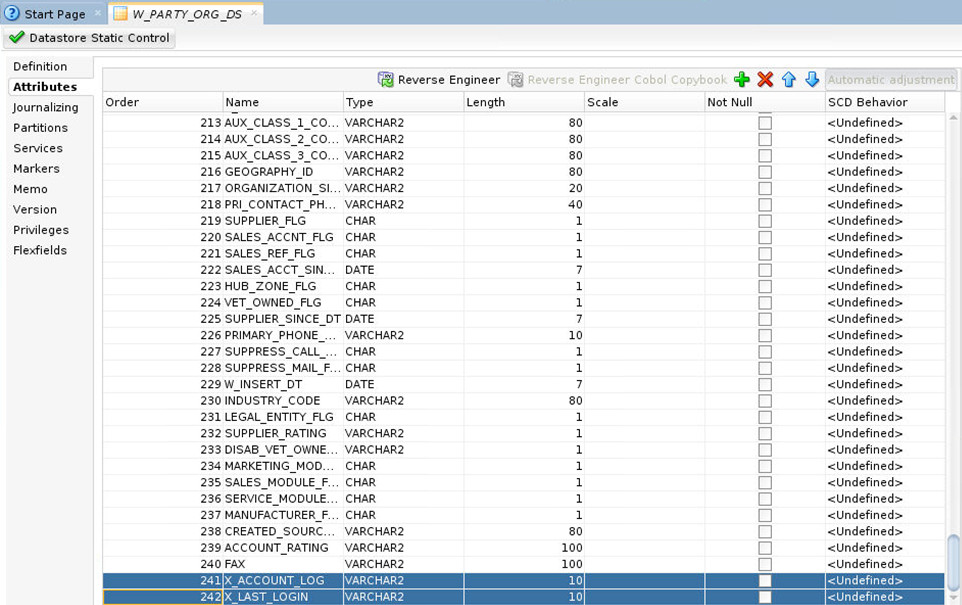

Most datastores have a single placeholder column named X_CUSTOM. Each ETL task has mapping expressions to populate this column. These serve as templates for customizing ODI datastores and interfaces. When creating new custom columns, follow the naming convention of including the X_ prefix to help distinguish custom columns.

In the figure, the preconfigured logic is shaded in gray. Do not modify anything contained within these objects. Add customizations to the existing objects rather than creating new packages and interfaces, which allows them to run in parallel to the existing logic.

Extending Mappings in Oracle Business Analytics Warehouse

The common reason for extending the Oracle Business Analytics Warehouse is to extract the existing columns from a source system and map them to an existing Oracle Business Analytics Warehouse table (either fact or dimension). This type of change typically requires you to extend the interfaces within a SIL package.

If the data is coming from a packaged source, then you must extend the interfaces within an appropriate SDE adapter package. If the data is coming from a non-packaged source, then you must use a Universal adapter package. If an appropriate package does not already exist, you must create a Universal adapter package with interfaces.

To extend an ODI package in the Oracle Business Analytics Warehouse:

Example of Extending the Oracle Business Analytics Warehouse

This working example describes adding columns from source systems that have pre-packaged adapters and loading the data into the existing Oracle Business Analytics Warehouse tables (known as a Category 1 customization).

In this example, a company has identified additional fields in a source system table HZ_CUST_ACCOUNTS that must be added to the Oracle Business Analytics Warehouse table W_ORG_D. Data is passed from an existing source table to an existing target table, known as a category 1 customization. The company uses an extension field to capture information related to organizations referred to as ACCOUNT_LOG. In addition, the company wants to include the name of the person who last updated the record as an attribute of the organization.

In this example, you want to extract information from the following two fields that are not extracted by the out-of-the-box application:

-

HZ_CUST_ACCOUNTS.ATTRIBUTE1

ATTRIBUTE1 is currently not extracted from the source table HZ_CUST_ACCOUNTS into the temporary table SQ_BCI_CUSTOMERS.

-

HZ_CUST_ACCOUNTS.LAST_UPDATE_LOGIN

LAST_UPDATE_LOGIN is currently extracted from the source table HZ_CUST_ACCOUNTS into the temporary table SQ_BCI_CUSTOMERS but is not loaded into the staging table W_PARTY_ORG_DS.

The following diagram shows the two fields ATTRIBUTE1 and LAST_UPDATE_LOGIN as they pass from the source system table to the target table via the tables: HZ_CUST_ACCOUNTS to SQ_BCI_CUSTOMERS to W_PARTY_ORG_DS to W_PARTY_ORG_DS_SQ_Joiner to W_PARTY_ORG_D.

The customization is done in two parts:

-

SDE Processing that extracts data from the source system and loads it into the staging area. See Example of Extracting Data from an Oracle EBS 12.2 Data Packaged Source into the ODI Staging Area .

-

SIL Processing that extracts data from the staging area and loads it into the target table. See Example of Loading Data from the Staging Area into an Existing Target Table.

Example of Extracting Data from an Oracle EBS 12.2 Data Packaged Source into the ODI Staging Area

This section shows how data is extracted from an existing source table into the staging area.

The following diagram shows the new ETL mappings that load data into the staging area and the ODI interfaces to modify.

Note:

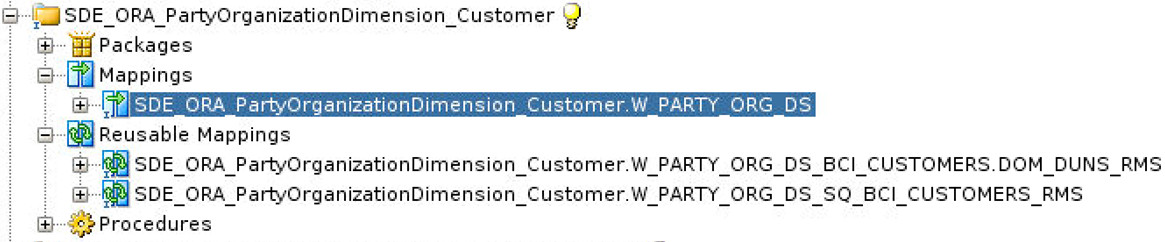

The new ETL mappings diagram shows only the incremental interfaces.To customize the ETL process for loading these two fields into the staging area:

-

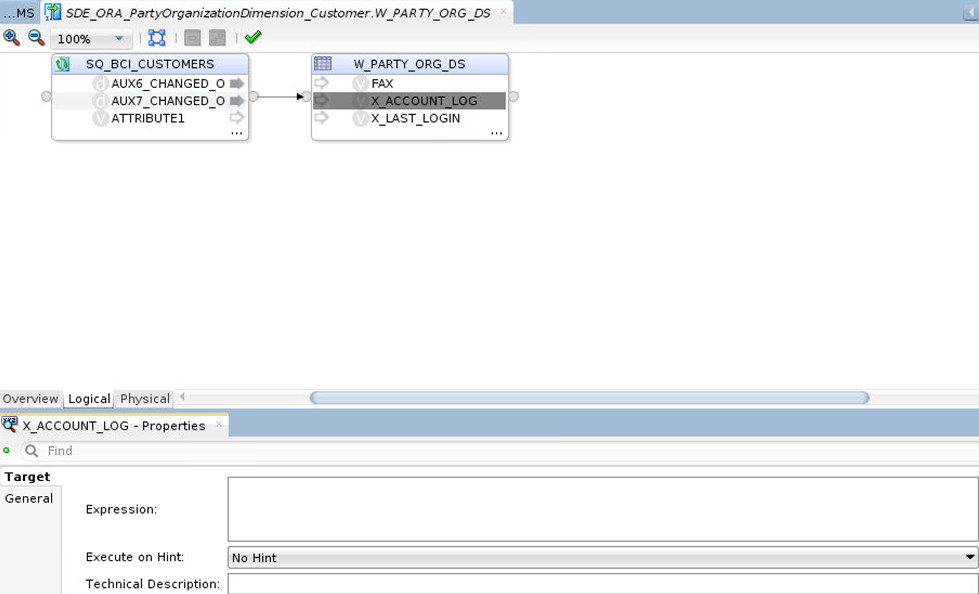

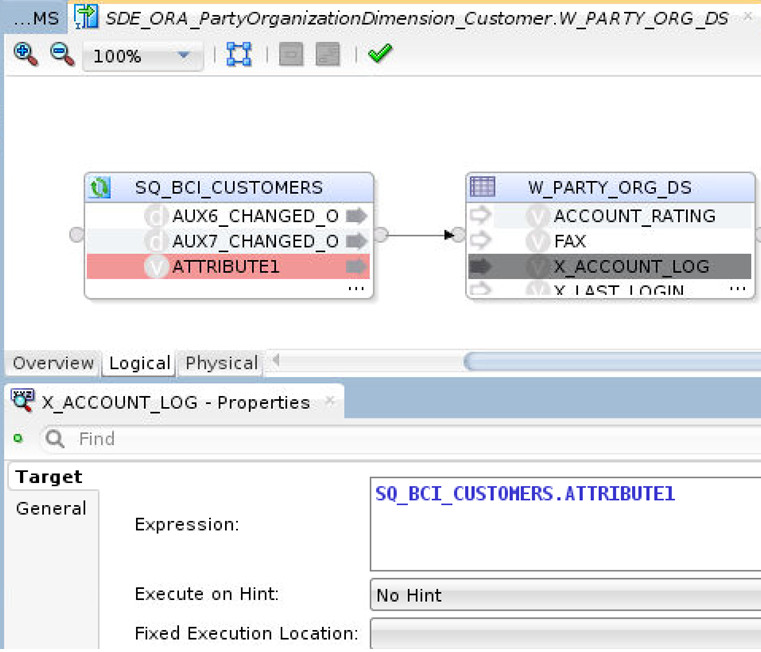

Extract the HZ_CUST_ACCOUNTS.ATTRIBUTE1 value from the source table HZ_CUST_ACCOUNTS into the temporary table SQ_BCI_CUSTOMERS using the Interface SDE_ORA_PartyOrganizationDimension_Customer.W_PARTY_ORG_DS_SQ_BCI_CUSTOMERS_RMS.

Then, load the SQ_BCI_CUSTOMERS.ATTRIBUTE1 value from the temporary table SQ_BCI_CUSTOMERS into the X_ACCOUNT_LOG field in the staging table W_PARTY_ORG_DS using the Interface SDE_ORA_PartyOrganizationDimension_Customer.W_PARTY_ORG_DS.

-

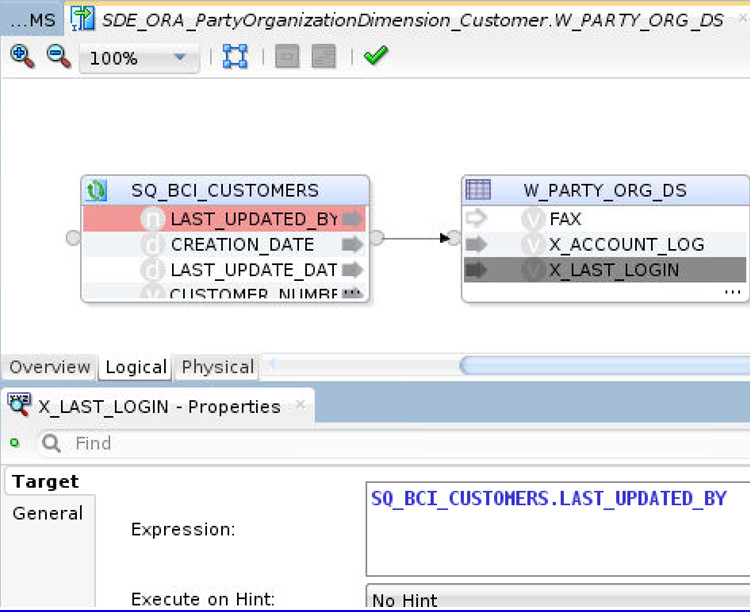

Load the SQ_BCI_CUSTOMERS.LAST_UPDATE_LOGIN value from the temporary table SQ_BCI_CUSTOMERS into the X_LAST_LOGIN field in the staging table W_PARTY_ORG_DS using the Interface SDE_ORA_PartyOrganizationDimension_Customer.W_PARTY_ORG_DS.

Note:

Remember that LAST_UPDATE_LOGIN value is already extracted from the source table HZ_CUST_ACCOUNTS into the temporary table SQ_BCI_CUSTOMERS, but is not loaded into the staging table W_PARTY_ORG_DS.

To extract data from an Oracle EBS 12.2 Data Packaged Source:

Now that you have set up the ETL process for extracting and staging the data, you must load the new data into the data warehouse. See Example of Loading Data from the Staging Area into an Existing Target Table.

Example of Loading Data from the Staging Area into an Existing Target Table

This section shows how data is loaded from the staging area into an existing target table.

The following diagram shows the new ETL mappings to load the new data from the staging area into the target table and the ODI Interfaces to modify.

To customize the ETL process to load these two fields into the staging area:

-

Load the X_ACCOUNT_LOG value and X_LAST_LOGIN value from the staging table W_PARTY_ORG_DS into the temporary table W_PARTY_ORG_DS_SQ_Joiner using the Interface SIL_PartyOrganizationDimension.W_PARTY_ORG_D_SQ_Joiner_RMS.

-

Load the X_ACCOUNT_LOG value and X_LAST_LOGIN value from the temporary table W_PARTY_ORG_DS_SQ_Joiner into the Target table W_PARTY_ORG_D using the Interface SIL_PartyOrganizationDimension.W_PARTY_ORG_D.

To extract data from an Oracle EBS 12.2 Data Packaged Source:

Tips for Modifying the SQ_BCI_ Interface

Follow these tips to modify the SQ_BCI_Interface:

-

Make sure that you define a new source table on the right side of a LEFT OUTER join syntax with the existing source tables. Using an INNER join or a RIGHT OUTER join might result in loss of records.

-

Make sure that you define joins to match on a unique set of values. If you do not define a join that ensures a unique relationship, you might get a Cartesian product, which changes the granularity and results in duplicate errors.

If it is not possible to define a unique join, then do the following:

Other Types of Customizations Requiring Special Handling

These types of customization require special handling.

Modifying Category 2 SCD Behavior

The Oracle BI Applications ETL process supports Type I and Type II slowly changing dimension behavior. Some dimensions are enabled only for Type I behavior while other dimensions are enabled to also support Type II behavior.

Of those dimensions that support Type II behavior, different dimension attributes have different Slowly Changing behavior including some attributes being treated as Type I.

Note:

Modifying the Type-II tracking logic is the only change that you should make to the ready-to-use logic.To modify a Category 2 SCD Trigger:

- In ODI Designer, modify the dimension datastore.

- In the Models view, expand the Oracle BI Applications folder, Oracle BI Applications (Model), and Dimension (Submodel).

- Double-click the Dimension table.

- In the Definition tab, change the OLAP type value to either Dimension (only supports Type I changes) or Slowly Changing Dimension (supports Type II changes).

- Modify the SIL Dimension Task.

- Navigate to the SIL task that populates this dimension.

- Double-click the Main interface.

- In the Flow subtab, select the Target (ORACLE_BI_APPLICATIONS) window.

- If the Property Window is not visible, open it by selecting Property under Window.

- Change the IKM Selector value to IKM BIAPPS Oracle Slowly Changing Dimension if enabling Type II behavior or IKM BIAPPS Oracle Incremental Update if removing Type II behavior.

- Regenerate the scenario.

Enabling or Disabling Type II Behavior Associated with a Dimension

You can modify which columns are treated as Type I or Type II in a dimension that is configured to support Type II behavior. If a dimension is configured to support only Type I behavior, the following changes have no effect as all columns are treated as Type I.

Adding a Dimension to an Existing Fact

Add a dimension and dimension staging datastores along with the associated SDE and SIL processes to an existing fact table. This requires extending the fact and fact staging tables to reflect the association with the new dimension.

Adding a DATE_WID Column to a Fact

This use case is similar to adding a regular Dimension to a fact but in this case, a Date dimension is used. There are several Date related dimension, each representing dates in a different manner (fiscal, enterprise, and so on) and different granularities (day, week, month, etc.).

Joins between a fact and Date dimension table are performed on a Date specific WID column. The Date WID column is a smart key value that represents the date in YYYYMMDD format. You must not perform a lookup to resolve the ROW_WID of the Date dimension, rather you pass the Date column through the ETL process and convert it to this format.

Each fact table has exactly one canonical Date specific WID column. This is the primary date used to drive various date-related calculations. There is no particular metadata to identify this column, but lookups to effective dated tables use this column in the ETL and various date-related expressions in the repository also use this column. All packaged fact tables have a single canonical date already identified. When creating custom fact tables, nominate one Date WID column as the canonical date and use consistently.

Follow the same steps as adding a dimension to a fact with the following changes. You must not create a custom SDE as you use the existing Date dimension.

You must extend the facts related datastores, W_Fact Name_FS and W_Fact Name_F and tasks to reflect the new dimension.

To extend the facts related datastores and tasks:

Category 2 Customizations: Adding Additional Tables

Category 2 customizations use pre-packaged adapters to add new fact or dimension tables to the Oracle Business Analytics Warehouse.

You can build entirely new tables to load the data from a source table that is not already extracted from. For example, you might want to create a new Project dimension table. In this case, you create new dimension and staging tables as well as new extract and load ETL mappings.

When creating a new custom table, use the prefix WC_ to help distinguish custom tables from tables provided by Oracle as well as to avoid naming conflicts in case Oracle later releases a table with a similar name. For example, for your Project dimension, you might create a WC_PROJECT_DS and a WC_PROJECT_D table.

When you create a new dimension or fact table, use the required system columns that are part of each of the Oracle Business Analytics Warehouse tables to maintain consistency and enable you to reference the existing table structures. When you create a new table, you must define the table and indices in ODI Designer Models area first. The destination model for the Oracle Business Analytics Warehouse is Oracle BI Applications.

About the Main Required Columns

For custom staging tables, the required columns are:

-

INTEGRATION_ID — Stores the primary key or the unique identifier of a record as in the source table.

-

DATASOURCE_NUM_ID — Stores the data source from which the data is extracted.

For dimension and fact tables, the required columns are the INTEGRATION_ID and DATASOURCE_NUM_ID columns plus these:

-

ROW_WID — Stores a sequence number generated during the ETL process, which is used as a unique identifier for the Oracle Business Analytics Warehouse.

-

ETL_PROC_WID — Stores the ID of the ETL process information.

About the DATASOURCE_NUM_ID Column

The tables in the Oracle Business Analytics Warehouse schema have DATASOURCE_NUM_ID as part of their unique user key. While the transactional application normally ensures that a primary key is unique, it is possible that a primary key is duplicated between transactional systems. To avoid problems when loading this data into the data warehouse, uniqueness is ensured by including the DATASOURCE_NUM_ID as part of the user key. This means that the rows are loaded in the same data warehouse tables from different sources if this column is given a different value for each data source.

Additional Information About Customizing

Learn about customization in Oracle BI Applications.

About the Update Strategy

For loading new fact and dimension tables, design a custom process on the source side to detect the new and modified records. The SDE process is designed to load only the changed data (new and modified). If the data is loaded without the incremental process, the loaded data is erroneously updated again. For example, the logic in the preconfigured SIL mappings looks up the destination tables based on the INTEGRATION_ID and DATASOURCE_NUM_ID and returns the ROW_WID if the combination exists, in which case it updates the record. If the lookup returns null, it inserts the record instead. In some cases, last update date(s) stored in target tables are also compared in addition to the columns specified to determine insert or update. Look at the similar mappings in the preconfigured folder for more details.

About Indices and Naming Conventions

Staging tables typically do not require any indices. Use care to determine if indices are required on staging tables. Create indices on all the columns that the ETL uses for dimensions and facts (for example, ROW_WIDs of Dimensions and Facts, INTEGRATION_ID and DATASOURCE_NUM_ID and flags). Carefully consider which columns or combination of columns filter conditions should exist, and define indices to improve query performance. Inspect the preconfigured objects for guidance. Name all the newly created tables as WC_. This helps visually isolate the new tables from the preconfigured tables. Keep good documentation of the customizations done; this helps when upgrading your data warehouse. Once the indices are decided upon, register the indices in the ODI Model. See Adding an Index to an Existing Fact or Dimension Table.

Adding New Fact Tables to Oracle Business Analytics Warehouse

Custom tables must follow the WC_ naming convention to help distinguish from preconfigured tables.

To add a new fact table:

Creating Fact Tables

You can create a new fact table using the WC_SAMPLE_FS and WC_SAMPLE_F datastores as templates. You should set the OLAP type to Fact Table and use the Generate DDL procedure to create the tables in the database.

Importing Fact Tables into ODI

You can import the existing fact tables into ODI using the RKM of Oracle BI Applications.

To import fact tables into ODI:

Creating Custom Dimension Datastore and Tasks

You can create the custom dimension datastores and tasks.

Create a WC_<dimension name>_D datastore under the Oracle BI Applications – Dimension model. Create a WC_<dimension name>_DS datastore under the Oracle BI Applications – Dimension Stage model. Use the WC_SAMPLE_DS and WC_SAMPLE_D datastores as templates. These datastores include all required system columns. Custom tables should follow the WC_ naming convention to help distinguish from shipped tables.

Note:

The specific submodel that a table belongs to drives the table maintenance behavior. For example, tables in the Dimension Stage submodel are always truncated at each ETL run while tables in the Dimension submodel are truncated only during a Full ETL run. Do not create a Custom submodel to place your datastores in as table maintenance is not implemented properly for tables in such a submodel.You can define a dimension either in ODI, generating DDL to create the table in the database, or by defining the table in the database and importing the definition into ODI using the RKM of Oracle BI Applications. If you use the RKM, the imported table is automatically placed in the Other submodel and you must move the imported table into the Dimension Staging and Dimension submodels as appropriate. Also, set the OLAP type for the dimension to Dimension or Slowly Changing Dimension as appropriate.

The X_CUSTOM placeholder load plan components are provided as templates, but do not use them for new custom content. Create a new dimension group in Oracle BI Applications Configuration Manager. See About Creating Custom Fact and Dimension Groups.

Creating Dimension Tables in ODI

You can create a new dimension table using the WC_SAMPLE_DS and WC_SAMPLE_D datastores as templates. You should set the OLAP type to Dimension or Slowly Changing Dimension as appropriate.

Importing Custom Dimension Tables into ODI

You can import the existing dimension tables into ODI using the RKM of Oracle BI Applications.

To import a dimension table into ODI:

Creating ODI Sequences for Custom Dimensions

You can create an ODI sequence for a custom dimension.

You can use a database sequence to populate the ROW_WID column of the dimension. You can use the Generate DDL procedure to generate the required DDL for creating the database trigger in the database. Use WC_SAMPLE_D_SEQ as a template.

To create an ODI sequence for the custom dimension:

Creating SDE and SIL Tasks

You can create SDE and SIL tasks in the Custom SDE and SIL adaptor folders.

About Creating Custom Fact and Dimension Groups

Once you have completed customization steps to include custom tables and ETL tasks, create custom fact and dimension groups in Configuration Manager and ODI to incorporate your changes into the Load Plans that orchestrate ETL.

The Data Warehouse tables are populated by ETL tasks which are orchestrated by a Load Plan. When creating custom content, new data warehouse tables and associated ETL tasks are created and you must incorporate them into a Load Plan to populate the custom tables along with the standard tables.

You can build a Load Plan by assembling several Load Plan Components into an integrated load plan. You can organize Load Plan Components into level 0, 1, 2, and 3 components. Level 3 Load Plan Components execute the actual ETL tasks, while the other Load Plan Components are system Load Plan Components, and you can use the other load plan components in building up and organizing the Load Plan. The Load Plan Generator assembles these Load Plan Components into a combined Load Plan.

You must create custom Load Plan Components to have custom content such as custom dimensions or custom facts incorporated into a Load Plan that includes out-of-the-box content. The Load Plan Generator can then include these components when building a load plan.

To create custom tables and ETL tasks, see Customizing the Oracle Business Analytics Warehouse. The following topics describe how to create new Load Plan Components to execute these ETL tasks. Use Pre-existing 3 Dims X_CUSTOM_DIM and 3 Dims X_CUSTOM_FG Load Plan Components as templates and the steps in the following topics describe copying these X_CUSTOM components and updating the copies to reflect custom content. The steps are essentially the same regardless of which group you are adding; only the template you start with changes.

Creating Custom Fact and Dimension Groups in ODI

The first step in creating custom fact and dimension groups is performed in ODI Designer.

To create custom fact and dimension groups in ODI:

Creating Custom Fact and Dimension Groups in Configuration Manager

The last step in creating custom fact and dimension groups is performed in Configuration Manager.

Note:

If you encounter an error while creating a fact or dimension group with CUSTOM_FG or CUSTOM_DIM code, then create with a different code and rename the code to CUSTOM_FG/DIM later.Creating Custom Dimension Groups

To create a Custom Dimension Group, you must associate it to an existing Fact Group, so if the custom Dimension Group is to be associated with a custom Fact Group, be sure to add the Fact Group first.

To create a Custom Dimension Group:

Category 3 Customizations: Adding New Data

Category 3 customizations use the Universal adapter to load data from sources that do not have pre-packaged adapters.

Adding New Data as a Whole Row Into a Standard Dimension Table

Follow this procedure to add new data as a whole row into a standard dimension table in the Oracle Business Analytics Warehouse.

Configuring Extracts

Each application has prepackaged logic to extract particular data from a particular source. You must capture all data relevant to your reports and ad hoc queries by addressing what type of records you want and do not want to load into the Oracle Business Analytics Warehouse.

Extract interfaces generally consist of source tables, expressions used in the target columns, and a staging table. If you want to extract new data using the existing interface, modify the extract interface to include the new data.

Extracting Data from a New Source Table

Extract interfaces (which have the SQ_* naming convention) reside in source-specific folders within the repository. Use the Extract interfaces to extract data from the source system. You can configure these extract interfaces to perform the following:

-

Extract data from a new source table.

-

Set incremental extraction logic.

Extracting New Data Using an Existing Source Table

You can configure extract mappings and Interfaces in the Oracle Business Analytics Warehouse to accommodate additional source data.

For example, if your business divides customer information into separate tables based on region, then you might need to set up the extract interface to include data from these tables.

To modify an existing interface to include new data:

- Modify the existing interface to extract information from the source, and add it to an appropriate extension column.

- Modify the Expressions in the target table to perform any necessary transformations.

- Save the changes.

- Regenerate the scenario.

You must determine which type of extension column to map the data to in the staging table. After you modified the extract interface, you must also modify the corresponding load interfaces (SDE and SIL) to make sure that the extension columns that you added are connected all the way from the staging table to the target data warehouse table.

Setting Up the Delimiter for a Source File

When you load data from a Comma Separated Values (CSV) formatted source file, if the data contains a comma character (,), you must enclose the source data with a suitable enclosing character known as a delimiter that does not exist in the source data.

Note:

Alternatively, you can configure your data extraction program to enclose the data with a suitable enclosing character automatically.For example, you might have a CSV source data file with the following data:

Months, Status January, February, March, Active April, May, June, Active

If you load this data without modification, ODI loads January as the Months value, and February as the Status value. The remaining data for the first record (that is, March, Active) is not loaded.

To enable ODI to load this data correctly, you might enclose the data in the Months field within the double-quotation mark enclosing character (" ") as follows:

Months, Status "January, February, March", Active "April, May, June", Active

After modification, ODI loads the data correctly. In this example, for the first record, ODI loads January, February, March as the Months value, and Active as the Status value.

To set up the delimiter for a source file:

You can now load data from the modified CSV file.

Configuring Loads

You can customize the way Oracle BI Applications loads data into the Oracle Business Analytics Warehouse.

About Primary Extract and Delete Mappings Process

Before you decide to enable primary extract and delete sessions, it is important to understand their function within the Oracle Business Analytics Warehouse. Primary extract and delete mappings allow your analytics system to determine which records are removed from the source system by comparing primary extract staging tables with the most current Oracle Business Analytics Warehouse table.

The primary extract mappings perform a full extract of the primary keys from the source system. Although many rows are generated from this extract, the data only extracts the Key ID and Source ID information from the source table. The primary extract mappings load these two columns into staging tables that are marked with a *_PE suffix.

The figure provides an example of the beginning of the extract process. It shows the sequence of events over a two-day period during which the information in the source table has changed. On day one, the data is extracted from a source table and loaded into the Oracle Business Analytics Warehouse table. On day two, Sales Order number three is deleted and a new sales order is received, creating a disparity between the Sales Order information in the two tables.

The figure shows the primary extract and delete process that occurs when day two's information is extracted and loaded into the Oracle Business Analytics Warehouse from the source. The initial extract brings record four into the Oracle Business Analytics Warehouse. Then, using a primary extract mapping, the system extracts the Key IDs and the Source IDs from the source table and loads them into a primary extract staging table.

The extract mapping compares the keys in the primary extract staging table with the keys in the most current the Oracle Business Analytics Warehouse table. It looks for records that exist in the Oracle Business Analytics Warehouse, but do not exist in the staging table (in the preceding example, record three), and sets the delete flag to Y in the Source Adapter, causing the corresponding record to be marked as deleted.

The extract mapping also looks for any new records that have been added to the source, and which do not already exist in the Oracle Business Analytics Warehouse; in this case, record four. Based on the information in the staging table, Sales Order number three is physically deleted from Oracle Business Analytics Warehouse. When the extract and load mappings run, the new sales order is added to the warehouse.

About Working with Primary Extract and Delete Mappings

The primary extract (*_Primary) and delete mappings (*_IdentifyDelete and *_Softdelete) serve a critical role in identifying which records have been physically deleted from the source system. However, there are some instances when you can disable or remove the primary extract and delete mappings, such as when you want to retain records in the Oracle Business Analytics Warehouse that are removed from the source systems' database and archived in a separate database.

Because delete mappings use Source IDs and Key IDs to identify purged data, if you are using multiple source systems, you must modify the SQL Query statement to verify that the proper Source ID is used in the delete mapping. In addition to the primary extract and delete mappings, configuration of the delete flag in the load mapping also determines how record deletion is handled.

You can manage the extraction and deletion of data in the following ways:

-

Deleting the configuration for source-archived records

-

Deleting records from a particular source

-

Enabling delete and primary-extract sessions

-

Configuring the Record Deletion flag

-

Configuring the Record Reject flag

Deleting the Configuration for Source-Archived Records

Some sources archive records in separate databases and retain only the current information in the main database. If you have enabled the delete mappings, you must reconfigure the delete mappings in the Oracle Business Analytics Warehouse to retain the archived data.

To retain source-archived records in the Oracle Business Analytics Warehouse, make sure the LAST_ARCHIVE_DATE parameter value is set properly to reflect your archive date. The delete mappings do not mark the archived records as deleted.

Customizing Stored Lookups and Adding Indexes

Customizing stored lookups and adding indexes applies to all categories of customization in Oracle BI Applications.

How Dimension Keys are Looked Up and Resolved

By default, dimension key resolution is performed by the Oracle Business Analytics Warehouse in the load mapping. The load interface uses prepackaged, reusable lookup transformations to provide pre-packaged dimension key resolution.

There are two commonly used methods for resolving dimension keys. The first method, which is the primary method used, is to perform a lookup for the dimension key. The second method is to supply the dimension key directly into the fact load mapping.

If the dimension key is not provided to the Load Interface through database joins, the load mapping performs the lookup in the dimension table. The load mapping does this using prepackaged Lookup Interfaces. To look up a dimension key, the Load Interface uses the INTEGRATION_ID, the DATASOURCE_NUM_ID, and the Lookup date, which are described in the following table:

| Port | Description |

|---|---|

|

INTEGRATION ID |

Uniquely identifies the dimension entity within its source system. Formed from the transaction in the Source Adapter of the fact table. |

|

DATASOURCE_NUM_ID |

Unique identifier of the source system instance. |

|

Lookup Date |

The primary date of the transaction; for example, receipt date, sales date, and so on. |

If Type II slowly changing dimensions are enabled, the load mapping uses the unique effective dates for each update of the dimension records. When a dimension key is looked up, it uses the fact's primary or canonical date to resolve the appropriate dimension key. The effective date range gives the effective period for the dimension record. The same entity can have multiple records in the dimension table with different effective periods due to Type II slowly changing dimensions. This effective date range is used to exactly identify a record in its dimension, representing the information in a historically accurate manner.

There are four columns needed for the load interface lookup: INTEGRATION ID, DATASOURCE_NUM_ID, and Lookup Date (EFFECTIVE_FROM_DT and EFFECTIVE_TO_DATE). The lookup outputs the ROW_WID (the dimension's primary key) to the corresponding fact table's WID column (the fact tables foreign key).

About Resolving Dimension Keys

By default, dimension key resolution is performed by the Oracle Business Analytics Warehouse in the load mapping. The load interface uses prepackaged, reusable lookup transformations to provide pre-packaged dimension key resolution.

There are two commonly-used methods for resolving dimension keys. The first method, which is the primary method used, is to perform a lookup for the dimension key. The second method is to supply the dimension key directly into the fact load mapping.

Resolving the Dimension Key Using Lookup

If the dimension key is not provided to the Load Interface through database joins, the load mapping performs the lookup in the dimension table. The load mapping does this using prepackaged Lookup Interfaces. To look up a dimension key, the Load Interface uses the INTEGRATION_ID, the DATASOURCE_NUM_ID, and the Lookup date, which are described in the following table:

| Port | Description |

|---|---|

|

INTEGRATION ID |

Uniquely identifies the dimension entity within its source system. Formed from the transaction in the Source Adapter of the fact table. |

|

DATASOURCE_NUM_ID |

Unique identifier of the source system instance. |

|

Lookup Date |

The primary date of the transaction; for example, receipt date, sales date, and so on. |

If Type II slowly changing dimensions are enabled, the load mapping uses the unique effective dates for each update of the dimension records. When a dimension key is looked up, it uses the fact's primary or canonical date to resolve the appropriate dimension key. The effective date range gives the effective period for the dimension record. The same entity can have multiple records in the dimension table with different effective periods due to Type II slowly changing dimensions. This effective date range is used to exactly identify a record in its dimension, representing the information in a historically accurate manner.

There are four columns needed for the load interface lookup: INTEGRATION ID, DATASOURCE_NUM_ID, and Lookup Date (EFFECTIVE_FROM_DT and EFFECTIVE_TO_DATE). The lookup outputs the ROW_WID (the dimension's primary key) to the corresponding fact table's WID column (the fact tables foreign key).

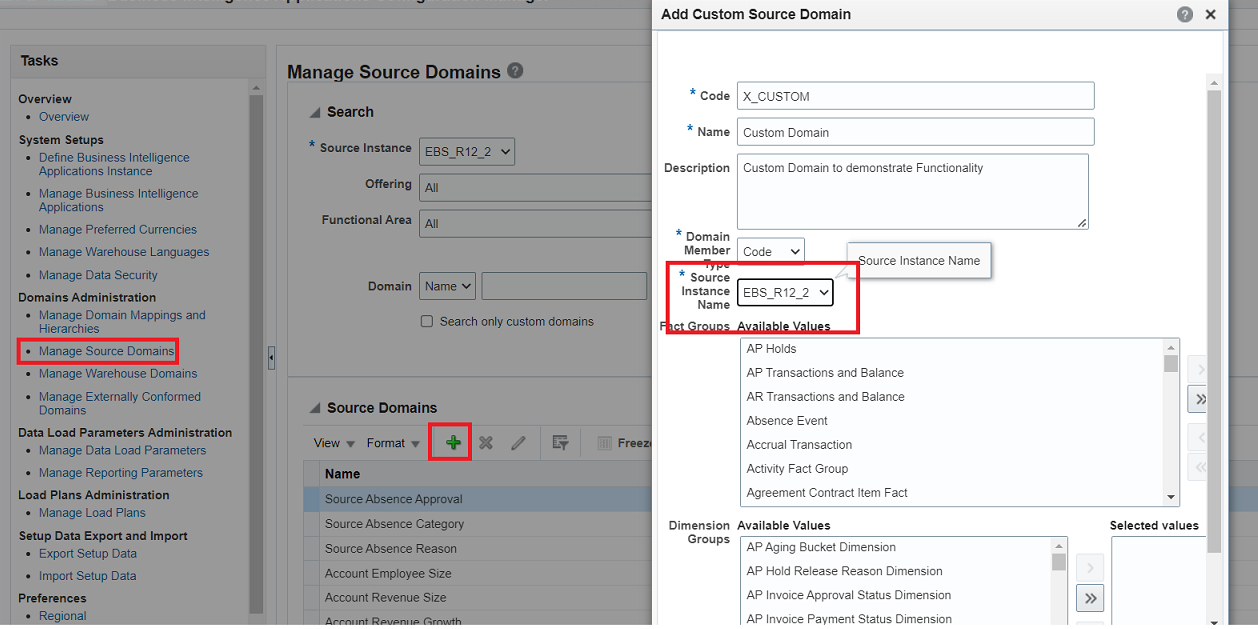

Custom Domains

Domains are similar to the list of values and lookups commonly found in transactional systems. These often have language-independent code, and one or more language-dependent values associated with them. In Oracle BI Applications, you can use domains to support multiple languages and aggregations.

For example, you might have the domain GENDER with the domain members M and F. To support multiple languages, you might have corresponding English values of Man and Woman, and French values of Homme and Femme – the appropriate value returned based on the user’s preferred language.

Domains are often used as criteria in analyses. For example, a report such as Total # of Employees by Gender issues a SQL statement similar to SELECT GENDER, COUNT(*) FROM Table GROUP BY GENDER.

Oracle BI Applications ships a number of domains out-of-the-box to support multiple languages and analyses. You might need to extend Oracle BI Applications with additional domains to support your users’ multi-language and analytical needs.

-

Source-based domains are values that are extracted directly from the source system and loaded into the data warehouse with minimal changes (other than to default for NULL values).

-

Conformed domains are meant to provide a consistent set of analyses across multiple heterogeneous source systems that might use different codes to represent the same thing – the ETL process takes the source-based code and matches or conforms it to a Oracle BI Applications-specific code.

Oracle BI Applications supports only custom source domains in the data warehouse. Mapping a new custom source domain to an existing conformed domain is not supported.

See About Multi-Language Support in Oracle Business Intelligence Applications Administrator's Guide.

Extending Oracle BI Applications with Custom Source Domains

Typically, extending Oracle BI Applications involves defining a custom domain in Configuration Manager, extending data warehouse and staging tables with the new domain column, creating custom domain SDE tasks, extending regular SDE and SIL ETL tasks, and extending the repository’s layers.

To extend Oracle BI Applications with custom source domains: