1 Introduction

This guide describes how to install Oracle Big Data SQL, how to reconfigure or extend the installation to accommodate changes in the environment, and, if necessary, how to uninstall the software.

This installation is done in phases. The first two phases are:

-

Installation on the node of the Hadoop cluster where the cluster management server is running.

-

Installation on each node of the Oracle Database system.

If you choose to enable any of the optional security features, there is an additional third phase in which you activate them.

The two systems must be networked together via Ethernet or InfiniBand. (Connectivity to Oracle SuperCluster is InfiniBand only.)

Note:

For Ethernet connections between Oracle Database and the Hadoop cluster, Oracle recommends 10 Gb/s Ethernet.

The installation process starts on the Hadoop system, where you install the software manually on one node only (the node running the cluster management software). Oracle Big Data SQL leverages the administration facilities of the cluster management software to automatically propagate the installation to all DataNodes in the cluster.

The package that you install on the Hadoop side also generates an Oracle Big Data SQL installation package for your Oracle Database system. After the Hadoop-side installation is complete, copy this package to all nodes of the Oracle Database system, unpack it, and install it using the instructions in this guide. If you have enabled Database Authentication or Hadoop Secure Impersonation, you then perform the third installation step.

1.1 Supported System Combinations

Oracle Big Data SQL supports connectivity between a number of Oracle Engineered Systems and commodity servers.

The current release supports Oracle Big Data SQL connectivity for the following Oracle Database platforms/Hadoop system combinations:

-

Oracle Database on commodity servers with Oracle Big Data Appliance.

-

Oracle Database on commodity servers with commodity Hadoop systems.

-

Oracle Exadata Database Machine with Oracle Big Data Appliance.

-

Oracle Exadata Database Machine with commodity Hadoop systems.

Oracle SPARC SuperCluster support is not available for Oracle Big Data SQL 3.2.1 at this time.

Note:

The phrase “Oracle Database on commodity systems” refers to Oracle Database hosts that are not the Oracle Exadata Database Machine. Commodity database systems may be either Oracle Linux or RHEL-based. “Commodity Hadoop systems” refers to Hortonworks HDP systems and to Cloudera CDH-based systems other than Oracle Big Data Appliance.

1.2 Oracle Big Data SQL Master Compatibility Matrix

See the Oracle Big Data SQL Master Compatibility Matrix (Doc ID 2119369.1 in My Oracle Support) for up-to-date information on Big Data SQL compatibility with the following:

-

Oracle Engineered Systems.

-

Other systems.

-

Linux OS distributions and versions.

-

Hadoop distributions.

-

Oracle Database releases, including required patches.

1.3 Prerequisites for Installation on the Hadoop Cluster

The following active services, installed packages, and available system tools are prerequisites to the Oracle Big Data SQL installation. These prerequisites apply to all DataNodes of the cluster.

The Oracle Big Data SQL installer checks all prerequisites before beginning the installation and reports any missing requirements on each node.

Platform requirements, such as supported Linux distributions and versions, supported Oracle Database releases, and required patches, are not listed here. See the Oracle Big Data SQL Master Compatibility Matrix (Doc ID 2119369.1 in My Oracle Support) for this information.

Important:

-

Oracle Big Data SQL 3.2.1.2 does not support single user mode for Cloudera clusters.

-

On CDH, if you install the Hadoop services required by Oracle Big Data SQL as packages, be sure that they are installed from within CM. Otherwise, CM will not be able to manage them. This is not an issue with parcel-based installation.

Services Running

These Apache Hadoop services must be running on the cluster.

-

HDFS

-

YARN

-

Hive

You do not need to take any extra steps to ensure that the correct HDFS and Hive client URLs are specified in the database-side installation bundle.

The Apache Hadoop services listed above may be installed as parcels or packages on Cloudera CDH and as stacks on Hortonworks HDP.

Packages

The following packages must be pre-installed on all Hadoop cluster nodes before installing Oracle Big Data SQL. These packages are already installed on versions of Oracle Big Data Appliance supported by Oracle Big Data SQL.

-

Oracle JDK version 1.7 or later is required on Oracle Big Data Appliance. Non-Oracle commodity Hadoop servers must also use the Oracle JDK.

-

dmidecode

-

net-snmp, net-snmp-utils

-

perl

PERL LibXML – 1.7.0 or higher, e.g. perl-XML-LibXML-1.70-5.el6.x86_64.rpm

perl-libwww-perl, perl-libxml-perl, perl-Time-HiRes, perl-libs, perl-XML-SAX

The yum utility is the recommended method for installing these packages:

# yum -y install dmidecode net-snmp net-snmp-utils perl perl-libs perl-Time-HiRes perl-libwww-perl perl-libxml-perl perl-XML-LibXML perl-XML-SAX
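Before running the installer, it can help to see which of the packages above are still missing on a node. The following is a minimal sketch and not part of the Oracle tooling: the function names missing_packages and rpm_installed are hypothetical, and it assumes an RPM-based system where rpm -q exits with a nonzero status for a package that is not installed.

```shell
# Hypothetical helper (not part of Oracle Big Data SQL): print every package
# name for which the given checker command fails.
missing_packages() {
  local checker="$1"
  shift
  local pkg
  for pkg in "$@"; do
    "$checker" "$pkg" >/dev/null 2>&1 || echo "$pkg"
  done
}

# Checker for RPM-based systems; rpm -q exits nonzero when a package is absent.
rpm_installed() {
  rpm -q "$1"
}

# Example call (run on each cluster node):
# missing_packages rpm_installed dmidecode net-snmp net-snmp-utils perl \
#   perl-libs perl-Time-HiRes perl-libwww-perl perl-libxml-perl \
#   perl-XML-LibXML perl-XML-SAX
```

Passing the checker as an argument keeps the loop independent of rpm, so the same function could be reused with a different package query command.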

Conditional Requirements

-

perl-Env is required for systems running Oracle Linux 7 or RHEL 7 only.

# yum -y install perl-Env

System Tools

-

curl

-

libaio

-

rpm

-

scp

-

tar

-

unzip

-

wget

-

yum

-

zip

# yum install -y libaio

Environment Settings

The following environment settings are required prior to the installation.

-

NTP enabled

-

The path to the Java binaries must exist in /usr/java/latest.

-

The path /usr/java/default must exist and must point to /usr/java/latest.

-

Check that these system settings meet the requirements indicated. All of these settings can be temporarily set using the sysctl command. To set them permanently, add or update them in /etc/sysctl.conf.

-

kernel.shmmax and kernel.shmall must each be greater than physical memory size.

-

kernel.shmmax and kernel.shmall values should fit this formula:

kernel.shmmax = kernel.shmall * PAGE_SIZE

(You can determine PAGE_SIZE with # getconf PAGE_SIZE.)

-

vm.swappiness=10

If cell startup fails with an error indicating that the SHMALL limit has been exceeded, then increase the memory allocation and restart Oracle Big Data SQL.

-

socket buffer size:

net.core.rmem_default >= 4194304

net.core.rmem_max >= 4194304

net.core.wmem_default >= 4194304

net.core.wmem_max >= 4194304

-

Proxy-Related Requirements:

-

The installation process requires Internet access in order to download some packages from Cloudera or Hortonworks sites. If a proxy is needed for this access, then either ensure that the following are set as Linux environment variables or enable the equivalent parameters in the installer configuration file (bds-config.json):

-

http_proxy and https_proxy

-

no_proxy

Set no_proxy to include the following: "localhost,127.0.0.1,<Comma-separated list of the hostnames in the cluster (in FQDN format).>".

-

On Cloudera CDH, clear any proxy settings in Cloudera Manager administration before running the installation. You can restore them after running the script that creates the database-side installation bundle (bds-database-create-bundle.sh).
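The no_proxy value described above can be assembled from a list of host names. This is only a sketch: build_no_proxy is a hypothetical helper, and the host names and proxy URL in the commented example are placeholders, not values from this guide.

```shell
# Hypothetical helper: build a no_proxy value that starts with the two
# local entries and appends each cluster host name passed as an argument.
build_no_proxy() {
  local result="localhost,127.0.0.1"
  local host
  for host in "$@"; do
    result="$result,$host"
  done
  echo "$result"
}

# Example with made-up names (use the FQDNs of your own cluster nodes):
# export no_proxy="$(build_no_proxy node1.example.com node2.example.com)"
# export http_proxy="http://proxy.example.com:80"   # assumed proxy URL
# export https_proxy="$http_proxy"
```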

See Also:

Table 2-1 describes the use of http_proxy, https_proxy, and other parameters in the installer configuration file.
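The kernel settings listed under Environment Settings can be checked with a few shell comparisons. The sketch below follows the rules exactly as stated in this section; the function names shm_settings_ok and at_least are hypothetical, and the comparisons assume values that fit in the shell's signed 64-bit integers (some kernels report a larger default kernel.shmmax, which this sketch does not handle).

```shell
# Hypothetical helper: succeed only if shmmax and shmall are each greater
# than physical memory and shmmax equals shmall * PAGE_SIZE.
# Arguments: shmmax, shmall, page size, physical memory in bytes.
shm_settings_ok() {
  local shmmax="$1"
  local shmall="$2"
  local page_size="$3"
  local mem_bytes="$4"
  [ "$shmmax" -gt "$mem_bytes" ] &&
    [ "$shmall" -gt "$mem_bytes" ] &&
    [ "$shmmax" -eq "$((shmall * page_size))" ]
}

# Hypothetical helper: succeed if a value is at least the required minimum.
at_least() {
  [ "$1" -ge "$2" ]
}

# Example calls on a cluster node (MemTotal in /proc/meminfo is in kB):
# page_size=$(getconf PAGE_SIZE)
# mem_bytes=$(( $(awk '/^MemTotal:/ {print $2}' /proc/meminfo) * 1024 ))
# shm_settings_ok "$(sysctl -n kernel.shmmax)" "$(sysctl -n kernel.shmall)" \
#   "$page_size" "$mem_bytes" || echo "review kernel.shmmax and kernel.shmall"
# for key in net.core.rmem_default net.core.rmem_max \
#            net.core.wmem_default net.core.wmem_max; do
#   at_least "$(sysctl -n "$key")" 4194304 || echo "$key is below 4194304"
# done
```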

Hardware Requirements

-

Physical memory >= 12GB

-

Number of cores >= 8

Networking

If Hadoop traffic is over VLANs, all DataNodes must be on the same VLAN.

Python 2.7 < 3.0 (for the Oracle Big Data SQL Installer)

The Oracle Big Data SQL installer requires Python (version 2.7 or greater, but less than 3.0) locally on the node where you run the installer. This should be the same node where the cluster management service (CM or Ambari) is running.

If any installation of Python in the version range described above is already present, you can use it to run the Oracle Big Data SQL installer.
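The version range can be tested in the shell before you run the installer. This is a minimal sketch; python_version_ok is a hypothetical helper, and it assumes a dotted version string such as 2.7.5.

```shell
# Hypothetical helper: succeed if a version string such as "2.7.5" is at
# least 2.7 and below 3.0.
python_version_ok() {
  local major minor
  major=${1%%.*}        # text before the first dot
  minor=${1#*.}         # text after the first dot
  minor=${minor%%.*}    # keep only the second component
  [ "$major" -eq 2 ] && [ "$minor" -ge 7 ]
}

# Example: "python --version" prints a line such as "Python 2.7.5".
# Python 2 writes that line to stderr, hence the 2>&1.
# python_version_ok "$(python --version 2>&1 | awk '{print $2}')" \
#   && echo "Python version is in range"
```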

If a version earlier than Python 2.7.0 is already installed on the cluster management server and you need to avoid overwriting this existing installation, you can add Python 2.7.x as a secondary installation.

Restriction:

On Oracle Big Data Appliance, do not overwrite or update the pre-installed Python release. This restriction may also apply to other supported Hadoop platforms. Consult the documentation for the CDH or HDP platform you are using.

On Oracle Linux 5, add Python 2.7 as a secondary installation. On Oracle Linux 6, both Python 2.6 and 2.7 are pre-installed and you should use the provided version of Python 2.7 for the installer. Check whether the default interpreter is Python 2.6 or 2.7. To run the Oracle Big Data SQL installer, you may need to invoke Python 2.7 explicitly. On Oracle Big Data Appliance, SCL is installed, so you can use it to enable version 2.7 for the shell, as in this example:

[root@myclusteradminserver:BDSjaguar] # scl enable python27 "./jaguar install bds-config.json"

Below is a procedure for adding Python 2.7.5 as a secondary installation.

Tip:

If you manually install Python, first ensure that the openssl-devel package is installed:

# yum install -y openssl-devel

If you create a secondary installation of Python, it is strongly recommended that you apply Python updates regularly to include new security fixes. On Oracle Big Data Appliance, do not update the Mammoth-installed Python unless directed to do so by Oracle.

# pyversion=2.7.5

# cd /tmp/

# mkdir py_install

# cd py_install

# wget https://www.python.org/static/files/pubkeys.txt

# gpg --import pubkeys.txt

# wget https://www.python.org/ftp/python/$pyversion/Python-$pyversion.tgz.asc

# wget https://www.python.org/ftp/python/$pyversion/Python-$pyversion.tgz

# gpg --verify Python-$pyversion.tgz.asc Python-$pyversion.tgz

# tar xfzv Python-$pyversion.tgz

# cd Python-$pyversion

# ./configure --prefix=/usr/local/python/2.7

# make

# mkdir -p /usr/local/python/2.7

# make install

# export PATH=/usr/local/python/2.7/bin:$PATH

1.4 Prerequisites for Installation on Oracle Database Nodes

Installation prerequisites vary, depending on the type of Hadoop system and Oracle Database system where Oracle Big Data SQL will be installed.

See the Oracle Big Data SQL Master Compatibility Matrix (Doc ID 2119369.1) in My Oracle Support.

Patch Level

Patches must be applied on Oracle Database 12.x and Oracle Grid Infrastructure (when used). The applicable patches depend on the database version in use. Please refer to the Oracle Support Document 2119369.1 (Oracle Big Data SQL Master Compatibility Matrix) for details.

Also check the compatibility matrix for supported Linux distributions and Oracle Database release levels.

Packages Required for Kerberos

If you are installing on a Kerberos-enabled Oracle Database system, these packages must be pre-installed:

-

krb5-workstation

-

krb5-libs

Packages for the “Oracle Tablespaces in HDFS” Feature

Oracle Big Data SQL provides a method to store Oracle Database tablespaces in the Hadoop HDFS file system. The following RPMs must be installed:

-

fuse

-

fuse-libs

# yum -y install fuse fuse-libs

Required Environment Variables

The following are always required. Be sure that these environment variables are set correctly.

-

ORACLE_SID

-

ORACLE_HOME

Note:

GI_HOME (which was required in Oracle Big Data SQL 3.1 and earlier) is no longer required.
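A quick way to confirm that the required variables are set is to loop over their names. This is a sketch only; unset_vars is a hypothetical helper and is not shipped with Oracle Big Data SQL.

```shell
# Hypothetical helper: print the name of every listed variable that is
# unset or empty in the current shell.
unset_vars() {
  local name value
  for name in "$@"; do
    eval "value=\${$name:-}"    # look up the variable by name
    [ -n "$value" ] || echo "$name"
  done
}

# Example call as the database owner:
# missing=$(unset_vars ORACLE_SID ORACLE_HOME)
# [ -z "$missing" ] || echo "Set these variables first: $missing"
```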

Required Credentials

-

Oracle Database owner credentials (The owner is usually the oracle Linux account.)

Big Data SQL 3.2.1.2 is installed as an add-on to Oracle Database. Tasks related directly to the database instance are performed through the database owner account (oracle or other).

-

Grid user credentials

In some cases where Grid infrastructure is present, it must be restarted. If the system uses Grid then you should have the Grid user credentials on hand in case a restart is required.

The Linux users grid and oracle (or other database owner) must both be in the same group (usually oinstall). Each of these users requires permission to read all files owned by the other.

All Oracle Big Data SQL files and directories are owned by the oracle:oinstall user and group.

1.5 Downloading Oracle Big Data SQL

You can download Oracle Big Data SQL from either of two locations:

-

Oracle Software Delivery Cloud (also known as “eDelivery”).

-

My Oracle Support.

Downloading from Oracle Software Delivery Cloud

-

Log in as root to the Hadoop node that hosts the cluster management server (CM or Ambari). Create a new directory or choose an existing one to be the Big Data SQL installation directory.

-

Log on to the Oracle Software Delivery Cloud.

-

Search for “Oracle Big Data SQL”.

-

Select Oracle Big Data SQL 3.2.1.2 for Linux x86-64.

The same bundle is compatible with all supported systems.

-

Read and agree to the Oracle Standard Terms and Restrictions.

-

Download the bundle.

-

On the Hadoop cluster management server (where CM or Ambari is running), create a new directory that will be your base for installing and maintaining Oracle Big Data SQL. Copy the installation bundle zip file into this directory and unzip it there.

Downloading from My Oracle Support

Note:

On My Oracle Support, the installation bundle is delivered inside of a patch zip file.

-

Log on to My Oracle Support.

-

Click on the Patches & Updates tab and then search for bug number: 29489551.

-

Download the file p29489551_3212_Linux-x86-64.zip and unzip it.

The extracted contents are:

readme.txt

BigDataSQL-3.2.1.2.zip

-

On the Hadoop cluster management server (where CM or Ambari is running), create a new directory that will be your base for installing and maintaining Oracle Big Data SQL. Copy the installation bundle zip file into this directory and unzip it there.

Contents of the Oracle Big Data SQL Installation Bundle

When you unzip the bundle, the <Big Data SQL Install Directory>/BDSjaguar directory will include the content listed in the table below, with the exception of the db-bundles and dbkeys directories. These do not appear until after you have run an installation that produces the output for these directories.

Note:

Throughout the instructions, this guide uses the placeholder <Big Data SQL Install Directory> to refer to the location where you unzip the bundle. This is the working directory from which you configure and install Oracle Big Data SQL. You should secure this directory against accidental or unauthorized modification or deletion. The primary file to protect is your installation configuration file (by default, bds-config.json). As you customize the configuration to your needs, this file becomes the record of the state of the installation. It is useful for recovery purposes and as a basis for further changes.

Table 1-1 Oracle Big Data SQL Product Extracted Bundle Inventory

| Directory or File | Description |
|---|---|
| jaguar | Hadoop cluster-side installation and configuration utility. |
| | These configuration file examples show how to apply the various parameter options. The file |
| deployment_manager/* | Python webserver for deployment utilities. |
| deployment_server/* | Deployment server Python package utility. |
| bdsrepo/* | Resources to build Cloudera parcels or Ambari stacks for the installation. |
| db-bundles/ | When you run |
| dbkeys/ | If you choose to use the Database Authentication security feature, then as part of the process, the Jaguar install operation generates a |

1.6 Upgrading From a Prior Release of Oracle Big Data SQL

On the Oracle Database side, Oracle Big Data SQL can now be installed over a previous release with no need to remove the older software. The install script automatically detects and upgrades an older version of the software.

Upgrading the Oracle Database Side of the Installation

On the database side, you need to perform the installation only once to upgrade the database side for any clusters connected to that particular database. This is because the installations on the database side are not entirely separate. They share the same set of Oracle Big Data SQL binaries. This results in a convenience for you – if you upgrade one installation on a database instance then you have effectively upgraded the database side of all installations on that database instance.

Upgrading the Hadoop Cluster Side of the Installation

If existing Oracle Big Data SQL installations on the Hadoop side are not upgraded, these installations will continue to work with the new Oracle Big Data SQL binaries on the database side, but will not have access to the new features in this release.

1.7 Important Terms and Concepts

These are special terms and concepts in the Oracle Big Data SQL installation.

Oracle Big Data SQL Installation Directory

On both the Hadoop side and database side of the installation, the directory where you unpack the installation bundle is not a temporary directory which you can delete after running the installer. These directories are staging areas for any future changes to the configuration. You should not delete them and may want to secure them against accidental deletion.

Database Authentication Keys

Database Authentication uses a key that must be identical on both sides of the installation (the Hadoop cluster and Oracle Database). The first part of the key is created on the cluster side and stored in the .reqkey file. This file is consumed only once on the database side, to connect the first Hadoop cluster to the database. Subsequent cluster installations use the configured key and the .reqkey file is no longer required. The full key (which is completed on the database side) is stored in an .ackkey file. This key is included in the part of the ZIP file created by the database-side installation and must be copied back to the Hadoop cluster by the user.

Request Key

By default, the Database Authentication feature is enabled in the configuration. (You can disable it by setting the parameter database_auth_enabled to “false” in the configuration file.) When this setting is true, the Jaguar install, reconfigure, and updatenodes operations can all generate a request key (stored in a file with the extension .reqkey). This key is part of a unique GUID-key pair used for Database Authentication. This GUID-key pair is generated during the database side of the installation. The Jaguar operation creates a request key if the command line includes the --requestdb parameter along with a single database name (or a comma-separated list of names). In this example, the install operation creates three keys, one for each of three different databases:

# ./jaguar --requestdb orcl,testdb,proddb install

The request key files are written to <Oracle Big Data SQL install directory>/BDSJaguar/dbkeys. In this example, Jaguar install would generate these request key files:

orcl.reqkey

testdb.reqkey

proddb.reqkey

Prior to the database side of the installation, you copy the request key to the database node and into the path of the database-side installer, which at runtime generates the GUID-key pair.
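The file naming in the example above (one .reqkey file per database name) can be expressed as a small shell function, which is handy for checking that every expected key file exists before you copy it to the database node. This is a sketch under that naming assumption; reqkey_files is a hypothetical helper and not a Jaguar command.

```shell
# Hypothetical helper: list the request key file names expected for a
# comma-separated --requestdb value, one name per line.
reqkey_files() {
  local db
  for db in $(echo "$1" | tr ',' ' '); do
    echo "$db.reqkey"
  done
}

# Example: report any expected key file that is not in the dbkeys directory.
# dbkeys="<Oracle Big Data SQL install directory>/BDSJaguar/dbkeys"
# for f in $(reqkey_files orcl,testdb,proddb); do
#   [ -f "$dbkeys/$f" ] || echo "missing: $f"
# done
```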

Acknowledge Key

After you copy a request key into the database-side installation directory, the database-side Oracle Big Data SQL installer generates a corresponding acknowledge key when you run it. The acknowledge key is the original request key, paired with a GUID. This key is stored in a file that is included in a ZIP archive along with other information that must be returned to the Hadoop cluster by the user.

Database Request Operation (databasereq)

The Jaguar databasereq operation is a “standalone” way to generate a request key. It lets you create one or more request keys without performing an install, reconfigure, or updatenodes operation:

# ./jaguar --requestdb <database name list> databasereq {configuration file | null}

Database Acknowledge ZIP File

If Database Authentication or Hadoop Secure Impersonation is enabled for the configuration, then the database-side installer creates a ZIP bundle of configuration information. If Database Authentication is enabled, this bundle includes the acknowledge key file. Information required for Hadoop Secure Impersonation is also included if that option was enabled. Copy this ZIP file back to /opt/oracle/DM/databases/conf on the Hadoop cluster management server for processing.

Database Acknowledge is a third phase of the installation and is performed only when any of the security features cited above are enabled.

Database Acknowledge Operation (databaseack)

If you have opted to enable either or both of the security features (Database Authentication or Hadoop Secure Impersonation), then after copying the Database Acknowledge ZIP file back to the Hadoop cluster, run the Jaguar Database Acknowledge operation.

The setup process for these features is a “round trip” that starts on the Hadoop cluster management server, where you set the security directives in the configuration file and run Jaguar, to the Oracle Database system where you run the database-side installation, and back to the Hadoop cluster management server where you return a copy of the ZIP file generated by the database-side installation. The last step is when you run databaseack, the Database Acknowledge operation described in the outline below. Database Acknowledge completes the setup of these security features.

Default Cluster

The default cluster is the first Oracle Big Data SQL connection installed on an Oracle Database. In this context, the term default cluster refers to the installation directory on the database node where the connection to the Hadoop cluster is established. It does not literally refer to the Hadoop cluster itself. Each connection between a Hadoop cluster and a database has its own installation directory on the database node.

An important aspect of the default cluster is that the setting for Hadoop Secure Impersonation in the default cluster determines that setting for all other cluster connections to a given database. If you run a Jaguar reconfigure operation some time after installation and use it to turn Hadoop Secure Impersonation in the default cluster on or off, this change is effective for all clusters associated with the database.

If you perform installations to add additional clusters, the first cluster remains the default. If the default cluster is uninstalled, then the next one (in chronological order of installation) becomes the default.

1.8 Installation Overview

The Oracle Big Data SQL software must be installed on all Hadoop cluster DataNodes and all Oracle Database compute nodes.

Important: About Service Restarts

On the Hadoop-side installation, the following restarts may occur.

-

Cloudera Configuration Manager (or Ambari) may be restarted. This in itself does not interrupt any services.

-

Hive, YARN, and any other services that have a dependency on Hive or YARN (such as Impala) are restarted.

The Hive libraries parameter is updated in order to include Oracle Big Data SQL JARs. On Cloudera installations, if the YARN Resource Manager is enabled, then it is restarted in order to set the cgroup memory limit for Oracle Big Data SQL and the other Hadoop services. On Oracle Big Data Appliance, the YARN Resource Manager is always enabled and therefore always restarted.

On the Oracle Database server(s), the installation may require a database and/or Oracle Grid infrastructure restart in environments where updates are required to Oracle Big Data SQL cell settings on the Grid nodes. See Potential Requirement to Restart Grid Infrastructure for details.

If A Previous Version of Oracle Big Data SQL is Already Installed

On commodity Hadoop systems (those other than Oracle Big Data Appliance) the installer automatically uninstalls any previous release from the Hadoop cluster.

You can install Oracle Big Data SQL on all supported Oracle Database systems without uninstalling a previous version.

Before installing this Oracle Big Data SQL release on Oracle Big Data Appliance, you must use bdacli to manually uninstall the older version if it had been enabled via bdacli or Mammoth. If you are not sure, try bdacli bds disable. If the disable command fails, then the installation was likely done with the setup-bds installer. In that case, you can install the new version of Oracle Big Data SQL without disabling the old version.

How Long Does It Take?

The table below estimates the time required for each phase of the installation. Actual times will vary.

Table 1-2 Installation Time Estimates

| Installation on the Hadoop Cluster | Installation on Oracle Database Nodes |
|---|---|
| Eight minutes to 28 minutes. The Hadoop-side installation may take eight minutes if all resources are locally available. An additional 20 minutes or more may be required if resources must be downloaded from the Internet. | The average installation time for the database side can be estimated as follows: |

The installation process on the Hadoop side includes installation on the Hadoop cluster as well as generation of the bundle for the second phase of the installation on the Oracle Database side. The database bundle includes Hadoop and Hive clients and other software. The Hadoop and Hive client software enables Oracle Database to communicate with HDFS and the Hive Metastore. The client software is specific to the version of the Hadoop distribution (that is, Cloudera or Hortonworks). As explained later in this guide, you can download these packages prior to the installation, set up a URL or repository within your network, and make that target available to the installation script. If instead you let the installer download them from the Internet, the extra time for the installation depends upon your Internet download speed.

Pre-installation Steps

-

Check to be sure that the Hadoop cluster and the Oracle Database system both meet all of the prerequisites for installation. On the database side, this includes confirming that all of the required patches are installed. Check against these sources:

-

Oracle Big Data SQL Master Compatibility Matrix (Doc ID 2119369.1 in My Oracle Support)

-

Section 2.1 in this guide, which identifies the prerequisites for installing on the Hadoop cluster. Also see Section 3.1, which describes the prerequisites for installing the Oracle Database system component of Oracle Big Data SQL.

-

Have these login credentials available:

-

root credentials for both the Hadoop cluster and all Oracle Grid nodes.

On the grid nodes you have the option of using passwordless SSH with the root user instead.

-

oracle Linux user (or other, if the database owner is not oracle)

-

The Oracle Grid user (if this is not the same as the database owner).

-

The Hadoop configuration management service (CM or Ambari) admin password.

-

On the cluster management server (where CM or Ambari is running), download the Oracle Big Data SQL installation bundle and unzip it into a permanent location of your choice. (See Downloading Oracle Big Data SQL.)

-

On the cluster management server, check whether Python (version 2.7 or later, but earlier than 3.0) is installed, as described in Prerequisites for Installation on the Hadoop Cluster.

Outline of the Installation Steps

This is an overview to familiarize you with the process. Complete installation instructions are provided in Chapters 2 and 3.

The installation always has two phases – the installation on the Hadoop cluster and the subsequent installation on the Oracle Database system. It may also include the third, “Database Acknowledge,” phase, depending on your configuration choices.

-

Start the Hadoop-Side Installation

Review the installation parameter options described in Chapter 2. The installation on the Hadoop side is where you make all of the decisions about how to configure Oracle Big Data SQL, including those that affect the Oracle Database side of the installation.

-

Edit the bds-config.json file provided with the bundle in order to configure the Jaguar installer as appropriate for your environment. You could also create your own configuration file using the same parameters.

-

Run the installer to perform the Hadoop-side installation as described in Installing or Upgrading the Hadoop Side of Oracle Big Data SQL.

If the Database Authentication feature is enabled, then Jaguar must also output a “request key” (.reqkey) file for each database that will connect to the Hadoop cluster. You generate this file by including the --requestdb parameter in the Jaguar install command (the recommended way). You can also generate the file later with other Jaguar operations that support the --requestdb parameter.

This file contains one half of a GUID-key pair that is used in Database Authentication. The steps to create and install the key are explained in more detail in the installation steps.

-

Copy the database-side installation bundle to any temporary directory on each Oracle Database compute node.

-

If a request key file was generated, copy over that file to the same directory.

-

Start the Database-Side Installation

Log on to the database server as the database owner. Unzip the bundle and execute the run file it contains. The run file does not install the software. It sets up an installation directory under $ORACLE_HOME.

-

As the database owner, perform the Oracle Database server-side installation. (See Installing or Upgrading the Oracle Database Side of Oracle Big Data SQL.)

In this phase of the installation, you copy the database-side installation bundle to a temporary location on each compute node. If a .reqkey file was generated for the database, then copy the file into the installation directory before proceeding. Then run the bds-database-install.sh installation program.

The database-side installer does the following:

-

Copies the Oracle Big Data SQL binaries to the database node.

-

Creates all database metadata and MTA extprocs (external processes) required for access to the Hadoop cluster, and configures the communication settings.

Important:

Be sure to install the bundle on each database compute node. The Hadoop-side installation automatically propagates the software to each node of the Hadoop cluster. However, the database-side installation does not work this way. You must copy the software to each database compute node and install it directly.

In Oracle Grid environments, if cell settings need to be updated, then a Grid restart may be needed. Be sure that you know the Grid password. If a Grid restart is required, then you will need the Grid credentials to complete the installation.

-

-

If Applicable, Perform the “Database Acknowledge” Step

If Database Authentication or Hadoop Secure Impersonation were enabled, the database-side installation generates a ZIP file that you must copy back to the Hadoop cluster management server. The file is generated in the installation directory under $ORACLE_HOME and has the following filename format.

<Hadoop cluster name>-<Number nodes in the cluster>-<FQDN of the cluster management server node>-<FQDN of this database node>.zip

Copy this file back to /opt/oracle/DM/databases/conf on the Hadoop cluster management server and then as root run the Database Acknowledge command from the BDSJaguar-3.2.1.2 directory:

# cd <Big Data SQL install directory>/BDSJaguar

# ./jaguar databaseack <bds-config.json>
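The ZIP filename format above has four parts joined by hyphens. The sketch below assembles the expected name so that you can locate the file and copy it back; ack_zip_name is a hypothetical helper, and the cluster name, node count, and host names in the commented example are made up.

```shell
# Hypothetical helper: assemble the expected ZIP file name from the cluster
# name, the number of nodes, the cluster management server FQDN, and the
# database node FQDN.
ack_zip_name() {
  local cluster="$1"
  local nodes="$2"
  local cm_fqdn="$3"
  local db_fqdn="$4"
  echo "${cluster}-${nodes}-${cm_fqdn}-${db_fqdn}.zip"
}

# Example with placeholder values, run on the database node:
# zip_name=$(ack_zip_name mycluster 6 cm.example.com db1.example.com)
# scp "$zip_name" root@cm.example.com:/opt/oracle/DM/databases/conf/
```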

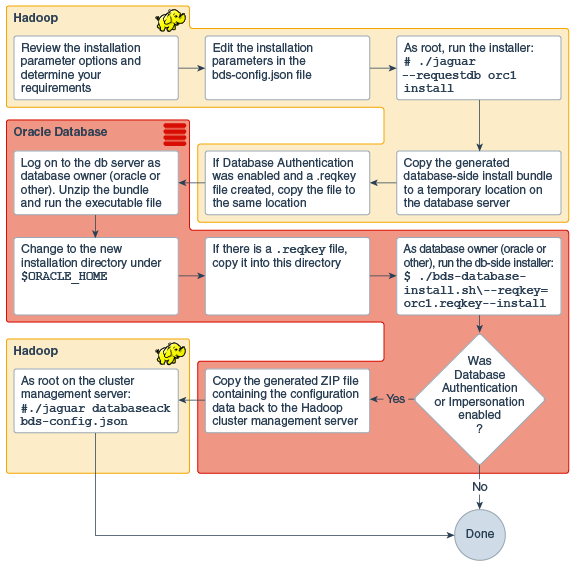

Workflow Diagrams

Complete Installation Workflow

The figure below illustrates the complete set of installation steps as described in this overview.

Note:

Before you start the steps shown in the workflow, be sure that both systems meet the installation prerequisites.

Figure 1-1 Installation Workflow

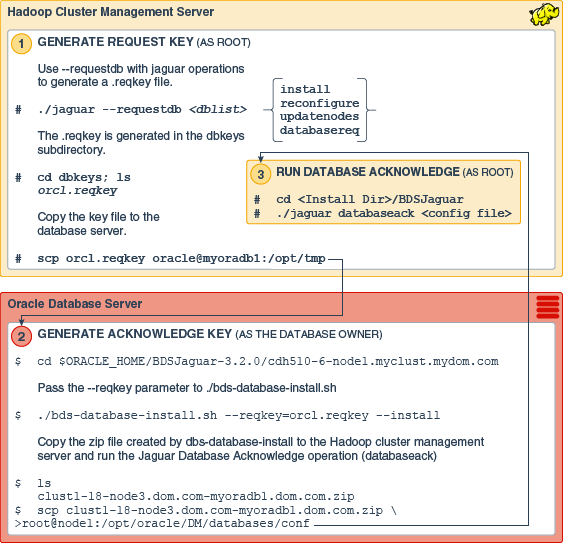

Key Generation and Installation

The figure below focuses on the three steps required to create and install the GUID-key pair used in Database Authentication. The braces around parameters of the Jaguar command indicate that one of the operations in the list is required. Each of these operations supports use of the --requestdb parameter.

Figure 1-2 Generating and Installing the GUID-Key Pair for Database Authentication

1.9 Post-Installation Checks

Validating the Installation With bdschecksw and Other Tests

-

The script bdschecksw now runs automatically as part of the installation. This script gathers and analyzes diagnostic information about the Oracle Big Data SQL installation from both the Oracle Database and the Hadoop cluster sides of the installation. You can also run this script as a troubleshooting check at any time after the installation. The script is in $ORACLE_HOME/bin on the Oracle Database server.

$ bdschecksw --help

See Running Diagnostics With bdschecksw in the Oracle Big Data SQL User’s Guide for a complete description.

-

Also see How to do a Quick Test in the user’s guide for some additional functionality tests.

Checking the Installation Log Files

You can examine these log files after the installation.

On the Hadoop cluster side:

/var/log/bigdatasql

/var/log/oracle

On the Oracle Database side:

$ORACLE_HOME/install/bds* (This is a set of files, not a directory)

$ORACLE_HOME/bigdatasql/logs

/var/log/bigdatasql

Tip:

If you make a support request, create a zip archive that includes all of these logs and include it in your email to Oracle Support.
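Collecting the logs listed above can be scripted. The sketch below filters the candidate locations down to those that exist, so that the archive command does not fail on a missing path; existing_paths and the archive name bds-logs.zip are hypothetical, and the example assumes paths without spaces.

```shell
# Hypothetical helper: print only those paths that exist, one per line.
existing_paths() {
  local path
  for path in "$@"; do
    [ -e "$path" ] && echo "$path"
  done
  return 0
}

# Example on an Oracle Database node (the glob expands to the bds* files):
# zip -r bds-logs.zip $(existing_paths "$ORACLE_HOME"/install/bds* \
#   "$ORACLE_HOME/bigdatasql/logs" /var/log/bigdatasql)
#
# Example on the Hadoop cluster management server:
# zip -r bds-logs.zip $(existing_paths /var/log/bigdatasql /var/log/oracle)
```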

Other Post-Installation Steps to Consider

-

Read about measures you can take to secure the installation. (See Securing Big Data SQL.)

-

Learn how to modify the Oracle Big Data SQL configuration when changes occur on the Hadoop cluster and in the Oracle Database installation. (See Expanding or Shrinking an Installation.)

-

If you have used Copy to Hadoop in earlier Oracle Big Data SQL releases, learn how Oracle Shell for Hadoop Loaders can simplify Copy to Hadoop tasks. (See Additional Tools Installed.)

1.10 Using the Installation Quick Reference

Once you are familiar with the functionality of the Jaguar utility on the Hadoop side and bds-database-install.sh on the Oracle Database side, you may find it useful to work from the Installation Quick Reference for subsequent installations. This reference provides an abbreviated description of the installation steps. It does not fully explain each step, so users should already have a working knowledge of the process. Links to relevant details in this and other documents are included.