Create a Multi-Tier Topology with IP Networks Using Terraform

Terraform is a third-party tool that you can use to create and manage your IaaS and PaaS resources on Oracle Cloud at Customer. This guide shows you how to use Terraform to launch and manage a multi-tier topology of Compute Classic instances attached to IP networks.

Scenario Overview

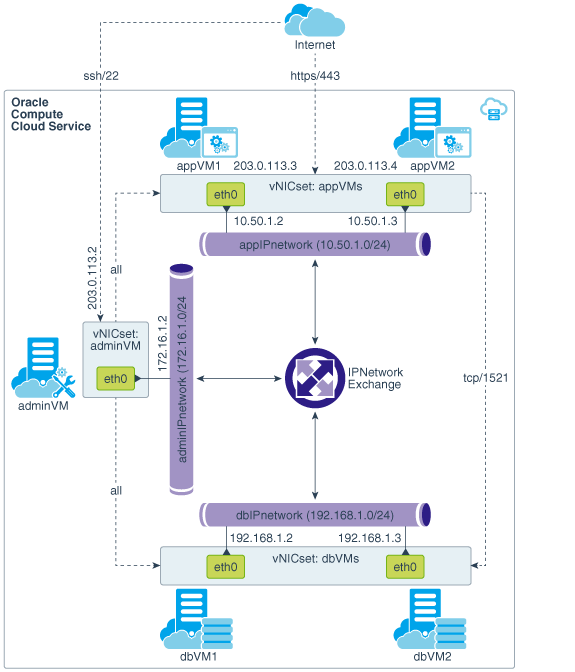

The application and the database that the application uses are hosted on instances attached to separate IP networks. Users outside Oracle Cloud have HTTPS access to the application instances. The topology also includes an admin instance that users outside the cloud can connect to using SSH. The admin instance can communicate with all the other instances in the topology.

Note:

The focus of this guide is the network configuration for instances attached to IP networks in a sample topology. The framework and the flow of the steps can be applied to other similar or more complex topologies. The steps for provisioning or configuring other resources (like storage) are not covered in this guide.

Compute Topology

The topology that you are going to build using the steps in this tutorial contains the following Compute Classic instances:

- Two instances, appVM1 and appVM2, for hosting a business application, attached to an IP network, each with a fixed public IP address.
- Two instances, dbVM1 and dbVM2, for hosting the database for the application. These instances are attached to a second IP network.
- An admin instance, adminVM, that's attached to a third IP network and has a fixed public IP address.

Note:

You won't actually install any application or database. Instead, you'll simulate listeners on the required application and database ports using the nc utility. The goal of this section is to demonstrate the steps to configure the networking that's necessary for the traffic flow requirements described next.

Traffic Flow Requirements

Only the following traffic flows must be permitted in the topology that you'll build:

- HTTPS requests from anywhere to the application instances
- SSH connections from anywhere to the admin instance
- All traffic from the admin instance to the application instances
- All traffic from the admin instance to the database instances
- TCP traffic from the two application instances to port 1521 of the database instances

Network Resources Required for this Topology

- Public IP address reservations for the application instances and for the admin instance
- Three IP networks, one each for the application instances, the database instances, and the admin instance
- An IP network exchange to connect the IP networks in the topology
- Security protocols for SSH, HTTPS, and TCP/1521 traffic
- ACLs that will contain the required security rules
- vNICsets for the application instances, database instances, and the admin instance
- Security rules to allow SSH connections to the admin instance, HTTPS traffic to the application instances, and TCP/1521 traffic to the database instances

Create the Required Resources Using Terraform

Define all the resources required for the multi-tier topology in a Terraform configuration and then apply the configuration.
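As a rough illustration of the "define, then apply" flow, the following shell sketch wraps the standard Terraform CLI commands in a helper function. It assumes that the terraform binary is installed and that the directory you pass in already contains your configuration. The function name apply_topology and the plan file name topology.tfplan are hypothetical, chosen only for this example.

```shell
#!/usr/bin/env bash
# apply_topology CONFIG_DIR
# Initializes the working directory, saves a plan, and applies that saved plan.
# The function name and the plan file name are illustrative assumptions.
apply_topology() {
  local config_dir="$1"
  (
    # Run in a subshell so the caller's working directory is unchanged.
    cd "$config_dir" || exit 1
    terraform init &&
      terraform plan -out=topology.tfplan &&
      terraform apply topology.tfplan
  )
}
```

Applying a saved plan file means that the changes Terraform makes are the ones you reviewed in the plan output. You would call the helper as apply_topology path-to-your-config-directory.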

Note:

The procedure described here shows how to define resources in a simple Terraform configuration. It does not use advanced Terraform features, such as variables and modules.

(Optional) Verify Network Access to the VMs

Verify SSH Connections from Outside the Cloud to the Admin VM

Run the following command from your local machine:

[localmachine ~]$ ssh -i path-to-privateKeyFile opc@publicIPaddressOfAdminVM

You should see the following prompt:

opc@adminvm

This confirms that SSH connections can be made from outside the cloud to the admin VM.

Verify SSH Connections from the Admin VM to the Database and Application VMs

- Copy the private SSH key file corresponding to the public key that you associated with your VMs from your local machine to the admin VM, by running the following command on your local machine:

  [localmachine ~]$ scp -i path-to-privateKeyFile path-to-privateKeyFile opc@publicIPaddressOfAdminVM:~/.ssh/privatekey

- From your local machine, connect to the admin VM using SSH:

  [localmachine ~]$ ssh -i path-to-privateKeyFile opc@publicIPaddressOfAdminVM

- From the admin VM, connect to each of the database and application VMs using SSH:

  [opc@adminvm]$ ssh -i ~/.ssh/privatekey opc@privateIPaddress

- Depending on the VM you connect to, you should see one of the following prompts after the ssh connection is established:

  - opc@appvm1
  - opc@appvm2
  - opc@dbvm1
  - opc@dbvm2
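If you prefer not to connect to each VM by hand, the check from the admin VM can be sketched as a small loop. This is only an illustration: the function name check_ssh is hypothetical, the addresses you pass are the private IP addresses of your own VMs, and the sketch assumes the key has already been copied to the admin VM. It runs the hostname command on each VM and reports whether the SSH connection worked.

```shell
#!/usr/bin/env bash
# check_ssh KEY_FILE ADDRESS...
# Tries an SSH connection to each address as the opc user and prints the
# remote host name on success. Returns non-zero if any connection fails.
# The function name is an illustrative assumption.
check_ssh() {
  local key="$1"
  shift
  local addr name failed=0
  for addr in "$@"; do
    if name="$(ssh -i "$key" -o ConnectTimeout=5 "opc@${addr}" hostname)"; then
      echo "${addr}: connected to ${name}"
    else
      echo "${addr}: SSH connection failed"
      failed=1
    fi
  done
  return "$failed"
}
```

On the admin VM, you would call it with the key path followed by the private IP addresses of the application and database VMs, for example check_ssh ~/.ssh/privatekey privateIPaddressOfAppVM1 privateIPaddressOfDBVM1.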

Verify Connectivity from Outside the Cloud to Port 443 of the Application VMs

You can use the nc utility to simulate a listener on port 443 on one of the application VMs, and then run nc from any host outside the cloud to verify connectivity to the application VM.

Note:

The verification procedure described here is specific to VMs created using the Oracle-provided images for Oracle Linux 7.2 and 6.8.

- On your local host, download the nc package from http://yum.oracle.com/repo/OracleLinux/OL6/latest/x86_64/getPackage/nc-1.84-24.el6.x86_64.rpm.

- Copy nc-1.84-24.el6.x86_64.rpm from your local host to the admin VM.

  [localmachine ~]$ scp -i path-to-privateKeyFile path_to_nc-1.84-24.el6.x86_64.rpm opc@publicIPaddressOfAdminVM:~

- From your local machine, connect to the admin VM using SSH:

  [localmachine ~]$ ssh -i path-to-privateKeyFile opc@publicIPaddressOfAdminVM

- Copy nc-1.84-24.el6.x86_64.rpm to one of the application VMs.

  [opc@adminvm]$ scp -i ~/.ssh/privatekey ~/nc-1.84-24.el6.x86_64.rpm opc@privateIPaddressOfAppVM1:~

- Connect to the application VM:

  [opc@adminvm]$ ssh -i ~/.ssh/privatekey opc@privateIPaddressOfAppVM1

- On the application VM, install nc.

  [opc@appvm1]$ sudo rpm -i nc-1.84-24.el6.x86_64.rpm

- Configure the application VM to listen on port 443. Note that this step is just for verifying connections to port 443. In real-life scenarios, this step would be done when you configure your application on the VM to listen on port 443.

  [opc@appvm1]$ sudo nc -l 443

- From any host outside the cloud, run the following nc command to test whether you can connect to port 443 of the application VM:

  [localmachine ~]$ nc -v publicIPaddressOfAppVM1 443

  The following message is displayed:

  Connection to publicIPaddressOfAppVM1 443 port [tcp/https] succeeded!

  This message confirms that the application VM accepts connection requests on port 443.

- Press Ctrl + C to exit the nc process.
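If nc is not available on the host outside the cloud, a similar reachability test can be sketched with Bash's built-in /dev/tcp redirection. This is an alternative to the nc command used in the steps above, not part of the original procedure. It assumes a Linux host with Bash and the coreutils timeout command, and the function name check_port is hypothetical. A listener must still be running on the application VM for the test to succeed.

```shell
#!/usr/bin/env bash
# check_port HOST PORT [TIMEOUT_SECONDS]
# Attempts a TCP connection using Bash's /dev/tcp redirection and reports
# whether it succeeded. Assumes bash and the coreutils timeout command.
# The function name is an illustrative assumption.
check_port() {
  local host="$1" port="$2" wait_seconds="${3:-5}"
  # In the child shell, $0 is the host and $1 is the port.
  if timeout "$wait_seconds" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null; then
    echo "open: ${host}:${port}"
  else
    echo "unreachable: ${host}:${port}"
    return 1
  fi
}
```

For the scenario in this guide, the call would be check_port publicIPaddressOfAppVM1 443. A non-zero return status makes the helper usable in scripts that should stop when the port cannot be reached.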

Verify Connectivity from the Application VMs to Port 1521 of the Database VMs

You can use the nc utility to simulate a listener on port 1521 on one of the database VMs, and then run nc from one of the application VMs to verify connectivity from the application tier to the database tier.

Note:

The verification procedure described here is specific to VMs created using the Oracle-provided images for Oracle Linux 7.2 and 6.8.

- From your local machine, connect to the admin VM using SSH:

  [localmachine ~]$ ssh -i path-to-privateKeyFile opc@publicIPaddressOfAdminVM

- Copy nc-1.84-24.el6.x86_64.rpm to one of the database VMs.

  [opc@adminvm]$ scp -i ~/.ssh/privatekey ~/nc-1.84-24.el6.x86_64.rpm opc@privateIPaddressOfDBVM1:~

- Connect to the database VM:

  [opc@adminvm]$ ssh -i ~/.ssh/privatekey opc@privateIPaddressOfDBVM1

- On the database VM, install nc.

  [opc@dbvm1]$ sudo rpm -i nc-1.84-24.el6.x86_64.rpm

- Configure the VM to listen on port 1521. Note that this step is just for verifying connections to port 1521. In real-life scenarios, this step would be done when you set up your database to listen on port 1521.

  [opc@dbvm1]$ nc -l 1521

- Leave the current terminal session open. Using a new terminal session, connect to the admin VM using SSH and, from there, connect to one of the application VMs.

  [localmachine ~]$ ssh -i path-to-privateKeyFile opc@publicIPaddressOfAdminVM

  [opc@adminvm]$ ssh -i ~/.ssh/privatekey opc@privateIPaddressOfAppVM1

- From the application VM, run the following nc command to test whether you can connect to port 1521 of the database VM:

  [opc@appvm1 ~]$ nc -v privateIPaddressOfDBVM1 1521

  The following message is displayed:

  Connection to privateIPaddressOfDBVM1 1521 port [tcp/ncube-lm] succeeded!

  This message confirms that the database VM accepts connection requests received on port 1521 from the application VMs.

- Press Ctrl + C to exit the nc process.
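The last two steps can also be combined into a single command that you run on the admin VM, which asks the application VM to probe the database port. The sketch below makes several assumptions: the nc listener is already running on the database VM, the nc 1.84 package is installed on the application VM (as in the port 443 check), and the -z (connect without sending data) and -w (timeout) options behave as in that version of nc. The function name check_db_from_app is hypothetical.

```shell
#!/usr/bin/env bash
# check_db_from_app KEY_FILE APP_VM_ADDRESS DB_VM_ADDRESS
# From the admin VM, runs nc on the application VM to probe port 1521 of
# the database VM. Assumes nc 1.84 is installed on the application VM.
# The function name is an illustrative assumption.
check_db_from_app() {
  local key="$1" app_ip="$2" db_ip="$3"
  ssh -i "$key" -o ConnectTimeout=5 "opc@${app_ip}" \
    "nc -z -v -w 5 ${db_ip} 1521"
}
```

On the admin VM, the call would look like check_db_from_app ~/.ssh/privatekey privateIPaddressOfAppVM1 privateIPaddressOfDBVM1. The return status is that of the remote nc command, or of ssh itself if the connection to the application VM fails.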