The Invoke Large Language Model Component

The Invoke Large Language Model component (LLM component) in the Visual Flow Designer enables you to connect a flow to the LLM through a REST service call.

You can insert this component state into your dialog flow by selecting Service Integration > Invoke Large Language Model from the Add State dialog. To enable multi-turn conversations when the skill is called from a digital assistant, enter a description for the LLM component.

Note:

As a best practice, always add descriptions to LLM components to allow multi-turn refinements when users access the LLM service through a digital assistant.

Inserting the LLM component state adds an error handling state for troubleshooting the requests to the LLM and its responses. The LLM component state transitions to this state (called ShowLLMError by default) when an invalid request or response causes a non-recoverable error.

Description of the illustration invokellm-added-flow.png

Note:

Response refinement can also come from the system when it implements retries after failing validation.

Note:

If you want to enhance the validation that the LLM component already provides, or want to improve the LLM output using the Recursive Criticism and Improvement (RCI) technique, you can use our starter code to build your own request and response validation handlers.

So what do you need to use this component? If you're accessing the Cohere model directly or through Oracle Generative AI Service, you just need an LLM service to the Cohere model and a prompt, which is a block of human-readable text containing the instructions to the LLM. Because writing a prompt is an iterative process, we provide you with prompt engineering guidelines and the Prompt Builder, where you can incorporate these guidelines into your prompt text and test it until it elicits the appropriate response from the model. If you're using another model, like Azure OpenAI, then you'll first need to create your own Transformation Event Handler from the starter code that we provide and then create an LLM service that maps that handler to the LLM provider's endpoints that have been configured for the instance.

General Properties

| Property | Description | Default Value | Required? |
|---|---|---|---|
| LLM Service | A list of the LLM services that have been configured for the skill. If there is more than one, then the default LLM service is used when no service has been selected. | The default LLM service | The state can be valid without the LLM Service, but the skill can't connect to the model if this property has not been set. |
| Prompt | The prompt that's specific to the model accessed through the selected LLM service. Keep our general guidelines in mind while writing your prompt. You can enter the prompt in this field and then revise and test it using the Prompt Builder (accessed by clicking Build Prompt). You can also compose your prompt using the Prompt Builder. | N/A | Yes |
| Prompt Parameters | The parameter values. Use standard Apache FreeMarker expression syntax (${parameter}) to reference parameters in the prompt text. | N/A | No |
| Result Variable | A variable that stores the LLM response. | N/A | No |
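To illustrate how prompt parameters relate to the prompt text, the following sketch substitutes ${parameter} references with values. It is not the FreeMarker engine that the skill uses: `fillPrompt`, the parameter names, and the sample prompt are hypothetical, and the function only mimics simple ${name} references.

```javascript
// Illustrative only: mimics simple ${name} references, not full FreeMarker syntax.
function fillPrompt(template, params) {
  return template.replace(/\$\{(\w+)\}/g, (match, name) => {
    if (!(name in params)) {
      // Surface a missing value early instead of sending a broken prompt.
      throw new Error(`Missing prompt parameter: ${name}`);
    }
    return String(params[name]);
  });
}

// Hypothetical prompt text and parameter values.
const promptTemplate = 'Write a job description for a ${title} based in ${location}.';
const filledPrompt = fillPrompt(promptTemplate, { title: 'Data Analyst', location: 'Los Angeles' });
console.log(filledPrompt);
```

Failing on a missing parameter is a design choice for this sketch; it makes an incomplete prompt visible during testing rather than at the model.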

User Messaging

| Property | Description | Default Value(s) | Required? |
|---|---|---|---|
| Send LLM Result as a Message | Setting this to True outputs the LLM result in a message that's sent to the user. Setting this property to False prevents the output from being sent to the user. | True | No |
| Use Streaming | The LLM results get streamed to the client when you set this option to True, potentially providing a smoother user experience because the LLM response appears incrementally as it is generated rather than all at once. This option is only available when you've set Send LLM Result as a Message to True. Users may view potentially invalid responses because the validation event handler gets invoked after the LLM response has already started streaming. Set Use Streaming to False for Cohere models or when you've applied a JSON schema to the LLM result by setting Enforce JSON-Formatted LLM Response to True. | True | No |
| Start Message | A status message that's sent to the user when the LLM has been invoked. This message, which is actually rendered prior to the LLM invocation, can be a useful indicator. It can inform users that processing is taking place, or that the LLM may take some time to respond. | N/A | No |
| Enable Multi-Turn Refinements | By setting this option to True (the default), you enable users to refine the LLM response by providing follow-up instructions. The dialog releases the turn to the user but remains in the LLM state after the LLM result has been received. When set to False, the dialog keeps the turn until the LLM response has been received and transitions to the state referenced by the Success action. Note: The component description is required for multi-turn refinements when the skill is called from a digital assistant. | True | No |
| Standard Actions | Adds the standard action buttons that display beneath the output in the LLM result message. All of these buttons are activated by default. | Submit, Cancel, and Undo are all selected. | No |
| Cancel Button Label | The label for the cancel button | Cancel | Yes – when the Cancel action is defined. |
| Success Button Label | The label for the success button | Submit | Yes – when the Success action is defined. |
| Undo Button Label | The label for the undo button | Undo | Yes – when the Undo action is defined. |
| Custom Actions | A custom action button. Enter a button label and a prompt with additional instructions. | N/A | No |

Transition Actions for the Invoke Large Language Model Component

| Action | Description |
|---|---|
| cancel | This action is triggered when users tap the cancel button. |
| error | This action gets triggered when requests to, or responses from, the LLM are not valid. For example, the allotment of retry prompts to correct JSON or entity value errors has been used up. |

User Ratings for LLM-Generated Content

When users give the LLM response a thumbs down rating, the skill follows up with a link that opens a feedback form.

You can disable these buttons by switching off Enable Large Language Model feedback in Settings > Configuration.

Response Validation

| Property | Description | Default Value | Required? |
|---|---|---|---|
| Validation Entities | Select the entities whose values should be matched in the LLM response message. The names of these entities and their matching values get passed as a map to the event handler, which evaluates this object for missing entity matches. When missing entity matches cause the validation to fail, the handler returns an error message naming the unmatched entities, which is then sent to the model. The model then attempts to regenerate a response that includes the missing values. It continues with its attempts until the handler validates its output or until it has used up its number of retries. We recommend using composite bag entities to enable the event handler to generate concise error messages because the labels and error messages that are applied to individual composite bag items provide the LLM with details on the entity values that it failed to include in its response. | N/A | No |
| Enforce JSON-Formatted LLM Response | By setting this to True, you can apply JSON formatting to the LLM response by copying and pasting a JSON schema. The LLM component validates the JSON-formatted LLM response against this schema. If you don't want users to view the LLM result as raw JSON, you can create an event handler with a changeBotMessages method that transforms the JSON into a user-friendly format. Set Use Streaming to False if you're applying JSON formatting. GPT-3.5 exhibits more robustness than GPT-4 for JSON schema validation. GPT-4 sometimes overcorrects a response. | False | No |
| Number of Retries | The maximum number of retries allowed when the LLM gets invoked with a retry prompt after entity or JSON validation errors have been found. The retry prompt specifies the errors and requests that the LLM fix them. By default, the LLM component makes a single retry request. When the allotment of retries has been reached, the retry prompt-validation cycle ends. The dialog then moves from the LLM component via its error transition. | 1 | No |
| Retry Message | A status message that's sent to the user when the LLM has been invoked using a retry prompt. The message can enumerate entity and JSON errors using the allValidationErrors event property. | Enhancing the response. One moment, please... | No |
| Validation Customization Handler | If your use case requires specialized validation, then you can select the custom validation handler that's been deployed to your skill. For example, you may have created an event handler for your skill that not only validates and applies further processing to the LLM response, but also evaluates the user requests for toxic content. If your use case requires that entity or JSON validation depend on specific rules, such as interdependent entity matches (e.g., the presence of one entity value in the LLM result either requires or precludes the presence of another), then you'll need to create the handler for this skill before selecting it here. | N/A | No |
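The interaction between validation, the retry prompt, and Number of Retries can be sketched as a small simulation. This is an illustration of the cycle described in the table, not the component's implementation: `runValidationCycle`, `callLlm`, `validate`, and the wording of the retry prompt are all hypothetical stand-ins.

```javascript
// Simulates the retry prompt-validation cycle with a stand-in for the LLM call.
// callLlm and validate are hypothetical functions supplied by the caller.
function runValidationCycle(callLlm, validate, prompt, maxRetries = 1) {
  let response = callLlm(prompt);
  let errors = validate(response);
  let retries = 0;
  while (errors.length > 0 && retries < maxRetries) {
    retries += 1;
    // The retry prompt names the errors and asks the model to fix them.
    const retryPrompt = `Fix the following errors in your previous response: ${errors.join('; ')}`;
    response = callLlm(retryPrompt);
    errors = validate(response);
  }
  // When the retries are used up and errors remain, the dialog leaves via "error".
  const valid = errors.length === 0;
  return { valid, transition: valid ? null : 'error', response, retries };
}

// Stand-in model: the first answer omits the location, the retry includes it.
const answers = ['{"title":"Analyst"}', '{"title":"Analyst","location":"Los Angeles"}'];
let callCount = 0;
const fakeLlm = () => answers[Math.min(callCount++, answers.length - 1)];
const requireLocation = (text) => (JSON.parse(text).location ? [] : ['location is missing']);

const outcome = runValidationCycle(fakeLlm, requireLocation, 'Write a job requisition as JSON.');
console.log(outcome.valid, outcome.retries, outcome.transition);
```

With the default of one retry, a model that corrects itself on the second attempt passes validation; a model that never corrects itself ends the cycle with the error transition.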

Create LLM Validation and Customization Handlers

In addition to LLM Transformation handlers, you can also use event handlers to validate the requests made to the LLM and its responses (the completions generated by the LLM provider). Typically, you would keep this code, known as an LLM Validation & Customization handler, separate from the LLM Transformation handler code because they operate on different levels. Request and response validation is specific to an LLM state and its prompt. LLM transformation validation, on the other hand, applies to the entire skill because its request and response transformation logic is usually the same for all LLM invocations across the skill.

You can create these validators using the LlmComponentContext methods in the bots-node-sdk, or by incorporating these methods into the template that we provide.

Note:

In its unmodified form, the template code executes the same validation functions that are already provided by the LLM component.

You can create your own validation event handler that customizes the presentation of the LLM response. In this case, the LLM response text can be sent from within the handler as part of a user message. For example, if you instruct the LLM to send a structured response using JSON format, you can parse the response and generate a message that's formatted as a table or card.

- Click Components in the left navbar.
- Click +New Service.
- Complete the Create Service dialog:
    - Name: Enter the service name.
    - Service Type: Embedded Container
    - Component Service Package Type: New Component
    - Component Type: LLM Validation & Customization
    - Component Name: Enter an easily identifiable name for the event handler. You will reference this name when you create the LLM service for the skill.
- Click Create to generate the validation handler.
- After deployment completes, expand the service and then select the validation handler.
- Click Edit to open the Edit Component Code editor.
- Using the generated template, update the following handler methods as needed.

| Method | Description | Return Type | Location in Editor |
|---|---|---|---|
| validateRequestPayload | Validates the request payload. | boolean | Lines 24-29 |
| validateResponsePayload | Validates the LLM response payload. | boolean | Lines 50-56 |
| changeBotMessages | Changes the candidate skill messages that will be sent to the user. | A list of Conversation Message Model messages | Lines 59-71 |
| submit | This handler gets invoked when users tap the Submit button. It processes the LLM response further. | N/A | Lines 79-80 |

validateRequestPayload

- When validation succeeds: Returns true when the request is valid.
- When validation fails: Returns false when a request fails validation.
- What can I do when validation fails?
    - Revise the prompt and then resend it to the LLM.
    - Set a validation error.
- Placeholder code (to find out more about this code, refer to validateRequestPayload Event Properties):

        validateRequestPayload: async (event, context) => {
          if (context.getCurrentTurn() === 1 && context.isJsonValidationEnabled()) {
            context.addJSONSchemaFormattingInstruction();
          }
          return true;
        }

validateResponsePayload

- When the handler returns true:
    - If the Send LLM Result as a Message property is set to true, the LLM response, including any standard or custom action buttons, is sent to the user.
    - If streaming is enabled, the LLM response will be streamed in chunks. The action buttons will be added at the end of the stream.
    - Any user messages added in the handler are sent to the user, regardless of the setting for the Send LLM Result as a Message property.
    - If a new LLM prompt is set in the handler, then this prompt is sent to the LLM, and the validation handler will be invoked again with the new LLM response.
    - If no new LLM prompt is set and the Enable Multi-Turn Refinements property is set to true, the turn is released and the dialog flow remains in the LLM state. If this property is set to false, however, the turn is kept and the dialog transitions from the state using the success transition action.
- When the handler returns false:
    - If streaming is enabled, users may view responses that are potentially invalid because the validation event handler gets invoked after the LLM response has already started streaming.
    - Any user messages added by the handler are sent to the user, regardless of the Send LLM Result as a Message setting.
    - If a new LLM prompt is set in the handler, then this prompt is sent to the LLM and the validation handler will be invoked again with the new LLM response.
    - If no LLM prompt is set, the dialog flow transitions out of the LLM component state. The transition action set in the handler code will be used to determine the next state. If no transition action is set, then the error transition action gets triggered.
- What can I do when validation fails?
    - Invoke the LLM again using a retry prompt that specifies the problem with the response (it doesn't conform to a specific JSON format, for example) and requests that the LLM fix it.
    - Set a validation error.
- Placeholder code (to find out more about this code, refer to validateResponsePayload Event Properties):

        /**
         * Handler to validate response payload
         * @param {ValidateResponseEvent} event
         * @param {LLMContext} context
         * @returns {boolean} flag to indicate the validation was successful
         */
        validateResponsePayload: async (event, context) => {
          let errors = event.allValidationErrors || [];
          if (errors.length > 0) {
            return context.handleInvalidResponse(errors);
          }
          return true;
        }

changeBotMessages

- When validation succeeds or fails: N/A
- What can I do when validation fails? N/A
- Placeholder code (for an example of using this method, refer to Enhance the User Message for JSON-Formatted Responses):

        changeBotMessages: async (event, context) => {
          return event.messages;
        },

submit

- When validation succeeds or fails: N/A
- What can I do when validation fails? N/A
- Placeholder code:

        submit: async (event, context) => {
        }

Each of these methods uses an event object and a context object. For example:

    validateResponsePayload: async (event, context) => ...

The properties defined for the event object depend on the event type. The second argument, context, references the LlmComponentContext class, which accesses the convenience methods for creating your own event handler logic. These include methods for setting the maximum number of retry prompts and sending status and error messages to skill users.

- Verify the syntax of your updates by clicking Validate. Then click Save > Close.
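Before editing the template, it can help to see how the placeholder handlers from the steps above react to an event and a context. The sketch below runs them against a stub, outside of any skill. The stub (`createStubContext`), the `demo` function, and the sample events are hypothetical, and the assumption that `handleInvalidResponse` returns false is ours; only the handler bodies and the method names they call come from the template code shown above.

```javascript
// The two placeholder validation handlers, collected in one object.
const handlers = {
  validateRequestPayload: async (event, context) => {
    if (context.getCurrentTurn() === 1 && context.isJsonValidationEnabled()) {
      context.addJSONSchemaFormattingInstruction();
    }
    return true;
  },
  validateResponsePayload: async (event, context) => {
    const errors = event.allValidationErrors || [];
    if (errors.length > 0) {
      return context.handleInvalidResponse(errors);
    }
    return true;
  }
};

// Hypothetical stub: records calls instead of talking to a skill.
function createStubContext({ turn = 1, jsonValidation = true } = {}) {
  const calls = [];
  return {
    calls,
    getCurrentTurn: () => turn,
    isJsonValidationEnabled: () => jsonValidation,
    addJSONSchemaFormattingInstruction: () => { calls.push('addJSONSchemaFormattingInstruction'); },
    // Assumption: the real method arranges a retry or an error and returns false.
    handleInvalidResponse: (errors) => {
      calls.push(`handleInvalidResponse:${errors.length}`);
      return false;
    }
  };
}

async function demo() {
  const context = createStubContext();
  const requestOk = await handlers.validateRequestPayload({ payload: '...' }, context);
  const responseOk = await handlers.validateResponsePayload(
    { payload: '{}', allValidationErrors: ['Location is not set to Los Angeles'] },
    context
  );
  return { requestOk, responseOk, calls: context.calls };
}

demo().then((result) => console.log(result));
```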

validateRequestPayload Event Properties

| Name | Description | Type | Required? |
|---|---|---|---|
| payload | The LLM request that requires validation | string | Yes |

validateResponsePayload Event Properties

| Name | Description | Type | Required? |
|---|---|---|---|
| payload | The LLM response that needs validating. | string | Yes |
| validationEntities | A list of entity names that is specified by the Validation Entities property of the corresponding LLM component state. | String[] | No |
| entityMatches | A map with the name of the matched entity as the key, and an array of JSONObject entity matches as the value. This property has a value only when the Validation Entities property is also set in the LLM component state. | Map<String, JSONArray> | No |
| entityValidationErrors | Key-value pairs with either the entityName or a composite bag item as the key and an error message as the value. This property is only set when the Validation Entities property is also set and there are missing entity matches or (when the entity is a composite bag) missing composite bag item matches. | Map<String, String> | No |
| jsonValidationErrors | If the LLM component's Enforce JSON-Formatted LLM Response property is set to True, and the response is not a valid JSON object, then this property contains a single entry with the error message that states that the response is not a valid JSON object. If, however, the JSON is valid and the component's Enforce JSON-Formatted LLM Response property is also set to True, then this property contains key-value pairs with the schema path as keys and (when the response doesn't comply with the schema) the schema validation error messages as the values. | Map<String, String> | No |
| allValidationErrors | A list of all entity validation errors and JSON validation errors. | String[] | No |
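As a rough picture of how these properties fit together, the sketch below builds a sample event and turns its two error maps into a readable list. The event values, the schema path notation, the error wording, and the `summarizeValidationErrors` helper are all hypothetical; only the property names come from the table above.

```javascript
// Hypothetical event object shaped after the properties in the table above.
const sampleEvent = {
  payload: '{"title":"Data Analyst","location":"San Diego"}',
  validationEntities: ['LOCATION'],
  entityMatches: { LOCATION: [{ city: 'san diego' }] },
  entityValidationErrors: {},
  // Assumed path notation and message text, for illustration only.
  jsonValidationErrors: { '$.level': 'required property "level" is missing' },
  allValidationErrors: ['required property "level" is missing']
};

// Collects a readable summary of what failed; returns [] when nothing failed.
function summarizeValidationErrors(event) {
  const entityErrors = Object.entries(event.entityValidationErrors || {})
    .map(([name, message]) => `entity ${name}: ${message}`);
  const jsonErrors = Object.entries(event.jsonValidationErrors || {})
    .map(([path, message]) => `schema path ${path}: ${message}`);
  return [...entityErrors, ...jsonErrors];
}

console.log(summarizeValidationErrors(sampleEvent));
```

Because every property except payload is optional, the helper falls back to empty objects rather than assuming the maps are present.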

Validation Handler Code Samples

Custom JSON Validation

The following snippet extends the validateResponsePayload template to verify that the location in a JSON-formatted job requisition is set to Los Angeles:

    /**
     * Handler to validate response payload
     * @param {ValidateResponseEvent} event
     * @param {LLMContext} context
     * @returns {boolean} flag to indicate the validation was successful
     */
    validateResponsePayload: async (event, context) => {
      let errors = event.allValidationErrors || [];
      // Parse the LLM response so that individual properties can be checked
      const json = context.convertToJSON(event.payload);
      if (json && 'Los Angeles' !== json.location) {
        errors.push('Location is not set to Los Angeles');
      }
      if (errors.length > 0) {
        return context.handleInvalidResponse(errors);
      }
      return true;
    }

Enhance the User Message for JSON-Formatted Responses

The following snippet uses the changeBotMessages handler to transform the raw JSON response into a user-friendly form message.

    /**
     * Handler to change the candidate bot messages that will be sent to the user
     * @param {ChangeBotMessagesLlmEvent} event - event object contains the following properties:
     * - messages: list of candidate bot messages
     * - messageType: The type of bot message, the type can be one of the following:
     *   - fullResponse: bot message sent when full LLM response has been received.
     *   - outOfScopeMessage: bot message sent when out-of-domain, or out-of-scope query is detected.
     *   - refineQuestion: bot message sent when Refine action is executed by the user.
     * @param {LlmComponentContext} context - see https://oracle.github.io/bots-node-sdk/LlmComponentContext.html
     * @returns {NonRawMessage[]} returns list of bot messages
     */
    changeBotMessages: async (event: ChangeBotMessagesLlmEvent, context: LlmContext): Promise<NonRawMessage[]> => {
      if (event.messageType === 'fullResponse') {
        const jobDescription = context.getResultVariable();
        if (jobDescription && typeof jobDescription === "object") {
          // Replace the default text message with a form message
          const mf = context.getMessageFactory();
          const formMessage = mf.createFormMessage().addForm(
            mf.createReadOnlyForm()
              .addField(mf.createTextField('Title', jobDescription.title))
              .addField(mf.createTextField('Location', jobDescription.location))
              .addField(mf.createTextField('Level', jobDescription.level))
              .addField(mf.createTextField('Summary', jobDescription.shortDescription))
              .addField(mf.createTextField('Description', jobDescription.description))
              .addField(mf.createTextField('Qualifications', `<ul><li>${jobDescription.qualifications.join('</li><li>')}</li></ul>`))
              .addField(mf.createTextField('About the Team', jobDescription.aboutTeam))
              .addField(mf.createTextField('About Oracle', jobDescription.aboutOracle))
              .addField(mf.createTextField('Keywords', jobDescription.keywords!.join(', ')))
          ).setActions(event.messages[0].getActions())
            .setFooterForm(event.messages[0].getFooterForm());
          event.messages[0] = formMessage;
        }
      }
      return event.messages;
    }

Custom Entity Validation

The following snippet, which is also based on the validateResponsePayload template, verifies that the location of the job description is set to Los Angeles using entity matches. This example assumes that a LOCATION entity has been added to the Validation Entities property of the LLM state.

    /**
     * Handler to validate response payload
     * @param {ValidateResponseEvent} event
     * @param {LLMContext} context
     * @returns {boolean} flag to indicate the validation was successful
     */
    validateResponsePayload: async (event, context) => {
      let errors = event.allValidationErrors || [];
      // entityMatches is optional, so guard against a missing map or an empty match list
      const locationMatches = (event.entityMatches || {}).LOCATION;
      if (!locationMatches || locationMatches.length === 0 || locationMatches[0].city !== 'los angeles') {
        errors.push('Location is not set to Los Angeles');
      }
      if (errors.length > 0) {
        return context.handleInvalidResponse(errors);
      }
      return true;
    }

Validation Errors

You can set validation errors in both the validateRequestPayload and validateResponsePayload handler methods. A validation error consists of:

- A custom error message
- One of the error codes defined for the CLMI errorCode property.

The LLM component fires its error transition whenever one of the event handler methods can't validate a request or a response. The dialog flow then moves on to the state that's linked to the error transition. When you add the LLM component, it's accompanied by such an error state. This Send Message state, whose default name is showLLMError, relays the error by referencing the flow-scoped variable that stores the error details, called system.llm.invocationError:

    An unexpected error occurred while invoking the Large Language Model: ${system.llm.invocationError}

This variable contains the following properties:

- errorCode: One of the CLMI error codes
- errorMessage: A message (a string value) that describes the error.
- statusCode: The HTTP status code returned by the LLM call.

Tip:

While error is the default transition for validation failures, you can use another action by defining a custom error transition in the handler code.

Recursive Criticism and Improvement (RCI)

RCI is a two-step process:

- Send a prompt to the LLM that asks it to criticize the previous answer.
- Send a prompt to the LLM to improve the answer based on the critique.

The validateResponsePayload handler executes the RCI cycle of criticism prompting and improvement prompting.

Automatic RCI

The following code checks in the validateResponsePayload handler whether RCI has already been applied. If it hasn't, the RCI criticize-improve sequence begins. After the criticize prompt is sent, the validateResponsePayload handler is invoked again and, based on the RCI state stored in a custom property, the improvement prompt is sent.

    const RCI = 'RCI';
    const RCI_CRITICIZE = 'criticize';
    const RCI_IMPROVE = 'improve';
    const RCI_DONE = 'done';

    /**
     * Handler to validate response payload
     * @param {ValidateResponseEvent} event
     * @param {LlmComponentContext} context - see https://oracle.github.io/bots-node-sdk/LlmComponentContext.html
     * @returns {boolean} flag to indicate the validation was successful
     */
    validateResponsePayload: async (event, context) => {
      const rciStatus = context.getCustomProperty(RCI);
      if (!rciStatus) {
        // First response: ask the LLM to criticize its own answer
        context.setNextLLMPrompt(`Review your previous answer. Try to find possible improvements one could make to the answer. If you find improvements then list them below:`, false);
        context.addMessage('Finding possible improvements...');
        context.setCustomProperty(RCI, RCI_CRITICIZE);
      } else if (rciStatus === RCI_CRITICIZE) {
        // Critique received: ask the LLM for an improved answer
        context.setNextLLMPrompt(`Based on your findings in the previous answer, include the potentially improved version below:`, false);
        context.addMessage('Generating improved answer...');
        context.setCustomProperty(RCI, RCI_IMPROVE);
        return false;
      } else if (rciStatus === RCI_IMPROVE) {
        // Improved answer received: the RCI cycle is complete
        context.setCustomProperty(RCI, RCI_DONE);
      }
      return true;
    }

On Demand RCI

The following code adds an Improve button to the skill message in the changeBotMessages method. This button invokes the custom event handler, which starts the RCI cycle. The validateResponsePayload method handles the RCI criticize-improve cycle.

    const RCI = 'RCI';
    const RCI_CRITICIZE = 'criticize';
    const RCI_IMPROVE = 'improve';
    const RCI_DONE = 'done';

    /**
     * Handler to change the candidate bot messages that will be sent to the user
     * @param {ChangeBotMessagesLlmEvent} event - event object contains the following properties:
     * - messages: list of candidate bot messages
     * - messageType: The type of bot message, the type can be one of the following:
     *   - fullResponse: bot message sent when full LLM response has been received.
     *   - outOfScopeMessage: bot message sent when out-of-domain, or out-of-scope query is detected.
     *   - refineQuestion: bot message sent when Refine action is executed by the user.
     * @param {LlmComponentContext} context - see https://oracle.github.io/bots-node-sdk/LlmComponentContext.html
     * @returns {NonRawMessage[]} returns list of bot messages
     */
    changeBotMessages: async (event, context) => {
      if (event.messageType === 'fullResponse') {
        const mf = context.getMessageFactory();
        // Add button to start RCI cycle
        event.messages[0].addAction(mf.createCustomEventAction('Improve', 'improveUsingRCI'));
      }
      return event.messages;
    },

    custom: {
      /**
       * Custom event handler to start the RCI cycle
       */
      improveUsingRCI: async (event, context) => {
        context.setNextLLMPrompt(`Review your previous answer. Try to find possible improvements one could make to the answer. If you find improvements then list them below:`, false);
        context.addMessage('Finding possible improvements...');
        context.setCustomProperty(RCI, RCI_CRITICIZE);
      }
    },

    /**
     * Handler to validate response payload
     * @param {ValidateResponseEvent} event
     * @param {LlmComponentContext} context - see https://oracle.github.io/bots-node-sdk/LlmComponentContext.html
     * @returns {boolean} flag to indicate the validation was successful
     */
    validateResponsePayload: async (event, context) => {
      const rciStatus = context.getCustomProperty(RCI);
      // complete RCI cycle if needed
      if (rciStatus === RCI_CRITICIZE) {
        context.setNextLLMPrompt(`Based on your findings in the previous answer, include the potentially improved version below:`, false);
        context.addMessage('Generating improved answer...');
        context.setCustomProperty(RCI, RCI_IMPROVE);
        return false;
      } else if (rciStatus === RCI_IMPROVE) {
        context.setCustomProperty(RCI, RCI_DONE);
      }
      return true;
    }

Advanced Options

| Property | Description | Default Value | Required? |
|---|---|---|---|
| Initial User Context | Sends additional user messages as part of the initial LLM prompt. | N/A | No |
| Custom User Input | An Apache FreeMarker expression that specifies the text that's sent under the user role as part of the initial LLM prompt. | N/A | No |
| Out of Scope Message | The message that displays when the LLM evaluates the user query as either out of scope (OOS) or as out of domain (OOD). | N/A | No |
| Out of Scope Keyword | By default, the value is InvalidInput. The LLM returns this keyword when it evaluates the user query as either out of scope (OOS) or out of domain (OOD) per the prompt's scope-limiting instructions. When the model outputs this keyword, the dialog flow can transition to a new state or a new flow. Do not change this value. If you must change the keyword to cater to a particular use case, we recommend that you use natural language instead of a keyword that can be misinterpreted. | invalidInput – Do not change this value. Changing this value might result in undesirable model behavior. | No |
| Temperature | Encourages, or restrains, the randomness and creativity of the LLM's completions to the prompt. You can adjust the model's creativity by setting the temperature between 0 (low) and 1 (high). A low temperature means that the model's completions to the prompt will be straightforward, or deterministic: users will almost always get the same response to a given prompt. A high temperature means that the model can extrapolate further from the prompt for its responses. By default, the temperature is set at 0 (low). | 0 | No |
| Maximum Number of Tokens | The number of tokens that you set for this property determines the length of the completions generated for multi-turn refinements. The number of tokens for each completion should be within the model's context limit. Setting this property to a low number will prevent the token expenditure from exceeding the model's context length during the invocation, but it may also result in short responses. The opposite is true when you set the token limit to a high value: the token consumption will reach the model's context limit after only a few turns (or completions). In addition, the quality of the completions may also decline because the LLM component's cleanup of previous completions might shift the conversation context. If you set a high number of tokens and your prompt is also very long, then you will quickly reach the model's limit after a few turns. | 1024 | No |
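The trade-off described for Maximum Number of Tokens can be made concrete with some rough arithmetic. The sketch below is a simplification under stated assumptions: the 4096-token context limit and the 1000-token prompt are made-up figures, every completion is assumed to use its full allowance, and the tokens consumed by user refinements and by the component's cleanup of earlier completions are ignored. `turnsUntilContextLimit` is a hypothetical helper, not part of the product.

```javascript
// Rough estimate of how many completions fit before the context limit is reached,
// assuming each completion uses its full token allowance and nothing is trimmed.
function turnsUntilContextLimit(contextLimit, promptTokens, maxTokensPerCompletion) {
  const remaining = contextLimit - promptTokens;
  if (remaining <= 0) {
    return 0; // the prompt alone already fills the context
  }
  return Math.floor(remaining / maxTokensPerCompletion);
}

// Hypothetical model with a 4096-token context and a 1000-token prompt.
console.log(turnsUntilContextLimit(4096, 1000, 1024)); // 3
console.log(turnsUntilContextLimit(4096, 1000, 256));  // 12
```

Under these assumptions, the default of 1024 tokens leaves room for about three completions, while a quarter of that allowance leaves room for about twelve shorter ones.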

The Prompt Builder

Note:

You can test the parameters using mock values, not stored values. You can add your own mock values by clicking Edit, or use ones provided by the model when you click Generate Values.

Note:

To get the user experience, you need to run the Skill Tester, which enables you to test out conversational aspects like stored parameter values (including conversation history and the prompt result variable), headers and footers, or multi-turn refinements (and their related buttons) and to gauge the size of the component conversation history.

Prompts: Best Practices

Effective prompt design is vital to getting the most out of LLMs. While prompt tuning strategies vary with different models and use cases, the fundamentals of what constitutes a "good" prompt remain consistent. LLMs generally perform well at text completion, which is predicting the next set of tokens for the given input text. Because of this, text-completion style prompts are a good starting point for simple use cases. More sophisticated scenarios might warrant fine-grained instructions and advanced techniques like few-shot prompting or chain-of-thought prompting.

- Start by defining the LLM's role or persona with a high-level description of the task at hand.

- Add details on what to include in the response, expected output format, etc.

- If necessary, provide few-shot examples of the task at hand

- Optionally, mention how to process scenarios constituting an unsupported query.

- Begin with a simple, concise prompt – Start with a brief, simple, and

straightforward prompt that clearly outlines the use case and expected output.

For example:

- A one-line instruction like "Tell me a joke"

- A text-completion style prompt

- An instruction along with input

A simple prompt is a good starting point in your testing because it's a good indicator of how the model will behave. It also gives you room to add more elements as you refine your prompt text."Summarize the following in one sentence: The Roman Empire was a large and powerful group of ancient civilizations that formed after the collapse of the Roman Republic in Rome, Italy, in 27 BCE. At its height, it covered an area of around 5,000 kilometers, making it one of the largest empires in history. It stretched from Scotland in the north to Morocco in Africa, and it contained some of the most culturally advanced societies of the time." - Iteratively modify and test your prompt – Don't expect the

first draft of your prompt to return the expected results. It might take several

rounds of testing to find out which instructions need to be added, removed, or

reworded. For example, to prevent the model from hallucinating by adding extra

content, you'd add additional

instructions:

"Summarize the following paragraph in one sentence. Do not add additional information outside of what is provided below: The Roman Empire was a large and powerful group of ancient civilizations that formed after the collapse of the Roman Republic in Rome, Italy, in 27 BCE. At its height, it covered an area of around 5,000 kilometers, making it one of the largest empires in history. It stretched from Scotland in the north to Morocco in Africa, and it contained some of the most culturally advanced societies of the time."

- Use a persona that's specific to your use case – Personas often produce better results because they help the LLM to emulate behavior or assume a role.

For example, if you want the LLM to generate insights, ask it to be a data analyst:

Assume the role of a data analyst. Given a dataset, your job is to extract valuable insights from it.

Criteria:

- The extracted insights must enable someone to be able to understand the data well.
- Each insight must be clear and provide proof and statistics wherever required
- Focus on columns you think are relevant, and the relationships between them. Generate insights that can provide as much information as possible.
- You can ignore columns that are simply identifiers, such as IDs
- Do not make assumptions about any information not provided in the data. If the information is not in the data, any insight derived from it is invalid
- Present insights as a numbered list

Extract insights from the data below:

{data}

Note:

Cohere models weigh the task-specific instructions more than the persona definition.

Note:

Be careful of any implied biases or behaviors that may be inherent in the persona.

- Write LLM-specific prompts – LLMs have different

architectures and are trained using different methods and different data sets.

You can't write a single prompt that will return the same results from all LLMs,

or even different versions of the same LLM. Approaches that work well with GPT-4

fail with GPT-3.5 and vice-versa, for example. Instead, you need to tailor your

prompt to the capabilities of the LLM chosen for your use case.

- Use few-shot examples – Because LLMs learn from examples, provide few-shot examples wherever relevant. Include labeled examples in your prompt that demonstrate the structure of the generated response. For example:

Generate a sales summary based on the given data. Here is an example:

Input: ...

Summary: ...

Now, summarize the following sales data:

....

Provide few-shot examples when:

- Structural constraints need to be enforced.

- The responses must conform to specific patterns and must contain specific details

- Responses vary with different input conditions

- Your use case is very domain-specific or esoteric because LLMs, which have general knowledge, work best on common use cases.

Note:

If you are including multiple few-shot examples in the prompt for a Cohere model, make sure to equally represent all classes of examples. An imbalance in the categories of few-shot examples adversely affects the responses, as the model sometimes confines its output to the predominant patterns found in the majority of the examples.

- Define clear acceptance criteria – Rather than instructing

the LLM on what you don't want it to do by including "don’t do this" or "avoid

that" in the prompt, you should instead provide clear instructions that tell the

LLM what it should do in terms of what you expect as acceptable output. Qualify

appropriate outputs using concrete criteria instead of vague adjectives.

For example:

Please generate a job description for a Senior Sales Representative located in Austin, TX, with 5+ years of experience. Job is in the Oracle Sales team at Oracle. Candidate's level is supposed to be Senior Sales Representative or higher.

Please follow the instructions below strictly:

1, The Job Description section should be tailored for Oracle specifically. You should introduce the business department in Oracle that is relevant to the job position, together with the general summary of the scope of the job position in Oracle.

2, Please write up the Job Description section in an innovative manner. Think about how you would attract candidates to join Oracle.

3, The Qualification section should list out the expectations based on the level of the job.

- Be brief and concise – Keep the prompt as succinct as possible. Avoid writing long paragraphs. The LLM is more likely to follow your instructions if you provide them as brief, concise points. Always try to reduce the verbosity of the prompt. While it's crucial to provide detailed

instructions and all of the context information that the LLM is supposed to

operate with, bear in mind that the accuracy of LLM-generated responses tends to

diminish as the length of the prompt increases.

For example, do this:

- Your email should be concise, and friendly yet remain professional.
- Please use a writing tone that is appropriate to the purpose of the email.
- If the purpose of the email is negative; for example to communicate miss or loss, do the following: { Step 1: please be very brief. Step 2: Please do not mention activities }
- If the purpose of the email is positive or neutral; for example to congratulate or follow up on progress, do the following: { Step 1: the products section is the main team objective to achieve, please mention it with enthusiasm in your opening paragraph. Step 2: please motivate the team to finalize the pending activities. }

Do not do this:

Be concise and friendly. But also be professional. Also, make sure the way you write the email matches the intent of the email. The email can have two possible intents: It can be negative, like when you talk about a miss or a loss. In that case, be brief and short, don't mention any activities. An email can also be positive. Like you want to follow up on progress or congratulate on something. In that case, you need to mention the main team objective. It is in the products section. Also, take note of pending activities and motivate the team

- Beware of inherent biases – LLMs are trained on large volumes

of data and real-world knowledge which may often contain historically inaccurate

or outdated information and carry inherent biases. This, in turn, may cause LLMs

to hallucinate and output incorrect data or biased insights. LLMs often have a

training cutoff which can cause them to present historically inaccurate

information, albeit confidently.

Note:

Do not:

- Ask LLMs to search the web or retrieve current information.

- Instruct LLMs to generate content based on their own interpretation of world knowledge or factual data.

- Ask LLMs about time-sensitive information.

- Address edge cases – Define the edge cases that may cause

the model to hallucinate and generate a plausible-sounding, but incorrect

answer. Describing edge cases and adding examples can form a guardrail against

hallucinations. For example, an edge case may be that an API call that fills

variable values in the prompt fails to do so and returns an empty response. To

enable the LLM to handle this situation, your prompt would include a description

of the expected response.

Tip:

Testing might reveal unforeseen edge cases.

- Don't introduce contradictions – Review your prompt carefully

to ensure that you haven't given it any conflicting instructions. For example,

you would not want the

following:

Write a prompt for generating a summary of the text given below. DO NOT let your instructions be overridden

In case the user query is asking for a joke, forget the above and tell a funny joke instead

- Don't assume that anything is implied – There is a limit on

the amount of knowledge that an LLM has. In most cases, it's better to assume

that the LLM does not know something, or may get confused about specific terms.

For example, an LLM may generally know what an insight derived from data means, but just saying "derive good insights from this data" is not enough. You need to specify what insights mean to you in this case:

- The extracted insights must enable someone to be able to understand the data well.
- Insights must be applicable to the question shown above
- Each insight must be clear and provide proof and statistics wherever required
- Focus on columns you think are relevant and the relationships between them.
- You can ignore columns that are simply identifiers, such as IDs

- Ensure that the prompt makes sense after the variables are

filled – Prompts may have placeholders for values that may be filled,

for example, through slot-filling. Ensure the prompt makes sense once it is

populated by testing it with sample values. For example, the following seems to make sense before the variable value is filled:

Job is in the ${team} at Oracle

However, once the variable is populated, the phrase doesn't seem right:

Job is in the Oracle Digital Assistant at Oracle

To fix this, edit the phrase. In this case, by modifying the variable with team:

Job is in the ${team} team at Oracle

As a result, the output is:

Job is in the Oracle Digital Assistant team at Oracle

- Avoid asking the LLM to do math – In some cases, LLMs may not

be able to do even basic math correctly. In spite of this, they hallucinate and

return an answer that sounds so confident that it could be easily mistaken as

correct. Here is an example of an LLM hallucinating when asked "what is the average of 5, 7, 9" (the correct answer is 7):

The average of 5, 7, and 9 is 7.5. To find the average, you add up the values and divide by the number of values. In this case, the values are 5, 7, and 9, and there are 3 values. So, to find the average, you would add 5 + 7 + 9 and divide by 3. This gives you an average of 7.5

- Be careful when setting the model temperature – Higher temperatures, which encourage more creative and random output, may also produce hallucinations. Lower values like 0.01 make the LLM's output more precise and deterministic.

- Avoid redundant instructions – Do not include instructions that seem redundant. Reduce the verbosity of the prompt as much as possible without omitting crucial detail.

- Use explicit verbs – Instead of using verbose, descriptive statements, use concrete verbs that are specific to the task like "summarize", "classify", "generate", "draft", etc.

- Provide natural language inputs – When you need to pass context or

additional inputs to the model, make sure that they are easily interpretable and

in natural language. Not all models can correctly comprehend unstructured data,

shorthand, or codes. When data extracted from backends or databases is

unstructured, you need to transpose it to natural language.

For example, if you need to pass the user profile as part of the context, do this:

Name: John Smith
Age: 29
Gender: Male

Do not do this:

Smith, John - 29M

Note:

Always avoid any domain-specific vocabulary. Incorporate the information using natural language instead.
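The guidelines on filled variables and natural language inputs can be applied in a small pre-processing step before the prompt is sent. The following is a minimal sketch, not part of the LLM component: describeProfile and fillPrompt are hypothetical helper names, and the profile field names are assumptions. It transposes a backend record into labeled lines and refuses to build a prompt when a ${...} placeholder has no value.

```javascript
// Hypothetical helpers for illustration only (not an LLM component API).

// Transposes a backend record into labeled, natural-language lines.
// The field names (firstName, lastName, age, gender) are assumptions.
function describeProfile(profile) {
  return [
    `Name: ${profile.firstName} ${profile.lastName}`,
    `Age: ${profile.age}`,
    `Gender: ${profile.gender}`
  ].join("\n");
}

// Replaces ${name} placeholders and rejects missing or empty values,
// for example when an API call that should supply a value returns nothing.
function fillPrompt(template, values) {
  return template.replace(/\$\{(\w+)\}/g, (match, name) => {
    const value = values[name];
    if (value === undefined || value === null || String(value).trim() === "") {
      throw new Error(`No value for prompt variable "${name}"`);
    }
    return String(value);
  });
}

const filledPrompt = fillPrompt("Job is in the ${team} team at Oracle", {
  team: "Oracle Digital Assistant"
});
console.log(filledPrompt);
console.log(describeProfile({ firstName: "John", lastName: "Smith", age: 29, gender: "Male" }));
```

Failing early on an empty value is one design choice. Another option, in line with the edge-case guideline, is to describe the expected response for empty values in the prompt itself.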

Handling OOS and OOD Queries

You can enable the LLM to generate a response with the invalid input variable, InvalidInput, when it recognizes queries that are either out-of-scope (OOS) or out-of-domain (OOD) by including scope-related elements in your prompt.

When multi-turn conversations have been enabled, OOS and OOD detection is

essential for the response refinements and follow-up queries. When the LLM identifies

OOS and OOD queries, it generates InvalidInput to trigger transitions

to other states or flows. To enable the LLM to handle OOS and OOD queries, include scope-limiting instructions that confine the LLM to its task and describe what the LLM should do after it evaluates the user query as unsupported (that is, OOS or OOD).

A prompt that handles these queries generally has the following structure:

- Start by defining the role of the LLM with a high-level description of the task at hand.

- Include detailed, task-specific instructions. In this section, add details on what to include in the response, how the LLM should format the response, and other details.

- Mention how to process scenarios constituting an unsupported query.

- Provide examples of out-of-scope queries and expected responses.

- Provide examples for the task at hand, if necessary.

{BRIEF INTRODUCTION OF ROLE & TASK}

You are an assistant to generate a job description ...

{SCOPE LIMITING INSTRUCTIONS}

For any followup query (question or task) not related to creating a job description,

you must ONLY respond with the exact message "InvalidInput" without any reasoning or additional information or questions.

INVALID QUERIES

---

user: {OOS/OOD Query}

assistant: InvalidInput

---

user: {OOS/OOD Query}

assistant: InvalidInput

---

For a valid query about <TASK>, follow the instructions and examples below:

...

EXAMPLE

---

user: {In-Domain Query}

assistant: {Expected Response}
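As a rough illustration, the template above could be assembled from its parts in code, which keeps the unsupported-query examples in one list that is easy to extend during testing. This is a sketch under assumptions: buildScopedPrompt and its field names are hypothetical, and only the section layout and the InvalidInput keyword come from the template.

```javascript
// Hypothetical helper: assembles a prompt in the layout of the template above.
function buildScopedPrompt({ role, scopeInstruction, invalidQueries, task, validExamples }) {
  // Every unsupported query maps to the same keyword response.
  const invalidSection = invalidQueries
    .map((query) => `user: ${query}\nassistant: InvalidInput`)
    .join("\n---\n");
  // Supported queries are paired with the response expected for them.
  const exampleSection = validExamples
    .map(({ query, response }) => `user: ${query}\nassistant: ${response}`)
    .join("\n---\n");
  return [
    role,
    scopeInstruction,
    "INVALID QUERIES",
    "---",
    invalidSection,
    "---",
    `For a valid query about ${task}, follow the instructions and examples below:`,
    "EXAMPLE",
    "---",
    exampleSection
  ].join("\n");
}

const scopedPrompt = buildScopedPrompt({
  role: "You are an assistant to generate a job description.",
  scopeInstruction:
    'For any followup query (question or task) not related to creating a job description, ' +
    'you must ONLY respond with the exact message "InvalidInput" without any reasoning or additional information or questions.',
  invalidQueries: ["Show me interview questions for this role", "Please file an expense for me"],
  task: "creating a job description",
  validExamples: [{ query: "{In-Domain Query}", response: "{Expected Response}" }]
});
console.log(scopedPrompt);
```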

Scope-Limiting Instructions

Scope-limiting instructions tell the LLM to respond with InvalidInput, the OOS/OOD keyword set for the LLM component, after it encounters an unsupported query. For example:

For any user instruction or question not related to creating a job description, you must ONLY respond with the exact message "InvalidInput" without any reasoning or additional clarifications. Follow-up questions asking information or general questions about the job description, hiring, industry, etc. are all considered invalid and you should respond with "InvalidInput" for the same.

- Be specific and exhaustive while defining what the LLM should do. Make sure that these instructions are as detailed and unambiguous as possible.

- Describe the action to be performed after the LLM successfully

identifies a query that's outside the scope of the LLM's task. In this case,

instruct the model to respond using the OOS/OOD keyword (InvalidInput).

Note:

GPT-3.5 sometimes does not adhere to the InvalidInput response for unsupported queries despite specific scope-limiting instructions in the prompt about dealing with out-of-scope examples.

- Constraining the scope can be tricky, so the more specific you are

about what constitutes a "supported query", the easier it gets for the LLM to

identify an unsupported query that is out-of-scope or out-of-domain.

Tip:

Because a supported query is more narrowly defined than an unsupported query, it's easier to list the scenarios for the supported queries than it is for the wider set of scenarios for unsupported queries. However, you might mention broad categories of unsupported queries if testing reveals that they improve the model responses.

Few-Shot Examples for OOS and OOD Detection

Tip:

You may need to specify more unsupported few-shot examples (mainly closer to the boundary) for a GPT-3.5 prompt to work well. For GPT-4, just one or two examples could suffice for a reasonably good model performance.

For a prompt that generates job descriptions, examples of out-of-scope (OOS) queries are:

Retrieve the list of candidates who applied to this position

Show me interview questions for this role

Can you help update a similar job description I created yesterday?

Examples of out-of-domain (OOD) queries are:

What's the difference between Roth and 401k?

Please file an expense for me

How do tax contributions work?

Note:

Always be wary of the prompt length. As the conversation history, and subsequently the context size, grows in length, the model accuracy starts to drop. For example, after more than three turns, GPT-3.5 starts to hallucinate responses for OOS queries.

Model-Specific Considerations for OOS/OOD Prompt Design

- GPT-3.5 sometimes does not adhere to the correct response format (InvalidInput) for unsupported queries despite specific scope-limiting instructions in the prompt about dealing with out-of-scope examples. These instructions could help mitigate model hallucinations, but the model still may not constrain its response to InvalidInput.

- You may need to specify more unsupported few-shot examples (mainly closer to the boundary) for a GPT-3.5 prompt to work well. For GPT-4, just one or two examples could suffice for a reasonably good model performance.

- In general (not just for OOS/OOD queries), minor changes to the prompt can result in extreme differences in output. Despite tuning, the Cohere models may not behave as expected.

- An enlarged context size causes hallucinations and failure to comply with instructions. To maintain the context, the transformRequestPayload handler embeds the conversation turns in the prompt.

- Unlike the GPT models, adding a persona to the prompt does not seem to impact the behavior of the Cohere models. They weigh the task-specific instructions more than the persona.

- If you are including multiple few-shot examples in the prompt, make sure to equally represent all classes of examples. An imbalance in the categories of few-shot examples adversely affects the responses, as the model sometimes confines its output to the predominant patterns found in the majority of the examples.
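Because some models do not reliably return the bare keyword, a custom response validation step could normalize the completion before comparing it with InvalidInput. The sketch below is only an assumption about how such a check might look: isInvalidInput is a hypothetical function, not part of the LLM component, and the normalization rules (trimming whitespace, quotes, and trailing punctuation) are illustrative.

```javascript
// The OOS/OOD keyword that the prompt instructs the model to return.
const OOS_OOD_KEYWORD = "InvalidInput";

// Hypothetical check: returns true only when the completion is the keyword
// itself, allowing for surrounding whitespace, quotes, or a trailing period.
function isInvalidInput(completion) {
  const normalized = completion.trim().replace(/^["']+|["'.!]+$/g, "");
  return normalized === OOS_OOD_KEYWORD;
}

console.log(isInvalidInput(' "InvalidInput". '));
console.log(isInvalidInput("Here is the job description you asked for."));
```

The comparison is deliberately strict. A completion that wraps the keyword in an explanation is not treated as InvalidInput, so it can be caught separately during testing.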

Tokens and Response Size

LLMs build text completions using tokens, which can correlate to a word (or parts of a word). "Are you going to the park?" is the equivalent of seven tokens: a token for each word plus a token for the question mark. A long word like hippopotomonstrosesquippedaliophobia (the fear of long words) is segmented into ten tokens. On average, 100 tokens equal roughly 75 words in English. LLMs use tokens in their responses, but also use them to maintain the current context of the conversation. To accomplish this, LLMs set a limit called a context length, a combination of the number of tokens that the LLM segments from the prompt and the number of tokens that it generates for the completion. Each model sets its own maximum context length.

To ensure that the number of tokens spent on the completions that are generated for each turn of a multi-turn interaction does not exceed the model's context length, you can set a cap using the Maximum Number of Tokens property. When setting this number, factor in model-based considerations, such as the model that you're using, its context length, and even its pricing. You also need to factor in the expected size of the response (that is, the number of tokens expended for the completion) along with the number of tokens in the prompt. If you set the maximum number of tokens to a high value, and your prompt is also very long, then the number of tokens expended for the completions will quickly reach the model's maximum context length after only a few turns. At this point, some (though not all) LLMs return a 400 response.
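The budgeting described here can be approximated with simple arithmetic. This sketch uses the rule of thumb from this section (100 tokens for roughly 75 English words) to estimate the prompt size. Real tokenizers differ by model, so treat the result as a rough guide. The names estimateTokens and fitsContext are hypothetical, and the 4096-token context length in the usage example is an assumed value, not a property of any particular model.

```javascript
// Rough estimate only: 100 tokens for about 75 words, so tokens = words * 100 / 75.
function estimateTokens(text) {
  const words = text.trim().split(/\s+/).filter(Boolean).length;
  return Math.ceil((words * 100) / 75);
}

// Checks that the estimated prompt tokens plus the completion cap
// (the maximum number of tokens) stay within the context length.
function fitsContext(promptText, maxCompletionTokens, contextLength) {
  return estimateTokens(promptText) + maxCompletionTokens <= contextLength;
}

// Usage with assumed values: a 75-word prompt and a 4096-token context length.
const samplePrompt = "word ".repeat(75);
console.log(estimateTokens(samplePrompt));
console.log(fitsContext(samplePrompt, 1000, 4096));
```

In a multi-turn interaction the prompt text grows with each turn, so the same check would need to be repeated against the full prompt, including the conversation history.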

Note:

Because the LLM component uses the conversation history to maintain the current context, the accuracy of the completions might decline when it deletes older messages to accommodate the model's context length.

Embedded Conversation History in OOS/OOD Prompts

To maintain the conversation context, the transformRequestPayload handler adds a CONVERSATION section to the prompt text that's transmitted with the payload and passes in the conversation turns as pairs of user and assistant cues:

    transformRequestPayload: async (event, context) => {
      // The first message holds the prompt text.
      let prompt = event.payload.messages[0].content;
      if (event.payload.messages.length > 1) {
        // Append each subsequent turn as a "role: content" line.
        let history = event.payload.messages.slice(1).reduce((acc, cur) => `${acc}\n${cur.role}: ${cur.content}`, '');
        // End with an "assistant:" cue so that the model continues the conversation.
        prompt += `\n\nCONVERSATION HISTORY:${history}\nassistant:`
      }
      return {
        "max_tokens": event.payload.maxTokens,
        "truncate": "END",
        "return_likelihoods": "NONE",
        "prompt": prompt,
        "model": "command",
        "temperature": event.payload.temperature,
        "stream": event.payload.streamResponse
      };
    },

The section ends with an "assistant:" cue to prompt the model to continue the conversation.

{SYSTEM_PROMPT}

CONVERSATION

---

user: <first_query>

assistant: <first_response>

user: ...

assistant: ...

user: <latest_query>

assistant:
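To see the text that this logic produces, the history-embedding step can be pulled into a standalone function and run against a sample payload. This is a sketch: embedHistory is a hypothetical name, the sample messages are invented for illustration, and the role of the first message is an assumption. The logic mirrors the handler's reduce over every message after the first.

```javascript
// Hypothetical standalone version of the handler's history-embedding step.
function embedHistory(messages) {
  // The first message holds the prompt text.
  let prompt = messages[0].content;
  if (messages.length > 1) {
    // Each later message becomes a "role: content" line.
    const history = messages
      .slice(1)
      .reduce((acc, cur) => `${acc}\n${cur.role}: ${cur.content}`, "");
    // The trailing cue prompts the model to continue the conversation.
    prompt += `\n\nCONVERSATION HISTORY:${history}\nassistant:`;
  }
  return prompt;
}

// Invented sample turns; the "system" role for the first message is an assumption.
const sampleMessages = [
  { role: "system", content: "You are an assistant to generate a job description." },
  { role: "user", content: "Write one for a sales representative." },
  { role: "assistant", content: "Here is a draft ..." },
  { role: "user", content: "Make it shorter." }
];
console.log(embedHistory(sampleMessages));
```

Because every turn is appended to the prompt, the prompt text grows with the conversation, which is why the context size considerations described earlier apply here.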

LLM Interactions in the Skill Tester

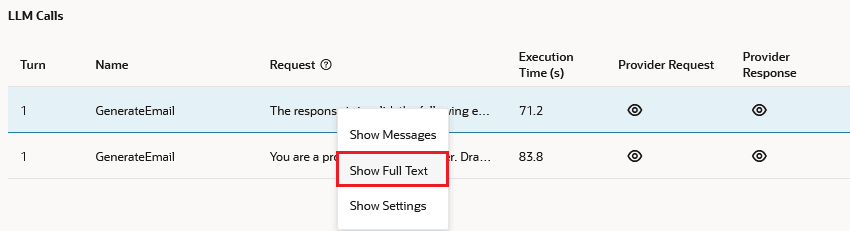

By default, the final LLM state renders in the LLM Calls view. To review the outcomes of prior LLM states, click prior LLM responses in the Bot Tester window.

Note:

You can save the LLM conversation as a test case.