8 Train Your Model for Natural Language Understanding

Here are some best practices for training your digital assistant for natural language understanding.

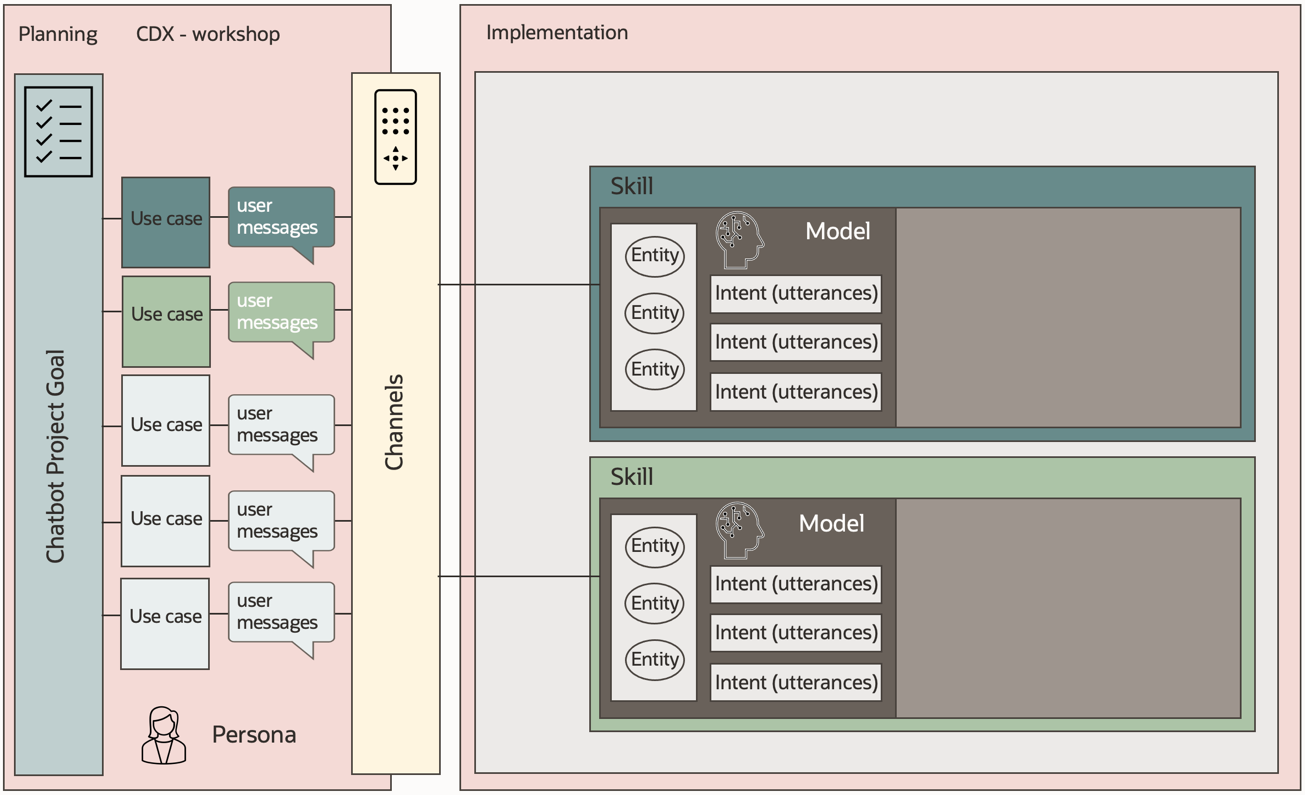

Building digital assistants is about having goal-oriented conversations between users and a machine. To do this, the machine must understand natural language to classify a user message for what the user wants. This understanding is not a semantic understanding, but a prediction the machine makes based on a set of training phrases (utterances) that a model designer trained the machine learning model with.

Defining intents and entities for a conversational use case is the first important step in your Oracle Digital Assistant implementation. Using skills and intents, you create a physical representation of the use cases and sub-tasks you defined when partitioning your large digital assistant project into smaller, manageable parts.

When collecting utterances for training intents, keep in mind that conversational AI learns by example and not by heart. What this means is that, once you have trained the intents on representative messages you have anticipated for a task, the linguistic model will be able to also classify messages that were not part of the training set for an intent.

Oracle Digital Assistant offers two linguistic models to detect what users want and to kick off a conversation or display an answer to a question: Trainer Ht and Trainer Tm.

Trainer Ht is good to use early during development when you don't have a well-designed and balanced set of training utterances as it trains faster and requires fewer utterances.

We recommend you use Trainer Tm as soon as you have collected between 20 and 30 high quality utterances for each intent in a skill. It is also the model you should be using for serious conversation testing and when deploying your digital assistant to production. Note that when deploying your skill to production, you should aim for more utterances and we recommend having at least 80 to 100 per intent.

In the following section, we discuss the role of intents and entities in a digital assistant, what we mean by "high quality utterances", and how you create them.

Create Intents

The Two Types of Intents

Oracle Digital Assistant has support for two types of intents: regular intents and answer intents. Both intent types use the same NLP model, which should be set to Trainer Tm for pre-production testing and in production.

The difference between the two intent types is that answer intents are associated with a pre-defined message that is displayed whenever the intent resolves from a user message, whereas regular intents, when resolved, lead to a user-bot conversation defined in the dialog flow.

You use answer intents for the bot to respond to frequently asked questions that always produce a single answer. Regular intents are used to start a longer user-bot interaction that leads to the completion of a transactional task, querying a backend system, or providing a response to a frequently asked question that needs to consider external dependencies, such as time, date, or location, when providing an answer.

Consider a Naming Convention

To make developing and maintaining the skill more efficient, you should come up with a naming convention for your intents that makes it easy for you to immediately understand what a particular intent stands for and to use the filter option when searching for intents.

For example, a part of the name should delineate between answer intents and regular intents (and perhaps also regular intents that you need so you can return a direct answer but with more complex processing, such as with an attachment or based on a query to a remote service). With that in mind, you might start with a scheme similar to the following:

- Regular intents: reg.<name_of_intent>

- Answer intents: ans.<name_of_intent>

- Regular intents that are answers: reg.ans.<name_of_intent>

If your skill handles related tasks that can be categorized, you can reflect those categories in the intent names too. Let's assume you have intents for creating orders and for canceling orders. Using these two use cases, the naming convention could be:

- Regular intents: create.reg.<name_of_intent>, cancel.reg.<name_of_intent>

- Answer intents: create.ans.<name_of_intent>, cancel.ans.<name_of_intent>

- Regular intents that are answers: create.reg.ans.<name_of_intent>, cancel.reg.ans.<name_of_intent>

There is no strict rule as to whether you use dot notation, underscores, or something of your own. However, keep the names short enough so that they are not truncated in the Oracle Digital Assistant intent editor panel.
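If you adopt a scheme like the one above, a small script can check exported intent names against it. The following is a minimal sketch, not part of Oracle Digital Assistant: the regular expression, the `describe_intent_name` helper, and the 40-character limit are all assumptions chosen for illustration, so adjust them to your own convention.

```python
import re

# Assumed convention, taken from the examples above: an optional lowercase
# category prefix (e.g. "create", "cancel"), then "reg", "ans", or "reg.ans",
# then the intent name. The lookahead stops "reg"/"ans" being read as a category.
INTENT_NAME = re.compile(
    r"^(?:(?P<category>(?!reg\.|ans\.)[a-z]+)\.)?"
    r"(?P<kind>reg\.ans|reg|ans)\."
    r"(?P<name>[A-Za-z0-9_]+)$"
)

KIND_LABELS = {
    "reg": "regular intent",
    "ans": "answer intent",
    "reg.ans": "regular intent that returns an answer",
}

MAX_LENGTH = 40  # assumed limit; choose a value that fits your editor panel


def describe_intent_name(name: str) -> str:
    """Return a short description of the intent, or raise ValueError."""
    match = INTENT_NAME.match(name)
    if match is None:
        raise ValueError(f"{name!r} does not follow the naming convention")
    if len(name) > MAX_LENGTH:
        raise ValueError(f"{name!r} is longer than {MAX_LENGTH} characters")
    label = KIND_LABELS[match.group("kind")]
    category = match.group("category")
    return f"{label} ({category})" if category else label


print(describe_intent_name("cancel.reg.ans.orderStatus"))
```

Running a check like this over the intent names of a skill makes it easy to spot names that drifted from the convention before they accumulate.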

Use Descriptive Conversation Names

Every intent also has a Conversation Name field. The conversation name is used in disambiguation dialogs that are automatically created by the digital assistant or the skill, if a user message resolves to more than one intent.

Note:

The value of the conversation name is saved in a resource bundle entry, so it can be translated to the different languages supported by your skill.

Define the Scope of Your Intents

Intents are defined in skills and map user messages to a conversation that ultimately provides information or a service to the user. Think of the process of designing and training intents as the help you provide to the machine learning model to resolve what users want with a high confidence.

The better an intent is designed, scoped, and isolated from other intents, the more likely it is that it will work well when the skill to which the intent belongs is used with other skills in the context of a digital assistant. How well it works in the context of a digital assistant can only be determined by testing digital assistants, which we will discuss later.

Intents should be narrow in scope rather than broad and overloaded. That said, you may find that the scope of an intent is too narrow when the intent engine has trouble distinguishing between two related use cases. If you experience this problem while testing your intents, and it remains after you have reviewed and corrected the intent training and test utterances, then it is probably best to combine the conflicting intents into one intent and use an entity to distinguish the use cases.

Example: Intent Scope Too Narrow

An example of scoping intents too narrowly is defining a separate intent for each product that you want to be handled by a skill. Let's take the extension of an existing insurance policy as an example. If you have defined intents per policy, the message "I want to add my wife to my health insurance" is not much different from "I want to add my wife to my auto insurance" because the distinction between the two is a single word. As another negative example, imagine if we at Oracle created a digital assistant for our customers to request product support, and for each of our products we created a separate skill with the same intents and training utterances.

As a young child, you probably didn't develop separate skills for holding bottles, pieces of paper, toys, pillows, and bags. Rather, you simply learned how to hold things. The same principle applies to creating intents for a bot.

Example: Intent Scope Too Broad

An intent’s scope is too broad if you still can’t see what the user wants after the intent is resolved. For example, suppose you created an intent that you named "handleExpenses" and you have trained it with the following utterances and a good number of their variations.

- "I want to create a new expense report"

- "I want to check my recent expense report"

- "Cancel my recent expense report"

- "My recent report requires corrections"

With this, further processing would be required to understand whether an expense report should be created, updated, deleted or searched for. To avoid complex code in your dialog flow and to reduce the error surface, you should not design intents that are too broad in scope.

Always remember that machine learning is your friend and that your model design should make you an equally good friend of conversational AI in Oracle Digital Assistant.

Create Intents for What You Don't Know

There are use cases for your digital assistant that are in-domain but out-of-scope for what you want the digital assistant to handle. For the bot to be aware of what it should not deal with, you create intents that then cause a message to be displayed to the user informing her about the feature that wasn't implemented and how she could proceed with her request.

Defining intents for in-domain but out-of-scope tasks is important. Based on the utterances defined for these intents, the digital assistant learns where to route requests for tasks that it doesn't handle.

Create Entities for the Information You Want to Collect from Users

There are two things you want to learn from a user:

- what she wants

- the information required to give her what she wants

Using entities and associating them with intents, you can extract information from user messages, validate input, and create action menus.

Here are the main ways you use entities:

- Extract information from the original user message. The information gathered by entities can be used in the conversation flow to automatically insert values (entity slotting). Entity slotting ensures that a user is not prompted again for information she has provided before.

- Validate user input. For this you define a variable for an entity type and reference that variable within input components. This will extract the information even if the user provides a sentence instead of an exact value. For example, when prompted for an expense date, the user may message "I bought this item on June 12th 2021". In this case the value "June 12th 2021" would be extracted from the user entry and saved in the variable. At the same time, it also validates the user input, since the user is re-prompted for the information if no valid date is extracted.

Entities are also used to create action menus and lists of values that can be operated via text or voice messages, in addition to the option for the user to press a button or select a list item.

Other Entity Features

And there is more functionality provided by entities that makes it worthwhile to spend time identifying information that can be collected with them.

For example, what if a user enters the message "I bought this item on June 12, 2021 and July 2, 2021" when asked for a purchase date? In this case, the entity, if used with the Common Response or Resolve Entities component, will auto-generate a disambiguation dialog for the user to select a single value as her data input. As in human conversation where a person would ask another to disambiguate a statement or order, entities will do the same for you. And of course, entities can also be configured to accept multiple values if the use case supports it.
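To make the extract, validate, and disambiguate behaviour more tangible, here is a minimal Python sketch of the idea. It is not how Oracle Digital Assistant implements its date entity: the regular expression, the `extract_dates` and `next_step` helpers, and the three outcome labels are assumptions made purely for illustration.

```python
import re
from datetime import date

MONTHS = [
    "january", "february", "march", "april", "may", "june",
    "july", "august", "september", "october", "november", "december",
]

# Matches forms such as "June 12th 2021" and "July 2, 2021" (illustrative only).
DATE_PATTERN = re.compile(
    r"\b(" + "|".join(MONTHS) + r")\s+(\d{1,2})(?:st|nd|rd|th)?,?\s+(\d{4})\b",
    re.IGNORECASE,
)


def extract_dates(message: str) -> list[date]:
    """Pull every valid calendar date out of a free-text message."""
    found = []
    for month_name, day, year in DATE_PATTERN.findall(message):
        try:
            month = MONTHS.index(month_name.lower()) + 1
            found.append(date(int(year), month, int(day)))
        except ValueError:
            continue  # skip impossible dates such as "June 31 2021"
    return found


def next_step(message: str) -> str:
    """Decide what a single-value date slot would do with the message."""
    dates = extract_dates(message)
    if not dates:
        return "reprompt"      # nothing valid extracted: ask again
    if len(dates) > 1:
        return "disambiguate"  # several values: let the user pick one
    return "accept"            # exactly one value: slot it


print(next_step("I bought this item on June 12, 2021 and July 2, 2021"))
```

In a real skill you get this behaviour from the entity and the component that resolves it, without writing any such code; the sketch only shows why a full sentence can still yield a clean value.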

Also, when using the Common Response or Resolve Entities component with custom entities, the prompts displayed to users can be defined in the entity such that users get alternating prompts when re-prompted for previously failed data input. When the prompt message changes in between invocations, it makes your bot less robotic and more conversational. In addition, you can use alternating prompts to progressively reveal more information to assist users in providing a correct input.

As a general practice, it is recommended that you use entities to perform user input validation and display validation error messages, as well as for displaying prompts and disambiguation dialogs.

Consider a Naming Convention

As with intents, we recommend a naming convention to follow when creating entity names. For starters, if you include the entity type in the name of the entity, it makes it easier to understand at a glance and provides an easy way to filter a list of entities. For example, you could use the following scheme:

- Value list entity: list.<name_of_entity>

- Regular expression entity: reg.<name_of_entity>

- Derived entity: der.<name_of_entity>, order.DATE.<name_of_entity>

- Composite bag entity: cbe.<name_of_entity>

- Machine learning entity: ml.<name_of_entity>

Whether you use dot notation as in the examples above, underscores, or something of your own is up to you. Beyond that, we’d suggest that you try to keep the names fairly short.

Create Utterances for Training and Testing

Utterances are messages that model designers use to train and test intents defined in a model.

Oracle Digital Assistant provides a declarative environment for creating and training intents and an embedded utterance tester that enables manual and batch testing of your trained models. This section focuses on best practices in defining intents and creating utterances for training and testing.

Allow yourself the time it takes to get your intents and entities right before designing the bot conversations. In a later section of this document, you will learn how entities can help drive conversations and generate the user interface for them, which is another reason to make sure your models rock.

Training Utterances vs. Test Utterances

When creating utterances for your intents, you’ll use most of the utterances as training data for the intents, but you should also set aside some utterances for testing the model you have created. An 80/20 data split is common in conversational AI for the ratio between utterances to create for training and utterances to create for testing.

Another ratio you may find when reading about machine learning is a 70/15/15 split. A 70/15/15 split means that 70% of your utterances would be used to train an intent, 15% to test the intent during development, and the other 15% to be used for testing before you go to production with it. A good analogy for intent training is a school exam. The 70% is what a teacher teaches you about a topic. The first 15% are then tests that you take while you study. Then, when it comes to writing your exam, you get another 15% set of tests you haven't seen before.

Though the 70/15/15 is compelling, we still recommend using an 80/20 split for training Oracle Digital Assistant intents. As you will see later in this guide, you’ll get additional data for testing from cross-testing utterances in the context of the digital assistant (neighbour testing). So, if you follow the recommendations in this guide, you will be gathering enough data for testing to ensure your models work (even if it doesn’t end up being a 70/15/15 split). And you will be able to repeatedly run those tests to get an idea of improvements or regression over time.
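If you keep your curated utterances in a file or spreadsheet, the 80/20 split can be produced with a few lines of script before you import the data. The sketch below is one way to do it in Python; the `split_utterances` helper, its parameters, and the dictionary layout are assumptions for illustration and are not an Oracle Digital Assistant API.

```python
import random


def split_utterances(utterances_by_intent, test_ratio=0.2, seed=42):
    """Split each intent's utterances into training and test sets.

    utterances_by_intent maps an intent name to a list of utterances.
    The split is done per intent so every intent keeps the same ratio.
    """
    rng = random.Random(seed)  # fixed seed keeps the split repeatable
    training, testing = {}, {}
    for intent, utterances in utterances_by_intent.items():
        unique = list(dict.fromkeys(utterances))  # drop exact duplicates
        rng.shuffle(unique)
        test_count = max(1, round(len(unique) * test_ratio))
        testing[intent] = unique[:test_count]
        training[intent] = unique[test_count:]
    return training, testing


sample = {"reg.orderPizza": [f"sample utterance {i}" for i in range(10)]}
train_set, test_set = split_utterances(sample)
print(len(train_set["reg.orderPizza"]), len(test_set["reg.orderPizza"]))
```

Splitting per intent, rather than across the whole data set, ensures every intent has test utterances, and the fixed seed means the same utterances stay in the test set from run to run so that results remain comparable.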

How to Build Good Utterances

There's no garbage in, diamonds out when it comes to conversational AI. The quality of the data with which you train your model has a direct impact on the bot's understanding and its ability to extract information.

The goal of defining utterances is to create an unbiased and balanced set of training and testing data that does not clutter the intent model. To do so, here are some rules that, in our experience, give good results:

- Do not use generation tools to create utterances. Chances are you will get many utterances with little variation.

- Consider different approaches to trigger an intent, such as "I want to reset my password" vs. "I cannot log into my email".

- Do not use utterances that consist of single words, as they lack the context from which the machine model can learn.

- Avoid words like “please”, “thank you”, etc. that don’t contribute much to the semantic meaning of the utterances.

- Use a representative set of entity values, but not all.

- Vary the placement of the entity. You can place the entity at the beginning, middle and end of the utterance.

- Keep the number of utterances balanced between intents (e.g. avoid 300 for one intent and 15 for another).

- Strive for semantically and syntactically complete sentences.

- Use correct spellings. Only by exception would you add some very likely misspellings and typos in the utterances. The model is generally able to deal with misspellings and typos on its own.

- Add country-specific variations (e.g. trash bin vs. garbage can, diaper vs. nappy).

- Vary the sentence structure (e.g. "I want to order a pizza", "I want a pizza, can I order").

- Change the personal pronoun (e.g. I, we, you, your, I would, we are).

- Use different terms for the verb (e.g. order, get, ask, want, like).

- Use different terms for the noun (e.g. pizza, calzone, Hawaiian).

- Create utterances of varying lengths (short, medium, and long sentences).

- Consider pluralization (e.g. "I want to order pizzas", "Can I order some pizzas").

- Consider using different verb forms and gerunds ("Fishing is allowed", "I want to fish, can I do this").

- Use punctuation in some cases and omit it in others.

- Use representative terms (e.g. avoid too many technical terms if the software is used by consumers).
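Some of the rules above are mechanical enough to check with a script before you import utterances. The following Python sketch flags single-word utterances, filler words, duplicates, and a strong imbalance between intents. The `lint_utterances` helper, the filler word list, and the 3:1 imbalance ratio are assumptions chosen for illustration; they are not thresholds defined by Oracle Digital Assistant, and a check like this cannot replace manual curation.

```python
FILLER_WORDS = {"please", "thanks", "thank"}  # assumed list; extend as needed


def lint_utterances(utterances_by_intent, max_ratio=3.0):
    """Return a list of warnings for utterances that break simple rules."""
    warnings = []
    for intent, utterances in utterances_by_intent.items():
        seen = set()
        for utterance in utterances:
            words = utterance.lower().split()
            normalized = " ".join(words)
            if len(words) < 2:
                warnings.append(f"{intent}: single-word utterance {utterance!r}")
            if FILLER_WORDS & {word.strip(".,!?") for word in words}:
                warnings.append(f"{intent}: filler word in {utterance!r}")
            if normalized in seen:
                warnings.append(f"{intent}: duplicate utterance {utterance!r}")
            seen.add(normalized)

    # Compare the largest and smallest intent to detect imbalance.
    counts = {intent: len(items) for intent, items in utterances_by_intent.items()}
    if counts and min(counts.values()) > 0:
        if max(counts.values()) / min(counts.values()) > max_ratio:
            warnings.append(f"unbalanced intents: {counts}")
    return warnings


sample = {
    "reg.orderPizza": ["I want to order a pizza", "pizza", "Please get me a calzone"],
    "ans.openingHours": ["When do you open on weekends"],
}
for warning in lint_utterances(sample):
    print(warning)
```

Rules about variation, such as changing sentence structure, pronouns, or entity placement, still need a human reviewer.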

What to Avoid When Writing Utterances

Utterances should not be defined the same way you would write command line arguments or list keywords. Make sure that all utterances you define sound conversational. Utterances that only list keywords lack context or are simply too short for the machine learning model to learn from.

How to Get Started with Writing Utterances

Ideally, good utterances are curated from real data. If you don't have existing conversation logs to start with, consider crowdsourcing utterances rather than merely synthesizing them. Synthesizing them should be your last resort.

For crowd-sourced utterances, email people who you know either represent or know how to represent your bot's intended audience. In addition, you can use Oracle Digital Assistant’s Data Manufacturing feature to set up an automated process for collecting (crowdsourcing) suggestions for utterances, which you’ll then probably want to curate so that they comply with the rules we have outlined for what makes a good utterance.

Note that you may find that people you ask for sample utterances feel challenged to come up with exceptionally good examples, which can lead to unrealistic niche cases or an overly artistic use of language requiring you to curate the sentences.

Also, keep in mind that curating sample utterances also involves creating multiple variations of individual samples that you have harvested through crowdsourcing.

How Many Utterances to Create

The quality of utterances is more important than the quantity. A few good utterances are better than many poorly designed utterances. Our recommendation is to start with 20-30 good utterances per intent in development and eventually increase that number to 80-100 for serious testing of your intents. Over time and as the bot is tested with real users, you will collect additional utterances that you then curate and use to train the intent model.

Depending on the importance and use case of an intent, you may end up with different numbers of utterances defined per intent, ranging from a hundred to several hundred (and, rarely, into the thousands). However, as mentioned earlier, the difference in utterances per intent should not be extreme.

Note:

It's important that you maintain a baseline against which new test results are compared to ensure that the bot's understanding is improving and not dropping.

What Level of Confidence Should You Aim For?

A machine learning model evaluates a user message and returns a confidence score for what it thinks is the top-level label (intent) and the runners-up. In conversational AI, the top-level label is resolved as the intent to start a conversation.

So, based on the model training and the user message, imagine one case where the model has 80% confidence that Intent A is a good match, 60% confidence for Intent B, and 45% for Intent C. In this case, you would probably be pretty comfortable that the user wants Intent A.

But what if the highest scoring label has only 30% confidence that this is what the user wants? Would you risk letting the model follow this intent, or would you rather play it safe, assume the model can't predict what the user wants, and display a message asking the user to rephrase the request?

To help the intent model make a decision about what intents to consider matching with a user utterance, conversational AI uses a setting called the confidence threshold. The intent model evaluates a user utterance against all intents and assigns confidence scores for each intent. The confidence threshold is a value within the range of possible confidence scores that marks the line:

- below which an intent is considered to not correspond at all with the utterance; and,

- above which an intent is considered to be a candidate intent for starting a conversation.

In Oracle Digital Assistant, the confidence threshold is defined for a skill in the skill’s settings and has a default value of 0.7.

A setting of 0.7 is a good value to start with and test the trained intent model. If tests show the correct intent for user messages resolves well above 0.7, then you have a well-trained model.
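The effect of the threshold can be illustrated with the confidence scores from the earlier example. This is a minimal Python sketch of the concept only: Oracle Digital Assistant applies the threshold for you, and the `candidate_intents` helper is hypothetical. Whether a score exactly equal to the threshold counts as a candidate is also an assumption made here.

```python
def candidate_intents(scores, threshold=0.7):
    """Return (intent, score) pairs at or above the threshold, best first.

    scores maps an intent name to the confidence the model assigned to it.
    An empty result means no intent is a candidate for the message.
    """
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [(intent, score) for intent, score in ranked if score >= threshold]


# Scores from the example above: only Intent A clears the 0.7 threshold.
print(candidate_intents({"IntentA": 0.80, "IntentB": 0.60, "IntentC": 0.45}))

# A top score of 0.30 leaves no candidates, so the user would be asked to rephrase.
print(candidate_intents({"IntentA": 0.30, "IntentB": 0.20}))
```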

Note:

If you find that two intents both resolve to a given phrase and their confidence scores are close together (for example, 0.71 vs. 0.72), you should review the two intents and see if they can be merged into a single intent.

If you get good results with a setting of 0.7, try 0.8. The higher the confidence, the more likely you are to remove the noise from the intent model, which means that the model will not respond to words in a user message that are not relevant to the resolution of the use case.

However, the higher the confidence threshold, the more likely it is that the overall understanding will decrease (meaning many viable utterances might not match), which is not what you want. In other words, 100 percent “understanding” (or 1.0 as the confidence level) might not be a realistic goal.
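One way to see this trade-off is to take the results of a batch test and count how many test utterances would still resolve to the expected intent at different thresholds. The Python sketch below assumes you have exported test results as tuples of expected intent, predicted intent, and confidence; that format and the `resolution_rate` helper are hypothetical and not an Oracle Digital Assistant export format.

```python
def resolution_rate(test_results, threshold):
    """Fraction of test utterances resolved to the expected intent.

    test_results is a list of (expected_intent, predicted_intent, confidence).
    An utterance counts as resolved only if the prediction is correct and
    its confidence is at or above the threshold.
    """
    if not test_results:
        return 0.0
    resolved = sum(
        1
        for expected, predicted, confidence in test_results
        if predicted == expected and confidence >= threshold
    )
    return resolved / len(test_results)


# Made-up results for illustration only.
results = [
    ("reg.orderPizza", "reg.orderPizza", 0.92),
    ("reg.orderPizza", "reg.orderPizza", 0.75),
    ("ans.openingHours", "ans.openingHours", 0.81),
    ("ans.openingHours", "reg.orderPizza", 0.72),
]
for threshold in (0.7, 0.8, 0.9):
    print(threshold, resolution_rate(results, threshold))
```

If the rate drops sharply when you move from 0.7 to 0.8, the training utterances probably need more work before you raise the threshold.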

Remember that conversational AI is about understanding what a user wants, even though they may express that in many different ways. For example, in a pizza bot case, users should be able to order pizza with phrases as diverse as "I want to order a pizza" and "I am hungry".

Checklist for Training Your Model

- ☑ Use Trainer Tm.

- ☑ Review the scope of your intents. Find and correct intents that are too narrow and intents that are too broad.

- ☑ Use a good naming convention for intents and entities.

- ☑ Make use of the Description fields that exist for intents and entities.

- ☑ Always curate the phrases you have collected before you use them as utterances.

- ☑ Create an 80/20 split for utterances you use for training and testing. Training utterances should never be used for testing.

- ☑ Determine the optimum confidence threshold for your skills, preferably 0.7 or higher.

- ☑ Identify the information you need in a conversation and build entities for them.

- ☑ Look out for entities with a large number of values and synonyms whose only role is to identify what the user wants. Consider redesigning those to use intents instead.