- Using the Oracle HCM Cloud Adapter with Oracle Integration Generation 2

- Implement Common Patterns Using the Oracle HCM Cloud Adapter


Import Business Objects with the HCM Data Loader (HDL)

You can design an integration that imports business objects using the HCM Data Loader. This use case provides a high-level overview.

- Create an orchestrated integration that reads source files containing Oracle HCM Cloud information from an FTP server. For example, the source file can contain new hire information in a CSV file from Oracle Taleo Enterprise Edition.

- Add a stage file action to the integration to generate the delimited worker data file, using a business object template file that you obtained from Oracle HCM Cloud.

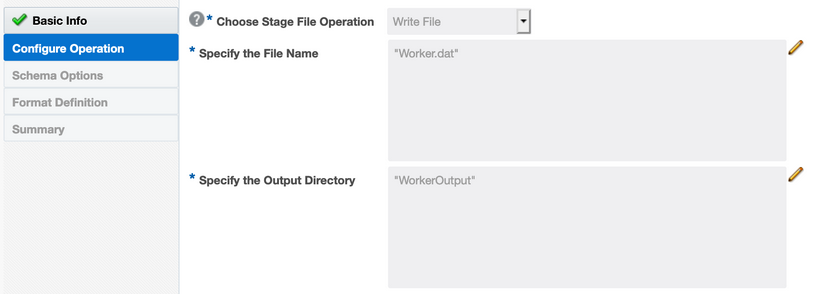

- On the Configure Operation page, select the Write File operation, specify the file name as Worker.dat, and specify the output directory as WorkerOutput.

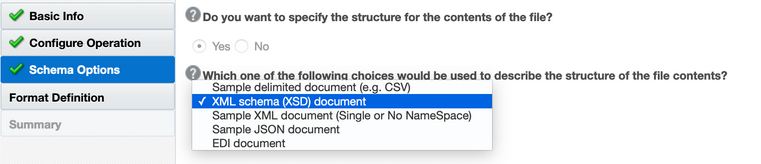

- On the Schema Options page, select XML schema (XSD) document. You provide the business object template file in the next step.

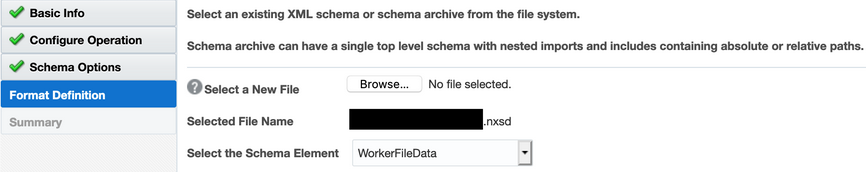

- Click Browse and upload the business object template file that you obtained from Oracle HCM Cloud.

- On the Format Definition page, select the WorkerFileData schema element. This is the main element for the delimited data file.

- On the Summary page, click Done to save the stage file action.


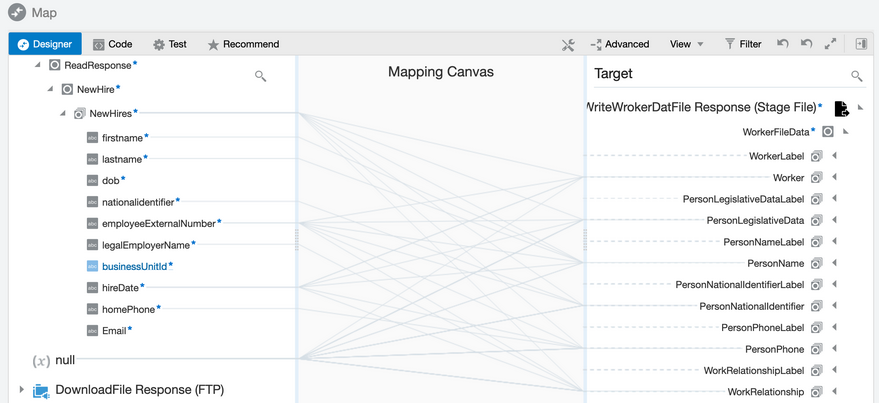

- Map the elements from the source CSV file to the business object template file. Use the mapper to map the source file elements to the target elements defined in the schema. The Sources tree shows all available values and fields to use in this mapping. The Target tree shows the Write hierarchy, which represents the basic structure of a Worker.dat file.

- For the field labels in the Target tree, enter the corresponding header title. For example, under the WorkerLabel parent, set EffectiveStartDateLabel to EffectiveStartDate. These values become the column headers in the generated DAT file.

- For the data sections of the Target tree, map the values from the Sources tree or enter default values.

- Map the repeating element recordName to the repeating element of each parent-specific data section. In this example, NewHires must be mapped to parent data sections such as Worker, PersonLegislativeData, PersonName, and so on.
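
To illustrate what the mapping produces, the following Python sketch converts new-hire CSV rows into Worker.dat content. It is a minimal sketch, assuming the HDL pipe-delimited layout in which a METADATA line lists the attribute names (the header labels entered in the mapper) and each MERGE line carries one record. The CSV columns (PersonNumber, StartDate), the attribute list, the PER_ source key prefix, and the function name build_worker_dat are all hypothetical; a real Worker.dat must follow the business object template you obtained from Oracle HCM Cloud and usually includes further components such as PersonLegislativeData and PersonName.

```python
import csv
import io

# Hypothetical header labels for the Worker component. In the integration these
# come from the *Label fields in the Target tree (for example, EffectiveStartDateLabel).
WORKER_ATTRIBUTES = ["SourceSystemOwner", "SourceSystemId", "EffectiveStartDate",
                     "PersonNumber", "StartDate", "ActionCode"]


def build_worker_dat(csv_text: str, source_system_owner: str) -> str:
    """Convert new-hire CSV rows into pipe-delimited Worker.dat content (illustrative)."""
    # Header line: the label values become the column headers of the DAT file.
    lines = ["METADATA|Worker|" + "|".join(WORKER_ATTRIBUTES)]
    for row in csv.DictReader(io.StringIO(csv_text)):
        values = [
            source_system_owner,           # default value, as entered in the mapper
            "PER_" + row["PersonNumber"],  # hypothetical source key convention
            row["StartDate"],              # mapped from the source CSV
            row["PersonNumber"],           # mapped from the source CSV
            row["StartDate"],              # mapped from the source CSV
            "HIRE",                        # default value, as entered in the mapper
        ]
        # One data line per repeating source record (the NewHires records).
        lines.append("MERGE|Worker|" + "|".join(values))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    sample_csv = "PersonNumber,StartDate\n1001,2024/01/15\n1002,2024/02/01\n"
    print(build_worker_dat(sample_csv, "TALEO"))
```

Each CSV row yields one MERGE line, which mirrors mapping the repeating source record to the repeating element of the Worker data section.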

- Create a second stage file action using the ZIP File operation to generate the ZIP file to send to the Submit HDL Job operation.
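
The ZIP File operation packages the generated data file so that it can be passed to the Submit HDL Job operation. As a rough equivalent outside Oracle Integration, the sketch below uses Python's standard zipfile module. It assumes a single Worker.dat placed at the root of the archive; the function name zip_hdl_file is hypothetical.

```python
import io
import zipfile


def zip_hdl_file(dat_name: str, dat_content: str) -> bytes:
    """Package one HDL .dat file into an in-memory ZIP archive (illustrative)."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        # Assumption: the .dat file sits at the archive root, named after the business object.
        archive.writestr(dat_name, dat_content)
    return buffer.getvalue()


if __name__ == "__main__":
    payload = zip_hdl_file("Worker.dat", "METADATA|Worker|PersonNumber\nMERGE|Worker|1001\n")
    with zipfile.ZipFile(io.BytesIO(payload)) as archive:
        print(archive.namelist())  # ['Worker.dat']
```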

- Configure the Oracle HCM Cloud Adapter in the Adapter Endpoint Configuration Wizard to use the Submit an HCM Data Loader job operation.

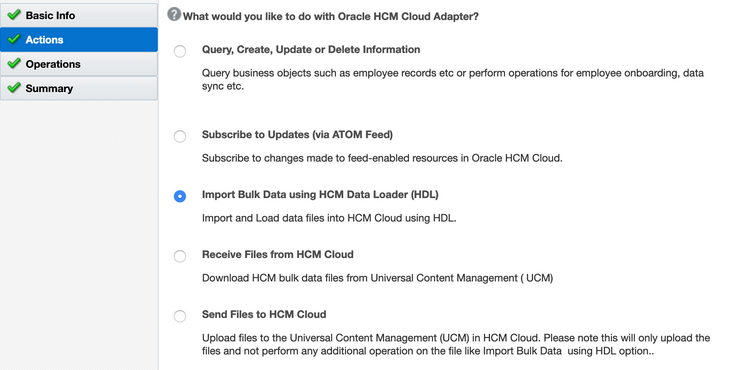

- Select the Import Bulk Data using HCM Data Loader (HDL) action.

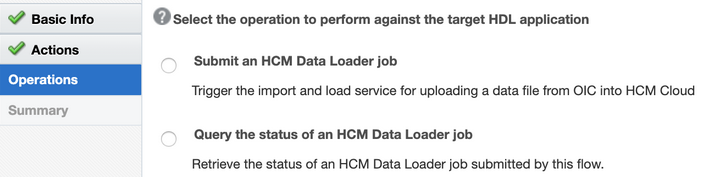

- Select the Submit an HCM Data Loader job operation.

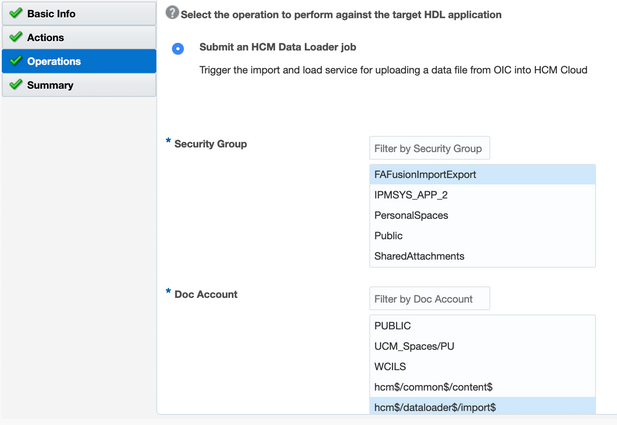

- Select the security group and doc account configuration parameters for submitting the Oracle HDL job.

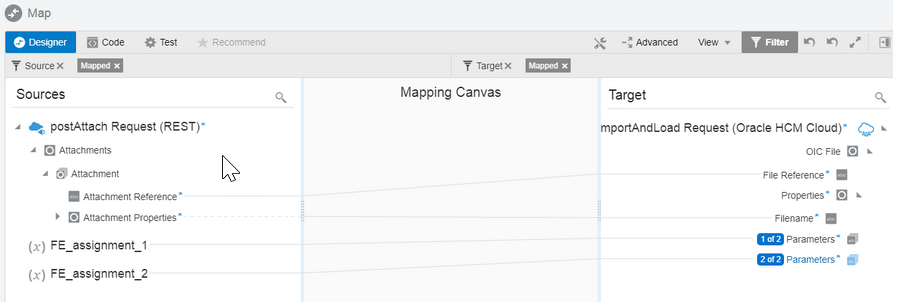

- Map the file reference. To send additional parameters to the importAndLoad operation, use the Parameters element.


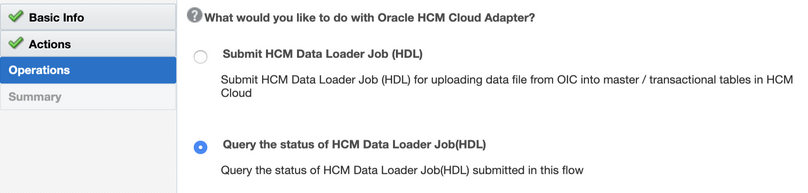

- Configure a second Oracle HCM Cloud Adapter in the Adapter Endpoint Configuration Wizard to use the Query the status of HCM Data Loader Job (HDL) operation. You can run this action inside a loop until the HDL job reports started or any other status that you are waiting for.

- Select Query the status of HCM Data Loader Job (HDL).

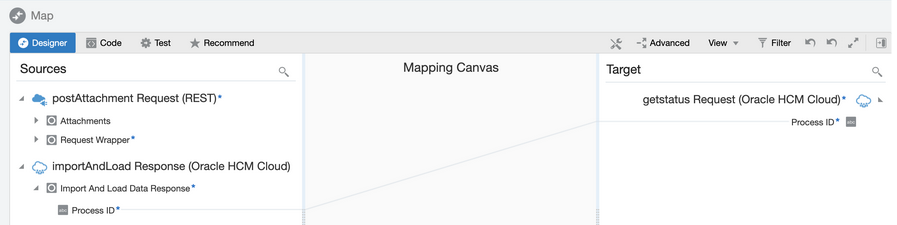

- Map the Process ID to getStatus Request (Oracle HCM Cloud) > Process ID.


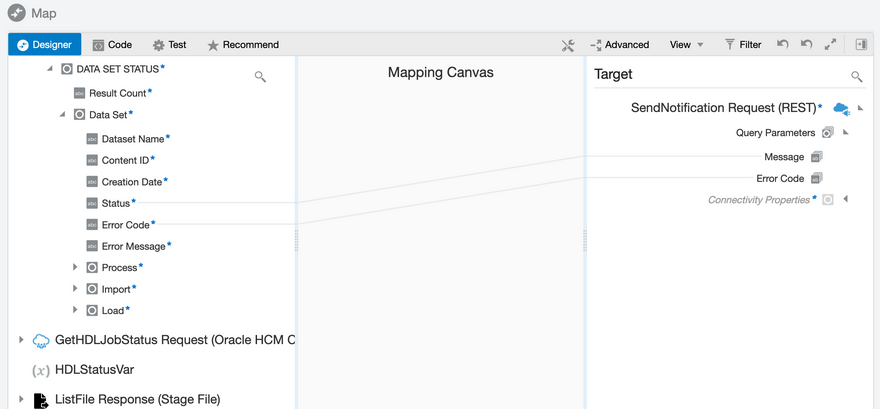

- Map the response from the Query the status of HCM Data Loader job (HDL) operation. Oracle Integration provides detailed visibility into the job status by including status information for several states (for example, the job, load, and import states). Compare the job status against your business requirements. The overall status can be one of the following values:

| Status | Meaning | Comments |
|---|---|---|
| NOT_STARTED | The process has not yet started. It is waiting or ready. | If this value is returned, poll again after waiting for some time. |
| DATA_SET_UNPROCESSED | The process is running, but the data set has not yet been processed. | If this value is returned, poll again after waiting for some time. |
| IN_PROGRESS | The process is running. | If this value is returned, poll again after waiting for some time. |
| COMPLETED | The data set completed successfully. | The job is complete. You can fetch the output. |
| CANCELLED | Either the data set load or the data set import was canceled. | The job is canceled. |
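
In the integration, this polling is a loop around the status query. The Python sketch below shows the same logic under stated assumptions: get_status is a hypothetical caller-supplied function standing in for the Query the status of HCM Data Loader Job (HDL) operation, and it returns the overall status string. The loop treats NOT_STARTED, DATA_SET_UNPROCESSED, and IN_PROGRESS as "poll again" and stops on any other value. The 30-second interval and 60-attempt limit are arbitrary illustrative defaults, and wait_for_hdl_job is a hypothetical name.

```python
import time
from typing import Callable

# Overall statuses that mean "poll again after waiting for some time".
PENDING_STATUSES = {"NOT_STARTED", "DATA_SET_UNPROCESSED", "IN_PROGRESS"}


def wait_for_hdl_job(get_status: Callable[[str], str], process_id: str,
                     interval_seconds: float = 30.0, max_attempts: int = 60) -> str:
    """Poll get_status until it returns a non-pending status, then return that status.

    COMPLETED and CANCELLED end the loop; so does any status not listed as
    pending, so that the caller can decide how to handle it.
    """
    for attempt in range(max_attempts):
        status = get_status(process_id)
        if status not in PENDING_STATUSES:
            return status
        if attempt < max_attempts - 1:
            time.sleep(interval_seconds)  # wait before the next status query
    raise TimeoutError(
        f"HDL job {process_id} still pending after {max_attempts} status queries")


if __name__ == "__main__":
    # Stand-in for the status query: returns canned responses in order.
    responses = iter(["NOT_STARTED", "IN_PROGRESS", "COMPLETED"])
    final = wait_for_hdl_job(lambda pid: next(responses), "12345", interval_seconds=0)
    print(final)  # COMPLETED
```

Passing the status query in as a function keeps the loop independent of how the status is actually fetched, and the attempt limit prevents an integration from polling indefinitely if the job never leaves a pending state.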