Preprocess Log Events

For certain log sources, such as Database Trace Files and MySQL, Oracle Log Analytics provides functions that allow you to preprocess log events and enrich the resultant log entries.

Currently, Oracle Log Analytics provides the following functions to preprocess log events:

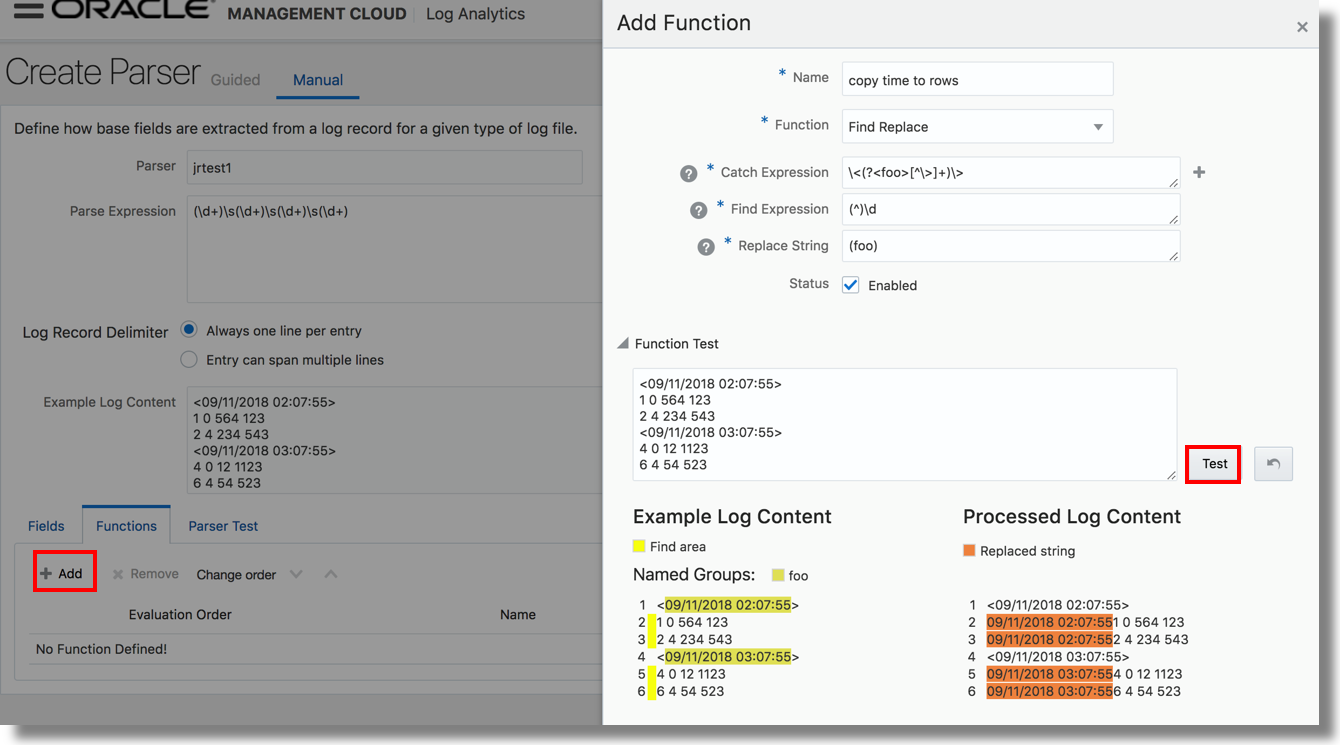

To preprocess log events, click the Functions tab and then click the Add button while creating a parser.

In the resultant Add Function dialog box, select the required function (based on the log source) and specify the relevant field values.

To view the result of applying the function to the example log content, click Test under the Function Test section. A comparative result is displayed to help you verify the field values.

Master Detail Function

This function lets you enrich log entries with the fields from the header of log files. It is particularly helpful for logs that contain a block of data as a header, followed by entries in the body.

The function enriches each body log entry with the fields of the header log entry. Database Trace Files are one example of this type of log.

To capture the header and its corresponding fields for enriching the time-based body log entries, at the time of parser creation, you need to select the corresponding Header Parser name in the Add Function dialog box.

Examples:

- In these types of logs, the header usually appears near the beginning of the log file, followed by other entries. See the following:

      Trace file /scratch/emga/DB1212/diag/rdbms/lxr1212/lxr1212_1/trace/lxr1212_1_ora_5071.trc
      Oracle Database 12c Enterprise Edition Release 12.1.0.2.0 - 64bit Production
      With the Partitioning, Real Application Clusters, Automatic Storage Management, OLAP, Advanced Analytics and Real Application Testing options
      ORACLE_HOME = /scratch/emga/DB1212/dbh
      System name: Linux
      Node name: slc00drj
      Release: 2.6.18-308.4.1.0.1.el5xen
      Version: #1 SMP Tue Apr 17 16:41:30 EDT 2012
      Machine: x86_64
      VM name: Xen Version: 3.4 (PVM)
      Instance name: lxr1212_1
      Redo thread mounted by this instance: 1
      Oracle process number: 35
      Unix process pid: 5071, image: oracle@slc00drj (TNS V1-V3)
      *** 2015-10-12 21:12:06.169
      *** SESSION ID:(355.19953) 2015-10-12 21:12:06.169
      *** CLIENT ID:() 2015-10-12 21:12:06.169
      *** SERVICE NAME:(SYS$USERS) 2015-10-12 21:12:06.169
      *** MODULE NAME:(sqlplus@slc00drj (TNS V1-V3)) 2015-10-12 21:12:06.169
      *** CLIENT DRIVER:() 2015-10-12 21:12:06.169
      *** ACTION NAME:() 2015-10-12 21:12:06.169
      2015-10-12 21:12:06.169: [ GPNP]clsgpnp_dbmsGetItem_profile: [at clsgpnp_dbms.c:345] Result: (0) CLSGPNP_OK. (:GPNP00401:)got ASM-Profile.Mode='legacy'
      *** CLIENT DRIVER:(SQL*PLUS) 2015-10-12 21:12:06.290
      SERVER COMPONENT id=UTLRP_BGN: timestamp=2015-10-12 21:12:06
      *** 2015-10-12 21:12:10.078
      SERVER COMPONENT id=UTLRP_END: timestamp=2015-10-12 21:12:10
      *** 2015-10-12 21:12:39.209
      KJHA:2phase clscrs_flag:840 instSid:
      KJHA:2phase ctx 2 clscrs_flag:840 instSid:lxr1212_1
      KJHA:2phase clscrs_flag:840 dbname:
      KJHA:2phase ctx 2 clscrs_flag:840 dbname:lxr1212
      KJHA:2phase WARNING!!! Instance:lxr1212_1 of kspins type:1 does not support 2 phase CRS
      *** 2015-10-12 21:12:39.222
      Stopping background process SMCO
      *** 2015-10-12 21:12:40.220
      ksimdel: READY status 5
      *** 2015-10-12 21:12:47.628
      ...
      KJHA:2phase WARNING!!! Instance:lxr1212_1 of kspins type:1 does not support 2 phase CRS

  For the preceding example, using the Master Detail function, Oracle Log Analytics enriches the time-based body log entries with the fields from the header content.

-

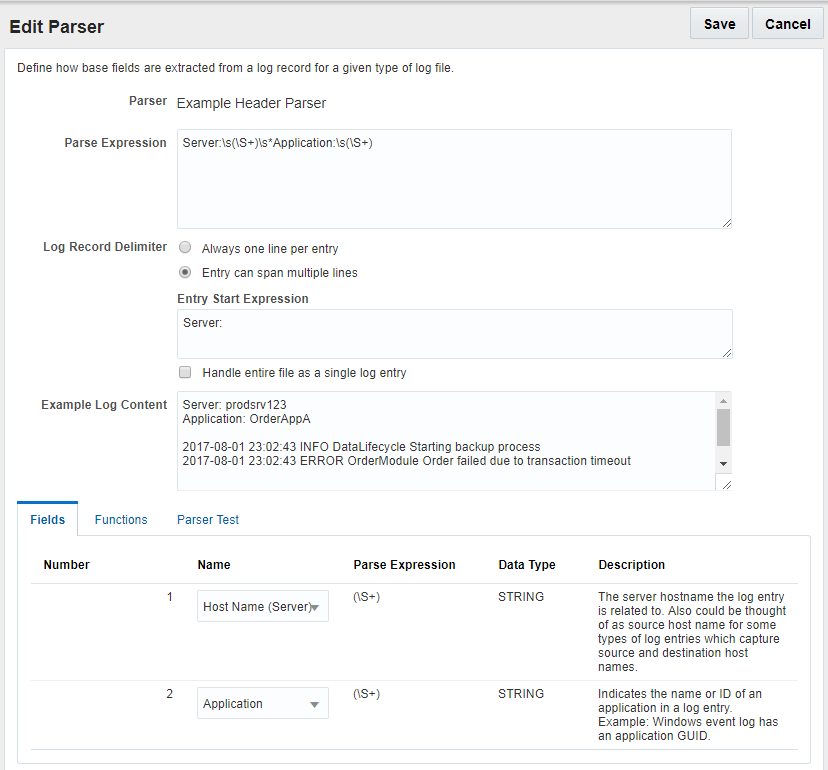

Observe the following log example:

Server: prodsrv123 Application: OrderAppA 2017-08-01 23:02:43 INFO DataLifecycle Starting backup process 2017-08-01 23:02:43 ERROR OrderModule Order failed due to transaction timeout 2017-08-01 23:02:43 INFO DataLifecycle Backup process completed. Status=success 2017-08-01 23:02:43 WARN OrderModule Order completed with warnings: inventory on backorderIn the preceding example, we have four log entries that must be captured in Oracle Log Analytics. The server name and application name appear only at the beginning of the log file. To include the server name and the application name in each log entry:

-

Define a parser for the header that’ll parse the server and application fields:

Server:\s*(\S+).*?Application:\s*(\S+) -

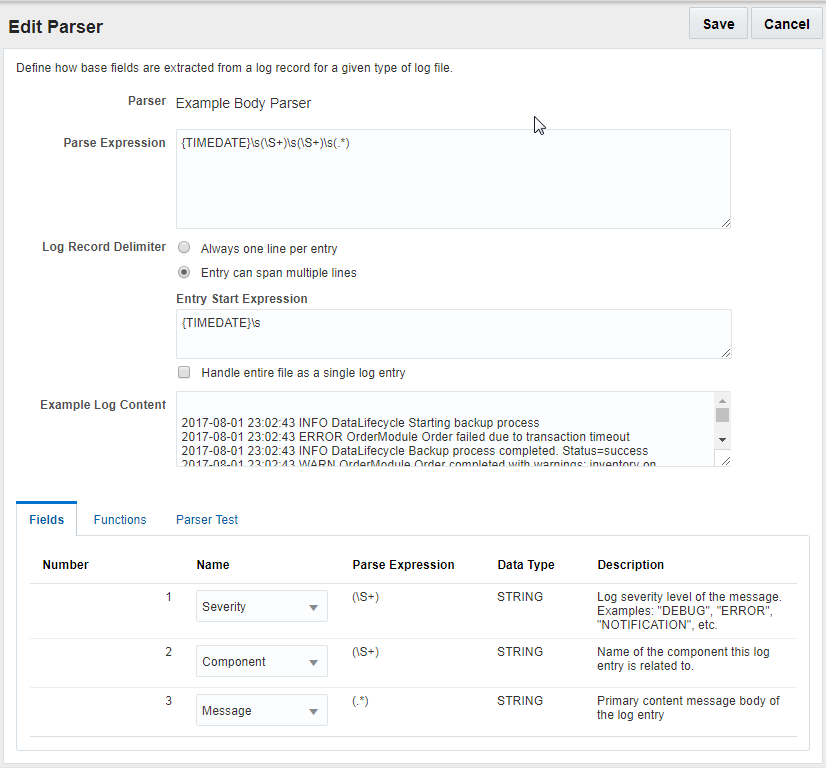

Define a second parser to parse the remaining body of the log:

-

To the body parser, add a Master-Detail function instance and select the header parser that you’d defined in step 1.

-

Add the body parser that you’d defined in the step 2 to a log source, and associate the log source with an entity to start the log collection.

You’ll then be able to get four log entries with the server name and application name added to each entry.

-
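The effect of the Master Detail function on the preceding example can be illustrated outside the product with a minimal Python sketch. This is not how Oracle Log Analytics implements the function. The header regular expression is the one from the steps above. The body regular expression, the field names (`server`, `application`, `time`, `severity`, `module`, `message`), and the `enrich` helper are assumptions made for illustration only.

```python
import re

# Header regex from the example. DOTALL is an added assumption so that it
# matches whether the two header fields share a line or not.
HEADER_RE = re.compile(r"Server:\s*(\S+).*?Application:\s*(\S+)", re.DOTALL)

# Hypothetical body regex (not from the source document).
BODY_RE = re.compile(
    r"^(?P<time>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) "
    r"(?P<severity>\S+) (?P<module>\S+) (?P<message>.*)$"
)

def enrich(log_text):
    """Copy the header fields into every body entry of the log text."""
    header = HEADER_RE.search(log_text)
    header_fields = {}
    if header:
        header_fields = {"server": header.group(1), "application": header.group(2)}
    entries = []
    for line in log_text.splitlines():
        body = BODY_RE.match(line)
        if body:  # the header line itself does not match the body regex
            entries.append({**header_fields, **body.groupdict()})
    return entries

SAMPLE = """Server: prodsrv123 Application: OrderAppA
2017-08-01 23:02:43 INFO DataLifecycle Starting backup process
2017-08-01 23:02:43 ERROR OrderModule Order failed due to transaction timeout
2017-08-01 23:02:43 INFO DataLifecycle Backup process completed. Status=success
2017-08-01 23:02:43 WARN OrderModule Order completed with warnings: inventory on backorder
"""

entries = enrich(SAMPLE)
for entry in entries:
    print(entry["server"], entry["application"], entry["severity"], entry["message"])
```

Each of the four body entries ends up carrying the server and application values that appear only once in the header.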

Find Replace Function

This function lets you extract text from a log line and add it to other log lines conditionally based on given patterns. For instance, you can use this capability to add missing time stamps to MySQL general and slow query logs.

The find-replace function has the following attributes:

- Catch Expression: Regular expression that is matched against every log line. The text matched by each named group is saved in memory for use in the Replace Expression.

  - If the catch expression matches a complete line, then the replace expression is not applied to the subsequent log line. This avoids having the same line twice in cases where you want to prepend a missing line.
  - A line matched by the catch expression is not processed for the find expression. So, a find and replace operation cannot be performed on the same log line.
  - You can specify multiple groups with different names.

- Find Expression: Regular expression that specifies the text in log lines to be replaced by the text matched by the named groups in the Catch Expression.

  - The pattern to match must be grouped.
  - The find expression is not run on lines that matched the catch expression. So, a search and replace operation cannot be performed on the same log line.
  - The find expression can have multiple groups. The text matching each group is replaced by the text created by the replace expression.

- Replace Expression: Custom notation that indicates the text to replace the groups found in the Find Expression.

  - Each group name must be enclosed in parentheses, for example `(timestr)`.
  - You can include static text.
  - The text created by the replace expression replaces the text matching all the groups in the find expression.

Click the Help icon next to the fields Catch Expression, Find Expression, and Replace String to get the description of the field, a sample expression, sample content, and the action performed.

Examples:

- The objective of this example is to get the time stamp from the log line containing the text `# Time:` and add it to the log lines starting with `# User@Host` that have no time stamp.

  Consider the following log data:

      # Time: 160201 1:55:58
      # User@Host: root[root] @ localhost [] Id: 1
      # Query_time: 0.001320 Lock_time: 0.000000 Rows_sent: 1 Rows_examined: 1
      select @@version_comment limit 1;
      # User@Host: root[root] @ localhost [] Id: 2
      # Query_time: 0.000138 Lock_time: 0.000000 Rows_sent: 1 Rows_examined: 2
      SET timestamp=1454579783;
      SELECT DATABASE();

  The values of the Catch Expression, Find Expression, and Replace Expression attributes can be:

  - The Catch Expression value to match the time stamp log line and save it in memory with the name timestr is `^(?<timestr>^# Time:.*)`.
  - The Find Expression value to find the lines to which the time stamp log line must be prepended is `(^)# User@Host:.*`.
  - The Replace Expression value to replace the start of the log lines that are missing the time stamp is `(timestr)`.

  After adding the find-replace function, you'll notice the following change in the log lines:

      # Time: 160201 1:55:58
      # User@Host: root[root] @ localhost [] Id: 1
      # Query_time: 0.001320 Lock_time: 0.000000 Rows_sent: 1 Rows_examined: 1
      select @@version_comment limit 1;
      # Time: 160201 1:55:58
      # User@Host: root[root] @ localhost [] Id: 2
      # Query_time: 0.000138 Lock_time: 0.000000 Rows_sent: 1 Rows_examined: 2
      SET timestamp=1454579783;
      SELECT DATABASE();

  In the preceding result, notice that the find-replace function has inserted the time stamp before the `User@Host` entry in each log line that it encountered while preprocessing the log.

- The objective of this example is to catch multiple parameters and replace them at multiple places in the log data.

  Consider the following log data:

      160203 21:23:54 Child process "proc1", owner foo, SUCCESS, parent init
      160203 21:23:54 Child process "proc2" -
      160203 21:23:54 Child process "proc3" -

  In the preceding log lines, the second and third lines don't contain the user data. So, the find-replace function must pick the values from the first line of the log, and replace the values in the second and third lines.

  The values of the Catch Expression, Find Expression, and Replace Expression attributes can be:

  - The Catch Expression value to obtain the `foo` and `init` users' info from the first log line and save it in memory with the parameters user1 and user2 is `^.*?owner\s+(?<user1>\w+)\s*,\s*.*?parent\s+(?<user2>\w+).*`.
  - The Find Expression value to find the lines that have the hyphen (`-`) character is `.*?(-).*`.
  - The Replace Expression value is `, owner (user1), UNKNOWN, parent (user2)`.

  After adding the find-replace function, you'll notice the following change in the log lines:

      160203 21:23:54 Child process "proc1", owner foo, SUCCESS, parent init
      160203 21:23:54 Child process "proc2", owner foo, UNKNOWN, parent init
      160203 21:23:54 Child process "proc3", owner foo, UNKNOWN, parent init

  In the preceding result, notice that the find-replace function has inserted the user1 and user2 info in place of the hyphen (`-`) character in each log line that it encountered while preprocessing the log.
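The catch, find, and replace interplay in the second example can be approximated with a short Python sketch. It is an illustration under stated assumptions, not the product's implementation: the helper names (`to_python_regex`, `expand`, `find_replace`) are hypothetical, the named groups are translated from the `(?<name>...)` form used in this document to Python's `(?P<name>...)` form, and the rule about a catch expression that matches a complete line is deliberately not modeled.

```python
import re

def to_python_regex(pattern):
    # Python spells named groups (?P<name>...); this document uses (?<name>...).
    return re.sub(r"\(\?<(\w+)>", r"(?P<\1>", pattern)

def expand(replace, saved):
    """Substitute each (name) placeholder with the text saved for that group."""
    def lookup(match):
        value = saved.get(match.group(1))
        return match.group(0) if value is None else value
    return re.sub(r"\((\w+)\)", lookup, replace)

def find_replace(lines, catch, find, replace):
    catch_re = re.compile(to_python_regex(catch))
    find_re = re.compile(to_python_regex(find))
    saved = {}   # named-group text remembered from catch matches
    result = []
    for line in lines:
        caught = catch_re.search(line)
        if caught:
            saved.update(caught.groupdict())
            result.append(line)  # caught lines are not run through find
            continue
        found = find_re.search(line)
        if found and saved:
            text = expand(replace, saved)
            spans = [found.span(i) for i in range(1, find_re.groups + 1)]
            # Replace right to left so earlier spans stay valid.
            for start, end in sorted(spans, reverse=True):
                if start != -1:
                    line = line[:start] + text + line[end:]
        result.append(line)
    return result

LINES = [
    '160203 21:23:54 Child process "proc1", owner foo, SUCCESS, parent init',
    '160203 21:23:54 Child process "proc2" -',
    '160203 21:23:54 Child process "proc3" -',
]
RESULT = find_replace(
    LINES,
    catch=r"^.*?owner\s+(?<user1>\w+)\s*,\s*.*?parent\s+(?<user2>\w+).*",
    find=r".*?(-).*",
    replace=", owner (user1), UNKNOWN, parent (user2)",
)
for line in RESULT:
    print(line)
```

In this sketch the hyphen is replaced in place, so the space that precedes it in the sample lines remains before the inserted comma.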

Time Offset Function

Some log records have a missing time stamp, some have only a time offset, and some have neither. Oracle Log Analytics extracts the required information and assigns a time stamp to each log record.

These are some of the scenarios and the corresponding solutions for assigning the time stamp using the time offset function:

| Example Log Content | Scenario | Solution |
|---|---|---|
| | Log file has time stamp in the initial logs and has offsets later. | Pick the time stamp from the initial log records and assign it to the later log records adjusted with time offsets. |
| | Log file has initial logs with time offsets and no prior time stamp logs. | Pick the time stamp from the later log records and assign it to the previous log records adjusted with time offsets. When the time offset is reset in between, that is, a smaller time offset occurs in a log record, it is corrected by considering the time stamp of the previous log record as reference. |
| | The log file has log records with only time offsets and no time stamp. | Time stamp from the file's last modified time: After all the log records are traversed, the time stamp is calculated by subtracting the calculated time offset from the file's last modified time. Time stamp from the filename: When this option is selected in the UI, the time stamp is picked from the filename in the format specified by the time stamp expression. |

The time offsets of log entries are relative to the previously matched time stamp. This time stamp is referred to as the base time stamp in this document.
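The first scenario, where a base time stamp is followed by records that carry only offsets, can be sketched in Python as follows. This is a simplified illustration, not the product's algorithm: the `assign_timestamps` helper, the record representation as `(time stamp, offset in seconds)` pairs, and the sample offsets are all assumptions, and the correction for a reset offset is not modeled.

```python
from datetime import datetime, timedelta

def assign_timestamps(records):
    """records: list of (timestamp_or_None, offset_seconds_or_None) pairs.

    A record with its own time stamp becomes the new base time stamp.
    A record with only an offset gets base time stamp + offset.
    """
    base = None
    assigned = []
    for timestamp, offset in records:
        if timestamp is not None:
            base = timestamp
            assigned.append(timestamp)
        elif base is not None and offset is not None:
            assigned.append(base + timedelta(seconds=offset))
        else:
            assigned.append(None)  # nothing to anchor this record to yet
    return assigned

# Hypothetical records: one time stamp, then two offset-only records.
RECORDS = [
    (datetime(2015, 10, 12, 21, 12, 6), None),
    (None, 1.5),
    (None, 4.25),
]
ASSIGNED = assign_timestamps(RECORDS)
for value in ASSIGNED:
    print(value)
```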

Use the parser time offset function to extract the time stamp and the time stamp offset from the log records. In the Parser Function dialog box:

1. Name: Specify a name for the parser function that you're creating.

2. Function: Select Time Offset.

3. Where to find the timestamp?: If you want to specify where the time stamp must be picked from, then select from Filename, File Last Modified Time, and Log Entry. By default, Filename is selected.

   If this is not specified, then the search for the time stamp is performed in the following order:

   - Traverse through the log records, and look for a match to the timestamp parser, which you will specify in the next step.
   - Pick the file last modified time as the time stamp.
   - If last modified time is not available for the file, then select system time as the time stamp.
   - Look for a match to the timestamp expression in the file name, which you will specify in the next step.

4. Timestamp Expression: Specify the regex to find the time stamp in the file name. By default, it uses the `{TIMEDATE}` directive.

   OR

   Timestamp Parser: If you've selected Log Entry in step 3, then select the parser from the menu to specify the time stamp format.

5. Timestamp Offset Expression: Specify the regex to extract the time offset in seconds and milliseconds to assign the time stamp to a log record. Only the `sec` and `msec` groups are supported. For example, `(?<sec>\d+)\.(?<msec>\d+)`.

   Consider the following example log record:

       15.225 hostA debug services started

   The example offset expression picks `15` seconds and `225` milliseconds as the time offset from the log record.

6. Validate Function: If you selected Filename in step 3, then under Sample filename, specify the file name of a sample log that can be used to test the above settings for the time stamp expression or time stamp parser, and the time stamp offset expression. The log content is displayed in the Sample Log Content field.

   If you selected Log Entry or File Last Modified Time in step 3, then paste some sample log content in the Sample Log Content field.

7. Click Validate to test the settings.

   You can view the comparison between the original log content and the computed time based on your specifications.

8. To save the above time offset function, click Save.
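To see what the example offset expression extracts, and how a File Last Modified Time choice might translate offsets into time stamps, consider the following Python sketch. It is one possible reading of the description above, not the product's implementation. The helper names, the second log record, and the last modified time are hypothetical, and the named groups use Python's `(?P<name>...)` spelling.

```python
import re
from datetime import datetime, timedelta

# The example offset expression, with named groups in Python syntax.
OFFSET_RE = re.compile(r"(?P<sec>\d+)\.(?P<msec>\d+)")

def parse_offset(record):
    """Return the time offset of a log record, or None if there is none."""
    match = OFFSET_RE.search(record)
    if match is None:
        return None
    return timedelta(seconds=int(match.group("sec")),
                     milliseconds=int(match.group("msec")))

def timestamps_from_mtime(records, last_modified):
    """Assumed reading: base = last modified time - offset of the last record."""
    offsets = [parse_offset(record) for record in records]
    known = [offset for offset in offsets if offset is not None]
    if not known:
        return [None] * len(records)
    base = last_modified - known[-1]
    return [None if offset is None else base + offset for offset in offsets]

RECORDS = [
    "15.225 hostA debug services started",
    "20.500 hostA debug services ready",   # hypothetical second record
]
MTIME = datetime(2017, 8, 1, 23, 2, 43)    # hypothetical last modified time
STAMPS = timestamps_from_mtime(RECORDS, MTIME)

print(parse_offset(RECORDS[0]))
for stamp in STAMPS:
    print(stamp)
```

Under this reading, the last record receives the file's last modified time and earlier records are placed before it according to their offsets.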