Add a call transcript

Call transcripts are used to provide suggestions during the call and for generating the summary of the phone call.

A call transcript within MCA is a written record of a phone call that's created by converting audio to text. If your CTI supplier provides the live transcription of a phone call as a text stream, you can render those transcript messages in the Fusion Engagement panel. Once a call is started, you can use the UEF FeedLiveTranscript action API to render the transcript messages.

With this API, the integrator can render transcript messages for both the agent and the customer. After the transcript feature is enabled, the notes input box in the Fusion Engagement panel is replaced with a transcript component, and the messages "agent joined the call" and "customer joined the call" are shown in the Engagement panel along with a Call Details button. The agent can enter notes during the call by clicking the Call Details button.

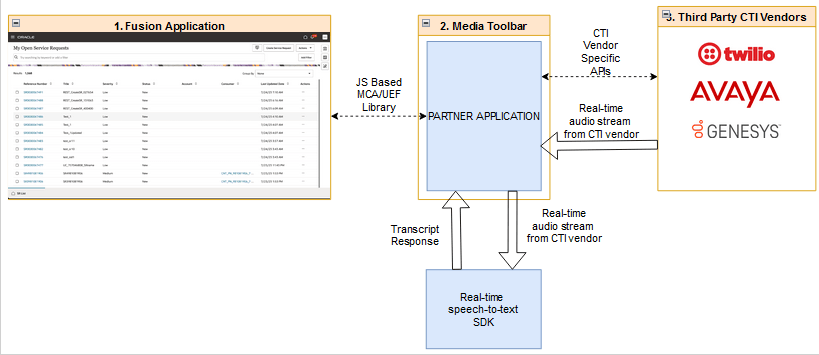

Here's a diagram that shows a high-level view of how to add call transcripts during a phone call.

As the diagram shows, your CTI supplier should provide the real-time audio stream of the ongoing call, which you then pass to a real-time speech-to-text service to get the text of the conversation. The OCI Realtime Speech SDK is one such speech-to-text service that you can use. If your CTI supplier directly provides real-time transcripts, you can pass them to the UEF APIs to render them on the Fusion screen.
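The following sketch models the two paths described above so the data flow is concrete. It's a minimal, self-contained illustration, not the OCI Realtime Speech SDK or UEF API: RealtimeSpeechService, TranscriptRenderer, connectAudioStream, handleSupplierTranscript, and FakeSpeechService are hypothetical names, and the fake service simply treats bytes as character codes so the flow can run without real audio.

```typescript
// Hypothetical model of the transcript pipeline; none of these names come
// from the OCI Realtime Speech SDK or from UEF.

// A real-time speech-to-text service: audio chunks go in, and text results
// come back asynchronously through a callback.
interface RealtimeSpeechService {
  sendAudio(chunk: Uint8Array): void;
  onResult(callback: (text: string) => void): void;
}

// Renders text in Fusion; in the toolbar application this is where the
// FeedLiveTranscript request would be published.
type TranscriptRenderer = (text: string) => void;

// Path 1: the CTI supplier streams audio, so route it through speech-to-text.
// Returns a function to which you pass each audio chunk from the CTI stream.
function connectAudioStream(
  speech: RealtimeSpeechService,
  render: TranscriptRenderer
): (chunk: Uint8Array) => void {
  speech.onResult((text) => {
    if (text.trim() !== "") {
      render(text); // skip empty or whitespace-only results
    }
  });
  return (chunk) => speech.sendAudio(chunk);
}

// Path 2: the CTI supplier already streams transcript text; render it directly.
function handleSupplierTranscript(text: string, render: TranscriptRenderer): void {
  if (text.trim() !== "") {
    render(text);
  }
}

// Test double: "transcribes" each chunk by treating its bytes as character codes.
class FakeSpeechService implements RealtimeSpeechService {
  private callback: (text: string) => void = () => {};

  sendAudio(chunk: Uint8Array): void {
    this.callback(Array.from(chunk, (byte) => String.fromCharCode(byte)).join(""));
  }

  onResult(callback: (text: string) => void): void {
    this.callback = callback;
  }
}
```

For example, passing `new Uint8Array([104, 105])` to the function returned by `connectAudioStream(new FakeSpeechService(), console.log)` logs `hi`. Keeping the renderer as a plain function means both paths end at the same rendering step, which mirrors the diagram.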

How to enable or disable call transcripts in your media toolbar application

Define a function subscribeToSupportedFeatures in fusionHandler.ts as shown in the following example:

```typescript
public static async subscribeToSupportedFeatures(): Promise<any> {
  // Subscribe to the agent commands that Fusion sends to the toolbar.
  const request = FusionHandler.frameworkProvider.requestHelper.createSubscriptionRequest('onToolbarAgentCommand');

  FusionHandler.phoneContext.subscribe(request, (response) => {
    const agentCommandResponse: IMcaOnToolbarAgentCommandEventResponse = response as IMcaOnToolbarAgentCommandEventResponse;

    return new Promise((resolve) => {
      const agentCommandResponseData = agentCommandResponse.getResponseData();
      const commandObject = agentCommandResponseData.getData();
      const command = agentCommandResponseData.getCommand();

      // Fusion asks which commands and features the active interaction supports.
      if (command === 'getActiveInteractionCommands') {
        const outData = {
          'supportedCommands': [],
          'supportedFeatures': [
            {
              'name': 'transcriptEnabled',
              'isEnabled': true // Set to true to enable transcripts, false to disable them
            }
          ]
        };
        agentCommandResponseData.setOutdata(outData);
        commandObject.result = 'success';
      }

      // Hand the command object back to Fusion.
      resolve(commandObject);
    });
  });
}
```

In the code sample, the supportedFeatures array in the outData variable is used to enable or disable the transcript, summary, and suggestions features. Set the isEnabled key to true or false to enable or disable the corresponding feature.
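If you'd rather toggle features from configuration than edit the literal object, you could build outData from a flag map. This is a hypothetical helper: buildOutData, SupportedFeature, and ActiveInteractionOutData aren't UEF types. Only the transcriptEnabled feature name comes from this section, so names for the summary and suggestions features would need to come from the UEF documentation.

```typescript
// Hypothetical types mirroring the outData shape used in the sample above.
interface SupportedFeature {
  name: string;
  isEnabled: boolean;
}

interface ActiveInteractionOutData {
  supportedCommands: unknown[];
  supportedFeatures: SupportedFeature[];
}

// Builds the outData object from a map of feature name -> enabled flag.
function buildOutData(features: Record<string, boolean>): ActiveInteractionOutData {
  return {
    supportedCommands: [],
    supportedFeatures: Object.entries(features).map(([name, isEnabled]) => ({
      name,
      isEnabled,
    })),
  };
}

// Example: disable transcripts, for instance based on a configuration flag.
const outData = buildOutData({ transcriptEnabled: false });
console.log(JSON.stringify(outData));
// {"supportedCommands":[],"supportedFeatures":[{"name":"transcriptEnabled","isEnabled":false}]}
```

The resulting object can be passed to agentCommandResponseData.setOutdata in place of the literal.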

Next, call this function while initializing the application. To do this, call the function shown in the previous example from the makeAgentAvailable function in integrationEventsHandler.ts, as shown in the following graphic:

You can use real-time speech SDKs to convert the audio stream from your CTI supplier into text data that's suitable for the transcript.

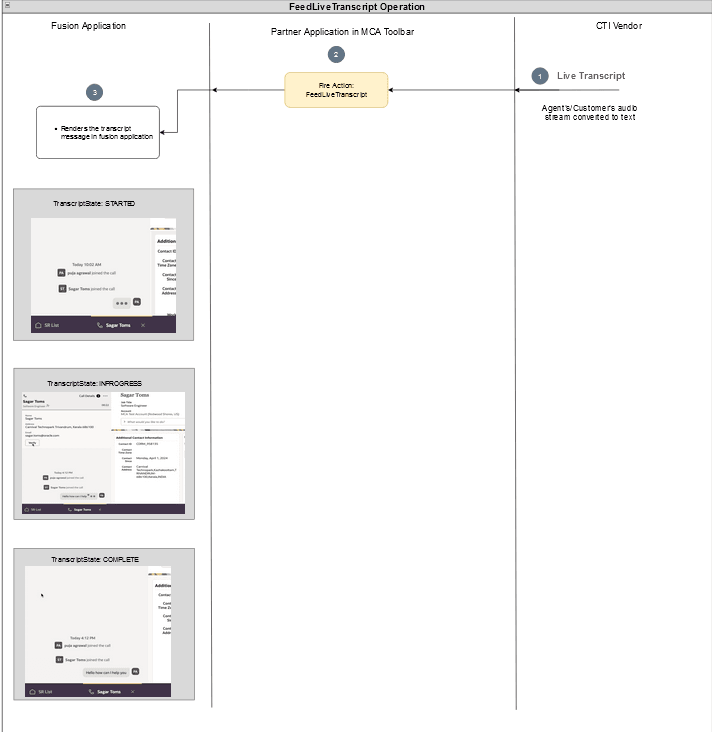
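The makeAgentAvailable change described earlier might look like the following sketch. FusionHandlerStub stands in for your FusionHandler class and counts registrations instead of calling UEF. Caching the subscription promise is an addition of this sketch, not something the accelerator is confirmed to do: it assumes one onToolbarAgentCommand subscription per session is enough, and it keeps repeated or concurrent availability toggles from registering the subscription again.

```typescript
// Stand-in for FusionHandler; only the method relevant here is modeled, and
// it counts registrations instead of calling UEF.
class FusionHandlerStub {
  static registrations = 0;

  static async subscribeToSupportedFeatures(): Promise<void> {
    FusionHandlerStub.registrations++;
  }
}

// Hypothetical slice of integrationEventsHandler.ts.
class IntegrationEventsHandlerSketch {
  // Assumption: subscribing once per session is enough. Storing the promise
  // (not a boolean) also covers concurrent calls made before it resolves.
  private subscription?: Promise<void>;

  async makeAgentAvailable(): Promise<void> {
    // ... your existing availability logic goes here ...

    // Register the subscription so Fusion can query supported features
    // such as transcriptEnabled.
    if (!this.subscription) {
      this.subscription = FusionHandlerStub.subscribeToSupportedFeatures();
    }
    await this.subscription;
  }
}
```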

Once you receive the text converted from the audio stream, you can use the FeedLiveTranscript API to render it in Fusion. Depending on the audio stream source, the transcript is rendered as either an agent's message or a customer's message.

The transcript is rendered on the engagement panel according to the FeedLiveTranscript request object.
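A small sketch of mapping the audio stream source to a transcript role. The AudioSource labels are hypothetical, since how your CTI supplier identifies the agent and customer legs will differ; AGENT and END_USER are the roles described in this section.

```typescript
// Hypothetical labels for the two audio legs of a call.
type AudioSource = "agentLeg" | "customerLeg";

// Roles used with FeedLiveTranscript, as described in this section.
type TranscriptRole = "AGENT" | "END_USER";

// A Record keyed by the union forces a mapping for every source at compile time.
const ROLE_BY_SOURCE: Record<AudioSource, TranscriptRole> = {
  agentLeg: "AGENT",
  customerLeg: "END_USER",
};

function roleForSource(source: AudioSource): TranscriptRole {
  return ROLE_BY_SOURCE[source];
}
```

Using a Record rather than an if/else chain means that adding a new source without a role becomes a compile-time error instead of a transcript attributed to the wrong speaker.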

Render Transcript Messages

The FeedLiveTranscript API should be executed from the engagement context, which is available from the startComm event action response. Once the engagement context is available, create the request object for the FeedLiveTranscript API and set the required properties (message, message ID, state, and role). Set the role to AGENT to add the agent's transcript messages, and to END_USER to add the customer's transcript messages. Once this API is executed, the transcript message is rendered in the Fusion engagement panel.
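The four properties map onto the request setters used in the fusionHandler.ts sample that follows (setMessageId, setMessage, setRole, setState). The sketch below models that step against a minimal local interface so it can run outside Fusion. TranscriptEntry, FeedLiveTranscriptRequestLike, applyEntry, and RecordingRequest are hypothetical; rejecting empty values is a defensive choice of this sketch, and the state value in the test is a placeholder because this section doesn't list the accepted values.

```typescript
// The four properties FeedLiveTranscript needs, gathered in one object.
interface TranscriptEntry {
  messageId: string;
  message: string;
  role: "AGENT" | "END_USER";
  state: string; // accepted values aren't listed here; check the UEF reference
}

// Minimal local stand-in for IMcaFeedLiveTranscriptActionRequest,
// covering only the setters used in this section.
interface FeedLiveTranscriptRequestLike {
  setMessageId(messageId: string): void;
  setMessage(message: string): void;
  setRole(role: string): void;
  setState(state: string): void;
}

// Copies every required property onto the request, rejecting empty values
// so an incomplete request is never published.
function applyEntry(entry: TranscriptEntry, request: FeedLiveTranscriptRequestLike): void {
  for (const [key, value] of Object.entries(entry)) {
    if (value === "") {
      throw new Error(`FeedLiveTranscript property '${key}' is empty`);
    }
  }
  request.setMessageId(entry.messageId);
  request.setMessage(entry.message);
  request.setRole(entry.role);
  request.setState(entry.state);
}

// Test double that records the values it receives.
class RecordingRequest implements FeedLiveTranscriptRequestLike {
  readonly values: Record<string, string> = {};
  setMessageId(messageId: string): void { this.values["messageId"] = messageId; }
  setMessage(message: string): void { this.values["message"] = message; }
  setRole(role: string): void { this.values["role"] = role; }
  setState(state: string): void { this.values["state"] = state; }
}
```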

In your media toolbar application, add the following function in fusionHandler.ts:

```typescript
public static async addRealTimeTranscript(messageId: string, message: string, role: string, state: string): Promise<any> {
  // Create the FeedLiveTranscript request and set the required properties.
  const requestObject: IMcaFeedLiveTranscriptActionRequest = FusionHandler.frameworkProvider.requestHelper.createPublishRequest('FeedLiveTranscript') as IMcaFeedLiveTranscriptActionRequest;
  requestObject.setMessageId(messageId);
  requestObject.setMessage(message);
  requestObject.setRole(role); // 'AGENT' or 'END_USER'
  requestObject.setState(state);

  // Publish from the engagement context obtained from the startComm response.
  const res = await FusionHandler.engagementContext.publish(requestObject);
  return res;
}
```

Also, add another function in integrationEventsHandler.ts to invoke the previous function:

```typescript
public async addRealTimeTranscript(messageId: string, message: string, role: string, state: string): Promise<any> {
  // Delegate to FusionHandler, which publishes the FeedLiveTranscript request.
  const resp: any = await FusionHandler.addRealTimeTranscript(messageId, message, role, state);
  return resp;
}
```

You need to invoke this function from your vendorHandler.ts file upon receiving a transcription result from your real-time speech-to-text service.
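Here's a sketch of what that vendorHandler.ts callback might look like. SpeechResult, TranscriptHandler, and VendorTranscriptBridge are hypothetical; the message ID scheme (call ID plus a sequence number) is an assumption of this sketch, and the role is expected to be already mapped from the audio source. Errors from a single publish are logged rather than rethrown so one failed message doesn't stop the rest of the stream, which is a design choice you may want to change.

```typescript
// The integrationEventsHandler.ts method from the sample above, as an interface.
interface TranscriptHandler {
  addRealTimeTranscript(messageId: string, message: string, role: string, state: string): Promise<any>;
}

// Hypothetical result delivered by your speech-to-text service.
interface SpeechResult {
  text: string;
  role: "AGENT" | "END_USER"; // already mapped from the audio source
  state: string; // whatever state value your integration passes to FeedLiveTranscript
}

class VendorTranscriptBridge {
  private readonly handler: TranscriptHandler;
  private readonly callId: string;
  private sequence = 0;

  constructor(handler: TranscriptHandler, callId: string) {
    this.handler = handler;
    this.callId = callId;
  }

  // Call this from vendorHandler.ts whenever a transcription result arrives.
  async onTranscriptionResult(result: SpeechResult): Promise<void> {
    if (result.text.trim() === "") {
      return; // nothing to render, and no message ID is consumed
    }
    this.sequence += 1;
    const messageId = `${this.callId}-${this.sequence}`;
    try {
      await this.handler.addRealTimeTranscript(messageId, result.text, result.role, result.state);
    } catch (error) {
      // Log and continue so one failed publish doesn't stop later messages.
      console.error(`Failed to render transcript message ${messageId}`, error);
    }
  }
}
```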

See Introduction to the Gen AI accelerator for more information.

Verify your progress

Once you complete these steps, run ojet serve to start your application and sign in to your Fusion application. Open the media toolbar and make your agent available for calls by clicking the agent availability button. Now, start a call to your customer care number. You'll receive the incoming call notification in your media toolbar application and in your Fusion window. Once you accept the call, your media toolbar changes to the ACCEPTED state and the engagement opens in your Fusion application. A transcript component is loaded in the engagement panel instead of the notes field, containing the messages "agent joined the call" and "customer joined the call". Start speaking, and you'll see transcripts being added to the transcript component.