Tune Query Performance

You can tune query performance to optimize queries and speed up query execution times.

About Queries and Performance

When an analysis runs, aggregation and other processing are done in the database wherever possible, so that as little data as possible is sent to the BI Server for further processing. Design your analyses to reinforce this principle and maximize performance.
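The following sketch illustrates why doing the aggregation in the database reduces the data that flows upstream. It's a minimal sketch that uses an in-memory SQLite database as a stand-in for the application database and the Python client as a stand-in for the BI Server. The `expenses` table and its columns are hypothetical.

```python
import sqlite3

# Hypothetical detail table: one row per expense line, 5 departments.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE expenses (dept TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO expenses VALUES (?, ?)",
    [(f"D{i % 5}", float(i)) for i in range(1, 1001)],
)

# Approach A: fetch every detail row and aggregate in the client
# (analogous to leaving the work to the BI Server).
detail = conn.execute("SELECT dept, amount FROM expenses").fetchall()
client_totals = {}
for dept, amount in detail:
    client_totals[dept] = client_totals.get(dept, 0.0) + amount

# Approach B: push the aggregation down to the database.
db_totals = dict(
    conn.execute("SELECT dept, SUM(amount) FROM expenses GROUP BY dept").fetchall()
)

print(f"rows transferred without pushdown: {len(detail)}")
print(f"rows transferred with pushdown:    {len(db_totals)}")
```

Both approaches produce the same totals, but the pushdown version returns one row per department instead of one row per detail record.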

Here's what happens when a query runs, in three phases:

- SQL compiles.

- Database SQL runs.

- Result set is retrieved and displayed in an analysis.

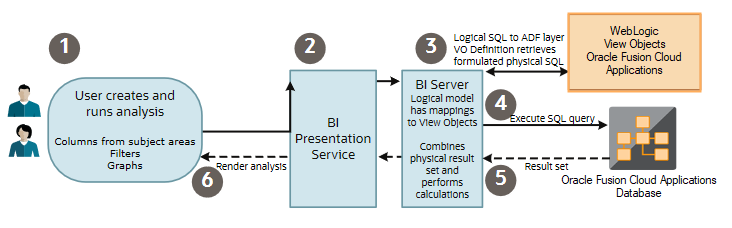

Here's the query architecture with the steps that happen when a query runs.


| Phase | Step | Description |
|---|---|---|
| 1 | 1 | You create an analysis with columns from one or more subject areas, add appropriate filters and graphs, and then run it. |
| 1 | 2 | Presentation Services receives the request and sends a logical SQL statement based on subject areas and columns to the BI Server. |
| 1 | 3 | The BI Server does these things: |
| 2 | 4 | The database query, including the aggregations and data security, runs in the database. |
| 2 | 5 | The aggregated dataset is returned to the BI Server, which merges the results and applies any additional calculations or filters. |
| 3 | 6 | The final dataset is returned to Presentation Services, where it's formatted for display and shown in the analysis. |

Maximize Query Performance

The way you design an analysis affects how the BI Server builds the physical query, which determines how much processing is focused in the database to maximize performance.

Consider the factors described here when you create ad-hoc analyses.

Here are some key things you can do to improve how your analyses perform:

- Select only required subject areas and columns. What you select determines which view objects and database tables are used in the database query. Any unnecessary table means more queries and joins to process.

- Add filters that use database indexes and reduce the amount of data returned to the BI Server.

- Remove unnecessary visual graphs.

- Remove unused data columns. Columns that aren't used in any visual graph are still included in the physical SQL, which can increase database query processing overhead.

Here are some of the factors that can hurt query performance, and what you can do to improve it.

| Factor | Description | Suggestions |
|---|---|---|
| Security | Analyses may perform well for a user with broad security grants, but worse for users with more restrictions. | Review and simplify your security. Minimize the number of roles and don't use complex custom queries to enforce data security. |
| Cross-subject areas | Analyses that include two or more subject areas can impact performance. | Review your analyses to see if you can remove some of the subject areas from the queries. Are all of the subject areas required? When you built the analysis, did you notice a performance impact when you added a particular subject area? |
| Hierarchies | Hierarchies, particularly large ones, can impact performance. Queries on trees and hierarchical dimensions such as manager can have an impact on performance. The BI Server uses a column-flattening approach to quickly fetch data for a specific node in the hierarchy, but there's no pre-aggregation for the different levels of the hierarchy. | Remove hierarchies to see if performance improves. It's also important to craft any query carefully, applying enough filters to keep the result set small. |
| Number of attributes | Analyses often use a large number of columns that aren't required. | Reduce the number of columns in the criteria as much as possible. |
| Flexfields | Using too many flexfields in analyses can hurt performance in some cases. | Remove flexfields to see if performance improves. Avoid flexfields in filters. |
| Data volumes | Analyses that query large volumes of data take longer. Some may query all records but return only a few rows, which requires a lot of processing in the database or BI Server. | Consider adding filters to your analysis, especially on columns with indexes in the database. Avoid blind queries, because they run without filters and fetch large data sets. All queries on large transactional tables must be time bound. For example, include a time dimension filter and additional filters that restrict by key dimensions, such as worker. Apply filters to columns that have database indexes in the transactional tables. This helps the BI Server generate a good execution plan for the query. |
| Subquery (NOT IN, IN) | Filtering with IN (or NOT IN) on the results of another query can mean that the subquery is executed for every row in the main query. | Replace NOT IN or IN queries with union set operators or logical SQL. |
| Calculated measures | Calculating measures can involve querying a lot of data. | Use the predefined measures in your subject areas wherever possible. |
| Filters | Analyses with no filters, or with filters that allow a large volume of data, may hurt performance. | Add filters and avoid applying functions or flexfields on filter columns. If possible, use indexed columns for filters. Refer to the OTBI subject area lineage for your offering, which documents the indexed columns for each subject area. |
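To see why the Filters and Data volumes rows stress indexed, time-bound filters, you can compare query plans. This sketch uses SQLite's `EXPLAIN QUERY PLAN` as a stand-in for the execution plans your own database produces. The `txns` table, its columns, and the `idx_worker_date` index are hypothetical, and OTBI generates its own physical SQL rather than the statements shown here.

```python
import sqlite3

# Hypothetical transactional table with an index on (worker_id, txn_date).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE txns (id INTEGER PRIMARY KEY, worker_id INTEGER, "
    "txn_date TEXT, amount REAL)"
)
conn.execute("CREATE INDEX idx_worker_date ON txns (worker_id, txn_date)")


def plan(sql):
    """Join the detail column of SQLite's EXPLAIN QUERY PLAN output."""
    return " | ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))


DATE_RANGE = "txn_date BETWEEN '2024-01-01' AND '2024-03-31'"

# Blind query: no filter, so the whole table is read.
blind = plan("SELECT * FROM txns")
# Time-bound filter on the indexed key columns.
filtered = plan(f"SELECT * FROM txns WHERE worker_id = 42 AND {DATE_RANGE}")
# Same filter, but a function wraps the indexed column.
wrapped = plan(f"SELECT * FROM txns WHERE abs(worker_id) = 42 AND {DATE_RANGE}")

for name, detail in [("blind", blind), ("filtered", filtered), ("wrapped", wrapped)]:
    print(f"{name:>8}: {detail}")
```

The blind and wrapped queries are expected to report a full `SCAN`, while the filtered query reports a `SEARCH` using `idx_worker_date`. The exact wording of the plan text varies by SQLite version.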

Review further guidelines about analysis and reporting considerations in My Oracle Cloud Support KM ID KB151083.
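The Subquery (NOT IN, IN) row in the factors table suggests replacing subquery filters with set operators. This minimal sketch again uses SQLite as a stand-in database, with hypothetical `workers` and `timecards` tables. It shows a NOT IN filter and an equivalent EXCEPT (set difference) query that return the same rows.

```python
import sqlite3

# Hypothetical tables: all workers, and workers who submitted timecards.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE workers (worker_id INTEGER PRIMARY KEY);
    CREATE TABLE timecards (worker_id INTEGER NOT NULL);
    INSERT INTO workers VALUES (1), (2), (3), (4), (5);
    INSERT INTO timecards VALUES (1), (3), (3), (5);
""")

# Filter on the result of another query: workers with no timecards.
not_in = conn.execute(
    "SELECT worker_id FROM workers "
    "WHERE worker_id NOT IN (SELECT worker_id FROM timecards) "
    "ORDER BY worker_id"
).fetchall()

# The same question expressed as a set difference.
except_rows = conn.execute(
    "SELECT worker_id FROM workers "
    "EXCEPT "
    "SELECT worker_id FROM timecards "
    "ORDER BY worker_id"
).fetchall()

print(not_in, except_rows)
```

The two forms are interchangeable only under some conditions. NOT IN returns no rows if the subquery yields a NULL, and EXCEPT removes duplicate rows. For that reason, the sketch declares `timecards.worker_id` as NOT NULL and selects a unique key. Under the same conditions, an IN filter can be expressed with INTERSECT.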

Diagnose Performance Using Subject Areas

These examples illustrate how you could use the OTBI Usage Real Time and Performance Real Time subject areas to understand usage and performance, so that you can diagnose performance bottlenecks and understand whether analyses are running slowly or could be optimized.

The OTBI Usage Real Time subject area monitors usage trends for OTBI by user, analysis and dashboard, and subject area. The OTBI Performance Real Time subject area monitors usage as well as analysis execution time, execution errors, and database physical SQL execution statistics. See OTBI Usage and Performance Subject Areas Reference.

The OTBI usage subject areas are provisioned for two job roles: IT Security Manager and Application Implementation Consultant. To use them for other job roles, grant OTBI Usage Transactional Analysis Duty and OTBI Performance Transactional Analysis Duty to custom job roles in the Security Console. Refer to specific subject area guides to see security details for each subject area.

Analyze the Number of Users

Use this example to understand whether the number of users might impact performance.

In this example, you create two analyses. The first determines the current number of users accessing OTBI, so that you can gauge system load. The second is a histogram analysis that identifies trends in long-running queries.
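The two measures in this example can be sketched in plain Python over rows exported from such an analysis. This is a minimal sketch that assumes hypothetical rows of (user, query start time, elapsed seconds). The field layout, the one-hour window, and the bucket boundaries are illustrative choices. They aren't columns or defaults of the OTBI Usage Real Time or Performance Real Time subject areas.

```python
from collections import Counter
from datetime import datetime, timedelta

# Hypothetical rows: (user, query start time, elapsed seconds).
ROWS = [
    ("alice", datetime(2024, 5, 6, 9, 0), 2.1),
    ("bob", datetime(2024, 5, 6, 9, 5), 48.0),
    ("alice", datetime(2024, 5, 6, 9, 20), 7.5),
    ("carol", datetime(2024, 5, 6, 9, 40), 125.0),
    ("dave", datetime(2024, 5, 6, 8, 0), 3.0),
]

# Upper bounds (seconds) of the histogram buckets; the last bucket is open-ended.
BUCKETS = [5, 30, 60]


def active_users(rows, now, window=timedelta(hours=1)):
    """Count distinct users who started a query within `window` before `now`."""
    return len({user for user, start, _ in rows if now - window <= start <= now})


def bucket_label(seconds):
    """Map an elapsed time to a label such as '5-30s' or '>=60s'."""
    lower = 0
    for upper in BUCKETS:
        if seconds < upper:
            return f"{lower}-{upper}s"
        lower = upper
    return f">={BUCKETS[-1]}s"


def histogram(rows):
    """Count queries per elapsed-time bucket."""
    return Counter(bucket_label(elapsed) for _, _, elapsed in rows)


now = datetime(2024, 5, 6, 10, 0)
print("active users:", active_users(ROWS, now))
print("elapsed-time histogram:", dict(histogram(ROWS)))
```

Counting distinct users rather than queries keeps one busy user from inflating the load estimate.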

Tune Performance for Reports

You can use SQL query tuning to improve the performance of reports.

Here are some factors that can slow down query performance, and some suggestions for improvement.

| Factor | Description | Suggestions |
|---|---|---|
| Filters | Reports with no filters or filters that allow a large volume of data may hurt performance. | Use filter conditions to limit data. |
| Joins | Reports that join a lot of tables can run slowly. | Remove any unnecessary joins. |
| Data volumes | Reports with no filters or filters that allow a large volume of data may hurt performance. | Add filter conditions to limit data, preferably using columns with database indexes. Use caching for small tables. |
| Indexes | Filters that use database indexes can improve performance. | Use SQL hints to manage which indexes are used. |
| Sub-queries | Sub-queries can impact performance. | |
| Aggregation | It helps performance to prioritize aggregation in the database. | |
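The Indexes row mentions SQL hints. In Oracle Database, hints are written as specially formatted comments such as `/*+ INDEX(table_alias index_name) */`. SQLite has no such hints, but its `INDEXED BY` clause plays a similar role. This sketch uses it to show how pinning an index changes the plan. The `invoices` table and both indexes are hypothetical.

```python
import sqlite3

# Hypothetical report source table with two candidate indexes.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE invoices (id INTEGER PRIMARY KEY, vendor_id INTEGER,
                           invoice_date TEXT, amount REAL);
    CREATE INDEX idx_vendor ON invoices (vendor_id);
    CREATE INDEX idx_date ON invoices (invoice_date);
""")

QUERY = (
    "SELECT id, amount FROM invoices {hint} "
    "WHERE vendor_id = 7 AND invoice_date >= '2024-01-01'"
)


def plan(sql):
    """Join the detail column of SQLite's EXPLAIN QUERY PLAN output."""
    return " | ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))


# Let the query planner choose an index on its own.
default_plan = plan(QUERY.format(hint=""))
# Pin a specific index, similar in spirit to an Oracle INDEX hint.
pinned_plan = plan(QUERY.format(hint="INDEXED BY idx_date"))

print("default:", default_plan)
print("pinned: ", pinned_plan)
```

Treat pinned indexes with care. In SQLite, `INDEXED BY` raises an error if the named index can't be used for the query, and a hint that forces a poor index can make a plan worse.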

Review SQL Statements Used in Analyses

You can review logical and physical SQL statements using these steps.

Logical SQL is non-source specific SQL that's issued to the BI Server for an analysis. Logical queries use column names from the subject areas in the Presentation Layer in the repository (RPD) metadata. Based on the logical request, the BI Server issues optimized source-specific SQL to the actual data sources in the Physical Layer of the metadata. If you have administrative privileges, you can review both logical and physical SQL for analyses.

- If you want to view the logical SQL when editing an analysis:

  - In the Reports and Analytics work area, click Edit to open the analysis for editing, then click the Advanced tab.

  - In the SQL Issued section, review the logical SQL statement.

- If you want to view the SQL statement from the Administration page: