Staging Table Maintenance Changes

You can now submit the Delete HCM Data Loader Stage Table Data process as a multi-threaded process by using the Initiate Multithreaded Processing parameter on the Schedule Delete Stage Table Data Process page.

When large volumes of data must be deleted from the staging tables, the deletion needs to complete efficiently without tying up resources for extended periods. Submitting the Delete HCM Data Loader Stage Table Data process as a multi-threaded process reduces the elapsed time for deleting large volumes of data.

Steps to Enable

Use the new HCM Data Loader parameter Multithread the Delete HCM Data Loader Stage Table Data Process on the Configure HCM Data Loader page to set whether the delete process runs as a single-threaded or multi-threaded process by default.

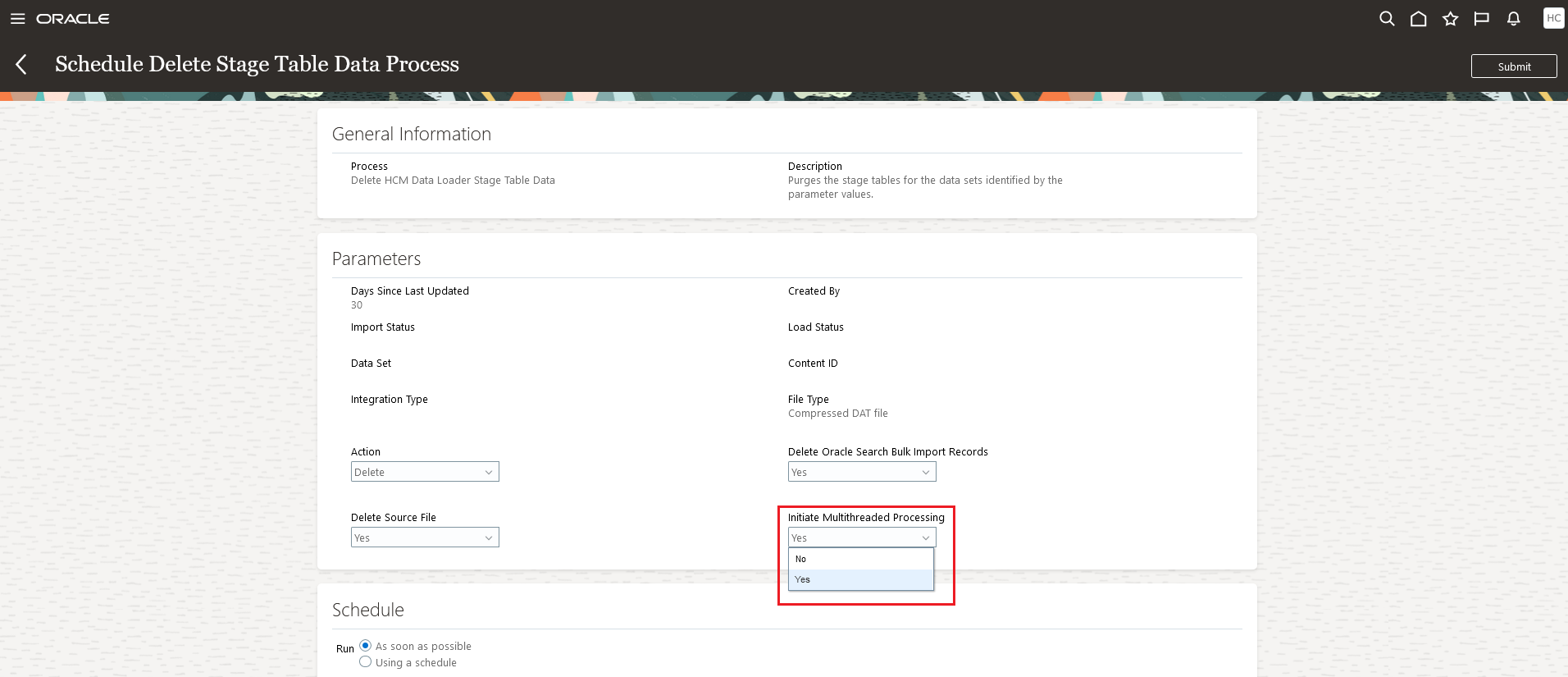

You can override this configuration for an individual submission by setting the Initiate Multithreaded Processing parameter on the Schedule Delete Stage Table Data Process page when you schedule or submit the delete process from the Delete Stage Table Data task.

- In the Data Exchange area, select the Delete Stage Table Data task.

- Select Actions > Scheduled Deletion to navigate to the Schedule Delete Stage Table Data Process page.

- Review and set the parameters:

  - Action

  - Delete Oracle Search Bulk Import Records

  - Delete Source File

  - Initiate Multithreaded Processing

- Review and set the Schedule to submit the delete process using one of these options:

  - As soon as possible

  - Using a schedule

- Click Submit.

Multithreaded processing for Delete Stage Table Data Process
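The relationship between the configured default and the per-submission parameter can be sketched as a simple precedence rule. This is an illustrative model only: the function name `resolve_multithreading` and the idea that the submission parameter can be left unset (`None`) are assumptions made for the sketch, not part of the product.

```python
from typing import Optional


def resolve_multithreading(
    configured_default: bool,
    submission_override: Optional[bool] = None,
) -> bool:
    """Return True if the delete process should run multi-threaded.

    configured_default: hypothetical stand-in for the value of the
        "Multithread the Delete HCM Data Loader Stage Table Data Process"
        parameter on the Configure HCM Data Loader page.
    submission_override: hypothetical stand-in for the value of the
        "Initiate Multithreaded Processing" parameter chosen at submission;
        None models "not changed by the user" (an assumption).
    """
    if submission_override is not None:
        # A value chosen at submission takes precedence over the default.
        return submission_override
    return configured_default
```

For example, under these assumptions `resolve_multithreading(True, False)` yields a single-threaded run for that one submission, while the configured default stays multi-threaded for later submissions.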

To review the submitted processes:

- In the Data Exchange area, select the Delete Stage Table Data task.

- Select Actions > Review Processes.

- Review the submitted processes on the Recent Processes page.

Tips And Considerations

The Delete HCM Data Loader Stage Table Data process will only submit multiple subprocesses if the volume of data to be deleted is sufficient to warrant it.

This process also archives data sets if the volume of data remaining after the expired data sets are deleted is still too high.

Previously, archiving a data set extended its retention period by a further 30 days, or by the number of days configured. Now, archiving doesn't affect the retention of a data set, so a data set that was due to be purged tomorrow but is archived today is still purged tomorrow rather than in another 30 days.
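The change in purge timing can be illustrated with a small date calculation. This is a sketch of one reading of the behavior described above, not the product's implementation: the function names are hypothetical, and the previous behavior is assumed to restart the retention period from the archive date.

```python
from datetime import date, timedelta

# The 30-day figure comes from the text; the text notes it is configurable.
DEFAULT_RETENTION_DAYS = 30


def purge_date_previous(
    archived_on: date, retention_days: int = DEFAULT_RETENTION_DAYS
) -> date:
    """Previous behavior (as assumed here): archiving pushed the purge
    date out by a further retention period, counted from the archive date."""
    return archived_on + timedelta(days=retention_days)


def purge_date_current(scheduled_purge: date, archived_on: date) -> date:
    """Current behavior: archiving leaves the scheduled purge date unchanged,
    so archived_on plays no part in the result."""
    return scheduled_purge


today = date(2024, 1, 10)                  # arbitrary example date
due_tomorrow = today + timedelta(days=1)   # data set due to be purged tomorrow

print(purge_date_previous(archived_on=today))               # 2024-02-09
print(purge_date_current(due_tomorrow, archived_on=today))  # 2024-01-11
```

With the example dates, a data set archived on 10 January would previously have been kept until 9 February; it is now purged on 11 January as originally scheduled.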