5 Advanced Topics in the DMU

This chapter discusses advanced topics to consider when working with the Database Migration Assistant for Unicode (DMU).

5.1 Excluding Columns and Tables From Migration

In some situations, you may want to exclude selected columns or tables from scanning or conversion.

Situations include the following:

-

You need to solve a problem of multiple character sets in a single column (see "Working with Multilingual Columns").

-

There is a character column in the database that contains binary data. You cannot migrate the column to a binary data type (

RAW,LONGRAW, orBLOB), because you are unable to modify applications accessing the database, for example, because they are not developed in-house. You want to avoid converting the column so that the applications may continue to access the data in a pass-through configuration. -

There is a very large table in the database, with terabytes of data, which – you are absolutely sure – contains no convertible data. Such tables:

-

Cannot contain

CLOBcolumns, unless the current database character set is multibyte. -

Can contain only character columns with internal application codes, yes/no flags, credit card numbers or other data known to contain only basic ASCII characters.

-

Must not contain data entered by end users from keyboards that support non-ASCII characters even if the users are supposed to enter English text only. Standard keyboards of computers running Microsoft Windows always allow such characters to be entered.

You may want to avoid scanning such a large table to reduce the scanning time of the whole database. Oracle strongly recommends that you scan even very large tables at least once.

-

-

As with the case of very large tables, you may want to avoid scanning archival data stored in read-only tablespaces on slow storage devices, such as DVD-ROM jukeboxes. Again, you should be absolutely sure that the tables contain no data requiring conversion.

-

There are read-only tablespaces or a read-only table that you cannot change to read/write for some important reason. You want to be able to convert the rest of the database to Unicode, even though the tablespace or the table contains convertible data that the DMU will not be able to convert. You accept that data in the tablespace or the table will be unreadable after the conversion unless appropriate workarounds are implemented or the DMU converts the data later in validation mode.

-

As with read-only tablespaces, if you have an offline tablespace or offline data file that you cannot switch back online, the DMU will not be able to scan or convert data in this tablespace or data file. You still want to convert the rest of the database and you accept that the contents of the tablespace or the data file may be unreadable if you later switch the tablespace or the data file back online. You can use the DMU in validation mode to later convert this data.

See "Handling Non-Accessible Data" for further discussion about database objects in read-only and offline mode.

To exclude a table from scanning, simply deselect it on the object selection page of the Scan Wizard.

To exclude a column from conversion, open its Column Properties tab (see "Viewing and Setting Column Properties") and set the Exclude from Conversion property on the Converting subtab to Yes. The DMU does not consider columns excluded from conversion when checking for convertibility issues that prevent you from starting the conversion step. To exclude a table from conversion, exclude all its convertible columns.

5.2 Handling Non-Accessible Data

Some database data might be inaccessible to applications. The data may be non-updatable or even unreadable.

The DMU cannot convert non-updatable data and it can neither convert nor scan unreadable data. Data with access restrictions is data contained in any of the following database objects:

5.2.1 Read-Only Tables Considerations

To protect a table contents against updates, you can alter a table to read-only mode. All Oracle Databases since release 11.1 support the read-only mode for database tables.

A read-only table can be queried, its constraints modified, and its segment moved, shrunk, or expanded. However, none of its column values can be modified in any way and no new rows can be added. You can alter the table back to read/write mode and again to read-only, as many times as required.

Read-only tables cannot be updated and so their contents cannot be converted by the DMU. Therefore, the DMU automatically alters read-only tables to read/write mode before attempting conversion. After the conversion, the mode is changed back to read-only.

A read-only table may also be re-created, if it is converted using the "Copy data using CREATE TABLE AS SELECT" conversion method.

5.2.2 Read-Only Tablespaces Considerations

If any segment of a table in a read-only tablespace contains data requiring conversion, then the DMU cannot successfully convert the table

You can place a tablespace into read-only mode, thereby preventing its data from being updated. Data files of read-only tablespaces may reside on read-only media or on standard read/write disk storage devices. For example, historical data in very large databases is frequently moved to read-only tablespaces. The tablespaces are backed up only once, just after putting them into read-only mode. Because read-only tablespaces cannot change, no further backups are required. This significantly reduces backup time.

If any segment of a table, including partition, CLOB, VARRAY, and IOT overflow segments, contains data that requires conversion, and if the segment belongs to a read-only tablespace, the DMU cannot successfully convert the table. The DMU reports this as a convertibility issue on the Migration Status tab and does not allow you to start the conversion step until the problem is resolved.

The approach to resolve this convertibility issue depends on the reason for which the problematic tablespaces have been put into read-only mode. If the reason was to reduce backup time, and if the data is still on standard storage devices, then perform the following steps:

-

Put the tablespaces back into read/write mode.

-

Convert them with the rest of the database.

-

Put them into read-only mode again.

-

Refresh the backup.

If the tablespaces have been put into read-only mode and moved to read-only media, the possible solutions are:

-

If enough disk storage can be arranged for, permanently or temporarily, to accommodate all read-only tablespaces with convertible data, copy the tablespaces to this storage, make them read/write, convert together with the rest of the database, make read-only again, and either leave on the disk storage, or put back on read-only media. With large number of read-only tablespaces containing convertible data, this may not be a viable solution.

-

Create an auxiliary database in the same character set as the main database, that is, the database to be migrated. Move the read-only tablespaces logically to the new database using the transportable tablespace feature, leaving the data files physically in their current location. Create a database link in the main database pointing to the new database. By creating auxiliary views or through application changes, make the read-only data in the auxiliary database visible to applications connecting to the main database. The data will be converted while being transported over the database link.

5.2.3 Offline Tablespaces and Data Files Considerations

If a segment of a table in an offline tablespace or data file contains character data requiring conversion, then the DMU cannot scan or convert the table.

You can put a tablespace or selected data files in a tablespace into offline mode. Data contained in an offline tablespace or data file is inaccessible for both reading and writing. Offline mode is required by various administrative operations on data files, such as renaming or moving from one storage device to another.

If any segment of a table, including partition, LOB, VARRAY, and IOT overflow segments, contains character data that may require conversion, and the segment belongs to an offline tablespace or data file, the DMU can neither scan nor convert the table. You must put such a tablespace or data file back to online mode before scanning or converting the affected tables. Otherwise, errors, such as ORA-00376, will be reported in the scan and conversion steps.

The DMU will report an error on the Migration Status tab and prevent you from starting the conversion step if convertible data is found in an offline data file.

5.2.4 Working With External Tables

An external table is a table whose data resides in files outside of the database. External files pose special considerations for character sets.

The database contains definitions of table columns (metadata) but table rows are fetched from external files when a query referencing the table is issued. Oracle Database supports two access drivers that read the external files: ORACLE_LOADER and ORACLE_DATAPUMP. ORACLE_LOADER reads text files that are in the format supported by the SQL*Loader. ORACLE_DATAPUMP supports binary files that are compatible with the Data Pump Export and Import utilities (expdp and impdp).

DML statements modifying external tables are not supported. An ORACLE_DATAPUMP external file may be created and filled with data when an external table is created with the statement CREATE TABLE ... ORGANIZATION EXTERNAL ... AS SELECT but it cannot be later modified from inside the database. ORACLE_LOADER files must be created and modified outside of the database. The DMU does not convert external files along with the database contents.

When an external table is created, the character set of its data files is established as follows:

-

The character set of an

ORACLE_DATAPUMPfile is stored in the data file itself when the file is created by theORACLE_DATAPUMPdriver or the Data Pump Export utility. -

You can specify the character set of an

ORACLE_LOADERfile in theCHARACTERSETparameter in the access parameters clause of the external table definition. If the parameter is not specified, then the database character set is used to interpret the contents of the file.

If the declared character set of an external file differs from the database character set, then the database converts the data automatically while reading it. If the database character set changes but the character set of the external file does not change, then the database adapts itself to the new configuration and converts external files to the new database character set.

Caution:

If the character set of an ORACLE_LOADER file is not declared explicitly in the access parameters clause of an external table definition, then a change to the database character set also changes the implicit declaration of the character set of the external file. If the file itself is not converted to the new database character set, then the external file declaration no longer corresponds to the real character set of the file and the external table is no longer correctly readable.

Before migrating a database to Unicode, you must either add missing explicit character set declarations to all external table definitions or convert the file contents to the new database character set.

5.2.4.1 Cleansing External Tables

Even though the DMU does not convert external tables, it does include them in the scanning step of the migration process.

The scan results show you, if the file contents will still fit into the declared column lengths after migration, even if data expands in conversion to the new Unicode database character set. The results also warn you if any illegal character codes are present in the external files. These codes will no longer be readable if the file contents must be converted on the fly.

If the scan report shows invalid binary representation issues, you must identify the source of the invalid codes, as discussed in "Invalid Binary Storage Representation of Data" and "Cleansing Scenario 3: Cleansing Invalid Representation Issues".

The following sections describe actions that you can perform to cleanse the external tables from various reported issues.

5.2.4.2 Cleansing Length Issues

If the scan report shows length issues in external table contents, then you can alter the table to lengthen the affected columns or migrate them to character semantics.

The DMU does not support cleansing actions on external tables so you must do this in another tool, such as SQL*Plus or SQL Developer. Changing a VARCHAR2 column to CLOB may be necessary, if the data expands above 4000 bytes. To change the column data type to CLOB, you must re-create the external table. This is a fast operation, as only metadata changes are involved, but you must remember to re-create any dependent objects, such as grants.

5.2.4.3 Correcting Character Set Declaration of ORACLE_LOADER Files

The character values of an external table may be read by the ORACLE_LOADER driver in a pass-through configuration.

In a pass-through, the declared character set of data files and the database character set are the same, but the real character set of the data content is different. You can repair the configuration by declaring the real character set in the access parameters clause of the external table definition.

You must not change the declaration in a production database before the database is converted, because this would break the pass-through configuration and make the table unreadable.

Before the database is converted, you should change the Assumed Column Character Set property of the affected external table columns (described in "Setting the Assumed Character Set") to the identified real character set of the data files and rescan the table to identify any additional length and invalid binary representation issues that might come up after the data file character set declaration is corrected.

Oracle recommends that you create a script to modify all affected access parameter clauses in the database and run it directly after the conversion phase of the DMU finishes successfully.

5.2.4.4 Correcting Character Set Declaration of ORACLE_DATAPUMP Files

The character values of an external table may be read by the ORACLE_DATAPUMP driver in a pass-through configuration.

In the character set configuration described in the previous section, ensure that the Data Pump input files are correctly re-tagged with their real character set directly after the database is converted to Unicode.

Unfortunately, the character set declaration is stored internally in Data Pump files and cannot be easily modified. A more complex procedure is needed to fix the declaration. Alternatively, you can arrange for the external table files to be provided in ORACLE_LOADER format, so that you have full control over their character set declaration in the external table's access parameters clause.

If a Data Pump file is used in a pass-through configuration, it means that its source (exported) database also works in this configuration, as otherwise it could not produce an incorrectly tagged file. The recommended approach to fix the character set declaration of a Data Pump file is, therefore, to fix the database character set of its source database.

If you have no control over the character set of the source database, you must:

-

Create an auxiliary, empty database in the character set of the Data Pump files

-

Import the files – this will happen in the pass-through configuration

-

Change the database character set to the real character set of the files

-

Export the files and use them for the external table in the main database (after it is migrated to Unicode)

Contact Oracle Support for information about fixing a pass-through configuration by changing the database character set with the Character Set Scanner utility (csscan) and the csalter.plb script.

The following PL/SQL code enables you to identify the character set of a Data Pump file:

DECLARE

et_directory_name VARCHAR2(30) := '<directory object name>';

-- for example, 'DATA_PUMP_DIR'

et_file_name VARCHAR2(4000) := '<file name>';

-- for example, 'EXPDAT.DMP'

et_file_info ku$_dumpfile_info;

et_file_type NUMBER;

BEGIN

dbms_datapump.get_dumpfile_info

( filename => et_file_name

, directory => et_directory_name

, info_table => et_file_info

, filetype => et_file_type );

FOR i IN et_file_info.FIRST..et_file_info.LAST LOOP

IF et_file_info.EXISTS(i) THEN

IF et_file_info(i).item_code = 11 THEN

dbms_output.put_line( 'Character set of the file is ' ||

et_file_info(i).value );

END IF;

END IF;

END LOOP;

END;5.2.4.5 Fixing Corrupted Character Codes

Analysis of invalid binary representation issues in an external table may show that some corrupted character codes exist in some character values.

Typically, corruptions occur because of a user error, an application defect, or a temporary configuration problem. In any case, correct the values in the source database and the affected files, and then re-export or unload them again.

With ORACLE_LOADER files, you can fix the invalid codes directly in the files with a text editor. However, the solution is effective only if the files are not regularly replaced with a new version produced from the same source database contents.

5.2.4.6 Handling Binary Data

Invalid binary representation issues in an external table may be caused by binary data being declared and fetched as character data by the external table driver.

To cleanse this type of issues, you must redefine the external table to use binary data types, such as RAW and BLOB, for the affected columns.

Oracle strongly discourages attempts to continue using the pass-through configuration to fetch the binary data into a database with a multibyte character set, such as UTF8 or AL32UTF8. Such configuration may cause unexpected issues now or in the future.

5.2.4.7 Performance Considerations for ORACLE_LOADER Files

The Oracle Database Utilities Guide lists several performance hints for the ORACLE_LOADER driver.

The following hints are especially relevant in the context of character set migration to Unicode:

-

Single-byte character sets are the fastest to process.

-

Fixed-width character sets are faster to process than varying-width character sets.

-

Byte-length semantics for varying-width character sets are faster to process than character-length semantics.

-

Having the character set in the data file match the character set of the database is faster than a character set conversion.

If you can choose between leaving an ORACLE_LOADER file in its current character set and arranging for the file to be provided in the new Unicode database character set, you should consider the following conclusions drawn from the above hints:

-

If the current character set of the file is multibyte, using UTF8 or AL32UTF8 database character set for the file will not significantly influence the parsing time, that is, time needed to divide the file into records and fields, but it will save on conversion time. Performance of the queries referencing the external table will be better.

-

If the current character set of the file is single-byte, using UTF8 or AL32UTF8 database character set for the file will slow down parsing but it will save on conversion time. You should benchmark both configurations to find out which one is more efficient.

-

If you decide to convert the file from a single-byte character set to UTF8 or AL32UTF8, try to express field lengths and positions in bytes versus characters, if maximizing query performance is important.

5.3 Migrating Data Dictionary Contents

The DMU classifies tables as belonging to the data dictionary based on the schema that owns them. The DMU handles data dictionary tables differently from other tables in the database

Schemas that the DMU considers to be in the data dictionary appear under the Data Dictionary node in the Navigator panel. If you create your own table in a data dictionary schema, such as SYS or SYSTEM, then the DMU treats it as other data dictionary tables. Oracle discourages creating user tables in data dictionary schemas. In this release, the DMU supports character set conversion of only a subset of metadata kept in the data dictionary tables.

See Also:

5.3.1 Scanning Data Dictionary Tables

Most data dictionary tables are scanned in the same way as user-defined tables.

The usual convertibility issues – data exceeding column limit, data exceeding data type limit, and data having invalid binary representation – are reported in the same way as well. The difference lies in reporting of data that needs conversion to the target Unicode character set. Because the DMU does not support converting of data dictionary contents in this release, except for a few exceptions, non-convertible columns containing data requiring conversion are marked with the yellow triangle warning icon and are considered a convertibility issue that prevents starting the database conversion step. The Database Scan Report filtering condition "With Some Issues" includes these columns as well – see "Database Scan Report: Filtering".

The View Data tab, described in "Viewing Data", which is a read-only version of the Cleansing Editor for data dictionary and other non-modifiable tables, shows convertible values in non-convertible data dictionary columns in dedicated colors configured on the Cleansing Editor tab in the Preferences dialog box, by default black on orange background.

Convertible data in the few columns that the DMU does convert, which are listed in "Data Dictionary Tables That Are Converted", is not reported as an issue.

For implementation reasons, the tables SYS.SOURCE$, SYS.ARGUMENT$, SYS.IDL_CHAR$, SYS.VIEW$, SYS.PROCEDUREINFO$, and SYS.PLSCOPE_IDENTIFIER$ are always scanned with "Rowids to Collect" parameter set to "All to Convert". See "Data Dictionary Tables That Are Converted" for more details.

5.3.2 Cleansing Data Dictionary Tables

Oracle neither supports altering structure of data dictionary tables nor updating their contents. Therefore, the DMU does not allow cleansing such tables.

You can set the assumed character set of columns of the data dictionary tables. However, the selected character set is considered only when displaying the columns on the View Data tab. The source character set used for conversion is always the assumed database character set.

5.3.2.1 Cleansing Data Length Issues

If data length issues are reported for data dictionary contents, then the only way to cleanse the issues is to replace the metadata with its shorter version.

The same length issues affect all character set migration methods, not only migration with the DMU. Therefore, you must cleanse the issues even if you plan to use alternative conversion methods, such as moving data with the Data Pump utilities.

Because the usual length limit for an identifier is only 30 bytes in Oracle databases earlier to Oracle Database 12c Release 2 (12.2), longer identifiers containing non-ASCII letters, especially those written in non-Latin scripts, may easily exceed the limit. For example, a Greek or Russian identifier longer than 15 characters will not fit into the 30 bytes limit after conversion from EL8MSWIN1253 or CL8MSWIN1251 to UTF8 or AL32UTF8. Chinese, Japanese, and Korean characters usually expand from 2 to 3 bytes, so identifiers longer than 10 characters become an issue.

The most common length issues are object names becoming longer than allowed after conversion. To shorten an identifier, rename the corresponding database object with an appropriate SQL statement or a PL/SQL package call. Although some objects, most must be dropped and re-created under the new name. When you drop an object, some dependent objects may also be dropped, in which case they must be re-created.

Note:

Starting with Oracle Database 12c Release 2 (12.2), the maximum limit for an identifier is 128 bytes. For the earlier Oracle Database versions, the maximum limit for an identifier is 30 bytes.

For example, you can rename a table, view, sequence, or private synonym using the SQL statement RENAME. You can rename a table column using the SQL statement ALTER TABLE RENAME COLUMN. However, you cannot rename a table cluster. You must drop it and re-create under another name. But before you drop a cluster, you must drop all tables stored in the cluster. Because there are many possible auxiliary objects created for tables, such as privileges, indexes, triggers, row-level security policies, outlines, and so on, you may end up re-creating many objects. In this situation, Data Pump utilities and the Metadata API may be helpful.

After renaming a database object, you must change all application code that references this object to use the new name. This includes PL/SQL and Java code in the database, but also all affected client applications.

Another type of metadata that often expands beyond maximum allowed length is free text comments for various database objects. Similarly, you must update the comments with a shorter version to cleanse any reported length issues. Most comments can be updated with an appropriate SQL statement or PL/SQL package call.

See Also:

-

"Identifying Metadata" to learn how to identify the correct statements to cleanse data dictionary issues

-

Oracle Database Utilities to learn more about the Data Pump utilities and the Metadata API

5.3.2.2 Cleansing Invalid Binary Representation Issues

A common reason for invalid binary representation of data is the pass-through scenario.

If SQL statements or PL/SQL calls are issued in a pass-through configuration, it is possible to create and use a database object that is named using characters that are not valid or do not have the expected meaning in the database character set. For example, a seemingly senseless table name in a WE8MSWIN1252 database might be interpreted as correct on a Japanese JA16SJIS client. Alternatively, a PL/SQL module might contain comments that are not legible in the database character set but that make sense when viewed in another character set.

Invalid binary representation of database object names rarely occurs. Restrictions on characters allowed in non-quoted identifiers make it visible from the very beginning. Invalid binary representation of object comments is more probable but also easier to fix.

To cleanse the invalid binary representation issues caused by the pass-through configuration, if they are common to the application data as well, set the assumed database character set property of the database to the real character set of the database contents. Otherwise, update the affected metadata with a version that does not have convertibility issues.

To fix invalid representation issues in PL/SQL and Java source code or in view definitions, use Metadata API to retrieve the DDL statements creating the objects, correct the problematic characters in the statement text and execute the statements to re-create the objects. If object names are not affected, use the CREATE OR REPLACE syntax to change the code without having to re-create related objects, such as privileges.

5.3.2.3 Identifying Metadata

A difficult step in the process of resolving data dictionary convertibility issues is to map the issues shown in a database scan report to the type of metadata that is stored in the affected tables and columns.

The data dictionary tables, presented in the DMU interface, are generally not documented. The documented way to view their contents is through data dictionary views such as DBA_TABLES, DBA_TAB_COLUMNS, DBA_RULES, DBA_SCHEDULER_JOBS, and many others.

To identify the type of metadata that has convertibility issues, try one of the methods below, in the presented order:

-

Look at the problematic character value on the View Data tab. The value itself may already tell you the metadata that it belongs to. For example, the value may be

This column keeps customer e-mail, which suggests that it is a column comment, or it may beHR_EMPLOYEE_V, which may correspond to a common convention to name views, thus showing the value is a view name. -

Search for the name of the table in which the issues are reported in SQL script files named

cat*.sqlandcd*.sqland located in therdbms/admin/subdirectory of your database Oracle home directory. By mapping the table and its columns to the right documented view, you can find out which metadata has the reported issues. -

Contact Oracle Support or post a question on the globalization forum on the Oracle Technology Network (OTN) website.

Once you identified the metadata that has the reported convertibility issues, refer to Oracle documentation to identify the right procedure to change this metadata.

See Also:

-

Oracle Database Reference to learn about the scripts that define data dictionary views

5.3.3 Data Dictionary Tables That Are Converted

The DMU converts some data in the data dictionary.

The DMU converts only the following data in the data dictionary:

-

CLOBcolumns – this is necessary only in a single-byte database -

Binary XML token manager tables, with names like

XDB.X$QN%andXDB.X$NM% -

PL/SQL source code (text of

CREATEPROCEDURE,CREATEFUNCTION,CREATEPACKAGE,CREATEPACKAGEBODY,CREATETYPEBODY,CREATETRIGGER, andCREATELIBRARY); type specifications (CREATETYPE) are not converted -

View definitions (text of

CREATEVIEW) -

The following columns:

-

SYS.SCHEDULER$_JOB.NLS_ENV– NLS environment for Database Scheduler jobs (DBMS_SCHEDULER) -

SYS.SCHEDULER$_PROGRAM.NLS_ENV- NLS environment for Database Scheduler job programs (DBMS_SCHEDULER) -

SYS.JOB$.NLS_ENV– NLS environment for legacy jobs (DBMS_JOB) -

CTXSYS.DR$INDEX_VALUE.IXV_VALUE- attribute values of Oracle Text policies -

CTXSYS.DR$STOPWORD.SPW_WORD- all stopwords in all stoplists of Oracle -

SYS.COM$.COMMENT$- user-specified comments -

SYS.ERROR$.TEXT- error text -

SYS.RULE$.R_COMMENT- rule comments -

SYS.TRIGGER$.WHENCLAUSE- text of when clause for triggers -

SYS.TRIGGER$.DEFINITION- definition text for triggers -

SYS.TRIGGER$.ACTION#- action to fire for triggers -

over 50 different columns in

SYS,SYSTEM, andCTXSYSschemas that contain user comments for various database objects

The PL/SQL source code and the view source text are kept in multiple tables. The DMU checks the following columns when processing the source code and view definitions:

-

SYS.VIEW$.TEXT– view definition text -

SYS.SOURCE$.SOURCE– PL/SQL and Java source code -

SYS.ARGUMENT$.PROCEDURE$– PL/SQL argument definitions: procedure name -

SYS.ARGUMENT$.ARGUMENT– PL/SQL argument definitions: argument name -

SYS.ARGUMENT.DEFAULT$– PL/SQL argument definitions: default value -

SYS.PROCEDUREINFO$.PROCEDURENAME– names of procedures and functions declared in packages -

SYS.IDL_CHAR$.PIECE– internal representation of PL/SQL -

SYS.PLSCOPE_IDENTIFIER$.SYMREP– internal representation of PL/SQL; this table did not exist before version 11.1 of Oracle Database

The DMU does not report convertible character data in the preceding tables and columns as a convertibility issue. Any convertible data in the remaining tables and columns of the data dictionary is flagged as a convertibility issue in scan reports and on the Migration Status tab. The database conversion step cannot be started before the flagged data is removed.

-

5.3.4 Data Dictionary Tables That Are Ignored

The DMU does not scan all data dictionary tables.i

The DMU does not scan the following tables:

-

SYS.HISTGRM$, which contains column histogram statistics -

Automatic Workload Repository object statistics history kept in tables with names such as

SYS.WRI$_OPTSTAT_OPRandSYS.WRI$_OPTSTAT_%_HISTORY -

DMU repository tables in the

SYSTEMschema -

CSSCANrepository tables in theCSMIGschema

The contents of these tables are also not considered when the DMU decides if the database conversion is allowed to start.

The SYS.HISTGRM$ table is not scanned because its EPVALUE column (storing end-point values) may contain binary data. As histograms and other table statistics depend on binary representation of data in character columns, you should anyway re-gather statistics for all converted tables in the database after migration. Collection of statistics refreshes the contents of the SYS.HISTGRM$ table and revalidates it in the new database character set. If you do not refresh the statistics, the optimizer may exhibit incorrect behavior.

Similarly, the historical object statistics kept in Automatic Workload Repository become stale after the migration because they also depend on binary representation of character data. The DMU does not migrate those statistics. You should purge them manually after migration by calling DBMS_STATS.PURGE_STATS(SYSTIMESTAMP).

See Also:

-

Oracle Database PL/SQL Packages and Types Reference for more information about the package

DBMS_STATSand the procedurePURGE_STATS -

Oracle Database Concepts for more information about optimizer statistics

The DMU and CSSCAN repository data becomes invalid after the database is migrated to a new database character set. Therefore, there is no point in migrating it along with the database. After the migration, you should drop the repositories and re-create them, if you still need them. If you re-create the DMU repository after migration, choose the validation mode – see "Validating Data as Unicode".

5.3.5 Handling Automatic Workload Repository Tables

The SYS schema contains a number of tables with names beginning with WRI$, WRH$%, and WRR$_, which comprise the Automatic Workload Repository (AWR).

In addition to historical object statistics, mentioned in "Data Dictionary Tables That Are Ignored", this repository stores snapshots of vital system statistics, such as those visible in various fixed views, for example, V$SYSSTAT and V$SQLAREA.

If non-ASCII characters are used in object names or in SQL statements, such as character literals or comments, they may get captured into the AWR tables. The DMU scan will report such characters as convertible data dictionary content, which prevents conversion of the database. To remove this data completely, re-create the Automatic Workload Repository by logging into SQL*Plus with SYSDBA privileges and running:

SQL> @?/rdbms/admin/catnoawr.sql

SQL> @?/rdbms/admin/catawr.sql

As the catawr.sql script is not present in Oracle Database versions 10.2.0.4 and earlier, Oracle recommends that you install the Oracle Database patch set 10.2.0.5 before purging AWR contents.

5.4 Working with Multilingual Columns

While analyzing contents of a column in the Cleansing Editor, you may discover that none of the assumed character sets set for the column makes all values in the column appear correctly at the same time. Instead, each value seems to be correct in one of the selected character sets.

This result indicates that the column contains a mixture of data in different character sets. You might also gather this information from analysis of data sources for your database.

Multiple character sets in a single column are possible in the pass-through scenario, if clients working in various character sets all store data in this column.

This release of the DMU does not contain any feature dedicated to cleansing this type of convertibility issue. The following procedure is recommended when you must deal with multiple character sets in a single CHAR or VARCHAR2 column:

To work with multiple character sets in a single CHAR or VARCHAR2 column:

Example 5-1 Multilingual Column Considerations

Assume your database contains the table CUSTOMERS, with columns CUSTOMER_NAME_ORIGINAL and CREATED_BY. The column CUSTOMER_NAME_ORIGINAL, defined as VARCHAR2(80 BYTE), contains the names of customers in their mother tongue in multiple character sets. The column CREATED_BY contains system IDs of employees who entered the customer data. You want to migrate the database to the character set AL32UTF8.

To solve the issue of multiple character sets in the CUSTOMER_NAME_ORIGINAL column start with creating a mapping table that maps employees' system IDs to client character sets of the employees' workstations. A table in your application that defines the system IDs may be helpful in locating the country in which the employee works and thus determining the character set of the client workstation that the employee uses. Further assume the created mapping table is named CREATED_BY_TO_CHARSET_MAPPING and has the columns CREATED_BY and CHARACTER_SET. The contents of such a table might resemble the following:

CREATED_BY CHARACTER_SET ---------- ------------- ... JSMITH WE8MSWIN1252 JKOWALSKI EE8MSWIN1250 SKUZNETSOV CL8MSWIN1251 WLI ZHS16GBK ...

Now, set the Exclude from Conversion property of the CUSTOMER_NAME_ORIGINAL column to Yes to prevent data from being corrupted while the rest of the database is converted.

Check for possible length issues in CUSTOMER_NAME_ORIGINAL by running the following SQL:

SELECT c.ROWID

FROM customers c, created_by_to_charset_mapping csm

WHERE VSIZE(CONVERT(c.customer_name_original,

'AL32UTF8',

csm.character_set)) > 80

AND c.created_by = csm.created_by

If no rows are returned, there are no length issues. Otherwise, the returned rowids will help you locate the problematic values.

When the database is ready, convert it to AL32UTF8. Change the application configuration as required for the new database character set.

After the database conversion, run the following update statement:

UPDATE customers c

SET c.customer_name_original =

(SELECT CONVERT(c.customer_name_original,

'AL32UTF8',

csm.character_set)

FROM created_by_to_charset_mapping csm

WHERE csm.created_by = c.created_by)

This will convert the individual values of the column according to their assumed character set. Let employees who entered the customer data or who speak the relevant languages verify the post-conversion values for correctness.

5.5 Advanced Convertibility Issues

Some less frequently encountered convertibility issues are not handled automatically by the DMU. These issues might require additional scanning and cleansing steps outside of the tool.

5.5.1 Convertibility Issues: Uniqueness Validation

After database contents have been converted to Unicode, it is possible that rows in a table no longer satisfy a unique or primary key constraint.

Specifically, the following situations can occur:

-

A unique or primary key column has data with length issues, that is, some values expand in conversion beyond the column or data type length limit, and you set the property "Allow Conversion of Data with Issues" of this column to Yes. In such a case, the DMU will automatically truncate column values during the conversion step so that they fit into the existing length constraint. However, a truncated value may become identical with another value already in the column from which it differed only be the truncated suffix.

-

In various Oracle character sets, there are multiple character codes that map to a single Unicode code point. This is usually a result of:

-

an attempt to provide compatibility mapping for historical changes to a character set definition or to its interpretation by different vendors

-

an attempt to provide simplified mapping for codes that cannot be exactly mapped to Unicode, for example, because the actual mapping consists of a sequence of Unicode codes, and this is not supported by Oracle's conversion architecture

-

Unicode Standard unification rules, which cause certain groups of Han (Chinese) characters to get a single code point assigned, even though characters in such a group may be separately encoded in legacy East Asian character sets

If two character values in a single column differ only by characters that have the same mapping to Unicode in the assumed character set of the column, they become identical after conversion to Unicode.

The following character sets supported by the DMU have multiple codes that map to the same Unicode code point:

-

BG8PC437S

-

IW8MACHEBREW

-

IW8MACHEBREWS

-

JA16EUCTILDE

-

JA16MACSJIS

-

JA16SJIS

-

JA16SJISTILDE

-

JA16SJISYEN

-

JA16VMS

-

KO16KSCCS

-

LA8ISO6937

-

ZHS16MACCGB231280

-

ZHT16BIG5

-

ZHT16CCDC

-

ZHT16HKSCS

-

ZHT16HKSCS31

-

ZHT16MSWIN950

You can use the Oracle Locale Builder utility to check which character codes are affected.

See Also:

Oracle Database Globalization Support Guide for more information about the Oracle Locale Builder utility

If you suspect that a unique or primary key constraint might be affected by one of the above two issues, you can verify if you indeed have a problem by attempting to create an appropriate unique functional index. For example, if you have a unique or primary key constraint on character columns

tab1.col1andtab1.col2and a numeric columntab1.col3, attempt to create the following index:CREATE UNIQUE INDEX i_test ON tab1(SYS_OP_CSCONV(col1,'AL32UTF8','<assumed character set of col1>'), SYS_OP_CSCONV(col2,'AL32UTF8','<assumed character set of col2>'), col3) TABLESPACE ...Substitute

'UTF8'for'AL32UTF8', if this is the actual target character set. The third parameter toSYS_OP_CSCONVmay be omitted, if the assumed character set of a column is the same as the database character set (default). If the statement fails reporting "ORA-01452: cannot CREATE UNIQUE INDEX; duplicate keys found", there is a uniqueness problem in the column.The

CREATEINDEXstatement above assumes that you do not plan any scheduled lengthening of the columns and the columns are not defined using character length semantics. If you plan to extendcol1to n1 bytes andcol2to n2 bytes, use the following statement:CREATE UNIQUE INDEX i_test ON tab1(SYS_OP_CSCONV(SUBSTRB(RPAD(col1,n1,' '),1,n1), 'AL32UTF8','<assumed character set of col1>'), SYS_OP_CSCONV(SUBSTRB(RPAD(col2,n2,' '),1,n2), 'AL32UTF8','<assumed character set of col2>'), col3) TABLESPACE ...;Substitute

'UTF8'for'AL32UTF8', if the target character set is UTF8.If

col1andcol2are defined using character length semantics and their character lengths are, respectively, n1 and n2, use the following statement:CREATE UNIQUE INDEX i_test ON tab1(SYS_OP_CSCONV(SUBSTRB(RPAD(col1,4*n1,' '),1,4*n1), 'AL32UTF8','<assumed character set of col1>'), SYS_OP_CSCONV(SUBSTRB(RPAD(col2,4*n2,' '),1,4*n2), 'AL32UTF8','<assumed character set of col2>'), col3) TABLESPACE ...;Substitute

'UTF8'for'AL32UTF8'and 3* for 4*, if the target character set is UTF8. -

5.5.2 Convertibility Issues: Index Size

The maximum size of an index key in an Oracle database index cannot exceed the data block size in the tablespace of the index minus around 25% overhead.

The maximum is equal to the sum of maximum byte lengths of all key columns plus rowid length plus the number of length bytes. If the maximum byte length of a character column belonging to an index key increases during database conversion, either because:

-

it is being recalculated from the column character length for the new database character set

or

-

a lengthening cleansing action is defined on the column

The maximum byte length may cause the index key to exceed its allowed maximum length.

In this release, the DMU does not proactively verify index key lengths that change in the conversion step. Therefore, "ORA-01450: maximum key length (maximum) exceeded" or "ORA-01404: ALTER COLUMN will make an index too large" may be reported during conversion from an ALTER TABLE, CREATE INDEX, or ALTER DATABASE CHARACTER SET statement.

Before attempting conversion, you should review all indexes defined on VARCHAR2 and CHAR columns that have a lengthening cleansing actions scheduled or that use character length semantics to verify that they are not affected by this issue. The easiest approach is to test the migration on a copy of the original database. The copy should include the DMU repository with all planned cleansing actions. The actual application data does not affect the test so you can truncate all large convertible tables to shorten the test time.

5.5.3 Convertibility Issues: Partition Range Integrity

The DMU does not support converting a database in which any partition bounds require conversion. Therefore, you may want to migrate such a database using Data Pump utilities.

When preparing for the migration process, consider the following potential issues with partition integrity. Oracle Database distributes table rows among range partitions by comparing values of the partitioning key columns with partition bounds. This comparison uses binary sort order, that is, the byte representations of values, as stored on disk, are compared byte by byte. With character columns, the representation depends on the database character set and may change in conversion to Unicode. If the binary representation of partition bounds changes, the partitions might get reordered and the distribution of rows among partitions might change significantly.

You should analyze all range partitioned tables with convertible data in partitioning columns. If required, adjust their partition definitions, so that rows are still distributed according to your expectations after the database migration to Unicode.

Partition range bounds and partition list values may also suffer from the uniqueness issue described in "Convertibility Issues: Uniqueness Validation". If such an issue is encountered for a partitioned table, the table cannot be imported successfully without partition definitions being adjusted accordingly.

5.5.4 Convertibility Issues: Objects in the Recycle Bin

The DMU scans character columns in tables that have been dropped and are in the recycle bin like normal tables.

Scan results are included in the database scan report. Unlike the normal application tables, the dropped tables in recycle bin are not allowed to contain data that requires conversion. You cannot start the conversion step until tables with convertible data are removed from the recycle bin. No cleansing actions are supported on dropped tables.

5.5.5 Convertibility Issues: PL/SQL Local Identifiers Greater Than 30 Bytes

The DMU does not support converting names of PL/SQL stored modules, that is, stored procedures, stored functions and packages.

However, the DMU does automatically convert PL/SQL source code and view definitions including non-ASCII characters in:

-

names of procedures, functions, and types defined in packages

-

local identifiers, such as variable names and type names

-

character literals

-

comments

In the conversion step, the DMU fetches the relevant CREATE OR REPLACE PACKAGE|PACKAGE BODY|PROCEDURE|FUNCTION|TYPE|TYPE BODY|VIEW statements from the data dictionary, converts them to the target character set and, after the database character set has been changed to UTF8 or AL32UTF8, executes them.

The DMU does not check if any converted identifier exceeds its length constraint (usually 30 bytes for Oracle databases earlier to Oracle Database 12c Release 2 (12.2)). If an identifier becomes longer than allowed after conversion to Unicode, the resulting PL/SQL module will be created but its compilation will fail, usually reporting "PLS-00114: identifier '<identifier>' too long". The status of the module will be "invalid".

You should verify the status of all PL/SQL stored modules after the database conversion. If any module is in the invalid state because of an identifier being too long, you must manually shorten the identifier – in its definition and in all places where it is referenced.

Note:

Starting with Oracle Database 12c Release 2 (12.2), the maximum limit for an identifier is 128 bytes. For the earlier Oracle Database versions, the maximum limit for an identifier is 30 bytes.

5.6 Adapting Applications for Unicode Migration

The migration of a database to Unicode always impacts applications connecting to this database.

The scale of the impact depends on multiple factors, such as the following:

-

Do you want the applications to process new languages, which the database will now be able to store?

Support for new languages usually entails the need to adapt applications to process Unicode data. Applications that have been programmed to process only single-byte character sets will need significant changes to be able to process the full Unicode character repertoire. On the other hand, applications that will work with the same limited number of characters as before may require only minimal changes, taking advantage of Oracle client/server character set conversion.

-

Will languages with complex scripts require GUI support from the applications?

Complex script-rendering capabilities are necessary to display and accept text written in complex scripts, such as Arabic or Indian. Complex rendering includes, among other requirements, combining adjacent characters, where some fragments read from right-to-left, while some are left-to-right, as well as changing character shape depending on the character's position in a word.

-

What technologies are the applications built with?

Depending on the development framework in which the applications have been developed, modifying them to accept new languages may be relatively easy or very difficult. Fortunately, most modern environments, such as Java or Microsoft Windows, offer built-in or installable support for complex script rendering and Unicode processing. Applications can take advantage of this support, which simplifies the adaptation process, but does not eliminate it.

-

Are the applications developed in-house or by a third-party vendor?

Obviously, you can adapt only those applications of which you have the source code. Applications developed by vendors must be adapted by those vendors. If this turns out to be impossible, you may have to replace your applications with another software solution.

In addition to these considerations, requirements to make migration-related changes to applications can result from:

-

Table structure changes coming from cleansing actions, columns being lengthened or migrated to another data type.

-

Changed characteristics of the database character set, such as higher maximum byte width of a character.

-

Additional requirements of the new languages to be processed. This might include new sorting rules, new date or number formatting rules, non-Gregorian calendar support, and so on.

-

Changed binary sort order of strings in Unicode compared to the old legacy character set.

Details of adapting applications for new languages exceed the scope of this guide, but the following sections describe the minimal changes that may be required to continue running existing applications with a migrated database.

5.6.1 Running Legacy Applications Unchanged

You might want to run some of your existing applications unchanged after migration of their back-end database to Unicode.

For example, you might not have access to the source code of the applications to adapt them for Unicode or modifying the applications may be economically unjustified.

The main requirement for running an application unchanged after migration of its back-end database to Unicode is that all Unicode characters that the application may encounter also exist in a legacy character set for which the application was originally written. That is, if the application was written, for example, to process only the ISO 8859-1 standard character set (WE8ISO8859P1), then only the corresponding Unicode characters U+0000 - U+007F and U+00A0 - U+00FF are allowed in the subset of database contents accessed by the application.

If this requirement is fulfilled, the client character set for the application, as specified in the NLS_LANG environment setting, shall be set to the above legacy character set (in practice, it usually means that NLS_LANG remains the sam before and after the migration) and the client/server communication protocol will take care of converting character data between the legacy encoding used by the application and the Unicode encoding used by the database.

If the only characters processed by an application are standard US7ASCII characters, the application does not require any modification at all, because UTF8 and AL32UTF8 binary codes of all ASCII characters are identical to US7ASCII. Therefore, the processed bytes do not differ before and after the database character set migration.

Binary codes of characters outside of the ASCII range - for example, accented Latin letters - remain the same on the client side but change on the database side after the database character set change. The most important difference is usually the number of bytes in the character codes. While accented Latin letters, and also Cyrillic or Greek letter, occupy one byte each in single-byte legacy character sets, such as WE8MSWIN1252 or CL8MSWIN1251, they occupy two bytes in UTF8 and AL32UTF8. Certain special characters, such as the Euro currency symbol, occupy three bytes. Therefore, any affected columns, PL/SQL variables and user-defined data type attributes on the database side whose lengths are expressed in bytes need to be adjusted to accommodate additional bytes that are added in the client/server character set conversion when data comes from the application to the database.

If an application does not rely on the database to control data lengths, controlling the length limits itself, and if all data processed by the application is entered into the database only through the application, byte lengths of affected columns, PL/SQL variables, and user-defined data types attributes may be adjusted by increasing them appropriately, usually by multiplying by three. If an application relies on the database to control data lengths, by handling the returned errors (such as ORA-12899), or if data for the application may come from external sources, the recommended way of adjusting lengths in SQL and PL/SQL is to keep the original absolute length number and to change the length semantics from bytes to characters. That is, a VARCHAR2(10 [BYTE]) column or PL/SQL variable should become a VARCHAR2(10 CHAR) column or variable.

As the above length issues obviously affect the existing database contents as well, the column and attribute lengths usually have to be increased already as part of the cleansing step of the database character set migration process. The PL/SQL variables must be adjusted independently, unless their data type is expressed using the %TYPE attribute.

A significant problem exists because of absolute length limits of basic character data types. A VARCHAR2 value stored in a database cannot exceed 4000 bytes and a CHAR value cannot exceed 200 types. Therefore, you can expand VARCHAR2 columns and attributes only up to 1333 (in UTF8) or 1000 (in AL32UTF8) characters to have a guarantee that they can really store that number of random character codes without hitting the data type limit. If longer values are already commonly processed by an application, it may be impossible to continue running the application without modifying it, for example, to use the CLOB data type instead of VARCHAR2.

Independently of the above length issues, some applications may rely on a specific binary sorting order of queried character values coming from the database. Characters of the US7ASCII and WE8ISO8859P1 character sets keep the same order in UTF8 and AL32UTF8 but characters from other character sets do not. You may need to create custom linguistic definitions using the Oracle Local Builder utility to simulate the binary order of a legacy character set in an UTF8 or AL32UTF8 database. In general, Oracle recommends that you modify the application to not rely on a specific binary sort order instead of creating custom linguistic definitions, which increase complexity and cost of database administration and may impact query performance.

In addition to these changes, the database side of the application code might require further changes required by the new Unicode character encoding, as described in the next section.

See Also:

Oracle Database Globalization Support Guide for more information about creating custom linguistic definitions

5.6.2 Changes to SQL and PL/SQL Code

Because SQL and PL/SQL code runs inside the database, its processing character set always changes after a database migration to Unicode. The processing character set is the one in which the processed data is encoded, which in this case is the database character set.

This is different from the client-side application code, which can retain its processing character set after migration if NLS_LANG setting is left unchanged.

Most PL/SQL statements, expressions, functions, and procedures work independently of the database character set and require no adaptation. However, there are still of number of functions that provide different results for different processing character sets. SQL and PL/SQL code containing the following functions must be reviewed and modified as required to account for the migration to Unicode:

-

Functions depending on specific binary character codes:

CHR,ASCII, andDUMP -

Functions depending on character code widths in bytes:

LENGTHB,VSIZE -

Functions working with byte offsets:

INSTRB,SUBSTRB -

Character set conversion functions:

CONVERT -

String-to-binary casting:

UTL_RAW.CAST_TO_VARCHAR2,UTL_RAW.CAST_TO_RAW,UTL_I18N.STRING_TO_RAW,UTL_I18N.RAW_TO_CHAR

Also, the standard package UTL_FILE allows character data to be stored in external files in the database character set. Consumers of those files may need to be adapted to deal with the new file encoding.

The SQL and PL/SQL expressions must be reviewed in view definitions, PL/SQL stored modules, user-defined data type methods, triggers, check constraints, but also event rule conditions (see DBMS_RULE_ADM), row-level security (RLS/VPD) policies (see DBMS_RLS), fine-grained auditing policies (see DBMS_FGA) and any other auxiliary database objects definitions that may reference SQL or PL/SQL expressions.

Caution:

While uncommon, row-level security or fine-grained auditing policies may be defined using SQL expressions that are sensitive to character encoding. Ensure that you review all such policies to verify that protection of sensitive data is not compromised because of the database character set change.

5.7 Exporting and Importing Migration Profile

Before performing Unicode migration on a production database instance, you may want to perform multiple iterations of trial migrations on a test database instance cloned from your production database instance.

Once the trial migration is successful, you can then apply the same procedure and related settings for migrating the production database instance. DMU provides the functionality of exporting and importing of migration profiles, so as to enable the reuse of database migration settings across multiple trial migrations and for the production database migration.

Migration profile includes general information about the database, user-specified migration settings for scanning and conversion operations, and any scheduled cleansing actions.

The following migration profile information can be exported and imported using DMU:

-

Database General Information

-

Current Database Character Set

-

Assumed Database Character Set

-

Target Database Character Set

-

-

Database Scan Parameters

-

Number of Scanning Processes

-

Scan Buffer Size

-

-

Database Conversion Parameters

-

Degree of Parallelism

-

Number of Converting Processes

-

Enable Row Movement for Partitioned Tables

-

Consider CTAS with Row Movement Disabled

-

Consider CTAS with User-named LOB Segments

-

-

Database Post Conversion Settings

-

Handling of Read-Only Materialized Views

-

Handling of Updatable Materialized Views

-

Error Handling for Refreshing Materialized Views

-

Handling of Dropped Domain Indexes

-

Error Handling for Recreating Domain Indexes

-

Error Handling for Rebuilding Other Indexes

-

-

Table Scan Parameters (Set by User)

-

Rowids to Collect

-

-

Table Conversion Parameters (set by user)

-

User-preferred Conversion Method

-

Target Tablespace

-

Preserve Position of LONG Column

-

-

Column Scheduled Cleansing Actions (set by user)

-

Define assumed column character set (example, WE8ISO8859P15)

-

Change column semantics (example, BYTE to CHAR)

-

Modify column size (example, VARCHAR2(10) to VARCHAR2(20))

-

Migrate data type (example, VARCHAR2(4000) to CLOB)

-

Exclude from Conversion

-

Allow Conversion of Data with Issues

-

-

Attribute Scheduled Cleansing Actions (set by user)

-

Modify column size (example, VARCHAR2(10) to VARCHAR2(20))

-

Change column semantics

-

5.8 Near-Zero Downtime Database Migration to Unicode

Oracle provides a solution to migrate Oracle Database to Unicode in near-zero downtime.

Oracle provides the following software tools:

-

DMU for doing database scanning, cleansing, and character set conversion.

-

Oracle Recovery Manager (RMAN) for creating the target database by cloning the source database using initial data load.

-

Oracle GoldenGate for replicating incremental changes on the source database to the target Unicode database.

Note:

To implement the near-zero downtime database migration for Unicode, you must have the following software versions:

-

Oracle Database version 11g or later

-

Oracle GoldenGate version 12.1.2.1.0 or later

-

Oracle DMU version 2.1 or later

At a high-level, the near-zero downtime database migration procedure involves the following steps:

-

Preparing the source database to be migrated by scanning the data and cleansing all the reported convertibility issues in it using DMU.

-

Generating the parameter files and script files required by Oracle GoldenGate based on any scheduled data cleansing actions performed on the source database using DMU.

-

Setting up Oracle GoldenGate to capture incremental data changes in the source database.

-

Setting up a target database by creating a clone of the source database.

-

Converting the target database to Unicode using DMU.

-

Replicating the incremental data changes in the source database to the target database using Oracle GoldenGate.

-

Switching over from the source non-Unicode database to the target Unicode database with the minimal system interruption, once the target database is ready and is fully synced-up with the source database.

In order to correctly replicate the incremental data changes in the source non-Unicode database to the target Unicode database, Oracle GoldenGate requires certain parameter files containing the source database information about schemas, tables, columns, and any scheduled data cleansing actions that were performed using DMU to address the convertibility issues to Unicode, such as, assumed character sets, scheduled column modifications, and so on. As DMU repository already contains this information, DMU provides the functionality of exporting this information in the form of the following parameter files and script files (called as Obey scripts):

-

Manager parameter file (mgr.prm): This file is used by the GoldenGate Manager process mgr, which is responsible for managing other GoldenGate processes.

-

Defgen parameter file (defgen.prm): This file is used by the GoldenGate utility defgen, which creates a file with data definitions for source or target database tables. The data definition information is required when the source and target database tables have different definitions, such as, when DMU scheduled cleansing actions are defined to address convertibility issues to Unicode.

-

Extract parameter file (ext.prm): This file is used by the GoldenGate process Extract, which captures the transactional data from the source database.

-

Replicat parameter file (rep.prm): This file is used by the GoldenGate process Replicat, which reads the data extracted by the Extract process from the source database and populates this data in the target database.

-

Obey start scripts files (start_extract and start_replicat): These files contain the Obey scripts for starting the GoldenGate processes - Extract and Replicat.

-

Obey stop scripts files (stop_extract and stop_replicat): These files contain the Obey scripts for stopping the GoldenGate processes - Extract and Replicat.

-

Defgen script files (defgen.bat and defgen.sh): These files contain the script for running the GoldenGate process defgen for Windows and UNIX platforms.

Note:

-

If Extract and Replicat processes are running on different host systems, then DMU generates two sets of above parameter files - one set of files for the Extract process and another set of files for the Replicat process.

-

For replicating pluggable databases (PDBs) in Oracle Database 12c, the Oracle GoldenGate administrator needs to have additional privileges. Run the following command to provide additional privileges to the Oracle GoldenGate administrator:

SQL> EXEC dbms_goldengate_auth.grant_admin_privilege( 'C##OGG_OWNER', CONTAINER=>'ALL');

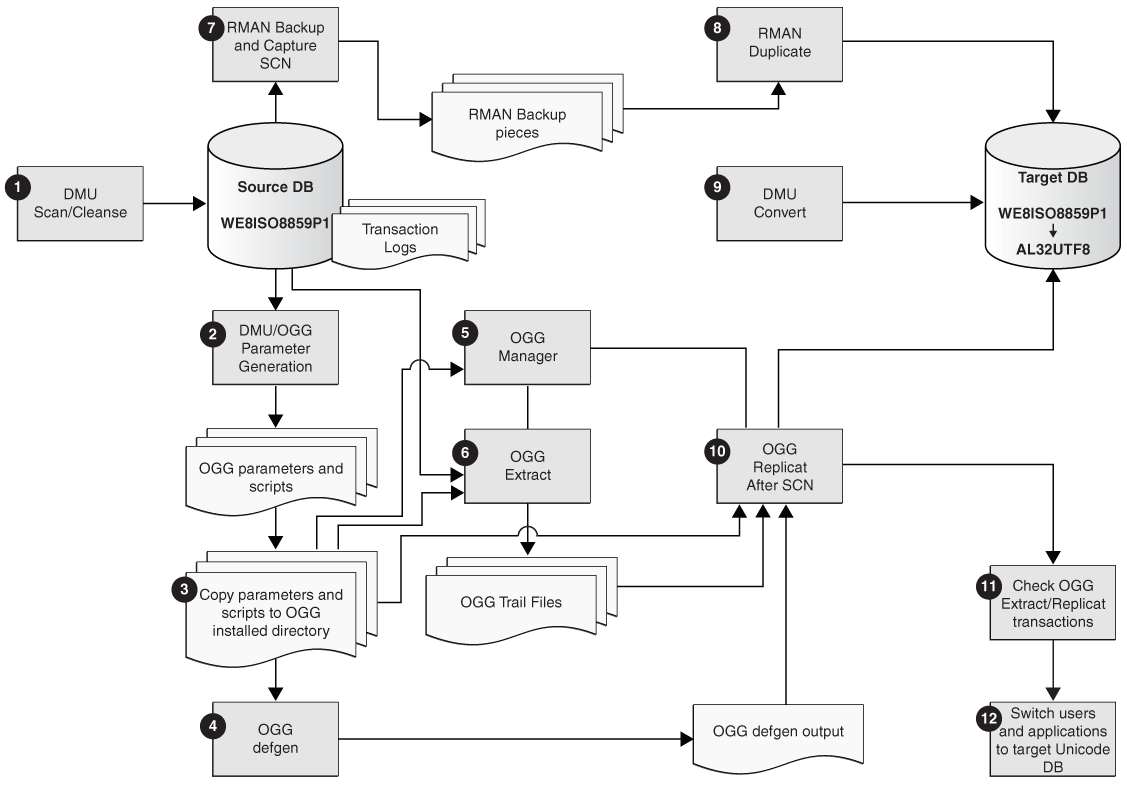

The following figure shows the detailed steps for migrating Oracle Database to Unicode in a near-zero downtime. In this diagram, OGG stands for Oracle GoldenGate.

Figure 5-1 Steps for Migrating Oracle Database to Unicode in Near-Zero Downtime

Description of "Figure 5-1 Steps for Migrating Oracle Database to Unicode in Near-Zero Downtime"

-

Use DMU to scan and cleanse the source database until all the reported convertibility issues are resolved.

-

Use DMU for generating parameter files and script files required by Oracle GoldenGate. The following are the steps to generate Oracle GoldenGate parameter files and script files from DMU:

-

In DMU, select Generate GoldenGate Parameters from the Migration menu or the context menu. The Welcome page of Generate GoldenGate Parameters Wizard is displayed.

-

Click Next. The Extract Parameters page is displayed.

-

Enter the following information on the Extract Parameters page that is required for the GoldenGate Extract process:

-

Userid alias and domain name of the database user stored in the Oracle GoldenGate credential store.

-

Name of the GoldenGate database schema.

-

-

Click Next. The Replicat Parameters page is displayed.

-

Enter the following information on the Replicat Parameters page that is required for the GoldenGate Replicat process:

-

Userid alias and domain name of the database user stored in the Oracle GoldenGate credential store. You can either specify to use the same user information as that of the Extract process or enter different user information.

-

Host name where the Replicat process will be running. You can either specify to use the same host name as that of the Extract process or enter different host name.

-

Oracle SID and Oracle Home of the target database.

-

-

Click Next. The Output Files page is displayed.

-

On the Output Files page, enter the absolute directory path for storing the output parameter files. You can either use the default directory path provided by DMU or specify any other directory path.

-

Click Finish. A progress bar is displayed when the parameter files and script files are being generated.

-

If the parameter files and script files are generated successfully, the Generation Complete dialog box containing the information about all the files that are generated is displayed.

If the parameter files generation process finishes with warnings, then those warnings are displayed on the Generation Complete dialog box.

If IOException occurs during the parameter files generation process, then the Error dialog box is displayed with the IOException details, and the parameter files generation process is terminated.

-

-

Copy the DMU generated parameter files and script files that were generated in step 2 to the Oracle GoldenGate installed directory.

The following table contains the information about the source directory and the destination directory for copying the parameter files and script files, when the Extract and Replicat processes are running on the same system. This document refers to this configuration as local Replicat configuration.

In this table, DMU_DIR is the directory on the DMU system where parameter files and script files are stored during execution of step 2. OGG_HOME is the Oracle GoldenGate home directory on the system where Oracle GoldenGate software is installed.

Table 5-1 Source directory and the destination directory for copying parameter files and script files from DMU system to GoldenGate system for local Replicat process

Parameter/Script Source Directory Destination Directory Manager parameter

DMU_DIR/dirprm/mgr.prmOGG_HOME/dirprm/mgr.prmExtract parameter

DMU_DIR/dirprm/ext.prmOGG_HOME/dirprm/ext.prmReplicat parameter

DMU_DIR/dirprm/rep.prmOGG_HOME/dirprm/rep.prmDefgen parameter

DMU_DIR/dirprm/defgen.prmOGG_HOME/dirprm/defgen.prmDefgen batch file

DMU_DIR/defgen.batOGG_HOME/defgen.batDefgen shell script

DMU_DIR/defgen.shOGG_HOME/defgen.shObey start Extract script

DMU_DIR/start_extractOGG_HOME/start_extractObey start Replicat script

DMU_DIR/start_replicatOGG_HOME/start_replicatObey stop Extract script

DMU_DIR/stop_extractOGG_HOME/stop_extractObey stop Replicat script

DMU_DIR/stop_replicatOGG_HOME/stop_replicatThe following table contains the information about the source directory and the destination directory for copying the parameter files and script files when the Extract and Replicat processes are running on different systems. This document refers to this configuration as remote Replicat configuration.

In this table, DMU_DIR is the directory on the DMU system where parameter files and script files are stored during step 2. OGG_HOME_EXT is the Oracle GoldenGate home directory on the Extract process host system. OGG_HOME_REP is the Oracle GoldenGate home directory on the Replicat process host system.

Table 5-2 Source directory and the destination directory for copying parameter files and script files from DMU system to GoldenGate system for remote Replicat process

Parameter/Script Source Directory Destination Directory Manager parameter (Extract)

DMU_DIR/extract/dirprm/mgr.prmOGG_HOME_EXT/dirprm/mgr.prmManager parameter (Replicat)

DMU_DIR/replicat/dirprm/mgr.prmOGG_HOME_REP/dirprm/mgr.prmExtract parameter

DMU_DIR/extract/dirprm/ext.prmOGG_HOME_EXT/dirprm/ext.prmReplicat parameter

DMU_DIR/ replicat/dirprm/rep.prmOGG_HOME_REP/dirprm/rep.prmDefgen parameter

DMU_DIR/extract/dirprm/defgen.prmOGG_HOME_EXT/dirprm/defgen.prmDefgen batch file

DMU_DIR/extract/defgen.batOGG_HOME_EXT/defgen.batDefgen shell script

DMU_DIR/extract/defgen.shOGG_HOME_EXT/defgen.shObey start Extract script

DMU_DIR/extract/start_extractOGG_HOME_EXT/start_extractObey stop Extract script

DMU_DIR/extract/stop_extractOGG_HOME_EXT/stop_extractObey start Replicat script

DMU_DIR/replicat/start_replicatOGG_HOME_REP/start_replicatObey stop Replicat script

DMU_DIR/replicat/stop_replicatOGG_HOME_REP/stop_replicat -

For the local Replicat configuration, on the Oracle GoldenGate system, execute the defgen script present in the OGG_HOME directory to generate source table definitions which were scheduled for cleansing by DMU. The defgen script generates the output file defgen.def in the OGG_HOME/dirdef directory.

For the remote Replicat configuration, on the Oracle GoldenGate system, execute the defgen script present in the OGG_HOME_EXT directory on the Extract process host system to generate source table definitions which were scheduled for cleansing by DMU. The defgen script generates the output file defgen.def in the OGG_HOME_EXT/dirdef directory. Copy the output file defgen.def to the OGG_HOME_REP/dirdef directory on the Replicat process host system.

-

For the local Replicat configuration, start the Oracle GoldenGate Manager process in the Oracle GoldenGate Command Interface (GGSCI):

GGSCI>start managerFor the remote Replicat configuration, start the Oracle GoldenGate Manager processes on both, the Extract and the Replicat host systems.

-

For local and remote Replicat configurations, start the Oracle GoldenGate Extract process in GGSCI:

GGSCI>obey start_extract -

Use RMAN to take backup of the source database and capture the checkpoint SCN value to be used by the Oracle GoldenGate Replicat process.

To take backup of the database, run the command:

RMAN>backup database plus archivelog;To capture the checkpoint System Change Number (SCN) value, run the command:

RMAN>list backup;or run the command:

RMAN>restore database preview summary; -

Use RMAN to create the target database by cloning the source database.

-

Use DMU to convert the target database character set to Unicode, for example from WE8ISO8859P1 to AL32UTF8. You should be able to start the DMU conversion on the target database immediately, as all the convertibility issues on the source database have been cleansed in Step 1 and the corresponding migration-related metadata information in the DMU repository has been cloned from the source database.

Note:

Restart the target database after the DMU conversion is complete. -

Edit start_replicat Obey script by replacing the SCN value in it with the SCN value captured in step 7 and start the Oracle GoldenGate Replicat process.

In the following start_replicat Obey script sample, the SCN value of 123456 is entered:

START REPLICAT rep, AFTERCSN 123456For the local Replicat configuration, start the Oracle GoldenGate Replicat process in the Oracle GoldenGate Command Interface (GGSCI):

GGSCI>obey start_replicatFor the remote Replicat configuration, start the Oracle GoldenGate Replicat process on the Replicat host system.

-

Ensure that all the transactions have been extracted and replicated by Oracle GoldenGate:

-

Check that there are no active transactions in the source and the target databases:

SQL> select count(*) from v$transaction; COUNT(*) -------- 0 -

Query the current SCN of the source database:

SQL> select current_scn from v$database; CURRENT_SCN ----------- 1439706 -

Query the status of the Extract process and verify that it has read past the last SCN value (for example, the SCN value of 1439706 mentioned in the above step):

GGSCI> send extract ext, status Sending STATUS request to EXTRACT EXT ... EXTRACT EXT (PID 6312) Current status: Recovery complete: Processing data Current read position: Redo thread #: 1 Sequence #: 161 RBA: 10483752 Timestamp: 2015-01-09 13:56:14.000000 SCN: 0.1439718 Current write position: Sequence #: 0 RBA: 1534 Timestamp: 2015-01-09 13:56:13.756998 Extract Trail: ./dirdat/dm -

Query the status of the Replicat process and verify that it shows At EOF status:

GGSCI> send replicat rep, status Sending STATUS request to REPLICAT REP ... Current status: At EOF Sequence #: 0 RBA: 1534 0 records in current transaction

-

-

Switch users and applications to the target Unicode database.

5.9 Repairing Database Character Set Metadata

You database may be in what is commonly called a pass-through configuration. The client character set may be defined, usually through the NLS_LANG client setting, to be equal to the database character set. Consequently, the character data in your database could differ from the declared database character set.

In this scenario, the recommended solution is to migrate your database to Unicode by using the DMU assumed database character set feature to indicate the actual character set for the data. In case migrating to Unicode is not immediately feasible due to business or technical constraints, it would be desirable to at least correct the database character set declaration to match with the database contents.

With Database Migration Assistant for Unicode, you can repair the database character set metadata in such cases using the CSREPAIR script. The CSREPAIR script works in conjunction with the DMU client and accesses the DMU repository. It can be used to change the database character set declaration to the real character set of the data only after the DMU has performed a full database scan by setting the Assumed Database Character Set property to the target character set and no invalid representation issues have been reported, which verifies that all existing data in the database is defined according to the assumed database character set. Note that CSREPAIR only changes the database character set declaration in the data dictionary metadata and does not convert any database data.

You can find the CSREPAIR script under the admin subdirectory of the DMU installation. The requirements when using the CSREPAIR script are: