C.4 Multi-Master Cluster Issues

Review these troubleshooting tips for common multi-master cluster errors when working with Oracle Key Vault.

- Heartbeat Lag or High Replication Lag in Multi-Master Cluster Environment

Heartbeat lag or replication lag is very high or undetermined in a multi-master cluster environment.

- Cluster Node Pairing Failure

Cluster node pairing fails in a multi-master cluster environment.

- Adding a Node to Cluster Fails with Invalid Certificate or Certificate Expired Error

When adding a node to the cluster, a certificate expired or invalid certificate error is displayed.

- How to Diagnose Oracle Key Vault 18c Cluster Issues

Based on the issue, use the paths in this topic to troubleshoot the Oracle Key Vault cluster issues.


C.4.1 Heartbeat Lag or High Replication Lag in Multi-Master Cluster Environment

Heartbeat lag or replication lag is very high or undetermined in a multi-master cluster environment.

Example

The Cluster Monitoring page on the Oracle Key Vault management console shows a Heartbeat Lag or Replication Lag that is higher than 120 seconds and increasing consistently.

Probable Cause 1

This issue may occur because of network connectivity failures, because the required ports are not open between cluster nodes, or because of intermittent network performance issues.

Solution

- On each cluster node, log in to the Oracle Key Vault management console as a System Administrator and navigate to the Cluster Monitoring page.

- Check the heartbeat lag and replication lags.

- If the heartbeat lag or replication lag is high, or the Cluster Services Status is down (a red down arrow), click Restart Cluster Services to restart the cluster services. Wait a few minutes and refresh the page.

- If the lag is still high, log in to each cluster node through SSH as the support user, switch user (`su`) to `root`, and test connectivity to the problematic nodes on the required ports.

    curl -v telnet://other-node-ip-address:7093
    curl -v telnet://other-node-ip-address:7443

- If connectivity fails, then resolve the network connection issues so that the preceding commands succeed.

- Wait a few minutes for the network connectivity to be restored.

- Check if the issue is resolved.
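The port checks in the steps above can be repeated across several nodes with a small helper. The following is a minimal sketch, not an Oracle-provided tool: the function names `probe` and `check_nodes` are hypothetical, and it swaps the `curl -v telnet://` command for a Bash `/dev/tcp` connection attempt wrapped in `timeout` so that each check returns promptly. It assumes that `bash` and the coreutils `timeout` command are available on the node.

```shell
# Ports taken from the curl commands in the preceding steps.
PORTS="7093 7443"

# probe HOST PORT: succeeds if a TCP connection opens within 5 seconds.
# Assumption: uses Bash's /dev/tcp in place of `curl -v telnet://HOST:PORT`.
probe() {
  timeout 5 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# check_nodes HOST...: report every host/port pair and
# return non-zero if any connection attempt failed.
check_nodes() {
  cn_failed=0
  for cn_host in "$@"; do
    for cn_port in $PORTS; do
      if probe "$cn_host" "$cn_port"; then
        echo "OK   $cn_host:$cn_port"
      else
        echo "FAIL $cn_host:$cn_port"
        cn_failed=1
      fi
    done
  done
  return "$cn_failed"
}

# Example (placeholder addresses): check_nodes 192.0.2.11 192.0.2.12
```

A `FAIL` line only shows that the TCP connection did not open from this node; it does not say whether a firewall, routing, or the remote service is responsible.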

Probable Cause 2

Cluster node replication has failed.

Solution

- Identify the cluster nodes that have a replication lag.

- Reboot the problematic cluster node(s).

- Check if the issue is resolved.

Probable Cause 3

The Oracle Key Vault CA certificate or the node certificates have expired.

Solution

- On all cluster nodes, check if the Oracle Key Vault node certificate has expired.

- Log in to the Oracle Key Vault management console as System Administrator.

- To check the node certificate expiration status, navigate to System, Status and check the Node Certificate Expiration Date. You can also check the Oracle Key Vault Home page for any node certificate expiration alerts.

- Regenerate the node certificate if it has expired. Go to System, select Settings.

- Select Service Certificates and click Manage Node Certificate to generate a new node certificate.

- On all cluster nodes, check if the Oracle Key Vault CA certificate has expired.

- Log in to the Oracle Key Vault management console as System Administrator.

- To check the CA certificate expiration status, go to System, select Status and check the CA Certificate Expiration Date. You can also check the Oracle Key Vault Home page for any CA certificate expiration alerts.

- In Oracle Key Vault release 21.5 and later, you cannot start a CA certificate rotation if the CA has already expired. You must generate a new CA certificate manually and re-enroll all endpoints instead. See Managing CA Certificate Rotation. For Oracle Key Vault 21.4 and earlier, contact Oracle Support.

- Check if the issue is resolved.


C.4.2 Cluster Node Pairing Failure

Cluster node pairing fails in a multi-master cluster environment.

Example

Adding a node to the cluster fails. The status of the node on the Cluster Management page of the Oracle Key Vault management console shows Pairing for an indefinite amount of time.

Probable Cause 1

The NTP settings are different on the nodes being paired.

Solution

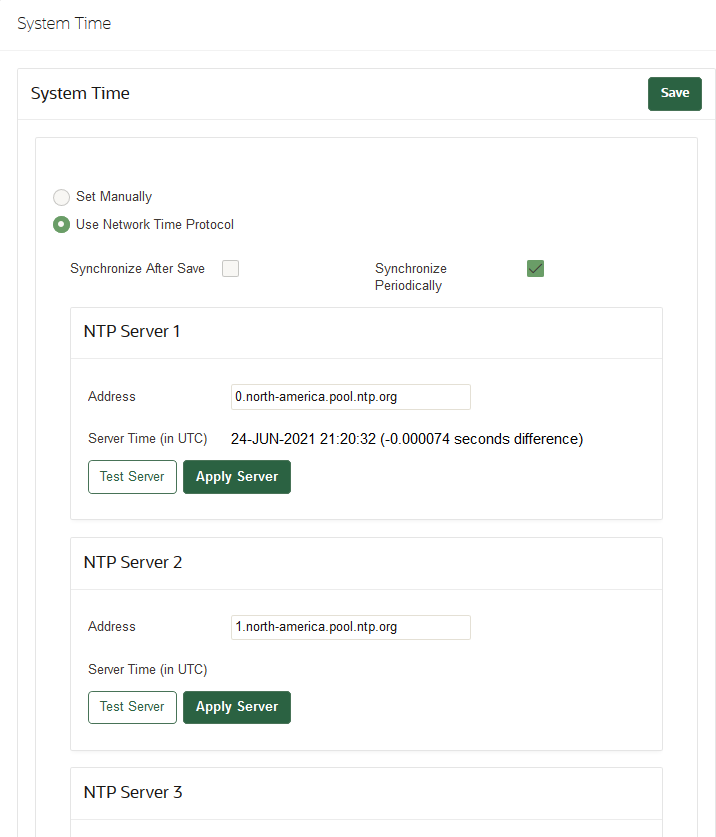

- Log in to the Oracle Key Vault management console of the controller node as System Administrator.

- Log in to the Oracle Key Vault management console on both the nodes.

- Go to the System, Settings page.

- In the Network Services area, click NTP to display the System Time window. Compare the NTP settings on both nodes and make sure that they match.

- Verify that the pairing status of the nodes changes to ACTIVE after some time.

- Check if the issue is resolved.

Probable Cause 2

The controller node and the new node are not reachable through the required ports.

Solution

- Log in to all the nodes through SSH as the support user. Switch user (`su`) to `root` and test connectivity to the problematic nodes on the required ports.

    curl -v telnet://ipaddress:7093
    curl -v telnet://ipaddress:7443

- If connectivity fails, then enable the required ports between the nodes. See Oracle Key Vault Installation and Upgrade Guide for the network port requirements.

- Verify if the pairing status has changed to ACTIVE.

- Check if the issue is resolved.


C.4.3 Adding a Node to Cluster Fails with Invalid Certificate or Certificate Expired Error

When adding a node to the cluster, a certificate expired or invalid certificate error is displayed.

Example

Adding a node to the cluster fails with the following error:

OAV-4783 Invalid certificate certificate has expired

Probable Cause

The AV certificates on the node are invalid or expired.

Solution

- Log in to the controller node using SSH as the support user and switch to the root user.

Note:

If you add node 2 as a peer read/write node to node 1, then node 1 is the controller node.

- Go to the certificates directory.

    cd /usr/local/dbfw/etc

- Verify the CA certificate expiry date.

    openssl x509 -noout -startdate -enddate -in /usr/local/dbfw/etc/ca.crt

- Run these commands if the CA certificate is expired. Ignore any errors.

    /usr/local/bin/gensslcert create-ca
    /usr/local/bin/gensslcert create-certs
    chmod 600 /usr/local/dbfw/etc/ca.key
    chmod 644 /usr/local/dbfw/etc/ca.crt

- Verify whether the `ca.crt` file on the server has been refreshed.

    openssl x509 -noout -startdate -enddate -in /usr/local/dbfw/etc/ca.crt

- Restart the HTTPD server daemon and the Tomcat services.

    service httpd restart
    service tomcat restart

- Reboot the node if the Oracle Key Vault management console is still not accessible.

- Check if the issue is resolved.
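The expiry check in the steps above relies on reading the dates that `openssl x509` prints. As a hedged sketch, the `-checkend` option of `openssl x509` can make the same check scriptable. The function name `cert_status` and the 30-day default warning window are illustrative assumptions and are not part of Oracle Key Vault.

```shell
# cert_status FILE [DAYS]: print UNREADABLE, EXPIRED, EXPIRING, or VALID.
# DAYS is a hypothetical warning window (default 30 days).
cert_status() {
  cs_file=$1
  cs_days=${2:-30}

  # Fail early if the file is missing or is not a parseable certificate.
  if ! openssl x509 -noout -in "$cs_file" >/dev/null 2>&1; then
    echo "UNREADABLE"
    return 2
  fi

  # -checkend N exits non-zero if the certificate expires within N seconds.
  if ! openssl x509 -noout -checkend 0 -in "$cs_file" >/dev/null 2>&1; then
    echo "EXPIRED"
  elif ! openssl x509 -noout -checkend $((cs_days * 86400)) -in "$cs_file" >/dev/null 2>&1; then
    echo "EXPIRING"
  else
    echo "VALID"
  fi
}

# Example, using the path from the steps above:
#   cert_status /usr/local/dbfw/etc/ca.crt
```

Running the function before and after the `gensslcert` commands gives a quick confirmation that the CA certificate was actually replaced.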


C.4.4 How to Diagnose Oracle Key Vault 18c Cluster Issues

Based on the issue, use the paths in this topic to troubleshoot the Oracle Key Vault cluster issues.

- Cluster-Node Pairing Issues

The error logs provide the path to look for issues related to cluster-node pairing. Different log files capture the issue details at different locations. Refer to these log files to understand more about issues related to cluster-node pairing.


C.4.4.1 Cluster-Node Pairing Issues

The error logs provide the path to look for issues related to cluster-node pairing. Different log files capture the issue details at different locations. Refer to these log files to understand more about issues related to cluster-node pairing.

General Troubleshooting from Oracle Key Vault Management Console

- Monitoring page: displays the status of the nodes.

- If objects such as endpoints or wallets (except nodes) remain in the Pending state and do not change to the Active state, check the Cluster Monitoring page to confirm that the replication lag is below 120 seconds or the recommended value.
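The 120-second guideline can be applied to a series of lag readings noted by hand from the Cluster Monitoring page. The sketch below is illustrative only: the function name `lag_trend` is hypothetical, the readings are assumed to be whole seconds listed oldest first, and nothing here queries Oracle Key Vault itself.

```shell
# lag_trend SECONDS...: classify manually recorded lag readings (oldest first).
# Prints HIGH_AND_RISING, HIGH, or OK, using the 120-second guideline.
lag_trend() {
  lt_prev=""
  lt_rising=1
  lt_last=0
  for lt_v in "$@"; do
    # Any reading that does not exceed the previous one breaks the rising trend.
    if [ -n "$lt_prev" ] && [ "$lt_v" -le "$lt_prev" ]; then
      lt_rising=0
    fi
    lt_prev=$lt_v
    lt_last=$lt_v
  done

  if [ "$lt_last" -gt 120 ] && [ "$lt_rising" -eq 1 ] && [ "$#" -ge 2 ]; then
    echo "HIGH_AND_RISING"
  elif [ "$lt_last" -gt 120 ]; then
    echo "HIGH"
  else
    echo "OK"
  fi
}

# Example: lag_trend 90 130 180
```

A result of `HIGH_AND_RISING` corresponds to the symptom described in C.4.1, where the lag is above 120 seconds and increasing consistently.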

Table C-1 Controller Node Key Files

| File Path | Description |
|---|---|
| `/var/log/messages` | Location that reports errors. |
| `/var/log/debug` | Details operations such as HTTPS requests. The file records errors that occur during pairing. |
| `/var/okv/log/mmha/welcome_node_status.txt` | Records the timestamps and steps run by the controller node. Also records errors that occur during the recent pairing on the controller node. |
| `/var/okv/log/mmha/okv_replicate_to_new_node*.log` | Look for the most recent pairing with `ls -lrt \| grep okv_replicate_to_new_node`. This may have more details than `welcome_node_status.txt`. |
| `/var/okv/log/mmha/okv_new_node_added*.log` | Same as `okv_replicate_to_new_node*.log` except that it is used at a later step in the pairing, after `okv_load_node_tune_restored_bkp*` has finished on the candidate node. |
| `/var/okv/og/mmha/output_date_ogg_*` | These files are the output of OGG commands. |
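Table C-1 suggests `ls -lrt | grep okv_replicate_to_new_node` for finding the most recent pairing log. A minimal sketch of the same idea as a reusable function follows. The name `latest_log` is hypothetical, and the approach assumes that log file names contain no newlines, which is a reasonable assumption for these files but not a guarantee.

```shell
# latest_log DIR PREFIX: print the most recently modified file in DIR
# whose name starts with PREFIX, or nothing if no file matches.
latest_log() {
  # ls -t sorts newest first; head keeps only the newest entry.
  ls -t "$1"/"$2"* 2>/dev/null | head -n 1
}

# Example, using the directory and prefix from Table C-1:
#   latest_log /var/okv/log/mmha okv_replicate_to_new_node
```

The same call works for the other wildcard entries in the table, for example with the prefix `okv_new_node_added`.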

Table C-2 Candidate Node Key Files

| File Path | Description |
|---|---|
| `/var/log/messages` | Location that reports errors. |
| `/var/log/debug` | Details operations such as HTTPS requests. The file records errors that occur during pairing. |
| `/var/lib/oracle/ogg/okv/load_node_info.txt` | Generated by the candidate node. The controller node attempts to retrieve this file via HTTPS and verifies the information as one of the starting steps in the pairing process. |
| `/var/okv/log/mmha/new_node_status.txt` | Timestamps and steps are written here. If there is an error during pairing on the candidate node, then it is located here. |
| `/var/okv/log/mmha/okv_load_node_from_backup*.log` | Occurs after `okv_replicate_to_new_node` on the controller node. More details in `new_node_status.txt`. |
| `/var/okv/log/mmha/okv_load_node_tune_restored_bkp*.log` | Occurs after `okv_load_node_from_backup` (after a reboot). More details than `new_node_status.txt`. |
| `/var/okv/log/db/output*okv_backup_restore.log` | Backup restores during pairing record their output here. |
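When several of the files listed above need to be checked, a first pass can count the lines that mention an error in each file. This is a rough sketch under stated assumptions: the function name `scan_logs` is hypothetical, and matching the case-insensitive keyword `error` is a guess at how failures appear, since the log formats are not described here.

```shell
# scan_logs FILE...: print "<count> <file>" for each readable file, where
# <count> is the number of lines containing "error" (case-insensitive).
# Files that are missing or unreadable are reported and skipped.
scan_logs() {
  for sl_file in "$@"; do
    if [ ! -r "$sl_file" ]; then
      echo "SKIP $sl_file (not readable)"
      continue
    fi
    # grep -c exits non-zero on zero matches but still prints 0.
    sl_hits=$(grep -ci 'error' "$sl_file" || true)
    echo "$sl_hits $sl_file"
  done
}

# Example, using paths from Table C-2:
#   scan_logs /var/log/messages /var/okv/log/mmha/new_node_status.txt
```

A count of zero does not prove that pairing succeeded; it only means that the keyword did not appear, so the timestamps and steps in `new_node_status.txt` are still worth reading.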
