Association Rules Use Case Scenario

A popular movie rental website is being updated. The movie rental company wishes to provide movie recommendations to their customers based on their frequently rented movies and purchase transaction history. They approach you, a data scientist, for assistance with movie recommendations. Using the Apriori algorithm, you solve this problem by analysing popular movies that are frequently watched together.

Related Content

| Topic | Link |

|---|---|

| OML4SQL GitHub Example | Apriori - Association Rules |

CREATE_MODEL2 Procedure

|

CREATE_MODEL2 Procedure |

| Generic Model Settings | DBMS_DATA_MINING - Model Settings |

| Apriori Settings | DBMS_DATA_MINING - Machine Learning Function Settings |

| Data Dictionary Settings | Oracle Machine Learning Data Dictionary Views |

| Association Rules - Model Detail Views | |

| About Association | About Association |

| About Apriori | About Apriori |

Before you start your OML4SQL use case journey, ensure that you have the following:

- Data set

The data set used for this use case is called MovieStream data set.

Note:

This data set is used for illustrative purpose only. - Database

Select or create database out of the following options:

- Get your FREE cloud account. Go to https://cloud.oracle.com/database and select Oracle Database Cloud Service (DBCS), or Oracle Autonomous Database. Create an account and create an instance. See Autonomous Database Quick Start Workshop.

- Download the latest version of Oracle Database (on premises).

- Machine Learning Tools

Depending on your database selection,

- Use OML Notebooks for Oracle Autonomous Database.

- Install and use Oracle SQL Developer connected to an on-premises database or DBCS. See Installing and Getting Started with SQL Developer.

- Other Requirements

Data Mining Privileges (this is automatically set for ADW). See System Privileges for Oracle Machine Learning for SQL.

Load Data

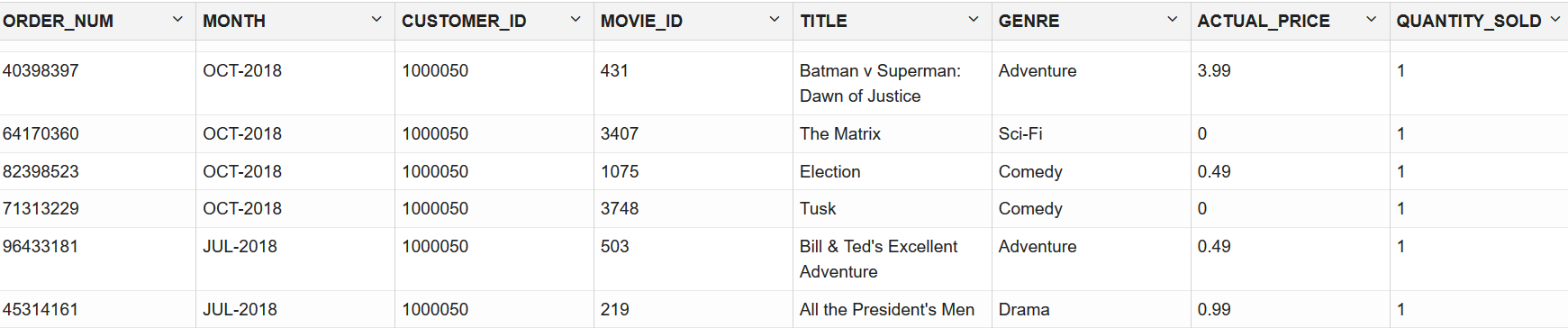

Examine the data set and its attributes. Load the data in your database.

In this use case, you will load the data set to your database. If you are using Oracle Autonomous Database, you will use an existing data file from the Oracle Cloud Infrastructure (OCI) Object Storage. You will create a sample table, load data into the sample table from files on the OCI Object Storage, and explore the data. If you are using the on-premises database, you will use Oracle SQL developer to import the data set and explore the data.

- Access the data.

- Examine the various attributes or columns of the data set.

- Assess data quality (by exploring the data).

Examine Data

The following table displays information about the attributes from MOVIES_SALES_FACT:

| Attribute Name | Information |

|---|---|

ORDER_NUM |

Specifies the order number |

ACTUAL_PRICE |

Specifies the actual price of the movie |

AGE |

Specifies the age of the customer |

AGE_BAND |

Specifies the age band of the customer. The possible values are 20-29, 30-39, 40-49, 50-59, 60-69, 70-79, 80-89 and so on. |

APP |

Specifies the application used for the movie |

CITY |

Specifies the name of the city |

CITY_ID |

Specifies the city ID |

COMMUTE_DISTANCE |

Specifies the commute distance |

COMMUTE_DISTANCE_BAND |

Specifies the commute distance band |

CONTINENT |

Specifies the continent name |

COUNTRY |

Specifies the country name |

COUNTRY_CODE |

Specifies the country code |

COUNTRY_ID |

Specifies the country ID |

CREDIT_BALANCE |

Specifies the credit balance of the customer |

CUSTOMER_ID |

Specifies the customer ID |

CUSTOMER_NAME |

Specifies the customer name |

DAY |

Specifies the day of the week in YYYY-mm-dd hh:mm:ss format

|

DAY_NAME |

Specifies the day of the week |

DAY_NUM_OF_WEEK |

Specifies the day number of the week |

DEVICE |

Specifies the device information used by the customer |

DISCOUNT_PERCENT |

Specifies the discount percent |

DISCOUNT_TYPE |

Specifies the discount type availed by the customer. Possible values are referral, coupon, promotion, volume, none |

EDUCATION |

Specifies customer's education |

EMAIL |

Specifies email ID of the customer |

FULL_TIME |

Specifies customer's employment status such as full time, not employed, part time |

GENDER |

Specifies the gender of the customer |

GENRE |

Specifies the genre of the movie |

HOUSEHOLD_SIZE |

Specifies the household size of the customer |

HOUSEHOLD_SIZE_BAND |

Specifies the household size band |

INCOME |

Specifies the income of the customer |

INCOME_BAND |

Specifies the income band of the customer |

INSUFF_FUNDS_INCIDENTS |

Specifies the number of insufficient funds incidents that the customer had |

JOB_TYPE |

Specifies the cusotmer's job |

LATE_MORT_RENT_PMTS |

Specifies is the customer had any late mortgage or rent payment |

LIST_PRICE |

Specifies the list price of the movie |

MARITAL_STATUS |

Specifies the marital status of the customer |

MONTH |

Specifies the month in MON-YYYY format

|

MONTH_NAME |

Specifies the month. For example, January. |

MONTH_NUM_OF_YEAR |

Specifies the month number of the year |

MORTGAGE_AMT |

Specifies the mortgage amount |

MOVIE_ID |

Specifies the movie ID |

NUM_CARS |

Specifies the number of the cars that the customer owns |

NUM_MORTGAGES |

Specifies the number of mortgages |

OS |

Specifies the OS information |

PAYMENT_METHOD |

Specifies the payment method |

PET |

Specifies if the customer owns a pet |

POSTAL_CODE |

Specifies the postal code of the address |

PROMOTION_RESPONSE |

Specifies the response of the customer to a promotional offer |

QUANTITY_SOLD |

Specifies the quantity sold |

QUARTER_NAME |

Specifies the quarter name in Qn-YYYY format. For example, Q1-2001.

|

QUARTER_NUM_OF_YEAR |

Specifies the quarter number of the year |

RENT_OWN |

Specifies if the customer is living at a rented place or own place |

SEARCH_GENRE |

Specifies the genre of the movies searched |

SEGMENT_DESCRIPTION |

Describes the population segment |

SEGMENT_NAME |

Specifies the population segment name |

SKU |

Specifies the SKU ID |

STATE_PROVINCE |

Specifies the province |

STATE_PROVINCE_ID |

Specifies the province ID |

STREET_ADDRESS |

Specifies the customer's address |

TITLE |

Specifies the movie title |

USERNAME |

Specifies the username provided by the customer |

WORK_EXPERIENCE |

Specifies the work experience of the customer |

WORK_EXPERIENCE_BAND |

Specifies the work experience band of the customer |

YEAR |

Specifies the year |

YEARS_CURRENT_EMPLOYER |

Specifies the current employer of the customer |

YEARS_CURRENT_EMPLOYER_BAND |

Specifies the customer's employment band in years with the current employer |

YEARS_RESIDENCE |

Specifies the number of years the customer has been residing at a place |

YEARS_RESIDENCE_BAND |

Specifies the residence band |

Create a Table

Create a table called MOVIE_SALES_FACT. This table is used in DBMS_CLOUD.COPY_DATA procedure to access the data set.

Enter the following code in the OML Notebooks and run the notebook.

%sql

CREATE TABLE MOVIE_SALES_FACT

( ORDER_NUM NUMBER(38,0),

DAY DATE,

DAY_NUM_OF_WEEK NUMBER(38,0),

DAY_NAME VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

MONTH VARCHAR2(12 BYTE) COLLATE USING_NLS_COMP,

MONTH_NUM_OF_YEAR NUMBER(38,0),

MONTH_NAME VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

QUARTER_NAME VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

QUARTER_NUM_OF_YEAR NUMBER(38,0),

YEAR NUMBER(38,0),

CUSTOMER_ID NUMBER(38,0),

USERNAME VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

CUSTOMER_NAME VARCHAR2(250 BYTE) COLLATE USING_NLS_COMP,

STREET_ADDRESS VARCHAR2(250 BYTE) COLLATE USING_NLS_COMP,

POSTAL_CODE VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

CITY_ID NUMBER(38,0),

CITY VARCHAR2(128 BYTE) COLLATE USING_NLS_COMP,

STATE_PROVINCE_ID NUMBER(38,0),

STATE_PROVINCE VARCHAR2(128 BYTE) COLLATE USING_NLS_COMP,

COUNTRY_ID NUMBER(38,0),

COUNTRY VARCHAR2(126 BYTE) COLLATE USING_NLS_COMP,

COUNTRY_CODE VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

CONTINENT VARCHAR2(128 BYTE) COLLATE USING_NLS_COMP,

SEGMENT_NAME VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

SEGMENT_DESCRIPTION VARCHAR2(128 BYTE) COLLATE USING_NLS_COMP,

CREDIT_BALANCE NUMBER(38,0),

EDUCATION VARCHAR2(128 BYTE) COLLATE USING_NLS_COMP,

EMAIL VARCHAR2(128 BYTE) COLLATE USING_NLS_COMP,

FULL_TIME VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

GENDER VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

HOUSEHOLD_SIZE NUMBER(38,0),

HOUSEHOLD_SIZE_BAND VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

WORK_EXPERIENCE NUMBER(38,0),

WORK_EXPERIENCE_BAND VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

INSUFF_FUNDS_INCIDENTS NUMBER(38,0),

JOB_TYPE VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

LATE_MORT_RENT_PMTS NUMBER(38,0),

MARITAL_STATUS VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

MORTGAGE_AMT NUMBER(38,0),

NUM_CARS NUMBER(38,0),

NUM_MORTGAGES NUMBER(38,0),

PET VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

PROMOTION_RESPONSE NUMBER(38,0),

RENT_OWN VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

YEARS_CURRENT_EMPLOYER NUMBER(38,0),

YEARS_CURRENT_EMPLOYER_BAND VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

YEARS_CUSTOMER NUMBER(38,0),

YEARS_CUSTOMER_BAND VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

YEARS_RESIDENCE NUMBER(38,0),

YEARS_RESIDENCE_BAND VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

AGE NUMBER(38,0),

AGE_BAND VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

COMMUTE_DISTANCE NUMBER(38,0),

COMMUTE_DISTANCE_BAND VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

INCOME NUMBER(38,0),

INCOME_BAND VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

MOVIE_ID NUMBER(38,0),

SEARCH_GENRE VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

TITLE VARCHAR2(4000 BYTE) COLLATE USING_NLS_COMP,

GENRE VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

SKU NUMBER(38,0),

LIST_PRICE NUMBER(38,2),

APP VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

DEVICE VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

OS VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

PAYMENT_METHOD VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

DISCOUNT_TYPE VARCHAR2(26 BYTE) COLLATE USING_NLS_COMP,

DISCOUNT_PERCENT NUMBER(38,1),

ACTUAL_PRICE NUMBER(38,2),

QUANTITY_SOLD NUMBER(38,0)

)

;Load Data in the Table

Load the data set stored in object storage to the MOVIE_SALES_FACT table.

Before you load this data ensure that you set Compute Resources to Medium or High. If you select High, then, set Memory field to 16 for High Resource Service. You must have Administrator privilege to configure the memory settings. See Compute Resource.

%script

BEGIN

DBMS_CLOUD.COPY_DATA (table_name => 'MOVIE_SALES_FACT',file_uri_list => 'https://objectstorage.uk-london-1.oraclecloud.com/n/adwc4pm/b/moviestream_kl/o/d801_movie_sales_fact_m-*.csv', format => '{"delimiter":",", "recorddelimiter":"newline", "skipheaders":"1", "quote":"\\\"", "rejectlimit":"1000", "trimspaces":"rtrim", "ignoreblanklines":"false", "ignoremissingcolumns":"true", "dateformat":"DD-MON-YYYY HH24:MI:SS"}');

END;

/

PL/SQL procedure successfully completed.

---------------------------table_name: is the target table’s name.credential_name: is the name of the credential created earlier.file_uri_list: is a comma delimited list of the source files you want to load. The special character*in the filed801_movie_sales_fact_m-*.csvmeans you are bulk loading the MovieStream data set containing sales data for 2018-2020.format: defines the options you can specify to describe the format of the source file, including whether the file is of type text, ORC, Parquet, or Avro.delimiter: Specifies the field delimiter (special character). Here, it is specified as "," (comma)recorddelimiter: Specifies the record delimiter. The default value isnewline. By default,DBMS_CLOUDtries to automatically find the correct newline character as the delimiter.skipheaders: Specifies how many rows should be skipped from the start of the file. In this use case, it is1.quote: Specifies the quote character for the fields.rejectlimit: The operation will error out after specified number of rows are rejected. Here, the value is1000.trimspaces: Specifies how the leading and trailing spaces of the fields are trimmed. Here it isrtrim. Thertrimvalue indicates that you want trailing spaces trimmed.ignoreblanklines: Blank lines are ignored when set to true. The default value isfalse.ignoremissingcolumns: If there are more columns in thefield_listthan there are in the source files, the extra columns are stored as null. The default value isfalse. In this use case, it is set totrue.dateformat: Specifies the date format in the source file.

In this example, adwc4pm is the Oracle Cloud Infrastructure object storage namespace and moviestream_kl is the bucket name.

Related Topics

Explore Data

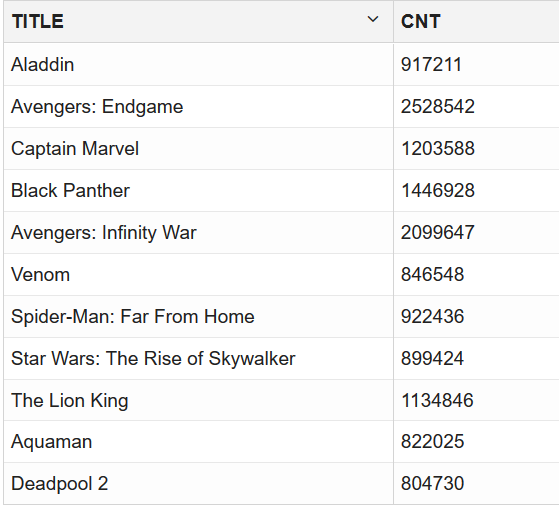

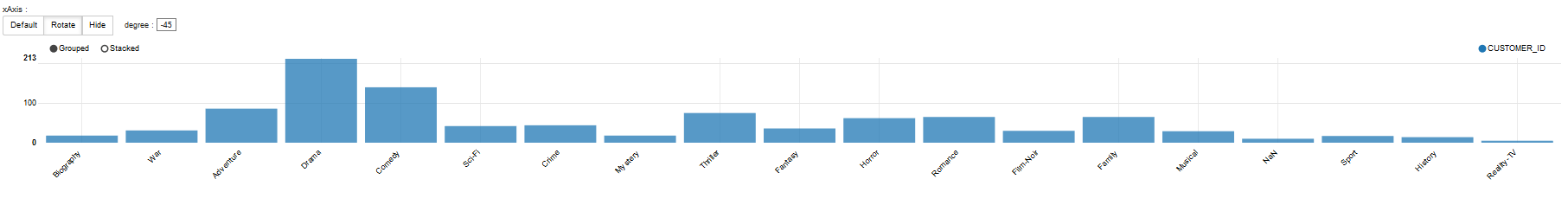

Once the data is loaded into the table, explore the data to understand and assess the quality of the data. At this stage assess the data to identify data types and noise in the data. Look for missing values and numeric outlier values.

Assess Data Quality

To assess the data, first, you must be able to view the data in your database. For this reason, you will use SQL statements to query the table.

If you are working with Oracle Autonomous Database, you can use the Oracle Machine Learning (OML) Notebooks for your data science project, including assessing data quality. If you are using the on-premises Oracle Database, you can use the Oracle SQL Developer to assess data quality. Query the data as described.

Note:

Each record in the database is called a case and each case is identified by acase_id. In this use case, CUSTOMER_ID is the case_id.

The following steps help you with the exploratory analysis of the data:

This completes the data exploration stage. OML supports Automatic Data Preparation (ADP). ADP is enabled through the model settings. When ADP is enabled, the transformations required by the algorithm are performed automatically and embedded in the model. This step is done during the Build Model stage. The commonly used methods of data preparation are binning, normalization, and missing value treatment.

Related Topics

Build Model

Build your model using your data set. Use the DBMS_DATA_MINING.CREATE_MODEL2 procedure to build your model and specify the model settings.

For unsupervised learning, like Association Rules, you do not have labels or predictors to calculate the accuracy or assess the performance. So you don't need to train your model on a separate training data set and then evaluate it on a test set. The entire data set can be used to build the model. For an unsupervised learning, you don't have an objective way to assess your model. So, a training or a test split is not useful.

Algorithm Selection

Oracle supports the Apriori algorithm to build an Association Rules model.

Apriori calculates the probability of an item being present in a frequent itemset, given that another item or group of items is present. An itemset is any combination of two or more items in a transaction. Frequent itemsets are those that occur with a minimum frequency that the user specifies. An association rule states that an item or group of items implies the presence of another item with some probability and support.

The following steps guide you to build your model with the Apriori algorithm.

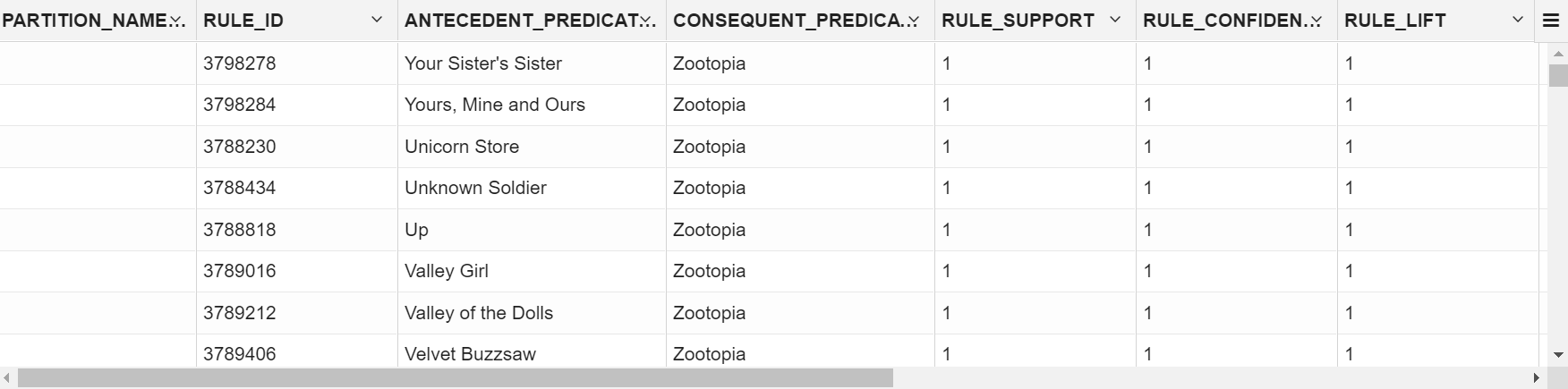

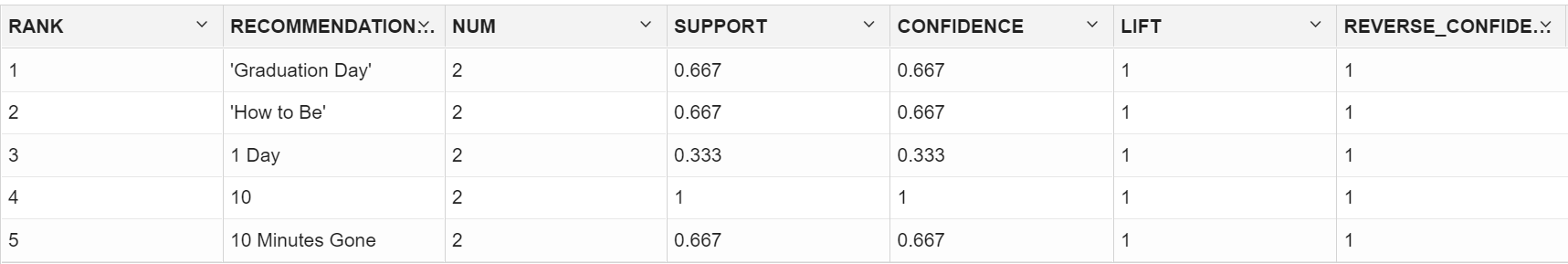

Evaluate

Evaluate your model by viewing diagnostic metrics and performing quality checks.

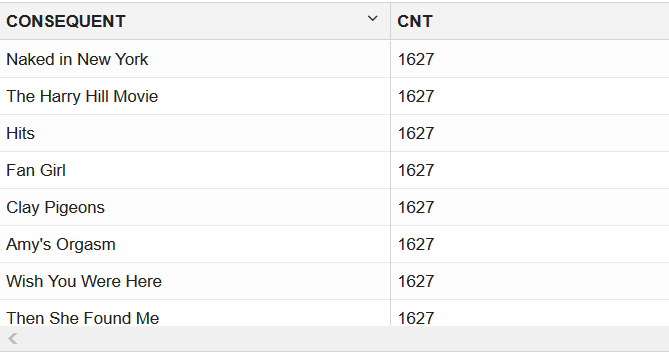

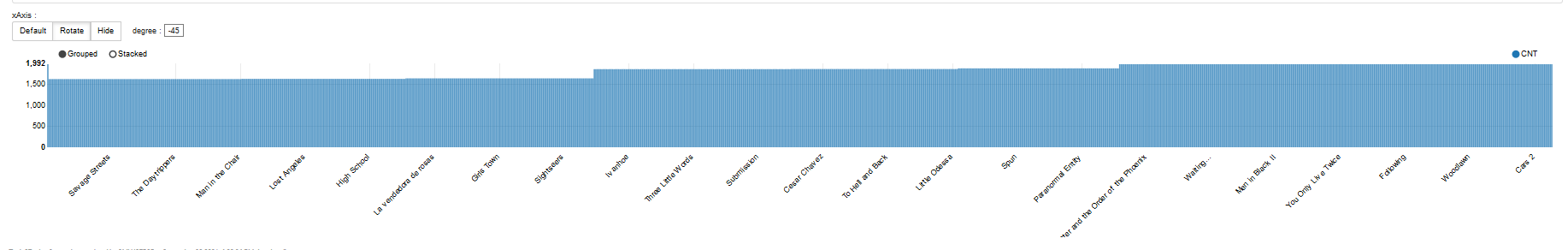

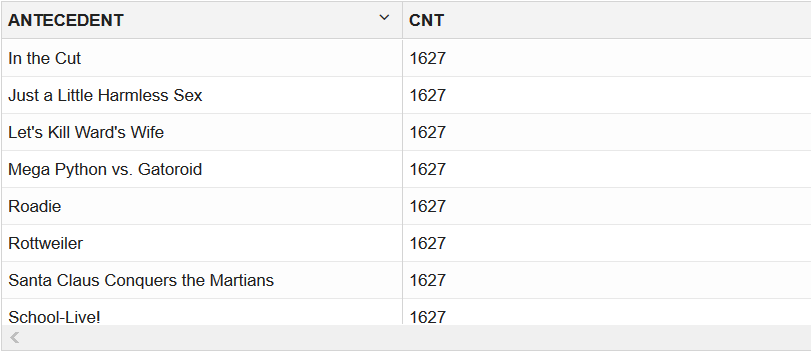

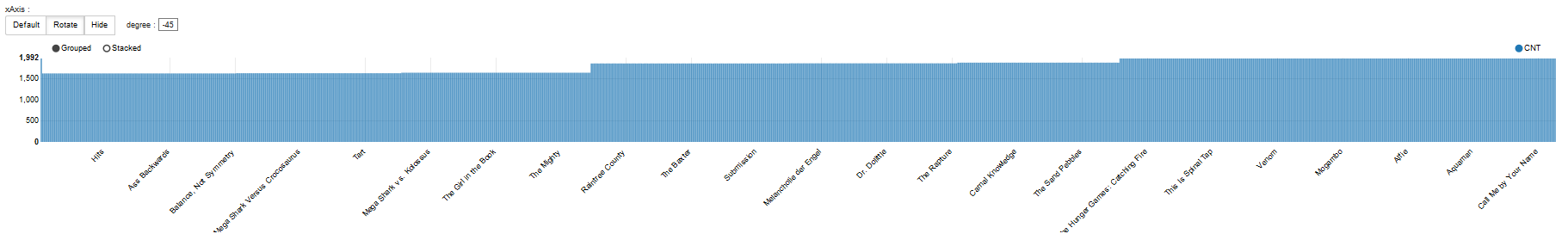

Sometimes querying dictionary views and model detail views is sufficient to measure your model's performance. However, you can evaluate your model by computing test metrics such as Mean Absolute Error (MAE), Root Mean Squared Error (RMSE), confusion matrix, lift statistics, cost matrix, and so on. For Association Rules, you can inspect various rules to see if they reveal new insights for item dependencies (antecedent itemset implying consequent) or for unexpected relationships among items.

Dictionary and Model Views

To obtain information about the model and view model settings, you can query data dictionary views and model detail views. Specific views in model detail views display model statistics which can help you evaluate the model.

The results of an association model are the rules that identify patterns of association within the data. Oracle Machine Learning for SQL does not support a scoring operation for association modeling. Instead, support and confidence are the primary metrics for evaluating the quality of the rules that the model generates. These statistical measures can be used to rank the rules and hence the usefulness of the predictions.

Association rules can be applied as follows:

- Support: How often do these items occur together in the data when you apply Association Rules?

- Confidence: How frequently the consequent occurs in transactions that contain the antecedent.

- Value: How much business value is connected to item associations

Additionally, Oracle Machine Learning for SQL supports lift for association rules. Lift indicates the strength of a rule over the random co-occurrence of the antecedent and the consequent, given their individual support. Lift provides information about the improvement, the increase in probability of the consequent given the antecedent. Lift is defined as confidence of the combination of items divided by the support of the consequent. Any rule with an improvement of less than 1 does not indicate a real cross-selling opportunity, no matter how high its support and confidence, because it actually offers less ability to predict a purchase than does random chance.

The data dictionary views for Oracle Machine Learning are listed in the following table. A database administrator (DBA) and USER versions of the views are also available.

| View Name | Description |

|---|---|

| ALL_MINING_MODELS | Provides information about all accessible machine learning models |

| ALL_MINING_MODEL_ATTRIBUTES | Provides information about the attributes of all accessible machine learning models |

| ALL_MINING_MODEL_SETTINGS | Provides information about the configuration settings for all accessible machine learning models |

| ALL_MINING_MODEL_VIEWS | Provides information about the model views for all accessible machine learning models |

| ALL_MINING_MODEL_XFORMS | Provides the user-specified transformations embedded in all accessible machine learning models. |

Model detail views are specific to the algorithm. You can obtain more insights about the model you created by viewing the model detail views. The names of model detail views begin with DM$xx where xx corresponds to the view prefix. See Model Detail Views.