Feature Extraction Use Case Scenario

You are developing a software application to recognize handwritten digits, which can be used to scan student answer sheets or forms. You use feature extraction to reduce the dimensionality of the data set and produce a new feature space. This feature space concentrates the signal of the original data as linear combinations of the original attributes.

In other scenarios, feature extraction can be used to extract document themes, classify features, and so on.

The reduced features can be used with other machine learning algorithms, for example, classification and clustering algorithms.

In this use case, you'll use the neural network algorithm to recognize handwritten digits on the transformed space and contrast this with the accuracy using the original data.

You are using the default feature extraction algorithm Non-Negative Matrix Factorization (NMF) in two ways:

- Creating projections onto the top 16 features and feeding a Neural Network (NN) model with those features.

- Using the correlation between the various attributes and the top 6 feature vectors to do attribute selection manually, and then feeding a Neural Network model with the selected attributes.

You are creating Neural Network models to predict the correct handwritten digit based on an 8x8 image matrix (64 input attributes).

Related Content

| Topic | Link |
|---|---|
| OML4SQL GitHub Example | Feature Extraction - Non-Negative Matrix Factorization |
| CREATE_MODEL2 Procedure | CREATE_MODEL2 Procedure |
| Generic Model Settings | DBMS_DATA_MINING - Model Settings |
| Non-negative Matrix Factorization (NMF) Settings | DBMS_DATA_MINING - Algorithm Settings: Non-Negative Matrix Factorization |
| Data Dictionary Settings | Oracle Machine Learning Data Dictionary Views |
| NMF - Model Detail Views | Model Detail Views for Non-Negative Matrix Factorization |
| About Feature Extraction | Feature Extraction |
| About NMF | Non-Negative Matrix Factorization |
| About Classification | Classification |
| About Neural Network | Neural Network |

Before you start your OML4SQL use case journey, ensure that you have the following:

- Data Set

You are using the DIGITS data set from the scikit-learn library. In this example, a DDL script is used to create and load the DIGITS table into the database. You can also download the raw data set here: https://github.com/scikit-learn/scikit-learn/blob/main/sklearn/datasets/data/digits.csv.gz

- Database

Select or create a database from the following options:

- Get your FREE cloud account. Go to https://cloud.oracle.com/database and select Oracle Database Cloud Service (DBCS), or Oracle Autonomous Database. Create an account and create an instance. See Autonomous Database Quick Start Workshop.

- Download the latest version of Oracle Database (on premises).

- Machine Learning Tools

Depending on your database selection,

- Use OML Notebooks for Oracle Autonomous Database.

- Install and use Oracle SQL Developer connected to an on-premises database or DBCS. See Installing and Getting Started with SQL Developer.

- Other Requirements

Data Mining Privileges (this is automatically set for ADW). See System Privileges for Oracle Machine Learning for SQL.


Load Data

Create a table called DIGITS. This table is used to access the data set.

- Download the DDL script from https://objectstorage.us-ashburn-1.oraclecloud.com/n/adwc4pm/b/OML_Data/o/digits.sql to your system.

- Open the file with a text editor and replace OML_USER02.DIGITS with <your username>.DIGITS. For example, if your OML Notebook account username is OML_USER, replace OML_USER02.DIGITS with OML_USER.DIGITS.

- Save the file.

- Copy the code and enter it into a notebook using OML Notebooks on ADB. Alternatively, you can use Oracle SQL Developer with an on-premises database or DBCS.

- Run the paragraph.

- Access the data.

- Examine the various attributes or columns of the data set.

- Assess data quality (by exploring the data).

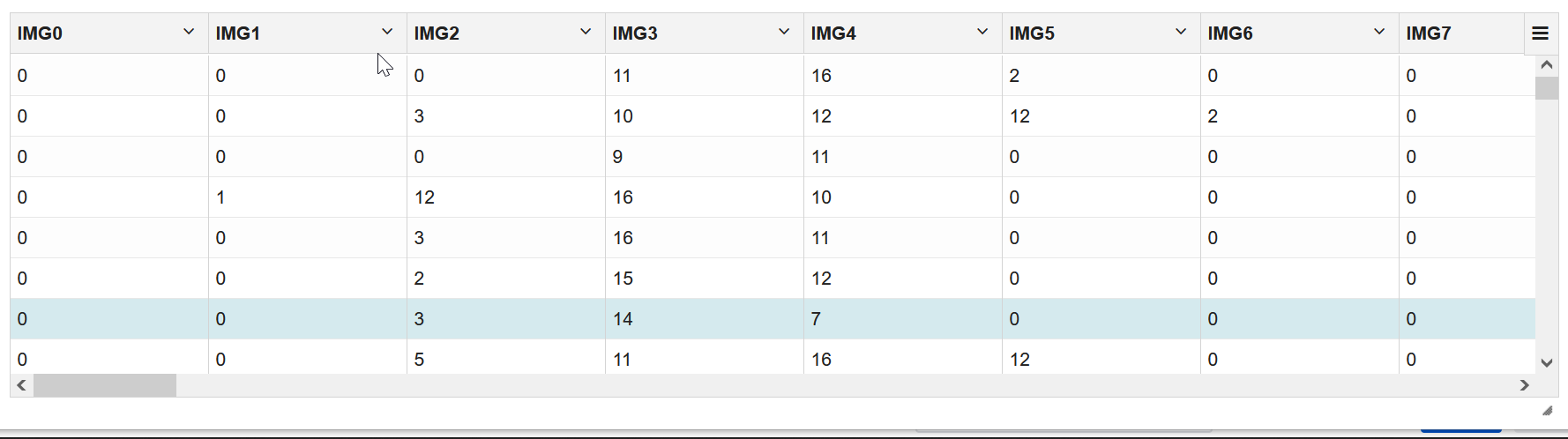

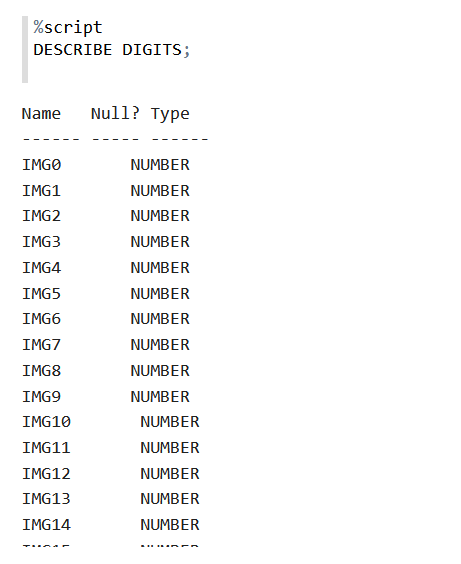

Examine Data

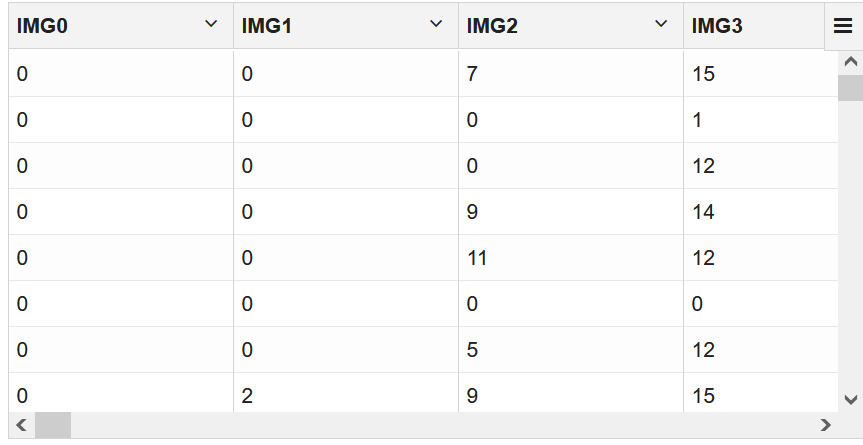

The DIGITS data set has 64 numerical features or columns, one for each pixel of an 8x8 image. Each image is of a handwritten digit. The digits 0-9 are used in this data set.

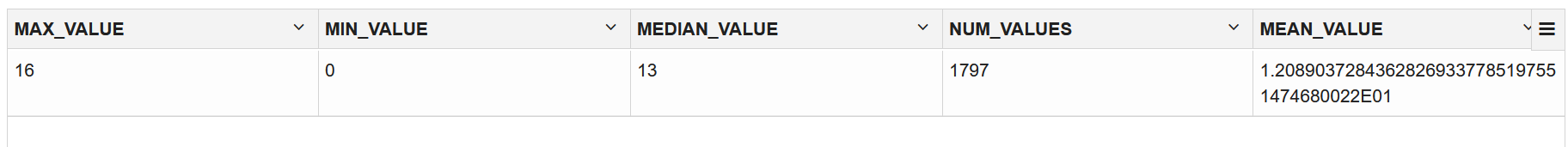

Explore Data

Once the data is accessible, explore the data to understand and assess its quality.

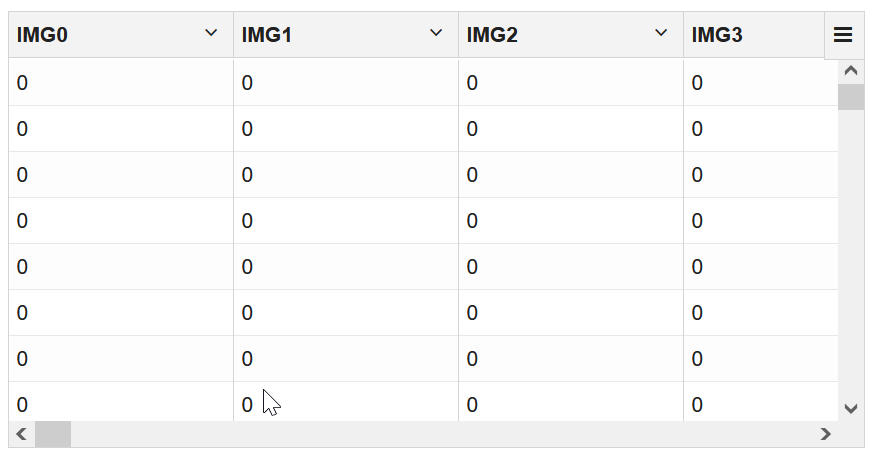

Assess Data Quality

Because this is a well-curated data set, it is free of noise, missing values (systemic or random), and outlier numeric values.

The following steps help you with the exploratory analysis of the data:
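The exploratory checks can be sketched with a few queries. This is a minimal sketch, not the original steps: it assumes the DIGITS table is in your own schema and has a TARGET column plus pixel columns named IMG0 through IMG63 (names inferred from the queries and attribute lists later in this use case). Only two pixel columns are checked here for brevity.

```sql
-- Run as a %script paragraph in OML Notebooks, or as a script in SQL Developer.

-- Number of rows in the data set
SELECT COUNT(*) AS NUM_ROWS FROM DIGITS;

-- Number of columns: expect 64 pixel columns plus the target column
SELECT COUNT(*) AS NUM_COLUMNS
FROM USER_TAB_COLUMNS
WHERE TABLE_NAME = 'DIGITS';

-- Class balance: number of images per digit (0-9)
SELECT TARGET, COUNT(*) AS NUM_IMAGES
FROM DIGITS
GROUP BY TARGET
ORDER BY TARGET;

-- Missing values and value range for two sample pixel columns (assumed names)
SELECT COUNT(*) - COUNT(IMG0)  AS IMG0_NULLS,
       MIN(IMG0)               AS IMG0_MIN,
       MAX(IMG0)               AS IMG0_MAX,
       COUNT(*) - COUNT(IMG63) AS IMG63_NULLS,
       MIN(IMG63)              AS IMG63_MIN,
       MAX(IMG63)              AS IMG63_MAX
FROM DIGITS;
```

COUNT(column) skips NULLs, so the difference from COUNT(*) is the number of missing values in that column.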

This completes the data exploration stage. OML supports Automatic Data Preparation (ADP). ADP is enabled through the model settings. When ADP is enabled, the transformations required by the algorithm are performed automatically and embedded in the model. This step is done during the Build Model stage. The commonly used methods of data preparation are binning, normalization, and missing value treatment.


Build Model

Build your model using your data set. Use the DBMS_DATA_MINING.CREATE_MODEL2 procedure to build your model and specify the model settings.

Algorithm Selection

You can choose one of the following algorithms to solve a Feature Extraction problem:

- Explicit Semantic Analysis (ESA) - this algorithm is not applicable for this use case data set.

- Non-Negative Matrix Factorization (NMF)

- Singular Value Decomposition (SVD)

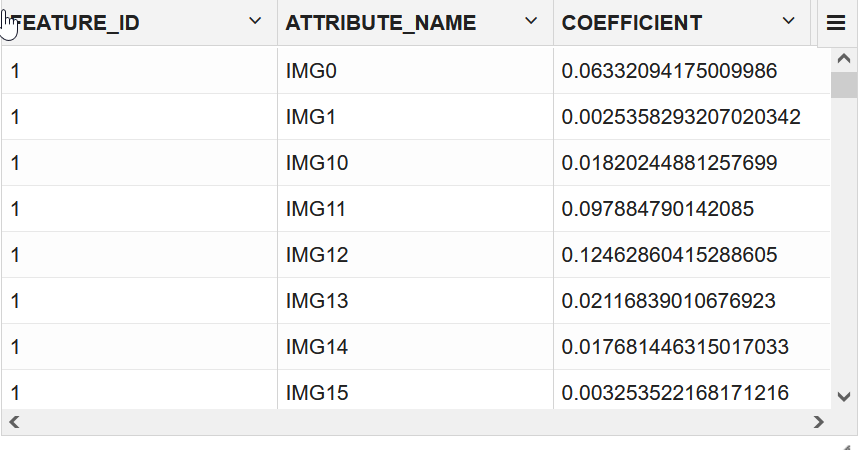

Non-Negative Matrix Factorization (NMF) is a popular tool for analyzing high-dimensional data because it automatically extracts sparse and meaningful features from a set of non-negative data vectors. Here, sparse means that most values are zero: many cells or pixels in this data set are likely zero, but they are not missing values. NMF uses a low-rank matrix approximation of a matrix X such that X is approximately equal to WH. The matrix W contains the NMF basis column vectors; the matrix H contains the associated coefficients (weights). The ability of NMF to automatically extract sparse and easily interpretable factors has led to its popularity. In the case of image recognition, such as digit images, the basis images depict various handwritten digit prototypes and the columns of H indicate which feature is present in which image. Oracle Machine Learning uses NMF as the default algorithm for Feature Extraction.
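As a compact restatement of the approximation described above, one common way to write NMF is shown below. The dimension symbols m, n, and k and the squared Frobenius-norm objective are illustrative assumptions; this use case does not state which objective Oracle's implementation minimizes.

$$
X \approx WH, \qquad X \in \mathbb{R}_{\ge 0}^{m \times n},\quad W \in \mathbb{R}_{\ge 0}^{m \times k},\quad H \in \mathbb{R}_{\ge 0}^{k \times n},\quad k < \min(m, n)
$$

$$
\min_{W \ge 0,\; H \ge 0} \; \lVert X - WH \rVert_F^2
$$

Under this reading, each column of X is one digit image with m = 64 pixel values, each of the k columns of W is a basis image, and each column of H holds the k weights that approximately reconstruct one image.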

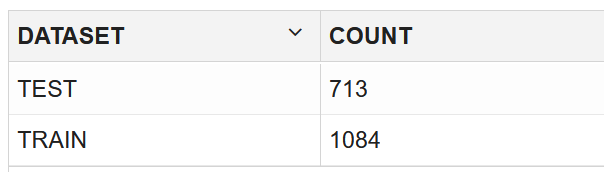

For this use case, split the data 60/40 into training and test data so that you can later compare an NN model built on the NMF output with an NN model built on the original data. You split the data because you want to see how the model performs on data that it has not seen before. If you put the whole data set into the NMF model and split it only before giving it to the NN, the NMF model has already seen the test data. With completely new data, the extracted features would not be based on it. You build the model using the training data and, once the model is built, score the test data using the model.
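The 60/40 split could be created as two views, as sketched below. The view name TRAIN_DIGITS and the seed value are assumptions; TEST_DIGITS is the name used by the scoring queries in this use case. MINUS compares whole rows and removes duplicates, so this sketch assumes DIGITS has no duplicate rows that must be preserved.

```sql
-- Run as a %script paragraph in OML Notebooks, or as a script in SQL Developer.

-- Training view: a sample of roughly 60% of the rows.
-- SEED asks Oracle to return the same sample from one execution to the next.
CREATE OR REPLACE VIEW TRAIN_DIGITS AS
  SELECT * FROM DIGITS SAMPLE (60) SEED (1);

-- Test view: the remaining rows (roughly 40%)
CREATE OR REPLACE VIEW TEST_DIGITS AS
  SELECT * FROM DIGITS
  MINUS
  SELECT * FROM TRAIN_DIGITS;

-- Sanity check: the two counts should add up to the size of DIGITS
SELECT (SELECT COUNT(*) FROM TRAIN_DIGITS) AS NUM_TRAIN,
       (SELECT COUNT(*) FROM TEST_DIGITS)  AS NUM_TEST
FROM DUAL;
```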

The following steps guide you to build your model with the selected algorithm.
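A minimal sketch of the build step with DBMS_DATA_MINING.CREATE_MODEL2 follows. The model name NMF_DIGITS, the training view TRAIN_DIGITS, and the column name TARGET are assumptions, as is the choice of settings. The input column list is generated from USER_TAB_COLUMNS so that the target is not used as an input to feature extraction; this assumes DIGITS is in your own schema and uses uppercase column names.

```sql
-- Drop a previous model of the same name, if any (errors are ignored)
BEGIN
  DBMS_DATA_MINING.DROP_MODEL('NMF_DIGITS');
EXCEPTION
  WHEN OTHERS THEN NULL;
END;
/

DECLARE
  v_setlst DBMS_DATA_MINING.SETTING_LIST;
  v_cols   VARCHAR2(4000);
BEGIN
  -- All columns except the target (assumed to be named TARGET)
  SELECT LISTAGG(COLUMN_NAME, ', ') WITHIN GROUP (ORDER BY COLUMN_ID)
    INTO v_cols
    FROM USER_TAB_COLUMNS
   WHERE TABLE_NAME = 'DIGITS'
     AND COLUMN_NAME <> 'TARGET';

  v_setlst('ALGO_NAME')         := 'ALGO_NONNEGATIVE_MATRIX_FACTOR';
  v_setlst('PREP_AUTO')         := 'ON';  -- Automatic Data Preparation
  v_setlst('FEAT_NUM_FEATURES') := '16';  -- number of features to extract

  DBMS_DATA_MINING.CREATE_MODEL2(
    MODEL_NAME      => 'NMF_DIGITS',
    MINING_FUNCTION => 'FEATURE_EXTRACTION',
    DATA_QUERY      => 'SELECT ' || v_cols || ' FROM TRAIN_DIGITS',
    SET_LIST        => v_setlst);
END;
/
```

For the attribute-selection variant, the same block could be run with FEAT_NUM_FEATURES set to '6' and a different model name.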

Evaluate

Evaluate your model by viewing diagnostic metrics and performing quality checks.

There is no specific set of testing parameters for feature extraction. In this use case, the evaluation mostly consists of comparing the NN model built on the original data with the NN models built on NMF output, and comparing the NMF-based NN models with each other.

Dictionary and Model Views

To obtain information about the model and view model settings, you can query data dictionary views and model detail views. Specific views in model detail views display model statistics which can help you evaluate the model.

You'll be querying dictionary views. A database administrator (DBA) and USER versions of the views are also available. See Oracle Machine Learning Data Dictionary Views to learn more about the available dictionary views. Model detail views are specific to the algorithm. You can obtain more insights about the model you created by viewing the model detail views. The names of model detail views begin with DM$xx where xx corresponds to the view prefix. See Model Detail Views for more information.

The following steps help you to view different dictionary views and model detail views.
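The queries below sketch what viewing these dictionary and model detail views might look like for the NMF model. The model name NMF_DIGITS is an assumption, and the detail view name DM$VENMF_DIGITS follows from the DM$VE prefix that the NMF model detail views use for the H matrix.

```sql
-- Run as a %script paragraph in OML Notebooks, or as a script in SQL Developer.

-- Settings used by the model, including defaults supplied by the system
SELECT SETTING_NAME, SETTING_VALUE, SETTING_TYPE
FROM USER_MINING_MODEL_SETTINGS
WHERE MODEL_NAME = 'NMF_DIGITS'
ORDER BY SETTING_NAME;

-- Model detail views available for the model
SELECT VIEW_NAME, VIEW_TYPE
FROM USER_MINING_MODEL_VIEWS
WHERE MODEL_NAME = 'NMF_DIGITS'
ORDER BY VIEW_NAME;

-- H matrix: the five attributes with the largest coefficient in each feature
SELECT FEATURE_ID, ATTRIBUTE_NAME, COEFFICIENT
FROM (SELECT FEATURE_ID, ATTRIBUTE_NAME, COEFFICIENT,
             RANK() OVER (PARTITION BY FEATURE_ID
                          ORDER BY COEFFICIENT DESC) AS RNK
      FROM DM$VENMF_DIGITS)
WHERE RNK <= 5
ORDER BY FEATURE_ID, COEFFICIENT DESC;
```

Ranking attributes by coefficient within each feature is one way to support the manual attribute selection described in this use case.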

Evaluate and Compare Models

You used the NMF feature extraction algorithm to transform your data and feed it into a NN model with the intention of improving predictive accuracy. You will now evaluate these classification models and compare the model accuracy.

You built a neural network classification model using the original data set, and then you used the data set transformed by the NMF model to build another neural network model. You will evaluate these classification models. When comparing these metrics, consider the model's quality by looking at prediction accuracy. Metrics can also be compared between models created by different feature extraction algorithms, the same extraction algorithm with different settings, or different classification algorithms and settings.
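For reference, the baseline NN model on the original data could be built along the following lines. This is a sketch under assumptions: the training view TRAIN_DIGITS and the TARGET column name are inferred, and the neural network settings (hidden layers, activations, and so on) are left at their defaults because the settings actually used are not shown here. NN_ORIG_DIGITS is the model name used by the evaluation queries.

```sql
-- Drop a previous model of the same name, if any (errors are ignored)
BEGIN
  DBMS_DATA_MINING.DROP_MODEL('NN_ORIG_DIGITS');
EXCEPTION
  WHEN OTHERS THEN NULL;
END;
/

DECLARE
  v_setlst DBMS_DATA_MINING.SETTING_LIST;
BEGIN
  v_setlst('ALGO_NAME') := 'ALGO_NEURAL_NETWORK';
  v_setlst('PREP_AUTO') := 'ON';  -- Automatic Data Preparation

  DBMS_DATA_MINING.CREATE_MODEL2(
    MODEL_NAME         => 'NN_ORIG_DIGITS',
    MINING_FUNCTION    => 'CLASSIFICATION',
    DATA_QUERY         => 'SELECT * FROM TRAIN_DIGITS',
    SET_LIST           => v_setlst,
    TARGET_COLUMN_NAME => 'TARGET');
END;
/
```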


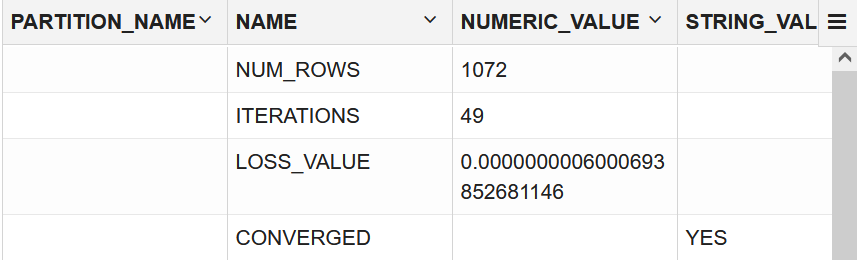

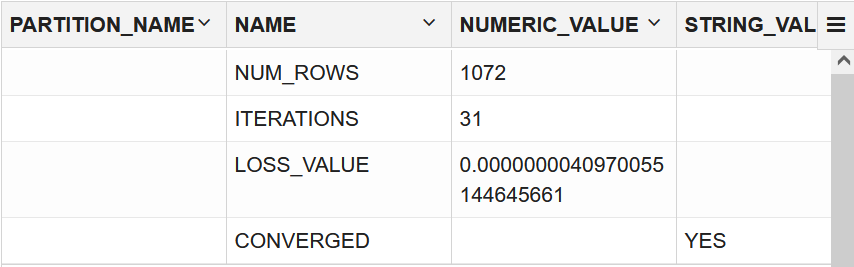

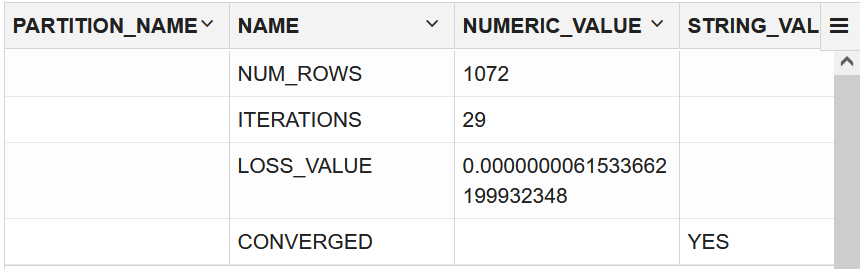

To check whether the model has converged, list the model views of the Neural Network model.

    %script
    SELECT VIEW_NAME, VIEW_TYPE
    FROM USER_MINING_MODEL_VIEWS
    WHERE MODEL_NAME='NN_ORIG_DIGITS'
    ORDER BY VIEW_NAME;

    VIEW_NAME             VIEW_TYPE
    DM$VANN_ORIG_DIGITS   Neural Network Weights
    DM$VCNN_ORIG_DIGITS   Scoring Cost Matrix
    DM$VGNN_ORIG_DIGITS   Global Name-Value Pairs
    DM$VNNN_ORIG_DIGITS   Normalization and Missing Value Handling
    DM$VSNN_ORIG_DIGITS   Computed Settings
    DM$VTNN_ORIG_DIGITS   Classification Targets
    DM$VWNN_ORIG_DIGITS   Model Build Alerts

    7 rows selected.

Display the view DM$VGNN_ORIG_DIGITS to check the global name-value pairs and see whether the model has converged, that is, whether it iterated until no further improvement in the result could be seen.

    %sql
    SELECT * from DM$VGNN_ORIG_DIGITS;


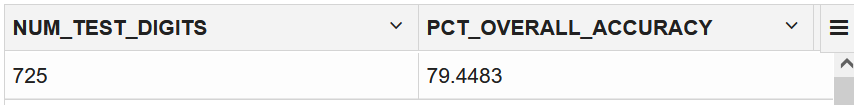

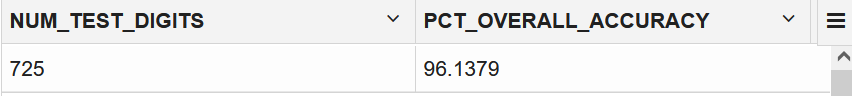

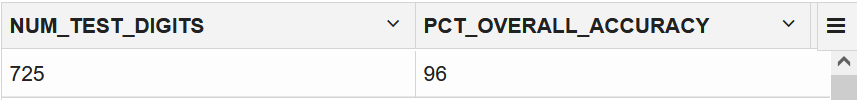

To check the quality of the model, run the following PCT accuracy code:

    %sql
    SELECT count(*) NUM_TEST_DIGITS,
           ROUND((SUM(CASE WHEN (TARGET - PRED_TARGET) = 0 THEN 1 ELSE 0 END) / COUNT(*))*100,4) PCT_OVERALL_ACCURACY
    FROM (SELECT TARGET,
                 ROUND(PREDICTION(NN_ORIG_DIGITS USING *), 1) PRED_TARGET
          FROM TEST_DIGITS)


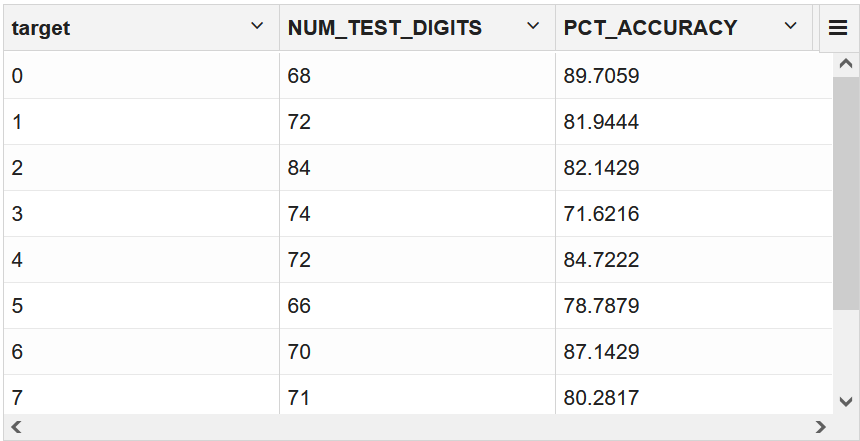

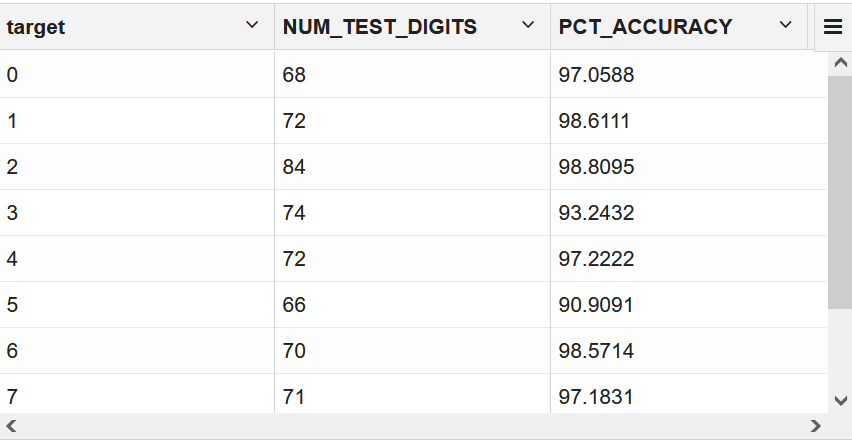

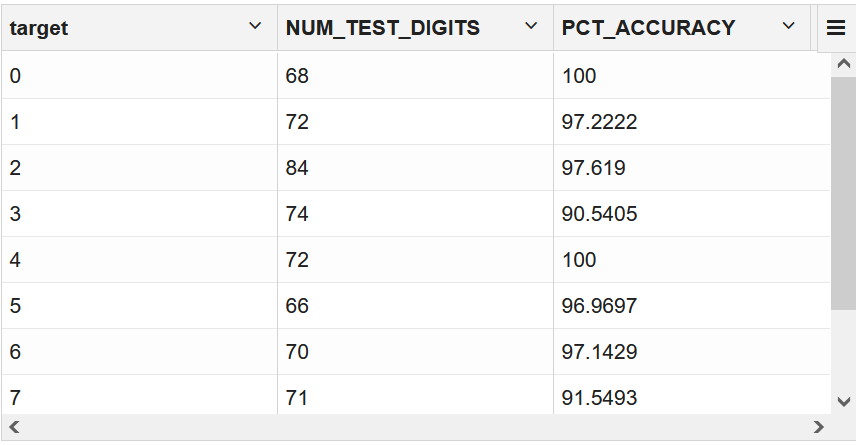

To check the quality of the model per target digit, run the following PCT accuracy code:

    %sql
    SELECT TARGET,
           count(*) NUM_TEST_DIGITS,
           ROUND((SUM(CASE WHEN (TARGET - PRED_TARGET) = 0 THEN 1 ELSE 0 END) / COUNT(*))*100,4) PCT_ACCURACY
    FROM (SELECT TARGET,
                 ROUND(PREDICTION(NN_ORIG_DIGITS USING *), 1) PRED_TARGET
          FROM TEST_DIGITS)
    GROUP BY TARGET
    ORDER BY TARGET

To evaluate your model, use the following SQL PREDICTION function to generate a confusion matrix:

    %script
    SELECT "target" AS actual_target_value,
           PREDICTION(NN_ORIG_DIGITS USING *) AS predicted_target_value,
           COUNT(*) AS value
    FROM TEST_DIGITS
    GROUP BY "target", PREDICTION(NN_ORIG_DIGITS USING *)
    ORDER BY 1, 2;

    ACTUAL_TARGET_VALUE   PREDICTED_TARGET_VALUE   VALUE
    0                     0                        81
    1                     1                        83
    1                     8                        1
    2                     1                        1
    2                     2                        72
    3                     2                        1
    3                     3                        68
    3                     5                        1
    4                     4                        65
    4                     7                        1
    5                     5                        63
    5                     6                        1
    5                     7                        1
    5                     9                        1
    6                     5                        2
    6                     6                        64
    7                     7                        63
    7                     9                        1
    8                     1                        1
    8                     2                        1
    8                     7                        1
    8                     8                        55
    8                     9                        1
    9                     3                        1
    9                     5                        1
    9                     8                        1
    9                     9                        81

    27 rows selected.

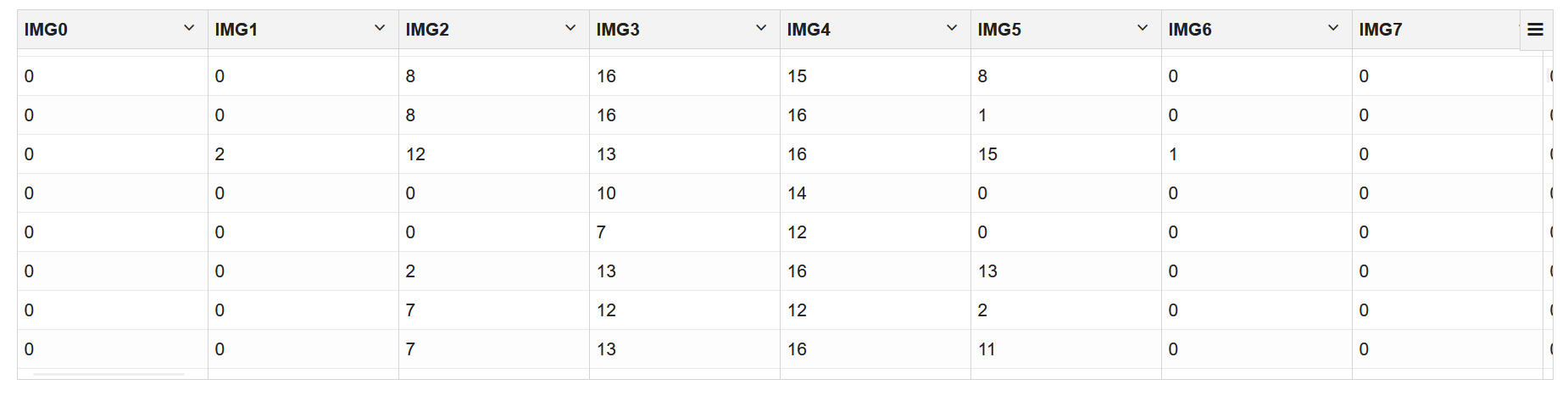

Score

Scoring an NMF model produces data projections in the new feature space. The magnitude of a projection indicates how strongly a record maps to a feature.
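As a small illustration of such projections, the query below scores a few test rows with the SQL functions FEATURE_ID and FEATURE_VALUE. The model name NMF_DIGITS is an assumption, and feature 1 is picked arbitrarily as an example of requesting one specific feature.

```sql
-- For each test image: the feature it maps to most strongly,
-- the value of that projection, and its projection onto feature 1
SELECT TARGET,
       FEATURE_ID(NMF_DIGITS USING *)       AS TOP_FEATURE,
       FEATURE_VALUE(NMF_DIGITS USING *)    AS TOP_VALUE,
       FEATURE_VALUE(NMF_DIGITS, 1 USING *) AS FEATURE_1_VALUE
FROM TEST_DIGITS
FETCH FIRST 10 ROWS ONLY;
```

When the feature ID is omitted, FEATURE_VALUE returns the highest feature value for the row, which pairs with the ID returned by FEATURE_ID.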

In this use case, there are two options to produce projections to compare using the Neural Network model. One is to build a neural network model on the top 16 features of NMF to predict the digits. Another is to build a neural network model by manually selecting the original attributes with the highest coefficients for each feature vector.

For option one, create a new TRAIN and TEST view and then build an NN model.
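The views for option one could be generated as sketched below, with one FEATURE_VALUE column per NMF feature. The names NMF_DIGITS, TRAIN_DIGITS, TRAIN_NMF_DIGITS, TEST_NMF_DIGITS, and FEAT1 through FEAT16 are assumptions, as is the numbering of feature IDs from 1 to 16.

```sql
DECLARE
  v_cols VARCHAR2(4000);
BEGIN
  -- Build ", FEATURE_VALUE(NMF_DIGITS, 1 USING *) AS FEAT1, ..." for 16 features
  FOR i IN 1..16 LOOP
    v_cols := v_cols || ', FEATURE_VALUE(NMF_DIGITS, ' || i
                     || ' USING *) AS FEAT' || i;
  END LOOP;

  -- Projected training data: the target plus the 16 projections
  EXECUTE IMMEDIATE
    'CREATE OR REPLACE VIEW TRAIN_NMF_DIGITS AS SELECT TARGET'
    || v_cols || ' FROM TRAIN_DIGITS';

  -- Projected test data, scored with the same NMF model
  EXECUTE IMMEDIATE
    'CREATE OR REPLACE VIEW TEST_NMF_DIGITS AS SELECT TARGET'
    || v_cols || ' FROM TEST_DIGITS';
END;
/
```

An NN model can then be built on TRAIN_NMF_DIGITS with DBMS_DATA_MINING.CREATE_MODEL2 and scored against TEST_NMF_DIGITS, so that the test rows are projected by an NMF model that never saw them during its build.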

Score with Selected Attributes

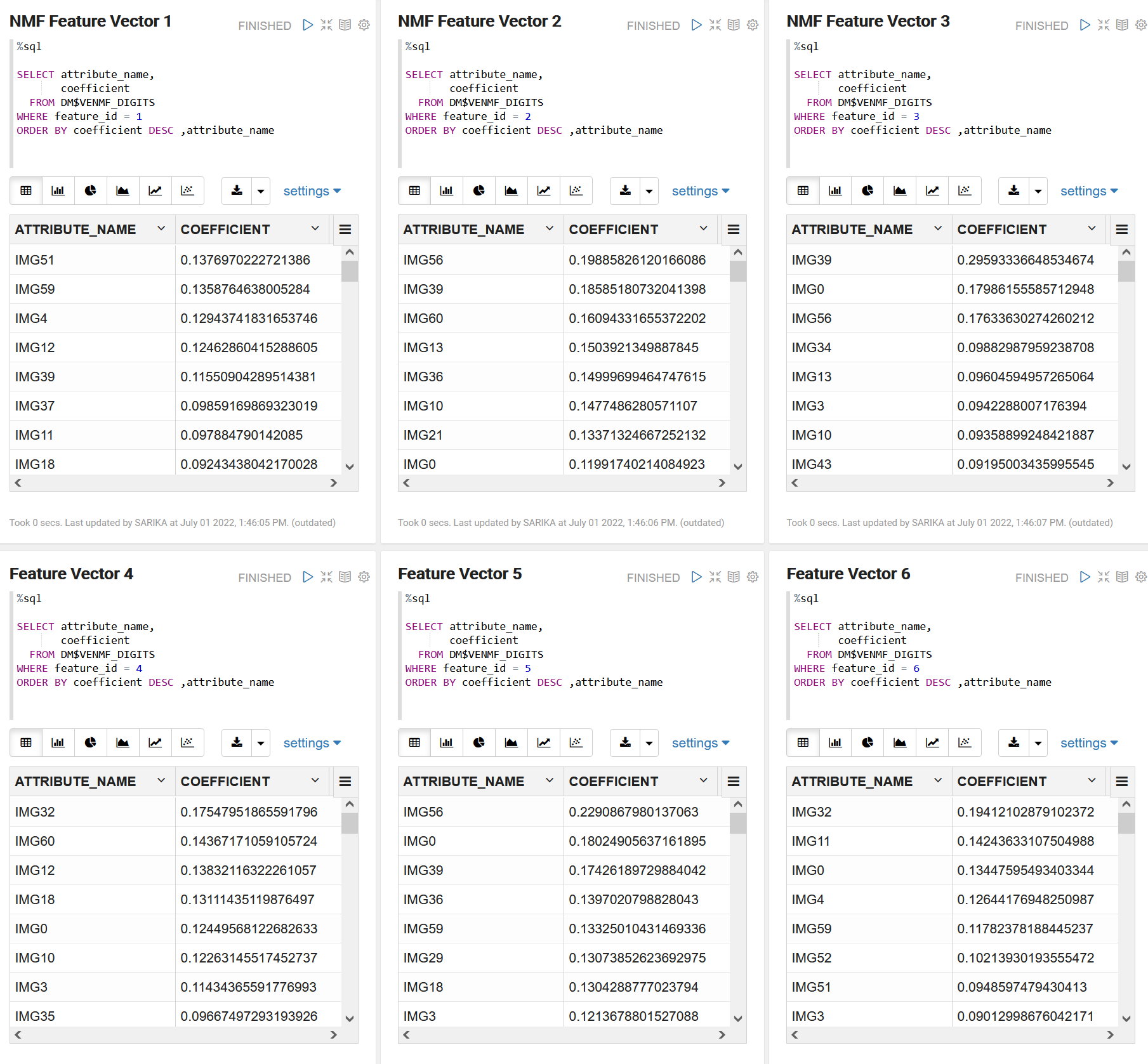

You are creating another Neural Network model by manually selecting the attributes in each extracted feature vector based on their coefficients.

- Feature 1: IMG51, IMG12, IMG59, IMG4, IMG27, IMG28, IMG44, IMG11, IMG37, IMG61, IMG50

- Feature 2: IMG32, IMG39, IMG56, IMG0, IMG20, IMG19

- Feature 3: IMG18, IMG36, IMG26, IMG21, IMG42

- Feature 4: IMG60, IMG3, IMG43, IMG34

- Feature 5: IMG53, IMG13, IMG58, IMG10

- Feature 6: IMG29, IMG35, IMG52

Now create a new TRAIN and TEST view and then build a neural network model.
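The views for the selected attributes could look like the sketch below, which keeps the target and the 33 attributes listed above. The view names TRAIN_SEL_DIGITS and TEST_SEL_DIGITS and the source view TRAIN_DIGITS are assumptions.

```sql
-- Run as a %script paragraph in OML Notebooks, or as a script in SQL Developer.

-- Training data restricted to the manually selected attributes
CREATE OR REPLACE VIEW TRAIN_SEL_DIGITS AS
  SELECT TARGET,
         IMG51, IMG12, IMG59, IMG4, IMG27, IMG28,
         IMG44, IMG11, IMG37, IMG61, IMG50,       -- feature 1
         IMG32, IMG39, IMG56, IMG0, IMG20, IMG19, -- feature 2
         IMG18, IMG36, IMG26, IMG21, IMG42,       -- feature 3
         IMG60, IMG3, IMG43, IMG34,               -- feature 4
         IMG53, IMG13, IMG58, IMG10,              -- feature 5
         IMG29, IMG35, IMG52                      -- feature 6
  FROM TRAIN_DIGITS;

-- Test data with the same columns
CREATE OR REPLACE VIEW TEST_SEL_DIGITS AS
  SELECT TARGET,
         IMG51, IMG12, IMG59, IMG4, IMG27, IMG28,
         IMG44, IMG11, IMG37, IMG61, IMG50,
         IMG32, IMG39, IMG56, IMG0, IMG20, IMG19,
         IMG18, IMG36, IMG26, IMG21, IMG42,
         IMG60, IMG3, IMG43, IMG34,
         IMG53, IMG13, IMG58, IMG10,
         IMG29, IMG35, IMG52
  FROM TEST_DIGITS;
```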

Table 3-1 PCT Accuracy Comparison

| Model | NUM_TEST_DIGITS | PCT_OVERALL_ACCURACY |
|---|---|---|
| NN model 1 with original data as input | 725 | 96 |
| NN model 2 with top 16 features of NMF data | 725 | 79.4483 |
| NN model 3 with original attributes with highest coefficient for each feature vector | 725 | 96.1379 |

You found that the NN model using the 16 NMF feature projections had a lower overall accuracy. However, the NN model using a reduced set of attributes as input (33 of the 64 total attributes, suggested by NMF as the most important) showed a slightly better overall accuracy than the model built on the original data.

You can now use one of these models in your application to recognize handwritten digits on student answer sheets or forms.