Regression Use Case Scenario

A real estate agent approaches you, a data scientist, to provide assistance in evaluating house prices in Boston. The agent requires this information on a daily basis to provide targeted services to clients. Using the Generalized Linear Model algorithm for Regression, you estimate the median value of owner-occupied homes in the Boston area.

Related Content

| Topic | Link |

|---|---|

| OML4SQL GitHub Example | Regression - GLM |

CREATE_MODEL2 Procedure

|

CREATE_MODEL2 Procedure |

| Generic Model Settings | DBMS_DATA_MINING - Model Settings |

| Generalized Linear Model Settings | DBMS_DATA_MINING - Algorithm Settings: Generalized Linear Models |

| Data Dictionary Settings | Oracle Machine Learning Data Dictionary Views |

| Generalized Linear Model - Model Detail Views | Model Detail Views for Generalized Linear Model |

| About Regression | About Regression |

| About Generalized Linear Model (GLM) | About Generalized Linear Models |

Before you start your OML4SQL use case journey, ensure that you have the following:

- Data setDownload the data set from https://github.com/scikit-learn/scikit-learn/blob/master/sklearn/datasets/data/boston_house_prices.csv.

Note:

This data set is used for illustrative purpose only. - Database Select or create database out of the following options:

- Get your FREE cloud account. Go to https://cloud.oracle.com/database and select Oracle Database Cloud Service (DBCS), or Oracle Autonomous Database. Create an account and create an instance. See Autonomous Database Quick Start Workshop.

- Download the latest version of Oracle Database (on premises).

- Machine Learning ToolsDepending on your database selection,

- Use OML Notebooks for Oracle Autonomous Database.

- Install and use Oracle SQL Developer connected to an on-premises database or DBCS. See Installing and Getting Started with SQL Developer.

- Other Requirements

Data Mining Privileges (this is automatically set for ADW). See System Privileges for Oracle Machine Learning for SQL.

Load Data

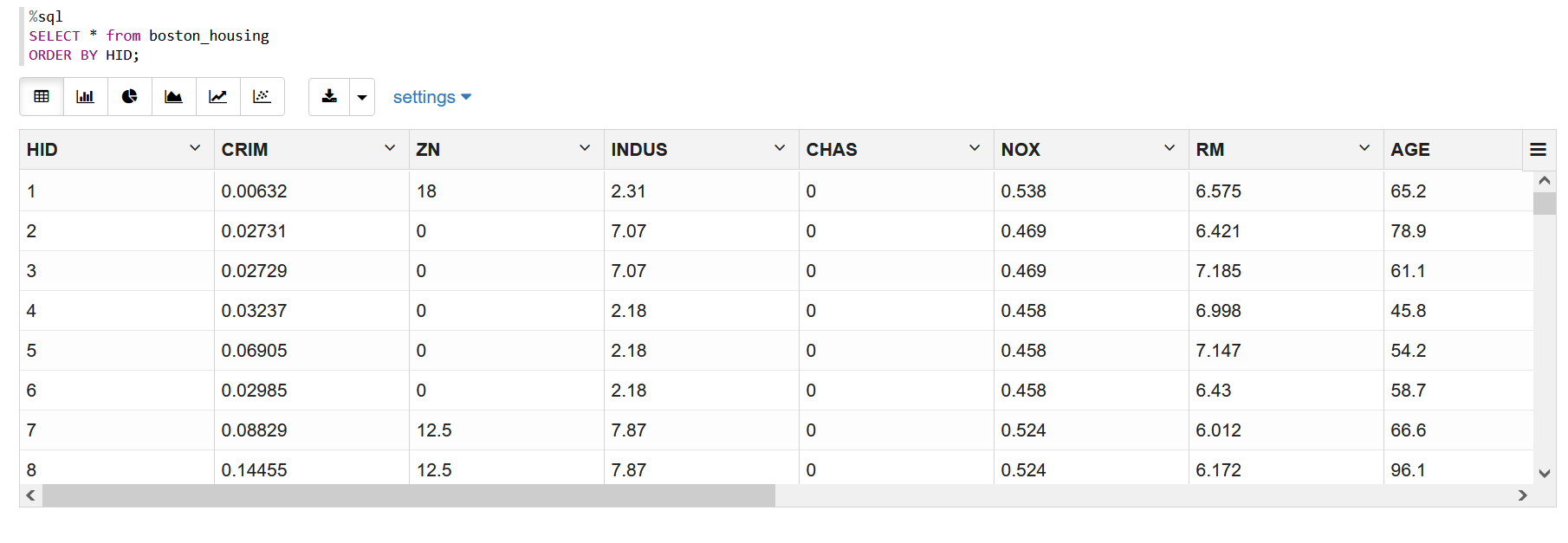

Examine the data set and its attributes. Load the data in your database.

Examine Data

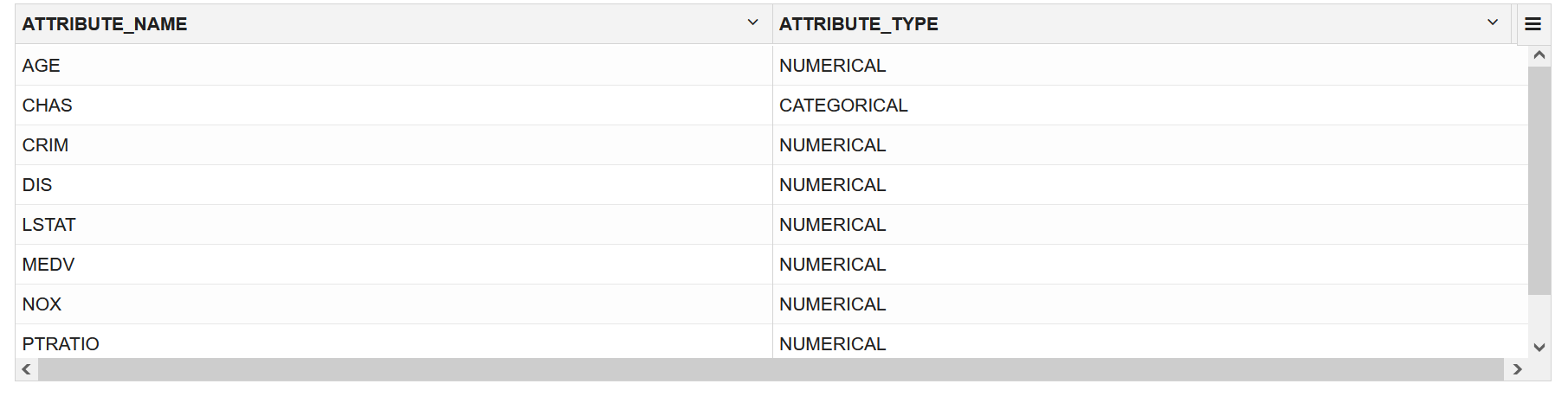

There are 13 attributes in the data set. This is a customized data set that excludes one attribute from the original data set. The following table displays information about the data attributes:

| Attribute Name | Information |

|---|---|

| CRIM | Per capita crime rate by town |

| ZN | The proportion of residential land zoned for lots over 25,000 sq.ft. |

| INDUS | The proportion of non-retail business acres per town |

| CHAS | Charles River dummy variable (= 1 if tract bounds river; 0 otherwise) |

| NOX | Nitric oxides concentration (parts per 10 million) |

| RM | The average number of rooms per dwelling |

| AGE | The proportion of owner-occupied units built before 1940 |

| DIS | Weighted distances to five Boston employment centers |

| RAD | Index of accessibility to radial highways |

| TAX | Full-value property-tax rate per $10,000 |

| PTRATIO | The pupil-teacher ratio by town |

| LSTAT | % lower status of the population |

| MEDV | The median value of owner-occupied homes in $1000’s |

Related Topics

Add a Column

In this data set, no row identifier uniquely identifies each record in the data set. Add a new case_id column. The case_id assists with reproducible results, joining scores for individual customers with other data in, example, scoring data table.

case_id. Here, HID is the case_id.

To add the HID column:

- Open the .csv file in a spreadsheet.

- Delete the first row with 506 and 13. Now, the row with the column names becomes the first row.

- To the left of the data set, add a column.

- Enter HID as the column name.

- In the HID column enter 1 as the first value identifying the first row.

- You will see a + icon in the spreadsheet cell. Drag the + icon right to the bottom till the end of the records.

- Right-click and select Fill Series.

- To remove the column "B" from the data set, select the entire column with the title B by right clicking on the top of the column, and then select Delete.

Import Data

There are various methods to import data into the database. Two methods are explained here. One using SQL Developer (for on-premises) and the other using Object Storage (for Cloud).

Import Data into the Database (On premises)

To access the data set, import the modified data set into the database using SQL Developer.

- Launch SQL Developer on your system.

- Import the modified .csv file. See Tables.

- Set House ID (HID) as a primary key. This column identifies each record and helps in retrieving information about a specific record. The HID column helps when you join tables or views. See Primary Key Constraint.

Import Data to the Cloud

If you are using a cloud account, one of the methods of importing the data is through Object Storage. Upload the data set to an Object Storage. The Object Storage URI will be used in another procedure.

You can load data into your Oracle Autonomous Database (Autonomous Data Warehouse [ADW] or Autonomous Transaction Processing [ATP]) using Oracle Database tools, and Oracle and 3rd party data integration tools. You can load data:

- from local files in your client computer, or

- from files stored in a cloud-based object store

Follow the steps to upload your data file to the Object Storage bucket.

- Login to your cloud account.

- Click the left-side hamburger menu and select Storage from the menu.

- Select Buckets from the Object Storage & Archive Storage option.

- Select the compartment in which you want to upload the data.

- Click Create Bucket.

- Enter a name for your bucket. For example, Bucket1. Leave the rest of the fields as default.

- Click Create.

- Click on the bucket that you created. Scroll down and click Upload under Objects.

- Leave the Object Name Prefix field black. Click select files to navigate to the data file that you want to upload or drag and drop the data file. In this use case, select the modified .csv file.

- Click Upload. The data file appears under Objects.

- Click the ellipses on the right side of the data file to view the menu. Click View Object Details.

- Copy the URL PATH (URI) to a text file. This URI is used in the

DBMS_CLOUD.COPY_DATAprocedure.

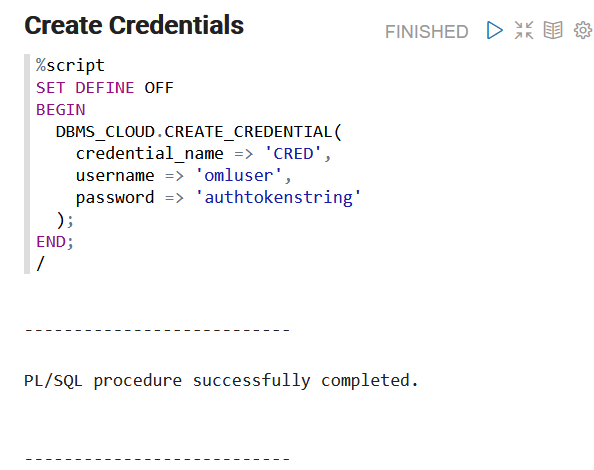

Create Auth Token

The Auth Token is required in the DBMS_CLOUD.CREATE_CREDENTIAL procedure. You can generate the Auth Token in your cloud account.

- Login into your ADW Cloud account.

- Hover your mouse cursor over the human figure icon at the top right of the console and click User Settings from the drop-down menu.

- Click Auth Tokens under Resources on the left of the console.

- Click Generate Token. A pop-up dialog appears.

- Enter a description (optional).

- Click Generate Token.

- Copy the generated token to a text file. The token does not appear again.

- Click Close.

Create a Table

Create a table called BOSTON_HOUSING. This table is used in DBMS_CLOUD.COPY_DATA procedure to access the data set.

Enter the following code in a new pare of the notebook that you created and run the notebook.

%sql

CREATE table boston_housing

(

HID NUMBER NOT NULL,

CRIM NUMBER,

ZN NUMBER,

INDUS NUMBER,

CHAS VARCHAR2(32),

NOX NUMBER,

RM NUMBER,

AGE NUMBER,

DIS NUMBER,

RAD NUMBER,

TAX NUMBER,

PTRATIO NUMBER,

LSTAT NUMBER,

MEDV NUMBER

);Load Data in the Table

Load the data set stored in object storage to the BOSTON_HOUSING table.

%script

BEGIN

DBMS_CLOUD.COPY_DATA(

table_name =>'BOSTON_HOUSING',

credential_name =>'CRED',

file_uri_list =>'https://objectstorage.us-phoenix-1.oraclecloud.com/n/namespace-string/b/bucketname/o/filename.csv',

format => json_object('type' value 'CSV', 'skipheaders' value 1)

);

END;table_name: is the target table’s name.credential_name: is the name of the credential created earlier.file_uri_list: is a comma delimited list of the source files you want to load.format: defines the options you can specify to describe the format of the source file, including whether the file is of type text, ORC, Parquet, or Avro.

In this example, namespace-string is the Oracle Cloud Infrastructure object storage namespace and bucketname is the storage bucket name that you created earlier (for example, Bucket1), and filename.csv is the modified .csv file name that you uploaded to the storage bucket.

Related Topics

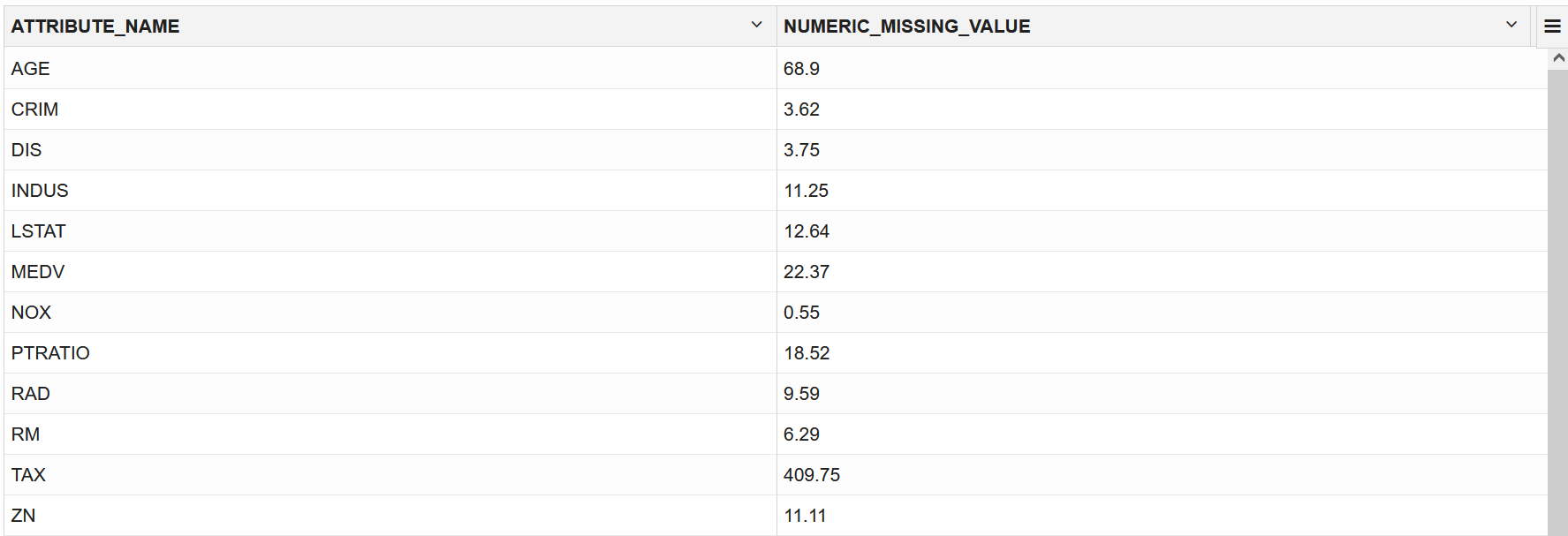

Explore Data

Explore the data to understand and assess the quality of the data. At this stage assess the data to identify data types and noise in the data. Look for missing values and numeric outlier values.

The following steps help you with the exploratory analysis of the data:

Related Topics

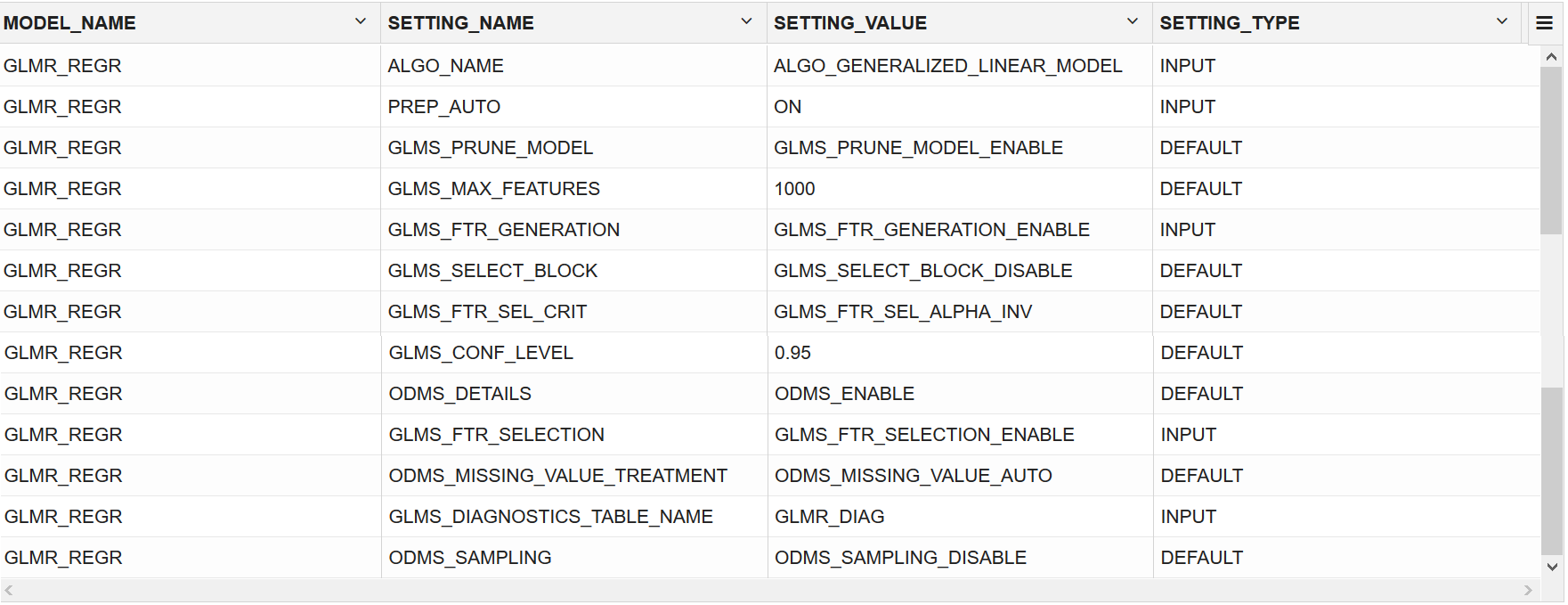

Build Model

Build your model using the training data set. Use the DBMS_DATA_MINING.CREATE_MODEL2 procedure to build your model and specify model settings.

Algorithm Selection

Before you build a model, choose the suitable algorithm. You can choose one of the following algorithms to solve a regression problem:- Extreme Gradient Boosting

- Generalized Linear Model

- Neural Network

- Support Vector Machine

When you want to understand the data set, you always start from a simple and easy baseline model. The Generalized Linear Model algorithm is the right choice because it is simple and easy to interpret since it fits a linear relationship between the feature and the target. You can get an initial understanding of a new data set from the result of the linear model.

The following steps guide you to split your data and build your model with the selected algorithm.

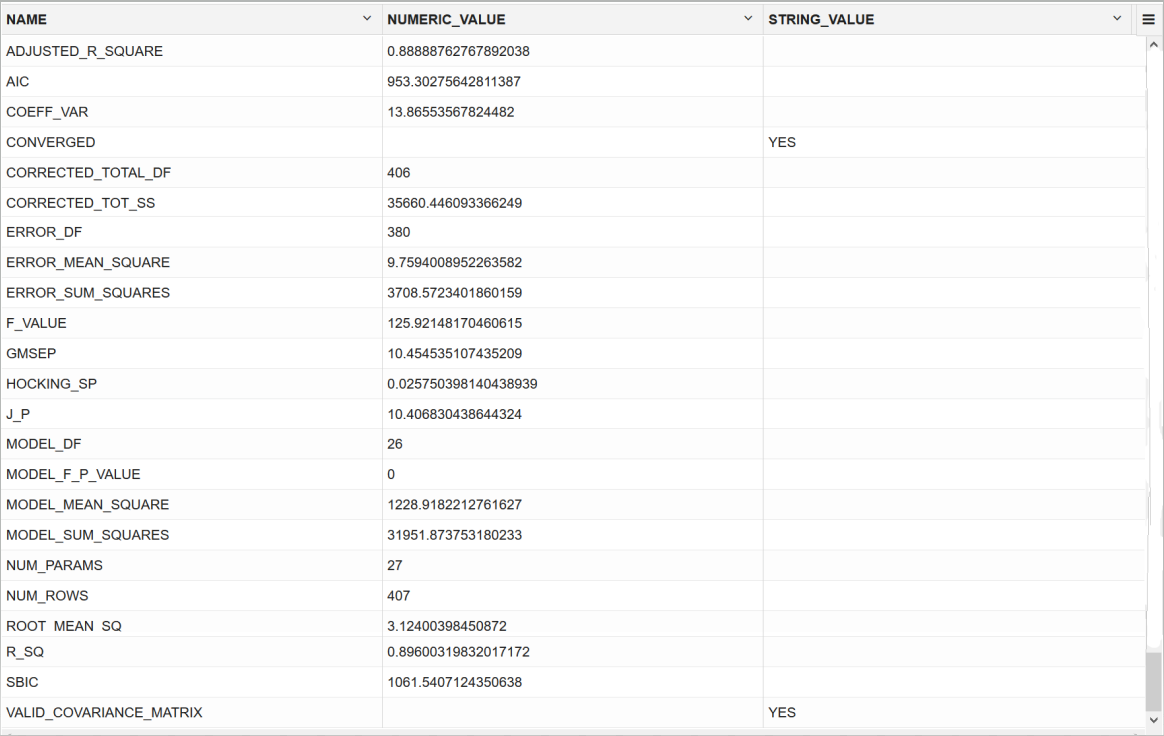

Evaluate

Evaluate your model by viewing diagnostic metrics and performing quality checks.

Sometimes querying dictionary views and model detail views is sufficient to measure your model's performance. However, you can evaluate your model by computing test metrics such as Mean Absolute Error (MAE), Root Mean Squared Error (RMSE), confusion matrix, lift statistics, cost matrix, and so on. For Association Rules, you can inspect various rules to see if they reveal new insights for item dependencies (antecedent itemset implying consequent) or for unexpected relationships among items.

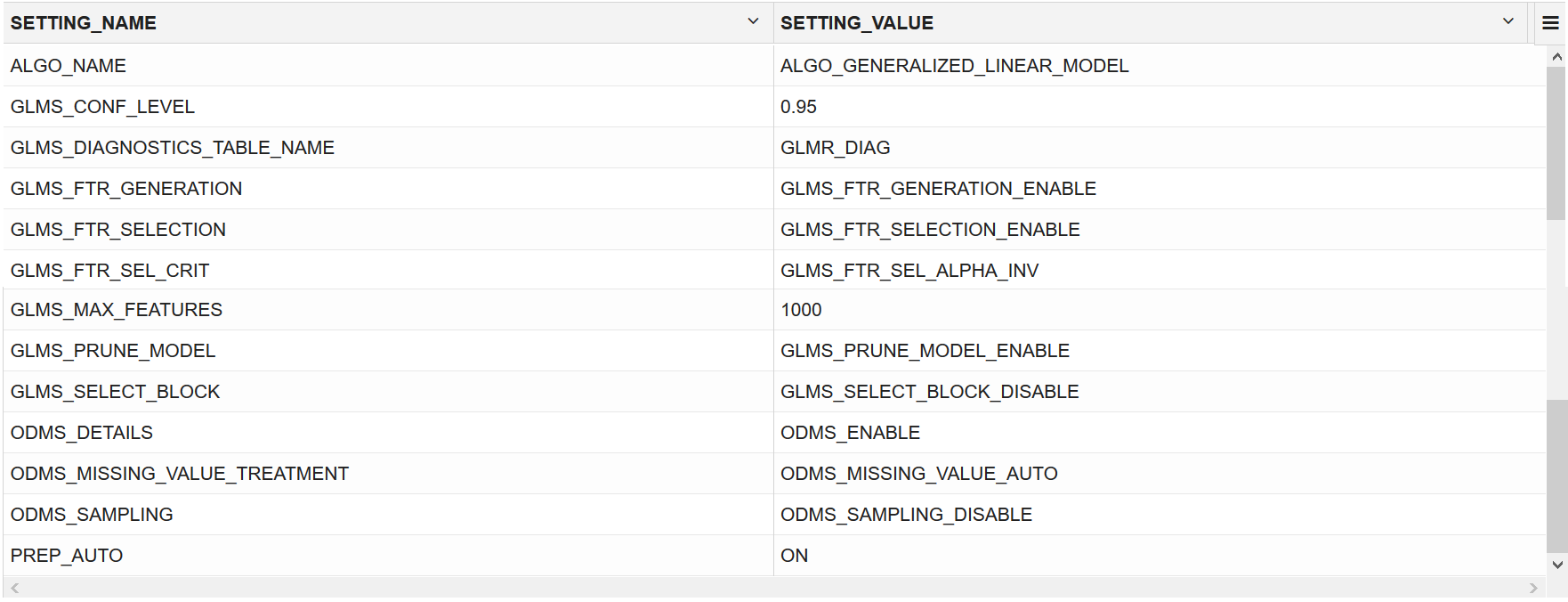

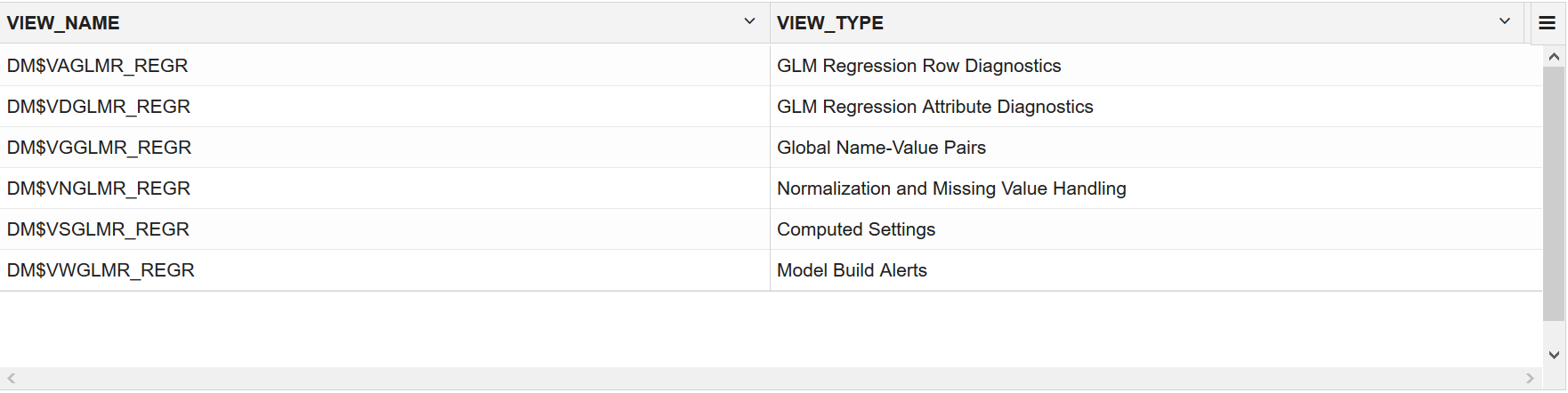

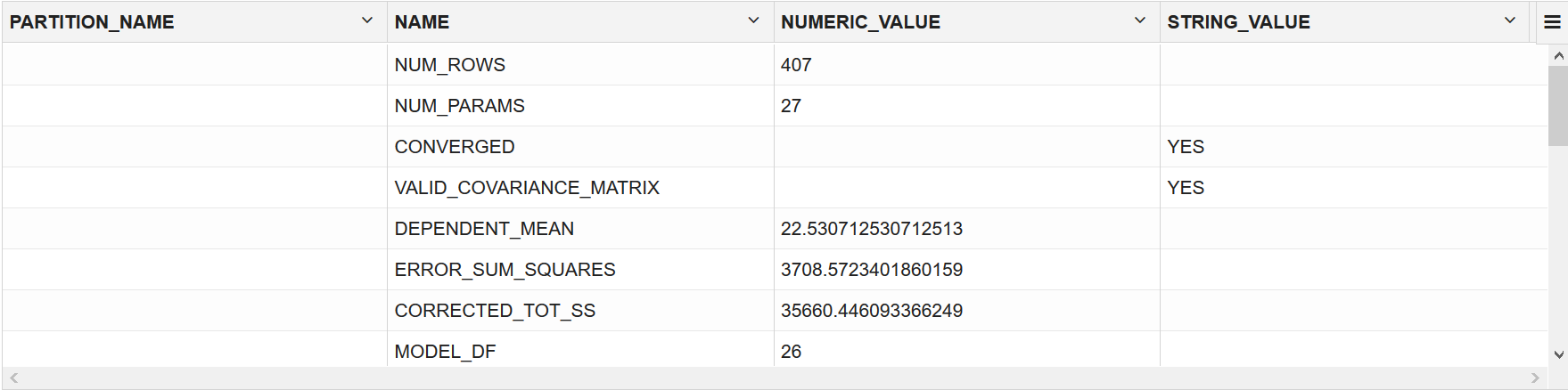

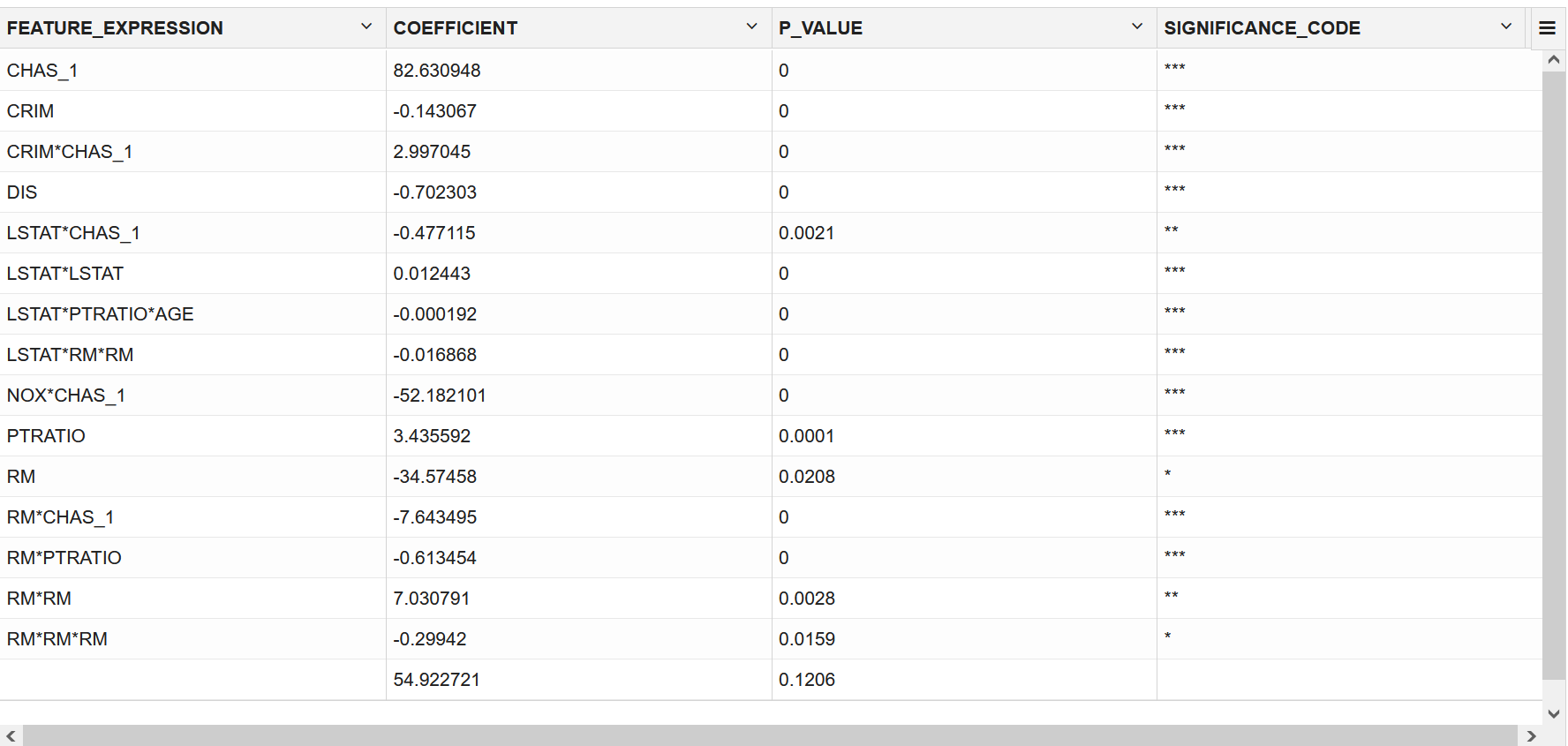

Dictionary and Model Views

To obtain information about the model and view model settings, you can query data dictionary views and model detail views. Specific views in model detail views display model statistics which can help you evaluate the model.

The data dictionary views for Oracle Machine Learning are listed in the following table. A database administrator (DBA) and USER versions of the views are also available.

| View Name | Description |

|---|---|

| ALL_MINING_MODELS | Provides information about all accessible machine learning models |

| ALL_MINING_MODEL_ATTRIBUTES | Provides information about the attributes of all accessible machine learning models |

| ALL_MINING_MODEL_SETTINGS | Provides information about the configuration settings for all accessible machine learning models |

| ALL_MINING_MODEL_VIEWS | Provides information about the model views for all accessible machine learning models |

| ALL_MINING_MODEL_XFORMS | Provides the user-specified transformations embedded in all accessible machine learning models. |

Model detail views are specific to the algorithm. You can obtain more insights about the model you created by viewing the model detail views. The names of model detail views begin with DM$xx where xx corresponds to the view prefix. See Model Detail Views.

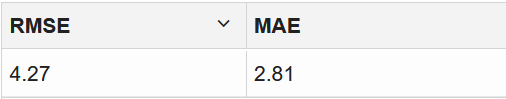

Test Your Model

In this use case, you are evaluating a regression model by computing Root Mean Square Error (RMSE) and Mean Absolute Error Mean (MAE) on the test data with known target values and comparing the predicted values with the known values.

For this use case, you compute Root Mean Square Error (RMSE) and Mean Absolute Error Mean (MAE) values. The RMSE and MAE are popular regression statistics. RMSE is an estimator for predictive models. The score averages the residuals for each case to yield a single indicator of model error. Mean absolute error is useful for understanding how close overall the predictions were to actual values. A smaller score means predictions were more accurate.

The following steps computes the error metrics for your model.

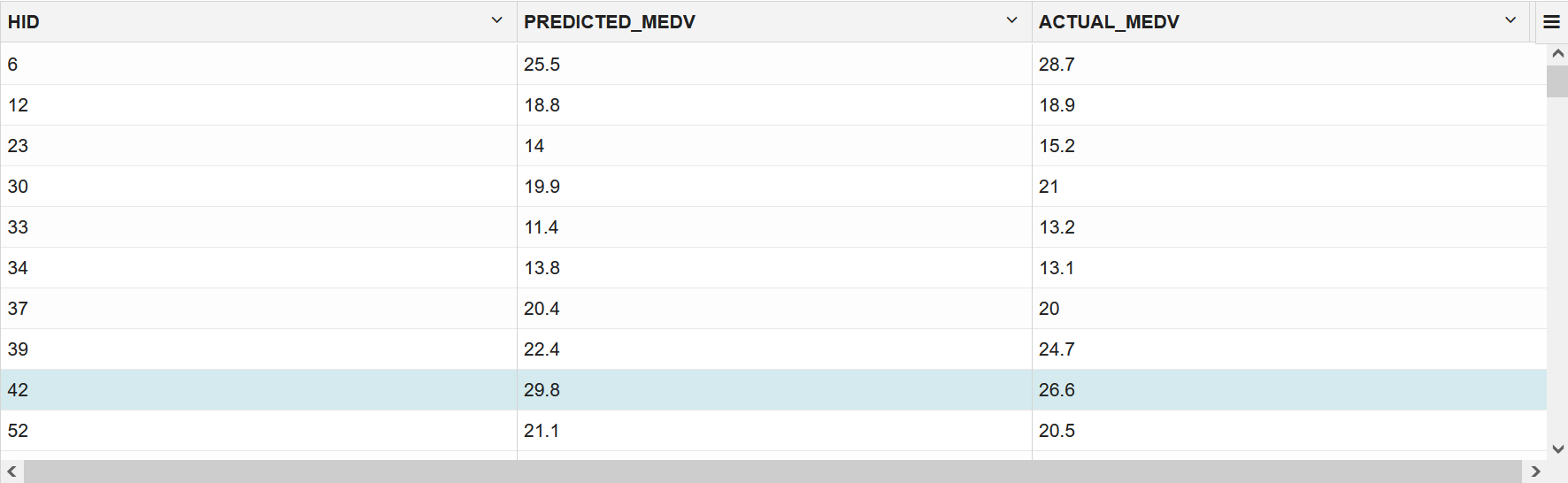

Score

Scoring involves applying the model to the target data. Use PREDICTION query to predict the MEDV value on the test data.

The following step scores the test data comparing with the original data.