Classification Use Case Scenario

You are working in a retail chain company that sells some products. To better target their marketing materials, they need to identify customers who are likely to purchase a home theater package. To resolve this, you are using the Random Forest algorithm to identify the customers.

Related Content

| Topic | Link |

|---|---|

| OML4SQL GitHub Example | Classification - Random Forest |

CREATE_MODEL2 Procedure

|

CREATE_MODEL2 Procedure |

| Generic Model Settings | DBMS_DATA_MINING - Model Settings |

| Random Forest Settings | DBMS_DATA_MINING - Algorithm Settings: Random Forest |

| Data Dictionary Settings | Oracle Machine Learning Data Dictionary Views |

| Random Forest - Model Detail Views | Model Detail Views for Random Forest |

| About Classification | About Classification |

| About Random Forest (RF) | About Random Forest |

Before you start your OML4SQL use case journey, ensure that you have the following:

- Data Set

The data set used for this use case is from the SH schema. The SH schema can be readily accessed in Oracle Autonomous Database. For on-premises databases, the schema is installed during the installation or can be manually installed by downloading the scripts. See Installing the Sample Schemas.

- Database

Select or create database out of the following options:

- Get your FREE cloud account. Go to https://cloud.oracle.com/database and select Oracle Database Cloud Service (DBCS), or Oracle Autonomous Database. Create an account and create an instance. See Autonomous Database Quick Start Workshop.

- Download the latest version of Oracle Database (on premises).

- Machine Learning Tools

Depending on your database selection,

- Use OML Notebooks for Oracle Autonomous Database.

- Install and use Oracle SQL Developer connected to an on-premises database or DBCS. See Installing and Getting Started with SQL Developer.

- Other Requirements

Data Mining Privileges (this is automatically set for ADW). See System Privileges for Oracle Machine Learning for SQL.

Related Topics

Load Data

Access the data set from the SH Schema and explore the data to understand the attributes.

Remember:

The data set used for this use case is from the SH schema. The SH schema can be readily accessed in Oracle Autonomous Database. For on-premises databases, the schema is installed during the installation or can be manually installed by downloading the scripts. See Installing the Sample Schemas.

- Access the data.

- Examine the various attributes or columns of the data set.

- Assess data quality (by exploring the data).

Access Data

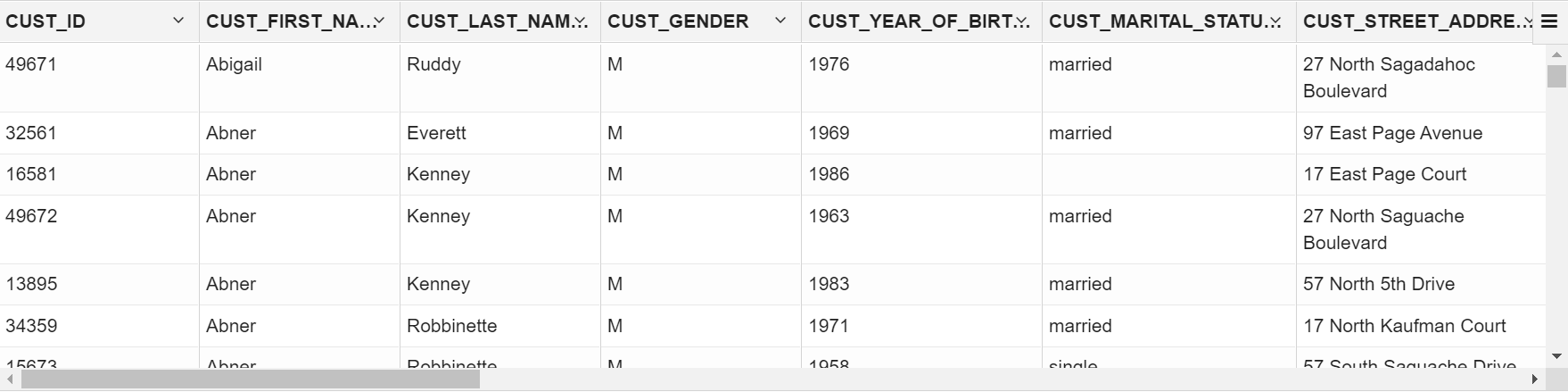

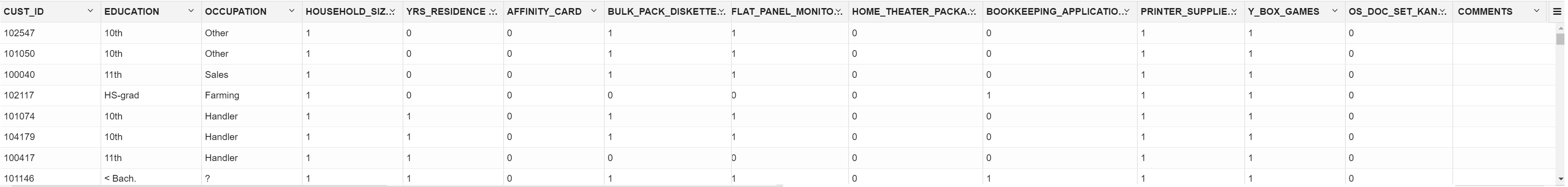

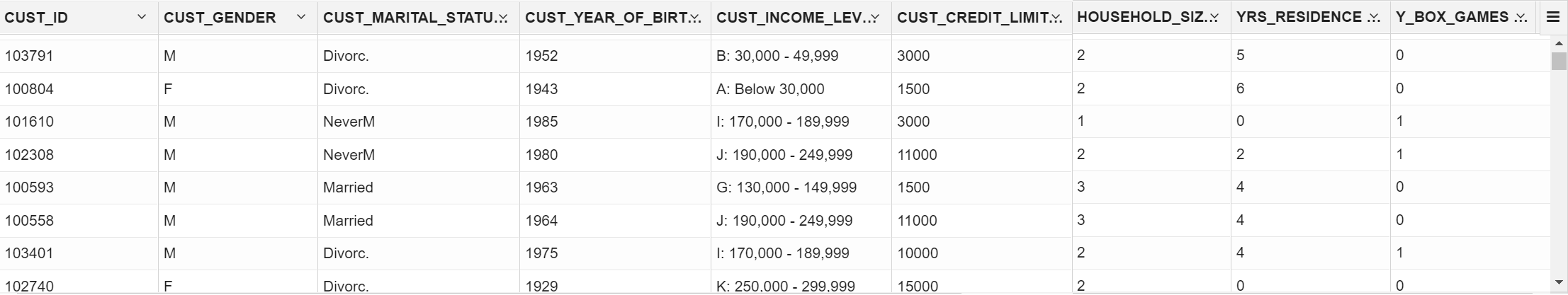

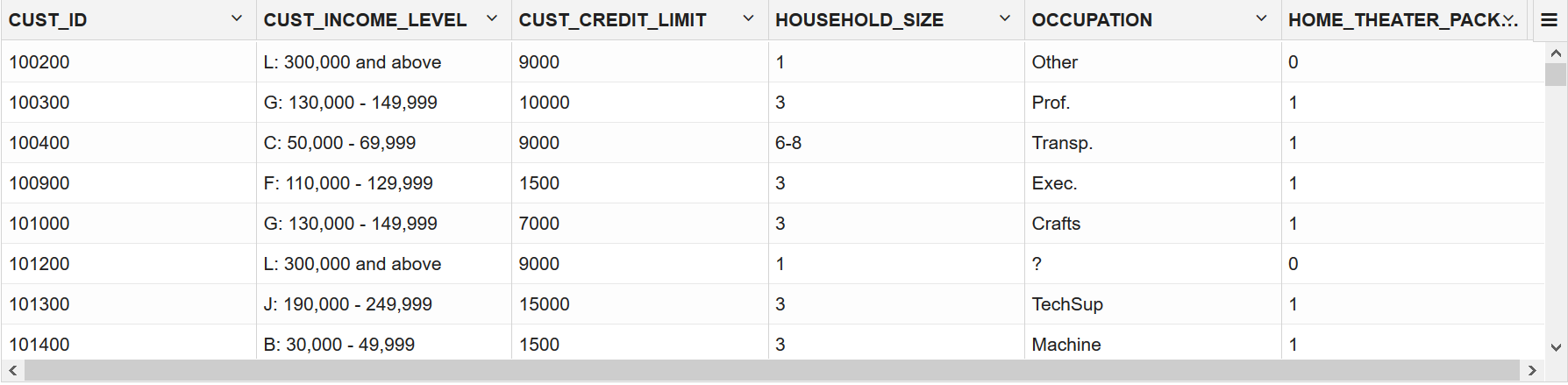

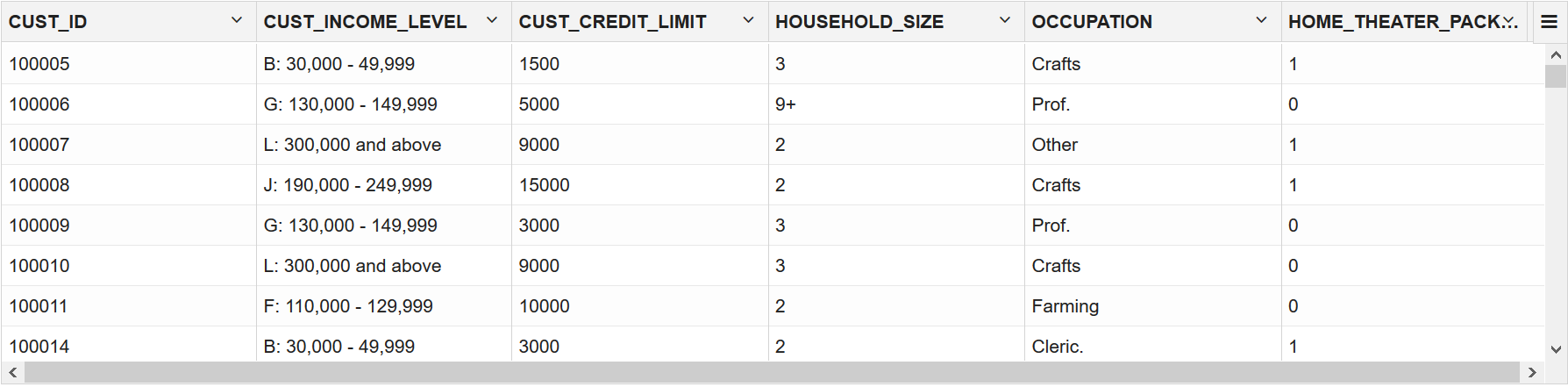

You will use CUSTOMERS and SUPPLEMENTARY_DEMOGRAPHICS table data from the SH schema.

Examine Data

The following table displays information about the attributes from SUPPLEMENTARY_DEMOGRAPHICS:

| Attribute Name | Information |

|---|---|

CUST_ID |

The ID of the customer |

EDUCATION |

Educational information of the customer |

OCCUPATION |

Occupation of the customer |

HOUSEHOLD_SIZE |

People per house |

YRS_RESIDENCE |

Number of years of residence |

AFFINITY_CARD |

Whether the customer holds an affinity card |

BULK_PACK_DISKETTES |

Product. Indicates whether the customer already owns the product. 1 means Yes. 0 means No |

FLAT_PANEL_MONITOR |

Product. Indicates whether the customer already owns the product. 1 means Yes. 0 means No |

HOME_THEATER_PACKAGE |

Product. Indicates whether the customer already owns the product. 1 means Yes. 0 means No |

BOOKKEEPING_APPLICATION |

Product. Indicates whether the customer already owns the product. 1 means Yes. 0 means No |

PRINTER_SUPPLIES |

Product. Indicates whether the customer already owns the product. 1 means Yes. 0 means No |

Y_BOX_GAMES |

Product. Indicates whether the customer already owns the product. 1 means Yes. 0 means No |

OS_DOC_SET_KANJI |

Product. Indicates whether the customer already owns the product. 1 means Yes. 0 means No |

COMMENTS |

Product. Indicates whether the customer already owns the product. 1 means Yes. 0 means No |

Explore Data

Explore the data to understand and assess the quality of the data. At this stage assess the data to identify data types and noise in the data. Look for missing values and numeric outlier values.

Assess Data Quality

To assess the data, first, you must be able to view the data in your database. For this reason, you will use SQL statements to query the SH.CUSTOMERS and the SH.SUPPLEMENTARY_DEMOGRAPHICS table.

SH schema as described.

Note:

Each record in the database is called a case and each case is identified by acase_id. In this use case, CUST_ID is the case_id.

This completes the data exploration stage. OML supports Automatic Data Preparation (ADP). ADP is enabled through the model settings. When ADP is enabled, the transformations required by the algorithm are performed automatically and embedded in the model. This step is done during the Build Model stage. The commonly used methods of data preparation are binning, normalization, and missing value treatment.

Related Topics

Build Model

Build your model using the training data set. Use the DBMS_DATA_MINING.CREATE_MODEL2 procedure to build your model and specify the model settings.

For a supervised learning, like Classification, before creating the model, split the data into training and test data. Although you can use the entire data set to build a model, it is difficult to validate the model unless there are new data sets available. Therefore, to evaluate the model and to accurately assess the performance of the model on the same data, you generally split or separate the data into training and test data. You use the training data set to train the model and then use the test data set to test the accuracy of the model by running prediction queries. The testing data set already contains known values for the attribute that you want to predict. It is thus easy to determine whether the predictions of the model are correct.

Algorithm Selection

Before you build a model, choose the suitable algorithm. You can choose one of the following algorithms to solve a classification problem:

- Decision Tree

- Explicit Semantic Analysis (ESM)

- Generalized Linear Model (GLM)

- Naive Bayes

- Random Forest

- Support Vector Machine (SVM)

- XGBoost

From the above algorithms, ESM is more about Natural Language Processing (NLP) and text mining. ESM does not apply to this use case and data. If you were to select a relatively simple linear model like GLM, the prediction accuracy can be further improved by the Random Forest algorithm. Random Forest is an ensemble method that builds multiple decision trees on subsets of the data re-sampled at each time (bagging). This avoids the overfitting for a single decision tree. The random forest model is a widely used ensemble method that is known to have higher accuracy than linear models. Thus, Random Forest is selected for this use case.

For this use case, split the data into 60/40 as training and test data. You build the model using the training data and once the model is built, score the test data using the model.

The following steps guide you to split your data and build your model with the selected algorithm.

Evaluate

Evaluate your model by viewing diagnostic metrics and performing quality checks.

Sometimes querying dictionary views and model detail views is sufficient to measure your model's performance. However, you can evaluate your model by computing test metrics such as Mean Absolute Error (MAE), Root Mean Squared Error (RMSE), confusion matrix, lift statistics, cost matrix, and so on. For Association Rules, you can inspect various rules to see if they reveal new insights for item dependencies (antecedent itemset implying consequent) or for unexpected relationships among items.

Dictionary and Model Views

To obtain information about the model and view model settings, you can query data dictionary views and model detail views. Specific views in model detail views display model statistics which can help you evaluate the model.

The data dictionary views for Oracle Machine Learning are listed in the following table. A database administrator (DBA) and USER versions of the views are also available.

| View Name | Description |

|---|---|

| ALL_MINING_MODELS | Provides information about all accessible machine learning models |

| ALL_MINING_MODEL_ATTRIBUTES | Provides information about the attributes of all accessible machine learning models |

| ALL_MINING_MODEL_SETTINGS | Provides information about the configuration settings for all accessible machine learning models |

| ALL_MINING_MODEL_VIEWS | Provides information about the model views for all accessible machine learning models |

| ALL_MINING_MODEL_XFORMS | Provides the user-specified transformations embedded in all accessible machine learning models. |

Model detail views are specific to the algorithm. You can obtain more insights about the model you created by viewing the model detail views. The names of model detail views begin with DM$xx where xx corresponds to the view prefix. See Model Detail Views.

The following steps help you to view different dictionary views and model detail views.

Related Topics

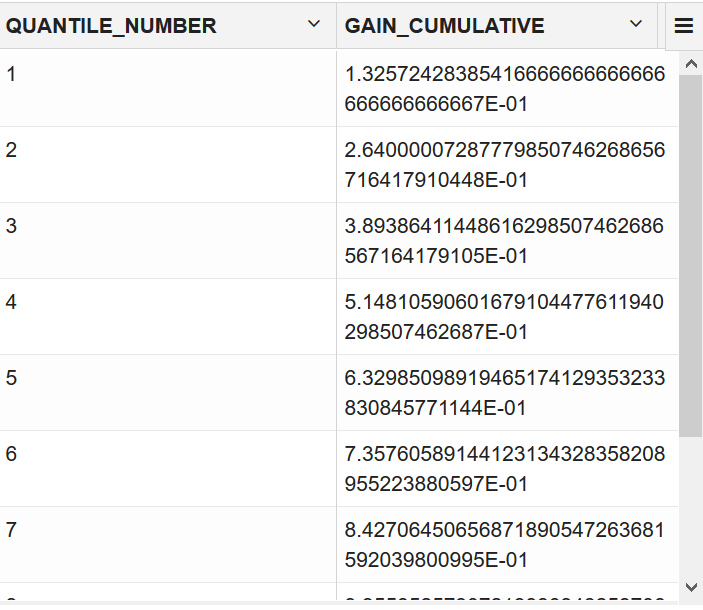

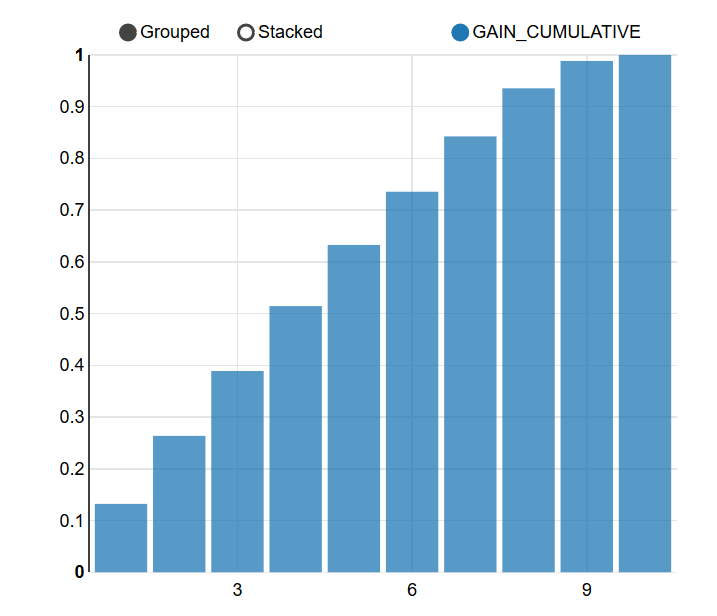

Test Your Model

In this use case, you are evaluating a classification model by computing Lift and Confusion Matrix on the test data with known target values and comparing the predicted values with the known values.

Lift measures the degree to which the predictions of a classification model are better than randomly-generated predictions. Lift can be understood as a ratio of two percentages: the percentage of correct positive classifications made by the model to the percentage of actual positive classifications in the test data.

A confusion matrix displays the number of correct and incorrect predictions made by the model compared with the actual classifications in the test data. The matrix is n-by-n, where n is the number of classes.

Related Topics

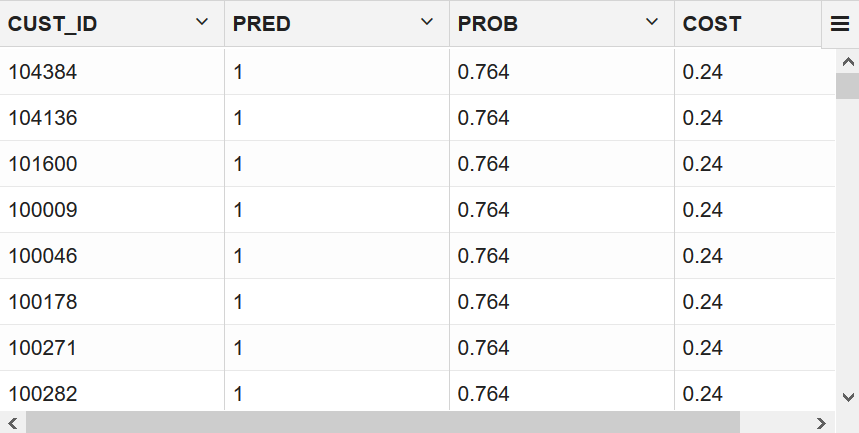

Score

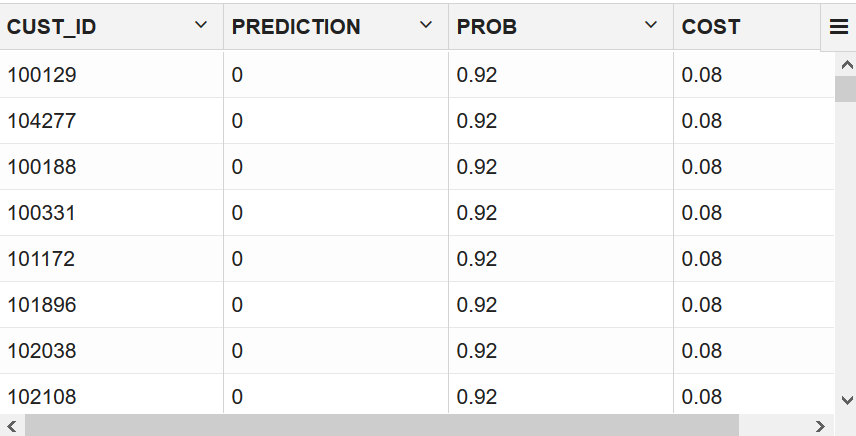

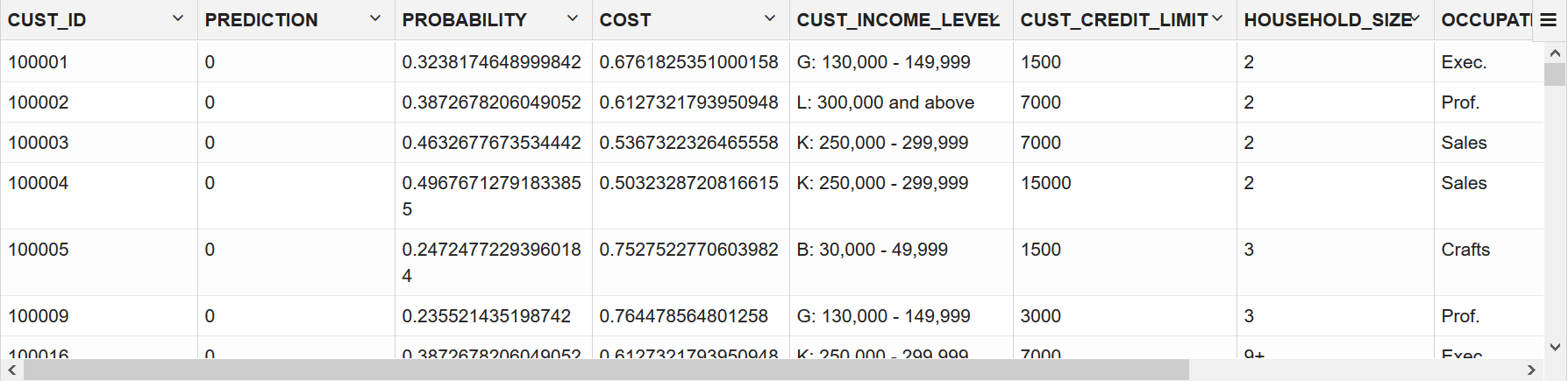

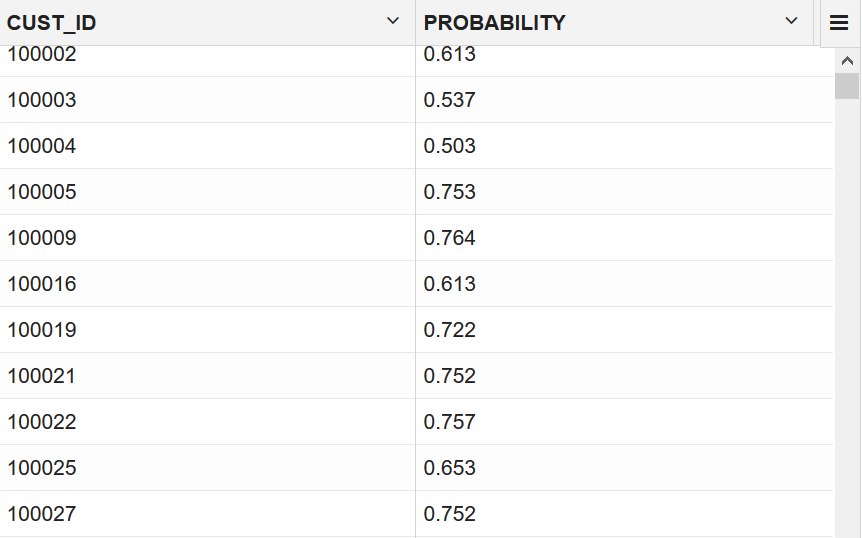

You are ready to predict the likely customers for the HOME_THEATER_PACKAGE responders. For classification problems, you can use PREDICTION, PREDICTION_PROBABILITY, or use analytic syntax to arrive at predictions.

HOME_THEATER_PACKAGE. This prediction helps to promote and offer home theater package to the target customers.