Sharding Methods

Learn about the sharding methods supported by Oracle Sharding, how to choose a method, and how to use subpartitioning.

System-Managed Sharding

System-managed sharding is a sharding method which does not require the user to specify mapping of data to shards. Data is automatically distributed across shards using partitioning by consistent hash. The partitioning algorithm evenly and randomly distributes data across shards.

The distribution used in system-managed sharding is intended to eliminate hot spots and provide uniform performance across shards. Oracle Sharding automatically maintains the balanced distribution of chunks when shards are added to or removed from a sharded database.

Consistent hash is a partitioning strategy commonly used in scalable distributed systems. It is different from traditional hash partitioning. With traditional hashing, the bucket number is calculated as HF(key) % N where HF is a hash function and N is the number of buckets. This approach works fine if N is constant, but requires reshuffling of all data when N changes.

More advanced algorithms, such as linear hashing, do not require rehashing of the entire table to add a hash bucket, but they impose restrictions on the number of buckets, such as the number of buckets can only be a power of 2, and on the order in which the buckets can be split.

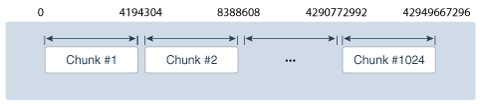

The implementation of consistent hashing used in Oracle Sharding avoids these limitations by dividing the possible range of values of the hash function (for example. from 0 to 232) into a set of N adjacent intervals, and assigning each interval to a chunk , as shown in the figure below. In this example, the sharded database contains 1024 chunks, and each chunk gets assigned a range of 222 hash values. Therefore partitioning by consistent hash is essentially partitioning by the range of hash values.

Figure 2-6 Ranges of Hash Values Assigned to Chunks

Description of "Figure 2-6 Ranges of Hash Values Assigned to Chunks"

Assuming that all of the shards have the same computing power, an equal number of chunks is assigned to each shard in the sharded database. For example, if 1024 chunks are created in a sharded database that contains 16 shards, each shard will contain 64 chunks.

In the event of resharding, when shards are added to or removed from a sharded database, some of the chunks are relocated among the shards to maintain an even distribution of chunks across the shards. The contents of the chunks does not change during this process; no rehashing takes place.

When a chunk is split, its range of hash values is divided into two ranges, but nothing needs to be done for the rest of the chunks. Any chunk can be independently split at any time.

All of the components of a sharded database that are involved in directing connection requests to shards maintain a routing table that contains a list of chunks hosted by each shard and ranges of hash values associated with each chunk. To determine where to route a particular database request, the routing algorithm applies the hash function to the provided value of the sharding key, and maps the calculated hash value to the appropriate chunk, and then to a shard that contains the chunk.

The number of chunks in a sharded database with system-managed sharding can be specified when the shard catalog is created. If not specified, the default value, 120 chunks per shard, is used. Once a sharded database is deployed, the number of chunks can only be changed by splitting chunks.

Before creating a sharded table partitioned by consistent hash, a set of tablespaces

(one tablespace per chunk) has to be created to store the table partitions. The

tablespaces are automatically created by processing the SQL statement, CREATE

TABLESPACE SET.

All of the tablespaces in a tablespace set have the same physical attributes and can only contain Oracle Managed Files (OMF). In its simplest form, the CREATE TABLESPACE SET statement has only one parameter, the name of the tablespace set, for example:

CREATE TABLESPACE SET ts1;In this case each tablespace in the set contains a single OMF file with default attributes. To customize tablespace attributes, the USING TEMPLATE clause (shown in the example below) is added to the statement. The USING TEMPLATE clause specifies attributes that apply to each tablespace in the set.

CREATE TABLESPACE SET ts1

USING TEMPLATE

(

DATAFILE SIZE 10M

EXTENT MANAGEMENT LOCAL UNIFORM SIZE 256K

SEGMENT SPACE MANAGEMENT AUTO

ONLINE

)

;After a tablespace set has been created, a table partitioned by consistent hash can be created with partitions stored in the tablespaces that belong to the set. The CREATE TABLE statement might look as follows:

CREATE SHARDED TABLE customers

( cust_id NUMBER NOT NULL

, name VARCHAR2(50)

, address VARCHAR2(250)

, location_id VARCHAR2(20)

, class VARCHAR2(3)

, signup DATE

, CONSTRAINT cust_pk PRIMARY KEY(cust_id)

)

PARTITION BY CONSISTENT HASH (cust_id)

PARTITIONS AUTO

TABLESPACE SET ts1

;PARTITIONS AUTO in this statement means that the number of partitions is automatically set to the number of tablespaces in the tablespace set ts1 (which is equal to the number of chunks) and each partition will be stored in a separate tablespace.

Each tablespace in a tablespace set belongs to a distinct chunk. In the other words, a chunk can contain only one tablespace from a given tablespace set. However, the same tablespace set can be used for multiple tables that belong to the same table family. In this case, each tablespace in the set will store multiple partitions, one from each table.

Alternatively, each table in a table family can be stored in a separate tablespace set. In this case, a chunk contains multiple tablespaces, one from each tablespace set with each tablespace storing a single partition.

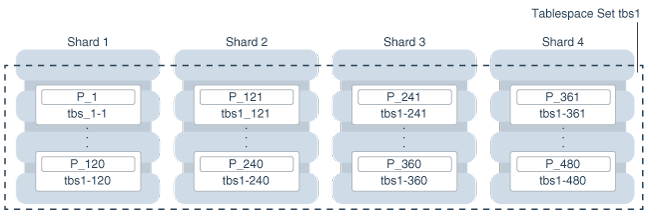

The following figure illustrates the relationship between partitions, tablespaces, and shards for a use case with a single sharded table. In this case, each chunk contains a single tablespace, and each tablespace stores a single partition.

Note:

The sharding method is specified in theGDSCTL CREATE SHARDCATALOG command and cannot be changed later.

User-Defined Sharding

User-defined sharding lets you explicitly specify the mapping of data to individual shards. It is used when, because of performance, regulatory, or other reasons, certain data needs to be stored on a particular shard, and the administrator needs to have full control over moving data between shards.

For a user-defined sharded database, two replication schemes are supported: Oracle Data Guard or Oracle Active Data Guard. User-defined sharding is not supported where Oracle GoldenGate is used as the replication method.

Another advantage of user-defined sharding is that, in case of planned or unplanned outage of a shard, the user knows exactly what data is not available. The disadvantage of user-defined sharding is the need for the database administrator to monitor and maintain balanced distribution of data and workload across shards.

With user-defined sharding, a sharded table can be partitioned by range or list. The CREATE TABLE syntax for a sharded table is not very different from the syntax for a regular table, except for the requirement that each partition should be stored in a separate tablespace.

CREATE SHARDED TABLE accounts

( id NUMBER

, account_number NUMBER

, customer_id NUMBER

, branch_id NUMBER

, state VARCHAR(2) NOT NULL

, status VARCHAR2(1)

)

PARTITION BY LIST (state)

( PARTITION p_northwest VALUES ('OR', 'WA') TABLESPACE ts1

, PARTITION p_southwest VALUES ('AZ', 'UT', 'NM') TABLESPACE ts2

, PARTITION p_northcentral VALUES ('SD', 'WI') TABLESPACE ts3

, PARTITION p_southcentral VALUES ('OK', 'TX') TABLESPACE ts4

, PARTITION p_northeast VALUES ('NY', 'VM', 'NJ') TABLESPACE ts5

, PARTITION p_southeast VALUES ('FL', 'GA') TABLESPACE ts6

)

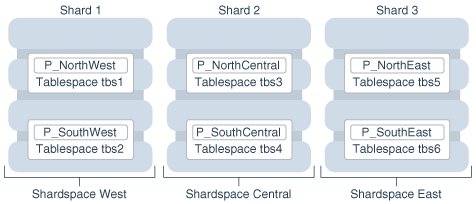

;There is no tablespace set for user-defined sharding. Each tablespace has to be created individually and explicitly associated with a shardspace. A shardspace is set of shards that store data that corresponds to a range or list of key values.

In user-defined sharding, a shardspace consists of a shard or a set of fully replicated shards. For simplicity, assume that each shardspace consists of a single shard.

The following statements can be used to create the tablespaces for the accounts table in the example above.

CREATE TABLESPACE tbs1 IN SHARDSPACE west;

CREATE TABLESPACE tbs2 IN SHARDSPACE west;

CREATE TABLESPACE tbs3 IN SHARDSPACE central;

CREATE TABLESPACE tbs4 IN SHARDSPACE central;

CREATE TABLESPACE tbs5 IN SHARDSPACE east;

CREATE TABLESPACE tbs6 IN SHARDSPACE east;Before executing the CREATE TABLESPACE statements, the shardspaces must be created and populated with shards. For example, you can use the following GDSCTL commands:

ADD SHARDSPACE -SHARDSPACE east

ADD SHARDSPACE -SHARDSPACE central

ADD SHARDSPACE -SHARDSPACE west

ADD CDB -CONNECT cdb1

ADD CDB -CONNECT cdb2

ADD CDB -CONNECT cdb3

ADD SHARD –CONNECT shard-1 -CDB cdb1 –SHARDSPACE west;

ADD SHARD –CONNECT shard-2 -CDB cdb2 –SHARDSPACE central;

ADD SHARD –CONNECT shard-3 -CDB cdb3 –SHARDSPACE east;The following figure shows the mapping of partitions to tablespaces, and tablespaces to shards, for the accounts table in the previous examples.

As with system-managed sharding, tablespaces created for user-defined sharding are

assigned to chunks. However, no chunk migration is

automatically started when a shard is added to the

sharded database. You must run the GDSCTL

MOVE CHUNK command for each chunk

that needs to be migrated.

The total number of chunks is defined by the number

of partitions specified in the sharded table. The number of chunks for a given shardspace is

the number of partitions assigned to it. The ALTER TABLE ADD,

DROP, SPLIT, and MERGE PARTITION commands

on the sharded table increases or decrease the number of chunks.

The GDSCTL SPLIT CHUNK command, which is used to split a chunk in the middle of the hash range for system-managed sharding, is not supported for user-defined sharding. You must use the ALTER TABLE SPLIT PARTITION statement to split a chunk.

Note:

The sharding method is specified in theGDSCTL CREATE SHARDCATALOG command and cannot be changed later.

Composite Sharding

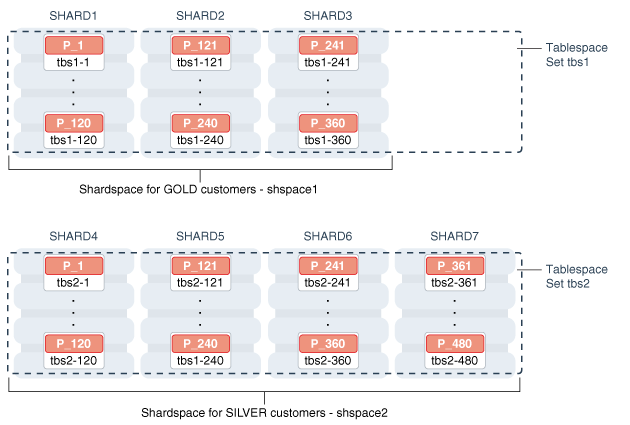

The composite sharding method allows you to create multiple shardspaces for different subsets of data in a table partitioned by consistent hash. A shardspace is set of shards that store data that corresponds to a range or list of key values.

System-managed sharding uses partitioning by consistent hash to randomly distribute data across shards. This provides better load balancing compared to user-defined sharding that uses partitioning by range or list. However, system-managed sharding does not give the user any control on assignment of data to shards.

When sharding by consistent hash on a primary key, there is often a requirement to differentiate subsets of data within a sharded database in order to store them in different geographic locations, allocate to them different hardware resources, or configure high availability and disaster recovery differently. Usually this differentiation is done based on the value of another (non-primary) column, for example, customer location or a class of service.

Composite sharding is a combination of user-defined and system-managed sharding which, when required, provides benefits of both methods. With composite sharding, data is first partitioned by list or range across multiple shardspaces, and then further partitioned by consistent hash across multiple shards in each shardspace. The two levels of sharding make it possible to automatically maintain balanced distribution of data across shards in each shardspace, and, at the same time, partition data across shardspaces.

For example, suppose you want to allocate three shards hosted on faster servers to “gold” customers and four shards hosted on slower machines to “silver” customers. Within each set of shards, customers have to be distributed using partitioning by consistent hash on customer ID.

The following commands would be issued to create this configuration. Note that two shardspaces need to be created for this configuration.

create SHARDCATALOG -sharding composite -database

cat_host:1521/cat_pdb.domain -user gsmcatuser/gsmcatuser_pwd

-region dc1

add gsm -gsm gsm1 -listener 1540 -catalog cat_host:1521/cat_pdb.domain

-region dc1 -pwd gsmcatuser_pwd

gdsctl start gsm

add shardspace -shardspace shspace1 -chunks 60

add shardspace -shardspace shspace2 -chunks 120

ADD SHARDGROUP -shardgroup gold -shardspace shspace1 -region dc1 -deploy_as

primary

ADD SHARDGROUP -shardgroup silver -shardspace shspace2 -region dc1 -deploy_as

primary

add CDB -connect cdb1_host:1521/cdb1.domain -pwd gsmrootuser_pwd

add CDB -connect cdb2_host:1521/cdb2.domain -pwd gsmrootuser_pwd

add CDB -connect cdb3_host:1521/cdb3.domain -pwd gsmrootuser_pwd

add CDB -connect cdb4_host:1521/cdb4.domain -pwd gsmrootuser_pwd

add CDB -connect cdb5_host:1521/cdb5.domain -pwd gsmrootuser_pwd

add CDB -connect cdb6_host:1521/cdb6.domain -pwd gsmrootuser_pwd

add CDB -connect cdb7_host:1521/cdb7.domain -pwd gsmrootuser_pwd

add shard -cdb cdb1 -shardgroup gold -connect

cdb1_host:1521/sh1_pdb.domain -pwd gsmuser_pwd

add shard -cdb cdb2 -shardgroup gold -connect

cdb2_host:1521/sh2_pdb.domain -pwd gsmuser_pwd

add shard -cdb cdb3 -shardgroup gold -connect

cdb3_host:1521/sh3_pdb.domain -pwd gsmuser_pwd

add shard -cdb cdb4 -shardgroup silver -connect

cdb4_host:1521/sh4_pdb.domain -pwd gsmuser_pwd

add shard -cdb cdb5 -shardgroup silver -connect

cdb5_host:1521/sh5_pdb.domain -pwd gsmuser_pwd

add shard -cdb cdb6 -shardgroup silver -connect

cdb6_host:1521/sh6_pdb.domain -pwd gsmuser_pwd

add shard -cdb cdb7 -shardgroup silver -connect

cdb7_host:1521/sh7_pdb.domain -pwd gsmuser_pwd

deployWith composite sharding, as with the other sharding methods, tablespaces are used to specify the mapping of partitions to shards. To place subsets of data in a sharded table into different shardspaces, a separate tablespace set must be created in each shardspace as shown in the following example.

CREATE TABLESPACE SET tbs1 IN SHARDSPACE shspace1;

CREATE TABLESPACE SET tbs2 IN SHARDSPACE shspace2;To store user-defined subsets of data in different tablespaces, Oracle Sharding provides syntax to group partitions into sets and associate each set of partitions with a tablespace set. Support for partition sets can be considered a logical equivalent of a higher level of partitioning which is implemented on top of partitioning by consistent hash.

The statement in the following example partitions a sharded table into two partition sets: gold and silver, based on class of service. Each partition set is stored in a separate tablespace. Then data in each partition set is further partitioned by consistent hash on customer ID.

CREATE SHARDED TABLE customers

( cust_id NUMBER NOT NULL

, name VARCHAR2(50)

, address VARCHAR2(250)

, location_id VARCHAR2(20)

, class VARCHAR2(3)

, signup_date DATE

, CONSTRAINT cust_pk PRIMARY KEY(cust_id, class)

)

PARTITIONSET BY LIST (class)

PARTITION BY CONSISTENT HASH (cust_id)

PARTITIONS AUTO

(PARTITIONSET gold VALUES (‘gld’) TABLESPACE SET tbs1,

PARTITIONSET silver VALUES (‘slv’) TABLESPACE SET tbs2)

;Note:

The sharding method is specified in theGDSCTL CREATE SHARDCATALOG command and cannot be changed later.

Using Subpartitions with Sharding

Because Oracle Sharding is based on table partitioning, all of the subpartitioning methods provided by Oracle Database are also supported for sharding.

Subpartitioning splits each partition into smaller parts and may be beneficial for efficient parallel processing within a shard, especially in the case of sharding by range or list when the number of partitions per shard may be small.

From a manageability perspective, subpartitioning makes it possible to support the tiered storage approach by putting subpartitions into separate tablespaces and moving them between storage tiers. Migration of subpartitions between storage tiers can be done without sacrificing the scalability and availability benefits of sharding and the ability to perform partition pruning and partition-wise joins on a primary key.

The following example shows system-managed sharding by consistent hash combined with subpartitioning by range.

CREATE SHARDED TABLE customers

( cust_id NUMBER NOT NULL

, name VARCHAR2(50)

, address VARCHAR2(250)

, location_id VARCHAR2(20)

, class VARCHAR2(3)

, signup_date DATE

, CONSTRAINT cust_pk PRIMARY KEY(cust_id, signup_date)

)

TABLESPACE SET ts1

PARTITION BY CONSISTENT HASH (cust_id)

SUBPARTITION BY RANGE (signup_date)

SUBPARTITION TEMPLATE

( SUBPARTITION per1 VALUES LESS THAN (TO_DATE('01/01/2000','DD/MM/YYYY')),

SUBPARTITION per2 VALUES LESS THAN (TO_DATE('01/01/2010','DD/MM/YYYY')),

SUBPARTITION per3 VALUES LESS THAN (TO_DATE('01/01/2020','DD/MM/YYYY')),

SUBPARTITION future VALUES LESS THAN (MAXVALUE)

)

PARTITIONS AUTO

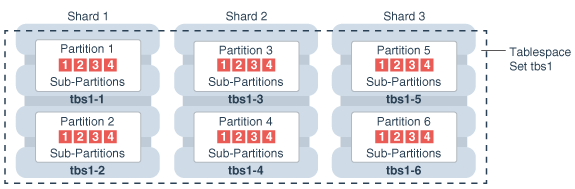

;The following figure offers a graphical view of the table created by this statement.

Figure 2-10 Subpartitions Stored in the Tablespace of the Parent Partition

Description of "Figure 2-10 Subpartitions Stored in the Tablespace of the Parent Partition"

In this example each subpartition is stored in the parent partition’s tablespace. Because subpartitioning is done by date, it makes more sense to store subpartitions in separate tablespaces to provide the ability to archive older data or move it to a read-only storage. The appropriate syntax is shown here.

CREATE SHARDED TABLE customers

( cust_id NUMBER NOT NULL

, name VARCHAR2(50)

, address VARCHAR2(250)

, location_id VARCHAR2(20)

, class VARCHAR2(3)

, signup_date DATE NOT NULL

, CONSTRAINT cust_pk PRIMARY KEY(cust_id, signup_date)

)

PARTITION BY CONSISTENT HASH (cust_id)

SUBPARTITION BY RANGE(signup_date)

SUBPARTITION TEMPLATE

( SUBPARTITION per1 VALUES LESS THAN (TO_DATE('01/01/2000','DD/MM/YYYY'))

TABLESPACE SET ts1,

SUBPARTITION per2 VALUES LESS THAN (TO_DATE('01/01/2010','DD/MM/YYYY'))

TABLESPACE SET ts2,

SUBPARTITION per3 VALUES LESS THAN (TO_DATE('01/01/2020','DD/MM/YYYY'))

TABLESPACE SET ts3,

SUBPARTITION future VALUES LESS THAN (MAXVALUE)

TABLESPACE SET ts4

)

PARTITIONS AUTO

;Note that in the case of a database that is not sharded, when tablespaces are specified in the subpartition template it means that subpartition N from every partition is stored in the same tablespace. This is different in case of sharding when subpartitions that belong to the different partitions must be stored in separate tablespaces so that they can be moved in the event of resharding.

Subpartitioning can be used with composite sharding, too. In this case data in a table is organized in three levels: partition sets, partitions, and subpartitions. Examples of the three levels of data organization are shown below.

Specifying subpartition templates per partitionset is not supported to ensure that there is uniformity in the number and bounds of subpartitions across partitionsets. If you need to specify tablespaces for subpartitions per partitionset, you can use the SUBPARTITIONS STORE IN clause.

CREATE SHARDED TABLE customers

( cust_id NUMBER NOT NULL

, name VARCHAR2(50)

, address VARCHAR2(250)

, location_id VARCHAR2(20)

, class VARCHAR2(3) NOT NULL

, signup_date DATE NOT NULL

, CONSTRAINT cust_pk PRIMARY KEY(cust_id, class, signup_date)

)

PARTITIONSET BY LIST (class)

PARTITION BY CONSISTENT HASH (cust_id)

SUBPARTITION BY RANGE (signup_date)

SUBPARTITION TEMPLATE /* applies to both SHARDSPACEs */

( SUBPARTITION per1 VALUES LESS THAN (TO_DATE('01/01/2000','DD/MM/YYYY'))

, SUBPARTITION per2 VALUES LESS THAN (TO_DATE('01/01/2010','DD/MM/YYYY'))

, SUBPARTITION per3 VALUES LESS THAN (TO_DATE('01/01/2020','DD/MM/YYYY'))

, SUBPARTITION future VALUES LESS THAN (MAXVALUE)

)

PARTITIONS AUTO

(

PARTITIONSET gold VALUES (‘gld’) TABLESPACE SET tbs1

subpartitions store in(tbs1)

, PARTITIONSET silver VALUES (‘slv’) TABLESPACE SET tbs2

subpartitions store in(tbs2)

)

;