7 Diagnosing and Resolving Problems

Oracle Database includes an advanced fault diagnosability infrastructure for collecting and managing diagnostic data in order to diagnose and resolve database problems. Diagnostic data includes the trace files, dumps, and core files that were also present in previous releases. It also includes new types of diagnostic data that enable customers and Oracle Support to identify, investigate, track, and resolve problems quickly and effectively.

- About the Oracle Database Fault Diagnosability Infrastructure

Oracle Database includes a fault diagnosability infrastructure for preventing, detecting, diagnosing, and resolving database problems.

- About Investigating, Reporting, and Resolving a Problem

You can use the Enterprise Manager Support Workbench (Support Workbench) to investigate and report a problem (critical error), and in some cases to resolve it. You can follow a "roadmap" that summarizes the typical set of tasks that you must perform.

- Diagnosing Problems

This section describes various methods to diagnose problems in an Oracle database.

- Reporting Problems

Using the Enterprise Manager Support Workbench (Support Workbench), you can create, edit, and upload custom incident packages. With custom incident packages, you have fine control over the diagnostic data that you send to Oracle Support.

- Resolving Problems

This section describes how to resolve database problems using advisor tools, such as SQL Repair Advisor and Data Recovery Advisor, and resource management tools, such as the Resource Manager and related APIs.

- Diagnosis and Tracing in a PDB Using Package DBMS_USERDIAG

This section describes how to use the PL/SQL package DBMS_USERDIAG, which lets you perform diagnosis and set up a trace within a PDB.


7.1 About the Oracle Database Fault Diagnosability Infrastructure

Oracle Database includes a fault diagnosability infrastructure for preventing, detecting, diagnosing, and resolving database problems.

- Fault Diagnosability Infrastructure Overview

The fault diagnosability infrastructure aids in preventing, detecting, diagnosing, and resolving problems. The problems that are targeted in particular are critical errors such as those caused by code bugs, metadata corruption, and customer data corruption.

- Incidents and Problems

A problem is a critical error in a database instance, Oracle Automatic Storage Management (Oracle ASM) instance, or other Oracle product or component. An incident is a single occurrence of a problem.

- Fault Diagnosability Infrastructure Components

The fault diagnosability infrastructure consists of several components, including the Automatic Diagnostic Repository (ADR), various logs, trace files, the Enterprise Manager Support Workbench, and the ADRCI Command-Line Utility.

- Structure, Contents, and Location of the Automatic Diagnostic Repository

The Automatic Diagnostic Repository (ADR) is a directory structure that is stored outside of the database. It is therefore available for problem diagnosis when the database is down.


7.1.1 Fault Diagnosability Infrastructure Overview

The fault diagnosability infrastructure aids in preventing, detecting, diagnosing, and resolving problems. The problems that are targeted in particular are critical errors such as those caused by code bugs, metadata corruption, and customer data corruption.

When a critical error occurs, it is assigned an incident number, and diagnostic data for the error (such as trace files) is immediately captured and tagged with this number. The data is then stored in the Automatic Diagnostic Repository (ADR), a file-based repository outside the database, where it can later be retrieved by incident number and analyzed.

The goals of the fault diagnosability infrastructure are the following:

- First-failure diagnosis
- Problem prevention
- Limiting damage and interruptions after a problem is detected
- Reducing problem diagnostic time
- Reducing problem resolution time
- Simplifying customer interaction with Oracle Support

The keys to achieving these goals are the following technologies:

- Automatic capture of diagnostic data upon first failure: For critical errors, the ability to capture error information at first failure greatly increases the chance of a quick problem resolution and reduced downtime. An always-on, memory-based tracing system proactively collects diagnostic data from many database components and can help isolate the root causes of problems. Such proactive diagnostic data is similar to the data collected by airplane "black box" flight recorders. When a problem is detected, alerts are generated and the fault diagnosability infrastructure is activated to capture and store diagnostic data. The data is stored in a repository that is outside the database (and therefore available when the database is down), and is easily accessible with command-line utilities and Oracle Enterprise Manager Cloud Control (Cloud Control).

- Standardized trace formats: Standardizing trace formats across all database components enables DBAs and Oracle Support personnel to use a single set of tools for problem analysis. Problems are more easily diagnosed, and downtime is reduced.

- Health checks: Upon detecting a critical error, the fault diagnosability infrastructure can run one or more health checks to perform deeper analysis of the error. Health check results are then added to the other diagnostic data collected for the error. Individual health checks look for data block corruptions, undo and redo corruption, data dictionary corruption, and more. As a DBA, you can also invoke these health checks manually, either on a regular basis or as required.

- Incident packaging service (IPS) and incident packages: The IPS enables you to automatically and easily gather the diagnostic data (traces, dumps, health check reports, and more) pertaining to a critical error and package the data into a zip file for transmission to Oracle Support. Because all diagnostic data relating to a critical error is tagged with that error's incident number, you do not have to search through trace files and other files to determine which files are required for analysis; the incident packaging service identifies the required files automatically and adds them to the zip file. Before creating the zip file, the IPS first collects diagnostic data into an intermediate logical structure called an incident package (package). Packages are stored in the Automatic Diagnostic Repository. If you choose to, you can access this intermediate logical structure, view and modify its contents, add or remove diagnostic data at any time, and, when you are ready, create the zip file from the package. The zip file is then ready to be uploaded to Oracle Support.

- Data Recovery Advisor: The Data Recovery Advisor integrates with database health checks and RMAN to display data corruption problems, assess the extent of each problem (critical, high priority, low priority), describe the impact of a problem, recommend repair options, conduct a feasibility check of the customer-chosen option, and automate the repair process.

- SQL Test Case Builder: For many SQL-related problems, obtaining a reproducible test case is an important factor in problem resolution speed. The SQL Test Case Builder automates the sometimes difficult and time-consuming process of gathering as much information as possible about the problem and the environment in which it occurred. After quickly gathering this information, you can upload it to Oracle Support so that support personnel can easily and accurately reproduce the problem.

7.1.2 Incidents and Problems

A problem is a critical error in a database instance, Oracle Automatic Storage Management (Oracle ASM) instance, or other Oracle product or component. An incident is a single occurrence of a problem.

- About Incidents and Problems

To facilitate diagnosis and resolution of critical errors, the fault diagnosability infrastructure introduces two concepts for Oracle Database: problems and incidents.

- Incident Flood Control

It is conceivable that a problem could generate dozens or perhaps hundreds of incidents in a short period of time. This would generate too much diagnostic data, which would consume too much space in the ADR and could possibly slow down your efforts to diagnose and resolve the problem. For these reasons, the fault diagnosability infrastructure applies flood control to incident generation after certain thresholds are reached.

- Related Problems Across the Topology

For any problem identified in a database instance, the diagnosability framework can identify related problems across the topology of your Oracle Database installation.

7.1.2.1 About Incidents and Problems

To facilitate diagnosis and resolution of critical errors, the fault diagnosability infrastructure introduces two concepts for Oracle Database: problems and incidents.

A problem is a critical error in a database instance, Oracle Automatic Storage Management (Oracle ASM) instance, or other Oracle product or component. Critical errors manifest as internal errors, such as ORA-00600, or other severe errors, such as ORA-07445 (operating system exception) or ORA-04031 (out of memory in the shared pool). Problems are tracked in the ADR. Each problem has a problem key, which is a text string that describes the problem. It includes an error code (such as ORA 600) and in some cases, one or more error parameters.

An incident is a single occurrence of a problem. When a problem (critical error) occurs multiple times, an incident is created for each occurrence. Incidents are timestamped and tracked in the Automatic Diagnostic Repository (ADR). Each incident is identified by a numeric incident ID, which is unique within the ADR. When an incident occurs, the database:

- Makes an entry in the alert log.
- Sends an incident alert to Cloud Control.
- Gathers first-failure diagnostic data about the incident in the form of dump files (incident dumps).
- Tags the incident dumps with the incident ID.
- Stores the incident dumps in an ADR subdirectory created for that incident.

Diagnosis and resolution of a critical error usually starts with an incident alert. Incident alerts are displayed on the Cloud Control Database Home page or Oracle Automatic Storage Management Home page. The Database Home page also displays in its Related Alerts section any critical alerts in the Oracle ASM instance or other Oracle products or components. After viewing an alert, you can then view the problem and its associated incidents with Cloud Control or with the ADRCI command-line utility.
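The relationship between problems and incidents can be sketched as a simple grouping. Each incident carries a unique numeric ID and the problem key of the critical error it belongs to, so a problem's incidents are the incidents that share its key. The `Incident` class and the problem-key strings below are hypothetical illustrations, not an Oracle API or real ADR data.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, List


@dataclass
class Incident:
    incident_id: int      # numeric ID, unique within the ADR
    problem_key: str      # error code plus optional parameters (illustrative text)
    created: datetime     # incidents are timestamped


def group_by_problem(incidents: List[Incident]) -> Dict[str, List[int]]:
    """Map each problem key to the IDs of its incidents, oldest first."""
    problems: Dict[str, List[int]] = defaultdict(list)
    for inc in sorted(incidents, key=lambda i: i.created):
        problems[inc.problem_key].append(inc.incident_id)
    return dict(problems)
```

For example, two occurrences of the same internal error and one operating system exception would yield two problems. The first problem would have two incidents and the second would have one.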

7.1.2.2 Incident Flood Control

It is conceivable that a problem could generate dozens or perhaps hundreds of incidents in a short period of time. This would generate too much diagnostic data, which would consume too much space in the ADR and could possibly slow down your efforts to diagnose and resolve the problem. For these reasons, the fault diagnosability infrastructure applies flood control to incident generation after certain thresholds are reached.

A flood-controlled incident is an incident that generates an alert log entry, is recorded in the ADR, but does not generate incident dumps. Flood-controlled incidents provide a way of informing you that a critical error is ongoing, without overloading the system with diagnostic data. You can choose to view or hide flood-controlled incidents when viewing incidents with Cloud Control or the ADRCI command-line utility.

Threshold levels for incident flood control are predetermined and cannot be changed. They are defined as follows:

- After five incidents occur for the same problem key in one hour, subsequent incidents for this problem key are flood-controlled. Normal (non-flood-controlled) recording of incidents for that problem key begins again in the next hour.

- After 25 incidents occur for the same problem key in one day, subsequent incidents for this problem key are flood-controlled. Normal recording of incidents for that problem key begins again on the next day.

In addition, after 50 incidents for the same problem key occur in one hour, or 250 incidents for the same problem key occur in one day, subsequent incidents for this problem key are not recorded at all in the ADR. In these cases, the database writes a message to the alert log indicating that no further incidents will be recorded. As long as incidents continue to be generated for this problem key, this message is added to the alert log every ten minutes until the hour or the day expires. Upon expiration of the hour or day, normal recording of incidents for that problem key begins again.
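The thresholds above can be modeled as per-problem-key counters. The following is a minimal sketch, not Oracle's implementation. It assumes fixed clock-hour and calendar-day windows (the text does not say whether the windows are fixed or sliding), and it counts every occurrence, including occurrences that are flood-controlled or not recorded. The `FloodController` class is hypothetical.

```python
from collections import Counter
from datetime import datetime

HOUR_FLOOD, DAY_FLOOD = 5, 25     # beyond these counts: flood-controlled
HOUR_DROP, DAY_DROP = 50, 250     # beyond these counts: not recorded in the ADR


class FloodController:
    """Classify each new incident for a problem key using the documented thresholds.

    Assumption: windows are fixed clock hours and calendar days.
    """

    def __init__(self) -> None:
        self._per_hour: Counter = Counter()
        self._per_day: Counter = Counter()

    def classify(self, problem_key: str, when: datetime) -> str:
        hour_bucket = (problem_key, when.replace(minute=0, second=0, microsecond=0))
        day_bucket = (problem_key, when.date())
        self._per_hour[hour_bucket] += 1
        self._per_day[day_bucket] += 1
        in_hour = self._per_hour[hour_bucket]
        in_day = self._per_day[day_bucket]
        if in_hour > HOUR_DROP or in_day > DAY_DROP:
            return "not recorded"      # only a periodic alert log message
        if in_hour > HOUR_FLOOD or in_day > DAY_FLOOD:
            return "flood-controlled"  # alert log entry and ADR record, no dumps
        return "recorded"              # normal incident with incident dumps
```

With this model, the sixth incident for a key within one hour is flood-controlled, and the count starts over when the next hour begins.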


7.1.2.3 Related Problems Across the Topology

For any problem identified in a database instance, the diagnosability framework can identify related problems across the topology of your Oracle Database installation.

In a single-instance environment, a related problem could be identified in the local Oracle ASM instance. In an Oracle RAC environment, a related problem could be identified in any database instance or Oracle ASM instance on any other node. When investigating problems, you can view and gather information on any related problems.

A problem is related to the original problem if it occurs within a designated time period or shares the same execution context identifier. An execution context identifier (ECID) is a globally unique identifier used to tag and track a single call through the Oracle software stack, for example, a call to Oracle Fusion Middleware that then calls into Oracle Database to retrieve data. The ECID is typically generated in the middle tier and is passed to the database as an Oracle Call Interface (OCI) attribute. When a single call has failures on multiple tiers of the Oracle software stack, problems that are generated are tagged with the same ECID so that they can be correlated. You can then determine the tier on which the originating problem occurred.
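The two relatedness rules (occurrence within a designated time period, or a shared ECID) can be expressed as a filter. The following is a sketch under stated assumptions. The document does not give the length of the designated time period, so `window` is a caller-supplied parameter. Treating the earliest ECID-tagged problem as the originating tier is a hypothetical heuristic, not a documented rule. `ProblemEvent` and the tier labels are also hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class ProblemEvent:
    problem_id: int
    tier: str                   # illustrative label, e.g. "rdbms@node1"
    occurred: datetime
    ecid: Optional[str] = None  # execution context identifier, if one was passed


def related_problems(origin: ProblemEvent, candidates: List[ProblemEvent],
                     window: timedelta) -> List[ProblemEvent]:
    """Candidates that share origin's ECID or occur within +/- window of it."""
    related = []
    for p in candidates:
        if p.problem_id == origin.problem_id:
            continue
        same_ecid = origin.ecid is not None and p.ecid == origin.ecid
        close_in_time = abs(p.occurred - origin.occurred) <= window
        if same_ecid or close_in_time:
            related.append(p)
    return related


def originating_tier(events: List[ProblemEvent], ecid: str) -> Optional[str]:
    """Heuristic: the earliest problem tagged with an ECID marks the originating tier."""
    tagged = [e for e in events if e.ecid == ecid]
    return min(tagged, key=lambda e: e.occurred).tier if tagged else None
```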


7.1.3 Fault Diagnosability Infrastructure Components

The fault diagnosability infrastructure consists of several components, including the Automatic Diagnostic Repository (ADR), various logs, trace files, the Enterprise Manager Support Workbench, and the ADRCI Command-Line Utility.

- Automatic Diagnostic Repository (ADR)

The ADR is a file-based repository for database diagnostic data such as traces, dumps, the alert log, health monitor reports, and more. It has a unified directory structure across multiple instances and multiple products.

- Alert Log

The alert log is an XML file that is a chronological log of messages and errors.

- Attention Log

The attention log is a structured, externally modifiable file that contains information about critical and highly visible database events. Use the attention log to quickly access information about critical events that need action.

- Trace Files, Dumps, and Core Files

Trace files, dumps, and core files contain diagnostic data that are used to investigate problems. They are stored in the ADR.

- DDL Log

The data definition language (DDL) log is a file that has the same format and basic behavior as the alert log, but it only contains the DDL statements issued by the database.

- Debug Log

An Oracle Database component can detect conditions, states, or events that are unusual, but which do not inhibit correct operation of the detecting component. The component can issue a warning about these conditions, states, or events. The debug log is a file that records these warnings.

- Other ADR Contents

In addition to files mentioned in the previous sections, the ADR contains health monitor reports, data repair records, SQL test cases, incident packages, and more. These components are described later in the chapter.

- Enterprise Manager Support Workbench

The Enterprise Manager Support Workbench (Support Workbench) is a facility that enables you to investigate, report, and in some cases, repair problems (critical errors), all with an easy-to-use graphical interface.

- ADRCI Command-Line Utility

The ADR Command Interpreter (ADRCI) is a utility that enables you to investigate problems, view health check reports, and package first-failure diagnostic data, all within a command-line environment.

7.1.3.1 Automatic Diagnostic Repository (ADR)

The ADR is a file-based repository for database diagnostic data such as traces, dumps, the alert log, health monitor reports, and more. It has a unified directory structure across multiple instances and multiple products.

The database, Oracle Automatic Storage Management (Oracle ASM), the listener, Oracle Clusterware, and other Oracle products or components store all diagnostic data in the ADR. Each instance of each product stores diagnostic data underneath its own home directory within the ADR. For example, in an Oracle Real Application Clusters environment with shared storage and Oracle ASM, each database instance and each Oracle ASM instance has an ADR home directory. ADR's unified directory structure, consistent diagnostic data formats across products and instances, and a unified set of tools enable customers and Oracle Support to correlate and analyze diagnostic data across multiple instances. With Oracle Clusterware, each host node in the cluster has an ADR home directory.

The ADR and the diagnostic files it stores include the following enhancements:

- Attention Log - entries are more verbose and provide more detail for the database administrator. Entries now include attributes such as urgency, target user, a verbose description of the event, a detailed solution to the event, and more.

- Debug Log - all log entries that are not focused on the database administrator or customer are now recorded in the debug log instead of the alert log.

- Trace Content Classification - every trace entry is now categorized with a label that denotes the type of content written. There are 10 categories, ranging from the highest to the lowest security classification. You can filter the data by security category to select what is packaged and sent to Oracle for further diagnosis.

- Trace File Limits and Segmentation - trace files created by the database no longer default to unlimited size, and segmentation and rotation of the files are enabled. There is now an upper limit of 1GB per foreground process and 10GB per background process, each divided equally into 5 segments, resulting in 200MB per segment for foreground processes and 2GB per segment for background processes. When a limit is reached, further trace output creates new segments and deletes older ones. The first segment is preserved, and only the last four segments are rotated.

Trace files are limited to 32MB each for Oracle Database Free Edition.

The initialization parameter MAX_DUMP_FILE_SIZE, which controls the trace file size, defaults to 1GB per foreground process and 10GB per background process for Oracle Database Enterprise Edition and other editions, except the Free Edition, which defaults to 32MB.

- Trace Content Suppression - repetitive or excessive content is no longer written to the traces.
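The segmentation arithmetic and rotation policy described above can be modeled in a few lines. This is a toy model that follows the prose only. The segment numbering is hypothetical and is not Oracle's on-disk naming. Sizes are in MB, using the document's round figures (1GB treated as 1000MB), which give the stated 200MB and 2GB per segment.

```python
from typing import List

SEGMENTS = 5  # each trace size limit is divided equally into five segments


def segment_size_mb(limit_mb: int) -> float:
    """Per-segment size when a trace limit is split into SEGMENTS equal parts."""
    return limit_mb / SEGMENTS


class SegmentedTrace:
    """Toy rotation model: segment 1 is always kept; the last four segments rotate."""

    def __init__(self) -> None:
        self.segments: List[int] = []   # hypothetical segment sequence numbers
        self._next = 1

    def new_segment(self) -> None:
        self.segments.append(self._next)
        self._next += 1
        if len(self.segments) > SEGMENTS:
            # Keep the first segment; delete the oldest of the rotating segments.
            del self.segments[1]
```

After eight segments have been written, this model retains segment 1 plus segments 5 through 8.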

Note:

Because all diagnostic data, including the alert log, are stored in the ADR, the initialization parameters BACKGROUND_DUMP_DEST and USER_DUMP_DEST are deprecated. They are replaced by the initialization parameter DIAGNOSTIC_DEST, which identifies the location of the ADR.


7.1.3.2 Alert Log

The alert log is an XML file that is a chronological log of messages and errors.

There is one alert log in each ADR home. Each alert log is specific to its component type, such as database, Oracle ASM, listener, and Oracle Clusterware.

For the database, the alert log includes messages about the following:

- Critical errors (incidents)
- Administrative operations, such as starting up or shutting down the database, recovering the database, creating or dropping a tablespace, and others
- Errors during automatic refresh of a materialized view
- Other database events

You can view the alert log in text format (with the XML tags stripped) with Cloud Control and with the ADRCI utility. There is also a text-formatted version of the alert log stored in the ADR for backward compatibility. However, Oracle recommends that any parsing of the alert log contents be done with the XML-formatted version, because the text format is unstructured and may change from release to release.
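Following the recommendation to parse the XML-formatted version, the sketch below extracts timestamps and message text with Python's standard `xml.etree.ElementTree`. It assumes two things about the file layout, and you should check both against a file from your own ADR: each entry is a `msg` element with a `time` attribute and a `txt` child, and the file has no single root element (so the content is wrapped in a synthetic one). The function names are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple


def parse_alert_xml(text: str) -> List[Tuple[str, str]]:
    """Return (time, message) pairs from XML-formatted alert log content.

    Assumes a sequence of <msg time='...'><txt>...</txt></msg> elements
    with no enclosing root, so a synthetic <alert> root is added.
    """
    root = ET.fromstring(f"<alert>{text}</alert>")
    entries = []
    for msg in root.iter("msg"):
        txt = msg.findtext("txt", default="").strip()
        entries.append((msg.get("time", ""), txt))
    return entries


def grep_errors(entries: List[Tuple[str, str]], code: str) -> List[str]:
    """Timestamps of entries whose text mentions the given error code."""
    return [t for t, msg in entries if code in msg]
```

Structured parsing like this avoids depending on the layout of the text-formatted alert log, which may change from release to release.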


7.1.3.3 Attention Log

The attention log is a structured, externally modifiable file that contains information about critical and highly visible database events. Use the attention log to quickly access information about critical events that need action.

There is one attention log for each database instance. The attention log contains a predetermined, translatable series of messages, with one message for each event. Some important attributes of a message are as follows:

- Attention ID: A unique identifier for the message.

- Attention type: The type of attention message. Possible values are Error, Warning, Notification, or Additional information. The attention type can be modified dynamically.

- Message text

- Urgency: Possible values are Immediate, Soon, Deferrable, or Information.

- Scope: Possible values are Session, Process, PDB Instance, CDB Instance, CDB Cluster, PDB (for issues in persistent storage that a database restart will not fix), or CDB (for issues in persistent storage that a database restart will not fix).

- Target user: The user who must act on this attention log message. Possible values are Clusterware Admin, CDB admin, or PDB admin.

- Cause

- Action

Contents of the Attention Log

The following is an example of an attention log message that needs immediate action.

{

IMMEDIATE : "PMON (ospid: 3565): terminating the instance due to ORA error 822"

CAUSE: "PMON detected fatal background process death"

ACTION: "Termination of fatal background is not recommended, Investigate cause of process termination"

CLASS : CDB-INSTANCE / CDB_ADMIN / ERROR / DBAL-35782660

TIME : 2020-03-28T14:15:16.159-07:00

INFO : "Some additional data on error PMON error"

}
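The message attributes listed above can be captured in a small data structure that validates the documented attention types and urgency values. This is a sketch only. The `AttentionMessage` class and `from_example` function are hypothetical. Splitting the CLASS field into scope / target user / attention type / attention ID is an assumption inferred from the single example above, not a documented format.

```python
from dataclasses import dataclass

# Allowed values taken from the attribute list above, normalized to upper case.
ATTENTION_TYPES = {"ERROR", "WARNING", "NOTIFICATION", "ADDITIONAL INFORMATION"}
URGENCIES = {"IMMEDIATE", "SOON", "DEFERRABLE", "INFORMATION"}


@dataclass
class AttentionMessage:
    urgency: str
    message: str
    cause: str
    action: str
    scope: str
    target_user: str
    attention_type: str
    attention_id: str

    def needs_immediate_action(self) -> bool:
        return self.urgency == "IMMEDIATE"


def from_example(urgency: str, message: str, cause: str, action: str,
                 class_field: str) -> AttentionMessage:
    """Build a message; CLASS is assumed to be scope / target user / type / ID."""
    scope, target_user, attention_type, attention_id = (
        part.strip() for part in class_field.split("/"))
    urgency = urgency.upper()
    attention_type = attention_type.upper()
    if urgency not in URGENCIES:
        raise ValueError(f"unknown urgency: {urgency}")
    if attention_type not in ATTENTION_TYPES:
        raise ValueError(f"unknown attention type: {attention_type}")
    return AttentionMessage(urgency, message, cause, action, scope,
                            target_user, attention_type, attention_id)
```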


7.1.3.4 Trace Files, Dumps, and Core Files

Trace files, dumps, and core files contain diagnostic data that are used to investigate problems. They are stored in the ADR.

- Trace Files

Each server and background process can write to an associated trace file. Trace files are updated periodically over the life of the process and can contain information on the process environment, status, activities, and errors. In addition, when a process detects a critical error, it writes information about the error to its trace file.

- Dumps

A dump is a specific type of trace file. A dump is typically a one-time output of diagnostic data in response to an event (such as an incident), whereas a trace tends to be continuous output of diagnostic data.

- Core Files

A core file contains a memory dump, in an all-binary, port-specific format.


7.1.3.4.1 Trace Files

Each server and background process can write to an associated trace file. Trace files are updated periodically over the life of the process and can contain information on the process environment, status, activities, and errors. In addition, when a process detects a critical error, it writes information about the error to its trace file.

The SQL trace facility also creates trace files, which provide performance information on individual SQL statements. You can enable SQL tracing for a session or an instance.

Trace file names are platform-dependent. Typically, database background process trace file names contain the Oracle SID, the background process name, and the operating system process number, while server process trace file names contain the Oracle SID, the string "ora", and the operating system process number. The file extension is .trc. An example of a server process trace file name is orcl_ora_344.trc. Trace files are sometimes accompanied by corresponding trace metadata (.trm) files, which contain structural information about trace files and are used for searching and navigation.
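The typical naming pattern can be written as a small helper. Because trace file names are platform-dependent, this is only a sketch of the common `<SID>_<process>_<ospid>.trc` form described above. Two details are assumptions: that the `.trm` metadata file shares the trace file's base name, and that background process names appear in lower case. The function names are hypothetical.

```python
def trace_file_name(sid: str, ospid: int, background: str = "") -> str:
    """Typical name: <SID>_<background name, or 'ora' for server processes>_<ospid>.trc."""
    middle = background.lower() if background else "ora"
    return f"{sid}_{middle}_{ospid}.trc"


def metadata_file_name(trace_name: str) -> str:
    """Assumed companion trace metadata file: same base name with a .trm extension."""
    if not trace_name.endswith(".trc"):
        raise ValueError("expected a .trc file name")
    return trace_name[: -len(".trc")] + ".trm"
```

For example, `trace_file_name("orcl", 344)` reproduces the server process trace file name `orcl_ora_344.trc` given above.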

Starting with Oracle Database 21c, all trace file records contain a prefix that is used to classify records. The prefix indicates the level of sensitivity of each trace record, which helps enhance security.

Oracle Database includes tools that help you analyze trace files. For more information on application tracing, SQL tracing, and tracing tools, see Oracle Database SQL Tuning Guide.


7.1.3.4.2 Dumps

A dump is a specific type of trace file. A dump is typically a one-time output of diagnostic data in response to an event (such as an incident), whereas a trace tends to be continuous output of diagnostic data.

When an incident occurs, the database writes one or more dumps to the incident directory created for the incident. Incident dumps also contain the incident number in the file name.
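Because incident dumps carry the incident number in their file names, they can be selected by name. The following is a minimal sketch that assumes two conventions, both of which you should verify against your own ADR. Incident dumps are assumed to end in `_i<incident number>.trc` (for example, `orcl_ora_344_i12345.trc`), and incident directories are assumed to be named `incident/incdir_<incident number>` under the ADR home. This is not how IPS itself selects files.

```python
import re
from typing import Iterable, List, Optional

# Assumed incident dump suffix: "_i<incident number>.trc".
_INCIDENT_SUFFIX = re.compile(r"_i(\d+)\.trc$")


def incident_of(file_name: str) -> Optional[int]:
    """Incident number encoded in a dump file name, or None for ordinary traces."""
    m = _INCIDENT_SUFFIX.search(file_name)
    return int(m.group(1)) if m else None


def dumps_for_incident(file_names: Iterable[str], incident_id: int) -> List[str]:
    """File names tagged with the given incident number, sorted."""
    return sorted(f for f in file_names if incident_of(f) == incident_id)


def incident_dir(adr_home: str, incident_id: int) -> str:
    """Assumed incident directory layout under an ADR home."""
    return f"{adr_home}/incident/incdir_{incident_id}"
```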


7.1.3.4.3 Core Files

A core file contains a memory dump, in an all-binary, port-specific format.

Core file names include the string "core" and the operating system process ID. Core files are useful to Oracle Support engineers only. Core files are not found on all platforms.


7.1.3.5 DDL Log

The data definition language (DDL) log is a file that has the same format and basic behavior as the alert log, but it only contains the DDL statements issued by the database.

The DDL log is created only for the RDBMS component and only if the ENABLE_DDL_LOGGING initialization parameter is set to TRUE. When this parameter is set to FALSE, DDL statements are not included in any log.

The DDL log contains one log record for each DDL statement issued by the database. The DDL log is included in IPS incident packages.

There are two DDL logs that contain the same information. One is an XML file, and the other is a text file. The DDL log is stored in the log/ddl subdirectory of the ADR home.

See Also:

Oracle Database Reference for more information about the ENABLE_DDL_LOGGING initialization parameter


7.1.3.6 Debug Log

An Oracle Database component can detect conditions, states, or events that are unusual, but which do not inhibit correct operation of the detecting component. The component can issue a warning about these conditions, states, or events. The debug log is a file that records these warnings.

The warnings recorded in the debug log are not serious enough to warrant an incident or a write to the alert log. They do warrant a record in a log file because they might be needed to diagnose a future problem.

The debug log has the same format and basic behavior as the alert log, but it only contains information about possible problems that might need to be corrected.

The debug log reduces the amount of information in the alert log and trace files. It also improves the visibility of debug information.

The debug log is included in IPS incident packages. The debug log's contents are intended for Oracle Support. Database administrators should not use the debug log directly.

Note:

Because there is a separate debug log starting with Oracle Database 12c, the alert log and the trace files are streamlined. They now contain fewer warnings of the type that are recorded in the debug log.


7.1.3.7 Other ADR Contents

In addition to files mentioned in the previous sections, the ADR contains health monitor reports, data repair records, SQL test cases, incident packages, and more. These components are described later in the chapter.


7.1.3.8 Enterprise Manager Support Workbench

The Enterprise Manager Support Workbench (Support Workbench) is a facility that enables you to investigate, report, and in some cases, repair problems (critical errors), all with an easy-to-use graphical interface.

The Support Workbench provides a self-service means for you to gather first-failure diagnostic data, obtain a support request number, and upload diagnostic data to Oracle Support with a minimum of effort and in a very short time, thereby reducing time-to-resolution for problems. The Support Workbench also recommends and provides easy access to Oracle advisors that help you repair SQL-related problems, data corruption problems, and more.


7.1.3.9 ADRCI Command-Line Utility

The ADR Command Interpreter (ADRCI) is a utility that enables you to investigate problems, view health check reports, and package first-failure diagnostic data, all within a command-line environment.

You can then upload the package to Oracle Support. ADRCI also enables you to view the names of the trace files in the ADR, and to view the alert log with XML tags stripped, with and without content filtering.

For more information on ADRCI, see Oracle Database Utilities.


7.1.4 Structure, Contents, and Location of the Automatic Diagnostic Repository

The Automatic Diagnostic Repository (ADR) is a directory structure that is stored outside of the database. It is therefore available for problem diagnosis when the database is down.

The ADR root directory is known as ADR base. Its location is set by the DIAGNOSTIC_DEST initialization parameter. If this parameter is omitted or left null, the database sets DIAGNOSTIC_DEST upon startup as follows:

- If environment variable ORACLE_BASE is set, DIAGNOSTIC_DEST is set to the directory designated by ORACLE_BASE.

- If environment variable ORACLE_BASE is not set, DIAGNOSTIC_DEST is set to ORACLE_HOME/log.
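The startup rules for ADR base can be restated as a short resolution function. This is a sketch of the documented fallback order, not database code. Passing the environment as a mapping keeps the function easy to test, and treating an empty string as "not set" is an assumption. In practice you might call `default_diagnostic_dest(os.environ)`.

```python
import os
from typing import Mapping, Optional


def default_diagnostic_dest(env: Mapping[str, str],
                            configured: Optional[str] = None) -> str:
    """Resolve ADR base following the DIAGNOSTIC_DEST startup rules."""
    if configured:                          # DIAGNOSTIC_DEST set explicitly
        return configured
    oracle_base = env.get("ORACLE_BASE")
    if oracle_base:                         # parameter omitted or null: use ORACLE_BASE
        return oracle_base
    return os.path.join(env["ORACLE_HOME"], "log")  # otherwise ORACLE_HOME/log
```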

Within ADR base, there can be multiple ADR homes, where each ADR home is the root directory for all diagnostic data—traces, dumps, the alert log, and so on—for a particular instance of a particular Oracle product or component. For example, in an Oracle Real Application Clusters environment with Oracle ASM, each database instance, Oracle ASM instance, and listener has an ADR home.

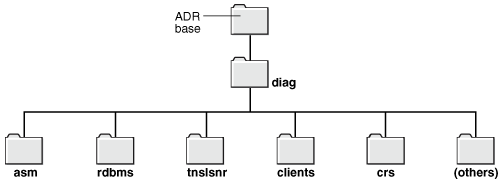

ADR homes reside in ADR base subdirectories that are named according to the product or component type. Figure 7-1 illustrates these top-level subdirectories.

Figure 7-1 Product/Component Type Subdirectories in the ADR


Note:

Additional subdirectories might be created in the ADR depending on your configuration. Some products automatically purge expired diagnostic data from ADR. For other products, you can use the ADRCI utility PURGE command at regular intervals to purge expired diagnostic data.

The location of each ADR home is given by the following path, which starts at the ADR base directory:

diag/product_type/product_id/instance_id

As an example, Table 7-1 lists the values of the various path components for an Oracle Database instance.

Table 7-1 ADR Home Path Components for Oracle Database

| Path Component | Value for Oracle Database |
|---|---|
| product_type | rdbms |
| product_id | DB_UNIQUE_NAME |
| instance_id | SID |

For example, for a database with a SID and database unique name both equal to orclbi, the ADR home would be in the following location:

ADR_base/diag/rdbms/orclbi/orclbi/

Similarly, the ADR home path for the Oracle ASM instance in a single-instance environment would be:

ADR_base/diag/asm/+asm/+asm/

ADR Home Subdirectories

Within each ADR home directory are subdirectories that contain the diagnostic data. Table 7-2 lists some of these subdirectories and their contents.

Table 7-2 ADR Home Subdirectories

| Subdirectory Name | Contents |
|---|---|
| alert | The XML-formatted alert log |
| cdump | Core files |
| incident | Multiple subdirectories, where each subdirectory is named for a particular incident, and where each contains dumps pertaining only to that incident |
| trace | Background and server process trace files, SQL trace files, and the text-formatted alert log |
| (others) | Other subdirectories of ADR home, which store incident packages, health monitor reports, logs other than the alert log (such as the DDL log and the debug log), and other information |

Figure 7-2 illustrates the complete directory hierarchy of the ADR for a database instance.

Figure 7-2 ADR Directory Structure for a Database Instance

Description of "Figure 7-2 ADR Directory Structure for a Database Instance"

ADR in an Oracle Clusterware Environment

Oracle Clusterware uses ADR and has its own Oracle home and Oracle base. The ADR directory structure for Oracle Clusterware is different from that of a database instance. There is only one instance of Oracle Clusterware on a system, so Clusterware ADR homes use only a system's host name as a differentiator.

When Oracle Clusterware is configured, the ADR home uses crs for both the product type and the instance ID, and the system host name is used for the product ID. Thus, on a host named dbprod01, the CRS ADR home would be:

ADR_base/diag/crs/dbprod01/crs/
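The database, Oracle ASM, and Oracle Clusterware examples above all follow the single path rule ADR_base/diag/product_type/product_id/instance_id. The following Python sketch shows that rule. The helper names are hypothetical, paths are assumed to be Unix-style, and /u01/oracle is used as an example ADR base.

```python
import posixpath


def adr_home(adr_base: str, product_type: str, product_id: str, instance_id: str) -> str:
    """ADR home = ADR_base/diag/product_type/product_id/instance_id."""
    return posixpath.join(adr_base, "diag", product_type, product_id, instance_id)


def database_adr_home(adr_base: str, db_unique_name: str, sid: str) -> str:
    # Oracle Database: product_type is rdbms, product_id is DB_UNIQUE_NAME,
    # and instance_id is the SID (Table 7-1).
    return adr_home(adr_base, "rdbms", db_unique_name, sid)


def clusterware_adr_home(adr_base: str, host_name: str) -> str:
    # Oracle Clusterware: crs for product type and instance ID,
    # and the host name as the product ID.
    return adr_home(adr_base, "crs", host_name, "crs")


print(database_adr_home("/u01/oracle", "orclbi", "orclbi"))  # /u01/oracle/diag/rdbms/orclbi/orclbi
print(adr_home("/u01/oracle", "asm", "+asm", "+asm"))         # /u01/oracle/diag/asm/+asm/+asm
print(clusterware_adr_home("/u01/oracle", "dbprod01"))        # /u01/oracle/diag/crs/dbprod01/crs
```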

ADR in an Oracle Real Application Clusters Environment

In an Oracle Real Application Clusters (Oracle RAC) environment, each node can have ADR base on its own local storage, or ADR base can be set to a location on shared storage. You can use ADRCI to view aggregated diagnostic data from all instances on a single report.

ADR in Oracle Client

Each installation of Oracle Client includes an ADR for diagnostic data associated with critical failures in any of the Oracle Client components. The ADRCI utility is installed with Oracle Client so that you can examine diagnostic data and package it for upload to Oracle Support.

Viewing ADR Locations with the V$DIAG_INFO View

The V$DIAG_INFO view lists all important ADR locations for the current Oracle Database instance.

SELECT * FROM V$DIAG_INFO;

INST_ID NAME VALUE

------- --------------------- -------------------------------------------------------------

1 Diag Enabled TRUE

1 ADR Base /u01/oracle

1 ADR Home /u01/oracle/diag/rdbms/orclbi/orclbi

1 Diag Trace /u01/oracle/diag/rdbms/orclbi/orclbi/trace

1 Diag Alert /u01/oracle/diag/rdbms/orclbi/orclbi/alert

1 Diag Incident /u01/oracle/diag/rdbms/orclbi/orclbi/incident

1 Diag Cdump /u01/oracle/diag/rdbms/orclbi/orclbi/cdump

1 Health Monitor /u01/oracle/diag/rdbms/orclbi/orclbi/hm

1 Default Trace File /u01/oracle/diag/rdbms/orclbi/orclbi/trace/orcl_ora_22769.trc

1 Active Problem Count 8

1 Active Incident Count 20
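If you capture this output in a script (for example, from a SQL*Plus spool file), you can turn the rows into a lookup table. The following sketch is an illustration only. It assumes the whitespace layout shown above and a VALUE column that is a single token without spaces. The function name `parse_diag_info` is hypothetical. When you have a database connection, it is usually simpler to query the view directly with a predicate such as `WHERE NAME = 'Diag Trace'`.

```python
def parse_diag_info(output: str) -> dict[str, str]:
    """Map NAME to VALUE from SQL*Plus output of SELECT * FROM V$DIAG_INFO.

    Assumes each data row starts with a numeric INST_ID and that VALUE is a
    single whitespace-free token (true for the paths and counts shown above).
    """
    info: dict[str, str] = {}
    for line in output.splitlines():
        parts = line.split()
        # Skip blank lines, the column header, and the dashed separator.
        if len(parts) < 3 or not parts[0].isdigit():
            continue
        info[" ".join(parts[1:-1])] = parts[-1]
    return info


SAMPLE = """
INST_ID NAME VALUE
------- --------------------- ------------------------------------
1 ADR Home /u01/oracle/diag/rdbms/orclbi/orclbi
1 Diag Trace /u01/oracle/diag/rdbms/orclbi/orclbi/trace
1 Diag Alert /u01/oracle/diag/rdbms/orclbi/orclbi/alert
1 Active Incident Count 20
"""

info = parse_diag_info(SAMPLE)
home = info["ADR Home"]
# The trace and alert locations are subdirectories of the ADR home (Table 7-2).
for name in ("Diag Trace", "Diag Alert"):
    print(name, "->", info[name].removeprefix(home + "/"))
```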

The following table describes some of the information displayed by this view.

Table 7-3 Data in the V$DIAG_INFO View

| Name | Description |
|---|---|
| ADR Base | Path of ADR base |
| ADR Home | Path of ADR home for the current database instance |
| Diag Trace | Location of background process trace files, server process trace files, SQL trace files, and the text-formatted version of the alert log |
| Diag Alert | Location of the XML-formatted version of the alert log |
| Default Trace File | Path to the trace file for the current session |

Viewing Critical Errors with the V$DIAG_CRITICAL_ERROR View

The V$DIAG_CRITICAL_ERROR view lists all of the non-internal errors designated as critical errors for the current Oracle Database release. The view does not list internal errors because internal errors are always designated as critical errors.

The following example shows the output for the V$DIAG_CRITICAL_ERROR view:

SELECT * FROM V$DIAG_CRITICAL_ERROR;

FACILITY ERROR

---------- ----------------------------------------------------------------

ORA 7445

ORA 4030

ORA 4031

ORA 29740

ORA 255

ORA 355

ORA 356

ORA 239

ORA 240

ORA 494

ORA 3137

ORA 227

ORA 353

ORA 1578

ORA 32701

ORA 32703

ORA 29770

ORA 29771

ORA 445

ORA 25319

OCI 3106

OCI 3113

OCI 3135

The following table describes the information displayed by this view.

Table 7-4 Data in the V$DIAG_CRITICAL_ERROR View

| Column | Description |
|---|---|
| FACILITY | The facility that can report the error, such as Oracle Database (ORA) or Oracle Call Interface (OCI) |
| ERROR | The error number |
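The following sketch combines the two rules above: errors listed in the view are critical, and internal errors are always critical even though the view does not list them. It assumes that ORA-00600 stands for the internal error. The function names are hypothetical, and the small sample output is abbreviated from the listing above.

```python
import re

# Assumption: ORA-00600 is the generic internal error. Internal errors are
# always critical, so V$DIAG_CRITICAL_ERROR does not list them.
INTERNAL_ERRORS = {("ORA", 600)}

# A data row is a facility code followed by an error number, e.g. "ORA 7445".
ROW = re.compile(r"\b([A-Z]+)\s+(\d+)\b")


def parse_critical_errors(output: str) -> set[tuple[str, int]]:
    """Collect (FACILITY, ERROR) pairs from V$DIAG_CRITICAL_ERROR output."""
    return {(facility, int(code)) for facility, code in ROW.findall(output)}


def is_critical(facility: str, code: int, listed: set[tuple[str, int]]) -> bool:
    """True if the error is internal or is designated critical in the view."""
    key = (facility.upper(), code)
    return key in INTERNAL_ERRORS or key in listed


listed = parse_critical_errors("""
FACILITY ERROR
---------- -----
ORA 7445
ORA 4031
OCI 3113
""")
print(is_critical("ORA", 4031, listed))  # True: listed in the view
print(is_critical("ORA", 600, listed))   # True: internal error
print(is_critical("ORA", 942, listed))   # False
```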

See Also:

"About Incidents and Problems" for more information about internal errors

7.2 About Investigating, Reporting, and Resolving a Problem

You can use the Enterprise Manager Support Workbench (Support Workbench) to investigate and report a problem (critical error), and in some cases, resolve the problem. You can use a "roadmap" that summarizes the typical set of tasks that you must perform.

Note:

The tasks described in this section are all Cloud Control–based. You can also accomplish all of these tasks (or their equivalents) with the ADRCI command-line utility, with PL/SQL packages such as DBMS_HM and DBMS_SQLDIAG, and with other software tools. See Oracle Database Utilities for more information on the ADRCI utility, and see Oracle Database PL/SQL Packages and Types Reference for information on PL/SQL packages.

- Roadmap — Investigating, Reporting, and Resolving a Problem

You can begin investigating a problem by starting from the Support Workbench home page in Cloud Control. However, the more typical workflow begins with a critical error alert on the Database Home page.

- Task 1: View Critical Error Alerts in Cloud Control

You begin the process of investigating problems (critical errors) by reviewing critical error alerts on the Database Home page or Oracle Automatic Storage Management Home page.

- Task 2: View Problem Details

You continue your investigation from the Incident Manager Problem Details page.

- Task 3: (Optional) Gather Additional Diagnostic Information

You can perform the following activities to gather additional diagnostic information for a problem. This additional information is then automatically included in the diagnostic data uploaded to Oracle Support. If you are unsure about performing these activities, then check with your Oracle Support representative.

- Task 4: (Optional) Create a Service Request

At this point, you can create an Oracle Support service request and record the service request number with the problem information.

- Task 5: Package and Upload Diagnostic Data to Oracle Support

For this task, you use the quick packaging process of the Support Workbench to package and upload the diagnostic information for the problem to Oracle Support.

- Task 6: Track the Service Request and Implement Any Repairs

After uploading diagnostic information to Oracle Support, you might perform various activities to track the service request, to collect additional diagnostic information, and to implement repairs.

See Also:

"About the Oracle Database Fault Diagnosability Infrastructure" for more information on problems and their diagnostic data

Parent topic: Diagnosing and Resolving Problems

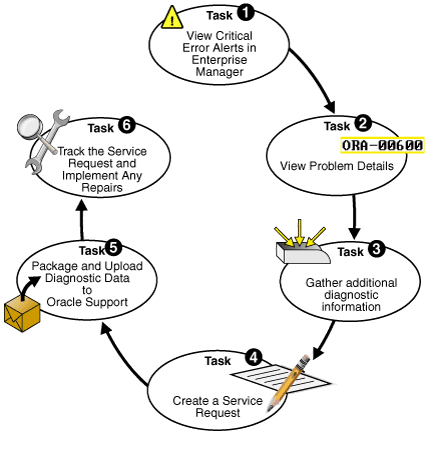

7.2.1 Roadmap — Investigating, Reporting, and Resolving a Problem

You can begin investigating a problem by starting from the Support Workbench home page in Cloud Control. However, the more typical workflow begins with a critical error alert on the Database Home page.

Figure 7-3 illustrates the tasks that you complete to investigate, report, and in some cases, resolve a problem.

Figure 7-3 Workflow for Investigating, Reporting, and Resolving a Problem

Description of "Figure 7-3 Workflow for Investigating, Reporting, and Resolving a Problem"

The following are task descriptions. Subsequent sections provide details for each task.

-

Task 1: View Critical Error Alerts in Cloud Control

Start by accessing the Database Home page in Cloud Control and reviewing critical error alerts. Select an alert for which to view details, and then go to the Problem Details page.

-

Task 2: View Problem Details

Examine the problem details and view a list of all incidents that were recorded for the problem. Display findings from any health checks that were automatically run.

-

Task 3: (Optional) Gather Additional Diagnostic Information

Optionally run additional health checks or other diagnostics. For SQL-related errors, optionally invoke the SQL Test Case Builder, which gathers all required data related to a SQL problem and packages the information in a way that enables the problem to be reproduced at Oracle Support.

-

Task 4: (Optional) Create a Service Request

Optionally create a service request with My Oracle Support and record the service request number with the problem information. If you skip this step, you can create a service request later, or the Support Workbench can create one for you.

-

Task 5: Package and Upload Diagnostic Data to Oracle Support

Invoke a guided workflow (a wizard) that automatically packages the gathered diagnostic data for a problem and uploads the data to Oracle Support.

-

Task 6: Track the Service Request and Implement Any Repairs

Optionally maintain an activity log for the service request in the Support Workbench. Run Oracle advisors to help repair SQL failures or corrupted data.

See Also:

Parent topic: About Investigating, Reporting, and Resolving a Problem

7.2.2 Task 1: View Critical Error Alerts in Cloud Control

You begin the process of investigating problems (critical errors) by reviewing critical error alerts on the Database Home page or Oracle Automatic Storage Management Home page.

To view critical error alerts:

Parent topic: About Investigating, Reporting, and Resolving a Problem

7.2.3 Task 2: View Problem Details

You continue your investigation from the Incident Manager Problem Details page.

To view problem details:

Parent topic: About Investigating, Reporting, and Resolving a Problem

7.2.4 Task 3: (Optional) Gather Additional Diagnostic Information

You can perform the following activities to gather additional diagnostic information for a problem. This additional information is then automatically included in the diagnostic data uploaded to Oracle Support. If you are unsure about performing these activities, then check with your Oracle Support representative.

-

Manually invoke additional health checks.

-

Invoke the SQL Test Case Builder.

Parent topic: About Investigating, Reporting, and Resolving a Problem

7.2.5 Task 4: (Optional) Create a Service Request

At this point, you can create an Oracle Support service request and record the service request number with the problem information.

If you choose to skip this task, then the Support Workbench will automatically create a draft service request for you in "Task 5: Package and Upload Diagnostic Data to Oracle Support".

To create a service request:

-

From the Enterprise menu, select My Oracle Support, then Service Requests.

The My Oracle Support Login and Registration page appears.

-

Log in to My Oracle Support and create a service request in the usual manner.

(Optional) Remember the service request number (SR#) for the next step.

-

(Optional) Return to the Problem Details page, and then do the following:

-

In the Summary section, click the Edit button that is adjacent to the SR# label.

-

Enter the SR#, and then click OK.

The SR# is recorded in the Problem Details page. This is for your reference only. See "Viewing Problems with the Support Workbench" for information about returning to the Problem Details page.

-

Parent topic: About Investigating, Reporting, and Resolving a Problem

7.2.6 Task 5: Package and Upload Diagnostic Data to Oracle Support

For this task, you use the quick packaging process of the Support Workbench to package and upload the diagnostic information for the problem to Oracle Support.

Quick packaging has a minimum of steps, organized in a guided workflow (a wizard). The wizard assists you with creating an incident package (package) for a single problem, creating a zip file from the package, and uploading the file. With quick packaging, you are not able to edit or otherwise customize the diagnostic information that is uploaded. However, quick packaging is the more direct, straightforward method to package and upload diagnostic data.

To edit or remove sensitive data from the diagnostic information, include additional user files (such as application configuration files or scripts), or perform other customizations before uploading, you must use the custom packaging process, which is more manual and has more steps. See "Reporting Problems" for instructions. If you choose to follow those instructions instead of the instructions here in Task 5, do so now, and then continue with Task 6: Track the Service Request and Implement Any Repairs when you are finished.

To package and upload diagnostic data to Oracle Support:

When the Quick Packaging wizard is complete, if a new draft service request was created, then the confirmation message contains a link to the draft service request in My Oracle Support in Cloud Control. You can review and edit the service request by clicking the link.

The package created by the Quick Packaging wizard remains available in the Support Workbench. You can then modify it with custom packaging operations (such as adding new incidents) and upload again at a later time. See "Viewing and Modifying Incident Packages".

Parent topic: About Investigating, Reporting, and Resolving a Problem

7.2.7 Task 6: Track the Service Request and Implement Any Repairs

After uploading diagnostic information to Oracle Support, you might perform various activities to track the service request, to collect additional diagnostic information, and to implement repairs.

Among these activities are the following:

-

Adding an Oracle bug number to the problem information.

To do so, on the Problem Details page, click the Edit button that is adjacent to the Bug# label. This is for your reference only.

-

Adding comments to the problem activity log.

You may want to do this to share problem status or history information with other DBAs in your organization. For example, you could record the results of your conversations with Oracle Support. To add comments, complete the following steps:

-

Access the Problem Details page for the problem, as described in "Viewing Problems with the Support Workbench".

-

Click Activity Log to display the Activity Log subpage.

-

In the Comment field, enter a comment, and then click Add Comment.

Your comment is recorded in the activity log.

-

-

As new incidents occur, adding them to the package and reuploading.

For this activity, you must use the custom packaging method described in "Reporting Problems".

-

Running health checks.

-

Running a suggested Oracle advisor to implement repairs.

Access the suggested advisor in one of the following ways:

-

Problem Details page—In the Self-Service tab of the Investigate and Resolve section

-

Support Workbench home page—on the Checker Findings subpage

-

Incident Details page—on the Checker Findings subpage

Table 7-5 lists the advisors that help repair critical errors.

Table 7-5 Oracle Advisors that Help Repair Critical Errors

| Advisor | Critical Errors Addressed |
|---|---|
| Data Recovery Advisor | Corrupted blocks, corrupted or missing files, and other data failures |
| SQL Repair Advisor | SQL statement failures |

-

See Also:

"Viewing Problems with the Support Workbench" for instructions for viewing the Checker Findings subpage of the Incident Details page

Parent topic: About Investigating, Reporting, and Resolving a Problem

7.3 Diagnosing Problems

This section describes various methods to diagnose problems in an Oracle database.

- Identifying Problems Reactively

This section describes how to identify Oracle database problems reactively.

- Identifying Problems Proactively with Health Monitor

You can run diagnostic checks on a database with Health Monitor.

- Gathering Additional Diagnostic Data

This section describes how to gather additional diagnostic data using alert log and trace files.

- Creating Test Cases with SQL Test Case Builder

SQL Test Case Builder is a tool that automatically gathers information needed to reproduce the problem in a different database instance.

Parent topic: Diagnosing and Resolving Problems

7.3.1 Identifying Problems Reactively

This section describes how to identify Oracle database problems reactively.

- Viewing Problems with the Support Workbench

You can use the Support Workbench home page in Cloud Control to view all the problems or the problems in a specific time period.

- Adding Problems Manually to the Automatic Diagnostic Repository

You can use Support Workbench in Cloud Control to manually add a problem to the ADR.

- Creating Incidents Manually

You can create incidents manually by using the Automatic Diagnostic Repository Command Interpreter (ADRCI) utility.

- Using DBMS_HCHECK to Identify Data Dictionary Inconsistencies

DBMS_HCHECK is a read-only and lightweight PL/SQL package procedure that helps you identify database dictionary inconsistencies.

Parent topic: Diagnosing Problems

7.3.1.1 Viewing Problems with the Support Workbench

You can use the Support Workbench home page in Cloud Control to view all the problems or the problems in a specific time period.

Figure 7-4 Support Workbench Home Page in Cloud Control

Description of "Figure 7-4 Support Workbench Home Page in Cloud Control"

To access the Support Workbench home page (database or Oracle ASM):

-

Access the Database Home page in Cloud Control.

-

From the Oracle Database menu, select Diagnostics, then Support Workbench.

The Support Workbench home page for the database instance appears, showing the Problems subpage. By default the problems from the last 24 hours are displayed.

-

To view the Support Workbench home page for the Oracle ASM instance, click the link Support Workbench (+ASM_hostname) in the Related Links section.

To view problems and incidents:

-

On the Support Workbench home page, select the desired time period from the View list. To view all problems, select All.

-

(Optional) If the Performance and Critical Error Timeline section is hidden, click the Show/Hide icon adjacent to the section heading to show the section.

This section enables you to view any correlation between performance changes and incident occurrences.

-

(Optional) Under the Details column, click Show to display a list of all incidents for a problem, and then click an incident ID to display the Incident Details page.

To view details for a particular problem:

See Also:

Parent topic: Identifying Problems Reactively

7.3.1.2 Adding Problems Manually to the Automatic Diagnostic Repository

You can use Support Workbench in Cloud Control to manually add a problem to the ADR.

System-generated problems, such as critical errors generated internally to the database, are automatically added to the Automatic Diagnostic Repository (ADR) and tracked in the Support Workbench.

From the Support Workbench, you can gather additional diagnostic data on these problems, upload diagnostic data to Oracle Support, and in some cases, resolve the problems, all with the easy-to-use workflow that is explained in "About Investigating, Reporting, and Resolving a Problem".

There may be a situation in which you want to manually add a problem that you noticed to the ADR, so that you can put that problem through that same workflow. An example of such a situation might be a global database performance problem that was not diagnosed by Automatic Database Diagnostic Monitor (ADDM). The Support Workbench includes a mechanism for you to create and work with such a user-reported problem.

To create a user-reported problem:

See Also:

"About the Oracle Database Fault Diagnosability Infrastructure" for more information on problems and the ADR

Parent topic: Identifying Problems Reactively

7.3.1.3 Creating Incidents Manually

You can create incidents manually by using the Automatic Diagnostic Repository Command Interpreter (ADRCI) utility.

To create an incident manually by using the ADRCI utility:

-

Ensure that the ORACLE_HOME and PATH environment variables are set properly. The PATH environment variable must include the ORACLE_HOME/bin directory.

-

Start the ADRCI utility by running the following command at the operating system command prompt:

adrci

The ADRCI utility starts and displays the following prompt:

adrci>

-

Run an ADRCI command with the following syntax to create an incident manually:

adrci> dde create incident type incident_type

Replace incident_type with the type of incident that you want to create.
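You can also run the same command without an interactive session, which is convenient in scripts. The following sketch assumes the `adrci exec="..."` command-line form (with commands separated by semicolons) that is documented in Oracle Database Utilities. The helper names are hypothetical. The sketch includes an optional SET HOMEPATH step because some ADRCI commands operate on only one ADR home at a time. Replace incident_type with a real incident type.

```python
import shutil
import subprocess
from typing import Optional


def adrci_argv(commands: list[str], homepath: Optional[str] = None) -> list[str]:
    """Build an argument vector that runs ADRCI commands non-interactively."""
    cmds = list(commands)
    if homepath:
        # Restrict ADRCI to one ADR home (a path relative to ADR base).
        cmds.insert(0, f"set homepath {homepath}")
    return ["adrci", "exec=" + "; ".join(cmds)]


def create_incident(incident_type: str, homepath: Optional[str] = None) -> str:
    """Run DDE CREATE INCIDENT through ADRCI and return its standard output."""
    argv = adrci_argv([f"dde create incident type {incident_type}"], homepath)
    if shutil.which("adrci") is None:
        raise FileNotFoundError("adrci not found; add ORACLE_HOME/bin to PATH")
    result = subprocess.run(argv, capture_output=True, text=True, check=True)
    return result.stdout


print(adrci_argv(["dde create incident type incident_type"], "diag/rdbms/orclbi/orclbi"))
```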

See Also:

-

Oracle Database Utilities for more information about the ADRCI utility

Parent topic: Identifying Problems Reactively

7.3.1.4 Using DBMS_HCHECK to Identify Data Dictionary Inconsistencies

DBMS_HCHECK is a read-only and lightweight PL/SQL package procedure that helps you identify database dictionary inconsistencies.

DBMS_HCHECK identifies database dictionary inconsistencies that manifest as unexpected entries in the RDBMS dictionary tables or as invalid references between dictionary tables. Database dictionary inconsistencies can cause process failures and, in some cases, an instance crash. Such inconsistencies may surface as internal ORA-00600 errors. DBMS_HCHECK helps you identify such inconsistencies and, in some cases, provides guided remediation to resolve the problem and avoid such database failures.

Unexpected entries in the dictionary tables or invalid references between dictionary tables, for example, include the following:

- A LOB segment not in OBJ$

- An entry in SOURCE$ not in OBJ$

- Invalid data between OBJ$-PARTOBJ$ and TABPART$

- A segment with no owner

- A table with no segment

- A segment with no object entry

- A recycle bin object not in the recyclebin$

- A Control Seq value that is near the limit
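To make the idea of an invalid reference more concrete, the following toy model checks two of the cases above (a table with no segment, and a segment with no object entry) by using set lookups. This is a hypothetical illustration, not how DBMS_HCHECK is implemented. The dictionaries only stand in for TAB$ and SEG$ rows keyed by (tablespace, relative file, block). A real check must also allow for legitimate cases, such as tables created with deferred segment creation, which do not have a segment yet. Some of the sample values are taken from Example 7-1.

```python
# Hypothetical stand-ins for dictionary rows. A segment is identified by
# its header address: (tablespace number, relative file number, block number).
SegAddr = tuple[int, int, int]


def orphaned_tables(tab: dict[int, SegAddr], seg: dict[SegAddr, str]) -> list[int]:
    """Object numbers of tables whose segment address has no SEG$ row."""
    return sorted(obj for obj, addr in tab.items() if addr not in seg)


def orphaned_segments(seg: dict[SegAddr, str], referenced: set[SegAddr]) -> list[SegAddr]:
    """Segment addresses that no object entry refers to."""
    return sorted(addr for addr in seg if addr not in referenced)


tab = {83241: (5, 5, 11), 83300: (5, 5, 40)}   # obj# -> segment address
seg = {(5, 5, 40): "TABLE", (5, 5, 26): "LOB"}  # segment address -> type

print(orphaned_tables(tab, seg))                    # [83241]
print(orphaned_segments(seg, set(tab.values())))    # [(5, 5, 26)]
```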

To run all the checks or only the critical checks defined by DBMS_HCHECK, connect as the SYS user, and then run the following commands:

Full check

SQL> set serveroutput on size unlimited

SQL> execute dbms_hcheck.full

Critical check

SQL> set serveroutput on size unlimited

SQL> execute dbms_hcheck.critical

Optionally, turn on spooling to redirect the output to a server-side flat file. By default, when you run it as SYS, the DBMS_HCHECK package also creates a trace file whose name ends with HCHECK.trc.

For example: /<path>/diag/rdbms/<db_name>/<oracle_sid>/trace/<oracle_sid>_<ora>_<pid>_HCHECK.trc.

Each check reports one of the following results:

- CRITICAL: Requires an immediate fix.

- FAIL: Requires resolution on priority.

- WARN: Good to resolve.

- PASS: No issues.
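The result of each check appears in the last column of its output line, as shown in Example 7-1 and Example 7-2. If you spool that output to a file, a short script can count the results and list the checks that need attention. This sketch assumes the line layout shown in those examples. The name `summarize_hcheck` is hypothetical, and the script does not replace triage by Oracle Support, as described in the note below.

```python
import re
from collections import Counter

# A check line looks like:
# ".- TableSeg ... 2300000000 <= *All Rel* 03/07 03:17:49 FAIL"
CHECK_LINE = re.compile(r"^\.-\s+(\w+)\s.*\s(PASS|WARN|FAIL|CRITICAL)\s*$")
TRACE_LINE = re.compile(r"^Trace File:\s*(\S+)")


def summarize_hcheck(output: str):
    """Return (result counts, non-PASS checks, trace file path or None)."""
    counts = Counter()
    attention = []
    trace_file = None
    for raw in output.splitlines():
        line = raw.strip()
        if m := CHECK_LINE.match(line):
            name, result = m.groups()
            counts[result] += 1
            if result != "PASS":
                attention.append((name, result))
        elif m := TRACE_LINE.match(line):
            trace_file = m.group(1)
    return counts, attention, trace_file


SAMPLE = """Trace File: /oracle/log/diag/rdbms/orcl/orcl/trace/orcl_ora_2574906_HCHECK.trc
.- UndoSeg ... 2300000000 <= *All Rel* 03/07 03:17:49 PASS
.- TableSeg ... 2300000000 <= *All Rel* 03/07 03:17:49 FAIL
HCKE-0019: Orphaned TAB$ (no SEG$) (Doc ID 1360889.1)
.- InvalidTSMaxSCN ... 2300000000 > 1202000000 03/07 03:17:50 CRITICAL
"""

counts, attention, trace_file = summarize_hcheck(SAMPLE)
print(dict(counts))
print(attention)
print(trace_file)
```

In Example 7-1, the "potential problem(s)" count in the summary appears to equal the number of FAIL and CRITICAL results combined (3 + 1 = 4).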

Note:

In all cases, any output reporting "problems" must be triaged by Oracle Support to confirm if any action is required.

Example 7-1 Full check run

SQL> set serveroutput on size unlimited

SQL> execute dbms_hcheck.full

dbms_hcheck on 07-MAR-2023 03:17:48

----------------------------------------------

Catalog Version 21.0.0.0.0 (2300000000)

db_name: ORCL

Is CDB?: NO

Trace File: /oracle/log/diag/rdbms/orcl/orcl/trace/orcl_ora_2574906_HCHECK.trc

Catalog Fixed

Procedure Name Version Vs Release Timestamp Result

------------------------------ ... ---------- -- ---------- -------------- ------

.- OIDOnObjCol ... 2300000000 <= *All Rel* 03/07 03:17:48 PASS

.- LobNotInObj ... 2300000000 <= *All Rel* 03/07 03:17:48 PASS

.- SourceNotInObj ... 2300000000 <= *All Rel* 03/07 03:17:48 PASS

.- OversizedFiles ... 2300000000 <= *All Rel* 03/07 03:17:48 PASS

.- PoorDefaultStorage ... 2300000000 <= *All Rel* 03/07 03:17:48 PASS

.- PoorStorage ... 2300000000 <= *All Rel* 03/07 03:17:48 PASS

.- TabPartCountMismatch ... 2300000000 <= *All Rel* 03/07 03:17:49 PASS

.- TabComPartObj ... 2300000000 <= *All Rel* 03/07 03:17:49 PASS

.- Mview ... 2300000000 <= *All Rel* 03/07 03:17:49 PASS

.- ValidDir ... 2300000000 <= *All Rel* 03/07 03:17:49 PASS

.- DuplicateDataobj ... 2300000000 <= *All Rel* 03/07 03:17:49 PASS

.- ObjSyn ... 2300000000 <= *All Rel* 03/07 03:17:49 PASS

.- ObjSeq ... 2300000000 <= *All Rel* 03/07 03:17:49 PASS

.- UndoSeg ... 2300000000 <= *All Rel* 03/07 03:17:49 PASS

.- IndexSeg ... 2300000000 <= *All Rel* 03/07 03:17:49 PASS

.- IndexPartitionSeg ... 2300000000 <= *All Rel* 03/07 03:17:49 PASS

.- IndexSubPartitionSeg ... 2300000000 <= *All Rel* 03/07 03:17:49 PASS

.- TableSeg ... 2300000000 <= *All Rel* 03/07 03:17:49 FAIL

HCKE-0019: Orphaned TAB$ (no SEG$) (Doc ID 1360889.1)

ORPHAN TAB$: OBJ#=83241 DOBJ#=83241 TS=5 RFILE/BLOCK=5/11 TABLE=SYS.ORPHANSEG BOBJ#=

.- TablePartitionSeg ... 2300000000 <= *All Rel* 03/07 03:17:49 PASS

.- TableSubPartitionSeg ... 2300000000 <= *All Rel* 03/07 03:17:49 PASS

.- PartCol ... 2300000000 <= *All Rel* 03/07 03:17:49 PASS

.- ValidSeg ... 2300000000 <= *All Rel* 03/07 03:17:49 FAIL

HCKE-0023: Orphaned SEG$ Entry (Doc ID 1360934.1)

ORPHAN SEG$: SegType=LOB TS=5 RFILE/BLOCK=5/26

.- IndPartObj ... 2300000000 <= *All Rel* 03/07 03:17:49 PASS

.- DuplicateBlockUse ... 2300000000 <= *All Rel* 03/07 03:17:49 PASS

.- FetUet ... 2300000000 <= *All Rel* 03/07 03:17:49 PASS

.- Uet0Check ... 2300000000 <= *All Rel* 03/07 03:17:49 PASS

.- SeglessUET ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- ValidInd ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- ValidTab ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- IcolDepCnt ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- ObjIndDobj ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- TrgAfterUpgrade ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- ObjType0 ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- ValidOwner ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- StmtAuditOnCommit ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- PublicObjects ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- SegFreelist ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- ValidDepends ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- CheckDual ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- ObjectNames ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- ChkIotTs ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- NoSegmentIndex ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- NextObject ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- DroppedROTS ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- FilBlkZero ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- DbmsSchemaCopy ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- IdnseqObj ... 2300000000 > 1201000000 03/07 03:17:50 PASS

.- IdnseqSeq ... 2300000000 > 1201000000 03/07 03:17:50 PASS

.- ObjError ... 2300000000 > 1102000000 03/07 03:17:50 PASS

.- ObjNotLob ... 2300000000 <= *All Rel* 03/07 03:17:50 FAIL

HCKE-0049: OBJ$ LOB entry has no LOB$ or LOBFRAG$ entry (Doc ID 2125104.1)

OBJ$ LOB has no LOB$ entry: Obj=83243 Owner: SYS LOB Name: LOBC1

.- MaxControlfSeq ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- SegNotInDeferredStg ... 2300000000 > 1102000000 03/07 03:17:50 PASS

.- SystemNotRfile1 ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- DictOwnNonDefaultSYSTEM ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- ValidateTrigger ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- ObjNotTrigger ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

.- InvalidTSMaxSCN ... 2300000000 > 1202000000 03/07 03:17:50 CRITICAL

HCKE-0054: TS$ has Tablespace with invalid Maximum SCN (Doc ID 1360208.1)

TS$ has Tablespace with invalid Maximum SCN: TS#=5 Tablespace=HCHECK Online$=1

.- OBJRecycleBin ... 2300000000 <= *All Rel* 03/07 03:17:50 PASS

---------------------------------------

07-MAR-2023 03:17:50 Elapsed: 2 secs

---------------------------------------

Found 4 potential problem(s) and 0 warning(s)

Found 1 CRITICAL problem(s) needing attention

Contact Oracle Support with the output and trace file

to check if the above needs attention or not

BEGIN dbms_hcheck.full; END;

*

ERROR at line 1:

ORA-20000: dbms_hcheck found 1 critical issue(s). Trace file:

/oracle/log/diag/rdbms/orcl/orcl/trace/orcl_ora_2574906_HCHECK.trc

SQL>

Example 7-2 Critical check run

SQL> set serveroutput on size unlimited

SQL> execute dbms_hcheck.critical

dbms_hcheck on 07-MAR-2023 03:12:23

----------------------------------------------

Catalog Version 21.0.0.0.0 (2100000000)

db_name: ORCL

Is CDB?: NO

Trace File: /oracle/log/diag/rdbms/orcl/orcl/trace/orcl_ora_2574058_HCHECK.trc

Catalog Fixed

Procedure Name Version Vs Release Timestamp Result

------------------------------ ... ---------- -- ---------- -------------- ------

.- UndoSeg ... 2300000000 <= *All Rel* 03/07 03:12:23 PASS

.- MaxControlfSeq ... 2300000000 <= *All Rel* 03/07 03:12:23 PASS

.- InvalidTSMaxSCN ... 2300000000 > 1202000000 03/07 03:12:23 CRITICAL

HCKE-0054: TS$ has Tablespace with invalid Maximum SCN (Doc ID 1360208.1)

TS$ has Tablespace with invalid Maximum SCN: TS#=5 Tablespace=HCHECK Online$=1

---------------------------------------

07-MAR-2023 03:12:23 Elapsed: 0 secs

---------------------------------------

Found 1 potential problem(s) and 0 warning(s)

Found 1 CRITICAL problem(s) needing attention

Contact Oracle Support with the output and trace file

to check if the above needs attention or not

BEGIN dbms_hcheck.critical; END;

*

ERROR at line 1:

ORA-20000: dbms_hcheck found 1 critical issue(s). Trace file:

/ade/b/959592990/oracle/log/diag/rdbms/orcl/orcl/trace/orcl_ora_2574058_HCHECK.trc

SQL>

Related Topics

Parent topic: Identifying Problems Reactively

7.3.2 Identifying Problems Proactively with Health Monitor

You can run diagnostic checks on a database with Health Monitor.

- About Health Monitor

Oracle Database includes a framework called Health Monitor for running diagnostic checks on the database.

- Running Health Checks Manually

Health Monitor can run health checks manually either by using the DBMS_HM PL/SQL package or by using the Cloud Control interface, found on the Checkers subpage of the Advisor Central page.

- Viewing Checker Reports

After a checker has run, you can view a report of its execution. The report contains findings, recommendations, and other information. You can view reports using Cloud Control, the ADRCI utility, or the DBMS_HM PL/SQL package. That topic includes a table of the report formats available with each viewing method.

- Health Monitor Views

Instead of requesting a checker report, you can view the results of a specific checker run by directly querying the ADR data from which reports are created.

- Health Check Parameters Reference

Some health checks require parameters. Parameters with a default value of (none) are mandatory.

Parent topic: Diagnosing Problems

7.3.2.1 About Health Monitor

Oracle Database includes a framework called Health Monitor for running diagnostic checks on the database.

- About Health Monitor Checks

Health Monitor checks (also known as checkers, health checks, or checks) examine various layers and components of the database.

- Types of Health Checks

Health Monitor runs several different types of checks.

Parent topic: Identifying Problems Proactively with Health Monitor

7.3.2.1.1 About Health Monitor Checks

Health Monitor checks (also known as checkers, health checks, or checks) examine various layers and components of the database.

Health checks detect file corruptions, physical and logical block corruptions, undo and redo corruptions, data dictionary corruptions, and more. The health checks generate reports of their findings and, in many cases, recommendations for resolving problems. Health checks can be run in two ways:

-

Reactive—The fault diagnosability infrastructure can run health checks automatically in response to a critical error.

-

Manual—As a DBA, you can manually run health checks using either the DBMS_HM PL/SQL package or the Cloud Control interface. You can run checkers on a regular basis if desired, or Oracle Support may ask you to run a checker while working with you on a service request.

Health Monitor checks store findings, recommendations, and other information in the Automatic Diagnostic Repository (ADR).

Health checks can run in two modes:

- DB-online mode means the check can be run while the database is mounted or open (that is, in MOUNT mode or OPEN mode).

- DB-offline mode means the check can be run when the instance is available but the database itself is closed (that is, in NOMOUNT mode).

All the health checks can be run in DB-online mode. Only the Redo Integrity Check and the DB Structure Integrity Check can be used in DB-offline mode.
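The mode rules above amount to a small lookup from instance state to permitted checks. The following Python sketch is illustrative only: the function name `runnable_checks` and the state strings are assumptions, while the check names are the ones Health Monitor lists in V$HM_CHECK.

```python
from typing import List

# Non-internal checks as reported by V$HM_CHECK.
ALL_CHECKS = (
    "DB Structure Integrity Check",
    "Data Block Integrity Check",
    "Redo Integrity Check",
    "Transaction Integrity Check",
    "Undo Segment Integrity Check",
    "Dictionary Integrity Check",
)

# Only these two checks support DB-offline mode (instance in NOMOUNT).
OFFLINE_CAPABLE = frozenset({"Redo Integrity Check", "DB Structure Integrity Check"})


def runnable_checks(state: str) -> List[str]:
    """Return the checks allowed for a hypothetical instance state string.

    `state` is "NOMOUNT", "MOUNT", or "OPEN" (case-insensitive).
    """
    state = state.upper()
    if state in ("MOUNT", "OPEN"):
        # DB-online mode: every check can run.
        return list(ALL_CHECKS)
    if state == "NOMOUNT":
        # DB-offline mode: only the offline-capable subset can run.
        return [check for check in ALL_CHECKS if check in OFFLINE_CAPABLE]
    raise ValueError(f"unknown instance state: {state!r}")
```

For example, `runnable_checks("NOMOUNT")` yields only the DB Structure Integrity Check and the Redo Integrity Check.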

Parent topic: About Health Monitor

7.3.2.1.2 Types of Health Checks

Health Monitor runs several different types of checks.

Health Monitor runs the following checks:

- DB Structure Integrity Check: This check verifies the integrity of database files and reports failures if these files are inaccessible, corrupt, or inconsistent. If the database is in mount or open mode, this check examines the log files and data files listed in the control file. If the database is in NOMOUNT mode, only the control file is checked.

- Data Block Integrity Check: This check detects disk image block corruptions such as checksum failures, head/tail mismatch, and logical inconsistencies within the block. Most corruptions can be repaired using Block Media Recovery. Corrupted block information is also captured in the V$DATABASE_BLOCK_CORRUPTION view. This check does not detect inter-block or inter-segment corruption.

- Redo Integrity Check: This check scans the contents of the redo log for accessibility and corruption, as well as the archive logs, if available. The Redo Integrity Check reports failures such as archive log or redo corruption.

- Undo Segment Integrity Check: This check finds logical undo corruptions. After locating an undo corruption, this check uses PMON and SMON to try to recover the corrupted transaction. If this recovery fails, then Health Monitor stores information about the corruption in V$CORRUPT_XID_LIST. Most undo corruptions can be resolved by forcing a commit.

- Transaction Integrity Check: This check is identical to the Undo Segment Integrity Check except that it checks only one specific transaction.

- Dictionary Integrity Check: This check examines the integrity of core dictionary objects, such as tab$ and col$. It performs the following operations:

  - Verifies the contents of dictionary entries for each dictionary object.

  - Performs a cross-row level check, which verifies that logical constraints on rows in the dictionary are enforced.

  - Performs an object relationship check, which verifies that parent-child relationships between dictionary objects are enforced.

  The Dictionary Integrity Check operates on the following dictionary objects:

  tab$, clu$, fet$, uet$, seg$, undo$, ts$, file$, obj$, ind$, icol$, col$, user$, con$, cdef$, ccol$, bootstrap$, objauth$, ugroup$, tsq$, syn$, view$, typed_view$, superobj$, seq$, lob$, coltype$, subcoltype$, ntab$, refcon$, opqtype$, dependency$, access$, viewcon$, icoldep$, dual$, sysauth$, objpriv$, defrole$, and ecol$.

Parent topic: About Health Monitor

7.3.2.2 Running Health Checks Manually

Health Monitor can run health checks manually either by using the DBMS_HM PL/SQL package or by using the Cloud Control interface, found on the Checkers subpage of the Advisor Central page.

- Running Health Checks Using the DBMS_HM PL/SQL Package

The DBMS_HM procedure for running a health check is called RUN_CHECK.

- Running Health Checks Using Cloud Control

Cloud Control provides an interface for running Health Monitor checkers.

Parent topic: Identifying Problems Proactively with Health Monitor

7.3.2.2.1 Running Health Checks Using the DBMS_HM PL/SQL Package

The DBMS_HM procedure for running a health check is called RUN_CHECK.

- To call RUN_CHECK, supply the name of the check and a name for the run, as follows:

BEGIN
  DBMS_HM.RUN_CHECK('Dictionary Integrity Check', 'my_run');
END;
/

- To obtain a list of health check names, run the following query:

SELECT name FROM v$hm_check WHERE internal_check='N';

Your output is similar to the following:

NAME
----------------------------------------------------------------
DB Structure Integrity Check
Data Block Integrity Check
Redo Integrity Check
Transaction Integrity Check
Undo Segment Integrity Check
Dictionary Integrity Check

Most health checks accept input parameters. You can view parameter names and descriptions with the V$HM_CHECK_PARAM view. Some parameters are mandatory while others are optional. If optional parameters are omitted, defaults are used. The following query displays parameter information for all health checks:

SELECT c.name check_name, p.name parameter_name, p.type,
       p.default_value, p.description
  FROM v$hm_check_param p, v$hm_check c
 WHERE p.check_id = c.id AND c.internal_check = 'N'
 ORDER BY c.name;
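Because the query above returns one row per parameter, a client that fetches it may want to group the rows by check. The following Python sketch assumes the rows arrive as tuples in the query's SELECT-list order (check_name, parameter_name, type, default_value, description); the function name `parameters_by_check` is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Optional, Tuple

# One row of the parameter query, in SELECT-list order:
# (check_name, parameter_name, type, default_value, description)
ParamRow = Tuple[str, str, str, Optional[str], str]


def parameters_by_check(rows: Iterable[ParamRow]) -> Dict[str, List[str]]:
    """Group parameter names under the health check they belong to."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for check_name, parameter_name, _type, _default, _description in rows:
        grouped[check_name].append(parameter_name)
    # Return a plain dict so missing keys raise KeyError instead of growing.
    return dict(grouped)
```

Rows keep their fetched order within each check, so the ORDER BY in the query still determines the overall layout.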

Input parameters are passed in the input_params argument as name/value pairs separated by semicolons (;). The following example illustrates how to pass the transaction ID as a parameter to the Transaction Integrity Check:

BEGIN
  DBMS_HM.RUN_CHECK (
    check_name   => 'Transaction Integrity Check',
    run_name     => 'my_run',
    input_params => 'TXN_ID=7.33.2');
END;
/
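If you drive Health Monitor from a client program, the name/value convention for input_params is easy to build programmatically. The following Python sketch assumes a PEP 249 (DB-API) cursor that accepts named bind variables as keyword arguments, such as one from the python-oracledb driver; the helper names `build_input_params` and `run_check` are hypothetical. It passes the check name, run name, and parameter string as bind variables instead of concatenating them into the PL/SQL text.

```python
from typing import Any, Mapping, Optional

# Anonymous PL/SQL block calling DBMS_HM.RUN_CHECK with named binds.
RUN_CHECK_SQL = """
BEGIN
  DBMS_HM.RUN_CHECK(
    check_name   => :check_name,
    run_name     => :run_name,
    input_params => :input_params);
END;"""


def build_input_params(params: Mapping[str, Any]) -> str:
    """Format parameters as NAME=value pairs separated by semicolons."""
    pairs = []
    for name, value in params.items():
        text = str(value)
        # Assumption: '=' separates a name from its value and ';' separates
        # pairs, so reject input that would make the string ambiguous.
        if "=" in name or ";" in name or ";" in text:
            raise ValueError(f"cannot encode parameter {name!r}={text!r}")
        pairs.append(f"{name}={text}")
    return ";".join(pairs)


def run_check(cursor, check_name: str, run_name: str,
              params: Optional[Mapping[str, Any]] = None) -> None:
    """Run one Health Monitor check through a DB-API cursor."""
    cursor.execute(
        RUN_CHECK_SQL,
        check_name=check_name,
        run_name=run_name,
        input_params=build_input_params(params or {}),
    )
```

For the example above, `run_check(cur, "Transaction Integrity Check", "my_run", {"TXN_ID": "7.33.2"})` binds the same input_params string, `TXN_ID=7.33.2`.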

See Also:

Oracle Database PL/SQL Packages and Types Reference for more examples of using DBMS_HM.

Parent topic: Running Health Checks Manually

7.3.2.2.2 Running Health Checks Using Cloud Control

Cloud Control provides an interface for running Health Monitor checkers.

To run a Health Monitor Checker using Cloud Control:

- Access the Database Home page.

- From the Performance menu, select Advisors Home.

- Click Checkers to view the Checkers subpage.

- In the Checkers section, click the checker you want to run.

- Enter values for input parameters or, for optional parameters, leave them blank to accept the defaults.

- Click OK, confirm your parameters, and click OK again.

Parent topic: Running Health Checks Manually

7.3.2.3 Viewing Checker Reports

After a checker has run, you can view a report of its execution. The report contains findings, recommendations, and other information. You can view reports using Cloud Control, the ADRCI utility, or the DBMS_HM PL/SQL package. The following table indicates the report formats available with each viewing method.

- About Viewing Checker Reports

Results of checker runs (findings, recommendations, and other information) are stored in the ADR, but reports are not generated immediately.

- Viewing Reports Using Cloud Control

You can also view Health Monitor reports and findings for a given checker run using Cloud Control.

- Viewing Reports Using DBMS_HM

You can view Health Monitor checker reports with the DBMS_HM package function GET_RUN_REPORT.

- Viewing Reports Using the ADRCI Utility

You can create and view Health Monitor checker reports using the ADRCI utility.

Parent topic: Identifying Problems Proactively with Health Monitor

7.3.2.3.1 About Viewing Checker Reports

Results of checker runs (findings, recommendations, and other information) are stored in the ADR, but reports are not generated immediately.

| Report Viewing Method | Report Formats Available |
|---|---|
| Cloud Control | HTML |
| DBMS_HM PL/SQL package | HTML, XML, and text |
| ADRCI utility | XML |

When you request a report with the DBMS_HM PL/SQL package or with Cloud Control, if the report does not yet exist, it is first generated from the checker run data in the ADR, stored as a report file in XML format in the HM subdirectory of the ADR home for the current instance, and then displayed. If the report file already exists, it is just displayed. When using the ADRCI utility, you must first run a command to generate the report file if it does not exist, and then run another command to display its contents.

The preferred method to view checker reports is with Cloud Control.

Parent topic: Viewing Checker Reports

7.3.2.3.2 Viewing Reports Using Cloud Control

You can also view Health Monitor reports and findings for a given checker run using Cloud Control.

To view run findings using Cloud Control:

Parent topic: Viewing Checker Reports

7.3.2.3.3 Viewing Reports Using DBMS_HM

You can view Health Monitor checker reports with the DBMS_HM package function GET_RUN_REPORT.

This function enables you to request HTML, XML, or text formatting. The default format is text, as shown in the following SQL*Plus example:

SET LONG 100000
SET LONGCHUNKSIZE 1000
SET PAGESIZE 1000
SET LINESIZE 512
SELECT DBMS_HM.GET_RUN_REPORT('HM_RUN_1061') FROM DUAL;

DBMS_HM.GET_RUN_REPORT('HM_RUN_1061')
-----------------------------------------------------------------------
Run Name : HM_RUN_1061
Run Id : 1061
Check Name : Data Block Integrity Check
Mode : REACTIVE
Status : COMPLETED
Start Time : 2007-05-12 22:11:02.032292 -07:00
End Time : 2007-05-12 22:11:20.835135 -07:00
Error Encountered : 0
Source Incident Id : 7418
Number of Incidents Created : 0

Input Parameters for the Run
 BLC_DF_NUM=1
 BLC_BL_NUM=64349

Run Findings And Recommendations
 Finding
 Finding Name : Media Block Corruption
 Finding ID : 1065
 Type : FAILURE
 Status : OPEN
 Priority : HIGH
 Message : Block 64349 in datafile 1:
   '/u01/app/oracle/dbs/t_db1.f' is media corrupt
 Message : Object BMRTEST1 owned by SYS might be unavailable
 Finding
 Finding Name : Media Block Corruption
 Finding ID : 1071
 Type : FAILURE
 Status : OPEN
 Priority : HIGH
 Message : Block 64351 in datafile 1:
   '/u01/app/oracle/dbs/t_db1.f' is media corrupt
 Message : Object BMRTEST2 owned by SYS might be unavailable

See Also:

Oracle Database PL/SQL Packages and Types Reference for details on the DBMS_HM package.
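If a script captures the text-format report produced by GET_RUN_REPORT, it may need individual fields such as the run ID or each finding's messages. The following Python sketch assumes the "Key : Value" layout, the NAME=value parameter lines, and the wrapped message continuation lines seen in the sample report for HM_RUN_1061; that layout is inferred from the sample rather than from a documented specification, so treat the parser (and its name, `parse_run_report`) as hypothetical. For robust processing, the XML format is likely a better input.

```python
import re
from typing import Any, Dict

# "Key : Value" lines; keys are letters and spaces, values may be empty.
FIELD_RE = re.compile(r"^([A-Za-z][A-Za-z ]*?)\s*:\s*(.*)$")


def parse_run_report(text: str) -> Dict[str, Any]:
    """Split a text Health Monitor report into fields, params, and findings."""
    report: Dict[str, Any] = {"fields": {}, "params": {}, "findings": []}
    target: Dict[str, Any] = report["fields"]  # dict receiving Key : Value pairs
    last_key = None                            # used to join wrapped messages
    for raw in text.splitlines():
        line = raw.strip()
        if not line or set(line) == {"-"}:
            continue                           # skip blank and ruler lines
        if line == "Finding":
            # Each "Finding" line starts a new finding record.
            target = {"Message": []}
            report["findings"].append(target)
            last_key = None
            continue
        match = FIELD_RE.match(line)
        if match:
            key, value = match.group(1), match.group(2).strip()
            if key == "Message":
                target.setdefault("Message", []).append(value)
            else:
                target[key] = value
            last_key = key
        elif "=" in line and not report["findings"]:
            # Input parameter lines appear before any finding.
            name, _, value = line.partition("=")
            report["params"][name.strip()] = value.strip()
        elif last_key == "Message":
            # Continuation of a message that wrapped onto the next line.
            target["Message"][-1] += " " + line
    return report
```

A continuation line that happens to begin with a word followed by a colon would be misread as a new field; that is one reason to prefer the XML report when exact parsing matters.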

Parent topic: Viewing Checker Reports

7.3.2.3.4 Viewing Reports Using the ADRCI Utility

You can create and view Health Monitor checker reports using the ADRCI utility.

To create and view a checker report using ADRCI:

Parent topic: Viewing Checker Reports

7.3.2.4 Health Monitor Views

Instead of requesting a checker report, you can view the results of a specific checker run by directly querying the ADR data from which reports are created.

This data is available through the views V$HM_RUN, V$HM_FINDING, and V$HM_RECOMMENDATION.

The following example queries the V$HM_RUN view to determine a history of checker runs:

SELECT run_id, name, check_name, run_mode, src_incident FROM v$hm_run;

RUN_ID     NAME         CHECK_NAME                         RUN_MODE SRC_INCIDENT
---------- ------------ ---------------------------------- -------- ------------
1          HM_RUN_1     DB Structure Integrity Check       REACTIVE 0
101        HM_RUN_101   Transaction Integrity Check        REACTIVE 6073
121        TXNCHK       Transaction Integrity Check        MANUAL   0
181        HMR_tab$     Dictionary Integrity Check         MANUAL   0
.
.
.
981        Proct_ts$    Dictionary Integrity Check         MANUAL   0
1041       HM_RUN_1041  DB Structure Integrity Check       REACTIVE 0
1061       HM_RUN_1061  Data Block Integrity Check         REACTIVE 7418
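The SRC_INCIDENT column links a run to the incident that triggered it; for example, run 1061 points to incident 7418, which also appears as Source Incident Id in its report. The same filter could be written as a WHERE clause on V$HM_RUN, but if a client has already fetched the rows, a Python sketch such as the following can select them. The `HmRun` tuple and the function name are hypothetical and simply mirror the columns selected above.

```python
from typing import Iterable, List, NamedTuple


class HmRun(NamedTuple):
    """One row of the V$HM_RUN query above, in SELECT-list order."""
    run_id: int
    name: str
    check_name: str
    run_mode: str
    src_incident: int


def incident_triggered_runs(rows: Iterable[HmRun]) -> List[HmRun]:
    """Return reactive runs that reference a source incident (nonzero SRC_INCIDENT)."""
    return [r for r in rows if r.run_mode == "REACTIVE" and r.src_incident != 0]
```

Applied to the sample rows above, this keeps runs 101 and 1061.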

The next example queries the V$HM_FINDING view to obtain finding details for the reactive data block check with RUN_ID 1061:

SELECT type, description FROM v$hm_finding WHERE run_id = 1061;

TYPE          DESCRIPTION
------------- -----------------------------------------
FAILURE       Block 64349 in datafile 1: '/u01/app/orac
              le/dbs/t_db1.f' is media corrupt
FAILURE       Block 64351 in datafile 1: '/u01/app/orac
              le/dbs/t_db1.f' is media corrupt

See Also:

Oracle Database Reference for more information on the V$HM_* views
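The V$HM_FINDING query above hard-codes the run ID. When a client program fetches findings, it can bind the run ID instead. The following Python sketch assumes a PEP 249 (DB-API) cursor that accepts named binds as keyword arguments, such as one from python-oracledb; the function name `findings_for_run` is hypothetical.

```python
from typing import List, Tuple

# Same query as above, with the run ID supplied as a bind variable.
FINDINGS_SQL = "SELECT type, description FROM v$hm_finding WHERE run_id = :run_id"


def findings_for_run(cursor, run_id: int) -> List[Tuple[str, str]]:
    """Return (TYPE, DESCRIPTION) pairs for one checker run."""
    cursor.execute(FINDINGS_SQL, run_id=run_id)
    return [(row[0], row[1]) for row in cursor.fetchall()]
```

Fetching the rows into a program also avoids the column wrapping that SQL*Plus applies to the DESCRIPTION values in the output above.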

Parent topic: Identifying Problems Proactively with Health Monitor

7.3.2.5 Health Check Parameters Reference

Some health checks require parameters. Parameters with a default value of (none) are mandatory.
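The rule that a default of (none) marks a mandatory parameter can be checked before a run is submitted. In the following Python sketch, the parameter specification is supplied by the caller, with `None` standing for (none); the names PARAM_A, PARAM_B, and PARAM_C in the usage note are hypothetical placeholders, and real names and defaults come from the tables in this section or from V$HM_CHECK_PARAM.

```python
from typing import List, Mapping, Optional


def missing_mandatory(spec: Mapping[str, Optional[str]],
                      supplied: Mapping[str, object]) -> List[str]:
    """Return mandatory parameter names that are absent from `supplied`.

    `spec` maps each parameter name to its default value; None stands for
    a default of "(none)", which marks the parameter as mandatory.
    """
    return sorted(
        name for name, default in spec.items()
        if default is None and name not in supplied
    )
```

For example, with the hypothetical spec `{"PARAM_A": None, "PARAM_B": None, "PARAM_C": "10"}` and supplied values `{"PARAM_B": 5}`, the function reports `["PARAM_A"]`; PARAM_C is optional and falls back to its default.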

Table 7-6 Parameters for Data Block Integrity Check

| Parameter Name | Type | Default Value | Description |