1 Introduction to Oracle AI Database In-Memory

Oracle AI Database In-Memory (Database In-Memory) is a suite of features that greatly improves performance for real-time analytics and mixed workloads. The In-Memory Column Store (IM column store) is the key feature of Database In-Memory.

Note:

Database In-Memory features require the Oracle AI Database In-Memory option. For the Database In-Memory Base Level, the IM column store size is limited to 16 GB at the CDB level. See Oracle AI Database Licensing Information User Manual for details on which features are supported for different editions and services.

Challenges for Analytic Applications

Traditionally, obtaining good performance for analytic queries meant satisfying several requirements.

In a typical data warehouse or mixed-use database, requirements include the following:

-

You must understand user access patterns.

-

You must provide good performance, which typically requires creating indexes, and materialized views.

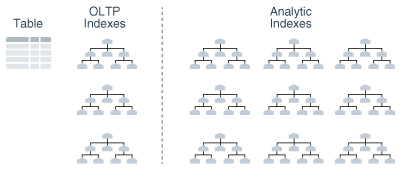

For example, if you create 1 to 3 indexes for a table (1 primary key and 2 foreign key indexes) to provide good performance for an OLTP application, then you may need to create additional indexes to provide good performance for analytic queries.

Meeting the preceding requirements creates manageability and performance problems. Additional access structures cause performance overhead because you must create, manage, and tune them. For example, inserting a single row into a table requires an update to all indexes on this table, which increases response time.

The demand for real-time analytics means that more analytic queries are being executed in a mixed-workload database. The traditional approach is not sustainable.

The Single-Format Approach

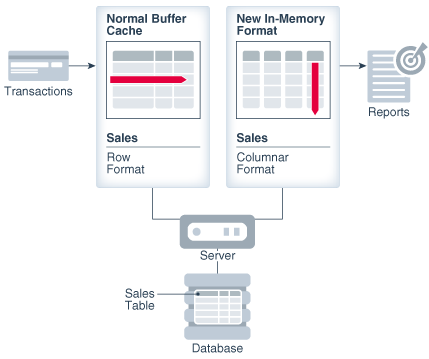

Traditionally, relational databases store data in either row or columnar formats. Memory and disk store data in the same format.

An Oracle database stores rows contiguously in data blocks. For example, in a table with three rows, an Oracle data block stores the first row, and then the second row, and then the third row. Each row contains all column values for the row. Data stored in row format is optimized for transaction processing. For example, updating all columns in a small number of rows may modify only a small number of blocks.

To address the problems relating to analytic queries, some database vendors have introduced a columnar format. A columnar database stores selected columns—not rows—contiguously. For example, in a large sales table, the sales IDs reside in one column, and sales regions reside in a different column.

Analytical workloads access few columns while scanning, but scan the entire data set. For this reason, the columnar format is the most efficient for analytics. Because columns are stored separately, an analytical query can access only required columns, and avoid reading inessential data. For example, a report on sales totals by region can rapidly process many rows while accessing only a few columns.

Database vendors typically force customers to choose between a columnar and row-based format. For example, if the data format is columnar, then the database stores data in columnar format both in memory and on disk. Gaining the advantages of one format means losing the advantages of the alternate format. Applications either achieve rapid analytics or rapid transactions, but not both. The performance problems for mixed-use databases are not solved by storing data in a single format.

The Oracle AI Database In-Memory Solution

The Oracle AI Database In-Memory (Database In-Memory) feature set includes the In-Memory Column Store (IM column store), advanced query optimizations, and availability solutions.

The Database In-Memory optimizations enable analytic queries to run orders of magnitude faster on data warehouses and mixed-use databases.

What Is Database In-Memory?

The Database In-Memory feature set includes the IM column store, advanced query optimizations, and availability solutions.

Database In-Memory features combine to accelerate analytic queries by orders of magnitude without sacrificing OLTP performance or availability.

See Also:

Oracle AI Database Licensing Information User Manual to learn about the Database In-Memory option

IM Column Store

The IM column store maintains copies of tables, partitions, and individual columns in a compressed columnar format that is optimized for rapid scans.

The IM column store stores the data for each table or view by column rather than by row. Each column is divided into separate row subsets. A container called an In-Memory Compression Unit (IMCU) stores all columns for a subset of rows in a table segment.

Video:

Storage in the SGA

The IM column store resides in the In-Memory Area, which is an optional portion of the system global area (SGA). The IM column store does not replace row-based storage or the database buffer cache, but supplements it. The database enables data to be in memory in both a row-based and columnar format, providing the best of both worlds. The IM column store provides an additional transaction-consistent copy of table data that is independent of the disk format.

Note:

Objects populated in the IM column store do not also need to be loaded into the buffer cache.

Population of Objects in the IM Column Store

In-Memory population is the automatic transformation of row-based data on disk into columnar data in the IM column store. When the INMEMORY_AUTOMATIC_LEVEL initialization parameter is set to HIGH, the database automatically decides the optimal segments and columns to populate in the IM column store, evicting infrequently accessed segments. No user decision-making is required.

Alternatively, you can manage the IM column store manually, specifying the INMEMORY clause at the object or column level, and then choosing when to populate objects. You can specify the INMEMORY clause at any of the following levels, listed from lowest level to highest level:

-

Column (nonvirtual or virtual)

-

Table partition (internal or external)

-

Table (internal or external) or materialized view

-

Tablespace

For any object, you can configure all or a subset of its columns for population. Similarly, for a partitioned table or materialized view, you can configure all or a subset of the partitions for population.

See Also:

-

Oracle AI Database SQL Language Reference for more information about the

INMEMORYclause

Advanced Query Optimizations

Database In-Memory includes several performance optimizations for analytic queries.

Optimizations include:

-

An expression is a combination of one or more values, operators, and SQL functions (

DETERMINISTIConly) that resolve to a value. By default, the In-Memory Expression (IM expression) optimization enables theDBMS_INMEMORY_ADMIN.IME_CAPTURE_EXPRESSIONSprocedure to identify and populate “hot” expressions in the IM column store.An IM expression is materialized as a hidden virtual column, but is accessed in the same way as a non-virtual column. IM expressions support the storage of virtual columns with the following data types:

NUMBER,CHAR,VARCHAR2,DATE,TIMESTAMP,TIMESTAMP WITH TIME ZONE,BINARY_FLOAT,BINARY_DOUBLE, andRAW. They do not store virtual columns that have character-set dependent data types, such asNCHARandNVARCHAR2. The also do not support NLS-dependent data types. -

A join group is a user-defined object that specifies a set of columns frequently used to join a set of tables. In certain queries, join groups enable the database to eliminate the performance overhead of decompressing and hashing column values.

-

For aggregation queries that join small dimension tables to a large fact table, In-Memory Aggregation (IM aggregation) uses the

VECTOR GROUP BYoperation to enhance performance. This optimization aggregates data during the scan of the fact table rather than afterward. -

In the IM column store, repopulation is the automatic update of IMCUs after the data within them has been significantly modified. If an IMCU has stale entries but does not meet the staleness threshold, then background processes may instigate trickle repopulation, which is the gradual repopulation of the IM column store.

Related Topics

High Availability Support

Availability is the degree to which an application, service, or function is accessible on demand.

Database In-Memory supports the following availability features:

-

In-Memory FastStart (IM FastStart) reduces the time to populate data into the IM column store when a database instance restarts. IM FastStart achieves this by periodically saving a copy of the data currently populated in the IM column store on the disk in its compressed columnar format.

-

Each node in an Oracle Real Application Clusters (Oracle RAC) environment has its own IM column store. It is possible to have completely different objects populated on every node, or to have larger objects distributed across all IM column stores in the cluster. In Engineered Systems, it is also possible to have the same objects appear in the IM column store on every node.

-

Starting in Oracle Database 12c Release 2 (12.2), an IM column store is supported on a standby database in an Active Data Guard environment.

Related Topics

Improved Performance for Analytic Queries

The compressed columnar format enables faster scans, queries, joins, and aggregates.

Improved Performance for Data Scans

The columnar format provides fast throughput for scanning large amounts of data.

The IM column store enables you to analyze data in real time, enabling you to explore different possibilities and perform iterations. Specifically, the IM column store can drastically improve performance for queries that do the following:

-

Scan many rows and applies filters that use operators such as

<,>,=, andIN -

Select few columns from a table or a materialized view that has many columns, such as a query that accesses 5 out of 100 columns

-

Enable fast In-Memory searching of text, XML, or JSON documents when queries specify the

CONTAINS()orJSON_TEXTCONTAINS()operators

Note:

Pure In-Memory Scans

In a pure In-Memory scan, all data is accessed from the IM column store.

Scans of the IM column store are faster than scans of row-based data for the following reasons:

-

Elimination of buffer cache overhead

The IM column store stores data in a pure, In-Memory columnar format. The data does not persist in the data files or generate redo, so the database avoids the overhead of reading data from disk into the buffer cache.

-

Data pruning

The database scans only the columns necessary for the query rather than entire rows of data. Furthermore, the database uses storage indexes and an internal dictionary to read only the necessary IMCUs for a specific query. For example, if a query requests all sales for a store with a store ID less than 8, then the database can use IMCU pruning to eliminate IMCUs that do not contain this value.

-

Compression

Traditionally, the goal of compression is to save space. In the IM column store, the goal of compression is to accelerate scans. The database automatically compresses columnar data using algorithms that allow

WHEREclause predicates to be applied against the compressed formats. Depending on the type of compression applied, Oracle Database can scan data in its compressed format without decompressing it first. Therefore, the volume of data that the database must scan in the IM column store is less than the corresponding volume in the database buffer cache. -

Vector processing

Each CPU core scans local in-memory columns. To process data as an array, the scans use SIMD (single instructional, multiple data) vector instructions. For example, a query can read a set of values in a single CPU instruction rather than read the values one by one. Vector scans by a CPU core are orders of magnitude faster than row scans.

For example, suppose a user executes the following ad hoc query:

SELECT cust_id, time_id, channel_id

FROM sales

WHERE prod_id BETWEEN 14 and 29

ORDER BY 1, 2, 3;

When using the buffer cache, the database would typically scan an index to find the product IDs, use the rowids to fetch the rows from disk into the buffer cache, and then discard the unwanted column values. Scanning data in row format in the buffer cache requires many CPU instructions, and can result in suboptimal CPU efficiency.

When using the IM column store, the database can scan only the requested sales columns, avoiding disk altogether. Scanning data in columnar format pipelines only necessary columns to the CPU, increasing efficiency. Each CPU core scans local in-memory columns using SIMD vector instructions.

Video:

In-Memory Hybrid Scans

An In-Memory hybrid scan retrieves rows from both the IM column store and row store.

Using the selective columns feature, you can enable a subset of columns in an object for In-Memory access. For example, if the only sales columns specified in application queries are prod_id, cust_id, and amount_sold, then you might decide to save memory by applying the INMEMORY attribute to only these columns. However, a user might issue the following ad hoc query:

SELECT prod_id, time_id FROM sales WHERE cust_id IN (100,200,300);Because time_id is a NO INMEMORY column, the query must retrieve data from the row store, possibly reducing performance. However, the optimizer can consider an In-Memory hybrid scan because the following conditions are met:

-

All columns in the predicate are

INMEMORY. In this example,cust_idis the only predicate column, and it isINMEMORY. -

The

SELECTlist contains an arbitrary mix ofNO INMEMORYandINMEMORYcolumns. In this example,prod_idisINMEMORY, buttime_idisNO INMEMORY.

Within a single table scan of sales, an In-Memory hybrid scan filters data in the IM column store and projects data from the row store. In this way, an In-Memory hybrid scan can increase response time by orders of magnitude.

Note:

Improved Performance for Joins

A Bloom filter is a low-memory data structure that tests membership in a set. The IM column store takes advantage of Bloom filters to improve the performance of joins.

Bloom filters speed up joins by converting predicates on small dimension tables to filters on large fact tables. This optimization is useful when performing a join of multiple dimensions with one large fact table. The dimension keys on fact tables have many repeat values. The scan performance and repeat value optimization speeds up joins by orders of magnitude.

Related Topics

See Also:

Improved Performance for Aggregation

An important aspect of analytics is to determine patterns and trends by aggregating data. Aggregations and complex SQL queries run faster when data is stored in the IM column store.

In Oracle Database, aggregation typically involves a GROUP BY clause. Traditionally, the database used SORT and HASH operators. Starting in Oracle Database 12c Release 1 (12.1), the database offered VECTOR GROUP BY transformations to enable efficient in-memory, array-based aggregation.

During a fact table scan, the database accumulates aggregate values into in-memory arrays, and uses efficient algorithms to perform aggregation. Joins based on the primary key and foreign key relationships are optimized for both star schemas and snowflake schemas.

See Also:

-

Oracle AI Database Data Warehousing Guide to learn more about SQL aggregation

Improved Performance for Mixed Workloads

Although OLTP applications do not benefit from accessing data in the IM column store, the dual-memory format can indirectly improve OLTP performance.

When all data is stored in rows, improving analytic query performance requires creating access structures. The standard approach is to create analytic indexes, materialized views. For example, a table might require 3 indexes to improve the performance of the OLTP application (1 primary key and 2 foreign key indexes) and 10-20 additional indexes to improve the performance of the analytic queries. While this technique can improve analytic query performance, it slows down OLTP performance. Inserting a row into the table requires modifying all indexes on the table. As the number of indexes increases, insertion speed decreases.

When you populate data into the IM column store, you can drop analytic access structures. This technique reduces storage space and processing overhead because fewer indexes, materialized views are required. For example, an insert results in modifying 1-3 indexes instead of 11-23 indexes.

While the IM column store can drastically improve performance for analytic queries in business applications, ad hoc analytic queries, and data warehouse workloads, pure OLTP databases that perform short transactions using index lookups benefit less. The IM column store does not improve performance for the following types of queries:

-

A query with complex predicates

-

A query that selects many columns

-

A query that returns many rows

See Also:

Oracle AI Database Data Warehousing Guide to learn more about physical data warehouse design

In-Memory Support for Exadata Flash Cache

Not all objects marked INMEMORY may fit in DRAM memory at the same time. If you use Oracle Exadata Storage Server Software, then Exadata Smart Flash Cache can serve as supplemental memory.

When the IM column store is enabled, Exadata Smart Flash Cache reformats data automatically into In-Memory columnar format. In previous Exadata releases, only Hybrid Column Compressed data was eligible for flash storage in IM columnar format. The reformatting occurs for both compressed (including OLTP compression) and uncompressed tables.

Note:

If Database In-Memory Base Level is enabled, then the CELLMEMORY feature is disabled for Oracle Exadata.

With this format, most Database In-Memory performance enhancements are supported in Smart Scan, including joins and aggregation. Also, reformatting uncompressed and OLTP-compressed data blocks into IM columnar format can significantly reduce the amount of flash memory required.

Exadata Smart Flash Cache transforms the data in the following stages:

-

Oracle Exadata caches data from eligible scans in a legacy columnar format so that the data is available immediately. This format is columnar, but it is not the same format used by the IM column store.

-

In the background, Oracle Exadata reformats data into the pure IM column store format at a lower priority. The background writes prevent interference with the main workload.

If the database is not running an OLTP workload, then a data warehousing workload can consume 100% of the flash cache. However, an OLTP workload limits the data warehouse workload to no more than 50% of the flash cache. This optimization ensures that OLTP workload performance is not sacrificed for analytic scans.

By default, Exadata Smart

Flash Cache compresses data using the level MEMCOMPRESS FOR CAPACITY LOW.

To change the compression level or disable the columnar format altogether, use the

ALTER TABLE ... NO CELLMEMORY statement.

See Also:

-

Oracle Exadata Database Machine System Overview to learn more about the

CELLMEMORYattribute -

Oracle AI Database Licensing Information User Manual for details on which features are supported for different editions and services

High Availability Support

The IM column store is fully integrated into Oracle Database. All High Availability features are supported.

The columnar format does not change the Oracle database on-disk storage format. Thus, buffer cache modifications and redo logging function in the same way. Features such as RMAN, Oracle Data Guard, and Oracle ASM are fully supported.

In an Oracle Real Application Clusters (Oracle RAC) environment, each node has its own IM column store by default. Depending on your requirements, you can populate objects in different ways:

-

Different tables are populated on every node. For example, the

salesfact table is on one node, whereas theproductsdimension table is on a different node. -

A single table is distributed among different nodes. For example, different partitions of the same hash-partitioned table are on different nodes, or different rowid ranges of a single nonpartitioned table are on different nodes.

-

Some objects appear in the IM column store on every node. For example, you might populate the

productsdimension table in every node, but distribute partitions of thesalesfact table across different nodes.

See Also:

Ease of Adoption

Database In-Memory is simple to implement, and requires no application changes.

Key aspects of Database In-Memory adoption include:

-

Ease of deployment

No user-managed data migration is required. The database stores data in row format on disk and automatically converts row data into columnar format when populating the IM column store.

-

Compatibility with existing applications

No application changes are required. The optimizer automatically takes advantage of the columnar format. If your application connects to the database and issues SQL, then it can benefit from Database In-Memory features.

-

Full SQL compatibility

Database In-Memory places no restrictions on SQL. Analytic queries can benefit whether they use Oracle analytic functions or customized PL/SQL code.

-

Ease of setup

No complex setup is required. The

INMEMORY_SIZEinitialization parameter specifies the amount of memory reserved for use by the IM column store. By configuring the IM column store, you can immediately improve the performance of existing analytic workloads and ad hoc queries. -

Ease of object management

Automatic In-Memory uses access tracking and column statistics to manage objects in the IM column store. When the

INMEMORY_AUTOMATIC_LEVELinitialization parameter is set toHIGH, the database automatically decides the optimal segments and columns to retain in the IM column store, evicting "cold" (infrequently accessed) segments. No user decision-making is required.Note:

If the

INMEMORY_FORCEinitialization parameter is set toBASE_LEVEL, then Automatic In-Memory is disabled even ifINMEMORY_AUTOMATIC_LEVELis set. Even if tables have a compression level ofAUTO, Automatic In-Memory background operations do not run. -

Optional fine-grained control of In-Memory objects and columns

When

INMEMORY_AUTOMATIC_LEVELis not set toHIGH, theINMEMORYclause in DDL statements specifies the objects or columns to be populated into the IM column store. You can specify that only certain objects or certain columns are eligible for In-Memory population.

See Also:

-

"Enabling and Sizing the IM Column Store" to learn how to enable the IM column store

-

Oracle AI Database Reference to learn about the

INMEMORY_SIZE,INMEMORY_FORCE, andINMEMORY_AUTOMATIC_LEVELinitialization parameters

Requirements for Database In-Memory

The Oracle Database In-Memory option is required for all Database In-Memory features. The Database In-Memory Base Level is available for an IM column store that is 16 GB or less.

Requirements include:

-

To use the Database In-Memory Base Level, the

INMEMORY_FORCEinitialization parameter must be set toBASE_LEVELin the initialization parameter file at the CDB level. You cannot set this parameter dynamically, or set it at the PDB level. TheBASE_LEVELsetting has the following consequences:-

All

INMEMORYobjects and columns automatically and transparently use the compression level ofQUERY LOW. -

Automatic In-Memory is disabled.

-

-

To use the CellMemory feature without incurring the overhead of creating an IM column store, set this parameter to

CELLMEMORY_LEVEL.Note that if the value of

INMEMORY_SIZEis greater than0, then settingINMEMORY_FORCE=CELLMEMORY_LEVELis equivalent to settingINMEMORY_FORCE=DEFAULT. In this case, the Database In-Memory option is enabled, even if you use CellMemory only. -

For the Base Level, the IM column store size must not exceed 16 GB.

-

The IM column store requires a minimum of 100 MB of memory. The store size is included in

MEMORY_TARGET. -

For Oracle RAC databases, if the

INMEMORY_FORCEinitialization parameter is set toBASE_LEVEL, then the column store size of each database is limited to 16 GB.

No special hardware is required for an IM column store.

See Also:

-

Oracle AI Database Licensing Information User Manual for all licensing-related information for Database In-Memory

Principal Tasks for Database In-Memory

For queries to benefit from the IM column store, the only required task is sizing the IM column store. Query optimizationf and availability features require additional configuration.

Principal Tasks for Configuring the IM Column Store

The following table lists the principal configuration tasks.

Table 1-1 Configuration Tasks

| Task | Notes | When Required | To Learn More |

|---|---|---|---|

|

Enable the IM column store by specifying its size. |

Set For the Database In-Memory Base Level only, you can allocate up to 16 GB on any CDB or any instance of an Oracle RAC database. The |

Required for all Database In-Memory features |

|

|

For the Database In-Memory Base Level, perform additional configuration. |

For the Database In-Memory Base Level only, the |

Required only for the Database In-Memory Base Level |

|

|

Configure Automatic In-Memory to enable, populate, and evict cold segments to ensure that the working data set is always populated |

When the Note: If the |

Required for fully automated management of Database In-Memory objects |

|

|

Enable columns, partitions, tables or materialized views, or tablespaces for population into the IM column store. |

Unless Note: If the |

Required when |

|

|

Populate objects into to the IM column store manually |

Enabling an object for In-Memory access is a separate step from populating it. Unless When |

Required when the |

|

|

Create Automatic Data Optimization (ADO) policies to set |

For example, a policy can evict the |

Optional |

Principal Tasks for Optimizing In-Memory Queries

In-Memory query optimizations are not required for the IM column store to function. The following optimization tasks are optional.

Table 1-2 Query Optimization Tasks

| Task | Notes | To Learn More |

|---|---|---|

|

Manage automatic detection of IM expressions in the IM column store by using the |

For example, invoke the |

|

|

Define join groups using the |

Candidates are columns that are frequently paired in a join predicate, for example, a column joining a fact and dimension table. |

|

|

If necessary for a query block, specify the |

In-memory aggregation is an automatically enabled feature that cannot be controlled with initialization parameters or DDL. |

|

|

Limit the number of IMCUs updated through trickle repopulation within a two minute interval by setting the initialization parameter |

You can disable trickle repopulation by setting this initialization parameter to |

Principal Tasks for Managing Availability

The principal tasks are shown in the following table.

Table 1-3 Availability Tasks

| Task | Notes | To Learn More |

|---|---|---|

|

Specify an In-Memory FastStart (IM FastStart) tablespace using the |

IM FastStart optimizes the population of database objects in the IM column store when the database is restarted. IM FastStart stores information on disk for faster population of the IM column store. |

|

|

For an object or tablespace, specify |

By default, each In-Memory object is distributed among the Oracle RAC instances, effectively employing a share-nothing architecture for the IM column store. |

|

|

In an Oracle Data Guard environment, you can use the same Database In-Memory initialization parameters and statements on a primary or standby database. |

For example, you can enable the IM column store on both a primary and standby database by setting |

Tools for the IM Column Store

No special tools or utilities are required to manage the IM column store or other Database In-Memory features. Administrative tools such as SQL*Plus, SQL Developer, and Oracle Enterprise Manager (Enterprise Manager) are fully supported.

This section describes tools that have specific Database In-Memory feature support.

In-Memory Eligibility Test

The In-Memory Eligibility Test helps you to determine whether or not a database workload will benefit from use of the Database In-Memory feature.

Many workloads benefit from Database In-Memory, however some may not. The In-Memory Eligibility Test determines if a given workload would benefit or not benefit from Database In-Memory and assesses its eligibility for use of this feature. Eligibility is gauged by the percentage of analytical activity in the workload. If you are planning to implement Database In-Memory, you can use this tool to quickly identify and filter out databases that are ineligible -- those where analytic activity is low and where you would see no substantive gain from the use of Database In-Memory. You can then focus your Database In-Memory deployment on databases whose workload includes more intense analytic activity and could therefore benefit substantially. The higher the percentage of analytical activity in the workload, the more benefit you gain from Database In-Memory.

The In-Memory Eligibility Test is the

IS_INMEMORY_ELIGIBLE procedure within the PL/SQL package

DBMS_INMEMORY_ADVISE. This package is built into Oracle

Database. You do not need to download and install it.

Running the In-Memory Eligibility Test is a preliminary step you should perform before you run In-Memory Advisor on any database where you are considering enabling Database In-Memory. This can save time, because the In-Memory Eligibility Test does a quick analysis that tells you up front, before you run In-Memory Advisor (which takes longer), whether or not Database In-Memory is appropriate for the given workload. You should skip running In-Memory Advisor on workloads where the In-Memory Eligibility Test tells you that the level of analytic activity is insufficient to warrant the use of Database In-Memory.

- Run the In-Memory Eligibility Test on candidate database workloads to find out which workloads can or cannot effectively use Database In-Memory.

- Run the In-Memory Advisor on any workload, except those that the In-Memory Eligibility Test has determined are ineligible.

Below is an example of how to use

DBMS_INMEMORY_ADVISE.IS_INMEMORY_ELIGIBLE. There are three

options for selecting the AWR snapshots to be examined. This one uses snapshot IDs

to specify a range of snapshots. @im sets the context to the

Database In-Memory IM column store. See the Database PL/SQL Packages and Types

Reference linked in below for the syntax details of this procedure.

Example 1-1 Call to DBMS_INMEMORY_ADVISE.IS_INMEMORY_ELIGIBLE

SQL> variable inmem_eligible BOOLEAN

SQL> variable analysis_summary VARCHAR2(4000)

SQL>

SQL> pause

SQL>

SQL> exec dbms_inmemory_advise.is_inmemory_eligible(26, 30, :inmem_eligible, :analysis_summary);

PL/SQL procedure successfully completed.

SQL>

SQL> pause

SQL>

SQL> print inmem_eligible

INMEM_ELIGIBLE

-----------

TRUE

SQL> print analysis_summary

ANALYSIS_SUMMARY

-----------------------------------------------------------------------------------------------------

Observed Analytic Workload Percentage is 40% is greater than target Analytic Workload Percentage 20%

See Also:

- The Database PL/SQL Packages and Types Reference

documents the DBMS_INMEMORY_ADVISE

package, including the

IS_INMEMORY_ELIGIBLEprocedure for testing eligibility for Database In-Memory. - The Exporting AWR Data in the Database Performance Tuning Guide explains how to export AWR snapshot data.

- In-Memory Advisor describes how to use this tool.

In-Memory Advisor

The In-Memory Advisor provides a quantatative analysis of how a database would benefit from the Database In-Memory

The advise it generates gives you recommendations for various column store sizes and lists the recommended set of objects for each size. Run this advisor if you are considering deploying Database In-Memory on your databases.

How the In-Memory Advisor Works

The In-Memory Advisor differentiates analytics processing from other database activity based on SQL plan cardinality, Active Session History (ASH), parallel query usage, and other statistics. The In-Memory Advisor estimates the size of objects in the IM column store based on statistics and heuristic compression factors.

The advisor estimates analytic processing performance improvement factors based on data from AWR, ASH, and heat map statistics.

Using the In-Memory Advisor

The PL/SQL package DBMS_INMEMORY_ADVISE contains the APIs for the

steps to follow.

- Enable Heat Map. This is required in order to use

DBMS_INMEMORY_ADVISE.ALTER SYSTEM SET HEAT_MAP = ON - Use

DBMS_INMEMORY_ADVISE.START_TRACKINGto create an In-Memory Advisor tracking task. - Stop tracking workload statistics for the last created In-Memory

Advisor tracking task.

DBMS_INMEMORY_ADVISE.STOP_TRACKING;You must stop tracking before you can generate the advise.

- Run

DBMS_INMEMORY_ADVISE.GENERATE_ADVISE.The advisor examines the workload statistics, performs its analysis, and then generates the advise.

- List the advise.

DBMS_INMEMORY_ADVISE.LIST_ADVISE(TASK_ID,IN_MEMORY_SIZE);This function returns the advise as the recordINMEMORY_ADVISOR_RECOMMENDATION. Multiple records can be included. You can print the output of the record as shown here.Ownerhere refers to the schema name of the table/partition. Theobject_namerefers to thetable_nameand if this is a partitioned table,subobject_namerefers to the partition name,begin v_im_adv_res := dbms_inmemory_advise.list_advise(v_task_id, 10000); v_tab_im_adv_res := v_im_adv_res.recommended_obj_list; dbms_output.put_line('List of recommended objects '); dbms_output.put_line('v_tab_im_adv_res.count : ' || v_tab_im_adv_res.count); for i in 1..v_tab_im_adv_res.count loop dbms_output.put_line('Owner : ' || v_tab_im_adv_res(i).owner); dbms_output.put_line('Table : ' || v_tab_im_adv_res(i).object_name); dbms_output.put_line('Partition : ' || v_tab_im_adv_res(i).subobject_name); end loop; end; / List of recommended objects v_tab_im_adv_res.count : 2 Owner : tpch Table. : Lineorder Partition : SYS_P258 Owner : tpch Table : Lineorder Partition : SYS_P234The formal definition ofINMEMORY_ADVISOR_RECOMMENDATIONis as follows.type Inmemory_Advisor_Recommendation is Record ( inmemory_size number, db_time_baseline number, /* DB time for IM size = 0 */ db_time_baseline_analytics number, /* Estimated analytics time for IM size = 0 */ db_time_high number, /* High end of estimated DB time */ db_time_low number, /* Low end of estimated DB time */ db_time_analytics_high number, /* High end of estimated analytics db time */ db_time_analytics_low number, /* Low end of estimated analytics db time */ recommended_obj_list Inmemory_Adv_Obj_Tab /* List of objects recommended * for the simulated IM size */ );The list of objects recommended is the data structure

INMEMORY_ADV_OBJ_TAB, which is defined through the following. The typeINMEMORY_ADV_OBJ_TABis table ofINMEMORY_ADV_OBJECT.type Inmemory_Adv_Object is record ( Owner Varchar2(Ora_Max_Name_Len), Object_Name Varchar2(Ora_Max_Name_Len), Subobject_Name Varchar2(Ora_Max_Name_Len) );Note:

You can also review the last advise with the viewDBA_INMEMORY_ADVISOR_RECOMMENDATION.

This procedure returns an optimal list of recommended objects for a

given INMEMORY_SIZE constraint. You can implement the configuration

recommended in the advise in whole or in part, or you can disregard it. It is best

to follow the recommendations in full if you intend to deploy In-Memory on the

database.

Below are the steps for generating an advise with In-Memory Advisor. Note that you should run the In-Memory Eligibility Test first. That test is not included in this example. You can learn about it in the link provided at the end of this topic.

Example 1-2 Steps for Running In-Memory Advisor

1. Turn on HEAT_MAP and start tracking. This starts the

In-Memory Advisor analytic work.

SQL> alter system set heat_map=on;

System altered.

SQL> @start_track

SQL>

SQL> variable taskid NUMBER;

SQL>

SQL> exec dbms_inmemory_advise.start_tracking(:taskid);

PL/SQL procedure successfully completed.

SQL>

SQL> print taskid

TASKID

----------

1

2. Stop tracking. Do this before generating the advise.

SQL> exec dbms_inmemory_advise.stop_tracking;

PL/SQL procedure successfully completed.

3. You can now generate the advise.

SQL> exec dbms_inmemory_advise.generate_advise;

PL/SQL procedure successfully completed.

Results returned in this case are as follows. In-Memory Advisor simulates various Oracle Database In-Memory sizes and estimates the database time for a reference workload for each size.

INMEMORY_SIZE_MB ESTIMATED_DB_TIME_MINUTES RECOMMENDED_OBJECTS

---------------- ------------------------- --------------------------------------------------------------------------------

0 8.6197877

74.1 8.27409783 Owner: SCOTT Table: ANA_5 ;Owner: SCOTT Table: ANA_4 ;

296.4 5.57368015 Owner: SCOTT Table: ANA_5 ;Owner: SCOTT Table: ANA_4 ;Owner: SCOTT Table: ANA_1

518.7 4.22347132 Owner: SCOTT Table: ANA_5 ;Owner: SCOTT Table: ANA_4 ;Owner: SCOTT Table: ANA_1

741 2.87326248 Owner: SCOTT Table: ANA_5 ;Owner: SCOTT Table: ANA_4 ;Owner: SCOTT Table: ANA_1

You can also use the view DBA_INMEMORY_ADVISOR_RECOMMENDATION to

see the results in a formatted layout.

In-Memory Advisor and the In-Memory Eligibility Test

The recommended strategy to prepare for deployment of Database In-Memory is to first run the In-Memory Eligibility Test on the database and then run the In-Memory Advisor.

The In-Memory Eligibility Test is a companion to the the In-Memory Advisor. This tool identifies workloads where In-Memory technology would not be useful, so that you can focus your Database In-Memory efforts on workloads that can benefit. Run the In-Memory Advisor only on databases where the In-Memory Eligibility Test has first determined that the workload is a good candidate for Database In-Memory.

The In-Memory Advisor and the In-Memory Eligibility Test are both bundled

into the PL/SQL package DBMS_INMEMORY_ADVISE. This package is

included with Oracle Database. You do not need to download and install it.

See Also:

- In-Memory Eligibility Test. Run this tool to get a preliminary assessment of a workload before analyzing the workload with In-Memory Advisor.

- The Database PL/SQL Packages and Types Reference documents the DBMS_INMEMORY_ADVISE package.

- The Exporting AWR Data in the Database Performance Tuning Guide explains how to export AWR snapshot data.

- The Oracle Database Reference describes the view DBA_INMEMORY_ADVISOR_RECOMMENDATION.

Cloud Control Pages for the IM Column Store

Enterprise Manager Cloud Control (Cloud Control) provides the In-Memory Column Store Central Home page. This page gives a dashboard interface to the IM column store.

Use this page to monitor in-memory support for database objects such as tables, indexes, partitions and tablespaces. You can view In-Memory functionality for objects and monitor their In-Memory usage statistics. Unless otherwise stated, this manual describes the command-line interface to Database In-Memory features.

Related Topics

See Also:

"Using IM Column Store in Cloud Control" explains how to use Cloud Control to manage the IM column store.

Oracle Compression Advisor

Oracle Compression Advisor estimates the compression ratio that you can realize using the MEMCOMPRESS clause. The advisor uses the DBMS_COMPRESSION interface.

See Also:

-

Oracle AI Database PL/SQL Packages and Types Reference to learn more about

DBMS_COMPRESSION

Oracle Data Pump and the IM Column Store

You can import database objects that are enabled for the IM column store using the TRANSFORM=INMEMORY:y option of the impdp command.

With this option, Oracle Data Pump keeps the IM column store clause for all objects that have one. When the TRANSFORM=INMEMORY:n option is specified, Data Pump drops the IM column store clause from all objects that have one.

You can also use the TRANSFORM=INMEMORY_CLAUSE:string option to override the IM column store clause for a database object in the dump file during import. For example, you can use this option to change the IM column store compression for an imported database object.

Video:

See Also:

Oracle AI Database

Utilities for more information about the TRANSFORM impdb parameter