A SQL Performance Troubleshooting

This appendix provides a list of SQL coding patterns that cause potential performance issues in SQL queries.

- Columns Defined as Literal Constants in Sub-Query Joined Later in Main Query

- Redundant Table in LEFT OUTER JOIN

- Too Many Join Conditions Between Factored WITH Sub Queries

- Data Security Predicates Wrapped Inside Redundant Sub-Query

- Aggregate Functions in Correlated Scalar Sub-query

- Use XMLTYPE Data Type Instead of CLOB

- Large Number of UNION (ALL) Query Blocks in a Single SQL

- Function Calls in WHERE Clause Predicates

- Long CASE Expressions in SELECT and WHERE Clauses

- Long in IN-LISTs in SQL Clauses

- Scalar Sub-Queries with DISTINCT Modifier or ROWNUM Keyword

- Custom PL/SQL Calls in SELECT Clause

Columns Defined as Literal Constants in Sub-Query Joined Later in Main Query

In cases where you have literal constants defined as columns in a single sub-query and later used in joins in the main query, consider passing them as direct filters instead. Refer to the following example of such usage:

(SELECT

...

UPPER('HXT') AS DUMMY

...

) V1,

(SELECT

T2.C2 AS C2

FROM T2

) V2

WHERE

V1.DUMMY = V2.C2

...

The more efficient SQL design is to pass it directly to the main query:

(SELECT

...

) V1,

(SELECT

T2.C2 AS C2

FROM T2

) V2

WHERE

V2.C2 = UPPER('HXT')

...

Redundant Table in LEFT OUTER JOIN

Some of Left Outer Joins (LOJ) may be redundant if you join two tables through LOJ but do not select any attributes from the table on the ‘optional’ side of the LOJ. Review the following example:

SELECT

b.book_title,

b.author,

b.language

FROM

books b,

book_languages l

WHERE

b.language = l.language (+);

The table BOOK_LANGUAGES is on the optional

side of the OUTER join and no columns from it are in the SELECT

clause. The query above is functionally equivalent (that is, it

produces the same exact result set) with both the table and LOJ

removed:

SELECT

b.book_title,

b.author,

b.language

FROM

books b;

Complex SQL queries with redundant OUTER joined tables may result in additional performance overhead during parse time as well as at run time. The optimizer may be unable to eliminate unused outer joined table as outer joins are usually harder to process than the ordinary inner joins. Make sure you carefully validate your functionality to evaluate the impact from removing such joins and tables in your reports.

Too Many Join Conditions Between Factored WITH Sub Queries

When you join two factored WITH sub-queries using too many join conditions, the optimizer may estimate low join cardinality for the respective tables and not choose the best execution plan. Refer to the following example, showing the sub-optimal generated SQL pattern as the result of having too many joins between two factored WITH sub-queries:

FROM SAWITH1 D1

FULL OUTER JOIN SAWITH2 D2

ON SYS_OP_MAP_NONNULL(D1.c3) = SYS_OP_MAP_NONNULL(D2.c3)

AND SYS_OP_MAP_NONNULL(D1.c5) = SYS_OP_MAP_NONNULL(D2.c5)

AND SYS_OP_MAP_NONNULL(D1.c12) = SYS_OP_MAP_NONNULL(D2.c12)

AND SYS_OP_MAP_NONNULL(D1.c14) = SYS_OP_MAP_NONNULL(D2.c14)

AND SYS_OP_MAP_NONNULL(D1.c11) = SYS_OP_MAP_NONNULL(D2.c11)

AND SYS_OP_MAP_NONNULL(D1.c2) = SYS_OP_MAP_NONNULL(D2.c2)

AND SYS_OP_MAP_NONNULL(D1.c13) = SYS_OP_MAP_NONNULL(D2.c13)

AND SYS_OP_MAP_NONNULL(D1.c15) = SYS_OP_MAP_NONNULL(D2.c15)

AND SYS_OP_MAP_NONNULL(D1.c9) = SYS_OP_MAP_NONNULL(D2.c9)

AND SYS_OP_MAP_NONNULL(D1.c10) = SYS_OP_MAP_NONNULL(D2.c10)

AND SYS_OP_MAP_NONNULL(D1.c8) = SYS_OP_MAP_NONNULL(D2.c8)

AND SYS_OP_MAP_NONNULL(D1.c7) = SYS_OP_MAP_NONNULL(D2.c7)

AND SYS_OP_MAP_NONNULL(D1.c18) = SYS_OP_MAP_NONNULL(D2.c18)

AND SYS_OP_MAP_NONNULL(D1.c21) = SYS_OP_MAP_NONNULL(D2.c21)

AND SYS_OP_MAP_NONNULL(D1.c4) = SYS_OP_MAP_NONNULL(D2.c4)

AND SYS_OP_MAP_NONNULL(D1.c6) = SYS_OP_MAP_NONNULL(D2.c6)

AND SYS_OP_MAP_NONNULL(D1.c22) = SYS_OP_MAP_NONNULL(D2.c22)

AND SYS_OP_MAP_NONNULL(D1.c20) = SYS_OP_MAP_NONNULL(D2.c20)

AND SYS_OP_MAP_NONNULL(D1.c16) = SYS_OP_MAP_NONNULL(D2.c16)

AND SYS_OP_MAP_NONNULL(D1.c17) = SYS_OP_MAP_NONNULL(D2.c17)

AND SYS_OP_MAP_NONNULL(D1.c19) = SYS_OP_MAP_NONNULL(D2.c19)

Carefully review all the joins in your SQL logic, and keep them to the bare minimum to address the functional requirements.

Data Security Predicates Wrapped Inside Redundant Sub-Query

Securing data in your reports requires very thorough analysis, and careful coding in your SQL queries. Data Security Predicates (DSP) or security clauses, that you append to ensure secure reporting in your SQLs, could have a performance impact on your SQL execution. For example, if you choose to re-use DSPs from other SQLs that pull in View Object security clauses, you could end up with the pattern described below:

(SELECT ...

FROM T1,

(SELECT ...

FROM T2 ...

WHERE (DSP predicate1) OR (DSP predicate2)

)

WHERE ...

In this example, table T2 got secured

through two DSP predicates in a separate sub-query. As part of the

overall optimization process, Oracle optimizer performs recursive

optimization of each inline view (sub query) before optimizing the

main query. Such a pattern could affect the query parsing time and

possibly produce a less efficient execution plan. Consider using a

more efficient pattern rewrite:

(SELECT ...

FROM T1,

T2, ...

WHERE (DSP predicate1) OR (DSP predicate2))

...

Aggregate Functions in Correlated Scalar Sub-query

Aggregate functions such as MAX/MIN/COUNT in scalar subquery can be safely and efficiently replaced by performing alternative rewrites. Refer to the following example:

--Sub-optimal pattern:

SELECT t1.c1,

(SELECT MAX(t2.c2) as maxc2

FROM t2

WHERE t2.id=t1.id

) as c2

FROM t1;

--Optimal pattern:

SELECT t1.c1,

t2alias.max_c2

FROM t1,

(SELECT MAX(t2.c2) as max_c2

T2.id as id

FROM t2

GROUP BY t2.id

) t2alias

WHERE t2alias.id=t1.id;

A scalar sub-query is executed for every row returned by the enclosing query block's row sources (here t1). It means the aggregated MAX is executed as many times as the number of fetched rows in the main query, resulting in slower performance. Moving the aggregated MAX into the FROM clause of the main query will aggregate the data once and speed up the overall query.

Note:

If the number of rows returned by the enclosing block (here t1) is moderate/low and the scalar sub-query is correlated on a selective indexed column, then the aggregate operation can be kept inside the correlated scalar sub-query.Use XMLTYPE Data Type Instead of CLOB

Avoid using CLOB data type for storing XML data. The conversion of CLOB to XML on the fly using XMLTYPE function during report runtime could be very expensive. Instead, consider using XMLTYPE for storing XML documents in the database.

Large Number of UNION (ALL) Query Blocks in a Single SQL

A single SQL with multiple UNION (or UNION ALL) query blocks should be carefully analyzed to consolidate UNIONs into fewer sub-queries. The excessive use of UNIONs is a strong indicator of logic redundancy and SQL complexity. It results in a much larger SQL statement, which would take much longer to parse and execute in the database, as each UNION query block by default is executed serially, one-by-one. Forcing /*+ PARALLEL */ hint would not help to run multiple UNION branches at the same time. The hint /*+ PQ_CONCURRENT_UNION */ could help with parallel UNION branch executions, but it could result in more database workload, especially if the UNION branches have very expensive tables and no selective filters.

In the most cases, the Union branches may be nearly identical with minor differences such as slightly different filters or different expressions on columns in SELECT lists, and so on. Consider reworking multiple-UNION query into a much simpler shape by factoring out one or two common denominator WITH sub-queries and reusing them as per functional requirements. Such an approach results in much less logical reads, lesser database impact and faster query performance. If the same WITH factored sub-query is called more than once, Oracle would ‘materialize’ its result set in a temporary segment and use it throughout the SQL.

Function Calls in WHERE Clause Predicates

Expressions in WHERE clauses employ filter and join expressions to constrain rows from data sources (tables, views, inline views). When the estimated number of rows after filter/join operation using indexed attributes is low, the optimizer will choose an index to directly access the required data blocks. Applying SQL or PL/SQL functions to columns in filter/join expressions disables index usage and reverts table access to full table scan.

Alternatively, if there is a function-based index (FBI) defined, but filter or join expressions do not use the exact function in the join predicates, the optimizer chooses less optional full table scan as well. For example, the PERSON_NUMBER column in the table PER_ALL_PEOPLE_F is indexed via function-based index (FBI) using UPPER(PERSON_NUMBER). The appropriate use for PERSON_NUMBER in WHERE clause will be UPPER(PERSON_NUMBER). Refer to two separate sections on the recommended use of UPPER and TRUNC in WHERE clauses.

Consider the following example:

SELECT * FROM XLA_AE_HEADERS WHERE AE_HEADER_ID = '1511989';There is a unique index defined on the primary key column AE_HEADER_ID, that makes access to desired data very fast and efficient through the INDEX RANGE SCAN operation:

---------------------------------------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

---------------------------------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 1 | 215 | 4 (0)| 00:00:01 |

| 1 | TABLE ACCESS BY INDEX ROWID BATCHED| xla_ae_headers | 1 | 215 | 4 (0)| 00:00:01 |

|* 2 | INDEX RANGE SCAN | XLA_AE_HEADERS_U1 | 1 | | 3 (0)| 00:00:01 |

---------------------------------------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

2 - access("AE_HEADER_ID"=1511989)

When the function TO_CHAR() is used on AE_HEADER_ID column, the plan changes to:

SELECT * FROM XLA_AE_HEADERS WHERE TO_CHAR(AE_HEADER_ID) = '1511989';

------------------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

------------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 1 | 215 | 5788 (1)| 00:00:01 |

|* 1 | TABLE ACCESS FULL| xla_ae_headers | 1 | 215 | 5788 (1)| 00:00:01 |

------------------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

1 - filter(TO_CHAR("AE_HEADER_ID")='1511989')

There may be valid cases where SQL or PL/SQL functions in WHERE clause are required by functional design. For example, aggregate functions in WHERE clauses (MAX, MIN, COUNT, AVG, ) may be needed for the functional logic. In such cases, ensure you have effective filters on other columns with supporting indexes to ensure optimal performance.

Long CASE Expressions in SELECT and WHERE Clauses

Complex CASE expressions can affect query performance in multiple ways. CASE expressions found in SELECT list are CPU-intensive operations. CASE expressions in WHERE clause (filter and join predicates) may result in incorrect join cardinality estimate and miscalculating the costs. As a result, sub-optimal join method can be selected causing potentially long run time of the query, increasing probability of ORA-01555 (snapshot too old) error and significant TEMP tablespace usage by the SQL. Carefully inspect your SQL for any large CASE clauses and explore the options to simplify the logic. Refer to the following sub-optimal and rewritten CASE structures:

-- Sub-optimal structure:

(CASE WHEN .. THEN

(CASE WHEN .. THEN

(CASE WHEN .. THEN

(...)

END)

END)

ELSE ... END)

-- Rewritten optimal structure:

(CASE WHEN ... THEN

WHEN ... THEN

WHEN ... THEN

ELSE ...

END)

Long in IN-LISTs in SQL Clauses

The option to pass more than one value to SQL through bind parameters makes reports very flexible and versatile. However, if the number of passed values is not limited, it could generate a SQL with very large number of IN-LIST values, possibly hitting the internal Oracle limit of maximum 1,000 IN-LIST values (and get ORA-01795 maximum number of expressions in a list is 1000).

The Oracle optimizer converts IN-list expressions into Boolean OR-expressions. For instance, the following expression: COL_A IN (1, 2, 3) is converted into COL_A = 1 OR COL_A = 2 OR COL_A = 3. Depending on how statistics are collected on a table and its columns, the optimizer may produce incorrect cardinality estimate for that IN-list expression. The more literals are in the IN-LIST, the larger the deviation from the actual cardinality. The incorrect cardinality may cause the optimizer to select less join method. Refer to the following example of long IN-LIST in CASE clause:

CASE WHEN d1.c40 NOT IN (

'CALL',

'CALL_IB',

'DEMAND_GENERATION',

'DEMO',

'DEMO_IN_PERSON',

'DISCOVERY',

'DISCOVERY_PERSON',

'EMAIL',

'EMAIL_ELOQUA_ENGAGE',

'EMAIL_ELOQUA_ENGCAMPAIGN',

'EMAIL_IB',

'EMAIL_OUTLOOK',

'INPERSON_INTRO_MEETING',

'INPERSON_MEETING',

'INTRO_MEETING',

'MANUAL_CALL',

'MEETING',

'RESEARCH',

'SALES_CHAT',

'SOCIAL_MEDIA',

'TODO',

'WORKSHOPS'

) THEN ...

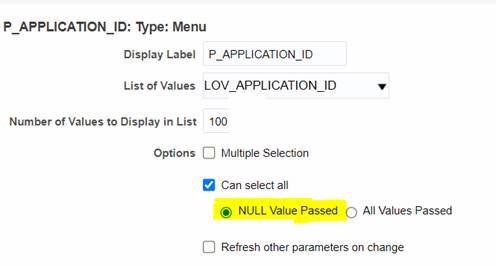

Carefully design the parameters and lists of values that get passed to reports to avoid generating long IN-LISTs in the SQL queries. Do not create long checklists and an option to check all, as that would lead to generate such IN-clauses and affect performance. To avoid passing ‘all’ and causing the performance with long IN-LISTs, select ‘NULL Value Passed’ when you define the parameters for your data model. Then NULL is passed to the bind variable instead of the long IN-LIST:

Scalar Sub-Queries with DISTINCT Modifier or ROWNUM Keyword

Scalar sub-query is a SQL query block that is used as a column in a SELECT clause. It is called scalar because it must produce exactly one value (a scalar) to use in the main SELECT. If a sub-query is written in such a way that it returns more than one row, ORA-01427 exception is raised.

A typical usage pattern of scalar sub-queries is to deliver a single column value by joining a table inside the sub-query to an external table using a unique (primary key) column. That guarantees a single scalar value to be returned by such a sub-query.

If a scalar sub-query produces more than one row, either DISTINCT or ROWNUM may get (wrongly) used as workarounds to enforce a single scalar value. Both workarounds could result in incorrect functionality as well as cause performance overhead, as they get applied as the last step to potentially a very large result set produced by the sub-query.

SELECT

mpm.level_member_id,

archive.archive_id,

mat.category_name,

(

SELECT DISTINCT category_name

FROM msc_catalog_categories mcc1

WHERE mcc1.category_id = mat.parent_category_id

AND mcc1.parent_category_id IS NOT NULL

) cgt_category_name,

...

Note:

When you design your SQLs with correlated sub-queries in SELECT, ensure that they use index access path, as they get executed once for every single row. If a sub-query employs a full table scan due to the lack of indexes in join predicate, you end up with as many full table scans as the number of fetched rows in the main sub-queries. In that case, you should rewrite it to use the join logic in WHERE clause instead of SELECT.Custom PL/SQL Calls in SELECT Clause

Use of PL/SQL functions in SELECT clauses could lead to slower SQL performance. The optimizer has no methods to merge the code from PL/SQL functions into the main SQL query, so the functions are called as many times as the number of rows fetched by the main query. The PL/SQL complexity multiplied by a number of its executions could become a major performance factor, often overlooked in SQL optimization.

Refer to the following example, showing the use of such function call, with another SELECT wrapped inside, with both the function and the SELECT invoked once for every fetched row from the main SELECT:

SELECT ...

ff_user_tables_pkg.get_table_value(

(SELECT l_data_grp.legislative_data_group_id

FROM per_legislative_data_groups_vl l_data_grp

WHERE l_data_grp.name = 'US'),

'RETIREMENT_ELIGIBILITY',

'AGE',

to_char((months_between(sysdate, pp.date_of_birth) / 12)),

trunc(sysdate)

) l_data_grp_pkg_call,

....

Ensure you review the use of such PL/SQL functions, and if their use is required by the functional logic, apply effective filters to reduce the number of fetched rows and the result number of PL/SQL executions for the SQL.