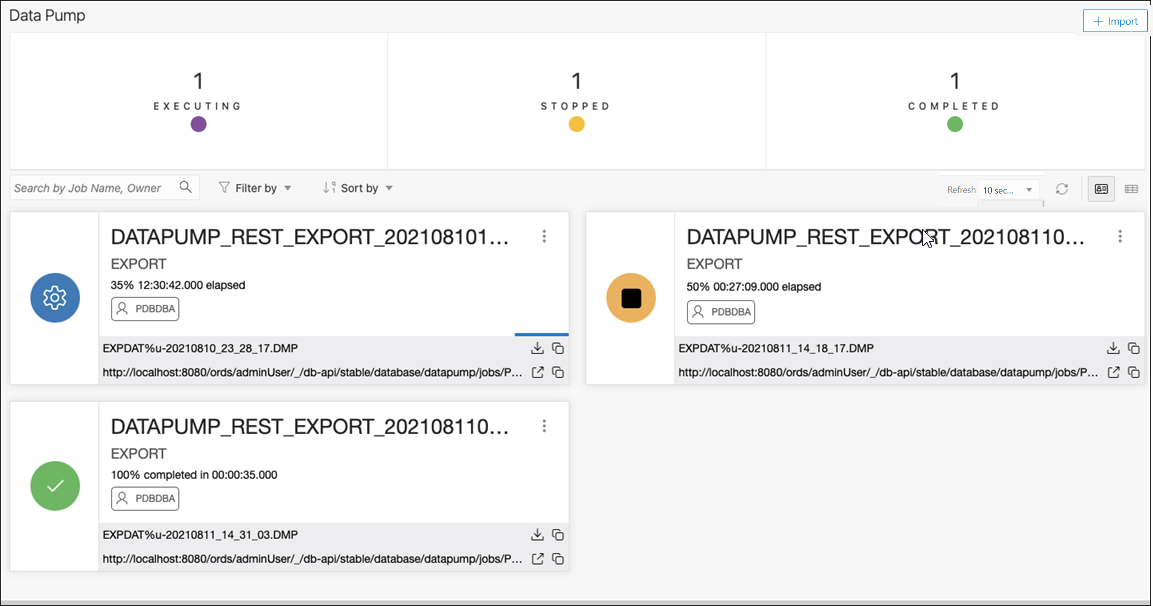

The Data Pump Page

Note:

This feature is only available for Oracle Database 12.2 and later releases.

…DBMS_DATAPUMP package, or the SQL Developer Data Pump Export and Import wizards.

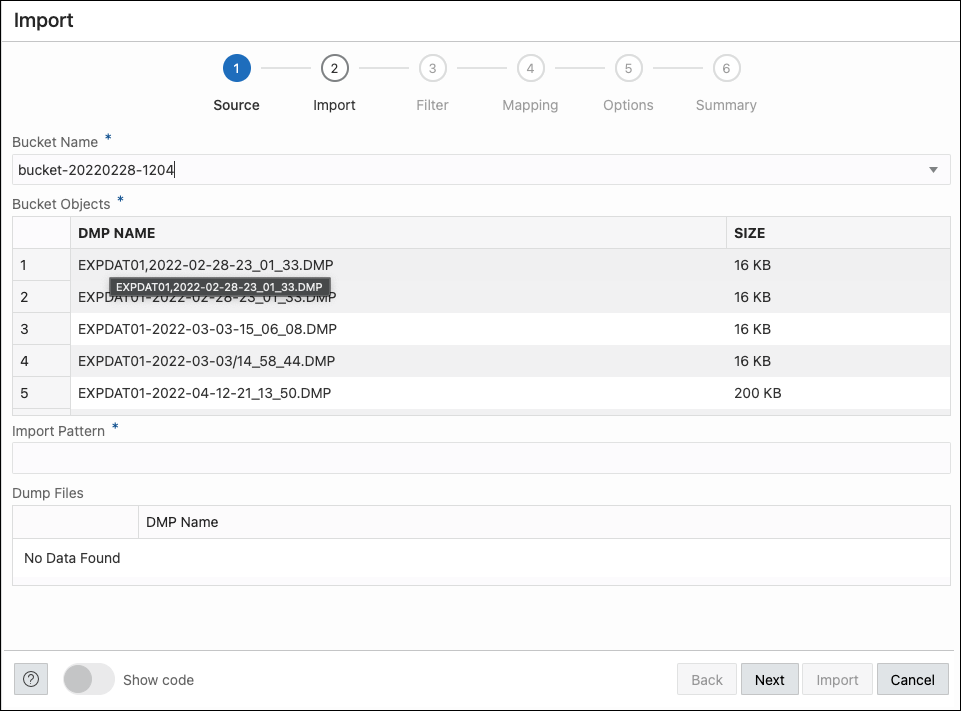

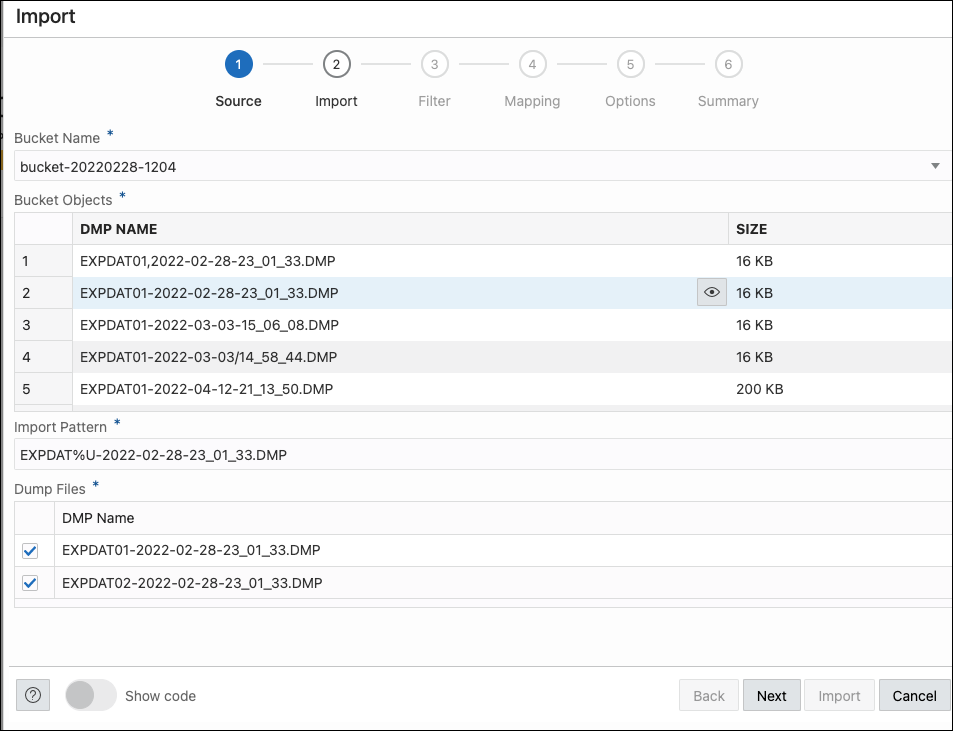

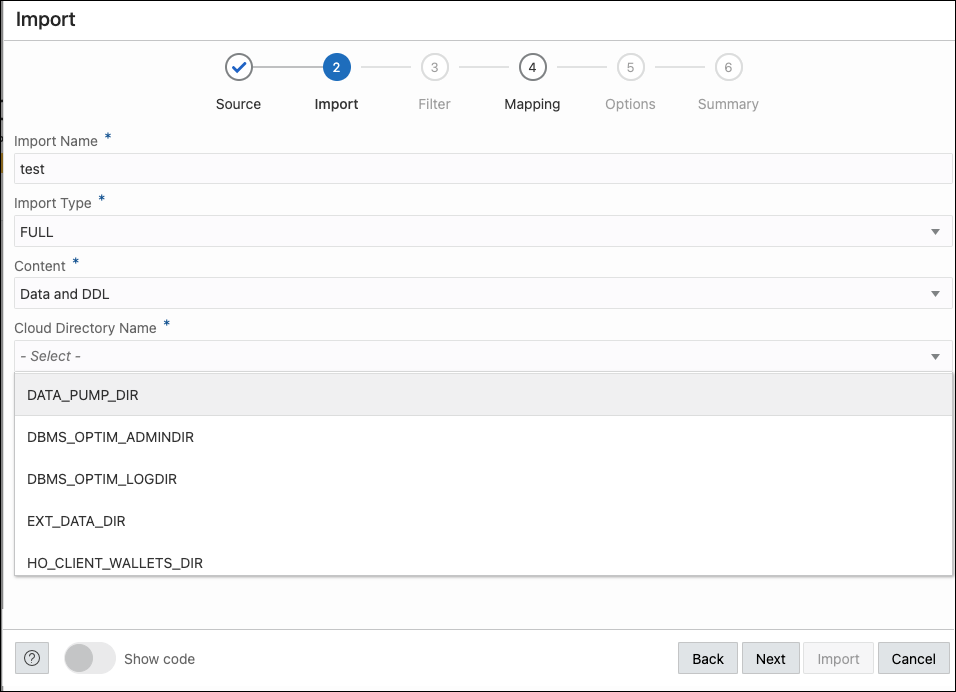

To import data using Data Pump, click Import Data. For more information, see Import Data Using Oracle Data Pump.

The section at the top displays the total numbers of executing, stopped, and completed jobs. Click a tile (for example, STOPPED) to filter the list so that it shows only the corresponding jobs, in the default card format.

You can filter or sort the jobs, and set the interval at which the data is refreshed.

A job card displays the following details: the job name, the operation (import or export), the percentage completed and the time elapsed, and links to the dump files and logs. The colour of the icon on the left side of the card indicates the job status: green indicates a successful job, yellow indicates a job that needs to be reviewed, and blue indicates a job in progress.
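The summary tiles and tile filtering described above can be modeled with a small Python sketch. This is not how Database Actions implements the page: the `Job` class, its fields, and the state strings `EXECUTING`, `STOPPED`, and `COMPLETED` are hypothetical, chosen only to mirror the tiles and card details named in this section.

```python
from collections import Counter
from dataclasses import dataclass, field

# Tile categories named on the Data Pump page (hypothetical string values).
STATES = ("EXECUTING", "STOPPED", "COMPLETED")


@dataclass
class Job:
    """Hypothetical stand-in for one job card; not an Oracle data structure."""
    name: str
    operation: str            # "IMPORT" or "EXPORT"
    state: str                # one of STATES
    percent_done: int         # percentage of completion shown on the card
    elapsed_seconds: int      # time elapsed shown on the card
    dump_files: list = field(default_factory=list)  # names behind "Download"


def tile_counts(jobs):
    """Count jobs per tile, reporting zero for tiles that have no jobs."""
    counts = Counter(job.state for job in jobs)
    return {state: counts.get(state, 0) for state in STATES}


def filter_by_tile(jobs, state):
    """Return only the jobs behind a clicked tile, longest-running first."""
    selected = [job for job in jobs if job.state == state]
    return sorted(selected, key=lambda job: job.elapsed_seconds, reverse=True)


if __name__ == "__main__":
    jobs = [
        Job("EXP_HR", "EXPORT", "COMPLETED", 100, 340, ["exp_hr.dmp"]),
        Job("IMP_SALES", "IMPORT", "EXECUTING", 45, 120),
        Job("EXP_FULL", "EXPORT", "STOPPED", 60, 900),
    ]
    print(tile_counts(jobs))
    print([job.name for job in filter_by_tile(jobs, "STOPPED")])
```

Clicking a tile corresponds to `filter_by_tile`; sorting by elapsed time stands in for the page's sort option, and the sort key is an arbitrary choice for illustration.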

In a job card, you can:

- Use Download to access dump files for completed jobs.

- Use Log to access the log files.