Architecture

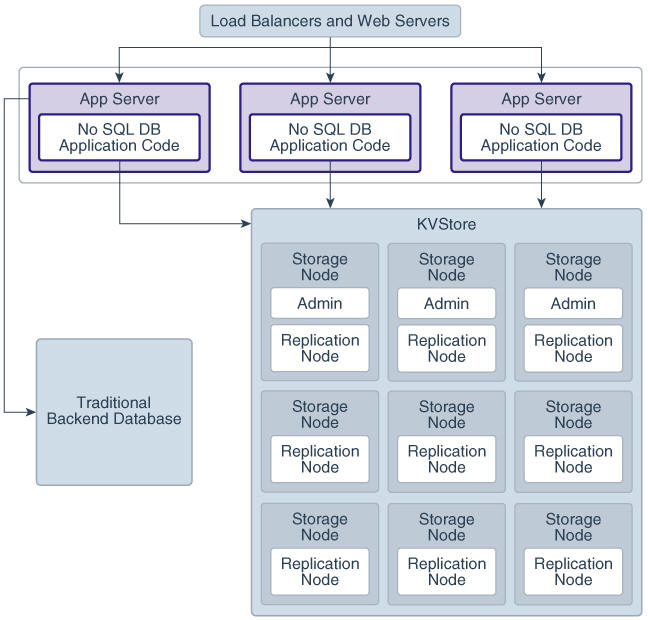

Oracle NoSQL Database applications read and write data by performing network requests against an Oracle NoSQL Database data store, referred to as the KVStore. The KVStore is a collection of Storage Nodes, each of which hosts one or more Replication Nodes. Data is automatically spread across these Replication Nodes by internal KVStore mechanisms. Given a traditional three-tier web architecture, the KVStore either takes the place of your back-end database, or runs alongside it.

Optionally, a KVStore installation can be spread across multiple physical locations, each of which is called a zone. Zones are described in Zones.

Note:

Replication Nodes are implemented using Berkeley DB, Java Edition (JE). JE is an enterprise-class, transaction-protected database, which is fully described in the Oracle Berkeley DB Java Edition documentation.

The store contains multiple Storage Nodes. A Storage Node is a physical (or virtual) machine with its own local storage. The machine is intended to be commodity hardware. While not a requirement, each Storage Node is typically identical to all other Storage Nodes within the store.

Figure 1-1 Typical Architecture for Oracle NoSQL Database Store

Every Storage Node hosts one or more Replication Nodes as determined by its capacity. A Storage Node's capacity serves as a rough measure of the hardware resources associated with it. Stores can contain Storage Nodes with different capacities, and Oracle NoSQL Database ensures that each Storage Node is assigned a load proportional to its capacity.
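As a rough illustration of capacity-proportional assignment, the following sketch splits a number of Replication Nodes across Storage Nodes according to their capacities. It is not Oracle NoSQL Database's actual placement algorithm. The function name, the Storage Node names, and the largest-remainder rounding are all assumptions made for the example.

```python
def spread_replication_nodes(capacities, total_rns):
    """Split total_rns across Storage Nodes in proportion to capacity.

    capacities maps a (hypothetical) Storage Node name to its capacity.
    This is an illustrative sketch, not the store's real placement logic.
    """
    total_capacity = sum(capacities.values())
    if total_rns > total_capacity:
        raise ValueError("not enough capacity for the requested Replication Nodes")

    # Whole-number share per Storage Node, plus the remainder of the division.
    shares = {}
    remainders = {}
    for name, capacity in capacities.items():
        shares[name], remainders[name] = divmod(total_rns * capacity, total_capacity)

    # Hand out any leftover Replication Nodes to the largest remainders first.
    leftover = total_rns - sum(shares.values())
    for name in sorted(remainders, key=remainders.get, reverse=True)[:leftover]:
        shares[name] += 1
    return shares


print(spread_replication_nodes({"sn1": 1, "sn2": 2, "sn3": 3}, 6))
# {'sn1': 1, 'sn2': 2, 'sn3': 3}
```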

A Replication Node, in turn, contains a subset of the store's data. The store's data is automatically divided evenly into logical collections called partitions. Every Replication Node contains at least one, and typically many, partitions. Partitions are described in greater detail in Partitions.

Finally, each Storage Node contains monitoring software that checks that the Replication Nodes it hosts are running and healthy.

For more information on how to associate capacity with a Storage Node, and on how best to balance the number of Storage Nodes and Replication Nodes, see Determining Your Store's Configuration in the Administrator's Guide.

Replication Nodes and Shards

At a high level, you can think of a Replication Node as a single database containing tables or key-value pairs. Storage Nodes host one or more Replication Nodes. Because a Replication Node requires substantial resources, a Storage Node generally hosts only a single Replication Node. However, in installations where the hardware has abundant resources (memory, CPUs, and disks), Storage Nodes can, and do, host multiple Replication Nodes.

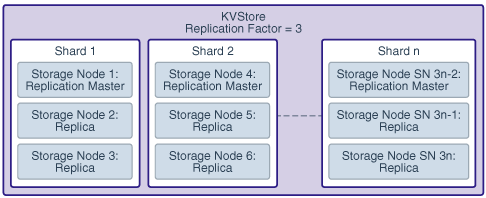

Your store's Replication Nodes are organized into shards. A single shard contains multiple Replication Nodes. Each shard has a master node. The master node performs all database write activities. Each shard also contains one or more read-only replicas. The master node copies all new write activity data to the replicas. The replicas are then used to service read-only operations.

While there can be only one master node per shard at any given time, any of the other shard members can become a master node. The exception is nodes in a secondary zone, which can serve only as replicas, as described in Zones.

The following illustration shows how the KVStore is divided up into shards:

If the machine hosting the master node fails in any way, the master role automatically fails over: one of the replica nodes in the shard is promoted to master.
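The master and replica roles described above can be modeled with a small sketch. It is a deliberately simplified model. The real store chooses a new master through an election among the shard's nodes, whereas this example simply promotes the first eligible node in list order. The class names, node names, and the `primary_zone` flag are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    primary_zone: bool = True  # nodes in secondary zones can only be replicas
    alive: bool = True


@dataclass
class Shard:
    nodes: list
    master: Optional[Node] = None

    def __post_init__(self):
        self.master = self._elect()

    def _elect(self):
        # Simplification: promote the first live node in a primary zone.
        for node in self.nodes:
            if node.alive and node.primary_zone:
                return node
        return None

    def fail(self, name):
        # Mark the named node as failed; replace the master if it was the one lost.
        for node in self.nodes:
            if node.name == name:
                node.alive = False
        if self.master is not None and not self.master.alive:
            self.master = self._elect()

    def replicas(self):
        # Every live node other than the master serves read-only requests.
        return [n for n in self.nodes if n.alive and n is not self.master]


shard = Shard([Node("rn1"), Node("rn2"), Node("rn3", primary_zone=False)])
shard.fail("rn1")
print(shard.master.name)                  # rn2
print([n.name for n in shard.replicas()])  # ['rn3']
```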

Production KVStores should contain multiple shards. At installation time you provide information that allows Oracle NoSQL Database to automatically decide how many shards the store should contain. The more shards that your store contains, the better your write performance is because the store contains more nodes that are responsible for servicing write requests.

Replication Factor

The number of nodes belonging to a shard is called its Replication Factor. The larger a shard's Replication Factor, the higher its read throughput, because there are more machines servicing the read requests. However, a large Replication Factor reduces write performance, because there are more machines to which writes must be copied.

A store can be installed across multiple physical locations called zones. You set a Replication Factor on a per-zone basis. Once you set the Replication Factor for each zone in the store, Oracle NoSQL Database makes sure the appropriate number of Replication Nodes are created for each shard residing in every zone in your store. Here are the terms used to describe these aspects of Oracle NoSQL Database:

- Replication Factor: The number of nodes belonging to a shard.

- Zone Replication Factor: The number of copies, or replicas, maintained in a zone.

- Primary Replication Factor: The total number of replicas in all primary zones.

- Secondary Replication Factor: The total number of replicas in all secondary zones.

- Store Replication Factor: The total number of replicas in all zones across the entire store.

For additional information on how to identify the Primary Replication Factor and the implications of its value, as well as on multiple zones and replication factors, see Replication Factor in the Administrator's Guide.
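The relationship between the terms defined above is simple arithmetic, which the following sketch works through. The zone names and the dictionary layout are hypothetical; only the definitions come from the text.

```python
def replication_factors(zones):
    """Derive the aggregate replication factors from per-zone settings.

    zones maps a (hypothetical) zone name to a (zone_type, zone_rf) pair,
    where zone_type is "primary" or "secondary".
    """
    primary = sum(rf for kind, rf in zones.values() if kind == "primary")
    secondary = sum(rf for kind, rf in zones.values() if kind == "secondary")
    return {
        "primary": primary,            # Primary Replication Factor
        "secondary": secondary,        # Secondary Replication Factor
        "store": primary + secondary,  # Store Replication Factor
    }


zones = {
    "zone-a": ("primary", 2),
    "zone-b": ("primary", 1),
    "zone-c": ("secondary", 2),
}
print(replication_factors(zones))
# {'primary': 3, 'secondary': 2, 'store': 5}
```

In this example each shard would have five nodes in total: three in primary zones, which are eligible to become master, and two in the secondary zone.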

Partitions

All data in the store is accessed by one or more keys. A key might be a column in a table, or it might be the key portion of a key/value pair.

Keys are placed in logical containers called partitions, and each shard contains one or more partitions. Once a key is placed in a partition, it cannot be moved to a different partition. Oracle NoSQL Database distributes records evenly across all available partitions by hashing each record's key.
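A minimal sketch of hash-based placement follows. It assumes an MD5 digest and 1-based partition numbers purely for illustration; the hash function that Oracle NoSQL Database actually uses is not specified here. What the sketch does show is that the same key always maps to the same partition, and that hashing spreads keys across all partitions.

```python
import hashlib


def partition_for_key(key: bytes, num_partitions: int) -> int:
    """Map a key to a partition number in the range 1..num_partitions.

    MD5 and 1-based numbering are assumptions made for this example.
    """
    digest = hashlib.md5(key).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions + 1


# The mapping is deterministic: a key never changes partition.
first = partition_for_key(b"user/1234", 300)
second = partition_for_key(b"user/1234", 300)
print(first == second)  # True
```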

As part of your planning activities, you must decide how many partitions your store should have. You cannot configure the number of partitions after the store has been installed. For information about how to plan your store, see Initial Capacity Planning in the Administrator's Guide.

You can expand and change the number of Storage Nodes in use by the store. The store is then reconfigured to take advantage of the new resources by adding new shards. When this happens, existing data is spread across new and old shards by redistributing partitions from one shard to another. For this reason, it is desirable to have a large number of partitions to support fine-grained reconfiguration of your store.
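The following sketch illustrates why a large number of partitions allows fine-grained reconfiguration. When a shard is added, whole partitions move to it while every key stays in its original partition. The round-robin initial layout and the choice of which partitions to move are assumptions for the example, not the store's actual redistribution logic.

```python
from collections import Counter


def assign_partitions(num_partitions, num_shards):
    """Initial layout: partitions dealt round-robin across shards."""
    return {p: p % num_shards for p in range(num_partitions)}


def add_shard(assignment):
    """Return a new layout with one extra shard, moving only as many
    partitions as the new shard needs for an even share."""
    new_shard = max(assignment.values()) + 1
    target = len(assignment) // (new_shard + 1)  # partitions per shard afterwards
    counts = Counter(assignment.values())
    new_assignment = dict(assignment)
    moved = 0
    for partition in sorted(assignment):
        if moved == target:
            break
        shard = new_assignment[partition]
        if counts[shard] > target:  # only take from shards above the target
            new_assignment[partition] = new_shard
            counts[shard] -= 1
            moved += 1
    return new_assignment


before = assign_partitions(12, 2)
after = add_shard(before)
print(sorted(Counter(after.values()).items()))
# [(0, 4), (1, 4), (2, 4)]
```

With only two partitions, the same expansion could not balance three shards at all, which is the reason for planning a generous partition count up front.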

As a general guideline, each shard should have at least 10 to 20 partitions. The number of partitions should be evenly divisible by the number of shards. Since the number of partitions cannot be changed after the initial deployment, plan the number of partitions for the maximum size of your store in the future. For example, while there is overhead in configuring a large number of partitions, it is reasonable to specify a partition number that is 100 times the maximum number of shards you expect your store to contain.
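The guideline above can be turned into a small planning calculation. The sketch below starts from 100 partitions per expected shard and rounds up so that the result divides evenly by both the initial and the maximum shard count. The function name and the rounding rule are assumptions; treat the output as a starting point rather than an official sizing formula.

```python
from math import gcd


def suggest_partition_count(initial_shards, max_shards, per_shard=100):
    """Suggest a partition count for a store that may grow to max_shards.

    The result is at least per_shard * max_shards and is divisible by
    both initial_shards and max_shards.
    """
    base = per_shard * max_shards
    step = initial_shards * max_shards // gcd(initial_shards, max_shards)  # lcm
    return -(-base // step) * step  # round base up to a multiple of step


print(suggest_partition_count(initial_shards=3, max_shards=10))
# 1020
```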

Zones

A zone is a physical location that supports high capacity network connectivity between the Storage Nodes deployed within it. Each zone has some level of physical separation from other zones. Typically, each zone includes redundant or backup power supplies, redundant data communications connections, environmental controls (for example: air conditioning, fire suppression), and security devices. A zone can represent a physical data center building, the floor of a building, a room, pod, or rack, depending on the particular deployment.

Oracle recommends installing and configuring your store across multiple zones. Having multiple zones provides fault isolation, and increases data availability in the event of a single zone failure. Multiple zones help mitigate systemic failures that affect an entire physical location, such as a large scale power or network outage.

There are two types of zones — primary and secondary. Primary zones are the default. They contain nodes that can serve as masters or replicas. Secondary zones contain nodes that can serve only as replicas. You can use secondary zones to make a copy of the data available at a distant location, or to maintain an extra copy of the data to increase redundancy or read capacity.

Only primary zones can have a Replication Factor equal to zero. Zero capacity Storage Nodes are used for Arbiter Nodes, which only primary zones can host.

You can use the command line interface to create and deploy one or more zones. Each zone hosts the deployed storage nodes. For additional information on zones and how to create them, see Create a Zone in the Administrator's Guide.

Arbiter Nodes

An Arbiter Node is a lightweight process that supports write availability in two situations: first, when the primary replication factor is two and a single Replication Node becomes unavailable; second, when two Replication Nodes cannot communicate to determine which of them is the master. In these situations, the Arbiter Node participates in elections and responds to acknowledgment requests.

An Arbiter Node does not host any data. You create a Storage Node with zero storage capacity to host an Arbiter Node. While you can allocate Arbiter Nodes on Storage Nodes with a capacity greater than zero, those Arbiter Nodes have a lower priority during allocation than those on zero capacity Storage Nodes.

The Arbiter Node is allocated on a Storage Node outside of the shard. An error occurs if there are not enough Storage Nodes to host an Arbiter Node located on a different Storage Node from other shard members. The Arbiter Node provides write availability in the absence of a single Storage Node. The pool of Storage Nodes in a primary zone configured to host Arbiter Nodes is used for allocating an Arbiter Node.
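To see why an Arbiter Node helps when the primary replication factor is two, consider a simplified majority calculation. The sketch assumes that a write needs acknowledgment from a simple majority of the voting members of a shard, and that the Arbiter Node counts as one voting member. Actual acknowledgment behavior depends on the durability settings in use, so this is an illustration of the idea only, and the function name and parameters are hypothetical.

```python
def has_write_majority(primary_rf, live_replication_nodes,
                       has_arbiter=False, arbiter_alive=False):
    """Return True if the live voting members form a simple majority.

    Assumption: voting members are the shard's primary-zone Replication
    Nodes plus, if configured, one Arbiter Node.
    """
    group_size = primary_rf + (1 if has_arbiter else 0)
    votes = live_replication_nodes + (1 if has_arbiter and arbiter_alive else 0)
    return votes >= group_size // 2 + 1


# Primary replication factor of two, one Replication Node down:
print(has_write_majority(2, 1))                                        # False
print(has_write_majority(2, 1, has_arbiter=True, arbiter_alive=True))  # True
```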

For more information on Arbiter Nodes, see Deploying an Arbiter Node Enabled Topology in the Administrator's Guide.

Topologies

A topology is the collection of zones, storage nodes, shards, replication nodes, and administrative services that make up your NoSQL Database store. A deployed store has one topology that describes its state at a given time.

After initial deployment, the topology is laid out so as to minimize the possibility of a single point of failure for any given shard. This means that while a Storage Node might host more than one Replication Node, those Replication Nodes are never from the same shard. This improves the chances that the shard will have continuous availability for reads and writes, even if a hardware failure takes down the host machine.
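The placement rule described above can be expressed as a simple check. The sketch below takes a hypothetical description of which Replication Nodes each Storage Node hosts and reports any Storage Node that hosts two Replication Nodes from the same shard. The data layout and names are assumptions; the store's own topology validation is done through the command line interface.

```python
from collections import Counter


def same_shard_conflicts(placement):
    """Find Storage Nodes hosting more than one node of the same shard.

    placement maps a Storage Node name to a list of (shard, replication_node)
    pairs. Returns a list of (storage_node, shard) pairs that break the rule.
    """
    conflicts = []
    for storage_node, replication_nodes in placement.items():
        per_shard = Counter(shard for shard, _rn in replication_nodes)
        conflicts.extend(
            (storage_node, shard) for shard, count in per_shard.items() if count > 1
        )
    return conflicts


placement = {
    "sn1": [("shard1", "rn1"), ("shard2", "rn1")],
    "sn2": [("shard1", "rn2"), ("shard2", "rn2")],
}
print(same_shard_conflicts(placement))  # []
```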

Arbiter Nodes are automatically configured in a topology if the primary replication factor is two and a zone is configured to host Arbiter Nodes.

Topologies can be changed to achieve different performance characteristics, or in reaction to changes in the number or characteristics of the Storage Nodes. Changing and deploying a topology is an iterative process. For information on how to use the command line interface to create, transform, view, validate and preview a topology, see Steps for Changing the Store's Topology in the Administrator's Guide.