Recovering When the Replica Set Has a Permanently Failed Element

If an element in a replica set, or an entire replica set, is unrecoverable because of a permanent failure, you need to remove the failed element or evict the failed replica set.

Permanent failure can occur when a host permanently fails or when all elements in a replica set fail.

- If all elements within a replica set permanently fail, you must evict the entire replica set, which results in the permanent loss of the data on the elements within that replica set.

  When k = 1, the permanent failure of one element is a replica set failure. When k >= 2, all elements in a replica set must fail for the replica set to be considered failed. If k >= 2 and the replica set permanently fails, you need to evict all elements of the replica set simultaneously.

  Evicting the replica set removes it from the distribution map for the grid. However, you cannot evict the replica set if the failed replica set is the only replica set in the database. In this case, save any checkpoint files, transaction log files or daemon log files (if possible) and then destroy and recreate the database.

  When a replica set goes down:

  - If Durability=0, the database goes into read-only mode.
  - If Durability=1, all transactions that include the failed replica set are blocked until you evict the failed replica set. However, all transactions that do not involve the failed replica set continue to work normally.

- If k >= 2 and only one element of a replica set fails, one of the active elements takes over all requests for data until the failed element can be replaced with a new element, so no data is lost. The chosen active element processes the incoming transactions and provides the base from which the new element is duplicated. You can simply remove the failed element and replace it with a new element duplicated from the active element. See Replace an Element with Another Element.
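The decision rules above fit in a small shell sketch. The functions recovery_action and durability_effect and their output labels are hypothetical. They encode only the rules described in this section for a single replica set. They do not query TimesTen Scaleout or call ttGridAdmin.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of the recovery rules in this section.
#
# recovery_action K FAILED TOTAL_RS
#   K        - number of elements in each replica set (the grid's k value)
#   FAILED   - elements of this replica set that have permanently failed
#   TOTAL_RS - number of replica sets in the database
recovery_action() {
  local k=$1 failed=$2 total_rs=$3
  if (( failed == 0 )); then
    echo "healthy"
  elif (( failed < k )); then
    # At least one element is still active (only possible when k >= 2):
    # no data is lost; replace the failed element from an active element.
    echo "replace-element"
  elif (( total_rs == 1 )); then
    # Every element failed, but this is the only replica set, so it cannot
    # be evicted: save logs and checkpoints, then destroy and recreate.
    echo "recreate-database"
  else
    # Every element failed (for k = 1, that is the single element):
    # evict all elements of the replica set in one request.
    echo "evict-replica-set"
  fi
}

# durability_effect DURABILITY
# Prints the effect of a down replica set for the given Durability setting.
durability_effect() {
  case $1 in
    0) echo "database-read-only" ;;
    1) echo "block-transactions-touching-failed-replica-set" ;;
    *) echo "unknown-durability" >&2; return 1 ;;
  esac
}
```

For example, recovery_action 1 1 3 and recovery_action 2 2 3 both print evict-replica-set, while recovery_action 2 1 3 prints replace-element.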

Note:

If you know of problems that TimesTen Scaleout is not aware of and determine that a replica set needs to be evicted, you can evict and replace the replica set as needed.

You can evict the replica set from the distribution map for your grid with the ttGridAdmin dbDistribute -evict command. Make sure that all pending requests for adding or removing elements are applied before requesting the eviction of a replica set.
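When k >= 2, you must evict all elements of a failed replica set in one request. Generating that command from a list of data instances can help. The hypothetical helper evict_replica_set_cmd below only prints a ttGridAdmin dbDistribute command. It builds the command from the -evict and -apply options used in this section. It does not check for pending add or remove requests, so apply those first.

```bash
#!/usr/bin/env bash
# Hypothetical helper: evict_replica_set_cmd DATABASE HOST.INSTANCE...
# Prints (does not run) one dbDistribute request that evicts every listed
# element together. Assumes pending distribution requests are already applied.
evict_replica_set_cmd() {
  local db=$1; shift
  if (( $# == 0 )); then
    echo "usage: evict_replica_set_cmd DATABASE HOST.INSTANCE..." >&2
    return 1
  fi
  local cmd="ttGridAdmin dbDistribute $db" elem
  for elem in "$@"; do
    cmd+=" -evict $elem"   # one -evict per element of the failed replica set
  done
  echo "$cmd -apply"
}
```

For example, evict_replica_set_cmd database1 host3.instance1 host4.instance1 host5.instance1 prints the same dbDistribute command used in the k >= 2 example in this section.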

You have the following options when you evict a replica set:

- Evict the replica set without replacing it immediately.

  If the data instances and hosts for this replica set have not failed, you can recreate the replica set using the same data instances. This is the preferred option if there are other databases on the grid and the hosts are healthy.

  In this case, you must:

  - Evict the elements of the failed replica set while the data instances and hosts are still up.

    When you evict the replica set, the data within this replica set is lost, but the other replica sets in the database continue to function. There is now one fewer replica set in your grid.

  - Eliminate all checkpoint and transaction logs for the elements within the evicted replica set if you want to add new elements to the distribution map on the same data instances that previously held the evicted elements.

  - Destroy the elements of the evicted replica set while the data instances and hosts are still up.

  - Optionally, replace the evicted replica set with a new replica set, either on the same data instances and hosts if they are still viable or on new data instances and hosts. Add the new elements to the distribution map. This restores the grid to its expected configuration.

- Evict the replica set and immediately replace it with a new replica set to restore the grid to its expected configuration.

  - Create new data instances and hosts to replace the data instances and hosts of the failed replica set.

  - Evict the elements of the failed replica set while replacing it with a new replica set. When you evict the replica set, the data within this replica set is lost, but the other replica sets in the database continue to function.

    Use the ttGridAdmin dbDistribute -evict -replaceWith command to evict the replica set and replace it with a new replica set, where each new element is created on a new data instance and host. The elements of the new replica set are added to the distribution map. However, the remaining data from the other replica sets is not redistributed to include the new replica set. Thus, the new replica set remains empty until you insert data.

  - Destroy the elements of the evicted replica set.
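The first option can be sketched as two hypothetical shell functions, evict_and_destroy and recreate_and_add. They wrap the ttGridAdmin commands used in the examples in this section. dbDestroy and dbCreate only start their operations, so the sketch splits the work in two. Between the two calls, you can confirm with ttGridAdmin dbStatus -all that the evicted elements are destroyed before you recreate them. The DRY_RUN variable is an assumption of this sketch. When it is set to 1, the functions print the commands instead of running them.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for "evict the replica set without replacing it
# immediately". Arguments after the database name are data instances
# (host.instance) holding elements of the failed replica set.
# DRY_RUN=1 prints the commands instead of running them.

run() {
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

# Evict all elements in one request, then destroy each evicted element,
# which removes its checkpoint and transaction logs.
evict_and_destroy() {
  local db=$1; shift
  (( $# > 0 )) || return 1
  local elem args=()
  for elem in "$@"; do args+=(-evict "$elem"); done
  run ttGridAdmin dbDistribute "$db" "${args[@]}" -apply || return
  for elem in "$@"; do
    run ttGridAdmin dbDestroy "$db" -instance "$elem" || return
  done
}

# Optional: after dbStatus shows the old elements as destroyed, create new
# elements on the same data instances and add them to the distribution map.
recreate_and_add() {
  local db=$1; shift
  (( $# > 0 )) || return 1
  local elem args=()
  for elem in "$@"; do
    run ttGridAdmin dbCreate "$db" -instance "$elem" || return
    args+=(-add "$elem")
  done
  run ttGridAdmin dbDistribute "$db" "${args[@]}" -apply
}
```

Running DRY_RUN=1 evict_and_destroy database1 host2.instance1 prints the dbDistribute -evict and dbDestroy -instance commands from the k = 1 example.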

The following sections demonstrate how to evict a failed replica set when the replica set has one element (k = 1) or two or more elements (k >= 2):

Evicting the Element in the Permanently Failed Replica Set When K = 1

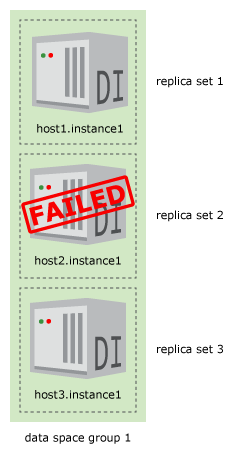

Figure 13-4 shows a TimesTen database configured with k set to 1 and three data instances: host1.instance1, host2.instance1 and host3.instance1. The element on the host2.instance1 data instance fails because of a permanent hardware failure.

The following sections demonstrate the eviction options:

Evict the Element to Potentially Replace at Another Time

If you cannot recover a failed element, you must evict its replica set.

The following example:

- Evicts the replica set for the element on the host2.instance1 data instance with the ttGridAdmin dbDistribute -evict command.
- Destroys the checkpoint and transaction logs for only this element within the evicted replica set with the ttGridAdmin dbDestroy -instance command.

Note:

Alternatively, see the instructions in Remove and Replace a Failed Element in a Replica Set if the data instance or host on which the element exists is not reliable.

% ttGridAdmin dbDistribute database1 -evict host2.instance1 -apply
Element host2.instance1 evicted
Distribution map updated

% ttGridAdmin dbDestroy database1 -instance host2.instance1
Database database1 instance host2 destroy started

% ttGridAdmin dbStatus database1 -all
Database database1 summary status as of Thu Feb 22 16:44:15 PST 2018

created,loaded-complete,open
Completely created elements: 2 (of 3)
Completely loaded elements: 2 (of 3)
Open elements: 2 (of 3)

Database database1 element level status as of Thu Feb 22 16:44:15 PST 2018

Host  Instance  Elem Status    Date/Time of Event  Message
----- --------- ---- --------- ------------------- -------
host1 instance1 1    opened    2018-02-22 16:42:14
host2 instance1 2    destroyed 2018-02-22 16:44:01
host3 instance1 3    opened    2018-02-22 16:42:14

Database database1 Replica Set status as of Thu Feb 22 16:44:15 PST 2018

RS DS Elem Host  Instance  Status Date/Time of Event  Message
-- -- ---- ----- --------- ------ ------------------- -------
1  1  1    host1 instance1 opened 2018-02-22 16:42:14
2  1  3    host3 instance1 opened 2018-02-22 16:42:14

Database database1 Data Space Group status as of Thu Feb 22 16:44:15 PST 2018

DS RS Elem Host  Instance  Status Date/Time of Event  Message
-- -- ---- ----- --------- ------ ------------------- -------
1  1  1    host1 instance1 opened 2018-02-22 16:42:14
   2  3    host3 instance1 opened 2018-02-22 16:42:14

The following example creates a new element for the replica set, because the data instance and host are still viable, and then adds the new element to the distribution map:

- Creates a new element with the ttGridAdmin dbCreate -instance command on the same data instance where the previous element existed before its replica set was evicted.
- Adds the new element into the distribution map with the ttGridAdmin dbDistribute -add command.

% ttGridAdmin dbCreate database1 -instance host2
Database database1 creation started

% ttGridAdmin dbDistribute database1 -add host2 -apply
Element host2 is added
Distribution map updated

% ttGridAdmin dbStatus database1 -all
Database database1 summary status as of Thu Feb 22 16:53:17 PST 2018

created,loaded-complete,open
Completely created elements: 3 (of 3)
Completely loaded elements: 3 (of 3)
Open elements: 3 (of 3)

Database database1 element level status as of Thu Feb 22 16:53:17 PST 2018

Host  Instance  Elem Status Date/Time of Event  Message
----- --------- ---- ------ ------------------- -------
host1 instance1 1    opened 2018-02-22 16:42:14
host3 instance1 3    opened 2018-02-22 16:42:14
host2 instance1 4    opened 2018-02-22 16:53:14

Database database1 Replica Set status as of Thu Feb 22 16:53:17 PST 2018

RS DS Elem Host  Instance  Status Date/Time of Event  Message
-- -- ---- ----- --------- ------ ------------------- -------
1  1  1    host1 instance1 opened 2018-02-22 16:42:14
2  1  3    host3 instance1 opened 2018-02-22 16:42:14
3  1  4    host2 instance1 opened 2018-02-22 16:53:14

Database database1 Data Space Group status as of Thu Feb 22 16:53:17 PST 2018

DS RS Elem Host  Instance  Status Date/Time of Event  Message
-- -- ---- ----- --------- ------ ------------------- -------
1  1  1    host1 instance1 opened 2018-02-22 16:42:14
   2  3    host3 instance1 opened 2018-02-22 16:42:14
   3  4    host2 instance1 opened 2018-02-22 16:53:14

Evict and Replace the Data Instance Without Re-Distribution

To recover the initial capacity, with the same number of replica sets that the database started with, evict the failed element and replace it in a single request with the ttGridAdmin dbDistribute -evict -replaceWith command.
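An evict-and-replace request pairs each evicted element with its replacement. The hypothetical helper evict_replace_cmd builds that request from OLD=NEW pairs and prints it without running it. The OLD=NEW syntax belongs to this sketch only; it is not a ttGridAdmin format. The helper assumes that the replacement data instances already exist in the grid.

```bash
#!/usr/bin/env bash
# Hypothetical helper: evict_replace_cmd DATABASE OLD=NEW [OLD=NEW...]
# Prints one dbDistribute request that evicts each OLD element and replaces
# it with an element on the NEW data instance. Data from other replica sets
# is not redistributed, so the new elements start out empty.
evict_replace_cmd() {
  local db=$1; shift
  (( $# > 0 )) || { echo "usage: evict_replace_cmd DATABASE OLD=NEW..." >&2; return 1; }
  local cmd="ttGridAdmin dbDistribute $db" pair old new
  for pair in "$@"; do
    old=${pair%%=*}   # text before the first '='
    new=${pair#*=}    # text after the first '='
    if [[ -z $old || -z $new || $old == "$pair" ]]; then
      echo "bad pair: $pair (expected OLD=NEW)" >&2
      return 1
    fi
    cmd+=" -evict $old -replaceWith $new"
  done
  echo "$cmd -apply"
}
```

For example, evict_replace_cmd database1 host2.instance1=host4.instance1 prints the dbDistribute command used in the following example.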

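The following example creates the host4.instance1 data instance with the hostCreate, installationCreate, instanceCreate and modelApply commands. It then evicts the element on host2.instance1 while replacing it with a new element on host4.instance1, and finally destroys the evicted element on host2: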

% ttGridAdmin hostCreate host4 -address myhost.example.com -dataspacegroup 1
Host host4 created in Model

% ttGridAdmin installationCreate -host host4 -location /timesten/host4/installation1
Installation installation1 on Host host4 created in Model

% ttGridAdmin instanceCreate -host host4 -location /timesten/host4
Instance instance1 on Host host4 created in Model

% ttGridAdmin modelApply
Copying Model.........................................................OK
Exporting Model Version 2.............................................OK
Marking objects 'Pending Deletion'....................................OK
Deleting any Hosts that are no longer in use..........................OK
Verifying Installations...............................................OK
Creating any missing Installations....................................OK
Creating any missing Instances........................................OK
Adding new Objects to Grid State......................................OK
Configuring grid authentication.......................................OK
Pushing new configuration files to each Instance......................OK
Making Model Version 2 current........................................OK
Making Model Version 3 writable.......................................OK
Checking ssh connectivity of new Instances............................OK
Starting new data instances...........................................OK
ttGridAdmin modelApply complete

% ttGridAdmin dbDistribute database1 -evict host2.instance1 -replaceWith host4.instance1 -apply
Element host2.instance1 evicted
Distribution map updated

% ttGridAdmin dbDestroy database1 -instance host2
Database database1 instance host2 destroy started

% ttGridAdmin dbStatus database1 -all
Database database1 summary status as of Thu Feb 22 17:04:21 PST 2018

created,loaded-complete,open
Completely created elements: 3 (of 4)
Completely loaded elements: 3 (of 4)
Open elements: 3 (of 4)

Database database1 element level status as of Thu Feb 22 17:04:21 PST 2018

Host  Instance  Elem Status    Date/Time of Event  Message
----- --------- ---- --------- ------------------- -------
host1 instance1 1    opened    2018-02-22 16:42:14
host3 instance1 3    opened    2018-02-22 16:42:14
host2 instance1 4    destroyed 2018-02-22 17:04:11
host4 instance1 5    opened    2018-02-22 17:03:18

Database database1 Replica Set status as of Thu Feb 22 17:04:21 PST 2018

RS DS Elem Host  Instance  Status Date/Time of Event  Message
-- -- ---- ----- --------- ------ ------------------- -------
1  1  1    host1 instance1 opened 2018-02-22 16:42:14
2  1  3    host3 instance1 opened 2018-02-22 16:42:14
3  1  5    host4 instance1 opened 2018-02-22 17:03:18

Database database1 Data Space Group status as of Thu Feb 22 17:04:21 PST 2018

DS RS Elem Host  Instance  Status Date/Time of Event  Message
-- -- ---- ----- --------- ------ ------------------- -------
1  1  1    host1 instance1 opened 2018-02-22 16:42:14
   2  3    host3 instance1 opened 2018-02-22 16:42:14
   3  5    host4 instance1 opened 2018-02-22 17:03:18

Evicting All Elements in a Permanently Failed Replica Set When K >= 2

If k >= 2 and the replica set permanently fails, then you need to evict all elements of the replica set simultaneously.

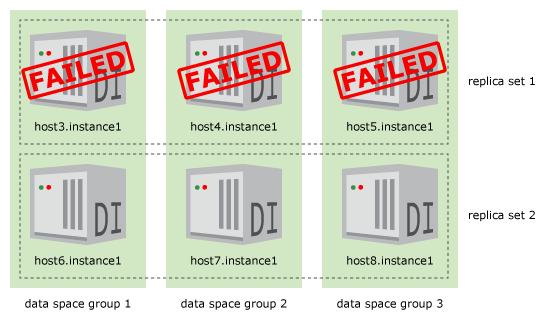

Figure 13-5 shows a failure of replica set 1.

For the example shown in Figure 13-5, replica set 1 contains elements that exist on the host3.instance1, host4.instance1 and host5.instance1 data instances. The replica set fails in an unrepairable way. When you run the ttGridAdmin dbDistribute command to evict the replica set, specify the data instances of all elements in the replica set that are being evicted.

% ttGridAdmin dbDistribute database1 -evict host3.instance1 -evict host4.instance1 -evict host5.instance1 -apply
Element host3.instance1 evicted
Element host4.instance1 evicted
Element host5.instance1 evicted
Distribution map updated

Replacing the Replica Set with New Elements with No Data Redistribution

If you cannot recover any of the elements in the replica set, then you must evict all elements in the replica set simultaneously. To recover the initial capacity with the same number of replica sets as you started with for the database, evict and replace the evicted elements in the failed replica set using the ttGridAdmin dbDistribute -evict -replaceWith command.

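The following example creates the host9 and host10 hosts, with their installations and instances, modeled on host3 and host4. It then evicts the elements on host3.instance1 and host4.instance1 while replacing them with elements on host9.instance1 and host10.instance1, and finally destroys the evicted elements. The new hosts exist only in the model until you run ttGridAdmin modelApply, as in the previous example; that step is not shown in this transcript.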

% ttGridAdmin hostCreate host9 -internalAddress int-host9 -externalAddress ext-host9.example.com -like host3 -cascade
Host host9 created in Model
Installation installation1 created in Model
Instance instance1 created in Model

% ttGridAdmin hostCreate host10 -internalAddress int-host10 -externalAddress ext-host10.example.com -like host4 -cascade
Host host10 created in Model
Installation installation1 created in Model
Instance instance1 created in Model

% ttGridAdmin dbDistribute database1 -evict host3.instance1 -replaceWith host9.instance1 -evict host4.instance1 -replaceWith host10.instance1 -apply
Element host3.instance1 evicted
Element host4.instance1 evicted
Distribution map updated

% ttGridAdmin dbStatus database1 -all
Database database1 summary status as of Fri Feb 23 10:22:57 PST 2018

created,loaded-complete,open
Completely created elements: 8 (of 8)
Completely loaded elements: 6 (of 8)
Completely created replica sets: 3 (of 3)
Completely loaded replica sets: 3 (of 3)
Open elements: 6 (of 8)

Database database1 element level status as of Fri Feb 23 10:22:57 PST 2018

Host   Instance  Elem Status  Date/Time of Event  Message
------ --------- ---- ------- ------------------- -------
host3  instance1 1    evicted 2018-02-23 10:22:28
host4  instance1 2    evicted 2018-02-23 10:22:28
host5  instance1 3    opened  2018-02-23 07:28:23
host6  instance1 4    opened  2018-02-23 07:28:23
host7  instance1 5    opened  2018-02-23 07:28:23
host8  instance1 6    opened  2018-02-23 07:28:23
host10 instance1 7    opened  2018-02-23 10:22:27
host9  instance1 8    opened  2018-02-23 10:22:27

Database database1 Replica Set status as of Fri Feb 23 10:22:57 PST 2018

RS DS Elem Host   Instance  Status Date/Time of Event  Message
-- -- ---- ------ --------- ------ ------------------- -------
1  1  8    host9  instance1 opened 2018-02-23 10:22:27
   2  7    host10 instance1 opened 2018-02-23 10:22:27
2  1  3    host5  instance1 opened 2018-02-23 07:28:23
   2  4    host6  instance1 opened 2018-02-23 07:28:23
3  1  5    host7  instance1 opened 2018-02-23 07:28:23
   2  6    host8  instance1 opened 2018-02-23 07:28:23

Database database1 Data Space Group status as of Fri Feb 23 10:22:57 PST 2018

DS RS Elem Host   Instance  Status Date/Time of Event  Message
-- -- ---- ------ --------- ------ ------------------- -------
1  1  8    host9  instance1 opened 2018-02-23 10:22:27
   2  3    host5  instance1 opened 2018-02-23 07:28:23
   3  5    host7  instance1 opened 2018-02-23 07:28:23
2  1  7    host10 instance1 opened 2018-02-23 10:22:27
   2  4    host6  instance1 opened 2018-02-23 07:28:23
   3  6    host8  instance1 opened 2018-02-23 07:28:23

% ttGridAdmin dbDestroy database1 -instance host3
Database database1 instance host3 destroy started

% ttGridAdmin dbDestroy database1 -instance host4
Database database1 instance host4 destroy started

Maintaining Database Consistency After an Eviction

Eviction of an entire replica set results in data loss, which can leave the database in an inconsistent state. For example, if parent rows were stored in an evicted replica set, any child rows stored in other replica sets no longer have a corresponding parent row for their foreign key.

To restore database consistency after an eviction, fix all foreign key references by doing one of the following:

- Delete any child row that does not have a corresponding parent.
- Drop the foreign key constraint on any child table that contains rows without a corresponding parent.
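The two options above can be sketched as SQL printed by hypothetical shell functions. The table, column and constraint names are placeholders. The sketch handles only single-column foreign keys that are not self-referencing. It assumes that your TimesTen release accepts NOT EXISTS subqueries in DELETE and ALTER TABLE ... DROP CONSTRAINT for foreign keys, so check the SQL reference and review the output before you run it with your usual SQL tool. Running the count query first shows how many rows the delete would remove.

```bash
#!/usr/bin/env bash
# Hypothetical helpers that print (never run) SQL for the fixes above.
# Assumes a single-column foreign key CHILD.FK_COL -> PARENT.PK_COL
# where CHILD and PARENT are different tables.

# Condition matching child rows whose parent row no longer exists.
# Rows with a NULL foreign key have no parent to lose, so they are excluded.
orphan_predicate() {
  local child=$1 fk_col=$2 parent=$3 pk_col=$4
  printf '%s.%s IS NOT NULL AND NOT EXISTS (SELECT 1 FROM %s WHERE %s.%s = %s.%s)' \
    "$child" "$fk_col" "$parent" "$parent" "$pk_col" "$child" "$fk_col"
}

# Preview: count orphaned child rows before changing anything.
orphan_count_sql() {
  echo "SELECT COUNT(*) FROM $1 WHERE $(orphan_predicate "$@");"
}

# Option 1: delete child rows that have no corresponding parent.
orphan_delete_sql() {
  echo "DELETE FROM $1 WHERE $(orphan_predicate "$@");"
}

# Option 2: drop the foreign key constraint on the child table instead.
drop_fk_sql() {
  local child=$1 fk_name=$2
  echo "ALTER TABLE $child DROP CONSTRAINT $fk_name;"
}
```

For example, orphan_delete_sql orders customer_id customers id prints a DELETE statement for a hypothetical orders table whose customer_id column references customers.id.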