75 Configuring ECE for Disaster Recovery

Learn how to configure Oracle Communications Billing and Revenue Management Elastic Charging Engine (ECE) for disaster recovery.


About Disaster Recovery

Disaster recovery provides service continuity for your customers and guards against data loss if a system fails. In ECE, you implement disaster recovery by configuring multiple load-sharing production sites and backup sites. If a production site fails, the other load-sharing production sites or a backup site take over. The production and backup sites are in geographically separate locations.

ECE supports the following types of disaster recovery configurations:

- Active-cold standby
- Active-warm standby
- Active-hot standby
- Segmented active-active
- Active-active

Note:

The active-warm standby configuration is supported only when data persistence is enabled in ECE. The remaining disaster recovery configurations can be used whether data persistence is enabled or disabled.

In the disaster recovery deployment modes described in the following sections, BRM, PDC, and OCOMC are always deployed in active-standby mode. Only ECE can be deployed in either active-standby mode or active-active mode.

Table 75-1 lists the different disaster recovery deployment modes for BRM, PDC, OCOMC, and ECE.

Table 75-1 Deployment modes for BRM, PDC, OCOMC, and ECE

| Deployment Mode | BRM | PDC | OCOMC | ECE |
|---|---|---|---|---|
| Active-standby | Yes | Yes | Yes | Yes |
| Active-active | No | No | No | Yes |
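As an illustration of how Table 75-1 and the preceding note constrain a deployment plan, the following minimal sketch encodes the support matrix and the persistence requirement for active-warm standby. The helper name validate_plan and the mapping of each ECE configuration to a deployment mode are assumptions made for this example; they are not part of ECE.

```python
# Support matrix transcribed from Table 75-1.
SUPPORTED_MODES = {
    "BRM": {"active-standby"},
    "PDC": {"active-standby"},
    "OCOMC": {"active-standby"},
    "ECE": {"active-standby", "active-active"},
}

# Assumption: the three standby configurations run ECE in active-standby mode
# and the two active-active configurations run ECE in active-active mode.
ECE_MODE_FOR_CONFIGURATION = {
    "active-cold standby": "active-standby",
    "active-warm standby": "active-standby",
    "active-hot standby": "active-standby",
    "segmented active-active": "active-active",
    "active-active": "active-active",
}


def validate_plan(component_modes, configuration, persistence_enabled):
    """Return a list of problems with a proposed plan; an empty list means none were found."""
    problems = []
    for component, mode in component_modes.items():
        if mode not in SUPPORTED_MODES.get(component, set()):
            problems.append(f"{component} does not support {mode} mode")
    expected = ECE_MODE_FOR_CONFIGURATION.get(configuration)
    if expected is None:
        problems.append(f"unknown configuration: {configuration}")
    elif component_modes.get("ECE") != expected:
        problems.append(f"{configuration} needs ECE in {expected} mode")
    # From the note above: active-warm standby requires ECE data persistence.
    if configuration == "active-warm standby" and not persistence_enabled:
        problems.append("active-warm standby requires ECE data persistence")
    return problems
```

For example, a plan that puts BRM in active-active mode, or that selects active-warm standby without persistence, returns a non-empty list of problems.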

About the Active-Cold Standby System

The active-cold standby configuration comprises an active production site and one or more idle backup sites. This system requires starting the backup site manually when the production site fails, which might cause a delay in bringing up the backup site to full operational capability. Use this type of system when some downtime is acceptable.

In the active-cold standby configuration, only the production system runs. The backup system is not started, and no data is replicated while the production system operates.

To switch to the backup system, you must start it and configure it to process usage requests.

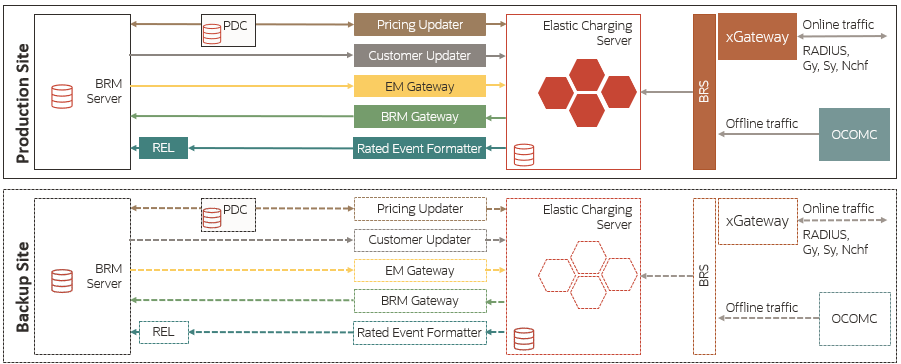

Figure 75-1 shows the architecture for an active-cold standby disaster recovery system.

Figure 75-1 Architecture of an Active-Cold Standby Disaster Recovery System

Description of "Figure 75-1 Architecture of an Active-Cold Standby Disaster Recovery System"

A key aspect of this architecture is that production sites and backup sites include the BRM, ECE, and PDC databases. However, you manage the configuration of each type of database differently.

If you are using the Oracle NoSQL database for storing rated events, the ECE data in the Oracle NoSQL database data store is replicated from the production site to the backup site by using the primary and secondary Oracle NoSQL database data store nodes. For more information, see "About Configuring Oracle NoSQL Database Data Store Nodes".

Configuring an Active-Cold Standby System

To configure an active-cold standby system:

- In the production site and backup sites, do the following:
  - Install ECE and other components required for the ECE system. For more information, see "Installing Elastic Charging Engine" in ECE Installation Guide.

    Note: If persistence is enabled in ECE, ensure that the names of the cluster and the ECE components are identical across sites.
  - Ensure that any customizations to configuration files and extension implementation files in your production site are reapplied to the corresponding default files on the backup sites.
- In the production site, start ECE. See "Starting ECE" for more information.
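Reapplying customizations by hand is easy to get partly wrong. The following minimal sketch compares local copies of the configuration directories from the production and backup sites by file digest. It assumes you have already copied each site's ECE_home/config directory (and any extension files) to a local path; the helper names are hypothetical. Some files may legitimately differ per site (for example, host addresses), so treat the result as a review list rather than a verdict.

```python
import hashlib
from pathlib import Path


def file_digests(root):
    """Map each file under root (as a relative path) to the SHA-256 digest of its bytes."""
    root = Path(root)
    return {
        p.relative_to(root).as_posix(): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in root.rglob("*")
        if p.is_file()
    }


def compare_digests(production, backup):
    """Return (missing_on_backup, different_on_backup) as sorted lists of relative paths."""
    missing = sorted(production.keys() - backup.keys())
    different = sorted(
        path for path in production.keys() & backup.keys()
        if production[path] != backup[path]
    )
    return missing, different


def config_drift(production_dir, backup_dir):
    """Compare local copies of the production and backup configuration directories."""
    return compare_digests(file_digests(production_dir), file_digests(backup_dir))
```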

Failing Over to a Backup Site

You enable a backup site to take over the role of the production site. You can then use the backup site as the production site or switch back to the restored production site.

To fail over to a backup site:

- If persistence is enabled in ECE, switch the ECE persistence database on the backup site to the primary role based on your Oracle Active Data Guard configuration. For more information on performing a failover, see "Failovers" in Oracle Data Guard Concepts and Administration.
- On the backup site, start ECE. See "Starting ECE" for more information.
- Verify that ECE is connected to BRM and PDC on the backup site by doing the following:

  Note: If only ECE in the production site failed and BRM and PDC in the production site are still running, you must change the BRM, PDC, and Customer Updater connection details on the backup site to connect to BRM and PDC in the production site.
  - Verify that the details about the JMS queues for Pricing Updater on the backup site are specified in the ECE configuration MBeans.
  - Use a JMX editor to verify that the BRM connection details on the backup site are provided in the ECE configuration MBeans.
- Load the pricing and customer data into ECE.

  Note: If ECE persistence is enabled, after the ECE persistence database failover, the pricing data is automatically loaded into the ECE cache from the ECE persistence database. The customer data is loaded into the ECE cache on demand from the ECE persistence database during usage processing.
- Ensure that the network clients route all requests to the backup site.

  Note: Information such as balance, configuration, and rated event data that was still in the ECE cache when the production site failed is lost.

The former backup site is now the new production site.

Note:

If you switch back to the original production site in an active-cold standby system, any data in memory is lost. Oracle does not recommend switching back to the original production site in an active-cold standby system.

About the Active-Warm Standby System

The active-warm standby configuration consists of an active production site and one or more active backup sites. ECE cache, BRM, and PDC data are asynchronously replicated from the production site to the backup sites using Oracle Active Data Guard. The ECE configuration and mediation specification data are not replicated between participant sites. All ECE processes on the backup site are started in standby mode. When the production site fails, ECE requests are diverted from the production site to the backup sites. This type of system requires a minimal amount of downtime.

In the active-warm configuration, the backup system is started and running, but no usage requests are processed by it. The Pricing Updater, Customer Updater, EM Gateway, and BRM Gateway (if a BRM instance is available on the backup site) are configured and started as standby instances in the usage processing state, but these instances are not configured to load data. In addition, the Rated Event Formatter is configured, but not started on the backup system. All changes to customer and pricing data are made on the production system and replicated to the ECE persistence database on the backup system.

When you switch to the backup system, usage request processing is automatically enabled and the standby instances become the primary instances. These processes are connected to the BRM and PDC systems on the backup system.

On the backup system, the pricing data is loaded in the ECE cache from the ECE persistence database, and the customer data is loaded on demand during usage processing. However, you must start the Rated Event Formatter and ensure that it is connected to the BRM system on the backup system.

You use the Secure Shell (SSH) File Transfer Protocol (SFTP) utility to replicate CDR files generated by Rated Event Formatter between participant sites.

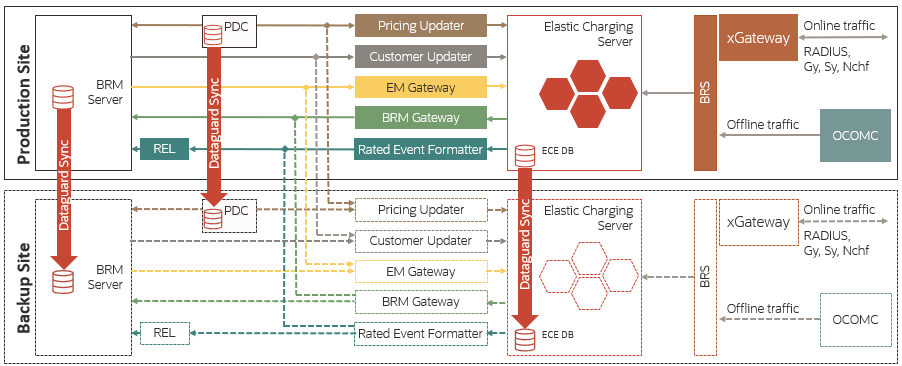

Figure 75-2 shows the architecture for an active-warm standby disaster recovery system.

Figure 75-2 Architecture of an Active-Warm Standby Disaster Recovery System

Description of "Figure 75-2 Architecture of an Active-Warm Standby Disaster Recovery System"

A few key aspects of the architecture are:

- Production sites and backup sites include the BRM, ECE, and PDC databases. However, you manage the configuration of each type of database differently.
- To switch to the backup system, you must start the backup system and configure it to process usage requests.
- If only the ECE cluster fails in the production site, the ECE cluster in the backup site is used with BRM from the production site. If both ECE and BRM fail in the production site, you must switch over to the backup site.

Configuring an Active-Warm Standby System

Note:

In an active-warm standby system, ensure that the names of the cluster and the ECE components are identical across sites.

To configure an active-warm standby system:

- In the primary production site, do the following:
  - Configure ECE to persist the cache and rated event data in the ECE persistence database. See "Managing Persisted Data in the Oracle Database" for more information.
  - Configure the ECE components (such as Customer Updater, Pricing Updater, and EM Gateway).
  - Start ECE. See "Starting ECE" for more information.
- On the backup site, do the following:
  - Configure ECE to persist the cache and rated event data in the ECE persistence database. See "Managing Persisted Data in the Oracle Database" for more information.
  - Configure the ECE components.
  - Start ECC:

    ./ecc
  - Start the charging server nodes:

    start server
  - Start the ECE components in standby mode.
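Because an active-warm standby system requires identical cluster and ECE component names across sites, it can help to compare an inventory of those names before starting the backup site. The following minimal sketch assumes you have collected each site's cluster name and component names into a dictionary by hand; the function name and the example component labels are hypothetical.

```python
def name_mismatches(sites):
    """Compare cluster and component names across sites against the first site (alphabetically).

    sites maps a site label to {"cluster": str, "components": iterable of str}.
    Returns human-readable messages; an empty list means the names match.
    """
    labels = sorted(sites)
    reference = sites[labels[0]]
    ref_components = set(reference["components"])
    problems = []
    for label in labels[1:]:
        site = sites[label]
        if site["cluster"] != reference["cluster"]:
            problems.append(f"{label}: cluster {site['cluster']!r} differs from {reference['cluster']!r}")
        components = set(site["components"])
        for name in sorted(ref_components - components):
            problems.append(f"{label}: component {name!r} is missing")
        for name in sorted(components - ref_components):
            problems.append(f"{label}: component {name!r} is not defined on {labels[0]}")
    return problems
```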

Failing Over to a Backup Site

You enable a backup or remote production site to take over the role of the primary production site. After the original production site is fixed, you can return the sites to their original state by switching the workload back to the original production site.

To fail over to a backup site:

- Switch the ECE persistence database in the backup or remote production site to the primary role. For more information on performing a failover, see "Failovers" in Oracle Data Guard Concepts and Administration.
- Start BRM and PDC.
- Ensure that the replicated data in the ECE persistence database is loaded into the ECE cache and that the ECE processes (Customer Updater, Pricing Updater, and so on) are changed to the primary instances.

  All ECE and pricing data is now back in the ECE grid in the backup or remote production site.
- Start Rated Event Formatter by running the following command:

  start ratedEventFormatter

  All rated events are now processed by Rated Event Formatter in the backup or remote production site.
- Stop and restart BRM Gateway.
- Ensure that the network clients route all requests to the backup or remote production site.

Switching Back to the Original Production Site

You restart the restored production site and use Oracle Active Data Guard to restore the data from the ECE persistence database on the backup system.

To switch back to the original production site:

- Install ECE and other required components in the original primary production site. For more information, see "Installing Elastic Charging Engine" in ECE Installation Guide.

  Note: If only ECE in the original primary production site failed and BRM and PDC in the original primary production site are still running, install only ECE and provide the connection details for BRM and PDC in the original primary production site during ECE installation.
- Switch the ECE persistence database in the original production site to the primary role. For more information on performing a switchover, see "Switchovers" in Oracle Data Guard Concepts and Administration.
- On the machine on which the Oracle WebLogic server is installed, verify that the JMS queues have been created for loading pricing data and for sending event notifications, and that the JMS credentials have been configured correctly.
- Go to the ECE_home/bin directory.
- Start ECC:

  ./ecc
- Start the charging server nodes:

  start server
- Ensure that the ECE cache data is replicated to the original production site by using Oracle Active Data Guard.

  Note: In the original primary production site, if only ECE has failed but the ECE persistence database is still running, install only ECE and provide the connection details for the ECE persistence database in the original primary production site.
- Ensure that the ECE processes on the original primary production site (Customer Updater, Pricing Updater, and so on) are changed to the primary instances. All ECE and pricing data is now back in the ECE grid in the original primary production site.
- Start the following ECE processes and gateways:

  start brmGateway
  start diameterGateway
  start radiusGateway
- Stop and restart Pricing Updater, Customer Updater, and EM Gateway in standby mode in the new primary production site, and then start them in the original primary production site.
- Stop Rated Event Formatter in the new primary production site and then start it in the original primary production site.
- Stop RE Loader in the new primary production site and then start it in the original primary production site.
- Stop and restart BRM Gateway in both the new primary production site and the original primary production site.
- Ensure that the network clients route all requests to the original primary production site.

The sites are now restored to their original roles.

About the Active-Hot Standby System

The active-hot standby configuration consists of an active production site and one or more active backup sites. ECE data is asynchronously replicated from the production site to the backup sites. When the production site fails, ECE requests are diverted from the production site to the backup sites. This type of system requires a minimal amount of downtime.

In the active-hot standby configuration, the backup system is started and running, but no usage requests are processed by it. Additionally, the Customer Updater, Pricing Updater, and EM Gateway are configured, but not started on the backup system. All changes to customer and pricing data are made on the production system, and replicated on the backup system.

To switch to the backup system, you enable usage request processing for the system and then start the Customer Updater, Pricing Updater, and EM Gateway. These processes are connected to the BRM and PDC systems on the backup system.

You use the Secure Shell (SSH) File Transfer Protocol (SFTP) utility to replicate CDR files generated by Rated Event Formatter between participant sites.

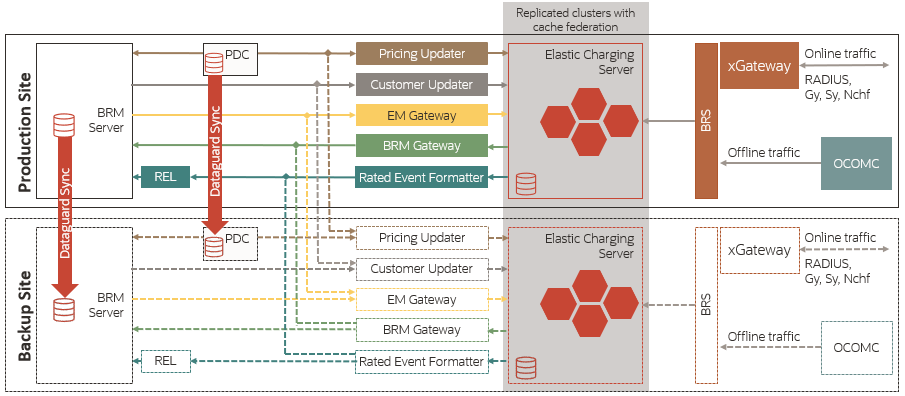

Figure 75-3 shows the architecture for an active-hot standby disaster recovery system.

Figure 75-3 Architecture of an Active-Hot Standby Disaster Recovery System

Description of "Figure 75-3 Architecture of an Active-Hot Standby Disaster Recovery System"

A few key aspects of the architecture are:

- The production site is active and the backup site is in standby mode. The backup site replicates data from the production site. When there is a failover, you start the processes on the backup site, and the backup site retrieves and processes the data.
- If only the ECE cluster fails in the production site, the ECE cluster in the backup site is used with BRM from the production site. If both ECE and BRM fail in the production site, you must switch over to the backup site.

If you are using the Oracle NoSQL database for storing rated events, the ECE data in the Oracle NoSQL database data store is replicated from the production site to the backup site by using the primary and secondary Oracle NoSQL database data store nodes. For more information, see "About Configuring Oracle NoSQL Database Data Store Nodes".

Configuring an Active-Hot Standby System

When configuring active-hot standby with ECE persistence enabled, consider the following points:

- Do not set the loadConfigSettings parameter on the secondary site, because appConfig must be loaded on both sites. For more information, see "Specifying Driver Machine Properties" in ECE Installation Guide.
- Keep the wallet synchronized on both sites. Whenever passwords are updated, copy the wallet manually to the other site.
- Clean the persistence store when you restart the system. Whenever a site fails to respond and you want to bring it up by using the gridSync utility from the other site, you must:
  - Start the site from a clean state, in the same way that the secondary cluster was started.
  - Clear old data from the persistence store.
- Run only one RatedEventFormatter instance across all sites for a given RatedEventFormatter configuration.
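The last rule above can be checked from an inventory of which Rated Event Formatter instances are started where. The following minimal sketch assumes you record, for each site, one configuration name per running instance; the site and configuration names, and the function itself, are hypothetical.

```python
from collections import defaultdict


def duplicate_ref_configs(running):
    """Report Rated Event Formatter configurations with more than one running instance.

    running maps a site name to the configuration names of the Rated Event
    Formatter instances started on that site (one entry per instance).
    Returns {configuration: sorted list of sites hosting its instances}.
    """
    placements = defaultdict(list)
    for site, configurations in running.items():
        for configuration in configurations:
            placements[configuration].append(site)
    return {
        configuration: sorted(sites)
        for configuration, sites in placements.items()
        if len(sites) > 1
    }
```

An empty result means each configuration has at most one running instance across all sites.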

To configure an active-hot standby system:

- In the primary production site, do the following:
  - Configure primary and secondary Oracle NoSQL database data store nodes. For more information, see "Configuring the KVStore" in Oracle NoSQL Database Administrator’s Guide.
  - Configure the ECE components (Customer Updater, EM Gateway, and so on).
  - Add details about all participant sites in the federation-config section of the ECE Coherence override file (for example, ECE_home/config/charging-coherence-override-prod.xml).

    To confirm which ECE Coherence override file is used, see the tangosol.coherence.override value in the ECE_home/config/ece.properties file.

    Table 75-2 provides the federation configuration parameter descriptions and default values.

Table 75-2 Federation Configuration Parameters

| Name | Description |
|---|---|
| name | The name of the participant site. Note: The name of the participant site must match the name of the cluster in the participant site. |
| address | The IP address of the participant site. |
| port | The Coherence cluster port number of the participant site. |
| initial-action | Specifies whether the federation service should be started for replicating data to the participant sites. Valid values are: start, which specifies that the federation service must be started and the data must be automatically replicated to the participant sites; and stop, which specifies that the federation service must be stopped and the data must not be automatically replicated to the participant sites. Note: Ensure that this parameter is set to stop for all participant sites except the current site. For example, if you are adding the backup or remote production site details in the primary production site, this parameter must be set to stop for all backup or remote production sites. |

  - Start ECE. See "Starting ECE" for more information.
- On the backup site, do the following:
  - Configure primary and secondary Oracle NoSQL database data store nodes. For more information, see "Configuring the KVStore" in Oracle NoSQL Database Administrator’s Guide.
  - Configure the ECE components (Customer Updater, EM Gateway, and so on).
  - Set the following parameter in the ECE_home/config/ece.properties file to false:

    loadConfigSettings = false

    The application-configuration data is not loaded into memory when you start the charging server nodes.
  - Add details about all participant sites in the federation-config section of the ECE Coherence override file (for example, ECE_home/config/charging-coherence-override-prod.xml).

    To confirm which ECE Coherence override file is used, see the tangosol.coherence.override value in the ECE_home/config/ece.properties file. Table 75-2 provides the federation configuration parameter descriptions and default values.
  - Start the Elastic Charging Controller (ECC):

    ./ecc
  - Start the charging server nodes:

    start server
- On the primary production site, run the following commands:

  gridSync start
  gridSync replicate

  The federation service is started and all existing data is replicated to the backup or remote production sites.
- On the backup sites, do the following:
  - By using the query.sh utility, verify that the customer, balance, configuration, and pricing caches in the backup or remote production sites contain the same number of entries as in the primary production site.
  - Verify that the charging server nodes in the backup or remote production sites are in the same state as the charging server nodes in the primary production site.
  - Configure the following ECE components by using a JMX editor:
    - Rated Event Formatter
    - Rated Event Publisher
    - Diameter Gateway
    - RADIUS Gateway
    - HTTP Gateway

  The federation service is started to replicate the data from the backup or remote production sites to the primary production site.
- After starting Rated Event Formatter in the remote production sites, copy the CDR files generated by Rated Event Formatter from the remote production sites to the primary production site by using the SFTP utility.
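The rules in Table 75-2 are easy to violate when the same participant list is copied between sites. The following minimal sketch models each participant entry as a Python object and checks the documented rules: the name must match a cluster name, initial-action must be start or stop, and every site other than the current one must use stop. It does not parse the override file itself, because the exact XML layout is not shown here; the class, function, addresses, and port values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Participant:
    """One participant entry, mirroring the parameters in Table 75-2."""
    name: str            # must match the cluster name of the participant site
    address: str         # IP address of the participant site
    port: int            # Coherence cluster port of the participant site
    initial_action: str  # "start" or "stop"


def check_participants(participants, current_site, cluster_names):
    """Check participant entries written for current_site against the Table 75-2 rules.

    cluster_names is the set of cluster names actually configured at the sites.
    Returns a list of problems; an empty list means no problems were found.
    """
    problems = []
    if current_site not in {p.name for p in participants}:
        problems.append(f"current site {current_site!r} is not listed")
    for p in participants:
        if p.name not in cluster_names:
            problems.append(f"{p.name}: does not match any cluster name")
        if p.initial_action not in ("start", "stop"):
            problems.append(f"{p.name}: invalid initial-action {p.initial_action!r}")
        elif p.name != current_site and p.initial_action != "stop":
            problems.append(f"{p.name}: initial-action must be stop for other sites")
        if not 0 < p.port < 65536:
            problems.append(f"{p.name}: port {p.port} is out of range")
    return problems
```

For the primary production site, the list would contain the primary site itself plus every backup or remote production site, with current_site set to the primary site's cluster name.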

Failing Over to a Backup Site

You enable a backup or remote production site to take over the role of the primary production site. After the original production site is fixed, you can return the sites to their original state by switching the workload back to the original production site.

To fail over to a backup site:

- On the backup site, stop replicating the ECE cache data to the primary production site by running the following command:

  gridSync stop PrimaryProductionClusterName

  where PrimaryProductionClusterName is the name of the cluster in the primary production site.
- On the backup site, do the following:
  - Start BRM and PDC.
  - Change the BRM, PDC, and Customer Updater connection details to connect to BRM and PDC on the backup site by using a JMX editor.

    Note: If only ECE in the primary production site failed and BRM and PDC in the primary production site are still running, you need not change the BRM and PDC connection details on the backup site.
- Recover the data in the Oracle NoSQL database data store of the primary production site by doing the following:
  - Convert the secondary Oracle NoSQL database data store node of the primary production site to the primary Oracle NoSQL database data store node by performing a failover operation in the Oracle NoSQL database data store. For more information, see "Performing a Failover" in Oracle NoSQL Database Administrator's Guide.

    The secondary Oracle NoSQL database data store node of the primary production site is now the primary Oracle NoSQL database data store node of the primary production site.
  - On the backup site, start Rated Event Formatter to convert the rated events in the newly promoted primary Oracle NoSQL database data store node into CDR files.
  - In a backup or remote production site, load the converted CDR files into BRM by using Rated Event (RE) Loader.
  - Shut down the Oracle NoSQL database data store node that you just converted into the primary node. See the "stop" utility in Oracle NoSQL Database Administrator's Guide for more information.
  - Stop the Rated Event Formatter that you just started.
- In a backup or remote production site, start Pricing Updater, Customer Updater, and EM Gateway by running the following commands:

  start pricingUpdater
  start customerUpdater
  start emGateway

  All pricing and customer data is now back in the ECE grid in the backup or remote production site.
- Stop and restart BRM Gateway.
- Migrate internal BRM notifications from the primary production site to a backup or remote production site. See "Migrating ECE Notifications" for more information.

  Note:
  - If an expiry duration is configured for these notifications, ensure that you migrate the notifications before they expire. For the expiry duration, see the expiry-delay entry for the ServiceContext module in the ECE_home/config/charging-cache-config.xml file.
  - All external notifications from a production site are published to the respective JMS queue. Diameter Gateway retrieves the notifications from the JMS queue and replicates them to other sites based on the configuration.
- Ensure that the network clients route all requests to the backup or remote production site.
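When an expiry-delay is configured for notifications, the migration step above is time-bound. The following minimal sketch orders pending notifications by their expiry time so that the ones closest to expiring can be migrated first. It assumes that each entry expires expiry_delay after it was written and that you already know each notification's write time; both assumptions, the notification IDs, and the function name are hypothetical and are not taken from ECE.

```python
from datetime import datetime, timedelta


def migration_order(written_at, expiry_delay, now):
    """Split pending notifications into those still valid and those already expired.

    written_at maps a notification ID to the time its cache entry was written.
    Assumption: an entry expires expiry_delay after it was written.
    Returns (still_valid, expired): still_valid is a list of (id, expires_at)
    sorted so the notification closest to expiry comes first.
    """
    still_valid, expired = [], []
    for notification_id, written in written_at.items():
        expires_at = written + expiry_delay
        if expires_at > now:
            still_valid.append((notification_id, expires_at))
        else:
            expired.append(notification_id)
    still_valid.sort(key=lambda item: item[1])
    return still_valid, sorted(expired)
```

For example, with a two-hour expiry-delay, a notification written at 09:00 has already expired at 12:00, while one written at 11:00 must be migrated before 13:00.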

Switching Back to the Original Production Site

You restart the restored production site and run the gridSync utility to restore the data.

To switch back to the original production site:

- Install ECE and other required components in the original primary production site. For more information, see "Installing Elastic Charging Engine" in ECE Installation Guide.

  Note: If only ECE in the original primary production site failed and BRM and PDC in the original primary production site are still running, install only ECE and provide the connection details for BRM and PDC in the original primary production site during ECE installation.
- Configure primary and secondary Oracle NoSQL data store nodes. For more information, see "About Configuring Oracle NoSQL Database Data Store Nodes".
- On the machine on which the Oracle WebLogic server is installed, verify that the JMS queues have been created for loading pricing data and for sending event notifications, and that the JMS credentials have been configured correctly.
- Set the following parameter in the ECE_home/config/ece.properties file to false:

  loadConfigSettings = false

  The configuration data is not loaded into memory.
- Add details about all participant sites in the federation-config section of the ECE Coherence override file (for example, ECE_home/config/charging-coherence-override-prod.xml).

  To confirm which ECE Coherence override file is used, see the tangosol.coherence.override value in the ECE_home/config/ece.properties file. Table 75-2 provides the federation configuration parameter descriptions and default values.
- Go to the ECE_home/bin directory.
- Start ECC:

  ./ecc
- Start the charging server nodes:

  start server
- Replicate the ECE cache data to the original production site by using the gridSync utility. For more information, see "Replicating ECE Cache Data".
- Start the following ECE processes and gateways:

  start brmGateway
  start ratedEventFormatter
  start diameterGateway
  start radiusGateway
  start httpGateway
- By using the query.sh utility, verify that the customer, balance, configuration, and pricing caches in the original production site contain the same number of entries as in the new production site.
- Stop Pricing Updater, Customer Updater, and EM Gateway in the new primary production site and then start them in the original primary production site.
- Migrate internal BRM notifications from the new primary production site to the original primary production site. For more information, see "Migrating ECE Notifications".
- Change the BRM Gateway, Customer Updater, and Pricing Updater connection details to connect to BRM and PDC in the original primary production site by using a JMX editor.
- Stop RE Loader in the new primary production site and then start it in the original primary production site.
- Stop and restart BRM Gateway in both the new primary production site and the original primary production site.

The sites are now restored to their original roles.
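The cache verification step in this procedure compares entry counts between two sites. The following minimal sketch assumes you have already collected the counts for the customer, balance, configuration, and pricing caches from each site with the query.sh utility (its command syntax is not shown here) and typed them into two dictionaries; the function name and the counts are hypothetical. Counts taken while updates are still flowing can differ transiently, so a mismatch is a prompt to recheck rather than proof of data loss.

```python
CACHES = ("customer", "balance", "configuration", "pricing")


def count_mismatches(reference, restored, caches=CACHES):
    """Return {cache: (reference_count, restored_count)} for caches whose counts differ.

    reference and restored map cache names to entry counts collected with
    query.sh on each site; a missing cache is reported as None on that side.
    """
    mismatches = {}
    for cache in caches:
        expected, actual = reference.get(cache), restored.get(cache)
        if expected is None or actual is None or expected != actual:
            mismatches[cache] = (expected, actual)
    return mismatches
```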

About the Segmented Active-Active System

The segmented active-active configuration consists of two or more active production sites at different geographic locations: one primary production site and one or more remote production sites. All sites concurrently process ECE requests, each for a different set of customers. ECE requests are routed across the sites based on your load balancing configuration. When a production site does not respond, the requests for that site are diverted to the other sites. This configuration supports a minimal amount of downtime.

In the segmented active-active configuration, the backup system is started and processing usage requests. However, as with an active-active configuration, Customer Updater, Pricing Updater, and EM Gateway are configured, but not started. All changes to customer and pricing data are made on the production system and replicated on the backup system. You also need to configure load balancing to route usage requests to the production and backup systems.

To switch to the backup system, you configure all usage requests to go to it. You also start Customer Updater, Pricing Updater, and EM Gateway. These processes are connected to the BRM and PDC systems on the backup system.

You use the Secure Shell (SSH) File Transfer Protocol (SFTP) utility to replicate CDR files generated by Rated Event Formatter between participant sites.

Figure 75-4 shows the architecture for a segmented active-active disaster recovery system.

Figure 75-4 Architecture of Segmented Active-Active Disaster Recovery System


A few key aspects of the architecture are:

- The production site and the backup site are both active and process requests. However, the network routes the requests for a subscriber participating in a sharing group to the same site as the sharing group owner.
- ECE on each site is configured to replicate data with the ECE clusters in the other sites by using Coherence federation. All ECE clusters are configured to work with the BRM instance on a particular active site.

If you are using the Oracle NoSQL database for storing rated events, the data in the Oracle NoSQL database data store is replicated between the active sites. For more information, see "About Configuring Oracle NoSQL Database Data Store Nodes".

About Load Balancing in a Segmented Active-Active System

In a segmented active-active system, ECE requests are routed across the sites based on your load balancing configuration. To ensure proper load balancing, you can use a combination of global and local load balancers. The local load balancer routes connection requests across the full range of available Diameter Gateway, RADIUS Gateway, and HTTP Gateway nodes. The global load balancer routes connection requests to the gateway nodes on only one site, unless it detects that the site is busy or the local load balancer signals that it cannot reach ECE. You can set up your own load balancing configuration based on your requirements.
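The division of work between the two load balancer tiers can be modeled in a few lines. The following minimal sketch is not a load balancer implementation: it only illustrates the decision described above. Round-robin distribution within a site, the class names, and the gateway node names are assumptions chosen for the example; a real deployment uses your own load balancing products and health checks.

```python
import itertools


class LocalBalancer:
    """Spreads connections across the gateway nodes of one site (round-robin here)."""

    def __init__(self, site, gateway_nodes):
        self.site = site
        self.reachable = bool(gateway_nodes)  # False models "cannot reach ECE"
        self._nodes = itertools.cycle(gateway_nodes)

    def route(self):
        return next(self._nodes)


class GlobalBalancer:
    """Sends connections to the preferred site unless it is busy or unreachable."""

    def __init__(self, preferred, fallbacks):
        self.sites = [preferred, *fallbacks]

    def route(self, busy_sites=frozenset()):
        for local in self.sites:
            if local.site not in busy_sites and local.reachable:
                return local.site, local.route()
        raise RuntimeError("no site can accept the connection")
```

In this model, connections stay on the preferred site and rotate across its gateway nodes until that site is reported busy or its local balancer marks ECE as unreachable, at which point the next site receives them.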

Configuring a Segmented Active-Active System

When configuring the segmented active-active system with ECE persistence enabled, consider the following points:

- Do not set the loadConfigSettings parameter on the secondary site, because appConfig must be loaded on both sites. For more information, see "Specifying Driver Machine Properties" in ECE Installation Guide.
- Keep the wallet synchronized on both sites. Whenever passwords are updated, copy the wallet manually to the other site.
- Clean the persistence store when you restart the system. Whenever a site fails to respond and you want to bring it up by using the gridSync utility from the other site, you must:
  - Start the site from a clean state, in the same way that the secondary cluster was started.
  - Clear old data from the persistence store.
- Run only one RatedEventFormatter instance across all sites for a given RatedEventFormatter configuration.

To configure a segmented active-active system:

- In the primary production site, do the following:

- Configure primary and secondary Oracle NoSQL database data store nodes. See "Configuring the KVStore" in Oracle NoSQL Database Administrator’s Guide for more information.

- Configure the ECE components (Customer Updater, EM Gateway, and so on).

- Add details about all participant sites in the federation-config section of the ECE Coherence override file (for example, ECE_home/config/charging-coherence-override-prod.xml).

To confirm which ECE Coherence override file is used, see the tangosol.coherence.override value in the ECE_home/config/ece.properties file.

Table 75-2 provides the federation configuration parameter descriptions and default values.

- Start ECE. See "Starting ECE" for more information.

- On the backup site, do the following:

- Configure primary and secondary Oracle NoSQL database data store nodes. See "Configuring the KVStore" in Oracle NoSQL Database Administrator’s Guide for more information.

- Configure the ECE components (Customer Updater, EM Gateway, and so on).

Note:

Ensure the following:

- The name of Diameter Gateway, RADIUS Gateway, HTTP Gateway, Rated Event Formatter, and Rated Event Publisher is unique for each site.

- At least two instances of Rated Event Formatter are configured to allow for failover.

- Set the following parameter in the ECE_home/config/ece.properties file to false:

loadConfigSettings = false

The application-configuration data is not loaded into memory when you start the charging server nodes.

- Add details about all participant sites in the federation-config section of the ECE Coherence override file (for example, ECE_home/config/charging-coherence-override-prod.xml).

To confirm which ECE Coherence override file is used, see the tangosol.coherence.override value in the ECE_home/config/ece.properties file. Table 75-2 provides the federation configuration parameter descriptions and default values.

- Start the Elastic Charging Controller (ECC):

./ecc

- Start the charging server nodes:

start server

- On the primary production site, run the following commands:

gridSync start
gridSync replicate

The federation service is started and all existing data is replicated to the backup or remote production sites.

- On the backup sites, do the following:

- Verify that the customer, balance, configuration, and pricing caches in the backup or remote production sites contain the same number of entries as in the primary production site by using the query.sh utility.

- Verify that the charging server nodes in the backup or remote production sites are in the same state as the charging server nodes in the primary production site.

- Configure the following ECE components by using a JMX editor:

- Rated Event Formatter

- Rated Event Publisher

- Diameter Gateway

- RADIUS Gateway

- HTTP Gateway

Note:

Ensure the following:

- The name of Diameter Gateway, RADIUS Gateway, HTTP Gateway, Rated Event Formatter, and Rated Event Publisher is unique for each site.

- At least two instances of Rated Event Formatter are configured to allow for failover.

- Start the following ECE processes and gateways:

start brmGateway
start ratedEventFormatter
start diameterGateway
start radiusGateway
start httpGateway

The remote production sites are up and running with all required data.

- Run the following command:

gridSync start

The federation service is started to replicate the data from the backup or remote production sites to the primary production site.

- After starting Rated Event Formatter in the remote production sites, copy the CDR files generated by Rated Event Formatter from the remote production sites to the primary production site by using the SFTP utility.
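The cache verification step compares entry counts between sites. The sketch below assumes you have already read the counts from query.sh output on each site into dictionaries; the cache keys are simplified labels, not the actual Coherence cache names, and the sketch does not run query.sh itself.

```python
from typing import Dict, Optional, Tuple

# Simplified labels for the caches named in the verification step; these are
# not the actual Coherence cache names used by ECE.
CHECKED_CACHES = ("customer", "balance", "configuration", "pricing")


def cache_mismatches(
    primary: Dict[str, int], backup: Dict[str, int]
) -> Dict[str, Tuple[Optional[int], Optional[int]]]:
    """Compare entry counts read (for example, from query.sh output) on each site.

    Returns {cache: (primary_count, backup_count)} for every checked cache whose
    counts differ or that is missing on either side (reported as None).
    """
    mismatches = {}
    for cache in CHECKED_CACHES:
        p, b = primary.get(cache), backup.get(cache)
        if p is None or b is None or p != b:
            mismatches[cache] = (p, b)
    return mismatches
```

An empty dictionary means the sites agree on every checked cache; anything else identifies which cache to investigate before you start the gateways.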

Failing Over to a Backup Site

You enable a backup or remote production site to take over the role of the primary production site. After the original production site is fixed, you can return the sites to their original state by switching the workload back to the original production site.

To fail over to a backup site:

- On the backup site, stop replicating the ECE cache data to the primary production site by running the following command:

gridSync stop PrimaryProductionClusterName

where PrimaryProductionClusterName is the name of the cluster in the primary production site.

- On the backup site, do the following:

- Start BRM and PDC.

- Change the BRM, PDC, and Customer Updater connection details to connect to BRM and PDC on the backup site by using a JMX editor.

Note:

If only ECE in the primary production site failed and BRM and PDC in the primary production site are still running, you need not change the BRM and PDC connection details on the backup site.

- Recover the data in the Oracle NoSQL database data store of the primary production site by performing the following:

- Convert the secondary Oracle NoSQL database data store node of the primary production site to the primary Oracle NoSQL database data store node by performing a failover operation in the Oracle NoSQL database data store. For more information, see "Performing a Failover" in Oracle NoSQL Database Administrator's Guide.

The secondary Oracle NoSQL database data store node of the primary production site is now the primary Oracle NoSQL database data store node of the primary production site.

- On the backup site, start Rated Event Formatter to convert the rated events in the Oracle NoSQL database data store node that you just made the primary node into CDR files.

- In a backup or remote production site, load the CDR files that you just generated into BRM by using Rated Event (RE) Loader.

- Shut down the Oracle NoSQL database data store node that you just converted into the primary node.

See the "stop" utility in Oracle NoSQL Database Administrator's Guide for more information.

- Stop the Rated Event Formatter that you just started.

- Convert the secondary Oracle NoSQL database data store node of the primary production site to the primary Oracle NoSQL database data store node by performing a failover operation in the Oracle NoSQL database data store. For more information, see "Performing a Failover" in Oracle NoSQL Database Administrator's Guide.

- In a backup or remote production site, start Pricing Updater, Customer Updater, and EM Gateway by running the following commands:

start pricingUpdater
start customerUpdater
start emGateway

All pricing and customer data is now back in the ECE grid in the backup or remote production site.

- Stop and restart BRM Gateway.

- Migrate internal BRM notifications from the primary production site to a backup or remote production site. See "Migrating ECE Notifications" for more information.

Note:

- If the expiry duration is configured for these notifications, ensure that you migrate the notifications before they expire. For the expiry duration, see the expiry-delay entry for the ServiceContext module in the ECE_home/config/charging-cache-config.xml file.

- All external notifications from a production site are published to the respective JMS queue. Diameter Gateway retrieves the notifications from the JMS queue and replicates them to other sites based on the configuration.

- Ensure that the network clients route all requests to the backup or remote production site.
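Because notifications with an expiry duration must be migrated before they expire, it can help to estimate which ones are at risk before you start. This is a minimal sketch under stated assumptions: you supply the notification creation times and the expiry-delay value (read from charging-cache-config.xml) yourself, and the function name and inputs are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def notifications_at_risk(
    created: Dict[str, datetime],
    expiry_delay: timedelta,
    now: datetime,
    migration_duration: timedelta,
) -> List[str]:
    """Return IDs of notifications that expire before a migration that starts at
    `now` and takes `migration_duration` would finish."""
    finish = now + migration_duration
    # A notification is at risk if its expiry time falls at or before the finish time.
    return sorted(nid for nid, ts in created.items() if ts + expiry_delay <= finish)
```

For example, with a one-hour expiry delay and a migration expected to take 15 minutes starting at 12:00, notifications created at or before 11:15 would expire before the migration completes.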

Switching Back to the Original Production Site

You restart the restored production site and run the gridSync utility to restore the data.

To switch back to the original production site:

- Install ECE and other required components in the original primary production site. For more information, see "Installing Elastic Charging Engine" in ECE Installation Guide.

Note:

If only ECE in the original primary production site failed and BRM and PDC in the original primary production site are still running, install only ECE and provide the connection details for BRM and PDC in the original primary production site during ECE installation.

- Configure primary and secondary Oracle NoSQL data store nodes. For more information, see "About Configuring Oracle NoSQL Database Data Store Nodes".

- On the machine on which Oracle WebLogic Server is installed, verify that the JMS queues have been created for loading pricing data and for sending event notifications, and that the JMS credentials have been configured correctly.

- Set the following parameter in the ECE_home/config/ece.properties file to false:

loadConfigSettings = false

The configuration data is not loaded into memory.

- Add all details about participant sites in the federation-config section of the ECE Coherence override file (for example, ECE_home/config/charging-coherence-override-prod.xml).

To confirm which ECE Coherence override file is used, see the tangosol.coherence.override value in the ECE_home/config/ece.properties file. Table 75-2 provides the federation configuration parameter descriptions and default values.

- Go to the ECE_home/bin directory.

- Start ECC:

./ecc

- Start the charging server nodes:

start server

- Replicate the ECE cache data to the original production site by using the gridSync utility. For more information, see "Replicating ECE Cache Data".

- Start the following ECE processes and gateways:

start brmGateway
start ratedEventFormatter
start diameterGateway
start radiusGateway
start httpGateway

- Verify that the customer, balance, configuration, and pricing caches in the original production site contain the same number of entries as in the new production site by using the query.sh utility.

- Stop Pricing Updater, Customer Updater, and EM Gateway in the new primary production site and then start them in the original primary production site.

- Migrate internal BRM notifications from the new primary production site to the original primary production site. For more information, see "Migrating ECE Notifications".

- Change the BRM Gateway, Customer Updater, and Pricing Updater connection details to connect to BRM and PDC in the original primary production site by using a JMX editor.

- Stop RE Loader in the new primary production site and then start it in the original primary production site.

- Stop and restart BRM Gateway in both the new primary production site and the original primary production site.

The roles of the sites are now reversed to the original roles.
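The procedure above always stops Pricing Updater, Customer Updater, EM Gateway, and RE Loader on one site before starting them on the other. The sketch below replays a planned sequence of steps and rejects any plan that would run one of these components on both sites at once. The rule that they must never run concurrently is inferred from the "stop ... and then start" ordering, and the component labels and step format are hypothetical.

```python
from typing import Dict, Iterable, Set, Tuple

# Hypothetical labels for the components that the procedure moves from one site
# to the other (stop on one site, then start on the other).
SINGLE_SITE_COMPONENTS = {"pricingUpdater", "customerUpdater", "emGateway", "reLoader"}


def check_handover(events: Iterable[Tuple[str, str, str]]) -> bool:
    """Replay ordered (site, component, action) steps, where action is "start" or
    "stop", and raise ValueError if a single-site component is started while it
    is still running on another site."""
    running: Dict[str, Set[str]] = {}
    for site, component, action in events:
        sites = running.setdefault(component, set())
        if action == "start":
            others = sites - {site}
            if component in SINGLE_SITE_COMPONENTS and others:
                raise ValueError(
                    f"{component} started on {site} while running on {sorted(others)}"
                )
            sites.add(site)
        elif action == "stop":
            sites.discard(site)
        else:
            raise ValueError(f"unknown action: {action}")
    return True
```

Components outside the set, such as BRM Gateway, which the procedure restarts on both sites, are allowed to run on both sites.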

About the Active-Active System

The active-active configuration consists of two active production sites at different geographic locations. All sites concurrently process the ECE requests based on the customerGroup list. ECE requests are routed across the sites based on your load-balancing configuration. All updates occurring in a site's ECE cluster are replicated to other sites using the Coherence cache federation.

When a production site does not respond, the requests for that site are diverted to the other site. This configuration provides the least downtime of the supported disaster recovery configurations.

In the active-active configuration, you configure Customer Updater, Pricing Updater, and EM Gateway in the production and backup systems. All customer and pricing data changes are made on the production system and then replicated on the backup system.

Each subscriber in the system is assigned to a customerGroup list. ECE distributes customers in the customerGroup list based on the CustomerGroupConfiguration parameters. The customerGroup list helps maintain a balanced workload across the clusters in a two-site ECE deployment. If a primary production site does not respond, the request is routed to the backup production site. You must also configure load balancing to route the usage requests to the production and backup systems.
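The site selection for a customerGroup can be sketched as choosing the responding cluster with the lowest priority number, as described for the priority parameter in Table 75-3. This is an illustration under assumptions, not ECE's routing code: the function name, the (cluster name, priority) input, and the set of available clusters are hypothetical.

```python
from typing import Iterable, Optional, Set, Tuple


def pick_cluster(
    preferences: Iterable[Tuple[str, int]], available: Set[str]
) -> Optional[str]:
    """Choose the site (cluster) that processes a customerGroup's requests.

    `preferences` holds (cluster_name, priority) pairs from the group's
    clusterPreferenceList; the lowest priority number is tried first. Clusters
    that are not in `available` (not responding) are skipped.
    """
    for name, _priority in sorted(preferences, key=lambda p: p[1]):
        if name in available:
            return name
    return None
```

For customerGroup2 in the sample configuration, BRM-S2 (priority 1) handles requests while it responds, and BRM-S1 (priority 2) takes over if BRM-S2 fails.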

Note:

To ensure that all sharing group members are in the same customerGroup, assign each account to only one sharing group.

To switch to the backup system, you use Monitor Agent. This action diverts the traffic from a preferred site to the next backup site. When you indicate that the preferred site has started functioning again, the traffic is routed back to the preferred site. See "Configuring an Active-Active System" for more information.

You can configure the ECE active-active mode to process usage requests in the site that receives the usage request, irrespective of the subscriber's preferred site. For example, if production site 1 receives a subscriber's request, it is processed in production site 1. Similarly, if production site 2 receives the usage request, it is processed in production site 2. See "Processing Usage Requests in the Site Received" for more information.

Note:

This configuration does not apply to usage charging requests for members of a sharing group. Usage requests for sharing group members continue to be processed on the same site as the sharing group parent.

You use the Secure Shell (SSH) File Transfer Protocol (SFTP) utility to replicate CDR files generated by Rated Event Formatter between participant sites.
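The decision about which site processes a usage request, including the sharing-group exception, can be sketched as a small function. The flag process_in_received_site stands in for the "Processing Usage Requests in the Site Received" option, and all names here are hypothetical; the sketch only restates the rules above.

```python
from typing import Optional


def processing_site(
    receiving_site: str,
    preferred_site: str,
    process_in_received_site: bool,
    sharing_group_parent_site: Optional[str] = None,
) -> str:
    """Decide where a usage request is processed (illustrative only)."""
    # Sharing group members are always processed on the parent's site.
    if sharing_group_parent_site is not None:
        return sharing_group_parent_site
    # With the option enabled, the site that received the request processes it.
    if process_in_received_site:
        return receiving_site
    # Otherwise the request is routed to the subscriber's preferred site.
    return preferred_site
```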

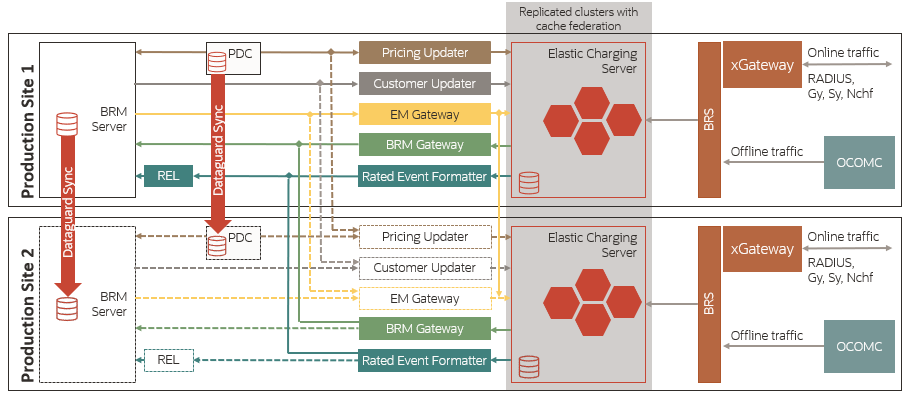

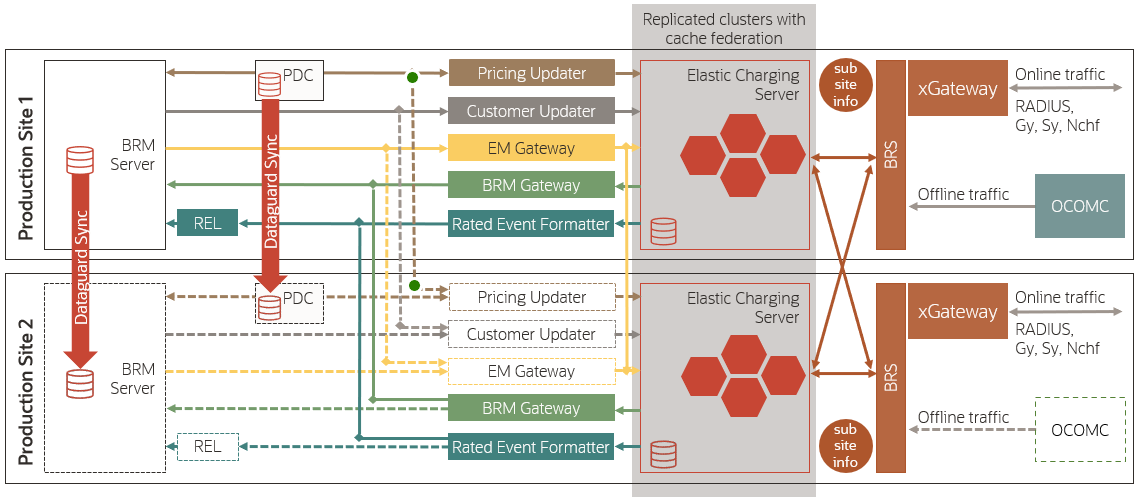

Figure 75-5 shows the architecture for an active-active disaster recovery system.

Figure 75-5 Architecture of Active-Active Disaster Recovery System


A few critical aspects of the architecture are:

- Two sites are supported in the active-active mode, and both sites are active.

- BatchRequestService (BRS) ensures intelligent routing to the appropriate site if the site that receives the request is not the subscriber's preferred site.

- The ECE cluster in each site is configured to replicate data with the ECE clusters in other sites by using Coherence federation. All ECE clusters are configured to work with a BRM instance on a particular active site.

If you are using the Oracle NoSQL database for storing rated events, the data in the data store is replicated between the active sites. For more information, see "About Configuring Oracle NoSQL Database Data Store Nodes".

About Load Balancing in an Active-Active System

In an active-active system, EM Gateway routes BRM update requests across sites based on the app and site configurations to ensure load balancing.

EM Gateway routes connection requests to Diameter Gateway, RADIUS Gateway, and HTTP Gateway nodes in one of the active sites. If the site does not respond, the request is rerouted to the backup production site.

You can set up your own load balancing configuration based on your requirements.

About Rated Event Formatter in a Persistence-Enabled Active-Active System

When data persistence is enabled, each site in an active-active system has a primary Rated Event Formatter instance for each schema, and at least one secondary instance for each schema.

As rated events are created, the following happens on each site:

- ECE creates rated events and commits them to the Coherence cache. Each rated event created by ECE includes the Coherence cluster name of the site where it was created.

- The Coherence federation service replicates the events to the remote sites, as it does for other federated objects.

- Coherence caching persists the events to the database in batches. Each schema at each site has its own rated event database table.

- The primary Rated Event Formatter instance processes all rated events from the corresponding site-specific database table.

- The primary Rated Event Formatter instance commits the formatted events to the cache as a checkpoint. The site name is included in the checkpoint data, along with the schema number, timestamp, and plugin type.

- The Coherence federation service replicates the checkpoint to the remote sites, as it does for other federated objects. The remote site ECE servers then purge the events persisted in the checkpoint from the database in batches by schema and by site.

- Coherence caching persists the checkpoint to the database to be consumed by Rated Event Loader. Checkpoints are grouped by schema and by site.

- The ECE server purges the events related to the persisted checkpoint. Events are purged from the database in batches by schema and by site.

Remote sites that receive federated events and checkpoints similarly persist them to and purge them from the database, in site and schema-specific database tables. In this way, all sites contain the same rated events and checkpoints, no matter where they were generated, and each rated event and checkpoint retains information about the site that generated it. If the Rated Event Formatter instance at any one site is down, a secondary instance at a remote site can immediately begin processing the rated events, preserving the site-specific information as though it were the original site. See "Resolving Rated Event Formatter Instance Outages".
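The flow of rated events and checkpoints between sites can be modeled in a few lines. This is a simplified sketch, not ECE code: it uses logical integer timestamps, delivers each object to every site to stand in for Coherence federation, and assumes that "events related to a checkpoint" means events from the same site and schema with a timestamp at or before the checkpoint.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple, Union


@dataclass(frozen=True)
class RatedEvent:
    origin_site: str  # Coherence cluster name of the site that created the event
    schema: int
    timestamp: int    # simplified logical time


@dataclass(frozen=True)
class Checkpoint:
    site: str         # site whose events the checkpoint covers
    schema: int
    timestamp: int    # events at or before this time are treated as formatted
    plugin_type: str


class SiteDatabase:
    """One site's persisted data: a rated event table per (origin site, schema)."""

    def __init__(self) -> None:
        self.tables: Dict[Tuple[str, int], List[RatedEvent]] = defaultdict(list)
        self.checkpoints: Dict[Tuple[str, int], Checkpoint] = {}

    def persist(self, event: RatedEvent) -> None:
        self.tables[(event.origin_site, event.schema)].append(event)

    def apply_checkpoint(self, cp: Checkpoint) -> None:
        # Persist the checkpoint, then purge the events it covers for that site and schema.
        key = (cp.site, cp.schema)
        self.checkpoints[key] = cp
        self.tables[key] = [e for e in self.tables[key] if e.timestamp > cp.timestamp]


def federate(item: Union[RatedEvent, Checkpoint], sites: List[SiteDatabase]) -> None:
    # Stand-in for Coherence federation: every site receives the same object.
    for db in sites:
        if isinstance(item, RatedEvent):
            db.persist(item)
        else:
            db.apply_checkpoint(item)
```

After three site1 events and a checkpoint at time 2 are federated to both sites, each site holds only the event at time 3 in its (site1, schema 1) table, which is why a secondary instance at either site can resume processing where the primary stopped.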

Resolving Rated Event Formatter Instance Outages

If a primary Rated Event Formatter instance is down, take one of the following approaches, depending on whether the outage is planned or unplanned, and considering your operational needs:

- Planned outage: Primary instance finishes processing: Choose this option for planned outages, when rating stops but the primary Rated Event Formatter instance can keep processing.

- After the site stops generating new rated events, wait until the local Rated Event Formatter has finished processing all rated events from the site.

- In the remote sites, drop or truncate the rated event database table for the rated events federated from the site with the outage. Dropping the table means you must recreate it and its indexes after resolving the outage.

- Stop the Rated Event Formatter at the site with the outage.

- When the outage is resolved, you can start Rated Event Formatter again to resume processing events.

- Unplanned outage: Secondary instance takes over processing: Choose this option for unplanned outages, when the primary Rated Event Formatter is also down. After failing over to the backup site as described in "Failing Over to a Backup Site (Active-Active)", perform the following tasks:

- Confirm that the last successful Rated Event Formatter checkpoint for the local site matches the one federated to the remote site. You can use the JMX queryRatedEventCheckPoint operation in the ECE configuration MBeans. See "Getting Rated Event Formatter Checkpoint Information".

- If needed, start the secondary Rated Event Formatter instance on the remote site.

- Activate the secondary Rated Event Formatter instance on the remote site by using the JMX activateSecondaryInstance operation in the ECE monitoring MBeans. See "Activating a Secondary Rated Event Formatter Instance".

The secondary instance takes over processing the federated rated events as though it were the primary instance at the site with the outage. The events and checkpoints are persisted in the database tables for the original site, not the remote site.

- Wait until the secondary instance has finished processing all rated events federated from the site with the outage.

- At the site with the outage, drop or truncate the rated event database table for local events. Dropping the table means you must recreate it and its indexes after resolving the outage.

- Stop the secondary Rated Event Formatter instance.

- When the outage is resolved and the site has been recovered as described in "Switching Back to the Original Production Site (Active-Active)", restart the primary Rated Event Formatter to resume processing events at the local site. If the secondary Rated Event Formatter instance was running at the remote site before the outage, restart it too.
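Before activating a secondary instance, you confirm that the last checkpoint for the failed site matches the copy federated to the remote site. Assuming you have copied the queryRatedEventCheckPoint results from each site into (site, schema, plugin, time) rows (a hypothetical format; the operation's actual output layout may differ), the comparison can be sketched as:

```python
from typing import Any, Dict, Iterable, List, Tuple

# (site, schema, plugin name, checkpoint time); the time can be any comparable
# value, such as a datetime. This row format is an assumption.
Row = Tuple[str, int, str, Any]


def latest_checkpoints(rows: Iterable[Row], site: str) -> Dict[Tuple[int, str], Any]:
    """Latest checkpoint time per (schema, plugin) for one site."""
    latest: Dict[Tuple[int, str], Any] = {}
    for row_site, schema, plugin, time in rows:
        if row_site == site:
            key = (schema, plugin)
            if key not in latest or time > latest[key]:
                latest[key] = time
    return latest


def checkpoint_mismatches(
    local: Iterable[Row], remote: Iterable[Row], site: str
) -> List[Tuple[int, str]]:
    """(schema, plugin) keys whose latest checkpoint for `site` differs between the
    local site and the copy federated to the remote site. An empty list means the
    checkpoints match."""
    loc, rem = latest_checkpoints(local, site), latest_checkpoints(remote, site)
    return sorted(k for k in loc.keys() | rem.keys() if loc.get(k) != rem.get(k))
```

A non-empty result suggests that federation had not caught up when the site failed, so the secondary instance could reprocess or miss events for the listed schema and plugin.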

Getting Rated Event Formatter Checkpoint Information

You can retrieve information about the last Rated Event Formatter checkpoint committed to the database.

To retrieve information about the last Rated Event Formatter checkpoint:

- Access the ECE configuration MBeans in a JMX editor, such as JConsole. See "Accessing ECE Configuration MBeans".

- Expand the ECE Configuration node.

- Expand the database connection for which you want checkpoint information.

- Expand Operations.

- Run the queryRatedEventCheckPoint operation.

Checkpoint information appears for all Rated Event Formatter instances using the database connection. Information includes site, schema, and plugin names as well as the time of the most recent checkpoint.

Activating a Secondary Rated Event Formatter Instance

If a primary Rated Event Formatter instance is down, you can activate a secondary instance to take over rated event processing.

To activate a secondary Rated Event Formatter instance:

- Access the ECE configuration MBeans in a JMX editor, such as JConsole. See "Accessing ECE Configuration MBeans".

- Expand the ECE Monitoring node.

- Expand RatedEventFormatterMatrices.

- Expand Operations.

- Run the activateSecondaryInstance operation.

The secondary Rated Event Formatter instance begins processing rated events.

About CDR Generator in an Active-Active System

When CDR generation is enabled, each site in an active-active system contains a CDR Generator, and each site can generate unrated CDRs for external systems. For information about CDR Generator, see "Generating CDRs" in ECE Implementing Charging.

When a production site goes down, the CDR database retains all in-progress (or incomplete) CDR sessions, and all unrated 5G usage events are diverted to the CDR Gateway on the other production site.

When the failed production site comes back online, the CDR Formatter finds and purges all incomplete CDR sessions from the CDR database that are older than a configurable duration. For example, if it is 12:00:00 and the configurable duration is 200 seconds, the CDR Formatter would purge from the CDR database all incomplete CDRs that were last updated today at 11:56:40 or earlier. You use the CDR Formatter's cdrOrphanRecordCleanupAgeInSec attribute to set the configurable duration. See "Configuring the CDR Formatter" in ECE Implementing Charging.
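The purge cutoff in the example is the current time minus cdrOrphanRecordCleanupAgeInSec. The sketch below reproduces that arithmetic; the calendar date is arbitrary and the function names are hypothetical.

```python
from datetime import datetime, timedelta


def orphan_cutoff(now: datetime, cleanup_age_sec: int) -> datetime:
    """Newest last-updated time that still counts as orphaned, given the
    cdrOrphanRecordCleanupAgeInSec value."""
    return now - timedelta(seconds=cleanup_age_sec)


def is_orphan(last_updated: datetime, now: datetime, cleanup_age_sec: int) -> bool:
    # An incomplete CDR session is purged if it was last updated at or before the cutoff.
    return last_updated <= orphan_cutoff(now, cleanup_age_sec)


now = datetime(2024, 1, 1, 12, 0, 0)
print(orphan_cutoff(now, 200).time())  # 11:56:40
```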

Configuring an Active-Active System

Note:

Active-active disaster recovery configurations are supported only by ECE 12.0.0.3.0 with Interim Patch 31848507 and later. The Interim Patch includes an SDK to help you migrate ECE to 12.0.0.3.0 Interim Patch 31848507 so you can use the active-active configuration. See the Interim Patch README for more information.

To configure an active-active system:

- In the primary production site, do the following:

- Configure primary and secondary Oracle NoSQL database data store nodes. See "Configuring the KVStore" in Oracle NoSQL Database Administrator’s Guide for more information.

- Configure the ECE components (Customer Updater, EM Gateway, and so on).

- Add all details about participant sites to the federation-config section of the ECE Coherence override file (for example, ECE_home/config/charging-coherence-override-prod.xml).

To confirm which ECE Coherence override file is used, see the tangosol.coherence.override value in the ECE_home/config/ece.properties file.

Table 75-2 provides the federation configuration parameter descriptions and default values.

- Go to the ECE_home/config/management directory, where ECE_home is the directory in which ECE is installed.

- Configure HTTP Gateway. See "Connecting ECE to a 5G Client" in ECE Implementing Charging for more information.

- Open the charging-settings.xml file.

- In the CustomerGroupConfiguration section, set the app configuration parameters as shown in the following sample file:

<customerGroupConfigurations config-class="oracle.communication.brm.charging.appconfiguration.beans.customergroup.CustomerGroupConfigurations">
  <customerGroupConfigurationList>
    <customerGroupConfiguration config-class="oracle.communication.brm.charging.appconfiguration.beans.customergroup.CustomerGroupConfiguration" name="customerGroup5">
      <clusterPreferenceList config-class="java.util.ArrayList">
        <clusterPreferenceConfiguration config-class="oracle.communication.brm.charging.appconfiguration.beans.customergroup.ClusterPreferenceConfiguration" name="BRM-S2" priority="1" routingGatewayList="host1:port1"/>
      </clusterPreferenceList>
    </customerGroupConfiguration>
    <customerGroupConfiguration config-class="oracle.communication.brm.charging.appconfiguration.beans.customergroup.CustomerGroupConfiguration" name="customerGroup2">
      <clusterPreferenceList config-class="java.util.ArrayList">
        <clusterPreferenceConfiguration config-class="oracle.communication.brm.charging.appconfiguration.beans.customergroup.ClusterPreferenceConfiguration" name="BRM-S2" priority="1" routingGatewayList="host1:port1,host1:port1"/>
        <clusterPreferenceConfiguration config-class="oracle.communication.brm.charging.appconfiguration.beans.customergroup.ClusterPreferenceConfiguration" name="BRM-S1" priority="2" routingGatewayList="host2:port2,host2:port2"/>
      </clusterPreferenceList>
    </customerGroupConfiguration>
  </customerGroupConfigurationList>
</customerGroupConfigurations>

Table 75-3 provides the configuration parameters of the CustomerGroupConfiguration section.

Table 75-3 CustomerGroupConfiguration Parameters

| Configuration Parameter | Description |
|---|---|
| CustomerGroupConfiguration name | Customers are processed and distributed in active-active system sites based on customerGroup. The customer names configured in customerGroup are updated to the PublicUserIdentity (PUI) cache when you load the customer information to ECE through Customer Updater or when you create or update customer information in BRM by using EM Gateway. |
| clusterPreferenceList | A list of cluster names and the priority for each cluster name, used for routing the requests during a site failure. |
| clusterPreferenceConfiguration name | The name of the cluster. |
| clusterPreferenceConfiguration priority | The priority of the preferred cluster that is assigned in the customerGroup list to process the rating request. Clusters are tried in ascending order of priority number, starting with the lowest number. For example, if you set the priority to 1, the cluster associated with this number processes the request first. |
| routingGatewayList | A comma-separated list of the host name and port number of the chargingServer values used for httpGateway. |
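To check what a CustomerGroupConfiguration section will do, you can read it back with Python's standard XML parser and list each group's clusters in the order they will be tried. This is a minimal sketch that assumes the element and attribute names shown in the sample above; it reads the file only and does not change ECE configuration.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Tuple


def load_customer_groups(xml_text: str) -> Dict[str, List[Tuple[str, List[str]]]]:
    """Return {customerGroup name: [(cluster name, [routing gateways]), ...]}
    with clusters ordered by ascending priority number."""
    root = ET.fromstring(xml_text)
    groups: Dict[str, List[Tuple[str, List[str]]]] = {}
    for group in root.iter("customerGroupConfiguration"):
        prefs = []
        for pref in group.iter("clusterPreferenceConfiguration"):
            # routingGatewayList is a comma-separated list of host:port values.
            gateways = [
                g.strip() for g in pref.get("routingGatewayList", "").split(",") if g.strip()
            ]
            prefs.append((int(pref.get("priority")), pref.get("name"), gateways))
        prefs.sort(key=lambda p: p[0])
        groups[group.get("name")] = [(name, gws) for _, name, gws in prefs]
    return groups
```

Because the priority attribute is converted to an integer before sorting, "10" correctly sorts after "2".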

- If data persistence is enabled, configure a primary and a secondary Rated Event Formatter instance for each site in the ratedEventFormatter section, as shown in the following sample file:

<ratedEventFormatterConfigurationList config-class="java.util.ArrayList">
  <ratedEventFormatterConfiguration
      config-class="oracle.communication.brm.charging.appconfiguration.beans.ratedeventformatter.RatedEventFormatterConfiguration"
      name="ref_site1_primary" partition="1" connectionName="oracle1" siteName="site1"
      threadPoolSize="2" retainDuration="0" ripeDuration="30" checkPointInterval="20" maxPersistenceCatchupTime="0"
      pluginPath="ece-ratedeventformatter.jar"
      pluginType="oracle.communication.brm.charging.ratedevent.formatterplugin.internal.SampleFormatterPlugInImpl"
      pluginName="brmCdrPluginDC1Primary" noSQLBatchSize="25" />
  <ratedEventFormatterConfiguration
      config-class="oracle.communication.brm.charging.appconfiguration.beans.ratedeventformatter.RatedEventFormatterConfiguration"
      name="ref_site1_secondary" partition="1" connectionName="oracle2" siteName="site1" primaryInstanceName="ref_site1_primary"
      threadPoolSize="2" retainDuration="0" ripeDuration="30" checkPointInterval="20" maxPersistenceCatchupTime="0"
      pluginPath="ece-ratedeventformatter.jar"
      pluginType="oracle.communication.brm.charging.ratedevent.formatterplugin.internal.SampleFormatterPlugInImpl"
      pluginName="brmCdrPluginDC1Secondary" noSQLBatchSize="25" />
  <ratedEventFormatterConfiguration
      config-class="oracle.communication.brm.charging.appconfiguration.beans.ratedeventformatter.RatedEventFormatterConfiguration"
      name="ref_site2_primary" partition="1" connectionName="oracle2" siteName="site2"
      threadPoolSize="2" retainDuration="0" ripeDuration="30" checkPointInterval="20" maxPersistenceCatchupTime="0"
      pluginPath="ece-ratedeventformatter.jar"
      pluginType="oracle.communication.brm.charging.ratedevent.formatterplugin.internal.SampleFormatterPlugInImpl"
      pluginName="brmCdrPluginDC2Primary" noSQLBatchSize="25" />
  <ratedEventFormatterConfiguration
      config-class="oracle.communication.brm.charging.appconfiguration.beans.ratedeventformatter.RatedEventFormatterConfiguration"
      name="ref_site2_secondary" partition="1" connectionName="oracle1" siteName="site2" primaryInstanceName="ref_site2_primary"
      threadPoolSize="2" retainDuration="0" ripeDuration="30" checkPointInterval="20" maxPersistenceCatchupTime="0"
      pluginPath="ece-ratedeventformatter.jar"
      pluginType="oracle.communication.brm.charging.ratedevent.formatterplugin.internal.SampleFormatterPlugInImpl"
      pluginName="brmCdrPluginDC2Secondary" noSQLBatchSize="25" />
</ratedEventFormatterConfigurationList>

The siteName property determines the site for which the instance processes rated events. This lets you configure secondary instances as backups for remote sites. In the sample, the ref_site1_secondary instance runs at site 2 but processes rated events federated from site 1 in case of an outage.
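A quick consistency check of the ratedEventFormatter section can catch a secondary that points at the wrong primary. The sketch below groups instances by siteName and checks that each site has at least one primary and one secondary, and that every primaryInstanceName names a primary for the same site. These checks are derived from the rules described in this section; the function is hypothetical and does not validate per-schema requirements or where each instance actually runs.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List


def check_formatter_pairs(xml_text: str) -> Dict[str, Dict[str, List[str]]]:
    """Group ratedEventFormatterConfiguration entries by siteName and raise
    ValueError if a site lacks a primary or a secondary, or if a secondary's
    primaryInstanceName does not name a primary for the same site."""
    root = ET.fromstring(xml_text)
    refs = {r.get("name"): r for r in root.iter("ratedEventFormatterConfiguration")}
    sites: Dict[str, Dict[str, List[str]]] = {}
    for name, ref in refs.items():
        entry = sites.setdefault(ref.get("siteName"), {"primaries": [], "secondaries": []})
        primary_name = ref.get("primaryInstanceName")
        if primary_name is None:
            # No primaryInstanceName attribute: this is a primary instance.
            entry["primaries"].append(name)
            continue
        target = refs.get(primary_name)
        if (
            target is None
            or target.get("primaryInstanceName") is not None
            or target.get("siteName") != ref.get("siteName")
        ):
            raise ValueError(f"{name}: {primary_name} is not a primary for site {ref.get('siteName')}")
        entry["secondaries"].append(name)
    for site, entry in sites.items():
        if not entry["primaries"] or not entry["secondaries"]:
            raise ValueError(f"site {site} needs a primary and at least one secondary")
    return sites
```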

- Configure the production sites to process the routing requests.

- Open the site-configuration.xml file.

Configure all monitorAgent instances from all sites. Each Monitor Agent instance includes the Coherence cluster name, host name or IP address, and JMX port.

Table 75-4 provides the configuration parameters of Monitor Agent.

Table 75-4 Monitor Agent Configuration Parameters

| Name | Description |
|---|---|
| name | The name of the production or remote site where the request should be processed. These names should correspond to the site names defined for the Rated Event Formatter instances. |
| host | The IP address of the participant site. |
| jmxPort | The JMX port of the production or remote site. |
| disableMonitor | Stops this monitorAgent instance from collecting monitoring results when multiple monitorAgent instances run within a site, which prevents redundant monitoring results for the site. The default value is false. If you set this value to true, the monitorAgent instance does not collect redundant monitoring results. |

Note:

The monitorAgent properties should match the properties in the eceTopology.conf file, where a monitorAgent instance is configured to start from a specific production site.

- Copy the JMSConfiguration.xml file content of all sites to a single file and enter the following details:

- Add the <Cluster>clusterName</Cluster> tag for the queue types.

- Import the wallet for all clusters and specify the wallet path in the <KeyStoreLocation> and <ECEWalletLocation> locations.

- In the eceTopology.conf file, enable the JMX port for all ECS server nodes and clients, such as Diameter Gateway, HTTP Gateway, RADIUS Gateway, and EM Gateway. Also, enable the JMX port for each Monitor Agent instance.

- Start ECE. See "Starting ECE" for more information.

-

On the backup or remote site, do the following:

-

Configure primary and secondary Oracle NoSQL database data store nodes. For more information, see "Configuring the KVStore" in Oracle NoSQL Database Administrator’s Guide.

-

Configure the ECE components (Customer Updater, EM Gateway, and so on).

Ensure the following:

- The name of Diameter Gateway, RADIUS Gateway, HTTP Gateway, Rated Event Formatter, and Rated Event Publisher for each site is unique.

- At least two instances of Rated Event Formatter are configured to allow for failover. For a data persistence-enabled system, configure at least one primary and one secondary instance for each site.

-

Set the following parameter in the ECE_home/config/ece.properties file to false:

loadConfigSettings = falseThe application-configuration data is not loaded into memory when you start the charging server nodes.

-

Add all the details of participant sites in the federation-config section of the ECE Coherence override file (for example, ECE_home/config/charging-coherence-override-prod.xml).

To confirm which ECE Coherence override file is used, see the tangosol.coherence.override value in the ECE_ home/config/ece.properties file. Table 75-2 provides the federation configuration parameter descriptions and default values.

  - Start the Elastic Charging Controller (ECC):

    ./ecc

  - Start the charging server nodes:

    start server

- On the primary production site, run the following commands:

  gridSync start
  gridSync replicate

  The federation service is started and all the existing data is replicated to the backup or remote production sites.

- On the backup sites, do the following:

  - Using the query.sh utility, verify that the customer, balance, configuration, and pricing caches in the backup or remote production sites contain the same number of entries as those in the primary production site.

  - Verify that the charging server nodes in the backup or remote production sites are in the same state as the charging server nodes in the primary production site.

  - Using a JMX editor, configure the following ECE components and the Oracle NoSQL database connection details:

    - Rated Event Formatter
    - Rated Event Publisher
    - Diameter Gateway
    - RADIUS Gateway
    - HTTP Gateway

    Ensure the following:

    - Each site uses unique names for its Diameter Gateway, RADIUS Gateway, HTTP Gateway, Rated Event Formatter, and Rated Event Publisher instances.

    - At least two instances of Rated Event Formatter are configured to allow for failover. For a data persistence-enabled system, configure at least one primary and one secondary instance for each site.

  - Start the following ECE processes and gateways:

    start brmGateway
    start ratedEventFormatter
    start diameterGateway
    start radiusGateway
    start httpGateway

    The remote production sites are up and running with all required data.

  - Run the following command:

    gridSync start

    The federation service is started to replicate the data from the backup or remote production sites to the preferred production site.

    After starting Rated Event Formatter in the remote production sites, copy the CDR files generated by Rated Event Formatter from the remote production sites to the primary production site by using the SFTP utility.
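Before starting the charging server nodes on a backup or remote site, you may want to confirm the two ece.properties values this procedure relies on: loadConfigSettings and tangosol.coherence.override. The following Java sketch assumes that ece.properties uses standard java.util.Properties "key = value" syntax, as the loadConfigSettings = false line suggests. The class name EcePropertiesCheck is hypothetical and is not part of ECE.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Properties;

/** Hypothetical helper: sanity-checks ece.properties on a backup or remote site. */
public class EcePropertiesCheck {

    /** Parses properties text, such as the contents of ece.properties. */
    public static Properties parse(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    /** True only when loadConfigSettings is present and set to false. */
    public static boolean isConfigLoadingDisabled(Properties props) {
        String value = props.getProperty("loadConfigSettings");
        // Properties.load keeps trailing whitespace in values, so trim before comparing.
        return value != null && value.trim().equalsIgnoreCase("false");
    }

    /** Returns the Coherence override file named by tangosol.coherence.override, or null. */
    public static String coherenceOverrideFile(Properties props) {
        String value = props.getProperty("tangosol.coherence.override");
        return value == null ? null : value.trim();
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("usage: EcePropertiesCheck <path to ece.properties>");
            System.exit(2);
        }
        Properties props = parse(Files.readString(Path.of(args[0])));
        if (!isConfigLoadingDisabled(props)) {
            System.out.println("WARNING: loadConfigSettings is not set to false");
        }
        System.out.println("Coherence override file: " + coherenceOverrideFile(props));
    }
}
```

For example, running java EcePropertiesCheck.java ECE_home/config/ece.properties (single-file launch, Java 11 or later) on each backup site prints the override file to edit and warns if application-configuration data would be loaded at startup.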

Including Custom Clients in Your Active-Active Configuration

If your system includes a custom client application that calls the ECE API, you need to add the custom client to your active-active disaster recovery configuration. This enables the active-active system architecture to automatically route requests from your custom client to a backup site when a site failover occurs. To do so, you configure the custom client as an ECE Monitor Framework-compliant node in the ECE cluster.

To add a custom client to an active-active configuration:

- Modify your custom client to use the ECE Monitor Framework:

  - Add this import statement:

    import oracle.communication.brm.charging.monitor.framework.internal.MonitorFramework;

  - Add these lines to the program:

    if (MonitorFramework.isJMXEnabledApp) {
        MonitorFramework monitorFramework =
                (MonitorFramework) context.getBean(MonitorFramework.MONITOR_BEAN_NAME);
        try {
            // Pass null for any node that is not an ECE Monitor Agent node
            monitorFramework.initializeMonitor(null);
        } catch (Exception ex) {
            // Failed to initialize the Monitor Framework; check the log file
            System.exit(-1);
        }
    }
    // ... continue as before

- When you start your custom client, include these Java system properties:

  - -Dcom.sun.management.jmxremote.port set to the port number for enabling JMX RMI connections. Ensure that you specify an unused port number.

  - -Dcom.sun.management.jmxremote.rmi.port set to the port number to which the RMI connector will be bound.

  - -Dtangosol.coherence.member set to the name of the custom client application instance running within the ECE cluster.

  For example:

    java -Dcom.sun.management.jmxremote.port=6666 \
         -Dcom.sun.management.jmxremote.rmi.port=6666 \
         -Dtangosol.coherence.member=customApp1 \
         -jar customApp1.jar

- Edit the ECE_home/config/eceTopology.conf file to include a row for each custom client application instance. For each row, enter the following information:

  - node-name: The name of the JVM process for that node.

  - role: The role of the JVM process for that node.

  - host name: The host name of the physical server machine on which the node resides. For a standalone system, enter localhost.

  - host ip: If your host contains multiple IP addresses, enter the IP address so that Coherence can be pointed to a port.

  - JMX port: The JMX port of the JVM process for that node. By specifying a JMX port number for one node, you expose MBeans for setting performance-related properties and collecting statistics for all node processes. Enter any free port, such as 9999, for the charging server node to be the JMX-management enabled node.

  - start CohMgt: Specify whether you want the node to be JMX-management enabled.

  For example:

    #node-name |role |host name (no spaces!) |host ip |JMX port |start CohMgt |JVM Tuning File
    customApp1 |customApp |localhost | |6666 |false |
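Because the JMX port and the instance name appear both in the custom client's startup command and in its eceTopology.conf row, generating both from one set of values keeps them from drifting apart. This Java sketch is hypothetical (CustomClientTopology is not an ECE class). It reproduces the example row above exactly and assumes, as that example does, that node-name and the tangosol.coherence.member value are the same.

```java
import java.util.List;
import java.util.StringJoiner;

/** Hypothetical helper: derives startup flags and a topology row from shared values. */
public class CustomClientTopology {

    /** JVM system properties from the custom-client startup example. */
    public static List<String> jvmArgs(int jmxPort, int rmiPort, String memberName) {
        return List.of(
                "-Dcom.sun.management.jmxremote.port=" + jmxPort,
                "-Dcom.sun.management.jmxremote.rmi.port=" + rmiPort,
                "-Dtangosol.coherence.member=" + memberName);
    }

    /**
     * Builds one eceTopology.conf row in the column order
     * node-name | role | host name | host ip | JMX port | start CohMgt | JVM Tuning File.
     */
    public static String topologyRow(String nodeName, String role, String hostName,
                                     String hostIp, int jmxPort, boolean startCohMgt,
                                     String jvmTuningFile) {
        // The header comment in eceTopology.conf says "host name (no spaces!)".
        if (hostName.isEmpty() || hostName.chars().anyMatch(Character::isWhitespace)) {
            throw new IllegalArgumentException("host name must be non-empty and contain no spaces");
        }
        StringJoiner row = new StringJoiner(" |");
        row.add(nodeName).add(role).add(hostName).add(hostIp)
           .add(Integer.toString(jmxPort))
           .add(Boolean.toString(startCohMgt))
           .add(jvmTuningFile);
        return row.toString();
    }

    public static void main(String[] args) {
        String member = "customApp1";
        int jmxPort = 6666;
        // Reuse the same name and port in both places, as in the documented example.
        System.out.println("java " + String.join(" ", jvmArgs(jmxPort, jmxPort, member))
                + " -jar customApp1.jar");
        System.out.println(topologyRow(member, "customApp", "localhost", "", jmxPort, false, ""));
    }
}
```

Empty strings stand for the empty host ip and JVM Tuning File columns, which yields the same trailing " |" as the example row.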

Including Offline Mediation Controller in Your Active-Active Configuration

If your system includes Oracle Communications Offline Mediation Controller, you need to add it to your active-active disaster recovery configuration. This enables the active-active system architecture to automatically route requests from Offline Mediation Controller to a backup site when a site failover occurs.

To include Offline Mediation Controller in your active-active configuration:

- On each active production site, do the following:

  - Log in to your ECE driver machine as the rms user.

  - In your ocecesdk/config/client-charging-context.xml file, add the following line to the beans element:

    <import resource="classpath:/META-INF/spring/monitor.framework-context.xml"/>

On each Offline Mediation Controller machine, do the following:

-

Log in to your Offline Mediation Controller machine as the rms user.

-

Add the following lines to your OCOMC_home/bin/nodemgr file:

-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.rmi.port=rmi_port -Dcom.sun.management.jmxremote.port=port

where:

-

rmi_port is set to the port number to which the RMI connector will be bound.

-

port is set to the port number for enabling JMX RMI connections. Ensure that you specify an unused port number.

-

-

In the OCOMC_home/bin/UDCEnvironment file, set JMX_ENABLED_STATUS to true and set JMX_PORT to the desired JMX port number:

#For enabling jmx in an active-active setup JMX_ENABLED_STATUS=true JMX_PORT=9992

-

- On each Offline Mediation Controller machine, restart Node Manager by going to the OCOMC_home/bin directory and running this command:

  ./nodemgr
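After restarting Node Manager, you can check that its JMX port accepts remote connections before relying on it for site failover. This Java sketch uses only the standard javax.management.remote API and the service:jmx:rmi:///jndi/rmi://host:port/jmxrmi URL that the JDK's built-in agent exposes when com.sun.management.jmxremote.port is set. The JDK agent requires authentication unless com.sun.management.jmxremote.authenticate=false is also set, so the sketch accepts optional credentials. JmxReachabilityCheck is a hypothetical name.

```java
import java.io.IOException;
import java.util.Arrays;
import java.util.HashMap;
import java.util.Map;
import javax.management.MBeanServerConnection;
import javax.management.remote.JMXConnector;
import javax.management.remote.JMXConnectorFactory;
import javax.management.remote.JMXServiceURL;

/** Hypothetical helper: confirms that a JVM's remote JMX port is reachable. */
public class JmxReachabilityCheck {

    /** JMX-over-RMI URL for a JVM started with -Dcom.sun.management.jmxremote.port=port. */
    public static String serviceUrl(String host, int port) {
        return "service:jmx:rmi:///jndi/rmi://" + host + ":" + port + "/jmxrmi";
    }

    /**
     * Connects to the remote JVM and returns its MBean domains.
     * Pass null for user when JMX authentication is disabled.
     */
    public static String[] listDomains(String host, int port, String user, String password)
            throws IOException {
        Map<String, Object> env = new HashMap<>();
        if (user != null) {
            env.put(JMXConnector.CREDENTIALS, new String[] {user, password});
        }
        JMXServiceURL url = new JMXServiceURL(serviceUrl(host, port));
        try (JMXConnector connector = JMXConnectorFactory.connect(url, env)) {
            MBeanServerConnection conn = connector.getMBeanServerConnection();
            return conn.getDomains();
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length < 2) {
            System.err.println("usage: JmxReachabilityCheck <host> <port> [user password]");
            System.exit(2);
        }
        String user = args.length >= 4 ? args[2] : null;
        String password = args.length >= 4 ? args[3] : null;
        String[] domains = listDomains(args[0], Integer.parseInt(args[1]), user, password);
        System.out.println("Reachable; MBean domains: " + Arrays.toString(domains));
    }
}
```

Use the value you set for com.sun.management.jmxremote.port in the nodemgr file as the port argument; a connection failure here usually points to a firewall rule, a port clash, or missing credentials.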

Failing Over to a Backup Site (Active-Active)

To fail over to a backup site in an active-active configuration:

- Open a JMX editor, such as JConsole.

- Expand the ECE Monitoring node.

- Expand Agent.

- Expand Operations.

- Run the failoverSite() operation, entering the name of the failed site as its parameter.

- Repeat steps 1 through 7 in the "Failing Over to a Backup Site" section.

For Rated Event Formatter failover when data persistence is enabled, follow the steps in "Resolving Rated Event Formatter Instance Outages".

The former backup site or one of the remote production sites is now the new preferred production site. When the original preferred site is functioning again, run the recoverSite() operation so that the site traffic is routed back to it. For more information, see "Switching Back to the Original Production Site (Active-Active)".

Switching Back to the Original Production Site (Active-Active)

To switch back to the original production site in an active-active system:

- Repeat steps 1 through 16 in the "Switching Back to the Original Production Site" section.

- Open a JMX editor, such as JConsole.

- Expand the ECE Monitoring node.

- Expand Agent.

- Expand Operations.

- Run the recoverSite() operation, entering the name of the recovered site as its parameter.

- If data persistence is enabled and you failed over your Rated Event Formatter instance at the original site to a secondary instance at a remote site, restart any primary and secondary Rated Event Formatter instances at the original site.
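The failoverSite() and recoverSite() steps can also be scripted with the standard JMX client API instead of JConsole. This sketch assumes that both operations take the site name as a single String parameter. The ObjectName of the ECE Monitoring Agent MBean is not given in this section, so copy it from the MBeanInfo tab in JConsole. To keep the example runnable on its own, it registers a hypothetical AgentStub MBean locally; against a real site, you would pass the MBeanServerConnection obtained from JMXConnectorFactory.connect instead.

```java
import java.io.IOException;
import java.lang.management.ManagementFactory;
import javax.management.JMException;
import javax.management.MBeanServer;
import javax.management.MBeanServerConnection;
import javax.management.ObjectName;
import javax.management.StandardMBean;

public class SiteFailoverClient {

    /**
     * Invokes a site-level operation that takes one String argument,
     * such as failoverSite or recoverSite (assumed signature).
     */
    public static Object invokeSiteOperation(MBeanServerConnection conn, ObjectName agent,
                                             String operation, String siteName)
            throws JMException, IOException {
        return conn.invoke(agent, operation,
                new Object[] {siteName},
                new String[] {String.class.getName()});
    }

    /** Hypothetical stand-in for the Agent MBean, used only to run this sketch locally. */
    public interface AgentStubMBean {
        void failoverSite(String siteName);
        void recoverSite(String siteName);
        String getLastAction();
    }

    public static class AgentStub implements AgentStubMBean {
        private volatile String lastAction = "none";
        @Override public void failoverSite(String siteName) { lastAction = "failover:" + siteName; }
        @Override public void recoverSite(String siteName) { lastAction = "recover:" + siteName; }
        @Override public String getLastAction() { return lastAction; }
    }

    public static void main(String[] args) throws Exception {
        // Local demo: register the stub in this JVM's platform MBean server.
        MBeanServer server = ManagementFactory.getPlatformMBeanServer();
        ObjectName agent = new ObjectName("example.ece:type=AgentStub"); // placeholder name
        server.registerMBean(new StandardMBean(new AgentStub(), AgentStubMBean.class), agent);

        invokeSiteOperation(server, agent, "failoverSite", "siteA");
        System.out.println(server.getAttribute(agent, "LastAction")); // failover:siteA
        invokeSiteOperation(server, agent, "recoverSite", "siteA");
        System.out.println(server.getAttribute(agent, "LastAction")); // recover:siteA
    }
}
```

Passing an explicit signature array lets the MBean server select the correct overload; if the real operations declare a different parameter type, adjust that array to match what JConsole shows.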

Processing Usage Requests in the Site Received

To configure ECE active-active mode to process usage requests at the site that receives them, regardless of the subscriber's preferred site, perform the following steps:

- Access the ECE configuration MBeans in a JMX editor, such as JConsole. See "Accessing ECE Configuration MBeans".

- Expand the ECE Configuration node.

- Expand charging.brsConfigurations.default.

- Expand Attributes.

- Set the skipActiveActivePreferredSiteRouting attribute to true.

Note:

By default, the skipActiveActivePreferredSiteRouting attribute is set to false.
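The skipActiveActivePreferredSiteRouting change can also be applied and verified programmatically with MBeanServerConnection.setAttribute and getAttribute. This sketch registers a hypothetical BrsConfigStub MBean so that it runs standalone; the stub exposes the attribute as SkipActiveActivePreferredSiteRouting because standard MBeans derive attribute names from their getter methods. For the real charging.brsConfigurations.default MBean, use the exact ObjectName and attribute name that JConsole displays.

```java
import java.io.IOException;
import java.lang.management.ManagementFactory;
import javax.management.Attribute;
import javax.management.JMException;
import javax.management.MBeanServer;
import javax.management.MBeanServerConnection;
import javax.management.ObjectName;
import javax.management.StandardMBean;

public class PreferredSiteRoutingToggle {

    /** Writes a boolean attribute, then reads it back and returns the stored value. */
    public static boolean setAndVerify(MBeanServerConnection conn, ObjectName config,
                                       String attributeName, boolean value)
            throws JMException, IOException {
        conn.setAttribute(config, new Attribute(attributeName, value));
        return (Boolean) conn.getAttribute(config, attributeName);
    }

    /** Hypothetical stand-in for charging.brsConfigurations.default, for local runs only. */
    public interface BrsConfigStubMBean {
        boolean getSkipActiveActivePreferredSiteRouting();
        void setSkipActiveActivePreferredSiteRouting(boolean skip);
    }

    public static class BrsConfigStub implements BrsConfigStubMBean {
        private volatile boolean skip = false; // documented default is false
        @Override public boolean getSkipActiveActivePreferredSiteRouting() { return skip; }
        @Override public void setSkipActiveActivePreferredSiteRouting(boolean s) { skip = s; }
    }

    public static void main(String[] args) throws Exception {
        MBeanServer server = ManagementFactory.getPlatformMBeanServer();
        ObjectName name = new ObjectName("example.ece:type=BrsConfigStub"); // placeholder name
        server.registerMBean(new StandardMBean(new BrsConfigStub(), BrsConfigStubMBean.class), name);
        boolean stored = setAndVerify(server, name, "SkipActiveActivePreferredSiteRouting", true);
        System.out.println("skip preferred-site routing: " + stored); // true
    }
}
```

Reading the value back after the write confirms that the MBean accepted the change rather than silently ignoring it.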

Replicating ECE Cache Data

In an active-hot standby system, a segmented active-active system, or an active-active system, when you configure or perform disaster recovery, you replicate the ECE cache data to the participant sites by using the gridSync utility.

To replicate the ECE cache data:

- Go to the ECE_home/bin directory.

- Start ECC:

  ./ecc

- Do one of the following:

  - To start asynchronous replication to a specific participant site and also replicate all the existing ECE cache data to that site, run the following commands:

    gridSync start [remoteClusterName]
    gridSync replicate [remoteClusterName]

    where remoteClusterName is the name of the cluster in a participant site.

  - To start asynchronous replication to all the participant sites and also replicate all the existing ECE cache data to all of them, run the following commands:

    gridSync start
    gridSync replicate

See "gridSync" for more information on the gridSync utility.

Migrating ECE Notifications

When you fail over to a backup site or switch back to the primary site, you must migrate the notifications to the destination site.

Note:

If you are using Apache Kafka for notification handling, notifications are not migrated to the destination site. Apache Kafka retains the notifications, and they appear in the original site or components when those become active.

To migrate ECE notifications:

- Access the ECE configuration MBeans in a JMX editor, such as JConsole. See "Accessing ECE Configuration MBeans".

- Expand the ECE Configuration node.

- Expand systemAdmin.

- Expand Operations.

- Select triggerFailedClusterServiceContextEventMigration.

- In the method's failedClusterName field, enter the name of the failed site's cluster.

- Click the triggerFailedClusterServiceContextEventMigration button.

All the internal BRM notifications are migrated to the destination site. In an active-active system, the external notifications are also migrated to the destination site. If you cannot establish the WebLogic cluster subscription due to a site failover, you should restart Diameter Gateway on the destination site. If a site recovers from a failover, you should restart all the Diameter Gateway instances in the cluster.

About Configuring Oracle NoSQL Database Data Store Nodes