15 Building Your Own Images

Learn how to build your own images of the Oracle Communications Billing and Revenue Management (BRM), Elastic Charging Engine (ECE), Pipeline Configuration Center, Pricing Design Center (PDC), Billing Care, and Business Operations Center applications.

The Podman build commands in this chapter reference the Dockerfile and related scripts as provided in the oc-cn-docker-files-15.0.x.0.0.tgz package. Before running a build command, make sure that it points to your own version of the Dockerfile and related scripts.

Sample Dockerfiles included in the BRM cloud native deployment package (oc-cn-docker-files-15.0.x.0.0.tgz) are examples that depict how default images are built for BRM. If you want to build your own images, refer to the sample Dockerfiles shipped with the product as a reference. Create your own Dockerfiles and then build your images.

Caution:

The Dockerfiles and related scripts are provided for reference only. You can refer to them to build or extend your own images. Support is restricted to core product issues only and no support will be provided for custom Dockerfiles and scripts.

Building BRM Server Images

To build images for BRM Server, your staging area ($PIN_HOME) must be available from where the images are built. After you unpack oc-cn-docker-files-15.0.x.0.0.tgz, the BRM Server directory structure will be oc-cn-docker-files/ocbrm.

Note:

If you are using Podman to build your images, pass the --format docker flag with the podman build command.

Building your own BRM Server images involves these high-level steps:

- You build the BRM Server base image. See "Building Your BRM Server Base Image".

- You build images for each BRM Server component. See "Building Images of BRM Server Components".

- You build the Web Services Manager image. See "Building Web Services Manager Images".

- You build the BRM REST Services Manager image. See "Building BRM REST Services Manager Images".

- You containerize the Email Data Manager. See "Containerization of Email Data Manager".

- You containerize the roaming pipeline. See "Containerization of Roaming Pipeline".

- You build and deploy Vertex Manager. See "Building and Deploying Vertex Manager".

Building Your BRM Server Base Image

To make your directory structure ready for building base images:

- Edit the $PIN_HOME/bin/orapki binary to replace the staging Java path with ${JAVA_HOME}.

- Create the $PIN_HOME/installer directory.

- If you're behind a proxy server, set the $PROXY variable:

      export PROXY=ProxyHost:Port

- Download the Java binary and then copy it to $PIN_HOME. See "BRM Software Compatibility" for the latest supported version of Java.

- Download the Perl binary and then copy it to $PIN_HOME. See "BRM Software Compatibility" for the latest supported version of Perl.

- For your database client:

  - Copy oracle_client_response_file.rsp (64 bit), downloadOracleClient.sh, and waitForOracleClientInst.sh from oc-cn-docker-files/ocbrm/base_images to $PIN_HOME.

  - Modify these parameters in the downloadOracleClient.sh file:

    - ORACLE_CLIENT_ZIP: Enter the binary name.

    - REPOSITORY_URL: Enter the location to fetch the database client binary.

  - If the db_client binary is already downloaded, copy the binary to the $PIN_HOME/installer directory.

After preparing your directory structure, build your BRM Server base image:

- For database client 12CR2 (64 Bit) + Java + Perl, enter this command:

      podman build --format docker --build-arg PROXY=$PROXY --tag db_client_and_java_perl:15.0.x.0.0 --file DockerfileLocation/Dockerfile_db_client_and_java_perl .

- For database client 12CR2 (64 Bit) + Java, enter this command:

      podman build --format docker --build-arg PROXY=$PROXY --tag db_client_and_java:15.0.x.0.0 --file DockerfileLocation/Dockerfile_db_client_and_java .

- For Java, enter this command:

      podman build --format docker --build-arg PROXY=$PROXY --tag java:15.0.x.0.0 --file DockerfileLocation/Dockerfile_java .

- For Java + Perl, enter this command:

      podman build --format docker --build-arg PROXY=$PROXY --tag java_perl:15.0.x.0.0 --file DockerfileLocation/Dockerfile_java_perl .

where DockerfileLocation is the path to the directory that contains the Dockerfile.
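The four base-image commands differ only in the image tag and the Dockerfile suffix, so they can be generated from one template. The following is a minimal sketch under stated assumptions: the function name base_image_build_cmd and the DOCKERFILE_DIR variable (standing in for the Dockerfile location) are illustrative and are not part of the BRM package. The function only prints the command so that you can review it before running it.

```shell
# Sketch: print the podman build command for one base-image variant.
# base_image_build_cmd and DOCKERFILE_DIR are illustrative names (assumptions).
base_image_build_cmd() {
  local variant="$1"   # db_client_and_java_perl | db_client_and_java | java | java_perl
  local version="$2"   # the release tag you use in place of 15.0.x.0.0
  case "$variant" in
    db_client_and_java_perl|db_client_and_java|java|java_perl) ;;
    *) echo "unknown base image variant: $variant" >&2; return 1 ;;
  esac
  # PROXY and DOCKERFILE_DIR are read from the environment; both may be empty.
  printf 'podman build --format docker --build-arg PROXY=%s --tag %s:%s --file %s/Dockerfile_%s .\n' \
    "${PROXY:-}" "$variant" "$version" "${DOCKERFILE_DIR:-.}" "$variant"
}
```

For example, with PROXY and DOCKERFILE_DIR set, calling base_image_build_cmd java_perl followed by your release tag prints the Java + Perl command, which you can then copy into your terminal from the staging area.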

Note:

If you use an existing database with custom-built images, do the following:

- Override the ocbrm.use_oracle_brm_images key in the Helm chart with a value of false.

- Set the ocbrm.existing_rootkey_wallet key to true.

- Copy your client wallet files to the oc-cn-helm-chart/existing_wallet directory.

Building Images of BRM Server Components

The oc-cn-docker-files-15.0.x.0.0.tgz package includes references to all of the Dockerfiles and scripts needed to build images of BRM Server components (except for oraclelinux:8).

To build an image of a BRM Server component:

- Copy these scripts from the oc-cn-docker-files/ocbrm directory to your staging area at $PIN_HOME:

  - entrypoint.sh

  - createWallet.sh

  - cm/preStopHook.sh_cm

  - cm/postStartHook.sh

  - cm/updatePassword.sh

  - eai_js/preStopHook.sh_eai

- Do one of these:

  - For the batch pipeline, roaming pipeline, and real-time pipeline, copy entrypoint.sh and createWallet.sh to $PIN_HOME/.., and copy $PIN_HOME/../setup/BRMActions.jar to the $PIN_HOME/jars directory for building the images.

  - For all other components, copy the $PIN_HOME/../setup/BRMActions.jar file to $PIN_HOME.

- Set these environment variables:

  - $PIN_HOME: Set this to your staging area.

  - $PERL_HOME: Set this to the path of Perl. See "BRM Software Compatibility" for the latest supported version of Perl.

  - $JAVA_HOME: Set this to the Java path. See "BRM Software Compatibility" for the latest supported version of Java.

- Build the image for your BRM component.

For example, to build a CM image, you'd enter this:

podman build --format docker --tag cm:15.0.x.0.0 --build-arg STAGE_PIN_HOME=$PIN_HOME --build-arg STAGE_JAVA_HOME=$JAVA_HOME --build-arg STAGE_PERL_HOME=$PERL_HOME --file DockerfileLocation/Dockerfile .

To build a roaming pipeline image, you'd enter this:

podman build --format docker --tag roam_pipeline:$BRM_VERSION --build-arg STAGE_PERL_HOME=StagePerlPath .

where StagePerlPath is the path to the Perl files in your staging area at $PIN_HOME.

To build a dm-oracle image, you'd enter this:

podman build --format docker --force-rm=true --no-cache=true --tag dm_oracle:15.0.x.0.0 --file DockerfileLocation/Dockerfile .

where DockerfileLocation is the path to the Dockerfiles for your BRM component.

Note:

Build the batch and real-time pipeline images from the $PIN_HOME/.. directory.
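The script-copy step for non-pipeline components can be sketched as a small helper. This is only an illustration: stage_component_scripts is a hypothetical name, and copying the cm/ and eai_js/ scripts directly into the top level of $PIN_HOME is an assumption about the layout that the sample Dockerfiles expect, so check your own Dockerfiles before relying on it.

```shell
# Sketch: copy the helper scripts from the unpacked package into the staging area.
# stage_component_scripts is a hypothetical helper; flattening cm/ and eai_js/
# into the destination directory is an assumption, not documented behavior.
stage_component_scripts() {
  local src="$1"    # path to oc-cn-docker-files/ocbrm
  local dest="$2"   # staging area ($PIN_HOME)
  local f
  for f in entrypoint.sh createWallet.sh cm/preStopHook.sh_cm cm/postStartHook.sh \
           cm/updatePassword.sh eai_js/preStopHook.sh_eai; do
    # Stop at the first missing script instead of building an incomplete staging area.
    if [ ! -f "$src/$f" ]; then
      echo "missing script: $src/$f" >&2
      return 1
    fi
    cp "$src/$f" "$dest/" || return 1
  done
}
```

A typical call would be stage_component_scripts oc-cn-docker-files/ocbrm "$PIN_HOME", run before the podman build command for the component.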

Building Web Services Manager Images

To containerize images for Web Services Manager, your staging area ($PIN_HOME) must be available from where the Docker images are built.

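You can create one of these Web Services Manager containers:

- Web Services Manager for Apache Tomcat. See "Building and Deploying Web Services Manager for Apache Tomcat Image".

- Web Services Manager for WebLogic Server. See "Building and Deploying Web Services Manager for WebLogic Server Image".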

Building and Deploying Web Services Manager for Apache Tomcat Image

The Web Services Manager Dockerfile is based on the official Apache Tomcat image. The sample Web Services Manager Dockerfile includes both the XML element-based and XML string-based SOAP Web Services implementation. Use this Dockerfile to build an image that can call any standard BRM opcode that is exposed as a SOAP Web service.

The Web Services Manager Infranet.properties configuration is available as a Kubernetes ConfigMap. To expose a custom opcode as a Web service, specify the path to your customized WAR file in the Dockerfile. When multiple pod replicas are configured, each pod runs its own copy of Apache Tomcat. By default, Web Services Manager is exposed as a Kubernetes NodePort service on port 30080.

Containerizing the Web Services Manager for Tomcat image involves these high-level steps:

- Building the Web Services Manager Tomcat Image

- Deploying the Web Services Manager Tomcat Image in Kubernetes

Building the Web Services Manager Tomcat Image

To build the Web Services Manager for Apache Tomcat image:

- Download the JAX-WS reference implementation JARs from JAX-WS Java API for XML Web Services (https://javaee.github.io/metro-jax-ws/).

- Copy the jaxws-ri-2.3.x.zip file to your staging area at $PIN_HOME.

- Unzip the jaxws-ri-2.3.x.zip file.

- Download Apache Tomcat 9 from the Apache Tomcat website:

https://tomcat.apache.org/download-90.cgi

See "Additional BRM Software Requirements" in BRM Compatibility Matrix for information about compatible versions of Apache Tomcat.

- Copy apache-tomcat-9.x.tar.gz to your staging area at $PIN_HOME.

- Copy these files from the oc-cn-docker-files directory to your staging area at $PIN_HOME:

  - wsm_entrypoint.sh

  - Dockerfile

  - context.xml

  - BRMActions.jar

- Update Tomcat in the Dockerfile to the latest version.

- Build the Web Services Manager image by entering this command:

      podman build --format docker --tag brm_wsm:$BRM_VERSION .

Deploying the Web Services Manager Tomcat Image in Kubernetes

To deploy the Web Services Manager for Tomcat image in Kubernetes:

- Configure your Web services by updating the configmap_infranet_properties_wsm.yaml file.

- In the override-values.yaml file for oc-cn-helm-chart, set the following values:

  - ocbrm.wsm.deployment.tomcat.isEnabled: Set this to true.

  - ocbrm.wsm.deployment.tomcat.walletPassword: Set this to the Base64-encoded wallet password for the Web Services Manager image.

  - ocbrm.wsm.deployment.tomcat.basicAuth: Optionally, set this to true to enable BASIC authentication.

- Optionally, for BASIC authentication, configure users in the wsm_config/tomcat-users.xml file for oc-cn-helm-chart:

  - Open tomcat-users.xml in a text editor.

  - Locate the following lines and specify the login details of the user:

        <role rolename="role"/>
        <user username="username" password="password" roles="role"/>

    where:

    - role is the role with permissions to access Web services, for example, brmws.

    - username is the user name for accessing Web services.

    - password is the password for accessing Web services.

  - Save and close the file.

See "User File Format" under MemoryRealm in the Apache Tomcat documentation for more information about the format of tomcat-users.xml.

- Deploy the BRM Helm chart:

      helm install ReleaseName oc-cn-helm-chart --namespace NameSpace --values OverrideValuesFile

  where:

  - ReleaseName is the release name, which is used to track this installation instance.

  - NameSpace is the namespace in which to create BRM Kubernetes objects.

  - OverrideValuesFile is the path to the YAML file that overrides the default configurations in the BRM Helm chart's values.yaml file.
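The walletPassword key expects a Base64-encoded value. A minimal sketch of producing one on the command line follows; encode_b64 is an illustrative helper name, not something shipped with BRM.

```shell
# Sketch: Base64-encode a secret for use in a Helm override value.
# encode_b64 is an illustrative helper name (assumption).
encode_b64() {
  # printf avoids the trailing newline that echo would add to the encoded value;
  # tr removes the line wrapping that some base64 implementations insert.
  printf '%s' "$1" | base64 | tr -d '\n'
}
```

For instance, encode_b64 weblogic prints d2VibG9naWM=. Paste the output of encode_b64 for your own wallet password into the ocbrm.wsm.deployment.tomcat.walletPassword key.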

Building and Deploying Web Services Manager for WebLogic Server Image

To deploy and use Web Services Manager on WebLogic Server, you should be familiar with:

- Oracle WebLogic Server 12.2.1.3. See the Oracle WebLogic Server 12.2.1.3 documentation (https://docs.oracle.com/middleware/12213/wls/index.html).

- Oracle WebLogic Kubernetes Operator. See the WebLogic Kubernetes Operator documentation (https://oracle.github.io/weblogic-kubernetes-operator/).

The image for deploying BRM Web Services Manager on Oracle WebLogic Server 12.2.1.3 uses the domain-in-image approach. The image includes a WebLogic domain named brmdomain. When you build the image, the BRM SOAP Web Services application WAR files are deployed in this domain.

Containerizing the Web Services Manager for WebLogic Server image involves these high-level steps:

Building the Web Services Manager WebLogic Image

The BRM Web Services Manager on WebLogic Server image uses two images that run two containers inside each WebLogic Server pod.

To build the brm_wsm_wls:15.0.x.0.0 and brm_wsm_wl_init:15.0.x.0.0 images:

- Copy the contents of the oc-cn-docker-files/ocbrm/brm_soap_wsm/weblogic/dockerfiles directory to your staging area at $PIN_HOME.

- Customize the WebLogic domain-related properties by editing the dockerfiles/properties/docker-build/domain.properties file. For example:

      DOMAIN_NAME=brmdomain
      ADMIN_PORT=7111
      ADMIN_NAME=admin-server
      ADMIN_HOST=wlsadmin
      MANAGED_SERVER_PORT=8111
      MANAGED_SERVER_NAME_BASE=managed-server
      CONFIGURED_MANAGED_SERVER_COUNT=3
      CLUSTER_NAME=cluster-1
      DEBUG_PORT=8453
      DB_PORT=1527
      DEBUG_FLAG=true
      PRODUCTION_MODE_ENABLED=true
      CLUSTER_TYPE=DYNAMIC
      JAVA_OPTIONS=-Dweblogic.StdoutDebugEnabled=false
      T3_CHANNEL_PORT=30012
      T3_PUBLIC_ADDRESS=kubernetes
      IMAGE_TAG=brm_wsm_wls:$BRM_VERSION

- Set the WebLogic domain user name and password by editing the dockerfiles/properties/docker-build/domain_security.properties file. For example:

      username=UserName
      password=Password

  Note:

  It is strongly recommended that you set a new user name and password when building the image. For details about securing the domain_security.properties file, see https://github.com/oracle/docker-images/tree/master/OracleWebLogic/samples/12213-domain-home-in-image.

- Build the brm_wsm_wls:15.0.x.0.0 image by running the build.sh script.

  The script creates an image based on the custom tag defined in dockerfiles/properties/docker-build/domain.properties. By default, it creates the brm_wsm_wls:15.0.x.0.0 image and then deploys the BRMWebServices.war and infranetwebsvc.war files.

  Note:

  If you don't want to deploy either BRMWebServices.war or infranetwebsvc.war, modify the dockerfiles/container-scripts/app-deploy.py script.

- Build the brm_wsm_wl_init:15.0.x.0.0 image by running this command:

      podman build --format docker --tag brm_wsm_wl_init:15.0.x.0.0 --file Dockerfile_init_wsm .

  This image runs an init container, which populates the Oracle wallet that is used by Web Services Manager to connect to the CM.

Deploying the Web Services Manager WebLogic Image in Kubernetes

You deploy the WebLogic Operator Helm chart so that Web Services Manager can work in a Kubernetes environment.

To deploy the Web Services Manager for WebLogic Server image in Kubernetes:

- Clone the Oracle WebLogic Kubernetes Operator Git project:

      git clone https://github.com/oracle/weblogic-kubernetes-operator

- Modify these keys in the override-values.yaml file for oc-cn-helm-chart:

  Note:

  Ensure that you set the wsm.deployment.weblogic.enabled key to true.

      wsm:
        deployment:
          weblogic:
            enabled: true
            imageName: brm_wsm_wls
            initImageName: brm_wsm_wl_init
            imageTag: $BRM_VERSION
            username: d2VibG9naWM=
            password: password
            replicaCount: 1
            adminServerNodePort: 30611
            log_enabled: false
            minPoolSize: 1
            maxPoolSize: 8
            poolTimeout: 30000

- If the WebLogic user name and password were updated when building the brm_wsm_wls:15.0.x.0.0 image, also update the Base64-encoded WebLogic user name and password in these keys:

      .Values.ocbrm.wsm.deployment.weblogic.username
      .Values.ocbrm.wsm.deployment.weblogic.password

- Add the BRM WebLogic Server namespace in the kubernetes/charts/weblogic-operator/values.yaml file:

      domainNamespaces:
        - "default"
        - "NameSpace"

- Deploy the WebLogic Operator Helm chart:

      helm install weblogic-operator kubernetes/charts/weblogic-operator --namespace WebOperatorNameSpace --values WebOperatorOverrideValuesFile --wait

  where:

  - WebOperatorNameSpace is the namespace in which to create WebLogic Operator Kubernetes objects.

  - WebOperatorOverrideValuesFile is the path to a YAML file that overrides the default configurations in the WebLogic Operator Helm chart's values.yaml file.

- Deploy the BRM Helm chart:

      helm install ReleaseName oc-cn-helm-chart --namespace NameSpace --values OverrideValuesFile

  where:

  - ReleaseName is the release name, which is used to track this installation instance.

  - NameSpace is the namespace in which oc-cn-helm-chart will be installed.

  - OverrideValuesFile is the path to a YAML file that overrides the default configurations in the BRM Helm chart's values.yaml file.

Updating the BRM Web Services Manager Configuration

Update the basic configurations for BRM Web Services Manager by editing the Kubernetes ConfigMap (configmap_infranet_properties_wsm_wl.yaml). After updating the configuration, restart your WebLogic Server pods.

Restarting the WebLogic Server Pods

To restart your WebLogic Server pods:

- Stop the WebLogic Server pods by doing this:

  - In the domain_brm_wsm.yaml file, set the serverStartPolicy key to NEVER.

  - Update your Helm release:

        helm upgrade ReleaseName oc-cn-helm-chart --namespace NameSpace --values OverrideValuesFile

    where NameSpace is the namespace in which oc-cn-helm-chart will be installed.

- Start the WebLogic Server pods by doing this:

  - In the domain_brm_wsm.yaml file, set the serverStartPolicy key to IF_NEEDED.

  - Update your Helm release:

        helm upgrade ReleaseName oc-cn-helm-chart --namespace NameSpace --values OverrideValuesFile
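Editing serverStartPolicy by hand twice per restart is easy to get wrong, so the edit can be scripted. The sketch below is a hedged illustration: set_server_start_policy is a hypothetical helper, and it assumes that the key appears on its own line in the form "serverStartPolicy: VALUE". It accepts only the two values used in this procedure.

```shell
# Sketch: set serverStartPolicy in a domain YAML file such as domain_brm_wsm.yaml.
# set_server_start_policy is a hypothetical helper; it assumes the key sits on
# its own line as "serverStartPolicy: VALUE".
set_server_start_policy() {
  local file="$1" policy="$2" tmp
  case "$policy" in
    NEVER|IF_NEEDED) ;;
    *) echo "unsupported policy: $policy" >&2; return 1 ;;
  esac
  tmp="$(mktemp)" || return 1
  # Keep the original indentation and replace only the value.
  sed -E "s/^([[:space:]]*serverStartPolicy:).*/\1 ${policy}/" "$file" > "$tmp" \
    || { rm -f "$tmp"; return 1; }
  # Write back through cat so that the permissions of the original file are kept.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

A restart then becomes: set the policy to NEVER, run helm upgrade, set the policy to IF_NEEDED, and run helm upgrade again.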

Scaling Your WebLogic Managed Server

The default configuration starts one WebLogic Managed Server pod. To modify the configuration to start up to three pods, do this:

- In the oc-cn-helm-chart/values.yaml file, set the .Values.ocbrm.wsm.deployment.weblogic.replicaCount key to the number of WebLogic Managed Server pods to start: 1, 2, or 3.

- Update your Helm release:

      helm upgrade ReleaseName oc-cn-helm-chart --namespace NameSpace --values OverrideValuesFile

You set the maximum number of managed servers in the BRM Web Services Manager image by modifying the CONFIGURED_MANAGED_SERVER_COUNT property in the dockerfiles/properties/docker-build/domain.properties file.
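Because the replica count must not exceed the number of managed servers configured in the image, it can help to validate the value before upgrading. The following sketch is illustrative only: scale_wsm_cmd is a hypothetical helper, its default upper bound of 3 mirrors the sample CONFIGURED_MANAGED_SERVER_COUNT value, and it passes the count with Helm's --set flag instead of editing values.yaml, which is an alternative to the procedure above and not the documented method. The function prints the command so that you can review it first.

```shell
# Sketch: validate a replica count and print the matching helm upgrade command.
# scale_wsm_cmd is a hypothetical helper; the default maximum of 3 is an
# assumption taken from the sample CONFIGURED_MANAGED_SERVER_COUNT value.
scale_wsm_cmd() {
  local release="$1" namespace="$2" values="$3" replicas="$4" max="${5:-3}"
  case "$replicas" in
    ''|*[!0-9]*) echo "replica count must be a positive integer" >&2; return 1 ;;
  esac
  if [ "$replicas" -lt 1 ] || [ "$replicas" -gt "$max" ]; then
    echo "replica count must be between 1 and $max" >&2
    return 1
  fi
  printf 'helm upgrade %s oc-cn-helm-chart --namespace %s --values %s --set ocbrm.wsm.deployment.weblogic.replicaCount=%s\n' \
    "$release" "$namespace" "$values" "$replicas"
}
```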

Containerization of Email Data Manager

The Email Data Manager (DM) enables you to automatically send notifications and invoices to your customers by email. The Email DM uses the Sendmail client to forward emails to Postfix, which is the SMTP server. In turn, Postfix sends the emails to your customers.

The Email DM container includes the Sendmail client, and Postfix runs on the Kubernetes host. You must install and configure Postfix on your Kubernetes host.

To configure your cm pod to point to the Email DM, add this key to the oc-cn-helm-chart/values.yaml file:

ocbrm.dm_email.deployment.smtp: EmailHostName

where EmailHostName is the hostname of the server on which the Email DM is deployed. For example: em389.us.example.com.

To configure the Kubernetes host or SMTP server to accept data from the Email DM, do this:

- Log in as the root user to the Kubernetes host.

- Add the IP address for the Kubernetes host to the /etc/postfix/main.cf file:

      inet_interfaces=localhost, HostIPAddress

  For example, if the IP address of the Kubernetes host is 10.242.155.149:

      inet_interfaces=localhost, 10.242.155.149

- Retrieve the container network configuration by running this command on the Kubernetes host:

      /sbin/ifconfig cni0 | grep netmask | awk '{print$2"\n"$4}'

  The output will be similar to this:

      10.244.0.1
      255.255.255.0

  where 10.244.0.1 is the Kubernetes host IP address in the container network and 255.255.255.0 is the netmask.

- Edit the mynetworks field in the /etc/postfix/main.cf file to include the Kubernetes network in the list of trusted SMTP clients. If the Kubernetes host IP and Email DM container IP are in different networks, add both networks to the mynetworks field:

      mynetworks = TrustedNetworks

  where TrustedNetworks is the list of IP addresses or networks of the SMTP clients that are allowed to relay mail through Postfix.

  For example:

      mynetworks = 168.100.189.0/28, 127.0.0.0/8, 10.244.0.0/24

- Do one of these:

  - If Postfix is already running on the host, run this command:

        systemctl restart postfix.service

  - If Postfix isn't running on the host, run this command:

        systemctl start postfix.service

Note:

In a multi-node environment, you can configure Postfix on the primary node or on any one node.
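The mynetworks entry for the container network is the ifconfig output rewritten in CIDR notation: the address is masked with the netmask, and the prefix length is the number of set bits in the netmask. A minimal sketch of that arithmetic follows; to_cidr is an illustrative helper name, and it assumes a valid IPv4 address and a contiguous netmask.

```shell
# Sketch: derive the network in CIDR form from an IPv4 address and a netmask.
# to_cidr is an illustrative helper (assumption); input validation is omitted.
to_cidr() {
  local ip="$1" mask="$2" i1 i2 i3 i4 m1 m2 m3 m4 m bits
  # Split both dotted-quad values into their four octets.
  IFS=. read -r i1 i2 i3 i4 <<EOF
$ip
EOF
  IFS=. read -r m1 m2 m3 m4 <<EOF
$mask
EOF
  bits=0
  for m in "$m1" "$m2" "$m3" "$m4"; do
    # Count the set bits in each netmask octet to get the prefix length.
    while [ "$m" -gt 0 ]; do
      bits=$((bits + (m & 1)))
      m=$((m >> 1))
    done
  done
  # The network address is the bitwise AND of the address and the netmask.
  printf '%d.%d.%d.%d/%d\n' \
    "$((i1 & m1))" "$((i2 & m2))" "$((i3 & m3))" "$((i4 & m4))" "$bits"
}
```

With the sample output shown earlier, to_cidr 10.244.0.1 255.255.255.0 prints 10.244.0.0/24, which is the container network entry in the mynetworks example.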

Containerization of Roaming Pipeline

Roaming allows a wireless network operator to provide services to mobile customers from another wireless network. For example, when a mobile customer makes a phone call from outside the home network, roaming allows the customer to access, through a visited wireless network operator, the same wireless services that they have with their home network provider.

You feed the input files for the roaming pipeline through a Kubernetes PersistentVolumeClaim (PVC). The EDR output files are written to a PVC, where the rel-daemon pod can consume them. When building the roaming pipeline image, pass the Perl path as a build argument (--build-arg STAGE_PERL_HOME).

To containerize the roaming pipeline, update the configmap_infranet_properties_rel_daemon.yaml file to specify how to load your rated CDR output files. For example:

    batch.random.events TEL, ROAM
    ROAM.max.at.highload.time 4
    ROAM.max.at.lowload.time 2
    ROAM.file.location /oms/ifw/data/roamout
    ROAM.file.pattern test*.out
    ROAM.file.type STANDARD

Note:

The input file to the splitter pipeline must start with Roam_.

Building and Deploying Vertex Manager

To deploy Vertex Manager (dm-vertex), you layer the dm-vertex image with the libraries for Vertex Communications Tax Q Series (CTQ) or Vertex Sales Tax Q Series (STQ). For the list of supported library versions, see "Additional BRM Software Requirements" in BRM Compatibility Matrix.

Deploying with Vertex Communications Tax Q Series

You deploy Vertex Manager with Vertex CTQ by doing the following:

- Building the new Vertex Manager image by layering it with Vertex CTQ libraries:

  - Copy the entire Vertex CTQ installation directory to the $PIN_HOME directory, where $PIN_HOME is set to the path of your staging area.

  - Update the paths in the 64bit/bin/ctqcfg.xml, 64bit/cfg/ctqcfg.xml, and other Vertex CTQ files present in the Vertex CTQ installation directory. For example:

        <configuration name="CTQ Test">
          <fileControl>
            <updatePath>/oms/vertex/64bit/dat</updatePath>
            <archivePath>/oms/vertex/64bit/dat</archivePath>
            <callFilePath>/oms/vertex/64bit/dat</callFilePath>
            <reportPath>/oms/vertex/64bit/rpt</reportPath>
            <logPath>/oms/vertex/64bit/log</logPath>
          </fileControl>

  - In your copied Vertex CTQ installation directory, update the 64bit/bin/odbc/odbc.ini file. For example:

    Note:

    Set the Driver and TNSNamesFile entries to the file system path inside the pod.

        [CtqTestOracle]
        Description=Vertex, Inc. 8.0 Oracle Wire Protocol
        Driver=/oms/vertex/64bit/bin/odbc/lib/VXor827.so
        …
        HostName=DBhostname
        LogonID=DBuser
        PortNumber=1521
        Password=DBpassword
        ServerName=//IPaddress:1521/DBalias
        SID=DBalias
        TNSNamesFile=/oms/ora_k8/tnsnames.ora

    where:

    - DBhostname is the host name of the machine on which the Vertex tax calculation database is installed.

    - DBuser is the Vertex database schema user name.

    - DBpassword is the password for the Vertex database schema user.

    - IPaddress is the IP address of the machine on which the Vertex tax calculation database is installed.

    - DBalias is the Vertex database alias name, which is defined in your tnsnames.ora file.

  - Layer the default images provided by Oracle.

    For example, to layer dm-vertex with Vertex CTQ, you could add these sample commands to its Dockerfile. In this example, $PIN_HOME is set to /oms inside the pod.

        FROM dm_vertex:15.0.x.0.0
        USER root
        RUN mkdir -p /oms/vertex/64bit/cfg
        RUN chown -R omsuser:root /oms/vertex/64bit/cfg
        COPY ./Vertex_CTQ_30206/ /oms/vertex
        COPY Vertex_CTQ_30206/64bit/lib/libctq.so /oms/lib/
        COPY Vertex_CTQ_30206/64bit/bin/odbc/lib/libodbc.so /oms/lib/libodbc.so
        RUN chown -R omsuser:root /oms/vertex
        RUN chown -R omsuser:root /oms/lib/libctq.so
        RUN chown -R omsuser:root /oms/lib/libodbc.so
        USER omsuser

  - Build your new Vertex Manager image. For example:

        podman build --format docker --tag dm_vertex_ctq:15.0.x.0.0 --file Dockerfile_vertex_ctq .

- Enabling and configuring Vertex Manager in your BRM cloud native deployment:

  - Set these environment variables in your oc-cn-helm-chart/templates/dm_vertex.yaml file:

        - name: LD_LIBRARY_PATH
          value: "/oms/vertex/64bit/bin/odbc:/oms/lib:/oms/sys/dm_vertex:/oms/vertex/64bit/lib"
        - name: CTQ_CFG_HOME
          value: "/oms/vertex/64bit/bin"
        - name: ODBCINI
          value: "/oms/vertex/64bit/bin/odbc/odbc.ini"

  - Uncomment these entries in your oc-cn-helm-chart/templates/configmap_pin_conf_dm_vertex.yaml file:

        - dm_vertex commtax_sm_obj ${DM_VERTEX_CTQ_SM}
        - dm_vertex commtax_config_name ${DM_VERTEX_CTQ_CFG_NAME}
        - dm_vertex commtax_config_path ${DM_VERTEX_CTQ_CFG_PATH}

  - Update these keys in your override-values.yaml file for oc-cn-helm-chart:

        dm_vertex:
          isEnabled: true
          deployment:
            replicaCount: 1
            imageName: dm_vertex_ctq
            imageTag: 15.0.x.0.0
            quantum_db_password: password
            ctqCfg: /oms/vertex/64bit/cfg
            ctqCfgName: CTQ Test
            ctqSmObj: ./dm_vertex_ctq30206.so

  - Run the helm upgrade command to update your BRM Helm release:

        helm upgrade BrmReleaseName oc-cn-helm-chart --values OverrideValuesFile --namespace BrmNameSpace

    where:

    - BrmReleaseName is the release name for oc-cn-helm-chart and is used to track this installation instance.

    - BrmNameSpace is the namespace in which to create BRM Kubernetes objects for the BRM Helm chart.

    - OverrideValuesFile is the path to a YAML file that overrides the default configurations in the values.yaml file for oc-cn-helm-chart.
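The path update in the Vertex CTQ configuration files amounts to swapping the original installation prefix for the in-pod location. The sketch below shows one way to do that with sed; rewrite_vertex_paths is a hypothetical helper, the /oms/vertex default comes from the sample ctqcfg.xml, and the function assumes that the old prefix contains no "|" characters or regular-expression metacharacters. Review the result, because other Vertex files may reference paths in a different form.

```shell
# Sketch: point XML path entries at the in-pod Vertex location.
# rewrite_vertex_paths is a hypothetical helper; old_prefix is wherever
# Vertex CTQ was originally installed (no regex metacharacters assumed).
rewrite_vertex_paths() {
  local file="$1" old_prefix="$2" new_prefix="${3:-/oms/vertex}" tmp
  tmp="$(mktemp)" || return 1
  # '|' is the sed delimiter so that slashes in the paths need no escaping.
  # Matching on '>' limits the change to element text that starts with the prefix.
  sed "s|>${old_prefix}/|>${new_prefix}/|g" "$file" > "$tmp" \
    || { rm -f "$tmp"; return 1; }
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

For example, if Vertex CTQ was installed under a directory of your choosing, pass that directory as the second argument for each ctqcfg.xml file in the copied installation directory.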

Deploying with Vertex Sales Tax Q Series

You deploy Vertex Manager with Vertex STQ by doing the following:

- Copy the required libraries from the Vertex STQ installation directory to your $PIN_HOME/req_libs directory.

- Layer the default images provided by Oracle. For example, to layer dm-vertex with Vertex STQ, you could add these sample commands to its Dockerfile:

      FROM dm_vertex:15.0.x.0.0
      USER root
      COPY ["req_libs/libvst*.so", "req_libs/libqutil*.so", "req_libs/libloc*.so", "/oms/lib/"]
      RUN chown omsuser:root -R /oms/lib/ /lib64
      USER omsuser

- Build your new Vertex Manager image. For example:

      podman build --format docker --tag dm_vertex_stq:15.0.x.0.0 --file Dockerfile_vertex_stq .

- Update these keys in your override-values.yaml file for oc-cn-helm-chart:

      dm_vertex:
        isEnabled: true
        deployment:
          replicaCount: 1
          imageName: dm_vertex
          imageTag: 15.0.x.0.0
          quantum_db_password: password

- Update these entries in your oc-cn-helm-chart/templates/configmap_env_dm_vertex.yaml file:

      SERVICE_FQDN: dm-vertex
      QUANTUM_DB_SOURCE: quantum
      QUANTUM_DB_SERVER: qsu122a
      QUANTUM_DB_USER: quantum

- Update these entries in your oc-cn-helm-chart/templates/configmap_odbc_ini_dm_vertex.yaml file:

      data:
        odbc.ini: |
          [ODBC Data Sources]
          Server = Oracle Server v12.2
          [Server]
          Description = Oracle Server v12.2
          Driver = /usr/lib/oracle/19.20/client64/lib/libsqora.so.19.1
          Servername = PINDB
          UserID = DBuser
          Password = DBpassword
          Port = 1521
          Trace = yes
          TraceFile = /oms_logs/odbc.log
          Database = //DBhostname:DBport

  where:

  - Server is the name of the server on which the Vertex database is installed.

  - DBuser is the Vertex database schema user name.

  - DBpassword is the password for the Vertex database schema user.

  - DBhostname is the host name of the machine on which the Vertex tax calculation database is installed.

  - DBport is the port number of the Vertex tax calculation database.

- Set these entries in your oc-cn-helm-chart/templates/configmap_pin_conf_dm_vertex.yaml file:

      - dm_vertex quantum_sm_obj ./dm_vertex_stq100.so
      - dm_vertex quantumdb_source ${QUANTUM_DB_SOURCE}
      - dm_vertex quantumdb_server ${QUANTUM_DB_SERVER}
      - dm_vertex quantumdb_user ${QUANTUM_DB_USER}

- Run the helm upgrade command to update the BRM Helm release:

      helm upgrade BrmReleaseName oc-cn-helm-chart --values OverrideValuesFile --namespace BrmNameSpace

  where:

  - BrmReleaseName is the release name for oc-cn-helm-chart and is used to track this installation instance.

  - BrmNameSpace is the namespace in which to create BRM Kubernetes objects for the BRM Helm chart.

  - OverrideValuesFile is the path to a YAML file that overrides the default configurations in the values.yaml file for oc-cn-helm-chart.

Building BRM REST Services Manager Images

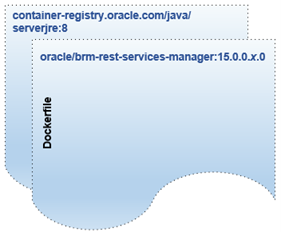

In a production deployment, containers for BRM REST Services Manager will run in their own pods on a Kubernetes node. Figure 15-1 shows how to stack images for BRM REST Services Manager.

Figure 15-1 Image Stack for BRM REST Services Manager

In this figure:

-

container-registry.oracle.com/java/serverjre:8: The base image on which BRM REST Services Manager will be deployed. The official image is available at https://container-registry.oracle.com/.

-

oracle/brm-rest-services-manager:15.0.x.0.0: The sample Dockerfile and related scripts used for creating the BRM REST Services Manager image (oracle/brm-rest-services-manager:15.0.x.0.0).

The oc-cn-docker-files/ocrsm/brm_rest_services_manager directory in the oc-cn-docker-files-15.0.x.0.0.tgz package contains a Dockerfile, container scripts, and an API JAR file.

You can load or build the BRM REST Services Manager image in the following ways:

- To use the image as provided, load the oc-cn-brm-rest-services-manager-15.0.x.0.0.tar image, which is included in the package, onto your machine by running this command:

      podman load < oc-cn-brm-rest-services-manager-15.0.x.0.0.tar

- If the image needs customization, modify the Dockerfile and then build the image by running this command:

      podman build --format docker --tag oracle/brm-rest-services-manager:15.0.x.0.0 .

Building PDC REST Services Manager Images

In a production deployment, containers for PDC REST Services Manager will run in their own pods on a Kubernetes node. You create PDC REST Services Manager images by stacking these Dockerfiles in the following order:

- container-registry.oracle.com/java/serverjre:8: The base image on which PDC REST Services Manager will be deployed. The official image is available at https://container-registry.oracle.com/.

- oracle/pdcrsm:15.0.x.0.0: The sample Dockerfile and related scripts used for creating the PDC REST Services Manager image (oracle/pdcrsm:15.0.x.0.0).

To build PDC REST Services Manager images:

- Copy the Dockerfile and the oc-cn-pdc-rsm-jars-15.0.x.0.0.tar file into the current working directory.

- Run the following commands:

      tar xvf oc-cn-pdc-rsm-jars-15.0.x.0.0.tar
      podman build --format docker --tag oracle/pdcrsm:15.0.x.0.0 .

Building PDC Images

To build the PDC image:

- (Release 15.0.0 only) Layer the brm-apps and realtimepipe images by doing the following:

  - Download the brm-apps and realtimepipe images from the repository by entering this command:

        podman pull RepoHost:RepoPort/ImageName

    where:

    - RepoHost is the IP address or host name of the repository.

    - RepoPort is the port number for the repository.

    - ImageName is either brm_apps:15.0.0.0.0 or realtimepipe:15.0.0.0.0.

  - Tag the images by entering these commands:

        podman tag RepoHost:RepoPort/brm_apps:15.0.0.0.0 brm_apps:15.0.0.0.0
        podman tag RepoHost:RepoPort/realtimepipe:15.0.0.0.0 realtimepipe:15.0.0.0.0

- Download PricingDesignCenter-15.0.x.0.0.zip to the ParentFolder/Docker_files/PDCImage/other-files directory.

- Pull the Java image from the Oracle Container Registry (https://container-registry.oracle.com) to the local system on which you will build the other images. The image name is container-registry.oracle.com/java/serverjre:JavaVersion, where JavaVersion is the Oracle Java version number. This image is regularly updated with the latest security fixes. See "Additional BRM Software Requirements" in BRM Compatibility Matrix for supported versions.

- Set the following environment variables:

  - HTTP_PROXY: Set this to the host name or IP address of your proxy server.

  - JAVA_VERSION: Set this to container-registry.oracle.com/java/serverjre:JavaVersion.

  - BRM_VERSION: Set this to 15.0.x.0.0.

- Build your Oracle PDC BRM integration image by running one of these commands from the ParentFolder/Docker_files/PDCImage directory:

  - For release 15.0.0 only, run this command:

        podman build --format docker --force-rm=true --no-cache=true --build-arg DB_VERSION=DBRelease --build-arg HTTP_PROXY=$HTTP_PROXY --build-arg JAVA_VERSION=$JAVA_VERSION --build-arg BRM_VERSION=$BRM_VERSION --tag $IMAGE_NAME --file Dockerfile .

    where DBRelease is the Oracle database version number.

  - For release 15.0.1 or later, run this command:

        podman build --format docker --force-rm=true --no-cache=true --build-arg HTTP_PROXY=$HTTP_PROXY --build-arg JAVA_VERSION=$JAVA_VERSION --tag $IMAGE_NAME --file Dockerfile .

-

(Optional) To use custom fields in your PDC RUM expressions:

-

Create a custom_flds.h file that contains your custom fields.

For information about the syntax to use in a header file, view the BRM_home/include/pin_flds.h file in the brm-sdk pod.

-

Parse the custom_flds.h file and generate a custom_flds.bat file.

-

Layer the BRM_home/lib/custom_flds.bat file in the following images: brm-apps, pdc, and cm.

For example, to layer the file in the pdc image:

FROM pdc:15.0.x.0.0
USER root
COPY custom_flds.bat ${PIN_HOME}/lib/custom_flds.bat
RUN chown oracle:root ${PIN_HOME}/lib/custom_flds.bat

-

In your brm-apps-2 ConfigMap (configmap_pin_conf_brm_apps_2.yaml), add the following entry under the load_config.conf section:

load_config.conf: |
  # Making custom fields entry
  - - ops_fields_extension_file ${PIN_HOME}/lib/custom_flds.h

-

In your CM ConfigMap (configmap_pin_conf_cm.yaml), add the following entry under the pin.conf section:

data:
  pin.conf: |
    # Making custom fields entry
    - - ops_fields_extension_file ${PIN_HOME}/lib/custom_flds.h

-

In your testnap ConfigMap (configmap_pin_conf_testnap.yaml), add the following entry under the pin.conf section:

data:
  pin.conf: |
    # Making custom fields entry
    - - ops_fields_extension_file ${PIN_HOME}/lib/custom_flds.h

-

In your override-values.yaml file for oc-cn-helm-chart, update the imageTag keys to point to the new cm, brm-apps and pdc images.

Note:

Skip this step if your override-values.yaml file does not already contain imageTag keys for the cm, brm-apps, and pdc images.

-

Run the helm upgrade command to update your Helm release:

helm upgrade BrmReleaseName oc-cn-helm-chart --values OverrideValuesFile --namespace BrmNameSpace
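The layering step in the optional procedure above names three images but shows a Dockerfile only for pdc. The following sketch prints an equivalent Dockerfile for each image. The base image names brm_apps and cm are assumptions modeled on the pdc example, so check them against the image names in your own repository.

```shell
VERSION="15.0.x.0.0"   # placeholder, as used elsewhere in this chapter

# Print a Dockerfile that layers custom_flds.bat on top of one base image.
# $1 is the base image name (assumed names: brm_apps, pdc, cm).
layer_dockerfile() {
  cat <<EOF
FROM $1:${VERSION}
USER root
COPY custom_flds.bat \${PIN_HOME}/lib/custom_flds.bat
RUN chown oracle:root \${PIN_HOME}/lib/custom_flds.bat
EOF
}

for image in brm_apps pdc cm; do
  echo "# Dockerfile for ${image}"
  layer_dockerfile "$image"
done
```

Each printed Dockerfile can be saved next to custom_flds.bat and built with podman build --format docker, after which the new image tags go into your override-values.yaml file.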


Building Pipeline Configuration Center Images

The Pipeline Configuration Center image extends the Fusion Middleware Infrastructure image by packaging its own installer PipelineConfigurationCenter_15.0.x.0.0_generic.jar file along with scripts and configurations.

To build your own image of Pipeline Configuration Center, you must have the Fusion Middleware Infrastructure base image ready. The oc-cn-docker-files-15.0.x.0.0.tgz package includes references to all Dockerfiles and scripts that are needed to build images of Pipeline Configuration Center. You can refer to them when building a Pipeline Configuration Center image in your own environment.

Pulling the Fusion Middleware Infrastructure Image

The Fusion Middleware Infrastructure image is available on the Oracle Container Registry (https://container-registry.oracle.com). This image is regularly updated with the latest security fixes. Pull it to the local system where you will build other images. Its name is container-registry.oracle.com/middleware/fmw-infrastructure_cpu:12.2.1.4-jdk8-ol7.
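As a rough sketch, pulling the image could look like the following. It assumes that your account must sign in to the registry before the pull succeeds, which may not match your setup. The script prints the commands instead of running them.

```shell
FMW_IMAGE="container-registry.oracle.com/middleware/fmw-infrastructure_cpu:12.2.1.4-jdk8-ol7"

# Derive the registry host by dropping everything from the first slash onward.
registry="${FMW_IMAGE%%/*}"

# Dry run: review the commands, then run them without the echo wrappers.
echo "podman login ${registry}"
echo "podman pull ${FMW_IMAGE}"
```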

Building the Pipeline Configuration Center Image

To build the Pipeline Configuration Center image, do this:

-

Go to the oc-cn-docker-files/ocpcc/pcc directory.

-

Download the Oracle Communications Pipeline Configuration Center installation JAR file.

-

Copy PipelineConfigurationCenter_15.0.x.0.0_generic.jar to the current working directory (oc-cn-docker-files/ocpcc/pcc).

-

Build the Pipeline Configuration Center image by entering this command:

podman build --format docker --tag oracle/pcc:15.0.x.0.0 .
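Because the build depends on the installer JAR being present in the build context, a pre-flight check can save a failed build. The sketch below is one possible way to do that. The function name pcc_build_cmd is hypothetical, and it prints the build command only when the JAR is found.

```shell
# Print the build command if the installer is in the build context.
# $1 is the build context directory, $2 is the installer JAR file name.
pcc_build_cmd() {
  if [ ! -f "$1/$2" ]; then
    echo "missing $2 in $1" >&2
    return 1
  fi
  echo "podman build --format docker --tag oracle/pcc:15.0.x.0.0 $1"
}

pcc_build_cmd "oc-cn-docker-files/ocpcc/pcc" \
  "PipelineConfigurationCenter_15.0.x.0.0_generic.jar" \
  || echo "Download and copy the installer first."
```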

Building Billing Care Images

The Billing Care image extends the Linux image by packaging the application archive along with scripts and configurations.

To build your own image of Billing Care, you need the Linux and JRE images, available on the Oracle Container Registry (https://container-registry.oracle.com). These images are regularly updated with the latest security fixes. Pull them to the local system where you will build other images. Their names are:

- container-registry.oracle.com/os/oraclelinux:8

- container-registry.oracle.com/java/serverjre:8-oraclelinux8

The oc-cn-docker-files-15.0.x.0.0.tgz package includes references to all Dockerfiles and scripts that are needed to build images of Billing Care. You can refer to them when building a Billing Care image in your own environment.

Building the Billing Care Image

To build the Billing Care image, do this:

-

Go to the oc-cn-docker-files/ocbc/billing_care directory.

-

Download the Oracle Communications Billing Care installation JAR file.

-

Copy BillingCare_generic.jar to the current working directory (oc-cn-docker-files/ocbc/billing_care).

-

Build the Billing Care image by entering this command:

podman build --format docker --tag oracle/billingcare:15.0.x.0.0 .

Building the Billing Care REST API Image

To build the Billing Care REST API image:

-

Go to the oc-cn-docker-files/ocbc/bcws directory.

-

Download the Oracle Communications Billing Care REST API installation JAR file.

-

Copy BillingCare_generic.jar to the current working directory (oc-cn-docker-files/ocbc/bcws).

-

Build the Billing Care REST API image by entering this command:

podman build --format docker --tag oracle/bcws:15.0.x.0.0 .
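The Billing Care and Billing Care REST API builds differ only in their directory and image tag, so both can be expressed as one loop. The following is a minimal sketch that prints the two build commands. It assumes that the installer JAR has already been copied into each directory, as described in the steps above.

```shell
BASE_DIR="oc-cn-docker-files/ocbc"
VERSION="15.0.x.0.0"   # placeholder, as used elsewhere in this chapter

# Print one build command per directory=tag pair (dry run).
build_plan() {
  for entry in "billing_care=oracle/billingcare" "bcws=oracle/bcws"; do
    dir="${entry%%=*}"   # text before the equals sign
    tag="${entry#*=}"    # text after the equals sign
    echo "(cd ${BASE_DIR}/${dir} && podman build --format docker --tag ${tag}:${VERSION} .)"
  done
}

build_plan
```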

Building Business Operations Center Images

The Business Operations Center image extends the Linux image by packaging the application archive along with scripts and configurations.

To build your own image of Business Operations Center, you need the Linux and JRE images, available on the Oracle Container Registry (https://container-registry.oracle.com). These images are regularly updated with the latest security fixes. Pull them to the local system where you will build other images. Their names are:

- container-registry.oracle.com/os/oraclelinux:8

- container-registry.oracle.com/java/serverjre:8-oraclelinux8

The oc-cn-docker-files-15.0.x.0.0.tgz package includes references to all of the Dockerfiles and scripts needed to build images of Business Operations Center. You can refer to them when building a Business Operations Center image in your own environment.

To build the Business Operations Center image, do this:

-

Go to the oc-cn-docker-files/ocboc/boc directory.

-

Download the Oracle Communications Business Operations Center installation JAR file.

-

Copy BusinessOperationsCenter_generic.jar to the current working directory (oc-cn-docker-files/ocboc/boc).

-

Build the Business Operations Center image by entering this command:

podman build --format docker --tag oracle/boc:15.0.x.0.0 .
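The tag in the build command contains the placeholder 15.0.x.0.0. The sketch below shows one way to fill it in, assuming that x stands for the patch set number of your release. The helper name image_tag is hypothetical, and the build command is printed rather than run.

```shell
# Build an image tag from a component name and a patch set number.
# $1 is the component name (for example boc), $2 is the patch set number.
image_tag() {
  echo "oracle/$1:15.0.$2.0.0"
}

# Dry run for patch set 1.
echo "podman build --format docker --tag $(image_tag boc 1) ."
```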