Pre-upgrade Procedures

The following are the pre-upgrade procedures for an OCCNE upgrade:

- SSH to the Bastion Host and start the Jenkins container using the command below, after setting the required parameters (OCCNE_CLUSTER, CENTRAL_REPO, and CENTRAL_REPO_DOCKER_PORT):

$ docker run -u root -d --restart=always -p 8080:8080 -p 50000:50000 -v jenkins-data:/var/jenkins_home -v /var/occne/cluster/${OCCNE_CLUSTER}:/var/occne/cluster/${OCCNE_CLUSTER} -v /var/run/docker.sock:/var/run/docker.sock ${CENTRAL_REPO}:${CENTRAL_REPO_DOCKER_PORT}/jenkinsci/blueocean:1.19.0

- Get the administrator password from the Jenkins container so you can log in to the Jenkins user interface running on the Bastion Host.

- SSH to the Bastion Host and run the following command to get the Jenkins Docker container ID:

$ docker ps | grep 'jenkins' | awk '{print $1}'

Example output: 19f6e8d5639d

- Get the admin password by running a bash shell inside the Jenkins container. Execute the following command to open bash in the container:

$ docker exec -it <container id from above command> bash

- Run the following command from the Jenkins container while in bash mode. Capture the password it prints. You will use it later with the user name admin to log in to the Jenkins GUI.

$ cat /var/jenkins_home/secrets/initialAdminPassword

Example output: e1b3bd78a88946f9a0a4c5bfb0e74015

- After getting the Bastion Host IP address, execute the following SSH command from the Jenkins container while still in bash mode.

Note: The Bare Metal user is admusr and the vCNE user is cloud-user.

$ ssh -t -t -i /var/occne/cluster/<cluster_name>/.ssh/occne_id_rsa <user>@<bastion_host_ip_address>

- After you execute the SSH command, the following prompt appears: "The authenticity of host can't be established. Are you sure you want to continue connecting (yes/no)". Enter yes.

- Exit from bash mode of the Jenkins container (that is, enter exit at the command line).
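You can also look up the container ID and read the password without an interactive bash session. The following is a minimal bash sketch run from the Bastion Host. It assumes that exactly one running container has a docker ps line containing jenkins. The function names jenkins_container_id and jenkins_admin_password are hypothetical helpers, not part of OCCNE. The SSH host-key step above must still be run interactively from inside the container.

```shell
#!/usr/bin/env bash
# Sketch: non-interactive version of the container-ID and initialAdminPassword steps.
# jenkins_container_id and jenkins_admin_password are hypothetical helper names.

# Print the ID of every running container whose `docker ps` line mentions 'jenkins'.
jenkins_container_id() {
  docker ps | grep 'jenkins' | awk '{print $1}'
}

# Print the Jenkins initial admin password (used with user name admin in the GUI).
# Fails if no container, or more than one container, matches 'jenkins'.
jenkins_admin_password() {
  local cid count
  cid="$(jenkins_container_id)"
  if [ -z "$cid" ]; then
    echo "no running container matches 'jenkins'" >&2
    return 1
  fi
  count="$(printf '%s\n' "$cid" | wc -l)"
  if [ "$count" -ne 1 ]; then
    echo "more than one container matches 'jenkins'" >&2
    return 1
  fi
  # Reads the same file as the interactive cat step, via docker exec.
  docker exec "$cid" cat /var/jenkins_home/secrets/initialAdminPassword
}

# Usage on the Bastion Host:
#   jenkins_admin_password
```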

- Open the Jenkins GUI in a browser window at <bastion-host-ip>:8080 and log in with the user name admin and the password captured from the Jenkins container.
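Jenkins can take some time to start after the docker run command, so the GUI may not load immediately. The following is a minimal bash sketch that polls it from the Bastion Host. wait_for_jenkins is a hypothetical helper. It assumes the Jenkins login page (the /login path) answers HTTP 200 once Jenkins is ready. It uses only standard curl options (-s, -o, -w '%{http_code}').

```shell
#!/usr/bin/env bash
# Sketch: wait until the Jenkins GUI answers HTTP 200.
# wait_for_jenkins is a hypothetical helper; the /login path is an assumption.

# Usage: wait_for_jenkins URL [ATTEMPTS] [DELAY_SECONDS]
wait_for_jenkins() {
  local url="$1" attempts="${2:-30}" delay="${3:-10}" i=1 code
  while [ "$i" -le "$attempts" ]; do
    # curl prints 000 when it gets no HTTP response; '|| true' keeps set -e scripts running.
    code="$(curl -s -o /dev/null -w '%{http_code}' "$url" || true)"
    if [ "$code" = "200" ]; then
      echo "Jenkins answered HTTP 200 at ${url}"
      return 0
    fi
    echo "attempt ${i}/${attempts}: HTTP ${code:-none}, retrying in ${delay}s" >&2
    sleep "$delay"
    i=$((i + 1))
  done
  echo "Jenkins did not answer HTTP 200 at ${url}" >&2
  return 1
}

# Example:
#   wait_for_jenkins "http://<bastion-host-ip>:8080/login"
```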

- Create a job with an appropriate name from the Jenkins home page. Follow the steps below:

- Select New Item on the Jenkins home page.

- Enter a name and select the Pipeline option to create the job.

- Once the job is created and visible on the Jenkins home page, select the job, then select Configure.

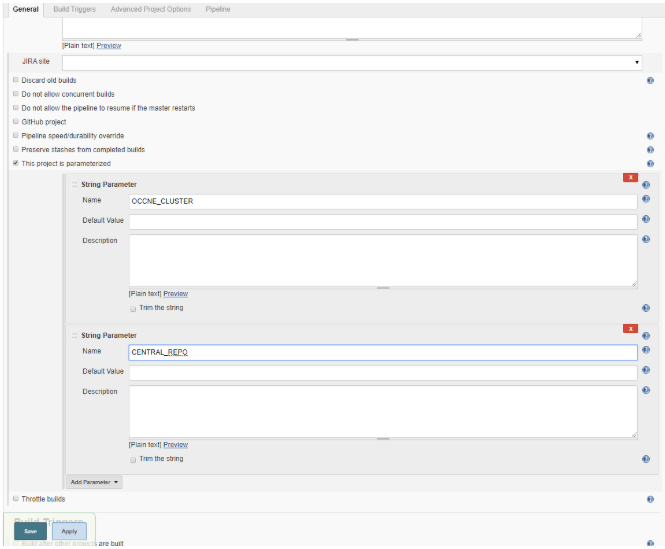

- On the Configure screen, add the parameters OCCNE_CLUSTER and CENTRAL_REPO. Two String Parameter dialogs appear, one for OCCNE_CLUSTER and one for CENTRAL_REPO. Enter a default value in the Default Value field of the OCCNE_CLUSTER dialog and in the Default Value field of the CENTRAL_REPO dialog.

Figure 3-1 Jenkins UI

- Copy the following configuration to the Pipeline script section of the Jenkins job's Configure page. Substitute values for <upgrade-image-version>, <cluster-name>, OCCNE_CLUSTER, CENTRAL_REPO, and CENTRAL_REPO_DOCKER_PORT. The user should already know all of these values.

node ('master') {
    // Copy the upgrade JenkinsFile from the provision image into the cluster's artifacts directory
    sh "docker run -i --rm -v /var/occne/cluster/${OCCNE_CLUSTER}:/host -e ANSIBLE_NOCOLOR=1 ${CENTRAL_REPO}:${CENTRAL_REPO_DOCKER_PORT}/occne/provision:<upgrade-image-version> cp deploy_upgrade/JenkinsFile /host/artifacts"
    // Copy the upgrade_services.py script into the same directory
    sh "docker run -i --rm -v /var/occne/cluster/${OCCNE_CLUSTER}:/host -e ANSIBLE_NOCOLOR=1 ${CENTRAL_REPO}:${CENTRAL_REPO_DOCKER_PORT}/occne/provision:<upgrade-image-version> cp deploy_upgrade/upgrade_services.py /host/artifacts"
    // Load the copied JenkinsFile into this pipeline
    load '/var/occne/cluster/<cluster-name>/artifacts/JenkinsFile'
}

- Select Apply and Save.

- Go back to the job page and select Build with Parameters so that the new pipeline script is enabled for the job. This build will be aborted.

- Select Build with Parameters again to see the latest JenkinsFile parameters in the GUI.
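The pipeline script mounts /var/occne/cluster/${OCCNE_CLUSTER} at /host. Its two copy steps should therefore leave JenkinsFile and upgrade_services.py in the cluster's artifacts directory on the Bastion Host. The following is a minimal bash sketch for checking this. It assumes the aborted first build got as far as the copy steps. check_upgrade_artifacts is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: confirm the pipeline's copy steps placed the upgrade files on the Bastion Host.
# check_upgrade_artifacts is a hypothetical helper name.

# Usage: check_upgrade_artifacts /var/occne/cluster/<cluster-name>
check_upgrade_artifacts() {
  local cluster_dir="$1" missing=0 f
  for f in JenkinsFile upgrade_services.py; do
    if [ -f "${cluster_dir}/artifacts/${f}" ]; then
      echo "found:   ${cluster_dir}/artifacts/${f}"
    else
      echo "missing: ${cluster_dir}/artifacts/${f}" >&2
      missing=1
    fi
  done
  # Non-zero when at least one expected file is absent.
  return "$missing"
}
```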

Set Up admin.conf

Perform the pre-upgrade admin.conf setup as follows:

Note:

Only for upgrades from 1.3.2 to 1.4.0.

- Add the following entries to the /etc/hosts file on the Bastion Host and on all master and worker nodes. Add one entry per master node, mapping that master's internal IP address to the name lb-apiserver.kubernetes.local:

<master-internal-ip-1> lb-apiserver.kubernetes.local
<master-internal-ip-2> lb-apiserver.kubernetes.local
<master-internal-ip-3> lb-apiserver.kubernetes.local

Example for 3 master nodes:

172.16.5.6 lb-apiserver.kubernetes.local
172.16.5.7 lb-apiserver.kubernetes.local
172.16.5.8 lb-apiserver.kubernetes.local

- Log in to the Bastion Host and get the dependencies for the latest 1.4.0 pipeline:

$ docker run -it --rm -v /var/occne/cluster/<cluster-name>:/host -e ANSIBLE_NOCOLOR=1 -e 'OCCNEARGS= ' <central_repo>:<central_repo_docker_port>/occne/provision:<upgrade_image_version> /getdeps/getdeps

Example:

$ docker run -it --rm -v /var/occne/cluster/delta:/host -e ANSIBLE_NOCOLOR=1 -e 'OCCNEARGS= ' winterfell:5000/occne/provision:1.4.0 /getdeps/getdeps

- Execute the following command on a Bare Metal cluster:

$ docker run -it --rm --cap-add=NET_ADMIN --network host -v /var/occne/cluster/<cluster-name>:/host -v /var/occne:/var/occne:rw -e 'OCCNEARGS= --tags=pre_upgrade ' <central_repo>:<central_repo_docker_port>/occne/provision:1.4.0

Example:

$ docker run -it --rm --cap-add=NET_ADMIN --network host -v /var/occne/cluster/delta:/host -v /var/occne:/var/occne:rw -e 'OCCNEARGS= --tags=pre_upgrade ' winterfell:5000/occne/provision:1.4.0

- Execute the following command on a vCNE cluster:

$ docker run -it --rm --cap-add=NET_ADMIN --network host -v /var/occne/cluster/<cluster-name>:/host -v /var/occne:/var/occne:rw -e OCCNEINV=/host/terraform/hosts -e 'OCCNEARGS= --tags=pre_upgrade --extra-vars={"occne_vcne":"1","occne_cluster_name":"<occne_cluster_name>","occne_repo_host":"<occne_repo_host_name>","occne_repo_host_address":"<occne_repo_host_address>"} ' <central_repo>:<central_repo_docker_port>/occne/provision:<upgrade_image_version>

Example:

$ docker run -it --rm --cap-add=NET_ADMIN --network host -v /var/occne/cluster/delta:/host -v /var/occne:/var/occne:rw -e OCCNEINV=/host/terraform/hosts -e 'OCCNEARGS= --tags=pre_upgrade --extra-vars={"occne_vcne":"1","occne_cluster_name":"ankit-upgrade-3","occne_repo_host":"ankit-upgrade-3-bastion-1","occne_repo_host_address":"192.168.200.9"} ' winterfell:5000/occne/provision:1.4.0

Run the kubectl get nodes command to verify that the above changes were applied correctly.

- Run the following command on all master and worker nodes:

$ yum clean all
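This section has two node-level steps: adding the lb-apiserver.kubernetes.local entries to /etc/hosts, and running yum clean all on every master and worker node. Both can be scripted. The following is a minimal bash sketch under these stated assumptions:

- Node addresses are supplied by hand.
- The Bastion Host can reach each node over SSH with the cluster key /var/occne/cluster/<cluster_name>/.ssh/occne_id_rsa.
- The remote user (admusr on Bare Metal, cloud-user on vCNE) needs sudo for yum.

All function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: helpers for the node-level pre-upgrade steps.
# Function names, the node list, and the use of sudo are assumptions.

# Print one "<ip> lb-apiserver.kubernetes.local" line per master internal IP.
lb_apiserver_hosts_lines() {
  local ip
  for ip in "$@"; do
    printf '%s lb-apiserver.kubernetes.local\n' "$ip"
  done
}

# Append each stdin line to FILE unless FILE already contains that exact line,
# so re-running the step does not create duplicate /etc/hosts entries.
append_missing_lines() {
  local file="$1" line
  while IFS= read -r line; do
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
  done
}

# Run CMD on each NODE over SSH; report every failure and return 1 if any node failed.
# Usage: run_on_nodes USER KEY CMD NODE...
run_on_nodes() {
  local user="$1" key="$2" cmd="$3" node rc=0
  shift 3
  for node in "$@"; do
    echo "==> ${node}"
    if ! ssh -i "$key" -o BatchMode=yes "${user}@${node}" "$cmd"; then
      echo "command failed on ${node}" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Examples (run as root on each host for the /etc/hosts step):
#   lb_apiserver_hosts_lines 172.16.5.6 172.16.5.7 172.16.5.8 | append_missing_lines /etc/hosts
# From the Bastion Host, for yum clean all (Bare Metal user shown):
#   run_on_nodes admusr /var/occne/cluster/<cluster_name>/.ssh/occne_id_rsa 'sudo yum clean all' <node-ip-1> <node-ip-2>
```

append_missing_lines checks for exact whole-line matches, so running the /etc/hosts step twice leaves the file unchanged. run_on_nodes keeps going after a failed node, so you see every failure in one pass.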

- Execute the following command on a Bare Metal cluster: