2 Installation Procedures

The installation procedures in this document provision and configure an Oracle Communications Signaling, Network Function Cloud Native Environment (OCCNE). OCCNE offers a choice of deployment platform: the CNE can be deployed directly onto dedicated hardware (referred to as a bare metal CNE) or onto OpenStack-hosted VMs (referred to as a virtualized CNE).

Regardless of which deployment platform is selected, OCCNE installation is highly automated. A collection of container-based utilities is used to automate the provisioning, installation, and configuration of OCCNE. These utilities are based on the following automation tools:

- PXE helps reliably automate provisioning the hosts with a minimal operating system.

- Terraform is used to create the virtual resources that the virtualized CNE is hosted on.

- Kubespray helps reliably install a base Kubernetes cluster, including all dependencies (like etcd), using the Ansible provisioning tool.

- Ansible is used to orchestrate the overall deployment.

- Helm is used to deploy and configure common services such as Prometheus, Grafana, ElasticSearch and Kibana.

Note:

Make sure that the shell is configured with Keepalive to avoid unexpected timeouts.

Bare Metal Installation

This section describes the procedure to install OCCNE onto dedicated bare metal hardware.

OCCNE Installation Overview

Frame and Component Overview

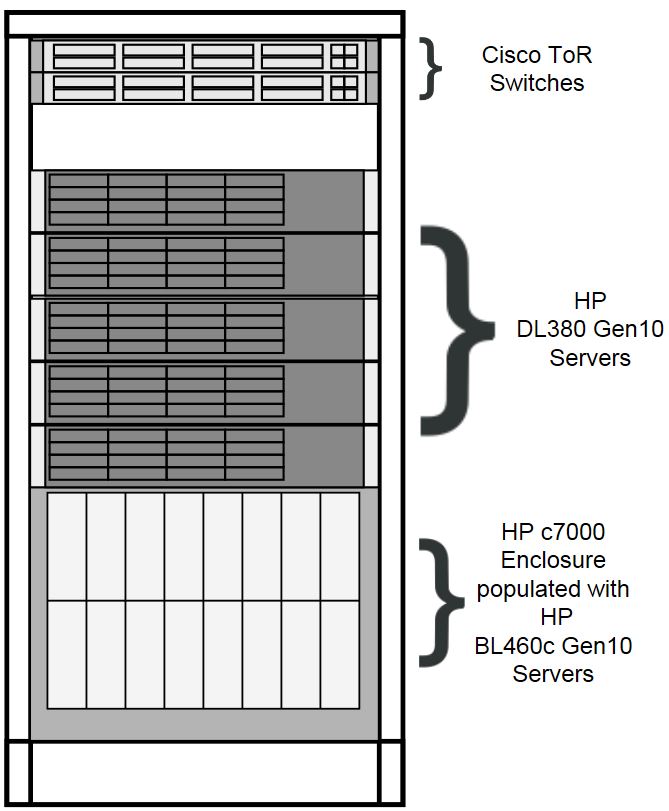

The initial release of the OCCNE system provides support for on-prem deployment to a very specific target environment consisting of a frame holding switches and servers. This section describes the layout of the frame and describes the roles performed by the racked equipment.

Note:

In the installation process, some of the roles of servers change as the installation procedure proceeds.

Frame Overview

The physical frame comprises an HP c-Class enclosure (BL460c blade servers), 5 DL380 rack mount servers, and 2 Top of Rack (ToR) Cisco switches. The frame components are added from the bottom up; thus, the designations found in the next section number from the bottom of the frame to the top.

Figure 2-1 Frame Overview

Host Designations

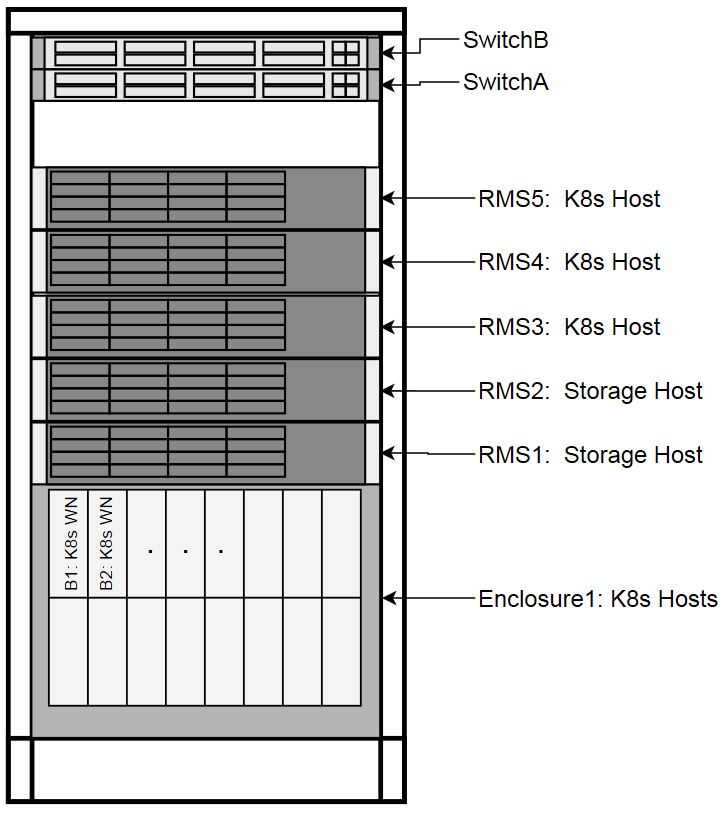

Each physical server has a specific role designation within the CNE solution.

Figure 2-2 Host Designations

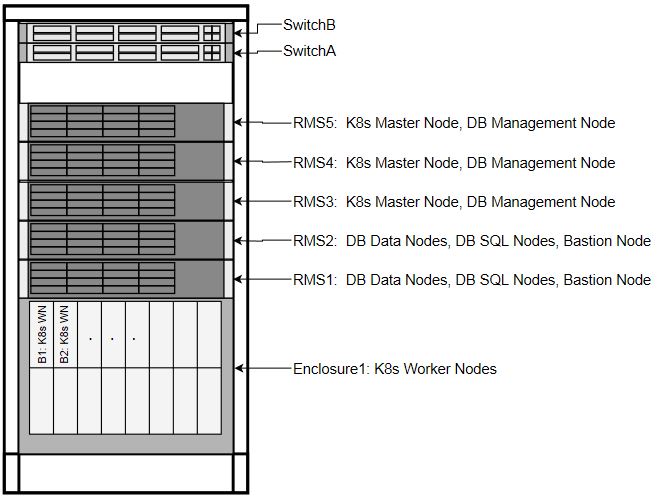

Node Roles

Along with the primary role of each host, a secondary role may be assigned. The secondary role may be software related, or, in the case of the Bootstrap Host, hardware related, as there are unique OOB connections to the ToR switches.

Figure 2-3 Node Roles

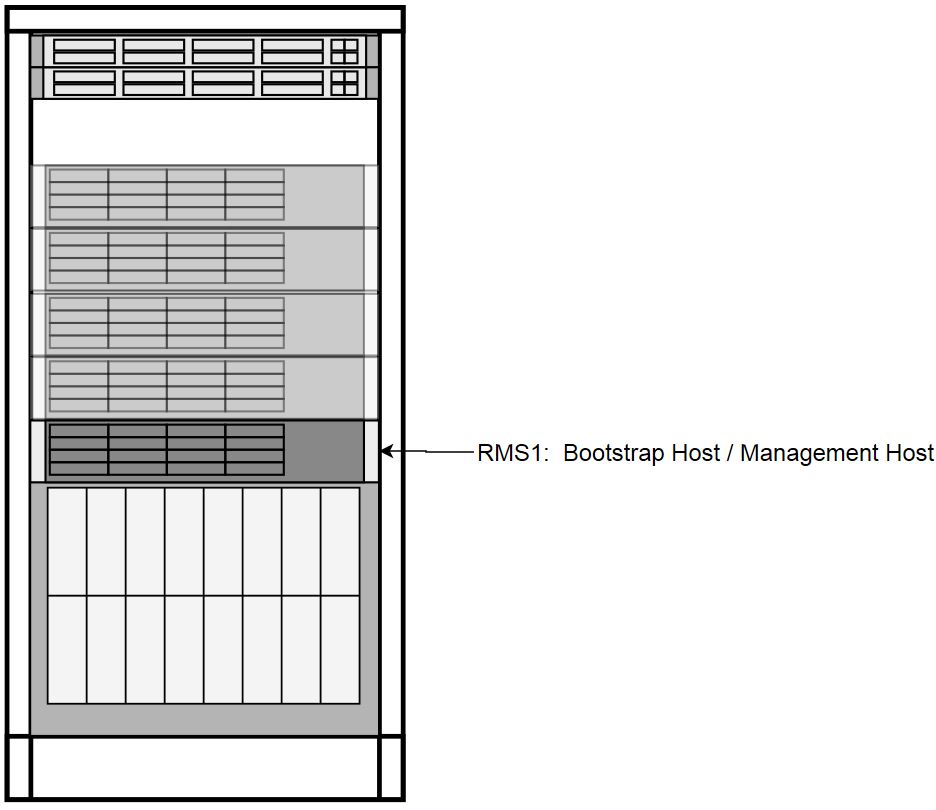

Transient Roles

RMS1 is unique in that it has OOB connections to the ToR switches, which gives it the transient designation of Bootstrap Host. This role is only relevant during initial switch configuration and disaster recovery of the switch. RMS1 also has a transient role as the Installer Bootstrap Host, which is only relevant during the initial install of the frame. Once an official install is in place on RMS2, RMS1 is re-paved to its Storage Host role.

Figure 2-4 Transient Roles

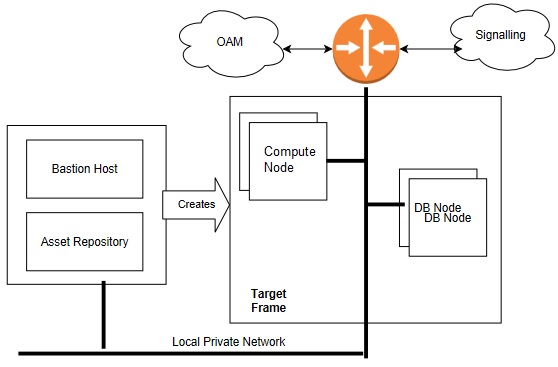

Create OCCNE Instance

This section describes the steps and procedures required to create an OCCNE instance at a customer site. The following diagram shows the installation context:

Figure 2-5 OCCNE Installation Overview

The following basic install flow is provided as a reference for understanding the overall effort contained within these procedures:

- Check that the hardware is on-site and properly cabled and powered up.

- Pre-assemble the basic ingredients needed to perform a successful install:

  - Identify

    - Download and stage software and other configuration files using provided manifests. Refer to Artifact Acquisition and Hosting for manifests information.

    - Identify the layer 2 (MAC) and layer 3 (IP) addresses for the equipment in the target frame

    - Identify the addresses of key external network services (for example, NTP and DNS)

    - Verify / Set all of the credentials for the target frame hardware to known settings

  - Prepare

    - Software Repositories: Load the various SW repositories (YUM, Helm, Docker, etc.) using the downloaded software and configuration

    - Configuration Files: Populate the hosts inventory file with credentials and layer 2 and layer 3 network information, switch configuration files with assigned IP addresses, and yaml files with appropriate information.

- Bootstrap the System:

  - Manually configure a Minimal Bootstrapping Environment (MBE); perform the minimal set of manual operations to enable networking and initial loading of a single Rack Mount Server - RMS1 - the transient Installer Bootstrap Host. In this procedure, a minimal set of packages needed to configure switches, iLOs, PXE boot environment, and provision RMS2 as an OCCNE Storage Host are installed.

  - Using the newly constructed MBE, automatically create the first (complete) Management VM on RMS2. This freshly installed Storage Host will include a virtual machine for hosting the Bastion Host.

  - Using the newly constructed Bastion Host on RMS2, automatically deploy and configure the OCCNE on the other servers in the frame

- Final Steps

  - Perform post installation checks

  - Perform recommended security hardening steps

Cluster Bootstrapping Overview

This install procedure targets new hardware that has no networking configuration on its switches and no operating systems provisioned. Therefore, the initial step in the installation process is to provision RMS1 (see Installation Procedures) as a temporary Installer Bootstrap Host. The Bootstrap Host is configured with a minimal set of packages needed to configure switches, iLOs, PXE boot environment, and provision RMS2 as an OCCNE Storage Host. A virtual Bastion Host is also provisioned on RMS2. The Bastion Host is then used to provision (and in the case of the Bootstrap Host, re-provision) the remaining OCCNE hosts and install Kubernetes, Database services, and Common Services running within the Kubernetes cluster.

Installation Prerequisites

Complete the procedures outlined in this section before moving on to the Install Procedures section. OCCNE installation procedures require certain artifacts and information to be made available prior to executing installation procedures. Refer to Configure Artifact Acquisition and Hosting for the prerequisites.

Obtain Mate Site DB Replication Service Load Balancer IP

While installing MYSQL NDB on the second site, the Mate Site DB Replication Service Load Balancer IP must be provided as the configuration parameter for the geo-replication process to start.

- Login to the Bastion Host of the first site and execute the following command to retrieve the DB Replication Service Load Balancer IP.

- Fetch the DB Replication Service Load Balancer IP of the Mate Site MYSQL NDB.

    $ kubectl get svc --namespace=occne-infra | grep replication

Example:

    $ kubectl get svc --namespace=occne-infra | grep replication
    occne-db-replication-svc LoadBalancer 10.233.3.117 10.75.182.88 80:32496/TCP 2m8s

In the above example, the IPv4 address 10.75.182.88 is the Mate Site DB Replication Service Load Balancer IP.
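When the Load Balancer IP has to be handed to a later configuration step, it can be extracted from the command output instead of being copied by hand. The following is a minimal sketch, assuming the column layout of the example output above, where the external IP is the fourth field. The function name `replication_lb_ip` is hypothetical and is not part of OCCNE.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of OCCNE): print the 4th field (the
# external IP column in the example output) of the first line that
# contains "replication".
replication_lb_ip() {
    awk '/replication/ { print $4; exit }'
}

# Demonstration using the sample output line from this section.
sample='occne-db-replication-svc LoadBalancer 10.233.3.117 10.75.182.88 80:32496/TCP 2m8s'
printf '%s\n' "$sample" | replication_lb_ip
```

On the Bastion Host the same function could be fed directly from the documented command, for example `kubectl get svc --namespace=occne-infra | replication_lb_ip`. If the service has not yet been assigned an address, the fourth field will not be an IP, so the result should still be checked by eye.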

Configure Artifact Acquisition and Hosting

OCCNE requires artifacts from Oracle eDelivery and certain open-source projects. OCCNE deployment environments are not expected to have direct internet access. Thus, customer-provided intermediate repositories are necessary for the OCCNE installation process, and the OCCNE dependencies must be loaded into them. This section lists the artifacts that must be available in these repositories.

Oracle eDelivery Artifact Acquisition

The OCCNE artifacts are posted on Oracle Software Delivery Cloud (OSDC) and/or OHC.

Third Party Artifacts

OCCNE dependencies that come from open-source software must be available in repositories reachable by the OCCNE installation tools. For an accounting of third party artifacts needed for this installation, refer to Artifact Acquisition and Hosting.

Populate the MetalLB Configuration

Introduction

The metalLB configMap file (mb_configmap.yaml) contains the manifest for the metalLB configMap, which defines the BGP peers and address pools for metalLB. This file must be placed in the same directory (/var/occne/<cluster_name>) as the hosts.ini file.

- Add BGP peers and address groups: Referring to the data collected in the Preflight Checklist, add BGP peers (ToRswitchA_Platform_IP, ToRswitchB_Platform_IP) and address groups for each address pool. Address-pools list the IP addresses that metalLB is allowed to allocate.

- Edit the mb_configmap.yaml file with the site-specific values found in the Preflight Checklist.

Note:

The name "signaling" is prone to different spellings (UK vs US), therefore pay special attention to how this signaling pool is referenced.

    configInline:
      peers:
      - peer-address: <ToRswitchA_Platform_IP>
        peer-asn: 64501
        my-asn: 64512
      - peer-address: <ToRswitchB_Platform_IP>
        peer-asn: 64501
        my-asn: 64512
      address-pools:
      - name: signaling
        protocol: bgp
        auto-assign: false
        addresses:
        - '<MetalLB_Signal_Subnet_IP_Range>'
      - name: oam
        protocol: bgp
        auto-assign: false
        addresses:
        - '<MetalLB_OAM_Subnet_IP_Range>'
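Because the pool name is easy to misspell, a quick text check of the edited file can catch the UK spelling before the file is used. The following is a minimal sketch, assuming the pool is declared on a line of the form `- name: signaling` as in the manifest above. The function name `check_signaling_pool` is hypothetical, and the check is purely textual; it does not validate the YAML.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of OCCNE): succeed only if the file
# declares a pool named "signaling" and never uses the UK spelling.
check_signaling_pool() {
    local file="$1"
    if grep -q 'signalling' "$file"; then
        echo "found UK spelling 'signalling' in $file" >&2
        return 1
    fi
    grep -Eq '^[[:space:]]*-[[:space:]]*name:[[:space:]]*signaling[[:space:]]*$' "$file"
}

# Demonstration against a temporary file holding a fragment of the manifest.
tmp=$(mktemp)
printf '%s\n' '  address-pools:' '  - name: signaling' '    protocol: bgp' > "$tmp"
check_signaling_pool "$tmp" && echo "pool name OK"
rm -f "$tmp"
```

At a real site the function would be pointed at the edited file, for example `check_signaling_pool /var/occne/<cluster_name>/mb_configmap.yaml`.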

Install Backup Bastion Host

Introduction

This procedure details the steps necessary to install the Backup Bastion Host on the Storage Host db-1/RMS1 and to back up the data from the active Bastion Host on db-2/RMS2 to the Backup Bastion Host.

Prerequisites

- Bastion Host is already created on Storage Host db-2/RMS2.

- Storage Host db-2/RMS2 and the Backup Bastion Host are defined in the Customer hosts.ini file as defined in procedure: Inventory File Preparation.

Note:

If the initial bootstrap host is RMS1, then the Bastion Host is created on RMS2 and the Backup Bastion Host is created on RMS1.

- Host names, IP addresses, and network information assigned to the Backup Management VM are captured in the Installation PreFlight Checklist.

- All the network information should be configured in Inventory File Preparation.

Expectations

- Bastion Host VM on Storage Host db-1/RMS1 is created as a backup for Bastion Host VM on Storage Host db-2/RMS2.

- All the required config files and data configured in the Backup Bastion Host on Storage Host db-1/RMS1 are copied from the active Bastion Host on Storage Host db-2/RMS2.

Procedure

Create the Backup Bastion Host on Storage Host db-1/RMS1

All commands are executed from the active Bastion Host on db-2/RMS2.

- Login to the active Bastion Host (VM on RMS2) using the admusr/****** credentials.

- Execute the deploy.sh script from the /var/occne/ directory with the required parameters set.

    $ export CENTRAL_REPO=<customer specific repo name>
    $ export CENTRAL_REPO_IP=<customer_specific_repo_ipv4>
    $ export OCCNE_CLUSTER=<cluster_name>
    $ export OCCNE_BASTION=<bastion_full_name>
    $ ./deploy.sh

Customer Example:

    $ export CENTRAL_REPO=central-repo
    $ export CENTRAL_REPO_IP=10.10.10.10
    $ export OCCNE_CLUSTER=rainbow
    $ export OCCNE_BASTION=bastion-1.rainbow.lab.us.oracle.com
    $ ./deploy.sh

Note: The above example can also be executed as a single command:

    CENTRAL_REPO=central-repo CENTRAL_REPO_IP=10.10.10.10 OCCNE_CLUSTER=rainbow OCCNE_BASTION=bastion-1.rainbow.lab.us.oracle.com ./deploy.sh

- Verify installation of the Backup Bastion Host.

Note:

The IP of the backup Bastion Host can be derived from the hosts.ini file under the group host_kernel_virtual for db-1 ansible_host IP.

    $ ssh -i ~/.ssh/id_rsa admusr@<backup_bastion_ip_address>
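Since deploy.sh depends on four environment variables, it can help to confirm that none of them is empty before the script is started. The following is a minimal sketch of such a pre-flight check. The function name `missing_deploy_vars` is hypothetical and is not shipped with OCCNE; only the four variable names come from the procedure above.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight helper (not part of OCCNE): print the name of
# each required variable that is unset or empty, and return non-zero if
# any are missing.
missing_deploy_vars() {
    local name missing=0
    for name in CENTRAL_REPO CENTRAL_REPO_IP OCCNE_CLUSTER OCCNE_BASTION; do
        # ${!name} is bash indirect expansion: the value of the variable
        # whose name is stored in "name".
        if [ -z "${!name:-}" ]; then
            echo "$name"
            missing=1
        fi
    done
    return "$missing"
}

# Report the result; deploy.sh itself is deliberately not invoked here.
if missing_deploy_vars >/dev/null; then
    echo "all deploy.sh variables are set"
else
    echo "missing variables: $(missing_deploy_vars | tr '\n' ' ')"
fi
```

In practice the check would sit directly in front of the script call, for example `missing_deploy_vars && ./deploy.sh`.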

Initial Configuration - Prepare a Minimal Boot Strapping Environment

In the first step of the installation, a minimal bootstrapping environment is established to support the automated installation of the CNE environment. The steps in this section provide the details necessary to establish this minimal bootstrap environment on the Installer Bootstrap Host using a Keyboard, Video, Mouse (KVM) connection.

Installation of Oracle Linux 7.5 on Bootstrap Host

This procedure outlines the steps for installing OL7 onto the OCCNE Installer Bootstrap Host. This host is used to configure the networking throughout the system and install OL7 onto RMS2. It is re-paved as a Database Host in a later procedure.

Prerequisites

- USB drive of sufficient size to hold the ISO (approximately 5 GB)

- Oracle Linux 7.5 ISO

- YUM repository file

- Keyboard, Video, Mouse (KVM)

Limitations and Expectations

- The configuration of the Installer Bootstrap Host is meant to be quick and easy, without a lot of care on appropriate OS configuration. The Installer Bootstrap Host is re-paved with the appropriate OS configuration for cluster and DB operation at a later stage of installation. The Installer Bootstrap Host needs a Linux OS and some basic network to get the installation process started.

- All steps in this procedure are performed using Keyboard, Video, Mouse (KVM).

References

- Oracle Linux 7 Installation guide: https://docs.oracle.com/cd/E52668_01/E54695/html/index.html

- HPE Proliant DL380 Gen10 Server User Guide

Bootstrap Install Procedure

- Create Bootable USB Media:

- Download the Oracle Linux 7.5 ISO.

On the installer's notebook, download the OL ISO from the customer's repository. Because the installer's notebook may run Windows or Linux, and the customer repository location may vary, the user executing this procedure determines the appropriate detail to execute this task.

- Push the OL ISO image onto the USB Flash Drive.

Because the installer's notebook may be Windows or Linux based, the user executing this procedure determines the appropriate detail to execute this task. For a Linux-based notebook, insert a USB Flash Drive of the appropriate size into the notebook (or some other Linux host to which the ISO can be copied), and run the dd command to create a bootable USB drive with the Oracle Linux 7 ISO.

    $ dd if=<path to ISO> of=<USB device path> bs=1M

- Install OL7 on the Installer Bootstrap Host:

- Connect a Keyboard, Video, and Mouse (KVM) into the Installer Bootstrap Host's monitor and USB ports.

- Plug the USB flash drive containing the bootable iso into an available USB port on the Bootstrap host (usually in the front panel).

- Reboot the host by momentarily pressing the power button on the host's front panel. The button will go yellow. If it holds at yellow, press the button again. The host should auto-boot to the USB flash drive.

Note:

If the host was previously configured and the USB is not a bootable path in the boot order, it may not boot successfully.

- If the host does not boot to the USB, repeat step 3, and interrupt the boot process by pressing F11, which brings up the Boot Menu. If the host has been recently booted with an OL, the Boot Menu will display Oracle Linux at the top of the list. Select Generic USB Boot as the first boot device and proceed.

- The host attempts to boot from the USB. The following menu is displayed on the screen. Select Test this media & install Oracle Linux 7.x and press ENTER. This begins the verification of the media and the boot process. After the verification reaches 100%, the Welcome screen is displayed. When prompted for the language to use, select the default setting: English (United States) and click Continue in the lower left corner.

- The INSTALLATION SUMMARY page is displayed. The following settings are expected. If any of these are not set correctly, select that menu item and make the appropriate changes.

- LANGUAGE SUPPORT: English (United States)

- KEYBOARD: English (US)

- INSTALLATION SOURCE: Local Media

- SOFTWARE SELECTION: Minimal Install

INSTALLATION DESTINATION should display No disks selected. Select INSTALLATION DESTINATION to indicate the drive to install the OS on. Select the first HDD (in this case, the first one listed or the 1.6 TB disk) and select DONE in the upper right corner.

If the server has already been installed, a red banner at the bottom of the page may indicate there is an error condition. Selecting that banner causes a dialog to appear indicating there is not enough free space (which might mean an OS has already been installed). The dialog may show both 1.6 TB HDDs as claimed or just the one.

If only one HDD is displayed (or both 1.6 TB drives are selected), select the Reclaim space button. Another dialog appears. Select the Delete all button and then the Reclaim space button again. Select DONE to return to the INSTALLATION SUMMARY screen.

If the disk selection dialog appears (after selecting the red banner at the bottom of the page), this implies a full installation of the RMS has already been performed (usually because the procedure had to be restarted after it was successfully completed). In this case, select Modify Disk Selection. This returns to the disk selection page. Select both HDDs and select DONE. The red banner should now indicate the space must be reclaimed. The same steps to reclaim the space can be performed.

- Select DONE. This returns to the INSTALLATION SUMMARY page.

- At the INSTALLATION SUMMARY screen, select Begin Installation. The CONFIGURATION screen is displayed.

- At the CONFIGURATION screen, select ROOT PASSWORD.

Enter a root password appropriate for this installation. It is good practice to use a customer-provided secure password to reduce the risk of the host being compromised during installation.

- At the conclusion of the install, remove the USB and select Reboot to complete the install and boot to the OS on the host. At the end of the boot, the login prompt appears.
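If the host repeatedly fails to boot from the USB drive created in the first step, one possible cause is an incomplete or corrupted write. The following is a minimal sketch for comparing the written media against the ISO on a Linux notebook, assuming GNU `stat` and `cmp` are available. The function name `verify_written_image` is hypothetical. Because the USB device is normally larger than the ISO, only the first ISO-sized span of the device is compared.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of OCCNE): compare the first N bytes of
# the target (USB device or file) with the ISO, where N is the ISO size.
# Assumes GNU coreutils/diffutils (stat -c, cmp -n).
verify_written_image() {
    local iso="$1" target="$2" size
    size=$(stat -c %s "$iso") || return 2
    cmp -s -n "$size" "$iso" "$target"
}

# Demonstration with temporary files standing in for the ISO and the
# (larger) USB device.
iso=$(mktemp)
dev=$(mktemp)
printf 'ISO-DATA' > "$iso"
printf 'ISO-DATA-plus-unused-space' > "$dev"
if verify_written_image "$iso" "$dev"; then
    echo "image matches"
fi
rm -f "$iso" "$dev"
```

Against real media the call would take the same two arguments as the dd command, the ISO path and the USB device path, and reading the device typically requires root privileges.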

Install Additional Packages

The following additional packages are installed on the Installer Bootstrap Host:

- dnsmasq

- dhcp

- xinetd

- tftp-server

- dos2unix

- nfs-utils

To install the packages:

- Login with the root user and password configured above.

- Create the mount directory:

    $ mkdir /media/usb

- Insert the USB into an available USB port (usually the front USB port) of the Installer Bootstrap Host.

- Find and mount the USB partition.

Typically the USB device is enumerated as /dev/sda, but that is not always the case. Use the lsblk command to find the USB device. An example lsblk output is below. The capacity of the USB drive is expected to be approximately 30GiB, therefore the USB drive is enumerated as device /dev/sda in the example below:

    $ lsblk
    sdd 8:48 0 894.3G 0 disk
    sde 8:64 0 1.7T 0 disk
    sdc 8:32 0 894.3G 0 disk
    ├─sdc2 8:34 0 1G 0 part /boot
    ├─sdc3 8:35 0 893.1G 0 part
    │ ├─ol-swap 252:1 0 4G 0 lvm [SWAP]
    │ ├─ol-home 252:2 0 839.1G 0 lvm /home
    │ └─ol-root 252:0 0 50G 0 lvm /
    └─sdc1 8:33 0 200M 0 part /boot/efi
    sda 8:0 1 29.3G 0 disk
    ├─sda2 8:2 1 8.5M 0 part
    └─sda1 8:1 1 4.3G 0 part

The dmesg command also provides information about how the operating system enumerates devices. In the example below, the dmesg output indicates the USB drive is enumerated as device /dev/sda.

Note: The output is shortened here for display purposes.

    $ dmesg
    ...
    [8850.211757] usb-storage 2-6:1.0: USB Mass Storage device detected
    [8850.212078] scsi host1: usb-storage 2-6:1.0
    [8851.231690] scsi 1:0:0:0: Direct-Access SanDisk Cruzer Glide 1.00 PQ: 0 ANSI: 6
    [8851.232524] sd 1:0:0:0: Attached scsi generic sg0 type 0
    [8851.232978] sd 1:0:0:0: [sda] 61341696 512-byte logical blocks: (31.4 GB/29.3 GiB)
    [8851.234598] sd 1:0:0:0: [sda] Write Protect is off
    [8851.234600] sd 1:0:0:0: [sda] Mode Sense: 43 00 00 00
    [8851.234862] sd 1:0:0:0: [sda] Write cache: disabled, read cache: enabled, doesn't support DPO or FUA
    [8851.255300] sda: sda1 sda2
    ...

The USB device should contain at least two partitions. One is the boot partition and the other is the install media. The install media is the larger of the two partitions. To find information about the partitions, use the fdisk command to list the filesystems on the USB device. Use the device name discovered via the steps outlined above. In the examples above, the USB device is /dev/sda.

    $ fdisk -l /dev/sda
    Disk /dev/sda: 31.4 GB, 31406948352 bytes, 61341696 sectors
    Units = sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk label type: dos
    Disk identifier: 0x137202cf

    Device Boot Start End Blocks Id System
    /dev/sda1 * 0 8929279 4464640 0 Empty
    /dev/sda2 3076 20503 8714 ef EFI (FAT-12/16/32)

In the example output above, the /dev/sda2 partition is the EFI boot partition. Therefore the install media files are on /dev/sda1. Use the mount command to mount the install media file system. The same command without any options is used to verify the device is mounted to /media/usb.

    $ mount /dev/sda1 /media/usb
    $ mount
    ...
    /dev/sda1 on /media/usb type iso9660 (ro,relatime,nojoliet,check=s,map=n,blocksize=2048)

- Create a yum config file to install packages from local install media.

Create a repo file /etc/yum.repos.d/Media.repo with the following information:

    [ol7_base_media]
    name=Oracle Linux 7 Base Media
    baseurl=file:///media/usb
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-oracle
    gpgcheck=1
    enabled=1

- Disable the default public yum repo. This is done by renaming the current .repo file to end with something other than .repo. Adding .disabled to the end of the file name is standard.

Note: This can be left in this state as the Installer Bootstrap Host is re-paved in a later procedure.

    $ mv /etc/yum.repos.d/public-yum-ol7.repo /etc/yum.repos.d/public-yum-ol7.repo.disabled

- Use the yum repolist command to check the repository configuration.

The output of yum repolist should look like the example below. Verify there are no errors regarding unreachable yum repos.

    $ yum repolist
    Loaded plugins: langpacks, ulninfo
    repo id repo name status
    ol7_base_media Oracle Linux 7 Base Media 5,134
    repolist: 5,134

- Use yum to install the additional packages from the USB repo.

    $ yum install dnsmasq
    $ yum install dhcp
    $ yum install xinetd
    $ yum install tftp-server
    $ yum install dos2unix
    $ yum install nfs-utils

- Verify installation of dhcp, xinetd, and tftp-server.

Note: Currently dnsmasq is not being used. The verification of tftp makes sure the tftp file is included in the /etc/xinetd.d directory. Installation/Verification does not include actually starting any of the services. Service configuration/starting is performed in a later procedure.

    Verify dhcp is installed:
    -------------------------
    $ cd /etc/dhcp
    $ ls
    dhclient.d dhclient-exit-hooks.d dhcpd6.conf dhcpd.conf scripts

    Verify xinetd is installed:
    ---------------------------
    $ cd /etc/xinetd.d
    $ ls
    chargen-dgram chargen-stream daytime-dgram daytime-stream discard-dgram discard-stream echo-dgram echo-stream tcpmux-server time-dgram time-stream

    Verify tftp is installed:
    -------------------------
    $ cd /etc/xinetd.d
    $ ls
    chargen-dgram chargen-stream daytime-dgram daytime-stream discard-dgram discard-stream echo-dgram echo-stream tcpmux-server tftp time-dgram time-stream

- Unmount the USB and remove the USB from the host. The mount command can be used to verify the USB is no longer mounted to /media/usb.

    $ umount /media/usb
    $ mount

Verify that /dev/sda1 is no longer shown as mounted to /media/usb.
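The mount step in this procedure relies on the rule that the install media is the larger of the partitions on the USB device. The following is a minimal sketch of applying that rule mechanically to machine-readable lsblk output. The function name `largest_partition` is hypothetical, and the byte sizes in the demonstration are derived from the block counts in the fdisk example (4464640 and 8714 blocks of 1 KiB).

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of OCCNE): read "NAME SIZE TYPE" lines
# (sizes in bytes) and print the name of the largest entry whose TYPE
# is "part".
largest_partition() {
    awk '$3 == "part" && $2 + 0 > max { max = $2 + 0; name = $1 }
         END { if (name != "") print name }'
}

# Demonstration with sizes taken from the fdisk example in this section.
printf '%s\n' \
    'sda 31406948352 disk' \
    'sda1 4571791360 part' \
    'sda2 8923136 part' | largest_partition
```

On the Installer Bootstrap Host the input could come from `lsblk -nrbo NAME,SIZE,TYPE /dev/sda`, which prints the same three columns without a header and with sizes in bytes. The device name still has to be confirmed with lsblk or dmesg first, as described in the procedure.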

Configure the Installer Bootstrap Host BIOS

Introduction

These procedures define the steps necessary to set up the Legacy BIOS changes on the Bootstrap host using the KVM. Some of the procedures in this document require a reboot of the system and are indicated in the procedure.

Prerequisites

Procedure OCCNE Installation of Oracle Linux 7.5 on Bootstrap Host is complete.

Limitations and Expectations

- Applies to HP Gen10 iLO 5 only.

- The procedures listed here apply to the Bootstrap host only.

Steps to Configure the Installer Bootstrap Host BIOS

- Expose the System Configuration Utility: This step details how to expose the HP iLO 5 System Configuration Utility main page from the KVM. It does not provide instructions on how to connect the console as these may be different on each installation.

- After making the proper connections for the KVM on the back of the Bootstrap host to have access to the console, the user should reboot the host by momentarily pressing the power button on the front of the Bootstrap host.

- Expose the HP Proliant DL380 Gen10 System Utilities.

Once the remote console has been exposed, the system must be reset to force it through the restart process. When the initial window is displayed, hit the F9 key repeatedly. Once the F9 is highlighted at the lower left corner of the remote console, it should eventually bring up the main System Utility.

- The System Utilities screen is exposed in the remote console.

- Change over from UEFI Booting Mode to Legacy BIOS Booting Mode: If the System Utility defaults the booting mode to UEFI, or the mode has been changed to UEFI, it is necessary to switch the booting mode to Legacy.

- Expose the System Configuration Utility by following Step 1.

- Select System Configuration.

- Select BIOS/Platform Configuration (RBSU).

- Select Boot Options.

If the Boot Mode is set to UEFI Mode then this procedure should be used to change it to Legacy BIOS Mode.

Note: The server reset must go through an attempt to boot before the changes will actually apply.

- The user is prompted to select the Reboot Required popup dialog. This will drop back into the boot process. The boot must go into the process of actually attempting to boot from the boot order. This should fail since the disks have not been installed at this point. The System Utility can be accessed again.

- After the reboot and the user re-enters the System Utility, the Boot Options page should appear.

- Select F10: Save if it is desired to save and stay in the utility, or select F12: Save and Exit if it is desired to save and exit to complete the current boot process.

- Adding a New User Account: This step provides the steps required to add a new user account to the server iLO 5 interface.

Note:

This user must match the pxe_install_lights_out_usr fields as provided in the hosts inventory files created using the template: OCCNE Inventory File Preparation.

- Expose the System Utility by following Step 1.

- Select System Configuration.

- Select iLO 5 Configuration Utility.

- Select User Management → Add User.

- Select the appropriate permissions. For the root user set all permissions to YES. Enter root in the New User Name and Login Name fields, and enter <password> in the Password field.

- Select F10: Save to save and stay in the utility or select the F12: Save and Exit to save and exit, to complete the current boot process.

- Force PXE to boot from the first Embedded FlexibleLOM HPE Ethernet 10Gb 2-port Adapter. During host PXE, the DHCP DISCOVER requests from the hosts must be broadcast over the 10Gb port. This step provides the steps necessary to configure the broadcast to use the 10Gb ports before it attempts to use the 1Gb ports. Moving the 10Gb port up on the search order helps to speed up the response from the host servicing the DHCP DISCOVER. Enclosure blades have 2 10GE NICs which default to being configured for PXE booting. The RMS are re-configured to use the PCI NICs using this step.

- Expose the System Utility by following Step 1.

- Select System Configuration.

- Select BIOS/Platform Configuration (RBSU).

- Select Boot Options.

This menu defines the boot mode which should be set to Legacy BIOS Mode, the UEFI Optimized Boot which should be disabled, and the Boot Order Policy which should be set to Retry Boot Order Indefinitely (this means it will keep trying to boot without ever going to disk). In this screen select Legacy BIOS Boot Order. If not in Legacy BIOS Mode, please follow procedure 2.2 Change over from UEFI Booting Mode to Legacy BIOS Booting Mode to set the Configuration Utility to Legacy BIOS Mode.

- Select Legacy BIOS Boot Order

This page defines the legacy BIOS boot order. This includes the list of devices from which the server will listen for the DHCP OFFER (includes the reserved IPv4) after the PXE DHCP DISCOVER message is broadcast out from the server.

In the default view, the 10Gb Embedded FlexibleLOM 1 Port 1 is at the bottom of the list. When the server begins the scan for the response, it scans down this list until it receives the response. Each NIC will take a finite amount of time before the server gives up on that NIC and attempts another in the list. Moving the 10Gb port up on this list should decrease the time that is required to finally process the DHCP OFFER.

To move an entry, select that entry, hold down the first mouse button and move the entry up in the list below the entry it must reside under.

- Move the 10 Gb Embedded FlexibleLOM 1 Port 1 entry up above the 1Gb Embedded LOM 1 Port 1 entry.

- Select F10: Save to save and stay in the utility or select the F12: Save and Exit to save and exit, to complete the current boot process.

- Enabling Virtualization: This step provides the steps required to enable virtualization on a given Bare Metal Server. Virtualization can be configured using the default settings or via the Workload Profiles.

- Verifying Default Settings

- Expose the System Configuration Utility by following Step 1.

- Select System Configuration.

- Select BIOS/Platform Configuration (RBSU).

- Select Virtualization Options.

This screen displays the settings for the Intel(R) Virtualization Technology (IntelVT), Intel(R) VT-d, and SR-IOV options (Enabled or Disabled). The default value for each option is Enabled.

- Select F10: Save to save and stay in the utility or select the F12: Save and Exit to save and exit, to complete the current boot process.

- Disable RAID Configurations:

- Expose the System Configuration Utility by following Step 1.

- Select System Configuration.

- Select Embedded RAID 1 : HPE Smart Array P408i-a SR Gen 10.

- Select Array Configuration.

- Select Manage Arrays.

- Select Array A (or any designated Array Configuration if there are more than one).

- Select Delete Array.

- Select Submit Changes.

- Select F10: Save to save and stay in the utility or select the F12: Save and Exit to save and exit, to complete the current boot process.

- Enable the Primary Boot Device: This step provides the steps necessary to configure the primary bootable device for a given Gen10 Server. In this case the RMS would include two devices as Hard Drives (HDDs). Some configurations may also include two Solid State Drives (SSDs). The SSDs are not to be selected for this configuration. Only the primary bootable device is set in this procedure since RAID is being disabled. The secondary bootable device remains as Not Set.

- Expose the System Configuration Utility by following Step 1.

- Select System Configuration.

- Select Embedded RAID 1 : HPE Smart Array P408i-a SR Gen 10.

- Select Set Bootable Device(s) for Legacy Boot Mode. If the boot devices are not set then it will display Not Set for the primary and secondary devices.

- Select Select Bootable Physical Drive.

- Select Port 1| Box:3 Bay:1 Size:1.8 TB SAS HP EG00100JWJNR.

Note: This example includes two HDDs and two SSDs. The actual configuration may be different.

- Select Set as Primary Bootable Device.

- Select Back to Main Menu.

This will return to the HPE Smart Array P408i-a SR Gen10 menu. The secondary bootable device is left as Not Set.

- Select F10: Save to save and stay in the utility or select the F12: Save and Exit to save and exit, to complete the current boot process.

- Configure the iLO 5 Static IP Address: When configuring the Bootstrap host, the static IP address for the iLO 5 must be configured.

Note:

This step requires a reboot after completion.

- Expose the System Configuration Utility by following Step 1.

- Select System Configuration.

- Select iLO 5 Configuration Utility.

- Select Network Options.

- Enter the IP Address, Subnet Mask, and Gateway IP Address fields provided in Installation PreFlight Checklist.

- Select F12: Save and Exit to complete the current boot process. A reboot is required when setting the static IP for the iLO 5. A warning appears indicating that the user must wait 30 seconds for the iLO to reset and then a reboot is required. A prompt appears requesting a reboot. Select Reboot.

- Once the reboot is complete, the user can re-enter the System Utility and verify the settings if necessary.

Configure Top of Rack 93180YC-EX Switches

Introduction

This procedure provides the steps required to initialize and configure Cisco 93180YC-EX switches as per the topology defined in Physical Network Topology Design.

Note:

All instructions in this procedure are executed from the Bootstrap Host.

Prerequisites

- Procedure OCCNE Installation of Oracle Linux 7.5 on Bootstrap Host has been completed.

- The switches are in factory default state.

- The switches are connected as per Installation PreFlight Checklist. Customer uplinks remain inactive until outside traffic is necessary.

- DHCP, XINETD, and TFTP are already installed on the Bootstrap host but are not configured.

- The Utility USB is available containing the necessary files as per: Installation PreFlight checklist: Create Utility USB.

Limitations/Expectations

All steps are executed from a Keyboard, Video, Mouse (KVM) connection.

References

Configuration Procedure

Following is the procedure to configure Top of Rack 93180YC-EX Switches:

- Login to the Bootstrap host as root.

Note:

All instructions in this step are executed from the Bootstrap Host.

- Insert and mount the Utility USB that contains the configuration and script files. Verify the files are listed in the USB using the ls /media/usb command.

Note:

Instructions for mounting the USB can be found in: Installation of Oracle Linux 7.5 on Bootstrap Server : Install Additional Packages. Only steps 2 and 3 need to be followed in that procedure.

- Create bridge interface: Create a bridge interface to connect both management ports and set up the management bridge to support switch initialization.

Note:

<CNE_Management_IP_Address_With_Prefix> is from Installation PreFlight Checklist : Complete Site Survey Host IP Table, Row 1, CNE Management IP Addresses (VLAN 4) column.

<ToRswitch_CNEManagementNet_VIP> is from Installation PreFlight Checklist : Complete OA and Switch IP Table.

$ nmcli con add con-name mgmtBridge type bridge ifname mgmtBridge
$ nmcli con add type bridge-slave ifname eno2 master mgmtBridge
$ nmcli con add type bridge-slave ifname eno3 master mgmtBridge
$ nmcli con mod mgmtBridge ipv4.method manual ipv4.addresses 192.168.2.11/24
$ nmcli con up mgmtBridge
$ nmcli con add type team con-name team0 ifname team0 team.runner lacp
$ nmcli con add type team-slave con-name team0-slave-1 ifname eno5 master team0
$ nmcli con add type team-slave con-name team0-slave-2 ifname eno6 master team0

The following commands set the VLAN and IP address for this bootstrap server. The <mgmt_vlan_id> is the same as in hosts.ini, and <bootstrap team0 address> is the same as the ansible_host IP for this bootstrap server:

$ nmcli con mod team0 ipv4.method manual ipv4.addresses <bootstrap team0 address>
$ nmcli con add con-name team0.<mgmt_vlan_id> type vlan id <mgmt_vlan_id> dev team0
$ nmcli con mod team0.<mgmt_vlan_id> ipv4.method manual ipv4.addresses <CNE_Management_IP_Address_With_Prefix> ipv4.gateway <ToRswitch_CNEManagementNet_VIP>
$ nmcli con up team0.<mgmt_vlan_id>

Example:

$ nmcli con mod team0 ipv4.method manual ipv4.addresses 172.16.3.4/24
$ nmcli con add con-name team0.4 type vlan id 4 dev team0
$ nmcli con mod team0.4 ipv4.method manual ipv4.addresses <CNE_Management_IP_Address_With_Prefix> ipv4.gateway <ToRswitch_CNEManagementNet_VIP>
$ nmcli con up team0.4

- Edit the /etc/xinetd.d/tftp file to enable TFTP service. Change the disable option to no, if it is set to yes.

$ vi /etc/xinetd.d/tftp
# default: off
# description: The tftp server serves files using the trivial file transfer \
#       protocol. The tftp protocol is often used to boot diskless \
#       workstations, download configuration files to network-aware printers, \
#       and to start the installation process for some operating systems.
service tftp
{
        socket_type = dgram
        protocol = udp
        wait = yes
        user = root
        server = /usr/sbin/in.tftpd
        server_args = -s /var/lib/tftpboot
        disable = no
        per_source = 11
        cps = 100 2
        flags = IPv4
}

- Enable tftp on the Bootstrap host:

$ systemctl start tftp
$ systemctl enable tftp

Verify tftp is active and enabled:

$ systemctl status tftp
$ ps -elf | grep tftp

- Copy the dhcpd.conf file from the Utility USB in Installation PreFlight checklist : Create the dhcpd.conf File to the /etc/dhcp/ directory.

$ cp /media/usb/dhcpd.conf /etc/dhcp/

- Restart and enable dhcpd service.

# /bin/systemctl restart dhcpd
# /bin/systemctl enable dhcpd

Use the systemctl status dhcpd command to verify active and enabled.

# systemctl status dhcpd

- Copy the switch configuration and script files from the Utility USB to directory /var/lib/tftpboot/.

$ cp /media/usb/93180_switchA.cfg /var/lib/tftpboot/.
$ cp /media/usb/93180_switchB.cfg /var/lib/tftpboot/.
$ cp /media/usb/poap_nexus_script.py /var/lib/tftpboot/.

- Modify POAP script file. Change the username and password credentials used to log in to the Bootstrap host.

# vi /var/lib/tftpboot/poap_nexus_script.py
# Host name and user credentials
options = {
   "username": "<username>",
   "password": "<password>",
   "hostname": "192.168.2.11",
   "transfer_protocol": "scp",
   "mode": "serial_number",
   "target_system_image": "nxos.9.2.3.bin",
}

Note: The version nxos.9.2.3.bin is used by default. If a different version is to be used, modify the "target_system_image" with the new version.

- Modify POAP script file md5sum by executing the md5Poap.sh script from the Utility USB created from Installation PreFlight checklist: Create the md5Poap Bash Script.

# cd /var/lib/tftpboot/
# /bin/bash md5Poap.sh

- Create the files necessary to configure the ToR switches using the serial number from the switch.

Note:

The serial number is located on a pullout card on the back of the switch in the left most power supply of the switch. Be careful in interpreting the exact letters. If the switches are preconfigured, the serial numbers can also be verified using the 'show license host-id' command.

- Copy the /var/lib/tftpboot/93180_switchA.cfg into a file called /var/lib/tftpboot/conf.<switchA serial number>. Modify the switch specific values in the /var/lib/tftpboot/conf.<switchA serial number> file, including all the values in curly braces shown in the following commands.

These values are contained at Installation PreFlight checklist : ToR and Enclosure Switches Variables Table (Switch Specific) and Installation PreFlight Checklist : Complete OA and Switch IP Table. Modify these values with the following sed commands, or use an editor such as vi.

# sed -i 's/{switchname}/<switch_name>/' conf.<switchA serial number>
# sed -i 's/{admin_password}/<admin_password>/' conf.<switchA serial number>
# sed -i 's/{user_name}/<user_name>/' conf.<switchA serial number>
# sed -i 's/{user_password}/<user_password>/' conf.<switchA serial number>
# sed -i 's/{ospf_md5_key}/<ospf_md5_key>/' conf.<switchA serial number>
# sed -i 's/{OSPF_AREA_ID}/<ospf_area_id>/' conf.<switchA serial number>
# sed -i 's/{NTPSERVER1}/<NTP_server_1>/' conf.<switchA serial number>
# sed -i 's/{NTPSERVER2}/<NTP_server_2>/' conf.<switchA serial number>
# sed -i 's/{NTPSERVER3}/<NTP_server_3>/' conf.<switchA serial number>
# sed -i 's/{NTPSERVER4}/<NTP_server_4>/' conf.<switchA serial number>
# sed -i 's/{NTPSERVER5}/<NTP_server_5>/' conf.<switchA serial number>

Note: If fewer than 5 NTP servers are available, delete the extra NTP server lines, for example:

# sed -i '/{NTPSERVER5}/d' conf.<switchA serial number>

Note: A different delimiter is used in the following commands because the values may contain the '/' character.

# sed -i 's#{ALLOW_5G_XSI_LIST_WITH_PREFIX_LEN}#<MetalLB_Signal_Subnet_With_Prefix>#g' conf.<switchA serial number>
# sed -i 's#{CNE_Management_SwA_Address}#<ToRswitchA_CNEManagementNet_IP>#g' conf.<switchA serial number>
# sed -i 's#{CNE_Management_SwB_Address}#<ToRswitchB_CNEManagementNet_IP>#g' conf.<switchA serial number>
# sed -i 's#{CNE_Management_Prefix}#<CNEManagementNet_Prefix>#g' conf.<switchA serial number>
# sed -i 's#{SQL_replication_SwA_Address}#<ToRswitchA_SQLreplicationNet_IP>#g' conf.<switchA serial number>
# sed -i 's#{SQL_replication_SwB_Address}#<ToRswitchB_SQLreplicationNet_IP>#g' conf.<switchA serial number>
# sed -i 's#{SQL_replication_Prefix}#<SQLreplicationNet_Prefix>#g' conf.<switchA serial number>
# ipcalc -n <ToRswitchA_SQLreplicationNet_IP>/<SQLreplicationNet_Prefix> | awk -F'=' '{print $2}'
# sed -i 's/{SQL_replication_Subnet}/<output from ipcalc command as SQL_replication_Subnet>/' conf.<switchA serial number>
# sed -i 's/{CNE_Management_VIP}/<ToRswitch_CNEManagementNet_VIP>/g' conf.<switchA serial number>
# sed -i 's/{SQL_replication_VIP}/<ToRswitch_SQLreplicationNet_VIP>/g' conf.<switchA serial number>
# sed -i 's/{OAM_UPLINK_CUSTOMER_ADDRESS}/<ToRswitchA_oam_uplink_customer_IP>/' conf.<switchA serial number>
# sed -i 's/{OAM_UPLINK_SwA_ADDRESS}/<ToRswitchA_oam_uplink_IP>/g' conf.<switchA serial number>
# sed -i 's/{SIGNAL_UPLINK_SwA_ADDRESS}/<ToRswitchA_signaling_uplink_IP>/g' conf.<switchA serial number>
# sed -i 's/{OAM_UPLINK_SwB_ADDRESS}/<ToRswitchB_oam_uplink_IP>/g' conf.<switchA serial number>
# sed -i 's/{SIGNAL_UPLINK_SwB_ADDRESS}/<ToRswitchB_signaling_uplink_IP>/g' conf.<switchA serial number>
# ipcalc -n <ToRswitchA_signaling_uplink_IP>/30 | awk -F'=' '{print $2}'
# sed -i 's/{SIGNAL_UPLINK_SUBNET}/<output from ipcalc command as signal_uplink_subnet>/' conf.<switchA serial number>
# ipcalc -n <ToRswitchA_SQLreplicationNet_IP>/<SQLreplicationNet_Prefix> | awk -F'=' '{print $2}'
# sed -i 's#{MySQL_Replication_SUBNET}#<output from the above ipcalc command appended with prefix>#' conf.<switchA serial number>

Note: The version nxos.9.2.3.bin is used by default and hard-coded in the conf files. If a different version is to be used, run the following command:

# sed -i 's/nxos.9.2.3.bin/<nxos_version>/' conf.<switchA serial number>

Note: access-list Restrict_Access_ToR. The following line allows one access server to access the switch management and SQL VLAN addresses while other accesses are denied. If it is not needed, delete this line. If more servers are needed, add similar lines.

# sed -i 's/{Allow_Access_Server}/<Allow_Access_Server>/' conf.<switchA serial number>

- Copy the /var/lib/tftpboot/93180_switchB.cfg into a file called /var/lib/tftpboot/conf.<switchB serial number>.

Modify the switch specific values in the /var/lib/tftpboot/conf.<switchB serial number> file, including: hostname, username/password, oam_uplink IP address, signaling_uplink IP address, access-list ALLOW_5G_XSI_LIST permit address, prefix-list ALLOW_5G_XSI.

These values are contained at Installation PreFlight checklist : ToR and Enclosure Switches Variables Table and Installation PreFlight Checklist : Complete OA and Switch IP Table.

# sed -i 's/{switchname}/<switch_name>/' conf.<switchB serial number>
# sed -i 's/{admin_password}/<admin_password>/' conf.<switchB serial number>
# sed -i 's/{user_name}/<user_name>/' conf.<switchB serial number>
# sed -i 's/{user_password}/<user_password>/' conf.<switchB serial number>
# sed -i 's/{ospf_md5_key}/<ospf_md5_key>/' conf.<switchB serial number>
# sed -i 's/{OSPF_AREA_ID}/<ospf_area_id>/' conf.<switchB serial number>
# sed -i 's/{NTPSERVER1}/<NTP_server_1>/' conf.<switchB serial number>
# sed -i 's/{NTPSERVER2}/<NTP_server_2>/' conf.<switchB serial number>
# sed -i 's/{NTPSERVER3}/<NTP_server_3>/' conf.<switchB serial number>
# sed -i 's/{NTPSERVER4}/<NTP_server_4>/' conf.<switchB serial number>
# sed -i 's/{NTPSERVER5}/<NTP_server_5>/' conf.<switchB serial number>

Note: If fewer than 5 NTP servers are available, delete the extra NTP server lines, for example:

# sed -i '/{NTPSERVER5}/d' conf.<switchB serial number>

Note: A different delimiter is used in the following commands because the values may contain the '/' character.

# sed -i 's#{ALLOW_5G_XSI_LIST_WITH_PREFIX_LEN}#<MetalLB_Signal_Subnet_With_Prefix>#g' conf.<switchB serial number>
# sed -i 's#{CNE_Management_SwA_Address}#<ToRswitchA_CNEManagementNet_IP>#g' conf.<switchB serial number>
# sed -i 's#{CNE_Management_SwB_Address}#<ToRswitchB_CNEManagementNet_IP>#g' conf.<switchB serial number>
# sed -i 's#{CNE_Management_Prefix}#<CNEManagementNet_Prefix>#g' conf.<switchB serial number>
# sed -i 's#{SQL_replication_SwA_Address}#<ToRswitchA_SQLreplicationNet_IP>#g' conf.<switchB serial number>
# sed -i 's#{SQL_replication_SwB_Address}#<ToRswitchB_SQLreplicationNet_IP>#g' conf.<switchB serial number>
# sed -i 's#{SQL_replication_Prefix}#<SQLreplicationNet_Prefix>#g' conf.<switchB serial number>
# ipcalc -n <ToRswitchB_SQLreplicationNet_IP>/<SQLreplicationNet_Prefix> | awk -F'=' '{print $2}'
# sed -i 's/{SQL_replication_Subnet}/<output from ipcalc command as SQL_replication_Subnet>/' conf.<switchB serial number>
# sed -i 's/{CNE_Management_VIP}/<ToRswitch_CNEManagementNet_VIP>/' conf.<switchB serial number>
# sed -i 's/{SQL_replication_VIP}/<ToRswitch_SQLreplicationNet_VIP>/' conf.<switchB serial number>
# sed -i 's/{OAM_UPLINK_CUSTOMER_ADDRESS}/<ToRswitchB_oam_uplink_customer_IP>/' conf.<switchB serial number>
# sed -i 's/{OAM_UPLINK_SwA_ADDRESS}/<ToRswitchA_oam_uplink_IP>/g' conf.<switchB serial number>
# sed -i 's/{SIGNAL_UPLINK_SwA_ADDRESS}/<ToRswitchA_signaling_uplink_IP>/g' conf.<switchB serial number>
# sed -i 's/{OAM_UPLINK_SwB_ADDRESS}/<ToRswitchB_oam_uplink_IP>/g' conf.<switchB serial number>
# sed -i 's/{SIGNAL_UPLINK_SwB_ADDRESS}/<ToRswitchB_signaling_uplink_IP>/g' conf.<switchB serial number>
# ipcalc -n <ToRswitchB_signaling_uplink_IP>/30 | awk -F'=' '{print $2}'
# sed -i 's/{SIGNAL_UPLINK_SUBNET}/<output from ipcalc command as signal_uplink_subnet>/' conf.<switchB serial number>

Note: The version nxos.9.2.3.bin is used by default and hard-coded in the conf files. If a different version is to be used, run the following command:

# sed -i 's/nxos.9.2.3.bin/<nxos_version>/' conf.<switchB serial number>

Note: access-list Restrict_Access_ToR. The following line allows one access server to access the switch management and SQL VLAN addresses while other accesses are denied. If it is not needed, delete this line. If more servers are needed, add similar lines.

# sed -i 's/{Allow_Access_Server}/<Allow_Access_Server>/' conf.<switchB serial number>

- Generate the md5 checksum for each conf file in /var/lib/tftpboot and copy that into a new file called conf.<switchA/B serial number>.md5.

$ md5sum conf.<switchA serial number> > conf.<switchA serial number>.md5
$ md5sum conf.<switchB serial number> > conf.<switchB serial number>.md5

- Verify the /var/lib/tftpboot directory has the correct files. Make sure the file permissions are set as given below.

Note: The ToR switches are constantly attempting to find and execute the poap_nexus_script.py script which uses tftp to load and install the configuration files.

# ls -l /var/lib/tftpboot/
total 1305096
-rw-r--r--. 1 root root 7161 Mar 25 15:31 conf.<switchA serial number>
-rw-r--r--. 1 root root 51 Mar 25 15:31 conf.<switchA serial number>.md5
-rw-r--r--. 1 root root 7161 Mar 25 15:31 conf.<switchB serial number>
-rw-r--r--. 1 root root 51 Mar 25 15:31 conf.<switchB serial number>.md5
-rwxr-xr-x. 1 root root 75856 Mar 25 15:32 poap_nexus_script.py

- Disable firewalld.

$ systemctl stop firewalld
$ systemctl disable firewalld

To verify:

$ systemctl status firewalld

Once this is complete, the ToR switches will attempt to boot from the tftpboot files automatically. This may take about 5 minutes, after which the verification steps below can be executed.

- Un-mount the Utility USB and remove it:

umount /media/usb
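
Before the switches fetch the files, the generated conf files can be sanity-checked from the Bootstrap host. The following is a minimal shell sketch, not part of the OCCNE tooling: the function names unresolved_placeholders and check_conf_md5 are hypothetical, and the sketch assumes that every value still to be substituted appears as a {name} token and that each .md5 file was produced with md5sum from inside the same directory, as in the procedure above.

```shell
# List any {placeholder} tokens that the sed commands did not replace.
# Prints one unique token per line; prints nothing if the file is clean.
unresolved_placeholders() {
    grep -o '{[A-Za-z0-9_]*}' "$1" | sort -u
}

# Return 0 if <conf file>.md5 still matches <conf file>, non-zero otherwise.
# md5sum -c resolves the file name stored in the .md5 file relative to the
# current directory, so the check runs from the directory holding the file.
check_conf_md5() {
    ( cd "$(dirname "$1")" && md5sum -c --status "$(basename "$1").md5" )
}

# Example usage (serial numbers are site specific):
#   unresolved_placeholders /var/lib/tftpboot/conf.<switchA serial number>
#   check_conf_md5 /var/lib/tftpboot/conf.<switchA serial number>
```

If a conf file is edited after its checksum was generated, check_conf_md5 fails and the md5sum command from the procedure should be run again.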

Verification

- After the ToR switches are configured, ping the switches from the bootstrap server. The switches' mgmt0 interfaces are configured with the IP addresses that are in the conf files. Note: Wait until the device responds.

# ping 192.168.2.1
PING 192.168.2.1 (192.168.2.1) 56(84) bytes of data.
64 bytes from 192.168.2.1: icmp_seq=1 ttl=255 time=0.419 ms
64 bytes from 192.168.2.1: icmp_seq=2 ttl=255 time=0.496 ms
64 bytes from 192.168.2.1: icmp_seq=3 ttl=255 time=0.573 ms
64 bytes from 192.168.2.1: icmp_seq=4 ttl=255 time=0.535 ms
^C
--- 192.168.2.1 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 3000ms
rtt min/avg/max/mdev = 0.419/0.505/0.573/0.063 ms
# ping 192.168.2.2
PING 192.168.2.2 (192.168.2.2) 56(84) bytes of data.
64 bytes from 192.168.2.2: icmp_seq=1 ttl=255 time=0.572 ms
64 bytes from 192.168.2.2: icmp_seq=2 ttl=255 time=0.582 ms
64 bytes from 192.168.2.2: icmp_seq=3 ttl=255 time=0.466 ms
64 bytes from 192.168.2.2: icmp_seq=4 ttl=255 time=0.554 ms
^C
--- 192.168.2.2 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 3001ms
rtt min/avg/max/mdev = 0.466/0.543/0.582/0.051 ms

- Attempt to ssh to the switches with the username/password provided in the conf files.

# ssh plat@192.168.2.1
The authenticity of host '192.168.2.1 (192.168.2.1)' can't be established.
RSA key fingerprint is SHA256:jEPSMHRNg9vejiLcEvw5qprjgt+4ua9jucUBhktH520.
RSA key fingerprint is MD5:02:66:3a:c6:81:65:20:2c:6e:cb:08:35:06:c6:72:ac.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added '192.168.2.1' (RSA) to the list of known hosts.
User Access Verification
Password:
Cisco Nexus Operating System (NX-OS) Software
TAC support: http://www.cisco.com/tac
Copyright (C) 2002-2019, Cisco and/or its affiliates.
All rights reserved.
The copyrights to certain works contained in this software are
owned by other third parties and used and distributed under their own
licenses, such as open source. This software is provided "as is," and unless
otherwise stated, there is no warranty, express or implied, including but not
limited to warranties of merchantability and fitness for a particular purpose.
Certain components of this software are licensed under
the GNU General Public License (GPL) version 2.0 or
GNU General Public License (GPL) version 3.0 or the GNU
Lesser General Public License (LGPL) Version 2.1 or
Lesser General Public License (LGPL) Version 2.0.
A copy of each such license is available at
http://www.opensource.org/licenses/gpl-2.0.php and
http://opensource.org/licenses/gpl-3.0.html and
http://www.opensource.org/licenses/lgpl-2.1.php and
http://www.gnu.org/licenses/old-licenses/library.txt.
#

- Verify the running-config has all expected configurations in the conf file using the show running-config command.

# show running-config
!Command: show running-config
!Running configuration last done at: Mon Apr 8 17:39:38 2019
!Time: Mon Apr 8 18:30:17 2019
version 9.2(3) Bios:version 07.64
hostname 12006-93108A
vdc 12006-93108A id 1
  limit-resource vlan minimum 16 maximum 4094
  limit-resource vrf minimum 2 maximum 4096
  limit-resource port-channel minimum 0 maximum 511
  limit-resource u4route-mem minimum 248 maximum 248
  limit-resource u6route-mem minimum 96 maximum 96
  limit-resource m4route-mem minimum 58 maximum 58
  limit-resource m6route-mem minimum 8 maximum 8
feature scp-server
feature sftp-server
cfs eth distribute
feature ospf
feature bgp
feature interface-vlan
feature lacp
feature vpc
feature bfd
feature vrrpv3
....
....

- Verify license on the switches. If some of the above features are missing, verify the license on the switches and confirm that at least an NXOS_ADVANTAGE level license is "In use". If the license is not installed or its level is too low, contact the vendor for the correct license key file and follow the Licensing document mentioned in the reference section to install the license key. Then run "write erase" and "reload" to set the switch back to factory default. The switches will go through POAP configuration again.

# show license

Example output:

# show license
MDS20190215085542979.lic:
SERVER this_host ANY
VENDOR cisco
INCREMENT NXOS_ADVANTAGE_XF cisco 1.0 permanent uncounted \
VENDOR_STRING=<LIC_SOURCE>MDS_SWIFT</LIC_SOURCE><SKU>NXOS-AD-XF</SKU> \
HOSTID=VDH=FDO22412J2F \
NOTICE="<LicFileID>20190215085542979</LicFileID><LicLineID>1</LicLineID> \
<PAK></PAK>" SIGN=8CC8807E6918

# show license usage

Example output:

# show license usage
Feature                      Ins  Lic   Status Expiry Date Comments
                                  Count
--------------------------------------------------------------------------------
...
NXOS_ADVANTAGE_M4             No    -   Unused             -
NXOS_ADVANTAGE_XF             Yes   -   In use never       -
NXOS_ESSENTIALS_GF            No    -   Unused             -
...
#

- Verify the RMS1 can ping the CNE_Management VIP.

# ping <ToRSwitch_CNEManagementNet_VIP>
PING <ToRSwitch_CNEManagementNet_VIP> (<ToRSwitch_CNEManagementNet_VIP>) 56(84) bytes of data.
64 bytes from <ToRSwitch_CNEManagementNet_VIP>: icmp_seq=2 ttl=255 time=1.15 ms
64 bytes from <ToRSwitch_CNEManagementNet_VIP>: icmp_seq=3 ttl=255 time=1.11 ms
64 bytes from <ToRSwitch_CNEManagementNet_VIP>: icmp_seq=4 ttl=255 time=1.23 ms
^C
--- <ToRSwitch_CNEManagementNet_VIP> ping statistics ---
4 packets transmitted, 3 received, 25% packet loss, time 3019ms
rtt min/avg/max/mdev = 1.115/1.168/1.237/0.051 ms

- Enable customer uplink.

- Verify the RMS1 can be accessed from a laptop. Use an application such as PuTTY to ssh to RMS1.

$ ssh root@<CNE_Management_IP_Address>
Using username "root".
root@<CNE_Management_IP_Address>'s password:<root password>
Last login: Mon May 6 10:02:01 2019 from 10.75.9.171
[root@RMS1 ~]#
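
Because the switches can take several minutes to finish POAP, the "wait until the device responds" part of the ping check can be scripted rather than repeated by hand. The following is a minimal shell sketch, assuming a generic helper named retry (hypothetical, not part of OCCNE) that re-runs any command until it succeeds or a maximum number of attempts is reached.

```shell
# Run a command repeatedly until it succeeds or the attempts run out.
# Usage: retry <max_attempts> <delay_seconds> <command> [args...]
# Returns 0 as soon as the command succeeds, 1 if every attempt failed.
retry() {
    local max delay attempt
    max=$1
    delay=$2
    shift 2
    attempt=1
    while ! "$@"; do
        if [ "$attempt" -ge "$max" ]; then
            return 1
        fi
        attempt=$((attempt + 1))
        sleep "$delay"
    done
    return 0
}

# Example usage with the mgmt0 addresses from the ping step above:
# 30 attempts, 10 seconds apart, one echo request per attempt.
#   retry 30 10 ping -c 1 -W 2 192.168.2.1
#   retry 30 10 ping -c 1 -W 2 192.168.2.2
```

The attempt count and delay are illustrative values chosen to cover roughly the 5 minutes mentioned in the configuration procedure; adjust them to the site.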

SNMP Trap Configuration

- SNMPv2c Configuration.

When SNMPv2c configuration is needed, ssh to the two switches and run the following commands.

The values <SNMP_Trap_Receiver_Address> and <SNMP_Community_String> are from Installation Preflight Checklist.

[root@RMS1 ~]# ssh <user_name>@<ToRswitchA_CNEManagementNet_IP>
# configure terminal
(config)# snmp-server host <SNMP_Trap_Receiver_Address> traps version 2c <SNMP_Community_String>
(config)# snmp-server host <SNMP_Trap_Receiver_Address> use-vrf default
(config)# snmp-server host <SNMP_Trap_Receiver_Address> source-interface Ethernet1/51
(config)# snmp-server enable traps
(config)# snmp-server community <SNMP_Community_String> group network-admin

- Restrict direct access to ToR switches. To restrict direct access to the ToR switches, an IP access list is created and applied on the uplink interfaces. The following commands are needed on the ToR switches:

[root@RMS1 ~]# ssh <user_name>@<ToRswitchA_CNEManagementNet_IP>
# configure terminal
(config)# ip access-list Restrict_Access_ToR
permit ip {Allow_Access_Server}/32 any
permit ip {NTPSERVER1}/32 {OAM_UPLINK_SwA_ADDRESS}/32
permit ip {NTPSERVER2}/32 {OAM_UPLINK_SwA_ADDRESS}/32
permit ip {NTPSERVER3}/32 {OAM_UPLINK_SwA_ADDRESS}/32
permit ip {NTPSERVER4}/32 {OAM_UPLINK_SwA_ADDRESS}/32
permit ip {NTPSERVER5}/32 {OAM_UPLINK_SwA_ADDRESS}/32
deny ip any {CNE_Management_VIP}/32
deny ip any {CNE_Management_SwA_Address}/32
deny ip any {CNE_Management_SwB_Address}/32
deny ip any {SQL_replication_VIP}/32
deny ip any {SQL_replication_SwA_Address}/32
deny ip any {SQL_replication_SwB_Address}/32
deny ip any {OAM_UPLINK_SwA_ADDRESS}/32
deny ip any {OAM_UPLINK_SwB_ADDRESS}/32
deny ip any {SIGNAL_UPLINK_SwA_ADDRESS}/32
deny ip any {SIGNAL_UPLINK_SwB_ADDRESS}/32
permit ip any any
interface Ethernet1/51
ip access-group Restrict_Access_ToR in
interface Ethernet1/52
ip access-group Restrict_Access_ToR in

- Configure traffic egress out of the cluster, including snmptrap traffic to the SNMP trap receiver and traffic going to the signal server:

[root@RMS1 ~]# ssh <user_name>@<ToRswitchA_CNEManagementNet_IP>
# configure terminal
(config)# feature nat
ip access-list host-snmptrap
10 permit udp 172.16.3.0/24 <snmp trap receiver>/32 eq snmptrap log
ip access-list host-sigserver
10 permit ip 172.16.3.0/24 <signal server>/32
ip nat pool sig-pool 10.75.207.211 10.75.207.222 prefix-length 27
ip nat inside source list host-sigserver pool sig-pool overload add-route
ip nat inside source list host-snmptrap interface Ethernet1/51 overload
interface Vlan3
ip nat inside
interface Ethernet1/51
ip nat outside
interface Ethernet1/52
ip nat outside

Run the same commands on ToR switchB.
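
The NAT pool in the example above is declared with prefix-length 27, so a quick cross-check is that both pool boundaries fall in the same /27 network. The following is a minimal shell sketch of that arithmetic, which is also the calculation that ipcalc -n performs in the switch configuration procedure. The function names ip_to_int, int_to_ip and network_of are hypothetical helpers, not part of OCCNE or NX-OS, and the sketch assumes plain dotted-quad input without leading zeros.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<EOF
$1
EOF
    echo $(( ($a << 24) | ($b << 16) | ($c << 8) | $d ))
}

# Convert a 32-bit integer back to dotted-quad notation.
int_to_ip() {
    echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Print the network address for <ip> <prefix_length>.
network_of() {
    local ip mask
    ip=$(ip_to_int "$1")
    # Build the netmask by keeping the top <prefix_length> bits of 32.
    mask=$(( (0xFFFFFFFF << (32 - $2)) & 0xFFFFFFFF ))
    int_to_ip $(( $ip & $mask ))
}

# Example usage with the pool boundaries from the NAT example:
#   network_of 10.75.207.211 27
#   network_of 10.75.207.222 27
```

Both calls print 10.75.207.192, so the example pool lies inside a single /27. The addresses are the sample values from this document; substitute the site's own pool.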

Configure Addresses for RMS iLOs, OA, EBIPA

Introduction

This procedure is used to configure RMS iLO addresses and add a new user account for each RMS other than the Bootstrap Host. When the RMSs are shipped and out of box after hardware installation and powerup, the RMSs are in a factory default state with the iLO in DHCP mode waiting for DHCP service. DHCP is used to configure the ToR switches, OAs, Enclosure switches, and blade server iLOs, so DHCP can be used to configure RMS iLOs as well.

Prerequisites

Procedure Configure Top of Rack 93180YC-EX Switches has been completed.

Limitations/Expectations

All steps are executed from the ssh session of the Bootstrap server.

Procedure

Following is the procedure to configure Addresses for RMS iLOs, OA, EBIPA:

- Setup the vlan interface to access ilo subnet. The ilo_vlan_id and ilo_subnet_cidr are the same value as in hosts.ini:

$ nmcli con add con-name team0.<ilo_vlan_id> type vlan id <ilo_vlan_id> dev team0
$ nmcli con mod team0.<ilo_vlan_id> ipv4.method manual ipv4.addresses <unique ip in ilo subnet>/<ilo_subnet_cidr>
$ nmcli con up team0.<ilo_vlan_id>

Example:

$ nmcli con add con-name team0.2 type vlan id 2 dev team0
$ nmcli con mod team0.2 ipv4.method manual ipv4.addresses 192.168.20.11/24
$ nmcli con up team0.2

- Subnet and conf file address.

The /etc/dhcp/dhcpd.conf file should already have been configured in the Configure Top of Rack 93180YC-EX Switches procedure, and dhcpd started and enabled on the bootstrap server. The second subnet 192.168.20.0 is used to assign addresses for OA and RMS iLOs. The "next-server 192.168.20.11" option is the same as the server team0.2 IP address.

- Display the dhcpd leases file at /var/lib/dhcpd/dhcpd.leases. The DHCPD lease file will display the DHCP addresses for all RMS iLOs and Enclosure OAs.

# cat /var/lib/dhcpd/dhcpd.leases
# The format of this file is documented in the dhcpd.leases(5) manual page.
# This lease file was written by isc-dhcp-4.2.5
lease 192.168.20.101 { starts 4 2019/03/28 22:05:26; ends 4 2019/03/28 22:07:26; tstp 4 2019/03/28 22:07:26; cltt 4 2019/03/28 22:05:26; binding state free; hardware ethernet 48:df:37:7a:41:60; }
lease 192.168.20.103 { starts 4 2019/03/28 22:05:28; ends 4 2019/03/28 22:07:28; tstp 4 2019/03/28 22:07:28; cltt 4 2019/03/28 22:05:28; binding state free; hardware ethernet 48:df:37:7a:2f:70; }
lease 192.168.20.102 { starts 4 2019/03/28 22:05:16; ends 4 2019/03/28 23:03:29; tstp 4 2019/03/28 23:03:29; cltt 4 2019/03/28 22:05:16; binding state free; hardware ethernet 48:df:37:7a:40:40; }
lease 192.168.20.106 { starts 5 2019/03/29 11:14:04; ends 5 2019/03/29 14:14:04; tstp 5 2019/03/29 14:14:04; cltt 5 2019/03/29 11:14:04; binding state free; hardware ethernet b8:83:03:47:5f:14; uid "\000\270\203\003G_\024\000\000\000"; }
lease 192.168.20.105 { starts 5 2019/03/29 12:56:23; ends 5 2019/03/29 15:56:23; tstp 5 2019/03/29 15:56:23; cltt 5 2019/03/29 12:56:23; binding state free; hardware ethernet b8:83:03:47:5e:54; uid "\000\270\203\003G^T\000\000\000"; }
lease 192.168.20.104 { starts 5 2019/03/29 13:08:21; ends 5 2019/03/29 16:08:21; tstp 5 2019/03/29 16:08:21; cltt 5 2019/03/29 13:08:21; binding state free; hardware ethernet b8:83:03:47:64:9c; uid "\000\270\203\003Gd\234\000\000\000"; }
lease 192.168.20.108 { starts 5 2019/03/29 09:57:02; ends 5 2019/03/29 21:57:02; tstp 5 2019/03/29 21:57:02; cltt 5 2019/03/29 09:57:02; binding state active; next binding state free; rewind binding state free; hardware ethernet fc:15:b4:1a:ea:05; uid "\001\374\025\264\032\352\005"; client-hostname "OA-FC15B41AEA05"; }
lease 192.168.20.107 { starts 5 2019/03/29 12:02:50; ends 6 2019/03/30 00:02:50; tstp 6 2019/03/30 00:02:50; cltt 5 2019/03/29 12:02:50; binding state active; next binding state free; rewind binding state free; hardware ethernet 9c:b6:54:80:d7:d7; uid "\001\234\266T\200\327\327"; client-hostname "SA-9CB65480D7D7"; }
server-duid "\000\001\000\001$#\364\344\270\203\003Gim";
lease 192.168.20.107 { starts 5 2019/03/29 18:09:47; ends 6 2019/03/30 06:09:47; cltt 5 2019/03/29 18:09:47; binding state active; next binding state free; rewind binding state free; hardware ethernet 9c:b6:54:80:d7:d7; uid "\001\234\266T\200\327\327"; client-hostname "SA-9CB65480D7D7"; }
lease 192.168.20.108 { starts 5 2019/03/29 18:09:54; ends 6 2019/03/30 06:09:54; cltt 5 2019/03/29 18:09:54; binding state active; next binding state free; rewind binding state free; hardware ethernet fc:15:b4:1a:ea:05; uid "\001\374\025\264\032\352\005"; client-hostname "OA-FC15B41AEA05"; }
lease 192.168.20.106 { starts 5 2019/03/29 18:10:04; ends 5 2019/03/29 21:10:04; cltt 5 2019/03/29 18:10:04; binding state active; next binding state free; rewind binding state free; hardware ethernet b8:83:03:47:5f:14; uid "\000\270\203\003G_\024\000\000\000"; client-hostname "ILO2M2909004B"; }
lease 192.168.20.104 { starts 5 2019/03/29 18:10:35; ends 5 2019/03/29 21:10:35; cltt 5 2019/03/29 18:10:35; binding state active; next binding state free; rewind binding state free; hardware ethernet b8:83:03:47:64:9c; uid "\000\270\203\003Gd\234\000\000\000"; client-hostname "ILO2M2909004F"; }
lease 192.168.20.105 { starts 5 2019/03/29 18:10:40; ends 5 2019/03/29 21:10:40; cltt 5 2019/03/29 18:10:40; binding state active; next binding state free; rewind binding state free; hardware ethernet b8:83:03:47:5e:54; uid "\000\270\203\003G^T\000\000\000"; client-hostname "ILO2M29090048"; }

- Access RMS iLO from the DHCP address with the default Administrator password. From the above dhcpd.leases file, find the IP address for the iLO name. The default username is Administrator, and the password is on the label which can be pulled out from the front of the server.

Note:

The DNS Name on the pull-out label should be used to match the physical machine with the iLO IP, since the same default DNS Name from the pull-out label is displayed upon logging in to the iLO command line interface, as shown in the example below.

# ssh Administrator@192.168.20.104
Administrator@192.168.20.104's password:
User:Administrator logged-in to ILO2M2909004F.labs.nc.tekelec.com(192.168.20.104 / FE80::BA83:3FF:FE47:649C)
iLO Standard 1.37 at Oct 25 2018
Server Name:
Server Power: On

- Create RMS iLO new user. Create a new user with a customized username and password.

</>hpiLO-> create /map1/accounts1 username=root password=TklcRoot group=admin,config,oemHP_rc,oemHP_power,oemHP_vm
status=0
status_tag=COMMAND COMPLETED
Tue Apr 2 20:08:30 2019
User added successfully.

- Disable DHCP before setting up the static IP. Setting the static IP fails while DHCP is still enabled.

</>hpiLO-> set /map1/dhcpendpt1 EnabledState=NO
status=0
status_tag=COMMAND COMPLETED
Tue Apr 2 20:04:53 2019
Network settings change applied.
Settings change applied, iLO 5 will now be reset.
Logged Out: It may take several minutes before you can log back in.
CLI session stopped
packet_write_wait: Connection to 192.168.20.104 port 22: Broken pipe

- Setup RMS iLO static IP address. Once the iLO reset from the previous step has completed, log back in with the same address (which is now a static IP) and the new username/password. To use a different address, change the IP address with the set command shown below.

# ssh <new username>@192.168.20.104
<new username>@192.168.20.104's password: <new password>
User: logged-in to ILO2M2909004F.labs.nc.tekelec.com(192.168.20.104 / FE80::BA83:3FF:FE47:649C)
iLO Standard 1.37 at Oct 25 2018
Server Name:
Server Power: On
</>hpiLO-> set /map1/enetport1/lanendpt1/ipendpt1 IPv4Address=192.168.20.122 SubnetMask=255.255.255.0
status=0
status_tag=COMMAND COMPLETED
Tue Apr 2 20:22:23 2019
Network settings change applied.
Settings change applied, iLO 5 will now be reset.
Logged Out: It may take several minutes before you can log back in.
CLI session stopped
packet_write_wait: Connection to 192.168.20.104 port 22: Broken pipe
#

- Set EBIPA addresses for InterConnect Bays (Enclosure Switches). From the bootstrap server, log in to the OA and set EBIPA addresses for the two enclosure switches. The addresses have to be in the same subnet as the server team0.2 address in order for TFTP to work.

Set the address for each enclosure switch. The last number (1 or 2) is the interconnect bay number.

    OA-FC15B41AEA05> set ebipa interconnect 192.168.20.133 255.255.255.0 1
    Entering anything other than 'YES' will result in the command not executing.
    It may take each interconnect several minutes to acquire the new settings.
    Are you sure you want to change the IP address for the specified interconnect bays? yes
    Successfully set 255.255.255.0 as the netmask for interconnect bays.
    Successfully set interconnect bay # 1 to IP address 192.168.20.133
    For the IP addresses to be assigned EBIPA must be enabled.
    OA-FC15B41AEA05> set ebipa interconnect 192.168.20.134 255.255.255.0 2
    Entering anything other than 'YES' will result in the command not executing.
    It may take each interconnect several minutes to acquire the new settings.
    Are you sure you want to change the IP address for the specified interconnect bays? yes
    Successfully set 255.255.255.0 as the netmask for interconnect bays.
    Successfully set interconnect bay # 2 to IP address 192.168.20.134
    For the IP addresses to be assigned EBIPA must be enabled.

- Set EBIPA addresses for Blade Servers. Set EBIPA addresses for all the blade servers. The addresses are in the same subnet as the first server team0.2 address and the enclosure switches.

    OA-FC15B41AEA05> set ebipa server 192.168.20.141 255.255.255.0 1-16
    Entering anything other than 'YES' will result in the command not executing.
    Changing the IP address for device (iLO) bays that are enabled causes the iLOs in those bays to be reset.
    Are you sure you want to change the IP address for the specified device (iLO) bays? YES
    Successfully set 255.255.255.0 as the netmask for device (iLO) bays.
    Successfully set device (iLO) bay # 1 to IP address 192.168.20.141
    Successfully set device (iLO) bay # 2 to IP address 192.168.20.142
    Successfully set device (iLO) bay # 3 to IP address 192.168.20.143
    Successfully set device (iLO) bay # 4 to IP address 192.168.20.144
    Successfully set device (iLO) bay # 5 to IP address 192.168.20.145
    Successfully set device (iLO) bay # 6 to IP address 192.168.20.146
    Successfully set device (iLO) bay # 7 to IP address 192.168.20.147
    Successfully set device (iLO) bay # 8 to IP address 192.168.20.148
    Successfully set device (iLO) bay # 9 to IP address 192.168.20.149
    Successfully set device (iLO) bay #10 to IP address 192.168.20.150
    Successfully set device (iLO) bay #11 to IP address 192.168.20.151
    Successfully set device (iLO) bay #12 to IP address 192.168.20.152
    Successfully set device (iLO) bay #13 to IP address 192.168.20.153
    Successfully set device (iLO) bay #14 to IP address 192.168.20.154
    Successfully set device (iLO) bay #15 to IP address 192.168.20.155
    Successfully set device (iLO) bay #16 to IP address 192.168.20.156
    For the IP addresses to be assigned EBIPA must be enabled.
    OA-FC15B41AEA05>

- Add New User for OA.

Create new user, set access level as ADMINISTRATOR, and assign access to all blades, all enclosure switches and OAs. After that, the username and password can be used to access OAs.

    OA-FC15B41AEA05> ADD USER <username>
    New Password: ********
    Confirm : ********
    User "<username>" created.
    You may set user privileges with the 'SET USER ACCESS' and 'ASSIGN' commands.
    OA-FC15B41AEA05> set user access <username> ADMINISTRATOR
    "<username>" has been given administrator level privileges.
    OA-FC15B41AEA05> ASSIGN SERVER ALL <username>
    <username> has been granted access to the valid requested bay(s)
    OA-FC15B41AEA05> ASSIGN INTERCONNECT ALL <username>
    <username> has been granted access to the valid requested bay(s)
    OA-FC15B41AEA05> ASSIGN OA <username>
    <username> has been granted access to the OA.

- From the OA, go to each blade with "connect server <bay number>" and add a new user for each blade.

    OA-FC15B41AEA05> connect server 4
    Connecting to bay 4 ...
    User:OAtmp-root-5CBF2E61 logged-in to ILO2M290605KP.(192.168.20.144 / FE80::AF1:EAFF:FE89:460)
    iLO Standard Blade Edition 1.37 at Oct 25 2018
    Server Name:
    Server Power: On
    </>hpiLO->
    </>hpiLO-> create /map1/accounts1 username=root password=TklcRoot group=admin,config,oemHPE_rc,oemHPE_power,oemHPE_vm
    status=2
    status_tag=COMMAND PROCESSING FAILED
    error_tag=COMMAND SYNTAX ERROR
    Tue Apr 23 16:18:58 2019
    User added successfully.

- Change to static IP on OA. To avoid relying on DHCP and to keep the OA address stable, change to a static IP.

Note:

After the following change, the OA session on the active OA (which could be the bay 1 OA or the bay 2 OA) hangs because of the address change. Have another server session ready to SSH to the new IP address with the new root user. The change on the standby OA does not hang the OA session.

    OA-FC15B41AEA05> SET IPCONFIG STATIC 1 192.168.20.131 255.255.255.0
    Static IP settings successfully updated.
    These setting changes will take effect immediately.
    OA-FC15B41AEA05> SET IPCONFIG STATIC 2 192.168.20.132 255.255.255.0
    Static IP settings successfully updated.
    These setting changes will take effect immediately.
    OA-FC15B41AEA05>
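The address plan used in the example sessions above can be sanity-checked before it is typed into the OA. The following is a minimal sketch, not part of the OCCNE tooling: it assumes the 192.168.20.0/24 subnet implied by the 255.255.255.0 netmask in the examples, and the helper names `blade_ilo_addresses` and `check_plan` are hypothetical. It reproduces the sequential bay-to-address assignment reported by `set ebipa server ... 1-16` and checks that every OA, interconnect, and blade iLO address is unique and inside the subnet.

```python
import ipaddress

# Values copied from the example sessions in this section.
SUBNET = ipaddress.ip_network("192.168.20.0/24")
OA_ADDRESSES = {1: "192.168.20.131", 2: "192.168.20.132"}
INTERCONNECT_ADDRESSES = {1: "192.168.20.133", 2: "192.168.20.134"}
FIRST_BLADE_ILO = "192.168.20.141"
BLADE_BAYS = range(1, 17)


def blade_ilo_addresses(first_address, bays):
    """Map each bay to an address, counting up from the first address.

    Hypothetical helper that mirrors the per-bay output the OA prints
    for 'set ebipa server <first address> <netmask> 1-16'.
    """
    first = ipaddress.ip_address(first_address)
    start = bays[0]
    return {bay: str(first + (bay - start)) for bay in bays}


def check_plan(subnet, *address_maps):
    """Return a list of problems: addresses outside the subnet or reused."""
    seen = set()
    problems = []
    for address_map in address_maps:
        for address in address_map.values():
            if ipaddress.ip_address(address) not in subnet:
                problems.append(f"{address} is outside {subnet}")
            if address in seen:
                problems.append(f"{address} is assigned more than once")
            seen.add(address)
    return problems


blades = blade_ilo_addresses(FIRST_BLADE_ILO, BLADE_BAYS)
problems = check_plan(SUBNET, OA_ADDRESSES, INTERCONNECT_ADDRESSES, blades)
print(blades[16])
print(problems or "address plan is consistent")
```

With the example values, bay 16 maps to 192.168.20.156, which matches the OA output shown earlier. Substitute the site's own subnet and starting addresses before relying on the check.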

Configure Legacy BIOS on Remaining Hosts

Note:

The procedures in this document apply to the HP iLO console accessed via KVM. Each procedure is executed in the order listed.

Prerequisites

Procedure OCCNE Configure Addresses for RMS iLOs, OA, EBIPA is complete.

Limitations and Expectations

- Applies to HP iLO 5 only.

- If the System Utility indicates (or defaults to) UEFI booting, reset booting to Legacy BIOS mode by following the step Change over from UEFI Booting Mode to Legacy BIOS Booting Mode.

- The procedures listed here apply to both Gen10 DL380 RMSs and Gen10 BL460c Blades in a C7000 enclosure.

- Access to the enclosure blades in these procedures is via the Bootstrap host using SSH on the KVM. This is possible because the prerequisites are complete. If the prerequisites are not completed before executing this procedure, the enclosure blades are only accessible via the KVM connected directly to the active OA. In this case the mouse is not usable and screen manipulations are performed using the keyboard ESC and directional keys.

- This procedure does NOT apply to the Bootstrap Host.

References

Procedure

- Expose the System Configuration Utility on an RMS Host on the KVM. This procedure does not provide instructions on how to connect the KVM, as this may differ for each installation.

- Once the remote console has been exposed, the system must be reset by manually pressing the power button on the front of the RMS host to force it through the restart process. When the initial window is displayed, hit the F9 key repeatedly. Once the F9 is highlighted at the lower left corner of the remote console, it should eventually bring up the main System Utility.

- The System Utilities screen is exposed in the remote console.

- Expose the System Utility for an Enclosure Blade.

- The blades are maintained via the OAs in the enclosure. Because each blade iLO has already been assigned an IP address from the prerequisites, the blades can each be reached using SSH from the Bootstrap host login shell on the KVM.

- SSH to the blade using the iLO IP address and the root user and password. This brings up the HP iLO prompt.

    $ ssh root@<blade_ilo_ip_address>
    Using username "root".
    Last login: Fri Apr 19 12:24:56 2019 from 10.39.204.17
    [root@localhost ~]# ssh root@192.168.20.141
    root@192.168.20.141's password:
    User:root logged-in to ILO2M290605KM.(192.168.20.141 / FE80::AF1:EAFF:FE89:35E)
    iLO Standard Blade Edition 1.37 at Oct 25 2018
    Server Name:
    Server Power: On
    </>hpiLO->

- Use VSP to connect to the blade remote console.

    </>hpiLO->vsp

- Power cycle the blade to bring up the System Utility for that blade.

Note:

The System Utility is a text-based version of the one exposed on the RMS via the KVM. Use the directional (arrow) keys to move between selections, the ENTER key to select, and ESC to go back from the current selection.

- Access the System Utility by hitting ESC 9.


- Enabling Virtualization

This procedure provides the steps required to enable virtualization on a given Bare Metal Server. Virtualization can be configured using the default settings or via the default Workload Profiles.

Verifying Default Settings

- Expose the System Utility by following step 1 or 2 depending on the hardware being configured.

- Select System Configuration

- Select BIOS/Platform Configuration (RBSU)

- Select Virtualization Options

This view displays the settings for the Intel(R) Virtualization Technology (IntelVT), Intel(R) VT-d, and SR-IOV options (Enabled or Disabled). The default value for each option is Enabled.

- Press F10 to save and stay in the utility, or press F12 to save and exit to continue the current boot process.


- Change over from UEFI Booting Mode to Legacy BIOS Booting Mode:

- Expose the System Utility by following step 1 or 2 depending on the hardware being configured.

- Select System Configuration

- Select BIOS/Platform Configuration (RBSU)

- Select Boot Options.

This menu defines the boot mode.

If the Boot Mode is set to UEFI Mode, continue with this procedure. Otherwise, none of the changes below are needed.

- Select Boot Mode

This generates a warning indicating the following:

Boot Mode changes require a system reboot in order to take effect. Changing the Boot Mode can impact the ability of the server to boot the installed operating system. An operating system is installed in the same mode as the platform during the installation. If the Boot Mode does not match the operating system installation, the system cannot boot. The following features require that the server be configured for UEFI Mode: Secure Boot, IPv6 PXE Boot, Boot > 2.2 TB Disks in AHCI SATA Mode, and Smart Array SW RAID.

Hit the ENTER key and two selections appear: UEFI Mode (highlighted) and Legacy BIOS Mode.

- Use the down arrow key to select Legacy BIOS Mode and hit ENTER. The screen indicates:

A reboot is required for the Boot Mode changes.

- Hit F12. This displays the following:

Changes are pending. Do you want to save changes? Press 'Y' to save and exit, 'N' to discard and stay, or 'ESC' to cancel.

- Hit the y key and an additional warning appears indicating:

System configuration changed. A system reboot is required. Press ENTER to reboot the system.

- Hit ENTER to force a reboot.

Note:

The boot must proceed to the point of actually trying to boot from the boot devices using the boot order (not just go back through initialization and access the System Utility again). The boot should fail, and the System Utility can then be accessed again to continue any further changes needed.

- After the reboot, hit the ESC 9 key sequence to re-enter the System Utility. Select System Configuration -> BIOS/Platform Configuration (RBSU) -> Boot Options. Verify that the Boot Mode is set to Legacy BIOS Mode and that UEFI Optimized Boot is set to Disabled.


- Press F10 to save and stay in the utility, or press F12 to save and exit to complete the current boot process.
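The changeover step ends with a check of two Boot Options values. As a minimal sketch, assuming the operator transcribes the values shown on the Boot Options screen by hand, the comparison can be expressed as follows. The function name `settings_to_change` and the sample readings are hypothetical; nothing here talks to the iLO or the BIOS.

```python
# Target values for the Boot Options menu, as named in this procedure.
DESIRED = {
    "Boot Mode": "Legacy BIOS Mode",
    "UEFI Optimized Boot": "Disabled",
}


def settings_to_change(observed):
    """Return {setting: (observed, desired)} for every value that differs."""
    return {
        name: (observed.get(name), wanted)
        for name, wanted in DESIRED.items()
        if observed.get(name) != wanted
    }


# Hypothetical readings from a host that is still in UEFI mode.
observed = {"Boot Mode": "UEFI Mode", "UEFI Optimized Boot": "Enabled"}
for name, (current, wanted) in settings_to_change(observed).items():
    print(f"{name}: change {current!r} to {wanted!r}")
```

An empty result means the host already matches the target settings and the changeover step can be skipped for that host.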

- Force PXE to boot from the first Embedded FlexibleLOM HPE Ethernet 10Gb 2-port Adapter.

- Expose the System Utility by following step 1 or 2 depending on the hardware being configured.

- Select System Configuration.

- Select BIOS/Platform Configuration (RBSU).

- Select Boot Options. This menu defines the boot mode.

- Confirm the following settings: Boot Mode is Legacy BIOS Mode, UEFI Optimized Boot is Disabled, and Boot Order Policy is Retry Boot Order Indefinitely (this means it keeps trying to boot without ever going to disk). If not in Legacy BIOS Mode, follow the procedure Change over from UEFI Booting Mode to Legacy BIOS Booting Mode.

- Select Legacy BIOS Boot Order. In the default view, the 10Gb Embedded FlexibleLOM 1 Port 1 entry is at the bottom of the list.

- Move the 10Gb Embedded FlexibleLOM 1 Port 1 entry up above the 1Gb Embedded LOM 1 Port 1 entry. Press the '+' key to move an entry higher in the boot list and the '-' key to move an entry lower in the boot list. Use the arrow keys to navigate through the Boot Order list.

- Press F10 to save and stay in the utility, or press F12 to save and exit to continue the current boot process.
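The reordering in this step can be reasoned about as moving one list entry upward, one position per '+' key press. The sketch below is illustrative only: the two named entries come from this procedure, while the "Other entry" placeholders and the helper names `presses_to_move_above` and `move_up` are hypothetical, since the actual default list depends on the hardware.

```python
FLEX_LOM = "10Gb Embedded FlexibleLOM 1 Port 1"
EMBEDDED_LOM = "1Gb Embedded LOM 1 Port 1"


def presses_to_move_above(boot_order, entry, target):
    """Count '+' presses needed to place `entry` directly above `target`.

    Each '+' press moves the selected entry up by one position.
    Returns 0 when `entry` is already above `target`.
    """
    return max(0, boot_order.index(entry) - boot_order.index(target))


def move_up(boot_order, entry, presses):
    """Return a new list with `entry` moved up by `presses` positions."""
    order = list(boot_order)
    position = order.index(entry)
    order.insert(position - presses, order.pop(position))
    return order


# Hypothetical default view: the 10Gb FlexibleLOM entry is at the bottom.
boot_order = ["Other entry A", EMBEDDED_LOM, "Other entry B", FLEX_LOM]
presses = presses_to_move_above(boot_order, FLEX_LOM, EMBEDDED_LOM)
new_order = move_up(boot_order, FLEX_LOM, presses)
print(presses)
print(new_order)
```

In this placeholder list the entry needs two '+' presses. On a real host, count from the list actually displayed under Legacy BIOS Boot Order.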

- Enabling Virtualization:

This step provides the steps required to enable virtualization on a given Bare Metal Server. Virtualization can be configured using the default settings or via the Workload Profiles.

Verifying Default Settings

- Expose the System Utility by following step 1 or 2 depending on the hardware being configured.

- Select System Configuration

- Select BIOS/Platform Configuration (RBSU)

- Select Virtualization Options

This view displays the settings for the Intel(R) Virtualization Technology (IntelVT), Intel(R) VT-d, and SR-IOV options (Enabled or Disabled). The default value for each option is Enabled.