4 Fault Recovery

This chapter describes the fault recovery procedures for various failure scenarios.

4.1 Kubernetes Cluster

This section describes the fault recovery procedures for various failure scenarios in a Kubernetes Cluster.

4.1.1 Recovering a Failed Bastion Host

This section describes the procedure to replace a failed Bastion Host.

Prerequisites

- You must have login access to a Bastion Host.

Note:

This procedure is applicable for a single Bastion Host failure only.

- Use SSH to log in to a working Bastion Host. If the working Bastion Host was a standby Bastion Host, it must have become the active Bastion Host, as per the Bastion HA feature, within 10 seconds. To verify this, run the following command and check that the output is IS active-bastion:

$ is_active_bastion

Sample output:

IS active-bastion

- Use SSH to log in to a working Bastion Host and run the following commands to deploy a new Bastion Host. Replace <bastion name> in the following command with the name of the Bastion Host that you are replacing.

$ cd /var/occne/cluster/$OCCNE_CLUSTER/
$ OCCNE_ARGS=--limit=<bastion name> OCCNE_CONTAINERS=(PROV) artifacts/pipeline.sh
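The active-Bastion check above can also be scripted as a short poll, which is convenient while a standby host is still taking over. The following is a minimal sketch, assuming `is_active_bastion` is on the PATH of the working Bastion Host and prints exactly `IS active-bastion` once that host is active; the function name `wait_for_active_bastion` and the `STATUS_CMD` override are hypothetical and exist only so that the logic can be tried without a Bastion Host.

```shell
# wait_for_active_bastion [TIMEOUT]: poll once per second until the status
# command prints exactly "IS active-bastion", for at most TIMEOUT seconds
# (default 10, matching the Bastion HA takeover window described above).
# STATUS_CMD is a hypothetical override; by default is_active_bastion is used.
wait_for_active_bastion() {
  local timeout="${1:-10}"
  local status_cmd="${STATUS_CMD:-is_active_bastion}"
  local elapsed=0
  while [ "$elapsed" -lt "$timeout" ]; do
    # Word splitting of $status_cmd is intentional so it may carry arguments.
    if [ "$($status_cmd 2>/dev/null)" = "IS active-bastion" ]; then
      return 0
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
  echo "Bastion Host did not become active within ${timeout}s" >&2
  return 1
}
```

For example, `wait_for_active_bastion 10 && echo active` waits up to the 10 seconds allowed by the Bastion HA feature before the deployment commands are run.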

- Log in to the OpenStack cloud using your credentials.

- From the Compute menu, select Instances, and locate the instance of the failed Bastion Host that you want to replace.

- Click the drop-down list in the Actions column, and select Delete Instance to delete the failed Bastion Host.

- Use SSH to log in to a working Bastion Host. If the working Bastion Host was a standby Bastion Host, it must have become the active Bastion Host, as per the Bastion HA feature, within 10 seconds. To verify this, run the following command and check that the output is IS active-bastion:

$ is_active_bastion

Sample output:

IS active-bastion

- Use SSH to log in to a working Bastion Host and run the following commands to create a new Bastion Host. Replace <bastion name> in the following command with the name of the Bastion Host that you are replacing.

$ cd /var/occne/cluster/$OCCNE_CLUSTER/
$ source openrc.sh
$ terraform apply -var-file=$OCCNE_CLUSTER/cluster.tfvars -auto-approve
$ OCCNE_ARGS=--limit=<bastion name> OCCNE_CONTAINERS=(PROV) artifacts/pipeline.sh

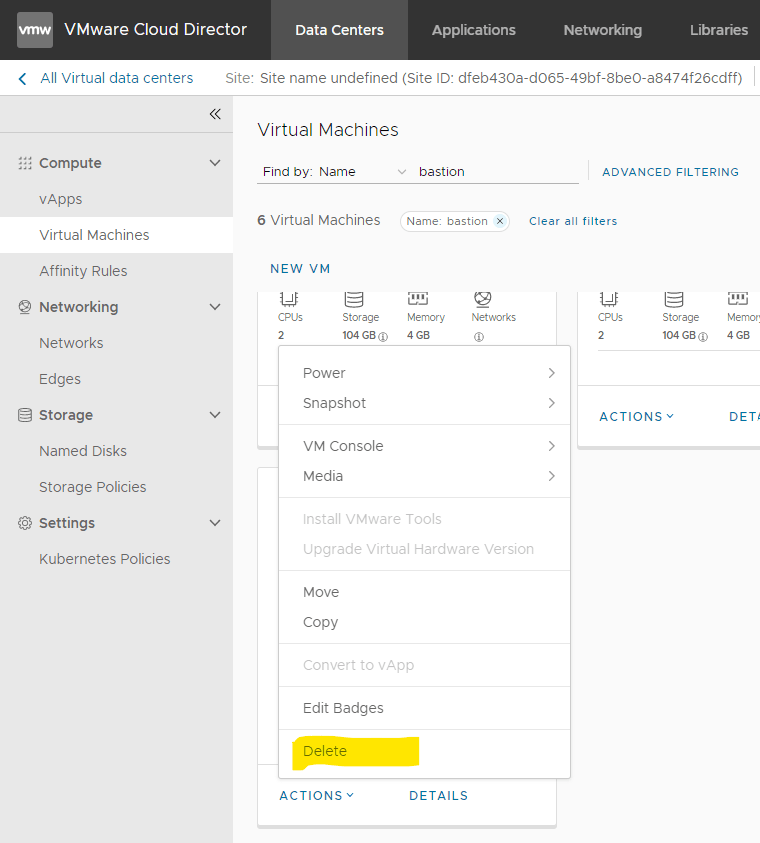

- Log in to the VMware cloud using your credentials.

- From the Compute menu, select Virtual Machines, and locate the VM of the failed Bastion Host that you want to replace.

- From the Actions menu, select Delete to delete the failed Bastion Host.

- Use SSH to log in to a working Bastion Host. If the working Bastion Host was a standby Bastion Host, it must have become the active Bastion Host, as per the Bastion HA feature, within 10 seconds. To verify this, run the following command and check that the output is IS active-bastion:

$ is_active_bastion

Sample output:

IS active-bastion

- Use SSH to log in to a working Bastion Host and run the following commands to deploy a new Bastion Host. Replace <bastion name> in the following command with the name of the Bastion Host that you are replacing.

$ cd /var/occne/cluster/$OCCNE_CLUSTER/
$ terraform apply -var-file=$OCCNE_CLUSTER/cluster.tfvars -auto-approve
$ OCCNE_ARGS=--limit=<bastion name> OCCNE_CONTAINERS=(PROV) artifacts/pipeline.sh

4.1.2 Recovering a Failed Kubernetes Controller Node

This section describes the procedure to recover a single failed Kubernetes controller node in vCNE deployments.

Note:

- This procedure is applicable for vCNE (OpenStack and VMware) deployments only.

- This procedure is applicable for replacing a single Kubernetes controller node only.

- Control Node 1 (the etcd1 member) requires additional steps, which are marked in this procedure as applicable only if the failed control node was a member of etcd1. Take extra care when you replace this node.

Prerequisites

- You must have login access to a Bastion Host.

- You must have login access to the cloud GUI.

4.1.2.1 Recovering a Failed Kubernetes Controller Node in OpenStack

This section describes the procedure to recover a failed Kubernetes controller node in an OpenStack deployment.

- Use SSH to log in to the Bastion Host and remove the failed Kubernetes controller node by following the procedure described in the "Removing a Controller Node in OpenStack Deployment" section of Oracle Communications Cloud Native Core, Cloud Native Environment User Guide.

Note down the internal IP addresses of all the controller nodes and the etcd member number (etcd1, etcd2, or etcd3) of the failed controller node. Also note down the IP addresses and hostnames of the other working controller nodes.
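The controller node names, states, and internal IP addresses can be captured from `kubectl get node -o wide` before you run the removal procedure. The sketch below assumes the column layout shown in the sample outputs of this guide (NAME, STATUS, ROLES, AGE, VERSION, INTERNAL-IP, and so on); `controller_nodes` is a hypothetical helper name. The etcd member number is not part of this output and still has to be read from `etcdctl member list`.

```shell
# controller_nodes: read `kubectl get node -o wide` output on stdin and print
# "<name> <status> <internal-ip>" for every node whose ROLES column contains
# "control-plane". Column positions follow the sample outputs in this guide.
controller_nodes() {
  awk 'NR > 1 && $3 ~ /control-plane/ { print $1, $2, $6 }'
}
```

For example, `kubectl get node -o wide | controller_nodes > /tmp/controller-nodes.txt` keeps a copy of the values to refer to later in the procedure (the file path is only an illustration).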

- Use the original Terraform file to create a new controller node VM:

Note:

To revert the switch changes, run the following commands. Perform this step only if the failed control node was a member of etcd1.

$ cd /var/occne/cluster/${OCCNE_CLUSTER}
$ mv terraform.tfstate /tmp
$ cp ${OCCNE_CLUSTER}/terraform.tfstate.backup terraform.tfstate

- Run the following commands to create a new controller node instance within the cloud:

$ source openrc.sh
$ terraform apply -var-file=$OCCNE_CLUSTER/cluster.tfvars -auto-approve

- Switch the Terraform file.

Note:

Perform this step only if the failed control node was a member of etcd1.

$ cd /var/occne/cluster/$OCCNE_CLUSTER
$ python3 scripts/switchTfstate.py

For example:

[cloud-user@occne7-test-bastion-1]$ python3 scripts/switchTfstate.py

Sample output:

Beginning tfstate switch order k8s control nodes
terraform.tfstate.lastversion created as backup
Controller Nodes order before rotation:
occne7-test-k8s-ctrl-1
occne7-test-k8s-ctrl-2
occne7-test-k8s-ctrl-3
Controller Nodes order after rotation:
occne7-test-k8s-ctrl-2
occne7-test-k8s-ctrl-3
occne7-test-k8s-ctrl-1
Success: terraform.tfstate rotated for cluster occne7-test

- Replace the IP address of the failed kube_control_plane node with the IP address of a working control node.

Note:

Perform this step only if the failed control node was a member of etcd1.

$ kubectl edit cm -n kube-public cluster-info

Sample output:

...
server: https://<working control node IP address>:6443
...
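To confirm the cluster-info edit, the kubeconfig stored in the ConfigMap can be printed with `kubectl get cm -n kube-public cluster-info -o jsonpath='{.data.kubeconfig}'` and its `server:` line compared with the working control node. The helpers below are a hypothetical sketch that assumes API server port 6443, as in the sample output above; `cluster_info_server` and `cluster_info_points_to` are illustrative names only.

```shell
# cluster_info_server: read the kubeconfig text from the kube-public/cluster-info
# ConfigMap on stdin and print the first "server:" URL it contains.
cluster_info_server() {
  awk '$1 == "server:" { print $2; exit }'
}

# cluster_info_points_to IP: succeed if that server URL is https://IP:6443.
cluster_info_points_to() {
  [ "$(cluster_info_server)" = "https://$1:6443" ]
}
```

For example: `kubectl get cm -n kube-public cluster-info -o jsonpath='{.data.kubeconfig}' | cluster_info_points_to <working control node IP address> && echo updated`.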

- Log in to the OpenStack GUI using your credentials and note down the internal IP address and hostname of the replaced node. In most cases, the new IP address and hostname remain the same as the ones before deletion. The new IP address and hostname are referred to as replaced_node_ip and replaced_node_hostname in the remaining procedure.

- Run the following command from the Bastion Host to configure the OS of the replaced control node:

$ OCCNE_CONTAINERS=(PROV) OCCNE_ARGS=--limit=<replaced_node_hostname> artifacts/pipeline.sh

For example:

$ OCCNE_CONTAINERS=(PROV) OCCNE_ARGS=--limit=occne7-test-k8s-ctrl-1 artifacts/pipeline.sh

- Update the /etc/hosts file in the Bastion Host with the replaced_node_ip and replaced_node_hostname. Make sure that there are two matching entries.

$ sudo vi /etc/hosts

Sample output:

192.168.202.232 occne7-test-k8s-ctrl-1.novalocal occne7-test-k8s-ctrl-1
192.168.202.232 lb-apiserver.kubernetes.local

- Use SSH to log in to each controller node in the cluster, except the newly created controller node, and run the following commands as the root user to update the replaced_node_ip in the kube-apiserver.yaml, kubeadm-config.yaml, and hosts files:

- kube-apiserver.yaml:

$ vi /etc/kubernetes/manifests/kube-apiserver.yaml

Sample output:

    - --etcd-servers=https://192.168.202.232:2379,https://192.168.203.194:2379,https://192.168.200.115:2379

- kubeadm-config.yaml:

$ vi /etc/kubernetes/kubeadm-config.yaml

Sample output:

etcd:
  external:
    endpoints:
    - https://<replaced_node_ip>:2379
    - https://192.168.203.194:2379
    - https://192.168.200.115:2379
------------------------------------
  certSANs:
  - kubernetes
  - kubernetes.default
  - kubernetes.default.svc
  - kubernetes.default.svc.occne7-test
  - 10.233.0.1
  - localhost
  - 127.0.0.1
  - occne7-test-k8s-ctrl-1
  - occne7-test-k8s-ctrl-2
  - occne7-test-k8s-ctrl-3
  - lb-apiserver.kubernetes.local
  - <replaced_node_ip>
  - 192.168.203.194
  - 192.168.200.115
  - localhost.localdomain
  timeoutForControlPlane: 5m0s

- hosts:

$ vi /etc/hosts

Sample output:

<replaced_node_ip> occne7-test-k8s-ctrl-1.novalocal occne7-test-k8s-ctrl-1
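The IP address updates in the two steps above can be spot-checked with standard text tools. This is a minimal sketch; `count_hosts_entries` and `etcd_servers_include` are hypothetical helper names, and the default paths are the ones used in this procedure. On the Bastion Host, `count_hosts_entries <replaced_node_ip>` is expected to print 2 (the node entry and the lb-apiserver.kubernetes.local entry); on each controller node, `etcd_servers_include <replaced_node_ip>` should succeed.

```shell
# count_hosts_entries IP [FILE]: count lines of a hosts file whose address
# field is exactly IP. Commented-out lines start with "#" and never match.
count_hosts_entries() {
  local ip="$1" file="${2:-/etc/hosts}"
  awk -v ip="$ip" '$1 == ip { n++ } END { print n + 0 }' "$file"
}

# etcd_servers_include IP [MANIFEST]: succeed if the --etcd-servers flag in
# the kube-apiserver static Pod manifest lists https://IP:2379.
etcd_servers_include() {
  local ip="$1" manifest="${2:-/etc/kubernetes/manifests/kube-apiserver.yaml}"
  # Split the flag value on "," and "=" so that each URL lands on its own line.
  grep -- '--etcd-servers=' "$manifest" | tr ',=' '\n\n' | grep -Fqx "https://$ip:2379"
}
```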


- Run the following command on the Bastion Host to update all instances of <replaced_node_ip>. If the failed controller node was a member of etcd1, also update the controlPlaneEndpoint value with the IP address of a working controller node (that is, from ctrl-1 to ctrl-2):

$ kubectl edit configmap kubeadm-config -n kube-system

Sample output:

apiServer:
  certSANs:
  - kubernetes
  - kubernetes.default
  - kubernetes.default.svc
  - kubernetes.default.svc.occne7-test
  - 10.233.0.1
  - localhost
  - 127.0.0.1
  - occne7-test-k8s-ctrl-1
  - occne7-test-k8s-ctrl-2
  - occne7-test-k8s-ctrl-3
  - lb-apiserver.kubernetes.local
  - <replaced_node_ip>
  - 192.168.203.194
  - 192.168.200.115
  - localhost.localdomain
----------------------------------------------------
controlPlaneEndpoint: <working_node_ip>:6443 # Update only if the failed node was a member of etcd1
----------------------------------------------------
etcd:
  external:
    caFile: /etc/ssl/etcd/ssl/ca.pem
    certFile: /etc/ssl/etcd/ssl/node-occne7-test-k8s-ctrl-1.pem
    endpoints:
    - https://<replaced_node_ip>:2379
    - https://192.168.203.194:2379
    - https://192.168.200.115:2379

- Run the cluster.yml playbook from Bastion Host 1 to add the new controller node into the cluster:

$ podman run -it --rm --rmi --network host --name DEPLOY_$OCCNE_CLUSTER -v /var/occne/cluster/$OCCNE_CLUSTER:/host -v /var/occne:/var/occne:rw -e OCCNE_vCNE=openstack -e OCCNEINV=/host/hosts -e 'PLAYBOOK=/kubespray/cluster.yml' -e 'OCCNEARGS=--extra-vars={"occne_userpw":"<occne password>"} --extra-vars=occne_hostname=$OCCNE_CLUSTER-bastion-1 -i /host/occne.ini' winterfell:5000/occne/k8s_install:$OCCNE_VERSION bash

Then run the following commands inside the container:

$ set -e
$ /copyHosts.sh ${OCCNEINV}
$ ansible-playbook -i /kubespray/inventory/occne/hosts --become --private-key /host/.ssh/occne_id_rsa /kubespray/cluster.yml ${OCCNEARGS}
$ exit

- Verify that the new controller node is added to the cluster using the following command:

$ kubectl get node

Sample output:

NAME                     STATUS   ROLES                  AGE     VERSION
occne7-test-k8s-ctrl-1   Ready    control-plane,master   30m     v1.22.5
occne7-test-k8s-ctrl-2   Ready    control-plane,master   2d19h   v1.22.5
occne7-test-k8s-ctrl-3   Ready    control-plane,master   2d19h   v1.22.5
occne7-test-k8s-node-1   Ready    <none>                 2d19h   v1.22.5
occne7-test-k8s-node-2   Ready    <none>                 2d19h   v1.22.5
occne7-test-k8s-node-3   Ready    <none>                 2d19h   v1.22.5
occne7-test-k8s-node-4   Ready    <none>                 2d19h   v1.22.5

- Perform the following steps to validate the addition to the etcd cluster using etcdctl:

- From the Bastion Host, use SSH to log in to a working control node:

$ ssh <working control node hostname>

For example:

$ ssh occne7-test-k8s-ctrl-2

- Switch to the root user:

$ sudo su

For example:

[cloud-user@occne7-test-k8s-ctrl-2]$ sudo su

- Source /etc/etcd.env:

$ source /etc/etcd.env

For example:

[root@occne7-test-k8s-ctrl-2 cloud-user]# source /etc/etcd.env

- Run the following command to list the etcd members:

$ /usr/local/bin/etcdctl --endpoints https://<working control node IP address>:2379 --cacert=$ETCD_PEER_TRUSTED_CA_FILE --cert=$ETCD_CERT_FILE --key=$ETCD_KEY_FILE member list

For example:

[root@occne7-test-k8s-ctrl-2 cloud-user]# /usr/local/bin/etcdctl --endpoints https://192.168.203.194:2379 --cacert=$ETCD_PEER_TRUSTED_CA_FILE --cert=$ETCD_CERT_FILE --key=$ETCD_KEY_FILE member list

Sample output:

52513ddd2aa49770, started, etcd1, https://192.168.202.232:2380, https://192.168.201.158:2379, false
f1200d9975868073, started, etcd2, https://192.168.203.194:2380, https://192.168.203.194:2379, false
80845fb2b5120458, started, etcd3, https://192.168.200.115:2380, https://192.168.200.115:2379, false
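The member list can also be checked mechanically. The sketch below assumes the default comma-separated `etcdctl member list` output shown above (ID, status, name, peer URLs, client URLs, learner flag) and a three-member cluster; `etcd_members_healthy` and `etcd_member_for_peer_ip` are hypothetical helper names. Pipe the `etcdctl ... member list` command into `etcd_members_healthy 3` to confirm that all members are started and none is a learner, and into `etcd_member_for_peer_ip <replaced_node_ip>` to see which etcd member name the replaced node holds.

```shell
# etcd_members_healthy EXPECTED: read `etcdctl member list` output (default
# simple format, fields separated by ", ") on stdin. Succeed only if exactly
# EXPECTED members are listed, each with status "started" and learner "false".
etcd_members_healthy() {
  awk -F', ' -v want="$1" '
    NF >= 6 { n++; if ($2 != "started" || $6 != "false") bad++ }
    END { exit !(n == want && bad == 0) }'
}

# etcd_member_for_peer_ip IP: print the member name (etcd1, etcd2, ...)
# whose peer URL is https://IP:2380.
etcd_member_for_peer_ip() {
  awk -F', ' -v url="https://$1:2380" '$4 == url { print $3 }'
}
```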


4.1.2.2 Recovering a Failed Kubernetes Controller Node in VMware

This section describes the procedure to recover a failed Kubernetes controller node in a VMware deployment.

- Use SSH to log in to the Bastion Host and remove the failed Kubernetes controller node by following the procedure described in the "Removing a Controller Node in VMware Deployment" section of Oracle Communications Cloud Native Core, Cloud Native Environment User Guide.

Note down the internal IP addresses of all the controller nodes and the etcd member number (etcd1, etcd2, or etcd3) of the failed controller node. Also note down the IP addresses and hostnames of the other working controller nodes.

- Use the original Terraform file to create a new controller node VM:

Note:

To revert the switch changes, run the following commands from the cluster directory. Perform this step only if the failed control node was a member of etcd1.

$ cd /var/occne/cluster/$OCCNE_CLUSTER
$ mv terraform.tfstate /tmp
$ cp terraform.tfstate.original terraform.tfstate

- Run the following commands to create a new controller node instance within the cloud:

$ cd /var/occne/cluster/$OCCNE_CLUSTER/
$ terraform apply -var-file=$OCCNE_CLUSTER/cluster.tfvars -auto-approve

- Switch the Terraform file.

Note:

Perform this step only if the failed control node was a member of etcd1.

$ cd /var/occne/cluster/$OCCNE_CLUSTER
$ cp terraform.tfstate terraform.tfstate.original
$ python3 scripts/switchTfstate.py

For example:

[cloud-user@occne7-test-bastion-1]$ python3 scripts/switchTfstate.py

Sample output:

Beginning tfstate switch order k8s control nodes
terraform.tfstate.lastversion created as backup
Controller Nodes order before rotation:
occne7-test-k8s-ctrl-1
occne7-test-k8s-ctrl-2
occne7-test-k8s-ctrl-3
Controller Nodes order after rotation:
occne7-test-k8s-ctrl-2
occne7-test-k8s-ctrl-3
occne7-test-k8s-ctrl-1
Success: terraform.tfstate rotated for cluster occne7-test

- Replace the IP address of the failed kube_control_plane node with the IP address of a working control node.

Note:

Perform this step only if the failed control node was a member of etcd1.

$ kubectl edit cm -n kube-public cluster-info

Sample output:

...
server: https://<working control node IP address>:6443
...

- Log in to the VMware GUI using your credentials and note down the internal IP address and hostname of the replaced node. In most cases, the new IP address and hostname remain the same as the ones before deletion. The new IP address and hostname are referred to as replaced_node_ip and replaced_node_hostname in the remaining procedure.

- Run the following command from the Bastion Host to configure the OS of the replaced control node:

$ OCCNE_CONTAINERS=(PROV) OCCNE_ARGS=--limit=<replaced_node_hostname> artifacts/pipeline.sh

For example:

$ OCCNE_CONTAINERS=(PROV) OCCNE_ARGS=--limit=occne7-test-k8s-ctrl-1 artifacts/pipeline.sh

- Update the /etc/hosts file in the Bastion Host with the replaced_node_ip and replaced_node_hostname. Make sure that there are two matching entries.

$ sudo vi /etc/hosts

Sample output:

192.168.202.232 occne7-test-k8s-ctrl-1.novalocal occne7-test-k8s-ctrl-1
192.168.202.232 lb-apiserver.kubernetes.local

- Use SSH to log in to each controller node in the cluster, except the newly created controller node, and run the following commands as the root user to update the replaced_node_ip in the kube-apiserver.yaml, kubeadm-config.yaml, and hosts files:

- kube-apiserver.yaml:

$ vi /etc/kubernetes/manifests/kube-apiserver.yaml

Sample output:

    - --etcd-servers=https://192.168.202.232:2379,https://192.168.203.194:2379,https://192.168.200.115:2379

- kubeadm-config.yaml:

$ vi /etc/kubernetes/kubeadm-config.yaml

Sample output:

etcd:
  external:
    endpoints:
    - https://<replaced_node_ip>:2379
    - https://192.168.203.194:2379
    - https://192.168.200.115:2379
------------------------------------
  certSANs:
  - kubernetes
  - kubernetes.default
  - kubernetes.default.svc
  - kubernetes.default.svc.occne7-test
  - 10.233.0.1
  - localhost
  - 127.0.0.1
  - occne7-test-k8s-ctrl-1
  - occne7-test-k8s-ctrl-2
  - occne7-test-k8s-ctrl-3
  - lb-apiserver.kubernetes.local
  - <replaced_node_ip>
  - 192.168.203.194
  - 192.168.200.115
  - localhost.localdomain
  timeoutForControlPlane: 5m0s

- hosts:

$ vi /etc/hosts

Sample output:

<replaced_node_ip> occne7-test-k8s-ctrl-1.novalocal occne7-test-k8s-ctrl-1


- Run the following command on the Bastion Host to update all instances of <replaced_node_ip>. If the failed controller node was a member of etcd1, also update the controlPlaneEndpoint value with the IP address of a working controller node (that is, from ctrl-1 to ctrl-2):

$ kubectl edit configmap kubeadm-config -n kube-system

Sample output:

apiServer:
  certSANs:
  - kubernetes
  - kubernetes.default
  - kubernetes.default.svc
  - kubernetes.default.svc.occne7-test
  - 10.233.0.1
  - localhost
  - 127.0.0.1
  - occne7-test-k8s-ctrl-1
  - occne7-test-k8s-ctrl-2
  - occne7-test-k8s-ctrl-3
  - lb-apiserver.kubernetes.local
  - <replaced_node_ip>
  - 192.168.203.194
  - 192.168.200.115
  - localhost.localdomain
----------------------------------------------------
controlPlaneEndpoint: <working_node_ip>:6443 # Update only if the failed node was a member of etcd1
----------------------------------------------------
etcd:
  external:
    caFile: /etc/ssl/etcd/ssl/ca.pem
    certFile: /etc/ssl/etcd/ssl/node-occne7-test-k8s-ctrl-1.pem
    endpoints:
    - https://<replaced_node_ip>:2379
    - https://192.168.203.194:2379
    - https://192.168.200.115:2379

- Run the cluster.yml playbook from Bastion Host 1 to add the new controller node into the cluster:

$ podman run -it --rm --rmi --network host --name DEPLOY_$OCCNE_CLUSTER -v /var/occne/cluster/$OCCNE_CLUSTER:/host -v /var/occne:/var/occne:rw -e OCCNE_vCNE=openstack -e OCCNEINV=/host/hosts -e 'PLAYBOOK=/kubespray/cluster.yml' -e 'OCCNEARGS=--extra-vars={"occne_userpw":"<occne password>"} --extra-vars=occne_hostname=$OCCNE_CLUSTER-bastion-1 -i /host/occne.ini' winterfell:5000/occne/k8s_install:$OCCNE_VERSION bash

Then run the following commands inside the container:

$ set -e
$ /copyHosts.sh ${OCCNEINV}
$ ansible-playbook -i /kubespray/inventory/occne/hosts --become --private-key /host/.ssh/occne_id_rsa /kubespray/cluster.yml ${OCCNEARGS}
$ exit

- Verify that the new controller node is added to the cluster using the following command:

$ kubectl get node

Sample output:

NAME                     STATUS   ROLES                  AGE     VERSION
occne7-test-k8s-ctrl-1   Ready    control-plane,master   30m     v1.22.5
occne7-test-k8s-ctrl-2   Ready    control-plane,master   2d19h   v1.22.5
occne7-test-k8s-ctrl-3   Ready    control-plane,master   2d19h   v1.22.5
occne7-test-k8s-node-1   Ready    <none>                 2d19h   v1.22.5
occne7-test-k8s-node-2   Ready    <none>                 2d19h   v1.22.5
occne7-test-k8s-node-3   Ready    <none>                 2d19h   v1.22.5
occne7-test-k8s-node-4   Ready    <none>                 2d19h   v1.22.5

- Perform the following steps to validate the addition to the etcd cluster using etcdctl:

- From the Bastion Host, use SSH to log in to a working control node:

$ ssh <working control node hostname>

For example:

$ ssh occne7-test-k8s-ctrl-2

- Switch to the root user:

$ sudo su

For example:

[cloud-user@occne7-test-k8s-ctrl-2]$ sudo su

- Source /etc/etcd.env:

$ source /etc/etcd.env

For example:

[root@occne7-test-k8s-ctrl-2 cloud-user]# source /etc/etcd.env

- Run the following command to list the etcd members:

$ /usr/local/bin/etcdctl --endpoints https://<working control node IP address>:2379 --cacert=$ETCD_PEER_TRUSTED_CA_FILE --cert=$ETCD_CERT_FILE --key=$ETCD_KEY_FILE member list

For example:

[root@occne7-test-k8s-ctrl-2 cloud-user]# /usr/local/bin/etcdctl --endpoints https://192.168.203.194:2379 --cacert=$ETCD_PEER_TRUSTED_CA_FILE --cert=$ETCD_CERT_FILE --key=$ETCD_KEY_FILE member list

Sample output:

52513ddd2aa49770, started, etcd1, https://192.168.202.232:2380, https://192.168.201.158:2379, false
f1200d9975868073, started, etcd2, https://192.168.203.194:2380, https://192.168.203.194:2379, false
80845fb2b5120458, started, etcd3, https://192.168.200.115:2380, https://192.168.200.115:2379, false


4.1.3 Restoring the etcd Database

This section describes the procedure to restore etcd cluster data from the backup.

Prerequisites

- A backup copy of the etcd database must be available. For the procedure to create a backup of your etcd database, see the "Performing an etcd Data Backup" section of Oracle Communications Cloud Native Core, Cloud Native Environment User Guide.

- At least one Kubernetes controller node must be operational.

Procedure

- Find the Kubernetes controller hostname: Run the following command to get the names of the Kubernetes controller nodes:

$ kubectl get nodes

Sample output:

NAME                           STATUS   ROLES                  AGE    VERSION
occne3-my-cluster-k8s-ctrl-1   Ready    control-plane,master   4d1h   v1.23.7
occne3-my-cluster-k8s-ctrl-2   Ready    control-plane,master   4d1h   v1.23.7
occne3-my-cluster-k8s-ctrl-3   Ready    control-plane,master   4d1h   v1.23.7
occne3-my-cluster-k8s-node-1   Ready    <none>                 4d1h   v1.23.7
occne3-my-cluster-k8s-node-2   Ready    <none>                 4d1h   v1.23.7
occne3-my-cluster-k8s-node-3   Ready    <none>                 4d1h   v1.23.7
occne3-my-cluster-k8s-node-4   Ready    <none>                 4d1h   v1.23.7

You must restore the etcd data on any one of the controller nodes that is in the Ready state. From the output, note down the name of a controller node in the Ready state on which to restore the etcd data.
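Choosing a Ready controller node from this output can be scripted as follows. This is a minimal sketch based on the column layout of the sample output above; `first_ready_controller` is a hypothetical helper name, and a node whose STATUS is anything other than exactly Ready (for example, Ready,SchedulingDisabled) is skipped.

```shell
# first_ready_controller: read `kubectl get nodes` output on stdin and print
# the name of the first node whose STATUS is exactly "Ready" and whose ROLES
# column contains "control-plane". Prints nothing if there is no such node.
first_ready_controller() {
  awk 'NR > 1 && $2 == "Ready" && $3 ~ /control-plane/ { print $1; exit }'
}
```

For example, `kubectl get nodes | first_ready_controller` prints a hostname that can be entered at the k8s-ctrl node prompt of etcd_restore.sh.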

- Run the etcd-restore script:

- On the Bastion Host, switch to the /var/occne/cluster/${OCCNE_CLUSTER}/artifacts directory:

$ cd /var/occne/cluster/${OCCNE_CLUSTER}/artifacts

- Run the etcd_restore.sh script:

$ ./etcd_restore.sh

On running the script, the system prompts you to enter the following details:

- k8s-ctrl node: Enter the name of the controller node (noted in the first step) on which you want to restore the etcd data.

- Snapshot: Select the PVC snapshot that you want to restore from the list of PVC snapshots displayed.

Example:

$ ./etcd_restore.sh

Sample output:

Enter the K8s-ctrl hostname to restore etcd backup: occne3-my-cluster-k8s-ctrl-1
occne-etcd-backup pvc exists!
occne-etcd-backup pvc is in bound state!
Creating occne-etcd-backup pod
pod/occne-etcd-backup created
waiting for Pod to be in running state
waiting for Pod to be in running state
waiting for Pod to be in running state
waiting for Pod to be in running state
waiting for Pod to be in running state
occne-etcd-backup pod is in running state!
List of snapshots present on the PVC:
snapshotdb.2022-11-14
Enter the snapshot from the list which you want to restore: snapshotdb.2022-11-14
Restoring etcd data backup
Deprecated: Use `etcdutl snapshot restore` instead.
2022-11-14T20:22:37Z info snapshot/v3_snapshot.go:248 restoring snapshot {"path": "snapshotdb.2022-11-14", "wal-dir": "default.etcd/member/wal", "data-dir": "default.etcd", "snap-dir": "default.etcd/member/snap", "stack": "go.etcd.io/etcd/etcdutl/v3/snapshot.(*v3Manager).Restore\n\t/go/src/go.etcd.io/etcd/release/etcd/etcdutl/snapshot/v3_snapshot.go:254\ngo.etcd.io/etcd/etcdutl/v3/etcdutl.SnapshotRestoreCommandFunc\n\t/go/src/go.etcd.io/etcd/release/etcd/etcdutl/etcdutl/snapshot_command.go:147\ngo.etcd.io/etcd/etcdctl/v3/ctlv3/command.snapshotRestoreCommandFunc\n\t/go/src/go.etcd.io/etcd/release/etcd/etcdctl/ctlv3/command/snapshot_command.go:129\ngithub.com/spf13/cobra.(*Command).execute\n\t/go/pkg/mod/github.com/spf13/cobra@v1.1.3/command.go:856\ngithub.com/spf13/cobra.(*Command).ExecuteC\n\t/go/pkg/mod/github.com/spf13/cobra@v1.1.3/command.go:960\ngithub.com/spf13/cobra.(*Command).Execute\n\t/go/pkg/mod/github.com/spf13/cobra@v1.1.3/command.go:897\ngo.etcd.io/etcd/etcdctl/v3/ctlv3.Start\n\t/go/src/go.etcd.io/etcd/release/etcd/etcdctl/ctlv3/ctl.go:107\ngo.etcd.io/etcd/etcdctl/v3/ctlv3.MustStart\n\t/go/src/go.etcd.io/etcd/release/etcd/etcdctl/ctlv3/ctl.go:111\nmain.main\n\t/go/src/go.etcd.io/etcd/release/etcd/etcdctl/main.go:59\nruntime.main\n\t/go/gos/go1.16.15/src/runtime/proc.go:225"} 2022-11-14T20:22:37Z info membership/store.go:141 Trimming membership information from the backend... 2022-11-14T20:22:37Z info membership/cluster.go:421 added member {"cluster-id": "cdf818194e3a8c32", "local-member-id": "0", "added-peer-id": "8e9e05c52164694d", "added-peer-peer-urls": ["http://localhost:2380"]} 2022-11-14T20:22:37Z info snapshot/v3_snapshot.go:269 restored snapshot {"path": "snapshotdb.2022-11-14", "wal-dir": "default.etcd/member/wal", "data-dir": "default.etcd", "snap-dir": "default.etcd/member/snap"} Removing etcd-backup-pod pod "occne-etcd-backup" deleted etcd-data-restore is successful!!


4.1.4 Recovering a Failed Kubernetes Worker Node

This section provides the manual procedures to replace a failed Kubernetes Worker Node for bare metal, OpenStack, and VMware.

Prerequisites

- Kubernetes worker node must be taken out of service.

- Bare metal server must be repaired and the same bare metal server must be added back into the cluster.

- You must have credentials to access the OpenStack GUI.

- You must have credentials to access VMware GUI or CLI.

Limitations

Some of the steps in these procedures must be run manually.

4.1.4.1 Recovering a Failed Kubernetes Worker Node in Bare Metal

This section describes the manual procedure to replace a failed Kubernetes Worker Node in a bare metal deployment.

Prerequisites

- Kubernetes worker node must be taken out of service.

- Bare metal server must be repaired and the same bare metal server must be added back into the cluster.

Procedure

- Run the following commands to remove the Object Storage Daemon (OSD) from the worker node before removing the worker node from the Kubernetes cluster:

Note:

Remove one OSD at a time. Do not remove multiple OSDs at once. Check the cluster status after removing each OSD.

Sample rook_toolbox file.
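The OSD IDs that replace `<ID>` in the following commands can be derived from the pod names returned by the first command. This sketch assumes that the OSD pods follow the `rook-ceph-osd-<ID>-<hash>-<hash>` naming pattern and that the node filter behaves like the `grep <worker-node>` in the procedure (a plain substring match, so a name such as k8s-6 also matches k8s-60); `osd_ids_on_node` is a hypothetical helper name.

```shell
# osd_ids_on_node NODE: read `kubectl get pods -n rook-ceph -o wide` output on
# stdin and print the numeric OSD ID of every rook-ceph-osd-<ID>-... pod whose
# line mentions NODE (the same filter as `grep osd | grep <worker-node>`).
# rook-ceph-osd-prepare-* job pods are skipped because they carry no OSD ID.
osd_ids_on_node() {
  local node="$1"
  awk -v node="$node" 'index($0, node) { print $1 }' |
    sed -n 's/^rook-ceph-osd-\([0-9][0-9]*\)-.*/\1/p'
}
```

For example, `kubectl get pods -n rook-ceph -o wide | osd_ids_on_node <worker-node>` lists one ID per line; remove them one at a time as the note above requires.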

# Note down the OSD ID hosted on the worker node that is to be removed
$ kubectl get pods -n rook-ceph -o wide | grep osd | grep <worker-node>
# Scale down the rook-ceph-operator deployment and the OSD deployment
$ kubectl -n rook-ceph scale deployment rook-ceph-operator --replicas=0
$ kubectl -n rook-ceph scale deployment rook-ceph-osd-<ID> --replicas=0
# Install the rook-ceph toolbox
$ kubectl create -f rook_toolbox.yaml
# Connect to the rook-ceph toolbox using the following command:
$ kubectl -n rook-ceph exec -it deploy/rook-ceph-tools -- bash
# Once connected to the toolbox, check the Ceph cluster status using the following commands:
$ ceph status
$ ceph osd status
$ ceph osd tree
# Mark the OSD as out and purge it using the following commands:
$ ceph osd out osd.<ID>
$ ceph osd purge <ID> --yes-i-really-mean-it
# Verify that the OSD is removed from the node, and check the Ceph cluster status:
$ ceph status
$ ceph osd status
$ ceph osd tree
# Exit the rook-ceph toolbox
$ exit
# Delete the OSD deployment of the purged OSD
$ kubectl delete deployment -n rook-ceph rook-ceph-osd-<ID>
# Scale up the rook-ceph-operator deployment using the following command:
$ kubectl -n rook-ceph scale deployment rook-ceph-operator --replicas=1
# Remove the rook-ceph toolbox deployment
$ kubectl -n rook-ceph delete deploy/rook-ceph-tools

- Set the CENTRAL_REPO, CENTRAL_REPO_REGISTRY_PORT, and NODE environment variables to allow podman commands to run on the Bastion Host:

$ export NODE=<workernode-full-name>
$ export CENTRAL_REPO=<central-repo-name>
$ export CENTRAL_REPO_REGISTRY_PORT=<central-repo-port>

Example:

$ export NODE=k8s-6.delta.lab.us.oracle.com
$ export CENTRAL_REPO=winterfell
$ export CENTRAL_REPO_REGISTRY_PORT=5000

- Run one of the following commands to remove the old worker node:

- If the worker node is reachable from the Bastion Host:

$ sudo podman run -it --rm --cap-add=NET_ADMIN --network host -v /var/occne/cluster/${OCCNE_CLUSTER}:/host -v /var/occne:/var/occne:rw -e OCCNEARGS=--extra-vars="{'node':'${NODE}'}" -e 'PLAYBOOK=/kubespray/remove-node.yml' ${CENTRAL_REPO}:${CENTRAL_REPO_REGISTRY_PORT:-5000}/occne/k8s_install:${OCCNE_VERSION}

- If the worker node is not reachable from the Bastion Host:

$ sudo podman run -it --rm --cap-add=NET_ADMIN --network host -v /var/occne/cluster/${OCCNE_CLUSTER}:/host -v /var/occne:/var/occne:rw -e OCCNEARGS=--extra-vars="{'node':'${NODE}','reset_nodes':false}" -e 'PLAYBOOK=/kubespray/remove-node.yml' ${CENTRAL_REPO}:${CENTRAL_REPO_REGISTRY_PORT:-5000}/occne/k8s_install:${OCCNE_VERSION}

A confirmation message prompts you to confirm the removal of the node. Enter "yes" at the prompt. The removal takes several minutes, most of which is spent at the "Drain node except daemonsets resource" task (even if the node is unreachable).
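Because both podman commands above expand `${NODE}`, `${CENTRAL_REPO}`, `${OCCNE_CLUSTER}`, and `${OCCNE_VERSION}`, an empty variable produces a confusing failure inside the container. A small guard such as the following hypothetical `require_vars` function (bash only, because it uses indirect expansion) can be run first; `CENTRAL_REPO_REGISTRY_PORT` is left out because the commands fall back to 5000 when it is unset.

```shell
# require_vars NAME...: fail, naming each culprit on stderr, if any of the
# listed variables is unset or empty. Uses bash indirect expansion ${!name}.
require_vars() {
  local name missing=0
  for name in "$@"; do
    if [ -z "${!name:-}" ]; then
      echo "environment variable $name is not set" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example: `require_vars NODE CENTRAL_REPO OCCNE_CLUSTER OCCNE_VERSION && sudo podman run ...` runs the removal only when all four variables are set.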

- Run the following command to verify that the node was removed:

$ kubectl get nodes

Verify that the target worker node is no longer listed.
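The same check can be scripted, for example to confirm the removal here and, after the scale-up later in this procedure, that the replacement node reports Ready. This is a minimal sketch; `node_status` is a hypothetical helper that reads `kubectl get nodes` output and assumes the column layout shown elsewhere in this guide.

```shell
# node_status NAME: read `kubectl get nodes` output on stdin and print the
# STATUS column for NAME, or "absent" if the node is not listed.
node_status() {
  awk -v n="$1" 'NR > 1 && $1 == n { print $2; found = 1 }
                 END { if (!found) print "absent" }'
}
```

For example, `kubectl get nodes | node_status "$NODE"` is expected to print absent at this point.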

4.1.4.1.1 Adding Node to a Kubernetes Cluster

This section describes the procedure to add a new node to a Kubernetes cluster.

- Replace the node's settings in hosts.ini with the replacement node's settings (most likely a MAC address change, if the node is a direct replacement). If you are adding a node, add it to hosts.ini in all the relevant places (the machine inventory section and the proper groups).

- Set the environment variables (CENTRAL_REPO, CENTRAL_REPO_REGISTRY_PORT (if not 5000), and NODE) to run the podman commands on the Bastion Host:

$ export NODE=k8s-6.delta.lab.us.oracle.com
$ export CENTRAL_REPO=winterfell

- Install the OS on the new target worker node:

$ podman run -it --rm --cap-add=NET_ADMIN --network host -v /var/occne/cluster/${OCCNE_CLUSTER}:/host -v /var/occne:/var/occne:rw -e OCCNEARGS=--limit=${NODE},localhost ${CENTRAL_REPO}:${CENTRAL_REPO_REGISTRY_PORT:-5000}/occne/provision:${OCCNE_VERSION}

- Run the following command to scale up the Kubernetes cluster with the new worker node:

$ podman run -it --rm --cap-add=NET_ADMIN --network host -v /var/occne/cluster/${OCCNE_CLUSTER}:/host -v /var/occne:/var/occne:rw -e 'INSTALL=scale.yml' ${CENTRAL_REPO}:${CENTRAL_REPO_REGISTRY_PORT:-5000}/occne/k8s_install:${OCCNE_VERSION}

- Run the following command to verify that the new node is up and running in the cluster:

$ kubectl get nodes

4.1.4.1.2 Adding OSDs in a Ceph Cluster

This procedure sets up a ceph-osd daemon, configures it to use one drive, and configures the cluster to distribute data to the Object Storage Daemon (OSD). If your host has multiple drives, you can add an OSD for each drive by repeating this procedure. To add an OSD, create a data directory for it, mount a drive to that directory, add the OSD to the cluster, and then add it to the CRUSH map. When you add the OSD to the CRUSH map, consider the weight that you give to the new OSD.

- Connect to the rook-ceph toolbox using the following command:

$ kubectl -n rook-ceph exec -it deploy/rook-ceph-tools -- bash

- Before adding an OSD, make sure that the current OSD tree does not have any outliers that could become (nearly) full if the new CRUSH map decides to put even more Placement Groups (PGs) on that OSD:

$ ceph osd df | sort -k 7 -n

Use reweight-by-utilization to force PGs off the OSD:

$ ceph osd test-reweight-by-utilization
$ ceph osd reweight-by-utilization

For optimal viewing, set up a tmux session and make three panes:

- A pane with the "watch ceph -s" command that displays the status of the Ceph cluster every 2 seconds.

- A pane with the "watch ceph osd tree" command that displays the status of the OSDs in the Ceph cluster every 2 seconds.

- A pane to run the actual commands.
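The three-pane layout described above can be created with a few tmux commands. This sketch assumes that tmux and watch are installed on the Bastion Host and runs each ceph command through `kubectl -n rook-ceph exec deploy/rook-ceph-tools` instead of inside an interactive toolbox shell; the session name and the `TMUX_CMD` override (used only for a dry run) are hypothetical. Run it from outside any existing tmux session, because it attaches to the new session at the end.

```shell
# start_ceph_watch_session [SESSION]: create a detached tmux session with a
# "ceph -s" pane and a "ceph osd tree" pane (both refreshed every 2 seconds
# by watch) plus an interactive shell pane, then attach to it.
# TMUX_CMD=echo prints the tmux invocations instead of running them.
start_ceph_watch_session() {
  local session="${1:-ceph-watch}"
  local tmux="${TMUX_CMD:-tmux}"
  local ceph="kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph"
  $tmux new-session -d -s "$session" "watch -n 2 $ceph -s"
  $tmux split-window -h -t "$session" "watch -n 2 $ceph osd tree"
  # Splits the active (right-hand) pane; the new pane runs a normal shell.
  $tmux split-window -v -t "$session"
  $tmux attach-session -t "$session"
}
```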

- To deploy an OSD, there must be an available storage device on which the OSD is deployed.

Run the following command to display an inventory of storage devices on all cluster hosts:

$ ceph orch device ls

A storage device is considered available if all of the following conditions are met:

- The device must have no partitions.

- The device must not have any LVM state.

- The device must not be mounted.

- The device must not contain a file system.

- The device must not contain a Ceph BlueStore OSD.

- The device must be larger than 5 GB.

Ceph does not provision an OSD on a device that is not available.

- To verify that the cluster is in a healthy state, connect to the Rook toolbox and run the ceph status command. Check the following conditions:

- All mons must be in quorum.
- A mgr must be in the active state.
- At least one OSD must be in the active state.
- If the health is not HEALTH_OK, investigate the warnings or errors.
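You can script the health part of this check inside the toolbox. The following is a minimal sketch, and the function name ceph_health_is_ok is hypothetical. It relies only on ceph health printing HEALTH_OK, HEALTH_WARN, or HEALTH_ERR as its first word. You still need to read the quorum, mgr, and OSD conditions from the ceph status output.

```bash
# Hypothetical helper, not part of CNE: succeeds only when the first word of
# the given `ceph health` output is HEALTH_OK.
ceph_health_is_ok() {
  local health_line="$1"
  # Strip everything from the first space onward, leaving the status word.
  [[ "${health_line%% *}" == "HEALTH_OK" ]]
}

# Usage inside the rook-ceph-tools pod:
#   if ceph_health_is_ok "$(ceph health)"; then
#     echo "Cluster healthy; safe to add the OSD"
#   else
#     ceph health detail   # lists the warnings or errors to investigate
#   fi
```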

4.1.4.2 Recovering a Failed Kubernetes Worker Node in OpenStack

This section describes the manual procedure to replace a failed Kubernetes Worker Node in an OpenStack deployment.

Prerequisites

- You must have credentials to access the OpenStack GUI.

Procedure

- Perform the following steps to identify and remove the failed worker node:

Note:

Run all the commands as a cloud-user in the /var/occne/cluster/${OCCNE_CLUSTER} folder.

- Identify the node that is in a not ready, not reachable, or degraded state and note the node's IP address:

kubectl get node -A -o wide

Sample output:

NAME                     STATUS   ROLES                  AGE    VERSION   INTERNAL-IP     EXTERNAL-IP     OS-IMAGE                  KERNEL-VERSION                    CONTAINER-RUNTIME
occne3-user-k8s-ctrl-1   Ready    control-plane,master   178m   v1.23.7   192.168.1.92    192.168.1.92    Oracle Linux Server 8.6   5.4.17-2136.309.5.el8uek.x86_64   containerd://1.6.4
occne3-user-k8s-ctrl-2   Ready    control-plane,master   178m   v1.23.7   192.168.1.117   192.168.1.117   Oracle Linux Server 8.6   5.4.17-2136.309.5.el8uek.x86_64   containerd://1.6.4
occne3-user-k8s-ctrl-3   Ready    control-plane,master   178m   v1.23.7   192.168.1.118   192.168.1.118   Oracle Linux Server 8.6   5.4.17-2136.309.5.el8uek.x86_64   containerd://1.6.4
occne3-user-k8s-node-1   Ready    <none>                 176m   v1.23.7   192.168.1.135   192.168.1.135   Oracle Linux Server 8.6   5.4.17-2136.309.5.el8uek.x86_64   containerd://1.6.4
occne3-user-k8s-node-2   Ready    <none>                 176m   v1.23.7   192.168.1.137   192.168.1.137   Oracle Linux Server 8.6   5.4.17-2136.309.5.el8uek.x86_64   containerd://1.6.4
occne3-user-k8s-node-3   Ready    <none>                 176m   v1.23.7   192.168.1.136   192.168.1.136   Oracle Linux Server 8.6   5.4.17-2136.309.5.el8uek.x86_64   containerd://1.6.4
occne3-user-k8s-node-4   Ready    <none>                 176m   v1.23.7   192.168.1.119   192.168.1.119   Oracle Linux Server 8.6   5.4.17-2136.309.5.el8uek.x86_64   containerd://1.6.4

- Copy the original Terraform tfstate file:

# cp terraform.tfstate terraform.tfstate.bkp-orig

- After you identify the failed node, drain it from the Kubernetes cluster:

# kubectl drain occne3-user-k8s-node-2 --ignore-daemonsets --delete-emptydir-data

The --ignore-daemonsets option skips pods managed by DaemonSets. The --delete-emptydir-data option allows pods that use emptyDir volumes to be evicted, even though their local data is lost. Both options are needed because the failed worker node may have local storage volumes attached to it.

Note:

If this command runs without an error, move to Step e. Else, perform Step d.

- If Step c fails, perform the following steps to manually remove the pods that are running on the failed worker node:

- Identify the pods that are not in an online state and delete each of them by running the following command. Repeat this step until all the pods are removed from the failed node.

# kubectl delete pod --force <pod-name> -n <name-space>

- Run the following command to drain the node from the Kubernetes cluster:

# kubectl drain occne3-user-k8s-node-2 --force --ignore-daemonsets --delete-emptydir-data


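- Verify that the failed node is removed from the cluster:

# kubectl get nodes

Sample output:

NAME                     STATUS   ROLES                  AGE   VERSION
occne3-user-k8s-ctrl-1   Ready    control-plane,master   2d    v1.23.7
occne3-user-k8s-ctrl-2   Ready    control-plane,master   2d    v1.23.7
occne3-user-k8s-ctrl-3   Ready    control-plane,master   2d    v1.23.7
occne3-user-k8s-node-1   Ready    <none>                 2d    v1.23.7
occne3-user-k8s-node-3   Ready    <none>                 2d    v1.23.7
occne3-user-k8s-node-4   Ready    <none>                 2d    v1.23.7

Verify that the target worker node is no longer listed. The kubectl drain command cordons the node and evicts its pods, but it does not delete the Node object. If the node is still listed (for example, with the status NotReady,SchedulingDisabled), delete it by running kubectl delete node <node-name>.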


- Delete the failed node from the OpenStack GUI:

- Log in to the OpenStack GUI console using your credentials.

- From the list of instances displayed, locate and select the failed worker node.

- From the Actions menu in the last column of the record, select Delete Instance.

- Confirm your action by clicking Delete Instance, and wait for the node to be deleted.

- Run terraform apply to recreate and add the node into the Kubernetes cluster:

- Log in to the Bastion Host and switch to the cluster tools directory: /var/occne/cluster/${OCCNE_CLUSTER}.

- Run the following command to log in to the cloud using the openrc.sh script, and provide the required details (username, password, and domain name):

Example:

$ source openrc.sh

Sample output:

Please enter your OpenStack Username for project Team-CNE: user@oracle.com
Please enter your OpenStack Password for project Team-CNE as user : **************
Please enter your OpenStack Domain for project Team-CNE: DSEE

- Run terraform apply to recreate the node:

# terraform apply -var-file=$OCCNE_CLUSTER/cluster.tfvars -auto-approve

- Locate the IP address of the newly created node in the terraform.tfstate file. If the IP address is the same as that of the removed node, move to Step f. Else, perform Step e.

# grep -A6 occne3-user-k8s-node-2 terraform.tfstate | grep ip

Sample output:

"ip": "192.168.1.137",
"ip_allocation_mode": "POOL",

- If the IP address of the newly created node is different from the old node's IP address, replace the IP address in the following files:

- /etc/hosts
- /var/occne/cluster/${OCCNE_CLUSTER}/hosts.ini
- /var/occne/cluster/${OCCNE_CLUSTER}/lbvm/lbCtrlData.json

- Run the pipeline command to provision the OS on the node.

Example, considering the affected node as worker-node-2:

# OCCNE_CONTAINERS='(PROV)' OCCNE_DEPS_SKIP=1 OCCNE_ARGS='--limit=occne3-user-k8s-node-2' OCCNE_STAGES=(DEPLOY) pipeline.sh

- Run the following command to install and configure Kubernetes. This adds the node back into the cluster.

# OCCNE_CONTAINERS='(K8S)' OCCNE_DEPS_SKIP=1 OCCNE_STAGES=(DEPLOY) pipeline.sh

- Verify that the node is added back into the cluster:

# kubectl get nodes

Sample output:

NAME                     STATUS   ROLES                  AGE    VERSION
occne3-user-k8s-ctrl-1   Ready    control-plane,master   2d1h   v1.23.7
occne3-user-k8s-ctrl-2   Ready    control-plane,master   2d1h   v1.23.7
occne3-user-k8s-ctrl-3   Ready    control-plane,master   2d1h   v1.23.7
occne3-user-k8s-node-1   Ready    <none>                 2d1h   v1.23.7
occne3-user-k8s-node-2   Ready    <none>                 111m   v1.23.7
occne3-user-k8s-node-3   Ready    <none>                 2d1h   v1.23.7
occne3-user-k8s-node-4   Ready    <none>                 2d1h   v1.23.7

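You can combine the drain and manual pod-removal steps (Steps c and d of the first step) into one function. The following is a minimal bash sketch under stated assumptions. The function name drain_failed_worker is hypothetical, and kubectl on the Bastion Host is assumed to be configured for the cluster. Because the node has failed, the sketch force-deletes every pod still bound to it, rather than only the pods that are not online. The same sketch applies to the VMware procedure.

```bash
# Hypothetical helper, not part of CNE. Assumes kubectl is configured on the
# Bastion Host. Usage: drain_failed_worker occne3-user-k8s-node-2
drain_failed_worker() {
  local node="$1"
  # Step c: normal drain (DaemonSet pods skipped, emptyDir data discarded).
  if kubectl drain "$node" --ignore-daemonsets --delete-emptydir-data; then
    return 0
  fi
  echo "Drain of $node failed; force-deleting the pods bound to it" >&2
  # Step d: list the namespace and name of every pod scheduled on the node...
  kubectl get pods --all-namespaces --field-selector "spec.nodeName=${node}" \
      --no-headers -o custom-columns=NS:.metadata.namespace,NAME:.metadata.name |
    while read -r ns pod; do
      # ...and remove each one immediately, without waiting for the kubelet.
      kubectl delete pod "$pod" -n "$ns" --force --grace-period=0
    done
  # Retry the drain with --force.
  kubectl drain "$node" --force --ignore-daemonsets --delete-emptydir-data
}
```

The sketch uses --grace-period=0 together with --force because the kubelet on a failed node cannot confirm a graceful termination.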

4.1.4.3 Recovering a Failed Kubernetes Worker Node in VMware

This section describes the manual procedure to replace a failed Kubernetes Worker Node in a VMware deployment.

Prerequisites

- You must have credentials to access VMware GUI or CLI.

Procedure

- Perform the following steps to identify and remove the failed worker node:

Note:

Run all the commands as a cloud-user in the /var/occne/cluster/${OCCNE_CLUSTER} folder.

- Identify the node that is in a not ready, not reachable, or degraded state and note the node's IP address:

kubectl get node -A -o wide

Sample output:

NAME                     STATUS   ROLES                  AGE    VERSION   INTERNAL-IP     EXTERNAL-IP     OS-IMAGE                  KERNEL-VERSION                    CONTAINER-RUNTIME
occne3-user-k8s-ctrl-1   Ready    control-plane,master   178m   v1.23.7   192.168.1.92    192.168.1.92    Oracle Linux Server 8.6   5.4.17-2136.309.5.el8uek.x86_64   containerd://1.6.4
occne3-user-k8s-ctrl-2   Ready    control-plane,master   178m   v1.23.7   192.168.1.117   192.168.1.117   Oracle Linux Server 8.6   5.4.17-2136.309.5.el8uek.x86_64   containerd://1.6.4
occne3-user-k8s-ctrl-3   Ready    control-plane,master   178m   v1.23.7   192.168.1.118   192.168.1.118   Oracle Linux Server 8.6   5.4.17-2136.309.5.el8uek.x86_64   containerd://1.6.4
occne3-user-k8s-node-1   Ready    <none>                 176m   v1.23.7   192.168.1.135   192.168.1.135   Oracle Linux Server 8.6   5.4.17-2136.309.5.el8uek.x86_64   containerd://1.6.4
occne3-user-k8s-node-2   Ready    <none>                 176m   v1.23.7   192.168.1.137   192.168.1.137   Oracle Linux Server 8.6   5.4.17-2136.309.5.el8uek.x86_64   containerd://1.6.4
occne3-user-k8s-node-3   Ready    <none>                 176m   v1.23.7   192.168.1.136   192.168.1.136   Oracle Linux Server 8.6   5.4.17-2136.309.5.el8uek.x86_64   containerd://1.6.4
occne3-user-k8s-node-4   Ready    <none>                 176m   v1.23.7   192.168.1.119   192.168.1.119   Oracle Linux Server 8.6   5.4.17-2136.309.5.el8uek.x86_64   containerd://1.6.4

- Copy the original Terraform tfstate file:

# cp terraform.tfstate terraform.tfstate.bkp-orig

- After you identify the failed node, drain it from the Kubernetes cluster:

# kubectl drain occne3-user-k8s-node-2 --ignore-daemonsets --delete-emptydir-data

The --ignore-daemonsets option skips pods managed by DaemonSets. The --delete-emptydir-data option allows pods that use emptyDir volumes to be evicted, even though their local data is lost. Both options are needed because the failed worker node may have local storage volumes attached to it.

Note:

If this command runs without an error, move to Step e. Else, perform Step d.

- If Step c fails, perform the following steps to manually remove the pods that are running on the failed worker node:

- Identify the pods that are not in an online state and delete each of them by running the following command. Repeat this step until all the pods are removed from the failed node.

# kubectl delete pod --force <pod-name> -n <name-space>

- Run the following command to drain the node from the Kubernetes cluster:

# kubectl drain occne3-user-k8s-node-2 --force --ignore-daemonsets --delete-emptydir-data


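- Verify that the failed node is removed from the cluster:

# kubectl get nodes

Sample output:

NAME                     STATUS   ROLES                  AGE   VERSION
occne3-user-k8s-ctrl-1   Ready    control-plane,master   2d    v1.23.7
occne3-user-k8s-ctrl-2   Ready    control-plane,master   2d    v1.23.7
occne3-user-k8s-ctrl-3   Ready    control-plane,master   2d    v1.23.7
occne3-user-k8s-node-1   Ready    <none>                 2d    v1.23.7
occne3-user-k8s-node-3   Ready    <none>                 2d    v1.23.7
occne3-user-k8s-node-4   Ready    <none>                 2d    v1.23.7

Verify that the target worker node is no longer listed. The kubectl drain command cordons the node and evicts its pods, but it does not delete the Node object. If the node is still listed (for example, with the status NotReady,SchedulingDisabled), delete it by running kubectl delete node <node-name>.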


- Log in to the VMware Cloud Director (VCD) or VMware console and manually delete the failed node from VMware.

- Recreate the node and add it back into the Kubernetes cluster:

Note:

Run all the commands as a cloud-user in the /var/occne/cluster/${OCCNE_CLUSTER} folder.

- Run terraform apply to recreate the node:

# terraform apply -var-file=$OCCNE_CLUSTER/cluster.tfvars -auto-approve

- Locate the IP address of the newly created node in the terraform.tfstate file. If the IP address is the same as that of the removed node, move to Step d. Else, perform Step c.

# grep -A6 occne3-user-k8s-node-2 terraform.tfstate | grep ip

Sample output:

"ip": "192.168.1.137",
"ip_allocation_mode": "POOL",

- If the IP address of the newly created node is different from the old node's IP address, replace the IP address in the following files:

- /etc/hosts
- /var/occne/cluster/${OCCNE_CLUSTER}/hosts.ini
- /var/occne/cluster/${OCCNE_CLUSTER}/lbvm/lbCtrlData.json

- Run the pipeline command to provision the OS on the node.

Example, considering the affected node as worker-node-2:

# OCCNE_CONTAINERS='(PROV)' OCCNE_DEPS_SKIP=1 OCCNE_ARGS='--limit=occne3-user-k8s-node-2' OCCNE_STAGES=(DEPLOY) pipeline.sh

- Run the following command to install and configure Kubernetes. This adds the node back into the cluster.

# OCCNE_CONTAINERS='(K8S)' OCCNE_DEPS_SKIP=1 OCCNE_STAGES=(DEPLOY) pipeline.sh

- Verify that the node is added back into the cluster:

# kubectl get nodes

Sample output:

NAME                     STATUS   ROLES                  AGE    VERSION
occne3-user-k8s-ctrl-1   Ready    control-plane,master   2d1h   v1.23.7
occne3-user-k8s-ctrl-2   Ready    control-plane,master   2d1h   v1.23.7
occne3-user-k8s-ctrl-3   Ready    control-plane,master   2d1h   v1.23.7
occne3-user-k8s-node-1   Ready    <none>                 2d1h   v1.23.7
occne3-user-k8s-node-2   Ready    <none>                 111m   v1.23.7
occne3-user-k8s-node-3   Ready    <none>                 2d1h   v1.23.7
occne3-user-k8s-node-4   Ready    <none>                 2d1h   v1.23.7

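You can script the steps that locate the new IP address in terraform.tfstate and replace the old address in the configuration files. The following bash sketch uses hypothetical helpers: tfstate_node_ip and replace_node_ip are not CNE tools. The sketch mirrors the grep -A6 lookup shown above, assumes GNU grep and GNU sed (for sed -i and the \b word boundary), and keeps a .bak copy of each file before editing it. The same functions apply to the OpenStack procedure.

```bash
# Hypothetical helpers, not part of CNE. Assume GNU grep and GNU sed.

# Print the first "ip" value found within 6 lines after the node name in the
# Terraform state file (same lookup as `grep -A6 <node> terraform.tfstate | grep ip`).
tfstate_node_ip() {
  local node="$1" state="${2:-terraform.tfstate}"
  grep -A6 -- "$node" "$state" |
    sed -n 's/.*"ip": *"\([0-9.]*\)".*/\1/p' |
    head -n 1
}

# Replace whole-word occurrences of OLD_IP with NEW_IP in each file given,
# saving a .bak copy of every file first.
replace_node_ip() {
  local old="$1" new="$2" old_re f
  shift 2
  # Escape the dots so that they match only literal dots.
  old_re=$(printf '%s' "$old" | sed 's/\./\\./g')
  for f in "$@"; do
    cp -p "$f" "$f.bak"
    # \b stops 192.168.1.13 from matching inside 192.168.1.137.
    sed -i "s/\b${old_re}\b/${new}/g" "$f"
  done
}

# Usage from /var/occne/cluster/${OCCNE_CLUSTER} (/etc/hosts needs a root shell):
#   new_ip=$(tfstate_node_ip occne3-user-k8s-node-2)
#   replace_node_ip 192.168.1.137 "$new_ip" /etc/hosts hosts.ini lbvm/lbCtrlData.json
```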

4.1.5 Restoring CNE from Backup

This section provides details about restoring a CNE cluster from backup.

4.1.5.1 Prerequisites

Before restoring CNE from backups, ensure that the following prerequisites are met.

- The CNE cluster must have been backed up successfully. For more information about taking a CNE backup, see the "Creating CNE Cluster Backup" section of Oracle Communications Cloud Native Core, Cloud Native Environment User Guide.

- At least one Kubernetes controller node must be operational.

- Because this is a non-destructive restore (resources that already exist in the cluster are not overwritten), you must destroy all the corrupted or non-functioning resources before initiating the restore process.

- This procedure replaces your current cluster directory with the one saved in your CNE cluster backup. Therefore, before performing a restore, back up any Bastion directory file that you consider sensitive.

- For a bare metal deployment, the following rook-ceph storage classes must be created and made available:

- standard

- occne-esdata-sc

- occne-esmaster-sc

- occne-metrics-sc

- For a bare metal deployment, PVCs must be created for all, except bastion-controller.

- For a vCNE deployment, PVCs must be created for all, except bastion-controller and lb-controller.

Note:

- Velero backups have a default retention period of 30 days. CNE provides only the non-expired backups for an automated cluster restore.

- Perform the restore procedure from the same Bastion Host from which the backups were taken.

4.1.5.2 Performing a Cluster Restore From Backup

This section describes the procedure to restore a CNE cluster from backup.

Note:

- The backup restore procedure can restore backups of both Bastion and Velero.

- This procedure is used for running a restore for the first time only. If you want to rerun a restore, see Rerunning Cluster Restore.

Dropping All CNE Services:

Perform the following steps to run the velero_drop_services.sh script to drop only the currently supported services:

- Navigate to the /var/occne/cluster/${OCCNE_CLUSTER}/artifacts/ directory. The velero_drop_services.sh script is located in its restore/ subdirectory:

$ cd /var/occne/cluster/${OCCNE_CLUSTER}/artifacts/

- Run the velero_drop_services.sh script:

Note:

If you are using this script for the first time, run it with --help or -h as an argument, or without any argument, to get more information about the script.

./restore/velero_drop_services.sh -h

Sample output:

This script helps you drop services to prepare your cluster for a velero restore from backup, it receives a space separated list of arguments for uninstalled different components
Usage:
provision/provision/roles/bastion_setup/files/scripts/backup/velero_drop_services.sh [space separated arguments]
Valid arguments:
- bastion-controller
- opensearch
- fluentd-opensearch
- jaeger
- snmp-notifier
- metrics-server
- nginx-promxy
- promxy
- vcne-egress-controller
- istio
- cert-manager
- kube-system
- all: Drop all the above
Note: If you place 'all' anywhere in your arguments all will be dropped.

- Use the velero_drop_services.sh script to drop a service or a set of services. For example:

- To drop a service or a set of services, pass the service names as a space-separated list:

./restore/velero_drop_services.sh jaeger fluentd-opensearch istio

- To drop all the supported services, use all:

./restore/velero_drop_services.sh all

Initiating Cluster Restore

- Perform the following steps to initiate a cluster restore:

- Navigate to the /var/occne/cluster/${OCCNE_CLUSTER}/artifacts/ directory:

$ cd /var/occne/cluster/${OCCNE_CLUSTER}/artifacts

- Run the createClusterRestore.py script:

- If you do not know the name of the backup that you are going to restore, run the following command to choose the backup from the available list and then run the restore:

$ ./restore/createClusterRestore.py

Sample output:

Please choose and type the available backup you want to restore into your OCCNE cluster
- occne3-cluster-20230706-160923
- occne3-cluster-20230706-185856
- occne3-cluster-20230706-190007
- occne3-cluster-20230706-190313
Please type the name of your backup: ...

- If you know the name of the backup that you are going to restore, run the script by passing the backup name:

$ ./restore/createClusterRestore.py <BACKUP_NAME>

where, <BACKUP_NAME> is the name of the previously created Velero backup.

For example, if the backup name is "occne-cluster-20230706-190313", run the restore script as follows:

$ ./restore/createClusterRestore.py occne-cluster-20230706-190313

Sample output:

Initializing cluster restore with backup: occne-cluster-20230706-190313...
Initializing bastion restore : 'occne-cluster-20230706-190313'
Downloading bastion backup occne-cluster-20230706-190313
Successfully downloaded bastion backup occne-cluster-20230706-190313.tar at home directory
GENERATED LOG FILE AT: /var/occne/cluster/occne-cluster/downloadBastionBackup-20230706-201508.log
- Finished bastion backup restore
GENERATED LOG FILE AT: /home/cloud-user/createBastionRestore-20230706-201508.log
Initializing Velero K8s restore : 'occne-cluster-20230706-190313'
Successfully created velero restore
Successfully created cluster restore

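If you are unsure which backup to pass to the restore script, you can also list the candidates with velero backup get. The following bash sketch uses a hypothetical helper, latest_completed_backup, which picks the most recent backup whose STATUS is Completed. It assumes that all backup names share the same cluster prefix and end in the YYYYMMDD-HHMMSS timestamp shown in the samples, so that a plain lexical sort also orders the backups by time.

```bash
# Hypothetical helper, not part of CNE. Reads `velero backup get` output on
# stdin (header line first) and prints the newest backup whose STATUS is
# Completed. Assumes names end in a YYYYMMDD-HHMMSS timestamp and share one
# prefix, so a lexical sort is also a chronological sort.
latest_completed_backup() {
  awk 'NR > 1 && $2 == "Completed" { print $1 }' | sort | tail -n 1
}

# Usage from /var/occne/cluster/${OCCNE_CLUSTER}/artifacts:
#   BACKUP_NAME=$(velero backup get | latest_completed_backup)
#   ./restore/createClusterRestore.py "$BACKUP_NAME"
```

Backups in a PartiallyFailed or Failed state are skipped deliberately, because restoring from them could leave the cluster incomplete.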

Verifying Restore

- When the Velero restore is completed, it may take several minutes for the Kubernetes resources to be fully up and functional. You must monitor the restore to ensure that all services are up and running. Run the following command to get the status of all pods, deployments, and services:

$ kubectl get all -n occne-infra

- After you verify that all resources are restored, run a cluster test to verify that every resource is up and running:

$ OCCNE_CONTAINERS=(CFG) OCCNE_STAGES=(TEST) pipeline.sh
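Rather than rerunning kubectl get all by hand, you can poll until every pod in occne-infra is ready. In the following bash sketch, the helper names unhealthy_pods and wait_for_occne_infra are hypothetical. The sketch assumes the default kubectl get pods --no-headers layout (NAME, READY, STATUS, ...) and treats Completed job pods as healthy.

```bash
# Hypothetical helpers, not part of CNE.

# Reads `kubectl get pods --no-headers` output on stdin and prints
# "NAME READY STATUS" for each pod that is not fully up: any STATUS other than
# Running or Completed, or Running with fewer ready containers than total.
unhealthy_pods() {
  awk '{
    split($2, ready, "/")
    if ($3 == "Completed") next
    if ($3 != "Running" || ready[1] != ready[2]) print $1, $2, $3
  }'
}

# Polls occne-infra every 10 seconds until unhealthy_pods reports nothing.
# Returns 1 after MAX_TRIES extra polls (default 60, roughly 10 minutes).
wait_for_occne_infra() {
  local tries="${1:-60}"
  while [ -n "$(kubectl get pods -n occne-infra --no-headers | unhealthy_pods)" ]; do
    (( tries-- > 0 )) || return 1
    sleep 10
  done
}

# Usage: wait_for_occne_infra && echo "All occne-infra pods are ready"
```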

4.1.5.3 Rerunning Cluster Restore

This section describes the procedure to rerun a restore that is already completed successfully.

- Navigate to the /var/occne/cluster/$OCCNE_CLUSTER/artifacts directory:

$ cd /var/occne/cluster/$OCCNE_CLUSTER/artifacts

- Open the cluster_restores_log.json file:

vi ./restore/cluster_restores_log.json

The file content is similar to the following:

{
  "occne-cluster-20230712-220439": {
    "cluster-restore-state": "COMPLETED",
    "bastion-restore": {
      "state": "COMPLETED"
    },
    "velero-restore": {
      "state": "COMPLETED"
    }
  }
}

- Edit the file to set the value of "cluster-restore-state" to "RESTART", as shown in the following code block:

{
  "occne-cluster-20230712-220439": {
    "cluster-restore-state": "RESTART",
    "bastion-restore": {
      "state": "COMPLETED"
    },
    "velero-restore": {
      "state": "COMPLETED"
    }
  }
}

- Perform the following steps to remove the previously created Velero restore objects:

- Run the following command to delete the Velero restore object:

$ velero restore delete <BACKUP_NAME>

where, <BACKUP_NAME> is the name of the previously created Velero backup.

- Wait until the restore object is deleted, and verify the deletion using the following command:

$ velero get restore

- Run the Dropping All CNE Services procedure to delete all the services that were created when the restore was first run.

- Verify that the Dropping All CNE Services procedure completed successfully and that no resources are left. Skipping this verification can cause the cluster to go into an unrecoverable state.

- Run the CNE restore script without an interactive menu:

$ ./restore/createClusterRestore.py <BACKUP_NAME>

where, <BACKUP_NAME> is the name of the previously created Velero backup.

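Editing cluster_restores_log.json by hand in vi is error-prone. The following bash sketch uses a hypothetical helper, mark_restore_for_restart. It sets "cluster-restore-state" to "RESTART" for one backup entry and leaves all other entries unchanged. The sketch assumes that python3 is available on the Bastion Host and that the file has the structure shown above. It keeps a .bak copy and rewrites the file with two-space indentation.

```bash
# Hypothetical helper, not part of CNE. Assumes python3 on the Bastion Host.
# Usage: mark_restore_for_restart ./restore/cluster_restores_log.json <BACKUP_NAME>
mark_restore_for_restart() {
  local log_file="$1" backup="$2"
  cp -p "$log_file" "$log_file.bak"   # keep the original next to the edited file
  python3 - "$log_file" "$backup" <<'EOF'
import json, sys
path, backup = sys.argv[1], sys.argv[2]
with open(path) as f:
    data = json.load(f)
if backup not in data:
    sys.exit(f"backup {backup} not found in {path}")
data[backup]["cluster-restore-state"] = "RESTART"
with open(path, "w") as f:
    json.dump(data, f, indent=2)
EOF
}
```

The function returns a non-zero status, and leaves the file untouched, if the backup name is not a key in the file.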

4.1.5.4 Rerunning a Failed Cluster Restore

This section describes the procedure to rerun a restore that failed.

CNE provides options to resume cluster restore from the stage it failed. Perform any of the following steps depending on the stage in which your restore failed:

Bastion Host Failure

To resume the cluster restore from this stage, rerun the restore script without using the interactive menu:

$ ./restore/createClusterRestore.py <BACKUP_NAME>

where, <BACKUP_NAME> is the name of the previously created Velero backup.

Kubernetes Velero Restore Failure

- Run the following command to delete the Velero restore object:

$ velero restore delete <BACKUP_NAME>

where, <BACKUP_NAME> is the name of the previously created Velero backup.

- Wait until the restore object is deleted, and verify the deletion using the following command:

$ velero get restore

- Run the Dropping All CNE Services procedure to delete all the services that were created when the restore was first run.

- Verify that the Dropping All CNE Services procedure completed successfully and that no resources are left. Skipping this verification can cause the cluster to go into an unrecoverable state.

- Run the CNE restore script without an interactive menu:

$ ./restore/createClusterRestore.py <BACKUP_NAME>

where, <BACKUP_NAME> is the name of the previously created Velero backup.
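You can script the wait and verification steps above. In the following bash sketch, wait_for_restore_deleted polls velero restore get until the named restore no longer appears, and namespace_is_empty checks that kubectl get all returns nothing in occne-infra. Both function names are hypothetical. Note that kubectl get all covers only pods, services, and workload controllers (not, for example, PVCs, ConfigMaps, or Secrets), so namespace_is_empty is a partial check.

```bash
# Hypothetical helpers, not part of CNE.

# Polls `velero restore get` every 5 seconds until no restore named NAME is
# listed. Returns 1 if it is still listed after MAX_TRIES extra polls (default 60).
wait_for_restore_deleted() {
  local name="$1" tries="${2:-60}"
  while velero restore get | awk -v n="$name" '$1 == n { found = 1 } END { exit !found }'; do
    (( tries-- > 0 )) || return 1
    sleep 5
  done
}

# Succeeds only if `kubectl get all` reports no resources in the namespace
# (default occne-infra). PVCs, ConfigMaps, and Secrets are not checked.
namespace_is_empty() {
  local ns="${1:-occne-infra}" remaining
  remaining=$(kubectl get all -n "$ns" --no-headers 2>/dev/null | wc -l)
  if [ "$remaining" -ne 0 ]; then
    echo "$remaining resources still present in $ns" >&2
    return 1
  fi
}

# Usage after `velero restore delete <BACKUP_NAME>` and dropping the services:
#   wait_for_restore_deleted <BACKUP_NAME> && namespace_is_empty occne-infra
```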

Modifying Annotations and Deleting PV in Kubernetes:

If the restore fails at this point and shows that pods are waiting for their PVs, use the updatePVCAnnotations.py script to automatically modify annotations and delete PVs in Kubernetes.

The updatePVCAnnotations.py script is used to:

- add specific annotations to the affected PVCs to specify the storage provider.

- remove the pods associated with the affected PVCs to force the pods to recreate themselves.

Run the updatePVCAnnotations.py script:

$ ./restore/updatePVCAnnotations.py

4.1.5.5 Troubleshooting Restore Failures

This section provides the guidelines to troubleshoot restore failures.

Prerequisites

Before using this section to troubleshoot a restore failure, verify the following:

- Verify connectivity with the S3 object storage.

- Verify that the credentials used while activating Velero are still active.

- Verify that the credentials are granted read and write permissions.

Troubleshooting Failed Bastion Restore

Table 4-1 Troubleshooting Failed Bastion Restore

| Cause | Possible Solution |
|---|---|

Troubleshooting Failed Kubernetes Velero Restore

Table 4-2 Troubleshooting Failed Kubernetes Velero Restore

| Cause | Possible Solution |
|---|---|
| Velero backup objects are not available. | Run the following command and verify the following: Sample output: |
| PVCs are not attached correctly. | Verify that, after a restore, every PVC that was created with the CNE services under the occne-infra namespace is still available and is in the Bound status: Sample output: |
| PVs are not available. | Verify that, before and after a restore, PVs are available for the common services to restore: Sample output: |
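You can perform the PVC and PV checks in Table 4-2 with standard kubectl output. The following bash sketch uses hypothetical helpers, unbound_pvcs and unusable_pvs. The sketch assumes the default column layout, where STATUS is the second column of kubectl get pvc and the fifth column of kubectl get pv.

```bash
# Hypothetical helpers, not part of CNE. Both read kubectl output on stdin.

# `kubectl get pvc -n occne-infra --no-headers`: print PVCs that are not in the
# Bound status (STATUS is the second column).
unbound_pvcs() {
  awk '$2 != "Bound" { print $1, $2 }'
}

# `kubectl get pv --no-headers`: print PVs that a new PVC cannot bind to as-is,
# that is, Released or Failed volumes (STATUS is the fifth column).
unusable_pvs() {
  awk '$5 == "Released" || $5 == "Failed" { print $1, $5 }'
}

# Usage:
#   kubectl get pvc -n occne-infra --no-headers | unbound_pvcs
#   kubectl get pv --no-headers | unusable_pvs
```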

4.2 Common Services

This section describes the fault recovery procedures for common services.

4.2.1 Restoring a Failed Load Balancer

This section provides a detailed procedure to restore a Virtualized CNE (vCNE) Load Balancer that fails while the Kubernetes cluster is in service. You can also use this procedure to recreate an LBVM that was manually deleted.

- You must know the reason for the Load Balancer Virtual Machines (LBVM) failure.

- You must know the LBVM name to be replaced and the address pool.

- Ensure that the cluster.tfvars file is available for Terraform to recreate the LBVM.

- You must run this procedure on the active Bastion Host.

- The following procedure does not attempt to determine the cause of the LBVM failure.

- The role or status of LBVM to be replaced must not be ACTIVE.

- If a LOAD_BALANCER_NO_SERVICE alert is raised and both LBVMs are down, then this procedure must be used to recover one LBVM at a time.

4.2.2 Restoring a Failed LB Controller

This section provides a detailed procedure to restore a failed LB controller using a backup crontab.

- Ensure that an LB controller is installed.

- Ensure that MetalLB is installed.

Creating Backup Crontab

- Run the following command to switch to the root user:

sudo su

- Create the backuplbcontroller.sh file in the root user's home directory ("/root"):

cd /root
vi backuplbcontroller.sh

Add the following content to backuplbcontroller.sh:

#!/bin/bash
# Usage: backuplbcontroller.sh <CLUSTER_NAME>
# Copies data/sqlite/db from the occne-lb-controller pod to ./tmp and logs the result to ./lb_backup.log.
OCCNE_CLUSTER=$1
export KUBECONFIG=/var/occne/cluster/$OCCNE_CLUSTER/artifacts/admin.conf
KUBECTL=/var/occne/cluster/$OCCNE_CLUSTER/artifacts/kubectl
occne_lb_pod_name=$($KUBECTL get po -n occne-infra | grep occne-lb-controller | awk '{print $1}')
timenow=$(date +%Y-%m-%d.%H:%M:%S)
if [ -z "$occne_lb_pod_name" ]
then
    echo "\$occne_lb_pod_name could not be found $timenow" >> lb_backup.log
else
    echo "Backing up db from pod $occne_lb_pod_name on $timenow" >> lb_backup.log
    # Capture any error text from kubectl cp so that it is recorded in the log.
    containercopy=$($KUBECTL cp occne-infra/$occne_lb_pod_name:data/sqlite/db tmp 2>&1)
    echo "Backup db from pod $occne_lb_pod_name on $timenow status $containercopy" >> lb_backup.log
fi

- Run the following command to add the executable permission to backuplbcontroller.sh:

chmod +x backuplbcontroller.sh

- Run the following command to edit crontab:

crontab -e

- Add the following entry to crontab:

* * * * * ./backuplbcontroller.sh <CLUSTER_NAME>

where, <CLUSTER_NAME> is the name of the cluster.

- Run the following command to view the contents of crontab:

crontab -l

Reinitiating LB Controller Restore in case of PVC Failure

- Run the following command as the root user in the root directory ("/root") to edit crontab:

crontab -e

- Remove the following entry from crontab to stop the automated backup of the LB controller database:

* * * * * ./backuplbcontroller.sh <CLUSTER_NAME>

- Exit from the root user and run the following command to uninstall metallb and lbcontroller. These components must be uninstalled to recreate bgppeering.

helm uninstall occne-metallb occne-lb-controller -n occne-infra

After uninstalling metallb and lbcontroller, wait for the pod and PVC to terminate before proceeding to the next step.

- Reinstall the metallb and lb_controller pods:

OCCNE_CONTAINERS=(CFG) OCCNE_STAGES=(DEPLOY) OCCNE_ARGS="--tags=metallb,vcne-lb-controller" /var/occne/cluster/${OCCNE_CLUSTER}/artifacts/pipeline.sh

- Set UPGRADE_IN_PROGRESS to true. This setting stops the monitor in lb-controller after the installation, until the DB file is updated:

lb_deployment=$(kubectl get deploy -n occne-infra | grep occne-lb-controller | awk '{print $1}')
kubectl set env deployment/$lb_deployment UPGRADE_IN_PROGRESS="true" -n occne-infra

Wait for the pod to be recreated before proceeding to the next step.

- Load the DB file back into the container using kubectl cp from the Bastion Host:

occne_lb_pod_name=$(kubectl get po -n occne-infra | grep occne-lb-controller | awk '{print $1}')
kubectl cp /tmp/lbCtrlData.db occne-infra/$occne_lb_pod_name:/data/sqlite/db

- Reset the UPGRADE_IN_PROGRESS container environment variable to false so that the monitor starts again:

kubectl set env deployment/$lb_deployment UPGRADE_IN_PROGRESS="false" -n occne-infra

Wait for the LB controller pod to terminate and be recreated before proceeding to the next step.

- Follow the Creating Backup Crontab procedure to create the crontab and start the backup of LB controller.
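You can also wait with kubectl rollout status instead of watching for the LB controller pod to be recreated after each UPGRADE_IN_PROGRESS change. The following bash sketch uses a hypothetical helper, set_lb_upgrade_flag. It assumes that exactly one deployment in occne-infra has occne-lb-controller in its name, and that a five-minute rollout timeout is acceptable.

```bash
# Hypothetical helper, not part of CNE: set UPGRADE_IN_PROGRESS on the
# occne-lb-controller deployment and block until the rollout finishes.
set_lb_upgrade_flag() {
  local value="$1" ns="occne-infra" deploy
  # -o name prints deployment.apps/<name>, which both `set env` and
  # `rollout status` accept as a resource reference.
  deploy=$(kubectl get deploy -n "$ns" -o name | grep occne-lb-controller | head -n 1)
  if [ -z "$deploy" ]; then
    echo "occne-lb-controller deployment not found in $ns" >&2
    return 1
  fi
  kubectl set env "$deploy" UPGRADE_IN_PROGRESS="$value" -n "$ns" &&
    kubectl rollout status "$deploy" -n "$ns" --timeout=300s
}

# Usage:
#   set_lb_upgrade_flag true    # before copying the DB file back into the pod
#   set_lb_upgrade_flag false   # after the copy, so that the monitor starts again
```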