2 Installing CNE

This chapter provides information about installing Oracle Communications Cloud Native Core, Cloud Native Environment (CNE). CNE can be deployed onto dedicated hardware, referred to as a baremetal CNE, or deployed onto Virtual Machines, referred to as a virtualized CNE.

Regardless of which deployment platform is selected, CNE installation is highly automated. A collection of container-based utilities automate the provisioning, installation, and configuration of CNE. These utilities are based on the following automation tools:

- PXE helps to reliably automate the process of provisioning the hosts with a minimal operating system.

- Terraform creates the virtual resources that are used to host the virtualized CNE.

- Kubespray helps reliably install a base Kubernetes cluster, including all dependencies such as etcd, using the Ansible provisioning tool.

- Ansible is used to deploy and manage a collection of operational tools (Common Services) that are:

  - provided by open source third-party products, such as Prometheus and Grafana

  - built from source and packaged as part of CNE releases, such as Oracle OpenSearch and Oracle OpenSearch Dashboard

- Kyverno Policy management is used to enforce security posture in CNE.

- Helm is used to deploy and configure common services such as Prometheus, Grafana, and OpenSearch.

Note:

- Ensure that the shell is configured with Keepalive to avoid unexpected timeouts.

- The 'X' in Oracle Linux X or OLX in the installation procedures indicates the latest version of Oracle Linux supported by CNE.

2.1 Preinstallation Tasks

This section describes the procedures to run before installing Oracle Communications Cloud Native Environment, referred to in these installation procedures as CNE.

2.1.1 Sizing Kubernetes Cluster

CNE deploys a Kubernetes cluster to host application workloads and common services. The minimum node counts listed in Table 2-1 are required for production deployments of CNE.

Note:

Deployments that do not meet the minimum sizing requirements may not operate correctly when maintenance operations (including upgrades) are performed on the CNE.

Table 2-1 Kubernetes Cluster Sizing

| Node Type | Minimum Required | Maximum Allowed |
|---|---|---|
| Kubernetes controller node | 3 | 3 |
| Kubernetes worker node | 6 | 100 |
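As a planning aid, a proposed node layout can be checked against Table 2-1 before installation. The following Python sketch is illustrative only. The function name `sizing_problems` and its messages are hypothetical and are not part of any CNE tooling.

```python
# Hypothetical planning helper: node-count limits copied from Table 2-1,
# stored as (minimum required, maximum allowed).
SIZING_LIMITS = {
    "controller": (3, 3),
    "worker": (6, 100),
}


def sizing_problems(num_controllers: int, num_workers: int) -> list:
    """Return a list of Table 2-1 violations; an empty list means the plan fits."""
    planned = {"controller": num_controllers, "worker": num_workers}
    problems = []
    for role, count in planned.items():
        minimum, maximum = SIZING_LIMITS[role]
        if count < minimum:
            problems.append(f"{role}: {count} nodes is below the minimum of {minimum}")
        elif count > maximum:
            problems.append(f"{role}: {count} nodes exceeds the maximum of {maximum}")
    return problems


print(sizing_problems(3, 6))    # minimum production layout, prints []
print(sizing_problems(2, 120))  # too few controllers and too many workers
```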

2.1.2 Sizing Prometheus Persistent Storage

Prometheus stores metrics in Kubernetes persistent storage. Use the following calculations to reserve the correct amount of persistent storage during installation so that Prometheus can store metrics for the desired retention period.

total_metrics_storage = (nf_metrics_daily_growth + occne_metrics_daily_growth) * metrics_retention_period * 1.2

Note:

- An extra 20% storage is reserved to allow for a future increase in metrics growth.

- It is recommended to keep metrics_retention_period at the default value of 14 days.

- If the storage size calculated using the above formula is greater than 500GB, reduce the retention period until the calculated value is less than 500GB.

- Ensure that the retention period is set to more than 3 days.

- The default value for Prometheus persistent storage is 8GB.

- If the total_metrics_storage value calculated using the above formula is less than 8GB, use the default value.

For example, one NF is installed on the OCCNE instance. From the NF's documentation, we learn that it generates 150 MB of metrics data per day at the expected ingress signaling traffic rate. Using the formula above:

metrics_retention_period = 14 days

nf_metrics_daily_growth = 150 MB/day (from NF documentation)

occne_metrics_daily_growth = 144 MB/day (from calculation below)

(0.15 GB/day + 0.144 GB/day) * 14 days * 1.2 = 5 GB (rounded up)

Since this is less than the default value of 8 GB, use 8 GB as the total_metrics_storage value.

Note:

After determining the required metrics storage, record the total_metrics_storage value for later use in the installation procedures.
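The Prometheus sizing rules above can be expressed as a short Python sketch. It is illustrative only, and the function name is hypothetical. It assumes 1 GB = 1000 MB, which matches the worked example where 150 MB is written as 0.15 GB. It rounds the result up to a whole GB and derives occne_metrics_daily_growth from the 36 MB per worker node formula in the next subsection. Under that formula, the worked example's 144 MB/day corresponds to four worker nodes.

```python
import math

# Sizing constants taken from the rules in this section.
OCCNE_METRICS_MB_PER_WORKER = 36  # occne_metrics_daily_growth = 36 MB * workers
GROWTH_MARGIN = 1.2               # extra 20% for future metrics growth
DEFAULT_STORAGE_GB = 8            # default Prometheus persistent storage
MAX_STORAGE_GB = 500              # the result must stay below this value
MIN_RETENTION_DAYS = 3            # retention must be more than this


def prometheus_storage_gb(nf_metrics_mb_per_day, num_worker_nodes, retention_days=14):
    """Return (calculated_gb, provisioned_gb) for Prometheus persistent storage.

    Hypothetical helper; assumes 1 GB = 1000 MB as in the worked example.
    """
    if retention_days <= MIN_RETENTION_DAYS:
        raise ValueError("metrics_retention_period must be more than 3 days")
    occne_metrics_mb_per_day = OCCNE_METRICS_MB_PER_WORKER * num_worker_nodes
    daily_gb = (nf_metrics_mb_per_day + occne_metrics_mb_per_day) / 1000
    calculated_gb = daily_gb * retention_days * GROWTH_MARGIN
    if calculated_gb >= MAX_STORAGE_GB:
        raise ValueError(f"{calculated_gb:.1f} GB is not below 500 GB; reduce the retention period")
    # Round up to a whole GB and never provision less than the default size.
    return calculated_gb, max(math.ceil(calculated_gb), DEFAULT_STORAGE_GB)


# Worked example: one NF at 150 MB/day and four workers (4 * 36 MB = 144 MB/day).
calculated, provisioned = prometheus_storage_gb(nf_metrics_mb_per_day=150, num_worker_nodes=4)
print(f"calculated {calculated:.2f} GB, provision {provisioned} GB")
# prints: calculated 4.94 GB, provision 8 GB
```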

Calculating CNE metrics daily storage growth requirements

CNE stores varying amounts of metrics data each day depending on the size of the Kubernetes cluster deployed in the CNE instance. To determine the correct occne_metrics_daily_growth value for the CNE instance, use the formula:

occne_metrics_daily_growth = 36 MB * num_kubernetes_worker_nodes

2.1.3 Sizing Oracle OpenSearch Persistent Storage

Oracle OpenSearch stores logs and traces in Kubernetes persistent storage. Use the following calculations to reserve the correct amount of persistent storage during installation so that Oracle OpenSearch can store logs and traces for the desired retention period.

Calculating log+trace storage requirements

log_trace_daily_growth = (nf_logs_daily_growth + nf_trace_daily_growth + occne_logs_daily_growth)

log_trace_active_storage = log_trace_daily_growth * 2 * 1.2

log_trace_inactive_storage = log_trace_daily_growth * (log_trace_retention_period - 1) * 1.2

Note:

- An extra day's worth of storage is allocated on the hot data nodes. This storage is used when the old daily indices are deactivated.

- An extra 20% storage is reserved to allow for a future increase in logging growth.

- It is recommended to keep log_trace_retention_period at the default value of 7 days.

- If the storage size calculated using the above formulas is greater than 500GB, reduce the retention period until the calculated value is less than 500GB.

- Ensure that the retention period is set to more than 3 days.

- The default values for Oracle OpenSearch persistent storage are 10GB for log_trace_active_storage and 30GB for log_trace_inactive_storage.

- If the log_trace_active_storage and log_trace_inactive_storage values calculated using the above formulas are less than 10GB and 30GB respectively, use the default values.

For example, one NF will be installed on the OCCNE instance. From the NF's documentation, we learn that it generates 150 MB of logs data per day at the expected ingress signaling traffic rate. Using the formulas above:

log_trace_retention_period = 7

nf_mps_rate = 200 msgs/sec

nf_logs_daily_growth = 150 MB/day (from NF documentation)

nf_trace_daily_growth = 500 MB/day (from section below)

occne_logs_daily_growth = 100 MB/day (from section below)

log_trace_daily_growth = (0.15 GB/day + 0.50 GB/day + 0.10 GB/day) = 0.75 GB/day

log_trace_active_storage = 0.75 * 2 * 1.2 = 1.8 GB

log_trace_inactive_storage = 0.75 * 6 * 1.2 = 5.4 GB

Note:

After determining the required logs and trace storage, record the log_trace_active_storage and log_trace_inactive_storage values for later use in the installation procedures.
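The Oracle OpenSearch formulas can be sketched the same way. This Python example is illustrative, and the function name is hypothetical. It uses the 10M records * 50 bytes trace reservation and the 25 MB per worker node CNE log growth, both described in the subsections that follow, and assumes 1 GB = 1000 MB. In the worked example, 100 MB/day of CNE logs corresponds to four worker nodes. The sketch does not perform the 500GB ceiling check, so apply that check separately as described in the notes above.

```python
import math

GROWTH_MARGIN = 1.2                 # extra 20% for future logging growth
OCCNE_LOGS_MB_PER_WORKER = 25       # occne_logs_daily_growth = 25 MB * workers
TRACE_RECORDS_PER_DAY = 10_000_000  # trace records reserved per NF per day
TRACE_RECORD_BYTES = 50             # average trace record size
DEFAULT_ACTIVE_GB = 10              # default log_trace_active_storage
DEFAULT_INACTIVE_GB = 30            # default log_trace_inactive_storage


def opensearch_storage_gb(nf_logs_mb_per_day, num_worker_nodes, retention_days=7):
    """Return calculated and provisioned OpenSearch storage sizes in GB (hypothetical helper)."""
    if retention_days <= 3:
        raise ValueError("log_trace_retention_period must be more than 3 days")
    # nf_trace_daily_growth: 10M records/day * 50 bytes/record = 500 MB/day
    nf_trace_mb_per_day = TRACE_RECORDS_PER_DAY * TRACE_RECORD_BYTES / 1_000_000
    occne_logs_mb_per_day = OCCNE_LOGS_MB_PER_WORKER * num_worker_nodes
    # log_trace_daily_growth in GB (1 GB = 1000 MB, as in the worked example).
    daily_gb = (nf_logs_mb_per_day + nf_trace_mb_per_day + occne_logs_mb_per_day) / 1000
    active_gb = daily_gb * 2 * GROWTH_MARGIN
    inactive_gb = daily_gb * (retention_days - 1) * GROWTH_MARGIN
    return {
        "log_trace_daily_growth": daily_gb,
        "log_trace_active_storage": active_gb,
        "log_trace_inactive_storage": inactive_gb,
        # Round up, and fall back to the defaults when the calculated value is smaller.
        "provisioned_active": max(math.ceil(active_gb), DEFAULT_ACTIVE_GB),
        "provisioned_inactive": max(math.ceil(inactive_gb), DEFAULT_INACTIVE_GB),
    }


# Worked example: 150 MB/day of NF logs and four workers (4 * 25 MB = 100 MB/day).
sizes = opensearch_storage_gb(nf_logs_mb_per_day=150, num_worker_nodes=4)
for key, value in sizes.items():
    print(f"{key}: {value:g}")
```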

Calculating NF trace data daily storage growth requirements

NFs store varying amounts of trace data each day, depending on the ingress traffic rate, the trace sampling rate, and the error rate for handling ingress traffic. The default trace sampling rate is 0.01%. Space is reserved for 10M trace records per NF per day (an amount equivalent to a 1% trace sampling rate), using 50 bytes as the average record size (as measured during testing). 1% is used instead of 0.01% to account for the capture of error scenarios and overhead.

nf_trace_daily_num_records = 10M records/day

nf_trace_avg_record_size = 50 bytes/record

nf_trace_daily_growth = 10M records/day * 50 bytes/record = 500 MB/day

Record the value log_trace_daily_growth for later use in the installation procedures.

Note:

Ensure that the trace sampling rate is set to less than 1% under normal circumstances. Collecting a higher percentage of traces causes Oracle OpenSearch to respond more slowly and impacts the performance of the CNE instance. If you want to collect a higher percentage of traces, contact Oracle customer service.

Calculating CNE logs daily storage growth requirements

To determine the correct occne_logs_daily_growth value for the CNE instance, use the formula:

occne_logs_daily_growth = 25 MB * num_kubernetes_worker_nodes

Note:

CNE does not generate any traces, so no additional storage is reserved for CNE trace data.

2.1.4 Generating Root CA Certificate

To use an intermediate Certificate Authority (CA) as an issuer for Istio service mesh mTLS certificates, a signing certificate and key from an external CA must be generated. The generated certificate and key values must be base64 encoded.

Check the Certificate Authority documentation for generating the required certificate and key.
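If the CA tooling produces the certificate and key as files, the following Python sketch shows one way to produce single-line base64 values from them. The file names in the usage comment are hypothetical placeholders. The script only encodes existing files. It does not generate the certificate or key.

```python
import base64
import sys
from pathlib import Path


def b64_one_line(path: str) -> str:
    """Read a file and return its contents base64 encoded on a single line."""
    # base64.b64encode never inserts line breaks, similar to `base64 -w 0` on GNU coreutils.
    return base64.b64encode(Path(path).read_bytes()).decode("ascii")


if __name__ == "__main__":
    # Usage (hypothetical file names): python3 encode_ca.py ca-cert.pem ca-key.pem
    for file_name in sys.argv[1:]:
        print(f"{file_name}: {b64_one_line(file_name)}")
```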

2.2 Baremetal Installation

Note:

Before installing CNE on bare metal, you must complete the preinstallation tasks.

2.2.1 Overview

2.2.1.1 Frame and Component

The initial release of the CNE system provides support for on-prem deployment to a very specific target environment consisting of a frame holding switches and servers. This section describes the layout of the frame and the roles performed by the racked equipment.

2.2.1.1.1 Frame

The physical frame consists of DL380 or DL360 rack mount servers and two Top of Rack (ToR) Cisco switches. The frame components are added from the bottom up, so the designations in the next section are numbered from the bottom of the frame to the top.

Figure 2-1 Frame Overview

2.2.1.1.2 Host Designations

Each physical server has a specific role designation within the CNE solution.

Figure 2-2 Host Designations

2.2.1.1.3 Node Roles

Along with the primary role of each host, you can assign a secondary role. The secondary role can be software related or, in the case of the Bootstrap Host, hardware related, as there are unique out-of-band (OOB) connections to the ToR switches.

Figure 2-3 Node Roles

2.2.1.1.4 Transient Roles

RMS1 has unique out-of-band (OOB) connections to the ToR switches, which gives it the designation of management host. This role is only relevant during initial switch configuration and fault recovery of the switch. RMS1 also has a transient role as the Installer Bootstrap Host, which applies only during initial installation of the frame, where it is used to perform the official installation on RMS2. Later, this host is re-paved to its K8s Master role.

Figure 2-4 Transient Roles

2.2.1.2 Creating CNE Instance

This section describes the procedures to create the CNE instance at a customer site. The following diagram shows the installation context:

Figure 2-5 CNE Installation Overview

Following is the basic installation flow to understand the overall effort:

- Check that the hardware is on-site, properly cabled, and powered up.

- Pre-assemble the basic equipment needed to perform a successful installation:

  - Identify:

    - Download and stage software and other configuration files using the manifests.

    - Identify the layer 2 (MAC) and layer 3 (IP) addresses for the equipment in the target frame.

    - Identify the addresses of key external network services, for example, NTP and DNS.

    - Verify or set all of the credentials for the target frame hardware to known settings.

  - Prepare:

    - Software Repositories: Load the various software repositories (YUM, Helm, Docker, and so on) using the downloaded software and configuration files.

    - Configuration Files: Populate the hosts inventory file with credentials and layer 2 and layer 3 network information, the switch configuration files with assigned IP addresses, and the YAML files with the appropriate information.

- Bootstrap the System:

  - Manually configure a Minimal Bootstrapping Environment (MBE): perform the minimal set of manual operations to enable networking and initial loading of a single Rack Mount Server (RMS1), the transient Installer Bootstrap Host. This procedure installs the minimal set of packages needed to configure switches, iLOs, and the PXE boot environment, and to provision RMS2 as a CNE Storage Host.

  - Using the newly constructed MBE, automatically create the first Bastion Host on RMS2.

  - Using the newly constructed Bastion Host on RMS2, automatically deploy and configure CNE on the other servers in the frame.

- Final Steps:

  - Perform postinstallation checks.

  - Perform recommended security hardening steps.

Cluster Bootstrapping Overview

The following installation procedure describes how to install CNE onto new hardware that has no network configuration on the switches and no provisioned operating systems. Therefore, the initial step in the installation process is to provision RMS1 (see Installing CNE) as a temporary Installer Bootstrap Host. The Bootstrap Host is configured with a minimal set of packages to configure switches, iLOs, and Boot Firmware. From the Bootstrap Host, a virtual Bastion Host is provisioned on RMS2. The Bastion Host is then used to provision (and in the case of the Bootstrap Host, re-provision) the remaining CNE hosts, and to install Kubernetes and the Common Services running within the Kubernetes cluster.

2.2.2 Prerequisites

Before installing and configuring CNE on Bare Metal, ensure that the following prerequisites are met.

Note:

Ensure that the Oracle X8-2 or X9-2 Server's Integrated Lights Out Manager (ILOM) firmware is up to date. The ILOM firmware is crucial for seamless functioning of CNE and is essential for optimal performance, security, and compatibility. To update the ILOM firmware, perform the steps outlined in the Oracle documentation or contact the system administrator.

2.2.2.1 Configuring Artifact Acquisition and Hosting

CNE requires artifacts from Oracle Software Delivery Cloud (OSDC), Oracle Support (MOS), the Oracle YUM repository, and certain open-source projects. CNE deployment environments are not expected to have direct internet access. Thus, customer-provided intermediate repositories are necessary for the CNE installation process. These intermediate repositories need CNE dependencies to be loaded into them. This section provides the list of artifacts needed in the repositories.

2.2.2.1.1 Oracle eDelivery Artifact Acquisition

Table 2-2 CNE Artifacts

| Artifact | Description | File Type | Destination repository |
|---|---|---|---|
| occne-images-23.4.x.tgz | CNE Installers (Docker images) from OSDC/MOS | Tar GZ | Docker Registry |
| Templates | OSDC/MOS | Config files (.conf, .ini, .yaml, .mib, .sh, .txt) | Local media |

2.2.2.1.2 Third Party Artifacts

CNE dependencies needed from open-source software must be available in repositories that are reachable by the CNE installation tools. For an accounting of third party artifacts needed for this installation, see the Artifact Acquisition and Hosting chapter.

2.2.2.1.3 Populating MetalLB Configuration

Introduction

The MetalLB resources file (mb_resources.yaml) defines the BGP peers and address pools for MetalLB. The mb_resources.yaml file must be placed in the same directory (/var/occne/<cluster_name>) as the hosts.ini file.

Limitations

The IP addresses in each peer address pool can use one of three formats. Each pool can use a different format from the others if desired.

- IP List

  A list of IPs (each on a single line) in single quotes in the following format: 'xxx.xxx.xxx.xxx/32'. The IPs do not have to be sequential. The list must contain as many IPs as the application needs.

- IP Range

  A range of IPs, separated by a dash, in the following format: 'xxx.xxx.xxx.xxx - xxx.xxx.xxx.xxx'. The range must cover as many IPs as the application needs.

- CIDR (IP-slash notation)

  A single subnet defined in the following format: 'xxx.xxx.xxx.xxx/nn'. The CIDR must cover as many IPs as the application needs.

The peer-address IP must be in a different subnet from the IP subnets used to define the IPs for each peer address pool in the mb_resources.yaml file.
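Before you edit mb_resources.yaml, you can check that each pool provides enough addresses and that the BGP peer address is not inside any pool. The following Python sketch uses the standard `ipaddress` module, and its helper names are hypothetical. Because /32 list entries do not carry the site subnet mask, the peer check only confirms that the peer falls outside the listed entries. It cannot prove that the peer is in a different subnet. Overlapping entries are counted twice.

```python
import ipaddress


def parse_pool_entry(entry: str) -> list:
    """Convert one 'addresses' entry (IP list item, IP range, or CIDR) into networks."""
    entry = entry.strip().strip("'")
    if "-" in entry:
        # IP Range format: 'xxx.xxx.xxx.xxx - xxx.xxx.xxx.xxx'
        start_text, end_text = (part.strip() for part in entry.split("-", 1))
        start = ipaddress.IPv4Address(start_text)
        end = ipaddress.IPv4Address(end_text)
        if end < start:
            raise ValueError(f"range end is lower than range start: {entry}")
        return list(ipaddress.summarize_address_range(start, end))
    # IP List items ('x.x.x.x/32') and CIDR entries ('x.x.x.x/nn') are both networks.
    return [ipaddress.IPv4Network(entry)]


def pool_size(entries: list) -> int:
    """Total number of addresses a pool offers (overlapping entries are counted twice)."""
    return sum(net.num_addresses for e in entries for net in parse_pool_entry(e))


def peer_outside_pool(peer: str, entries: list) -> bool:
    """True if the BGP peer address is not inside any of the pool's entries."""
    peer_ip = ipaddress.IPv4Address(peer)
    return all(peer_ip not in net for e in entries for net in parse_pool_entry(e))


oam = ["'10.75.200.22/32'", "'10.75.200.23/32'", "'10.75.200.24/32'",
       "'10.75.200.25/32'", "'10.75.200.26/32'"]
signalling = ["'10.75.200.30 - 10.75.200.40'"]
print(pool_size(oam), pool_size(signalling))              # prints: 5 11
print(peer_outside_pool("172.16.2.3", oam + signalling))  # prints: True
```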

Configuring MetalLB Pools and Peers

- Add BGP peers and address groups: Referring to the data collected in the Installation Preflight Checklist, add BGP peers (ToRswitchA_Platform_IP, ToRswitchB_Platform_IP) and address groups for each address pool. The address pools list the IP addresses that MetalLB is allowed to allocate.

- Edit the mb_resources.yaml file with the site-specific values found in the Installation Preflight Checklist.

Note:

The oam peer address pool is required for defining the IPs. Other pools are application specific and can be named to best fit the application they apply to. The following examples show how oam and signaling are used to define the IPs, each using a different method.

Example for oam:

```yaml
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  creationTimestamp: null
  name: peer1
  namespace: occne-infra
spec:
  holdTime: 1m30s
  keepaliveTime: 0s
  myASN: 64512
  passwordSecret: {}
  peerASN: 64501
  peerAddress: <ToRswitchA_Platform_IP>
status: {}
---
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  creationTimestamp: null
  name: peer2
  namespace: occne-infra
spec:
  holdTime: 1m30s
  keepaliveTime: 0s
  myASN: 64512
  passwordSecret: {}
  peerASN: 64501
  peerAddress: <ToRswitchB_Platform_IP>
status: {}
---
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  creationTimestamp: null
  name: oam
  namespace: occne-infra
spec:
  addresses:
    - '<MetalLB_oam_Subnet_IPs>'
  autoAssign: false
status: {}
---
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  creationTimestamp: null
  name: <application_specific_peer_address_pool_name>
  namespace: occne-infra
spec:
  addresses:
    - '<MetalLB_app_Subnet_IPs>'
  autoAssign: false
status: {}
---
apiVersion: metallb.io/v1beta1
kind: BGPAdvertisement
metadata:
  creationTimestamp: null
  name: bgpadvertisement1
  namespace: occne-infra
spec:
  ipAddressPools:
    - oam
    - <application_specific_peer_address_pool_name>
status: {}
```

Example for signaling:

```yaml
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  creationTimestamp: null
  name: peer1
  namespace: occne-infra
spec:
  holdTime: 1m30s
  keepaliveTime: 0s
  myASN: 64512
  passwordSecret: {}
  peerASN: 64501
  peerAddress: 172.16.2.3
status: {}
---
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  creationTimestamp: null
  name: peer2
  namespace: occne-infra
spec:
  holdTime: 1m30s
  keepaliveTime: 0s
  myASN: 64512
  passwordSecret: {}
  peerASN: 64501
  peerAddress: 172.16.2.2
status: {}
---
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  creationTimestamp: null
  name: oam
  namespace: occne-infra
spec:
  addresses:
    - '10.75.200.22/32'
    - '10.75.200.23/32'
    - '10.75.200.24/32'
    - '10.75.200.25/32'
    - '10.75.200.26/32'
  autoAssign: false
status: {}
---
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  creationTimestamp: null
  name: signalling
  namespace: occne-infra
spec:
  addresses:
    - '10.75.200.30 - 10.75.200.40'
  autoAssign: false
status: {}
---
apiVersion: metallb.io/v1beta1
kind: BGPAdvertisement
metadata:
  creationTimestamp: null
  name: bgpadvertisement1
  namespace: occne-infra
spec:
  ipAddressPools:
    - oam
    - signalling
status: {}
```
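Writing mb_resources.yaml by hand can be error-prone for sites with several application pools. The following Python sketch renders documents in the same layout as the MetalLB examples above, using only string formatting. The helper names are hypothetical. The ASNs, timers, and namespace are copied from the examples and must be replaced with the values from the Installation Preflight Checklist.

```python
# Hypothetical helper: ASNs, timers, and namespace are copied from the examples above;
# replace them with the site values from the Installation Preflight Checklist.
MY_ASN = 64512
PEER_ASN = 64501
NAMESPACE = "occne-infra"


def bgp_peer(name: str, peer_address: str) -> str:
    return f"""apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  creationTimestamp: null
  name: {name}
  namespace: {NAMESPACE}
spec:
  holdTime: 1m30s
  keepaliveTime: 0s
  myASN: {MY_ASN}
  passwordSecret: {{}}
  peerASN: {PEER_ASN}
  peerAddress: {peer_address}
status: {{}}
"""


def address_pool(name: str, addresses: list) -> str:
    items = "\n".join(f"    - '{address}'" for address in addresses)
    return f"""apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  creationTimestamp: null
  name: {name}
  namespace: {NAMESPACE}
spec:
  addresses:
{items}
  autoAssign: false
status: {{}}
"""


def bgp_advertisement(name: str, pool_names: list) -> str:
    items = "\n".join(f"    - {pool}" for pool in pool_names)
    return f"""apiVersion: metallb.io/v1beta1
kind: BGPAdvertisement
metadata:
  creationTimestamp: null
  name: {name}
  namespace: {NAMESPACE}
spec:
  ipAddressPools:
{items}
status: {{}}
"""


def render_mb_resources(peer_addresses: list, pools: dict) -> str:
    """Build the multi-document YAML text, with documents separated by ---."""
    docs = [bgp_peer(f"peer{i}", addr) for i, addr in enumerate(peer_addresses, start=1)]
    docs += [address_pool(name, addrs) for name, addrs in pools.items()]
    docs.append(bgp_advertisement("bgpadvertisement1", list(pools)))
    return "---\n".join(docs)


if __name__ == "__main__":
    print(render_mb_resources(
        ["172.16.2.3", "172.16.2.2"],
        {"oam": ["10.75.200.22/32", "10.75.200.23/32"],
         "signalling": ["10.75.200.30 - 10.75.200.40"]},
    ))
```

Review the generated text before saving it as mb_resources.yaml in /var/occne/<cluster_name>.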

2.2.3 Predeployment Configuration - Preparing a Minimal Boot Strapping Environment

The steps in this section provide the details to establish a minimal bootstrap environment (to support the automated installation of the CNE environment) on the Installer Bootstrap Host using a Keyboard, Video, Mouse (KVM) connection.

2.2.3.1 Installing Oracle Linux X.x on Bootstrap Host

This procedure defines the steps to install Oracle Linux X.x onto the CNE Installer Bootstrap Host. This host is used to configure the networking throughout the system and install OLX. After OLX installation, the host is repaved as a Kubernetes Master Host in a later procedure.

Prerequisites

- USB drive of sufficient size to contain the ISO (approximately 5 GB)

- Oracle Linux X.x ISO (for example, Oracle Linux 9.x ISO) is available

- YUM repository file is available

- Keyboard, Video, Mouse (KVM) are available

Limitations and Expectations

- The configuration of the Installer Bootstrap Host has to be quick and easy. The Installer Bootstrap Host is re-paved with the appropriate OS configuration for cluster and DB operation at a later installation stage. The Installer Bootstrap Host needs a Linux OS and basic networking to start the installation process.

- All steps in this procedure are performed using Keyboard, Video, Mouse (KVM).

Bootstrap Install Procedure

- Create Bootable USB Media:

- On the installer's notebook, download the OLX ISO from the customer's repository.

- Push the OLX ISO image onto the USB flash drive.

Since the installer's notebook can run Windows or Linux, you must determine the appropriate details to run this task. For a Linux-based notebook, insert a USB flash drive of the appropriate size into the laptop (or some other Linux host to which you can copy the ISO), and run the dd command to create a bootable USB drive with the Oracle Linux X ISO (for example, Oracle Linux 9 ISO).

$ dd if=<path to ISO> of=<USB device path> bs=1M

- Install Oracle Linux on the Installer Bootstrap Host:

Note:

The following procedure covers installing OL9 and provides the options and commands accordingly. The procedure may vary for other versions.

- Connect a Keyboard, Video, and Mouse (KVM) to the Installer Bootstrap Host's monitor and USB ports.

- Plug the USB flash drive containing the bootable ISO into an available USB port on the Bootstrap host (usually in the front panel).

- Reboot the host by momentarily pressing the power button on the host's front panel. The button turns yellow. If the button stays yellow, press the button again. The host automatically boots onto the USB flash drive.

Note:

If the host was configured previously and the USB is not in the bootable path according to the boot order, the boot process will be unsuccessful.

- If the host is unable to boot onto the USB, repeat step 2c, and interrupt the boot process by pressing the F11 key, which displays the Boot Menu.

If the host has been recently booted with an OL, the Boot Menu displays Oracle Linux at the top of the list. Select Generic USB Boot as the first boot device and proceed.

- The host attempts to boot from the USB. The Boot Menu is displayed on the screen. Select Install Oracle Linux 9.x.y and press ENTER. This begins the boot process and the system displays the Welcome screen.

When prompted for the language to use, select the default setting: English (United States) and click Continue in the lower left corner.

Note:

You can also select the second option, Test this media & install Oracle Linux 9.x.y. This option first runs the media verification process, which takes some time to complete.

- The options on the Welcome screen are displayed with a list of languages on the left and a list of locales on the right. Select the appropriate language from the search box below the list of languages.

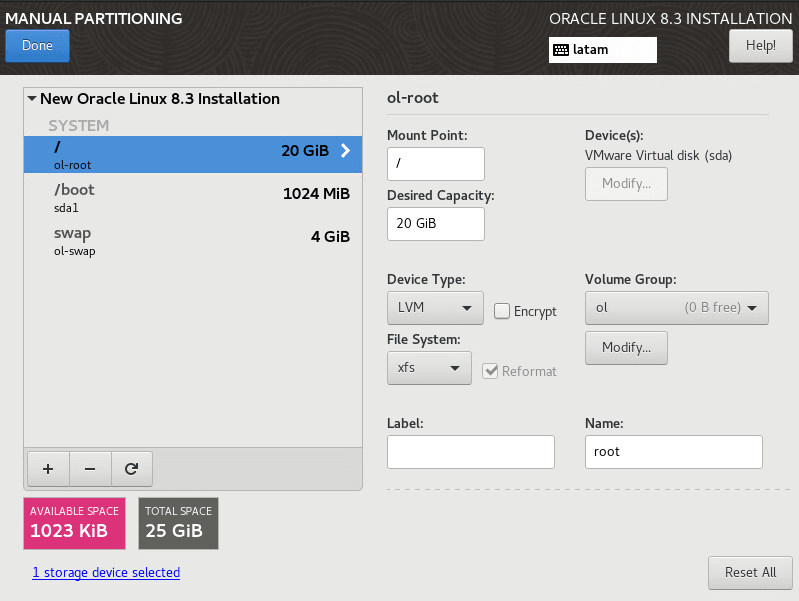

- The system displays the INSTALLATION SUMMARY page. The system expects the following settings on the page. If any of these are not set correctly, select that menu item and make the appropriate changes.

- LANGUAGE SUPPORT: English (United States)

- KEYBOARD: English (US)

- INSTALLATION SOURCE: Local Media

- SOFTWARE SELECTION: Minimal Install

- INSTALLATION DESTINATION must display No disks selected. Select INSTALLATION DESTINATION to indicate the drive on which to install the OS.

- Select the first HDD (in this case, it is the first one on the list or the 1.6 TB disk) and click DONE in the upper right corner.

If the server has already been installed, a red banner at the bottom of the page indicates an error condition. Selecting that banner opens a dialog indicating that there is not enough free space (which can also indicate that an OS has already been installed). The dialog can show both 1.6 TB HDDs as claimed, or only one.

If only one HDD is displayed (or both 1.6 TB drives are selected), select the Reclaim space button. Another dialog appears. Select the Delete all button and then the Reclaim space button again. Select DONE to return to the INSTALLATION SUMMARY screen.

If the disk selection dialog appears after you select the red banner at the bottom of the page, a full installation of the RMS has already been performed (usually because the procedure had to be restarted after it was successfully completed). In this case, select Modify Disk Selection. This returns you to the disk selection page. Select both HDDs and click DONE. The red banner must indicate to reclaim the space. Perform step 2h to reclaim the space again.

- Select DONE. This returns to the INSTALLATION SUMMARY screen.

- At the INSTALLATION SUMMARY screen, select ROOT PASSWORD.

Enter a root password appropriate for this installation.

It is recommended to use the secure password that the customer provides. This minimizes the risk of the host being compromised during installation.

- At the INSTALLATION SUMMARY screen, select Begin Installation. The INSTALLATION PROGRESS screen is displayed.

- After the installation process completes, remove the USB and select Reboot System to complete the installation and boot into the OS on the Bootstrap Host. At the end of the boot process, the login prompt appears.

2.2.3.2 Configuring Host BIOS

Introduction

The following procedure defines the steps to make the Basic Input Output System (BIOS) changes on the following server types:

- The Bootstrap host uses the KVM. If you are using a previously configured Bootstrap host that can be accessed through the remote HTML5 console, follow the procedure for the remaining servers.

- All the remaining servers use the remote HTML5 console.

The steps can vary based on the server type. Follow the appropriate steps specific to configured server. Some of the steps require a system reboot and are indicated in the procedure.

Prerequisites

- For the Bootstrap host, the procedure Installing Oracle Linux X.x on Bootstrap Host is complete.

- For all other servers, the procedures Configure Top of Rack 93180YC-EX Switches and Configuring Addresses for RMS iLOs are complete.

Limitations and Expectations

- Applies to HP Gen10 iLO 5 and Netra X8-2 server only.

- Procedures listed here apply to both Bootstrap Host and other servers unless indicated explicitly.

Procedure for Netra X8-2 server

By default, the BIOS of the Netra X8-2 server is set to the factory settings with predefined default values. Do not change the BIOS settings of a new X8-2 server. If any issue occurs in a new X8-2 server, reset the BIOS to the default factory settings.

- Log in to https://<netra ilom address>.

- Navigate to System Management, select BIOS.

- Set the value to Factory from the drop-down list for Reset to Defaults under Settings.

- Click Save.

Exposing the System Configuration Utility on a RMS Host

Perform the following steps to launch the HP iLO 5 System Configuration Utility main page from the KVM. This procedure does not provide instructions for connecting the console, as this can differ for each installation.

- After providing connections for the KVM to access the console, you must reboot the host by momentarily pressing the power button on the front of the Bootstrap host.

- Navigate to the HP ProLiant DL380 Gen10 System Utilities.

Once the remote console has been exposed, reset the system to force it through a restart. When the initial window is displayed, press the F9 key repeatedly. Once F9 is highlighted in the lower left corner of the remote console, the main System Utilities screen eventually appears.

- The System Utilities screen is displayed in the remote console.

Note:

As CNE 23.4.0 upgraded Oracle Linux to version 9, some OS capabilities were removed for security reasons. This includes the removal of older insecure cryptographic policies and of shorter RSA key lengths, which are no longer supported. For more information, see Step c.

- Perform the following steps to launch the System Utility for other RMS servers:

- SSH to the RMS using the iLO IP address and the root user and password previously assigned in the Installation PreFlight Checklist. This displays the HP iLO prompt.

```
ssh root@<rms_ilo_ip_address>
Using username "root".
Last login: Fri Apr 19 12:24:56 2019 from 10.39.204.17
[root@localhost ~]# ssh root@192.168.20.141
root@192.168.20.141's password:
User:root logged-in to ILO2M2909004F.labs.nc.tekelec.com(192.168.20.141 / FE80::BA83:3FF:FE47:649C)
Integrated Lights-Out 5
iLO Advanced 2.30 at Aug 24 2020
Server Name:
Server Power: On

</>hpiLO->
```

- Use Virtual Serial Port (VSP) to connect to the blade remote console:

</>hpiLO->vsp

- Power cycle the blade to bring up the System Utilities for that blade.

Note:

The System Utility is a text-based version of the utility exposed on the RMS through the KVM. Use the directional (arrow) keys to move between selections, the ENTER key to select, and ESC to return from the current selection.

- Access the System Utility by pressing ESC 9.


- [Optional]: If you are using OL9, depending on the host used to connect to the RMS, you may encounter the following error messages when you connect to iLOM. These errors occur in OL9 due to the change in security policies:

Error in Oracle X8-2 or X9-2 server when the RSA key length is too short:

```
$ ssh root@172.10.10.10
Bad server host key: Invalid key length
```

Error in HP server when the legacy crypto policy is not enabled:

```
$ ssh root@172.11.11.10
Unable to negotiate with 172.11.11.10 port 22: no matching key exchange method found. Their offer: diffie-hellman-group14-sha1,diffie-hellman-group1-sha1
```

Perform the following steps to resolve these iLOM connectivity issues:

Note:

Run the following commands on the connecting host only if the host experiences the aforementioned errors while connecting to HP iLO or Oracle iLOM through SSH.

- Perform the following steps to reenable Legacy crypto policies to connect to HP iLO using SSH:

  - Run the following command to enable Legacy crypto policies:

    $ sudo update-crypto-policies --set LEGACY

  - Run the following command to revert the policies to default:

    $ sudo update-crypto-policies --set DEFAULT

- Run the following command to allow a short RSA key length while connecting to Oracle X8-2 or X9-2 server using SSH:

  $ ssh -o RSAMinSize=1024 root@172.10.10.10

- Navigate to the System Utility as per step 1.

- Select System Configuration.

- Select BIOS/Platform Configuration (RBSU).

- Select Boot Options: If the Boot Mode is currently UEFI Mode and you decide to use BIOS Mode, use this procedure to change to Legacy BIOS Mode.

Note:

The server reset must go through an attempt to boot before the changes apply.

- Select the Reboot Required dialog window to drop back into the boot process. The boot must actually attempt to boot from the boot order. The boot attempt fails because disks are not installed at this point. The System Utility can then be accessed again.

- After the reboot, when you re-enter the System Utility, the Boot Options page appears.

- Select F10: Save to save and stay in the utility or select the F12: Save and Exit to save and exit to complete the current boot process.

- Navigate to the System Utility as per step 1.

- Select System Configuration.

- Select BIOS/Platform Configuration (RBSU).

- Select Boot Options and click the drop-down list. When the Warning prompt appears, click OK.

Note:

The server reset must go through an attempt to boot before the changes apply.

- Select the Reboot Required dialog window. Click OK in the reboot warning window.

- After the reboot, when you re-enter the System Utility, the Boot Options page appears.

The Boot Mode is changed to UEFI Mode, and UEFI Optimized Boot is automatically changed to Enabled.

- Select F10: Save to save and stay in the utility or select the F12: Save and Exit to save and exit to complete the current boot process.

Note:

Ensure that the pxe_install_lights_out_usr and pxe_install_lights_out_passwd fields match the values provided in the hosts inventory files created using the template: CNE Inventory File Preparation.

- Navigate to the System Utility as per step 1.

- Select System Configuration.

- Select iLO 5 Configuration Utility.

- Select User Management → Add User.

- Select the appropriate permissions. For the root user, set all permissions to YES. Enter root in the New User Name and Login Name fields, select the Password field, and press Enter to type <password> twice.

- Select F10: Save to save and stay in the utility, or select F12: Save and Exit to save, exit, and complete the current boot process.

- Navigate to the System Utility as per step 1.

- Select System Configuration.

- Select BIOS/Platform Configuration (RBSU).

- Select Boot Options for Legacy Mode:

This menu defines three settings: the Boot Mode, which must be set to Legacy BIOS Mode; UEFI Optimized Boot, which must be disabled; and the Boot Order Policy, which must be set to Retry Boot Order Indefinitely (that is, the server keeps trying to boot without ever going to disk). On this screen, select Legacy BIOS Boot Order. If the server is not in Legacy BIOS Mode, follow the procedure Change over from UEFI Booting Mode to Legacy BIOS Booting Mode to set the Configuration Utility to Legacy BIOS Mode.

- For UEFI Mode, select Boot Options, click UEFI Boot Settings, and then click UEFI Boot Order.

- Select Legacy BIOS Boot Order.

This window defines the legacy BIOS boot order. It lists the devices on which the server listens for the DHCP OFFER, which carries the reserved IPv4 address, after the server broadcasts the PXE DHCP DISCOVER message.

In the default view, the 10Gb Embedded FlexibleLOM 1 Port 1 entry is at the bottom of the list. When the server scans for the response, it works down this list until it receives one. The server waits a finite amount of time on each NIC before giving up and trying the next one, so moving the 10Gb port up the list reduces the time required to process the DHCP OFFER.

To move an entry, select it, hold down the left mouse button, and drag it up the list to the position where it must reside. UEFI Mode has a similar list.

- Move the 10Gb Embedded FlexibleLOM 1 Port 1 entry above the 1Gb Embedded LOM 1 Port 1 entry.

- Select F10: Save to save and stay in the utility, or select F12: Save and Exit to save, exit, and complete the current boot process.

- Verifying Default Settings

- Navigate to the System Configuration Utility as per step 1.

- Select System Configuration.

- Select BIOS/Platform Configuration (RBSU).

- Select Virtualization Options.

This screen displays the settings (Enabled or Disabled) for the Intel(R) Virtualization Technology (Intel VT), Intel(R) VT-d, and SR-IOV options. The default value for each option is Enabled; verify that none of them has been disabled.

- Select F10: Save to save and stay in the utility, or select F12: Save and Exit to save, exit, and complete the current boot process.

- Navigate to the System Configuration Utility as per step 1.

- Select System Configuration.

- Select Embedded RAID 1 : HPE Smart Array P408i-a SR Gen10.

- Select Array Configuration.

- Select Manage Arrays.

- Select Array A (or the designated array configuration if there is more than one).

- Select Delete Array.

- Select Submit Changes.

- Select F10: Save to save and stay in the utility, or select F12: Save and Exit to save, exit, and complete the current boot process.

- Navigate to the System Configuration Utility as per step 1.

- Select System Configuration.

- Select Embedded RAID 1 : HPE Smart Array P408i-a SR Gen10.

- Select Set Bootable Device(s) for Legacy Boot Mode. If the boot devices are not set, Not Set is displayed for the primary and secondary devices.

- Select Select Bootable Physical Drive.

- Select Port 1| Box:3 Bay:1 Size:1.8 TB SAS HP EG00100JWJNR.

Note:

This example includes two HDDs and two SSDs. The actual configuration can be different.

- Select Set as Primary Bootable Device.

- Select Back to Main Menu.

This returns to the HPE Smart Array P408i-a SR Gen10 menu. The secondary bootable device is left as Not Set.

- Select F10: Save to save and stay in the utility, or select F12: Save and Exit to save, exit, and complete the current boot process.

Note:

This step requires a reboot after completion.

- Navigate to the System Configuration Utility as per step 1.

- Select System Configuration.

- Select iLO 5 Configuration Utility.

- Select Network Options.

- Enter the IP Address, Subnet Mask, and Gateway IP Address values provided in the Installation PreFlight Checklist.

- Select F12: Save and Exit to complete the current boot process. A reboot is required when setting the static IP address for the iLO 5. A warning appears indicating that you must wait 30 seconds for the iLO to reset, followed by a prompt requesting a reboot. Select Reboot.

- Once the reboot is complete, you can re-enter the System Utility and verify the settings if necessary.

2.2.3.3 Configuring Top of Rack 93180YC-EX Switches

Introduction

The following procedure provides the steps to initialize and configure Cisco 93180YC-EX switches according to the topology design.

Note:

All instructions in this procedure are run from the Bootstrap Host.

Prerequisites

- The procedure Installation of Oracle Linux X.X on Bootstrap Host is complete.

- The switches are in a factory default state. If the switches are out of the box or preconfigured, run write erase and reload to restore the factory default.

- The switches are connected as per the Installation PreFlight Checklist. The customer uplinks are not activated until outside traffic is necessary.

- DHCP, XINETD, and TFTP are already installed on the Bootstrap host but not configured.

- The Utility USB is available and contains all the necessary files, as described in Installation PreFlight Checklist: Create Utility USB.

Limitations/Expectations

All steps are run from a Keyboard, Video, Mouse (KVM) connection.

References

Configuration Procedure

Following is the procedure to configure Top of Rack 93180YC-EX Switches:

- Log in to the Bootstrap host as the root user.

Note:

All instructions in this step are run from the Bootstrap Host.

- Insert and mount the Utility USB that contains the configuration and script files. Verify that the files are listed on the USB using the following command:

ls /media/usb

Note:

Instructions for mounting the USB can be found in Installation of Oracle Linux X.X on Bootstrap Host. Perform only steps 2 and 3 in that procedure.

- Create a bridge interface as follows:

- If it is an HP Gen10 RMS server, create a bridge interface to connect both management ports and set up the management bridge to support switch initialization.

Note:

<CNE_Management_IP_Address_With_Prefix> is from Installation PreFlight Checklist: Complete Site Survey Host IP Table, Row 1, CNE Management IP Addresses (VLAN 4) column. <ToRswitch_CNEManagementNet_VIP> is from Installation PreFlight Checklist: Complete OA and Switch IP Table.

nmcli con add con-name mgmtBridge type bridge ifname mgmtBridge
nmcli con add type bridge-slave ifname eno2 master mgmtBridge
nmcli con add type bridge-slave ifname eno3 master mgmtBridge
nmcli con mod mgmtBridge ipv4.method manual ipv4.addresses 192.168.2.11/24
nmcli con up mgmtBridge
nmcli con add type bond con-name bond0 ifname bond0 mode 802.3ad
nmcli con add type bond-slave con-name bond0-slave-1 ifname eno5 master bond0
nmcli con add type bond-slave con-name bond0-slave-2 ifname eno6 master bond0

The following commands configure the VLAN and IP address for this bootstrap server. <mgmt_vlan_id> is the same as in hosts.ini, and <bootstrap bond0 address> is the same as the ansible_host IP for this bootstrap server:

nmcli con mod bond0 ipv4.method manual ipv4.addresses <bootstrap bond0 address>
nmcli con add con-name bond0.<mgmt_vlan_id> type vlan id <mgmt_vlan_id> dev bond0
nmcli con mod bond0.<mgmt_vlan_id> ipv4.method manual ipv4.addresses <CNE_Management_IP_Address_With_Prefix> ipv4.gateway <ToRswitch_CNEManagementNet_VIP>
nmcli con up bond0.<mgmt_vlan_id>

Example:

nmcli con mod bond0 ipv4.method manual ipv4.addresses 172.16.3.4/24
nmcli con add con-name bond0.4 type vlan id 4 dev bond0
nmcli con mod bond0.4 ipv4.method manual ipv4.addresses <CNE_Management_IP_Address_With_Prefix> ipv4.gateway <ToRswitch_CNEManagementNet_VIP>
nmcli con up bond0.4

- For a Netra X8-2 server, only three of the five Ethernet ports can be enabled, so there are not enough ports to configure mgmtBridge and bond0 at the same time. In this step, connect NIC1 or NIC2 to the ToR switch mgmt ports to configure the ToR switches. After that, reconnect the ports to the Ethernet ports on the ToR switches.

nmcli con add con-name mgmtBridge type bridge ifname mgmtBridge
nmcli con add type bridge-slave ifname eno2np0 master mgmtBridge
nmcli con add type bridge-slave ifname eno3np1 master mgmtBridge
nmcli con mod mgmtBridge ipv4.method manual ipv4.addresses 192.168.2.11/24
nmcli con up mgmtBridge
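Before moving on, it can help to confirm that the connections created above are actually active. The following is a minimal sketch and is not part of the CNE tooling. missing_connections is a hypothetical helper: it reads the terse output of nmcli -t -f NAME,TYPE,DEVICE connection show --active on stdin and prints each expected connection name that does not appear. The names in the usage comment (mgmtBridge, bond0, bond0.4) come from the HP Gen10 example above. Adjust them for your VLAN ID, or check only mgmtBridge on a Netra X8-2 server.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of CNE): print each expected connection name
# that is missing from `nmcli -t -f NAME,TYPE,DEVICE connection show --active`
# output supplied on stdin. Prints nothing when every name is present.
# Assumes connection names contain no colons (nmcli escapes them in terse mode).
missing_connections() {
  local active name
  # Terse nmcli output is colon-separated; keep only the NAME column.
  active=$(cut -d: -f1)
  for name in "$@"; do
    # -F: fixed string, -x: whole-line match, so "bond0" does not match "bond0.4".
    grep -Fxq -- "$name" <<< "$active" || echo "$name"
  done
}

# Example usage on the bootstrap host (HP Gen10 layout, management VLAN 4):
#   nmcli -t -f NAME,TYPE,DEVICE connection show --active \
#     | missing_connections mgmtBridge bond0 bond0.4
```

Empty output means every listed connection is active. Any name that is printed points to the nmcli step to revisit.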


- Copy the customer Oracle Linux repo file (for example, ol9.repo) from the USB to the /etc/yum.repos.d directory, and back up the original repo files (for example, oracle-linux-ol9.repo, uek-ol9.repo, and virt-ol9.repo):

# cd /etc/yum.repos.d
# mv oracle-linux-ol9.repo oracle-linux-ol9.repo.bkp
# mv virt-ol9.repo virt-ol9.repo.bkp
# mv uek-ol9.repo uek-ol9.repo.bkp
# cp /media/usb/winterfell-ol9.repo ./

- Install and set up the tftp server on the bootstrap host:

dnf install -y tftp-server tftp
cp /usr/lib/systemd/system/tftp.service /etc/systemd/system/tftp-server.service
cp /usr/lib/systemd/system/tftp.socket /etc/systemd/system/tftp-server.socket
tee /etc/systemd/system/tftp-server.service <<'EOF'
[Unit]
Description=Tftp Server
Requires=tftp-server.socket
Documentation=man:in.tftpd

[Service]
ExecStart=/usr/sbin/in.tftpd -c -p -s /var/lib/tftpboot
StandardInput=socket

[Install]
WantedBy=multi-user.target
Also=tftp-server.socket
EOF

- Enable tftp on the Bootstrap host and verify that tftp is active and enabled:

systemctl daemon-reload
systemctl enable --now tftp-server

Verify that tftp-server is active and enabled:

systemctl status tftp-server
ps -elf | grep tftp

- Install and set up the dhcp server on the bootstrap host:

dnf -y install dhcp-server

- Copy the dhcpd.conf file from the Utility USB (created in Installation PreFlight Checklist: Create the dhcpd.conf File) to the /etc/dhcp/ directory:

$ cp /media/usb/dhcpd.conf /etc/dhcp/

- Start and enable the dhcpd service.

# systemctl enable --now dhcpd

Use the following command to verify that dhcpd is active and enabled:

# systemctl status dhcpd

- Copy the switch configuration and script files from the Utility USB to the /var/lib/tftpboot/ directory as follows:

cp /media/usb/93180_switchA.cfg /var/lib/tftpboot/
cp /media/usb/93180_switchB.cfg /var/lib/tftpboot/
cp /media/usb/poap_nexus_script.py /var/lib/tftpboot/

- Modify the POAP script file to change the username and password credentials used to log in to the Bootstrap host:

# vi /var/lib/tftpboot/poap_nexus_script.py

# Host name and user credentials
options = {
    "username": "<username>",
    "password": "<password>",
    "hostname": "192.168.2.11",
    "transfer_protocol": "scp",
    "mode": "serial_number",
    "target_system_image": "nxos.9.2.3.bin",
}

Note:

The version nxos.9.2.3.bin is used by default. If a different version is to be used, set target_system_image to the new version.

- Run the md5Poap.sh script from the Utility USB (created in Installation PreFlight Checklist: Create the md5Poap Bash Script) to update the md5sum of the POAP script file as follows:

# cd /var/lib/tftpboot/
# /bin/bash md5Poap.sh

- Create the files necessary to configure the ToR switches using the serial number of each switch.

Note:

The serial number is located on a pull-out card on the back of the switch, in the leftmost power supply. Read the exact characters carefully. If the switches are preconfigured, you can also verify the serial numbers using the show license host-id command.

- Copy /var/lib/tftpboot/93180_switchA.cfg to a file called /var/lib/tftpboot/conf.<switchA serial number>.

Modify the switch-specific values in the /var/lib/tftpboot/conf.<switchA serial number> file, including all the values in curly braces shown in the following commands. These values are listed in Installation PreFlight Checklist: ToR and Enclosure Switches Variables Table (Switch Specific) and Installation PreFlight Checklist: Complete OA and Switch IP Table. Modify these values with the following sed commands, or use an editor such as vi.

Note:

The template supports 12 RMS servers. If there are fewer than 12 servers, the configuration for the unused server ports does not work without physical connections, but it does not affect the servers that are present. If there are more than 12 servers, follow the same pattern to add configuration for the additional servers.

# sed -i 's/{switchname}/<switch_name>/' conf.<switchA serial number>
# sed -i 's/{admin_password}/<admin_password>/' conf.<switchA serial number>
# sed -i 's/{user_name}/<user_name>/' conf.<switchA serial number>
# sed -i 's/{user_password}/<user_password>/' conf.<switchA serial number>
# sed -i 's/{ospf_md5_key}/<ospf_md5_key>/' conf.<switchA serial number>
# sed -i 's/{OSPF_AREA_ID}/<ospf_area_id>/' conf.<switchA serial number>
# sed -i 's/{NTPSERVER1}/<NTP_server_1>/' conf.<switchA serial number>
# sed -i 's/{NTPSERVER2}/<NTP_server_2>/' conf.<switchA serial number>
# sed -i 's/{NTPSERVER3}/<NTP_server_3>/' conf.<switchA serial number>
# sed -i 's/{NTPSERVER4}/<NTP_server_4>/' conf.<switchA serial number>
# sed -i 's/{NTPSERVER5}/<NTP_server_5>/' conf.<switchA serial number>

Note: If fewer than five NTP servers are available, delete the extra NTP server lines instead, for example:

# sed -i '/{NTPSERVER5}/d' conf.<switchA serial number>

Note: The following commands use # as the sed delimiter because the values contain the '/' character.

# sed -i 's#{ALLOW_5G_XSI_LIST_WITH_PREFIX_LEN}#<MetalLB_Signal_Subnet_With_Prefix>#g' conf.<switchA serial number>
# sed -i 's#{CNE_Management_SwA_Address}#<ToRswitchA_CNEManagementNet_IP>#g' conf.<switchA serial number>
# sed -i 's#{CNE_Management_SwB_Address}#<ToRswitchB_CNEManagementNet_IP>#g' conf.<switchA serial number>
# sed -i 's#{CNE_Management_Prefix}#<CNEManagementNet_Prefix>#g' conf.<switchA serial number>
# sed -i 's#{SQL_replication_SwA_Address}#<ToRswitchA_SQLreplicationNet_IP>#g' conf.<switchA serial number>
# sed -i 's#{SQL_replication_SwB_Address}#<ToRswitchB_SQLreplicationNet_IP>#g' conf.<switchA serial number>
# sed -i 's#{SQL_replication_Prefix}#<SQLreplicationNet_Prefix>#g' conf.<switchA serial number>
# ipcalc -n <ToRswitchA_SQLreplicationNet_IP>/<SQLreplicationNet_Prefix> | awk -F'=' '{print $2}'
# sed -i 's/{SQL_replication_Subnet}/<output from ipcalc command as SQL_replication_Subnet>/' conf.<switchA serial number>
# sed -i 's/{CNE_Management_VIP}/<ToRswitch_CNEManagementNet_VIP>/g' conf.<switchA serial number>
# sed -i 's/{SQL_replication_VIP}/<ToRswitch_SQLreplicationNet_VIP>/g' conf.<switchA serial number>
# sed -i 's/{OAM_UPLINK_CUSTOMER_ADDRESS}/<ToRswitchA_oam_uplink_customer_IP>/' conf.<switchA serial number>
# sed -i 's/{OAM_UPLINK_SwA_ADDRESS}/<ToRswitchA_oam_uplink_IP>/g' conf.<switchA serial number>
# sed -i 's/{SIGNAL_UPLINK_SwA_ADDRESS}/<ToRswitchA_signaling_uplink_IP>/g' conf.<switchA serial number>
# sed -i 's/{OAM_UPLINK_SwB_ADDRESS}/<ToRswitchB_oam_uplink_IP>/g' conf.<switchA serial number>
# sed -i 's/{SIGNAL_UPLINK_SwB_ADDRESS}/<ToRswitchB_signaling_uplink_IP>/g' conf.<switchA serial number>
# ipcalc -n <ToRswitchA_signaling_uplink_IP>/30 | awk -F'=' '{print $2}'
# sed -i 's/{SIGNAL_UPLINK_SUBNET}/<output from ipcalc command as signal_uplink_subnet>/' conf.<switchA serial number>
# ipcalc -n <ToRswitchA_SQLreplicationNet_IP>/<SQLreplicationNet_Prefix> | awk -F'=' '{print $2}'
# sed -i 's#{MySQL_Replication_SUBNET}#<output from the above ipcalc command>/<SQLreplicationNet_Prefix>#' conf.<switchA serial number>

Note: The version nxos.9.2.3.bin is used by default and is hard-coded in the conf files. If a different version is to be used, run the following command:

# sed -i 's/nxos.9.2.3.bin/<nxos_version>/' conf.<switchA serial number>

Note: In access-list Restrict_Access_ToR, the following line allows one access server to reach the switch management and SQL VLAN addresses while all other access is denied. If it is not needed, delete this line. If more servers need access, add similar lines.

# sed -i 's/{Allow_Access_Server}/<Allow_Access_Server>/' conf.<switchA serial number>

- Copy /var/lib/tftpboot/93180_switchB.cfg to a file called /var/lib/tftpboot/conf.<switchB serial number>.

Modify the switch-specific values in the /var/lib/tftpboot/conf.<switchB serial number> file, including the hostname, username/password, oam_uplink IP address, signaling_uplink IP address, access-list ALLOW_5G_XSI_LIST permit address, and prefix-list ALLOW_5G_XSI. These values are listed in Installation PreFlight Checklist: ToR and Enclosure Switches Variables Table and Installation PreFlight Checklist: Complete OA and Switch IP Table.

# sed -i 's/{switchname}/<switch_name>/' conf.<switchB serial number>
# sed -i 's/{admin_password}/<admin_password>/' conf.<switchB serial number>
# sed -i 's/{user_name}/<user_name>/' conf.<switchB serial number>
# sed -i 's/{user_password}/<user_password>/' conf.<switchB serial number>
# sed -i 's/{ospf_md5_key}/<ospf_md5_key>/' conf.<switchB serial number>
# sed -i 's/{OSPF_AREA_ID}/<ospf_area_id>/' conf.<switchB serial number>
# sed -i 's/{NTPSERVER1}/<NTP_server_1>/' conf.<switchB serial number>
# sed -i 's/{NTPSERVER2}/<NTP_server_2>/' conf.<switchB serial number>
# sed -i 's/{NTPSERVER3}/<NTP_server_3>/' conf.<switchB serial number>
# sed -i 's/{NTPSERVER4}/<NTP_server_4>/' conf.<switchB serial number>
# sed -i 's/{NTPSERVER5}/<NTP_server_5>/' conf.<switchB serial number>

Note: If fewer than five NTP servers are available, delete the extra NTP server lines instead, for example:

# sed -i '/{NTPSERVER5}/d' conf.<switchB serial number>

Note: The following commands use # as the sed delimiter because the values contain the '/' character.

# sed -i 's#{ALLOW_5G_XSI_LIST_WITH_PREFIX_LEN}#<MetalLB_Signal_Subnet_With_Prefix>#g' conf.<switchB serial number>
# sed -i 's#{CNE_Management_SwA_Address}#<ToRswitchA_CNEManagementNet_IP>#g' conf.<switchB serial number>
# sed -i 's#{CNE_Management_SwB_Address}#<ToRswitchB_CNEManagementNet_IP>#g' conf.<switchB serial number>
# sed -i 's#{CNE_Management_Prefix}#<CNEManagementNet_Prefix>#g' conf.<switchB serial number>
# sed -i 's#{SQL_replication_SwA_Address}#<ToRswitchA_SQLreplicationNet_IP>#g' conf.<switchB serial number>
# sed -i 's#{SQL_replication_SwB_Address}#<ToRswitchB_SQLreplicationNet_IP>#g' conf.<switchB serial number>
# sed -i 's#{SQL_replication_Prefix}#<SQLreplicationNet_Prefix>#g' conf.<switchB serial number>
# ipcalc -n <ToRswitchB_SQLreplicationNet_IP>/<SQLreplicationNet_Prefix> | awk -F'=' '{print $2}'
# sed -i 's/{SQL_replication_Subnet}/<output from ipcalc command as SQL_replication_Subnet>/' conf.<switchB serial number>
# sed -i 's/{CNE_Management_VIP}/<ToRswitch_CNEManagementNet_VIP>/' conf.<switchB serial number>
# sed -i 's/{SQL_replication_VIP}/<ToRswitch_SQLreplicationNet_VIP>/' conf.<switchB serial number>
# sed -i 's/{OAM_UPLINK_CUSTOMER_ADDRESS}/<ToRswitchB_oam_uplink_customer_IP>/' conf.<switchB serial number>
# sed -i 's/{OAM_UPLINK_SwA_ADDRESS}/<ToRswitchA_oam_uplink_IP>/g' conf.<switchB serial number>
# sed -i 's/{SIGNAL_UPLINK_SwA_ADDRESS}/<ToRswitchA_signaling_uplink_IP>/g' conf.<switchB serial number>
# sed -i 's/{OAM_UPLINK_SwB_ADDRESS}/<ToRswitchB_oam_uplink_IP>/g' conf.<switchB serial number>
# sed -i 's/{SIGNAL_UPLINK_SwB_ADDRESS}/<ToRswitchB_signaling_uplink_IP>/g' conf.<switchB serial number>
# ipcalc -n <ToRswitchB_signaling_uplink_IP>/30 | awk -F'=' '{print $2}'
# sed -i 's/{SIGNAL_UPLINK_SUBNET}/<output from ipcalc command as signal_uplink_subnet>/' conf.<switchB serial number>

Note: The version nxos.9.2.3.bin is used by default and is hard-coded in the conf files. If a different version is to be used, run the following command:

# sed -i 's/nxos.9.2.3.bin/<nxos_version>/' conf.<switchB serial number>

Note: In access-list Restrict_Access_ToR, the following line allows one access server to reach the switch management and SQL VLAN addresses while all other access is denied. If it is not needed, delete this line. If more servers need access, add similar lines.

# sed -i 's/{Allow_Access_Server}/<Allow_Access_Server>/' conf.<switchB serial number>

- Generate the md5 checksum for each conf file in /var/lib/tftpboot and save it in a new file called conf.<switchA/B serial number>.md5:

$ md5sum conf.<switchA serial number> > conf.<switchA serial number>.md5
$ md5sum conf.<switchB serial number> > conf.<switchB serial number>.md5

- Verify that the /var/lib/tftpboot directory has the correct files and that the file permissions are set as follows:

Note:

The ToR switches constantly attempt to find and run the poap_nexus_script.py script, which uses tftp to load and install the configuration files.

# ls -l /var/lib/tftpboot/
total 1305096
-rw-r--r--. 1 root root 7161 Mar 25 15:31 conf.<switchA serial number>
-rw-r--r--. 1 root root 51 Mar 25 15:31 conf.<switchA serial number>.md5
-rw-r--r--. 1 root root 7161 Mar 25 15:31 conf.<switchB serial number>
-rw-r--r--. 1 root root 51 Mar 25 15:31 conf.<switchB serial number>.md5
-rwxr-xr-x. 1 root root 75856 Mar 25 15:32 poap_nexus_script.py

- Enable tftp-server again and verify the tftp-server status.

Note:

The tftp-server service stays active for only 15 minutes after it is enabled, so you must enable it again before configuring the switches.

# systemctl enable --now tftp-server

Verify that tftp-server is active and enabled:

# systemctl status tftp-server
# ps -elf | grep tftp

- Disable firewalld and verify the firewalld service status:

systemctl stop firewalld
systemctl disable firewalld
systemctl status firewalld

After you complete the above steps, the ToR switches automatically attempt to boot from the tftpboot files.
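The switches pull whatever is in /var/lib/tftpboot. A quick local check of the prepared files can catch a missed sed substitution or a stale .md5 file, for example one generated before a later edit. The following is a minimal sketch under stated assumptions. check_poap_conf_files is a hypothetical helper, not part of CNE. It assumes that template placeholders always look like {NAME} (letters, digits, and underscores in curly braces, as in the sed commands above) and that no legitimate configuration line contains such a token. It also assumes that each .md5 file was created with md5sum from inside the same directory, as in the md5 step above.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check (not part of CNE) for the POAP files in a tftp directory.
# For each conf.<serial> file it:
#   1. lists any {PLACEHOLDER} tokens left unreplaced by the sed commands, and
#   2. verifies the checksum stored in the matching conf.<serial>.md5 file.
# Returns 1 if any file fails a check, 0 otherwise.
check_poap_conf_files() {
  local dir=${1:-/var/lib/tftpboot}
  local rc=0 conf name leftovers
  for conf in "$dir"/conf.*; do
    # Skip the .md5 files themselves (and the literal pattern if nothing matched).
    [[ -f $conf && $conf != *.md5 ]] || continue
    name=$(basename "$conf")
    # Template placeholders look like {switchname} or {NTPSERVER1}.
    leftovers=$(grep -oE '\{[A-Za-z0-9_]+\}' "$conf" | sort -u | tr '\n' ' ')
    if [[ -n $leftovers ]]; then
      echo "$name: unreplaced placeholders: $leftovers"
      rc=1
    fi
    # md5sum wrote "<hash>  conf.<serial>", so verify from inside the directory.
    if [[ ! -f $conf.md5 ]]; then
      echo "$name: missing $name.md5"
      rc=1
    elif ! (cd "$dir" && md5sum --quiet -c "$name.md5"); then
      rc=1
    fi
  done
  return "$rc"
}

# Example usage on the bootstrap host:
#   check_poap_conf_files /var/lib/tftpboot && echo "POAP conf files look consistent"
```

A non-zero return status means at least one file needs attention. If you correct a conf file, regenerate its .md5 file, because the switches keep retrying POAP against the current directory contents.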

- Unmount the Utility USB and remove it as follows:

umount /media/usb

Verification

- After the ToR switches are configured, ping the switches from the bootstrap server. The mgmt0 interfaces of the switches are configured with the IP addresses in the conf files.

Note:

Wait for the device to respond.

# ping 192.168.2.1
PING 192.168.2.1 (192.168.2.1) 56(84) bytes of data.
64 bytes from 192.168.2.1: icmp_seq=1 ttl=255 time=0.419 ms
64 bytes from 192.168.2.1: icmp_seq=2 ttl=255 time=0.496 ms
64 bytes from 192.168.2.1: icmp_seq=3 ttl=255 time=0.573 ms
64 bytes from 192.168.2.1: icmp_seq=4 ttl=255 time=0.535 ms
^C
--- 192.168.2.1 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 3000ms
rtt min/avg/max/mdev = 0.419/0.505/0.573/0.063 ms
# ping 192.168.2.2
PING 192.168.2.2 (192.168.2.2) 56(84) bytes of data.
64 bytes from 192.168.2.2: icmp_seq=1 ttl=255 time=0.572 ms
64 bytes from 192.168.2.2: icmp_seq=2 ttl=255 time=0.582 ms
64 bytes from 192.168.2.2: icmp_seq=3 ttl=255 time=0.466 ms
64 bytes from 192.168.2.2: icmp_seq=4 ttl=255 time=0.554 ms
^C
--- 192.168.2.2 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 3001ms
rtt min/avg/max/mdev = 0.466/0.543/0.582/0.051 ms

- Attempt to ssh to the switches with the username and password provided in the conf files.

# ssh plat@192.168.2.1
The authenticity of host '192.168.2.1 (192.168.2.1)' can't be established.
RSA key fingerprint is SHA256:jEPSMHRNg9vejiLcEvw5qprjgt+4ua9jucUBhktH520.
RSA key fingerprint is MD5:02:66:3a:c6:81:65:20:2c:6e:cb:08:35:06:c6:72:ac.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added '192.168.2.1' (RSA) to the list of known hosts.
User Access Verification
Password:
Cisco Nexus Operating System (NX-OS) Software
TAC support: http://www.cisco.com/tac
Copyright (C) 2002-2019, Cisco and/or its affiliates.
All rights reserved.
The copyrights to certain works contained in this software are owned by other third parties and used and distributed under their own licenses, such as open source.
This software is provided "as is," and unless otherwise stated, there is no warranty, express or implied, including but not limited to warranties of merchantability and fitness for a particular purpose.
Certain components of this software are licensed under the GNU General Public License (GPL) version 2.0 or GNU General Public License (GPL) version 3.0 or the GNU Lesser General Public License (LGPL) Version 2.1 or Lesser General Public License (LGPL) Version 2.0.
A copy of each such license is available at http://www.opensource.org/licenses/gpl-2.0.php and http://opensource.org/licenses/gpl-3.0.html and http://www.opensource.org/licenses/lgpl-2.1.php and http://www.gnu.org/licenses/old-licenses/library.txt.
#

- Verify that the running-config contains all expected configurations in the conf file using the show running-config command as follows:

# show running-config

!Command: show running-config
!Running configuration last done at: Mon Apr 8 17:39:38 2019
!Time: Mon Apr 8 18:30:17 2019

version 9.2(3) Bios:version 07.64
hostname 12006-93108A
vdc 12006-93108A id 1
  limit-resource vlan minimum 16 maximum 4094
  limit-resource vrf minimum 2 maximum 4096
  limit-resource port-channel minimum 0 maximum 511
  limit-resource u4route-mem minimum 248 maximum 248
  limit-resource u6route-mem minimum 96 maximum 96
  limit-resource m4route-mem minimum 58 maximum 58
  limit-resource m6route-mem minimum 8 maximum 8

feature scp-server
feature sftp-server
cfs eth distribute
feature ospf
feature bgp
feature interface-vlan
feature lacp
feature vpc
feature bfd
feature vrrpv3
....
....

- If any of the above features are missing, verify the license on the switches and check that at least the NXOS_ADVANTAGE license level is in use. If the license is not installed or is too low a level, contact the vendor for the correct license key file. Then run write erase and reload to reset the switches to the factory default. The switches then go through POAP configuration again.

# show license

Example output:

# show license
MDS20190215085542979.lic:
SERVER this_host ANY
VENDOR cisco
INCREMENT NXOS_ADVANTAGE_XF cisco 1.0 permanent uncounted \
  VENDOR_STRING=<LIC_SOURCE>MDS_SWIFT</LIC_SOURCE><SKU>NXOS-AD-XF</SKU> \
  HOSTID=VDH=FDO22412J2F \
  NOTICE="<LicFileID>20190215085542979</LicFileID><LicLineID>1</LicLineID> \
  <PAK></PAK>" SIGN=8CC8807E6918

# show license usage

Example output:

# show license usage
Feature Ins Lic Status Expiry Date Comments Count
--------------------------------------------------------------------------------
...
NXOS_ADVANTAGE_M4 No - Unused -
NXOS_ADVANTAGE_XF Yes - In use never -
NXOS_ESSENTIALS_GF No - Unused -
...
#

- For a Netra X8-2 server, reconnect the cables on the mgmt ports to the Ethernet ports for RMS1, delete mgmtBridge, and configure bond0 and the management VLAN interface:

nmcli con delete mgmtBridge
nmcli con add type bond con-name bond0 ifname bond0 mode 802.3ad
nmcli con add type bond-slave con-name bond0-slave-1 ifname eno2np0 master bond0
nmcli con add type bond-slave con-name bond0-slave-2 ifname eno3np1 master bond0

The following commands configure the VLAN and IP address for this bootstrap server. <mgmt_vlan_id> is the same as in hosts.ini, and <bootstrap bond0 address> is the same as the ansible_host IP for this bootstrap server:

nmcli con mod bond0 ipv4.method manual ipv4.addresses <bootstrap bond0 address>
nmcli con add con-name bond0.<mgmt_vlan_id> type vlan id <mgmt_vlan_id> dev bond0
nmcli con mod bond0.<mgmt_vlan_id> ipv4.method manual ipv4.addresses <CNE_Management_IP_Address_With_Prefix> ipv4.gateway <ToRswitch_CNEManagementNet_VIP>
nmcli con up bond0.<mgmt_vlan_id>

For example:

nmcli con mod bond0 ipv4.method manual ipv4.addresses 172.16.3.4/24
nmcli con add con-name bond0.4 type vlan id 4 dev bond0
nmcli con mod bond0.4 ipv4.method manual ipv4.addresses <CNE_Management_IP_Address_With_Prefix> ipv4.gateway <ToRswitch_CNEManagementNet_VIP>
nmcli con up bond0.4

- Verify that RMS1 can ping the CNE_Management VIP.

# ping <ToRswitch_CNEManagementNet_VIP>
PING <ToRswitch_CNEManagementNet_VIP> (<ToRswitch_CNEManagementNet_VIP>) 56(84) bytes of data.
64 bytes from <ToRswitch_CNEManagementNet_VIP>: icmp_seq=2 ttl=255 time=1.15 ms
64 bytes from <ToRswitch_CNEManagementNet_VIP>: icmp_seq=3 ttl=255 time=1.11 ms
64 bytes from <ToRswitch_CNEManagementNet_VIP>: icmp_seq=4 ttl=255 time=1.23 ms
^C
--- <ToRswitch_CNEManagementNet_VIP> ping statistics ---
4 packets transmitted, 3 received, 25% packet loss, time 3019ms
rtt min/avg/max/mdev = 1.115/1.168/1.237/0.051 ms

- Connect or enable the customer uplink.

- Verify that RMS1 can be accessed from a laptop. Use an application such as PuTTY to ssh to RMS1.

$ ssh root@<CNE_Management_IP_Address>
Using username "root".
root@<CNE_Management_IP_Address>'s password:<root password>
Last login: Mon May 6 10:02:01 2019 from 10.75.9.171
[root@RMS1 ~]#
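The ping checks in this verification are read by eye. If you want to script them, the following minimal sketch extracts the packet-loss percentage from the iputils ping summary line and flags targets above a chosen threshold. packet_loss_percent and check_reachable are hypothetical helpers, not part of CNE. The 192.168.2.1 and 192.168.2.2 addresses in the usage comment are the switch mgmt0 addresses from the example above. The threshold of 25 is an assumption, chosen so that one lost packet out of four (as in the VIP example) still passes.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of CNE): print the packet-loss percentage found
# in iputils ping output on stdin, e.g.
#   "4 packets transmitted, 3 received, 25% packet loss, time 3019ms" -> 25
# Prints nothing if no summary line is present.
packet_loss_percent() {
  sed -nE 's/.* ([0-9]+(\.[0-9]+)?)% packet loss.*/\1/p'
}

# Hypothetical wrapper: ping each target 4 times (2 s reply timeout) and report
# targets that are unreachable or lose more than max_loss percent of packets.
check_reachable() {
  local max_loss=$1; shift
  local target loss rc=0
  for target in "$@"; do
    loss=$(ping -c 4 -W 2 "$target" 2>/dev/null | packet_loss_percent)
    loss=${loss%%.*}   # drop any fractional part, e.g. 33.3333 -> 33
    if [[ -z $loss || $loss -gt $max_loss ]]; then
      echo "$target: unreachable or ${loss:-100}% packet loss"
      rc=1
    fi
  done
  return "$rc"
}

# Example usage on the bootstrap host:
#   check_reachable 25 192.168.2.1 192.168.2.2 <ToRswitch_CNEManagementNet_VIP>
```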

SNMP Trap Configuration

- SNMPv2c Configuration.

If SNMPv2c configuration is needed, ssh to both switches and run the following commands:

The values <SNMP_Trap_Receiver_Address> and <SNMP_Community_String> are from the Installation PreFlight Checklist.

[root@RMS1 ~]# ssh <user_name>@<ToRswitchA_CNEManagementNet_IP>
# configure terminal
(config)# snmp-server host <SNMP_Trap_Receiver_Address> traps version 2c <SNMP_Community_String>
(config)# snmp-server host <SNMP_Trap_Receiver_Address> use-vrf default
(config)# snmp-server host <SNMP_Trap_Receiver_Address> source-interface Ethernet1/51
(config)# snmp-server enable traps
(config)# snmp-server community <SNMP_Community_String> group network-admin

- To restrict direct access to the ToR switches, create an IP access list and apply it on the uplink interfaces. Use the following commands on the ToR switches:

[root@RMS1 ~]# ssh <user_name>@<ToRswitchA_CNEManagementNet_IP>
# configure terminal
(config)# ip access-list Restrict_Access_ToR
  permit ip {Allow_Access_Server}/32 any
  permit ip {NTPSERVER1}/32 {OAM_UPLINK_SwA_ADDRESS}/32
  permit ip {NTPSERVER2}/32 {OAM_UPLINK_SwA_ADDRESS}/32
  permit ip {NTPSERVER3}/32 {OAM_UPLINK_SwA_ADDRESS}/32
  permit ip {NTPSERVER4}/32 {OAM_UPLINK_SwA_ADDRESS}/32
  permit ip {NTPSERVER5}/32 {OAM_UPLINK_SwA_ADDRESS}/32
  deny ip any {CNE_Management_VIP}/32
  deny ip any {CNE_Management_SwA_Address}/32
  deny ip any {CNE_Management_SwB_Address}/32
  deny ip any {SQL_replication_VIP}/32
  deny ip any {SQL_replication_SwA_Address}/32
  deny ip any {SQL_replication_SwB_Address}/32
  deny ip any {OAM_UPLINK_SwA_ADDRESS}/32
  deny ip any {OAM_UPLINK_SwB_ADDRESS}/32
  deny ip any {SIGNAL_UPLINK_SwA_ADDRESS}/32
  deny ip any {SIGNAL_UPLINK_SwB_ADDRESS}/32
  permit ip any any
interface Ethernet1/51
  ip access-group Restrict_Access_ToR in
interface Ethernet1/52
  ip access-group Restrict_Access_ToR in

- Configure NAT for traffic that egresses the cluster (including snmptrap traffic to the SNMP trap receiver) and for traffic that goes to the signal server:

[root@RMS1 ~]# ssh <user_name>@<ToRswitchA_CNEManagementNet_IP>
# configure terminal
(config)# feature nat
ip access-list host-snmptrap
  10 permit udp 172.16.3.0/24 <snmp trap receiver>/32 eq snmptrap log
ip access-list host-sigserver
  10 permit ip 172.16.3.0/24 <signal server>/32
ip nat pool sig-pool 10.75.207.211 10.75.207.222 prefix-length 27
ip nat inside source list host-sigserver pool sig-pool overload add-route
ip nat inside source list host-snmptrap interface Ethernet1/51 overload
interface Vlan3
  ip nat inside
interface Ethernet1/51
  ip nat outside
interface Ethernet1/52
  ip nat outside

Run the same commands on ToR switchB.
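The sed steps for the conf files derive subnet values such as SQL_replication_Subnet and SIGNAL_UPLINK_SUBNET with ipcalc -n. The access lists above match the whole 172.16.3.0/24 subnet, which is the network address of the example bond0 address 172.16.3.4/24. The network address is the bitwise AND of the IPv4 address and its netmask. To cross-check such values independently of ipcalc, you can use the following minimal pure-Bash sketch. network_address and network_cidr are hypothetical helpers, not part of CNE, and they perform no validation beyond requiring a /PREFIX.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of CNE): compute the IPv4 network address
# of an address given in CIDR form, e.g. 172.16.3.4/24 -> 172.16.3.0.

network_address() {
  local cidr=$1
  if [[ $cidr != */* ]]; then
    echo "usage: network_address A.B.C.D/PREFIX" >&2
    return 1
  fi
  local ip=${cidr%/*} prefix=${cidr#*/}
  local a b c d
  IFS=. read -r a b c d <<< "$ip"
  # Pack the four octets into one 32-bit integer (10# avoids octal parsing of "08").
  local addr=$(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
  # Netmask with PREFIX leading one bits; Bash arithmetic is 64-bit, so keep 32 bits.
  local mask=$(( (0xFFFFFFFF << (32 - 10#$prefix)) & 0xFFFFFFFF ))
  local net=$(( addr & mask ))
  printf '%d.%d.%d.%d\n' $(( (net >> 24) & 255 )) $(( (net >> 16) & 255 )) \
    $(( (net >> 8) & 255 )) $(( net & 255 ))
}

# Same result with the prefix appended, e.g. 172.16.3.4/24 -> 172.16.3.0/24.
network_cidr() {
  local net
  net=$(network_address "$1") || return 1
  printf '%s/%s\n' "$net" "${1#*/}"
}
```

For example, network_address 10.75.207.211/27 and network_address 10.75.207.222/27 both print 10.75.207.192. This confirms that the sig-pool range above lies within a single /27.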

2.2.3.4 Configuring Addresses for RMS iLOs

Introduction

Note:

Skip this procedure if the iLO network is controlled by the lab network or by a customer network beyond the ToR switches. In that case, the iLO network can be accessed from the bastion host management interface. Perform this procedure only if the iLO network is local on the ToR switches and iLO addresses are not configured on the servers.

Prerequisites

Ensure that the procedure Configure Top of Rack 93180YC-EX Switches has been completed.

Limitations

All steps must be run from the SSH session of the Bootstrap server.

References

Procedure

Following is the procedure to configure addresses for RMS iLOs:

- Set up the interface on the bootstrap server and find the iLO DHCP address:

- Set up the VLAN interface to access the iLO subnet. The ilo_vlan_id and ilo_subnet_cidr values are the same as in hosts.ini:

nmcli con add con-name bond0.<ilo_vlan_id> type vlan id <ilo_vlan_id> dev bond0
nmcli con mod bond0.<ilo_vlan_id> ipv4.method manual ipv4.addresses <unique ip in ilo subnet>/<ilo_subnet_cidr>
nmcli con up bond0.<ilo_vlan_id>

Example:

nmcli con add con-name bond0.2 type vlan id 2 dev bond0
nmcli con mod bond0.2 ipv4.method manual ipv4.addresses 192.168.20.11/24
nmcli con up bond0.2

- Subnet and conf file address:

The /etc/dhcp/dhcpd.conf file is already configured as per the OCCNE Configure Top of Rack 93180YC-EX Switches procedure, and DHCP is started and enabled on the bootstrap server. The second subnet, 192.168.20.0, is used to assign addresses to the OA and RMS iLOs.

- Display the DHCPD leases file at /var/lib/dhcpd/dhcpd.leases. The file lists the DHCP addresses for all RMS iLOs:

# cat /var/lib/dhcpd/dhcpd.leases
# The format of this file is documented in the dhcpd.leases(5) manual page.
# This lease file was written by isc-dhcp-4.2.5
...
lease 192.168.20.106 {
  starts 5 2019/03/29 18:10:04;
  ends 5 2019/03/29 21:10:04;
  cltt 5 2019/03/29 18:10:04;
  binding state active;
  next binding state free;
  rewind binding state free;
  hardware ethernet b8:83:03:47:5f:14;
  uid "\000\270\203\003G_\024\000\000\000";
  client-hostname "ILO2M2909004B";
}
lease 192.168.20.104 {
  starts 5 2019/03/29 18:10:35;
  ends 5 2019/03/29 21:10:35;
  cltt 5 2019/03/29 18:10:35;
  binding state active;
  next binding state free;
  rewind binding state free;
  hardware ethernet b8:83:03:47:64:9c;
  uid "\000\270\203\003Gd\234\000\000\000";
  client-hostname "ILO2M2909004F";
}
lease 192.168.20.105 {
  starts 5 2019/03/29 18:10:40;
  ends 5 2019/03/29 21:10:40;
  cltt 5 2019/03/29 18:10:40;
  binding state active;
  next binding state free;
  rewind binding state free;
  hardware ethernet b8:83:03:47:5e:54;
  uid "\000\270\203\003G^T\000\000\000";
  client-hostname "ILO2M29090048";
}

- Access the RMS iLO at its DHCP address using the default Administrator password.

From the dhcpd.leases file above, find the IP address for the iLO name. The default username is Administrator, and the password is printed on the label that pulls out from the front of the server.

Note:

The DNS Name is on the pull-out label. Use it to match the physical machine with the iLO IP address. The same default DNS Name is displayed when you log in to the iLO command line interface, as shown in the following example:

# ssh Administrator@192.168.20.104
Administrator@192.168.20.104's password:
User:Administrator logged-in to ILO2M2909004F.labs.nc.tekelec.com(192.168.20.104 / FE80::BA83:3FF:FE47:649C)
iLO Standard 1.37 at Oct 25 2018
Server Name:
Server Power: On

- Create a new RMS iLO user with a customized username and password:

</>hpiLO-> create /map1/accounts1 username=root password=TklcRoot group=admin,config,oemHP_rc,oemHP_power,oemHP_vm
status=0
status_tag=COMMAND COMPLETED
Tue Apr 2 20:08:30 2019
User added successfully.

- Disable DHCP before setting up the static IP address. The static IP setup fails while DHCP is still enabled.

</>hpiLO-> set /map1/dhcpendpt1 EnabledState=NO
status=0
status_tag=COMMAND COMPLETED
Tue Apr 2 20:04:53 2019
Network settings change applied.
Settings change applied, iLO 5 will now be reset.
Logged Out: It may take several minutes before you can log back in.
CLI session stopped
packet_write_wait: Connection to 192.168.20.104 port 22: Broken pipe

- Set up the RMS iLO static IP address.

After the previous step, log back in at the same address (which is now a static IP address) using the new username and password. If required, change the IP address as shown in the following example:

# ssh <new username>@192.168.20.104
<new username>@192.168.20.104's password: <new password>
User: logged-in to ILO2M2909004F.labs.nc.tekelec.com(192.168.20.104 / FE80::BA83:3FF:FE47:649C)
iLO Standard 1.37 at Oct 25 2018
Server Name:
Server Power: On

</>hpiLO-> set /map1/enetport1/lanendpt1/ipendpt1 IPv4Address=192.168.20.122 SubnetMask=255.255.255.0
status=0
status_tag=COMMAND COMPLETED
Tue Apr 2 20:22:23 2019
Network settings change applied.
Settings change applied, iLO 5 will now be reset.
Logged Out: It may take several minutes before you can log back in.
CLI session stopped
packet_write_wait: Connection to 192.168.20.104 port 22: Broken pipe

- Set up the RMS iLO default gateway:

# ssh <new username>@192.168.20.122
<new username>@192.168.20.122's password: <new password>
User: logged-in to ILO2M2909004F.labs.nc.tekelec.com(192.168.20.104 / FE80::BA83:3FF:FE47:649C)
iLO Standard 1.37 at Oct 25 2018
Server Name:
Server Power: On

</>hpiLO-> set /map1/gateway1 AccessInfo=192.168.20.1
status=0
status_tag=COMMAND COMPLETED
Fri Oct 8 16:10:27 2021
Network settings change applied.
Settings change applied, iLO will now be reset.
Logged Out: It may take several minutes before you can log back in.
CLI session stopped
Received disconnect from 192.168.20.122 port 22:11: Client Disconnect
Disconnected from 192.168.20.122 port 22

- Access the RMS iLO at its DHCP address using the default root password.

From the dhcpd.leases file above, find the IP address for the iLO name. The default username is root and the password is changeme. At the same time, note the DNS Name on the pull-out label.

Note:

The DNS Name is on the pull-out label. Use it to match the physical machine with the iLO IP address. The same default DNS Name is displayed when you log in to the iLO command line interface, as shown in the following example:

Using username "root".
Using keyboard-interactive authentication.
Password:
Oracle(R) Integrated Lights Out Manager
Version 5.0.1.28 r140682
Copyright (c) 2021, Oracle and/or its affiliates. All rights reserved.
Warning: password is set to factory default.
Warning: HTTPS certificate is set to factory default.
Hostname: ORACLESP-2117XLB00V

- The Netra server has a default root user. To change the default password, run the following set command:

-> set /SP/users/root password
Enter new password: ********
Enter new password again: ********

- Create a new RMS iLO user with a customized username and password:

-> create /SP/users/<username>
Creating user...
Enter new password: ****
create: Non compliant password. Password length must be between 8 and 16 characters.
Enter new password: ********
Enter new password again: ********
Created /SP/users/<username>

- Set up the RMS iLO static IP address.

After the previous step, log in at the same address (which is now a static IP address) using the new username and password. To use a different address, change the IP address as follows:

- Check the current state before the change:

# show /SP/network

- Run the set command to configure the network:

# set /SP/network state=enabled|disabled ipdiscovery=static|dhcp ipaddress=value ipgateway=value ipnetmask=value

Example command:

# set /SP/network state=enabled ipdiscovery=static ipaddress=172.16.9.13 ipgateway=172.16.9.1 ipnetmask=255.255.255.0


- Commit the changes to apply the updates:

# set /SP/network commitpending=true
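Before you commit, it helps to confirm that the static address, gateway, and netmask are consistent. A gateway outside the configured subnet cannot be reached directly from the ILOM.

The following Python sketch uses only the standard `ipaddress` module to perform that check, and then builds a command in the same format as the example command above. It makes these assumptions:

- The helper name `build_sp_network_command` is hypothetical.
- It only validates values and prints a command string. It does not connect to the ILOM.
- Only IPv4 addresses are handled.

```python
import ipaddress


def build_sp_network_command(address: str, gateway: str, netmask: str) -> str:
    """Return a `set /SP/network` command string for a static ILOM address.

    Hypothetical helper: it only validates and formats values; it does not
    connect to the ILOM. IPv4 only. Raises ValueError on inconsistent input.
    """
    # IPv4Interface accepts a dotted-quad netmask, e.g. "172.16.9.13/255.255.255.0".
    iface = ipaddress.IPv4Interface(f"{address}/{netmask}")
    network = iface.network
    gw = ipaddress.IPv4Address(gateway)

    # The network and broadcast addresses cannot be assigned to a host.
    if iface.ip in (network.network_address, network.broadcast_address):
        raise ValueError(f"{iface.ip} is not a usable host address in {network}")
    # The gateway must be directly reachable on the same subnet.
    if gw not in network:
        raise ValueError(f"gateway {gw} is outside {network}")
    if gw == iface.ip:
        raise ValueError("the ILOM address and the gateway must differ")

    return (
        "set /SP/network state=enabled ipdiscovery=static "
        f"ipaddress={iface.ip} ipgateway={gw} ipnetmask={network.netmask}"
    )


if __name__ == "__main__":
    # Values from the example command in this procedure.
    print(build_sp_network_command("172.16.9.13", "172.16.9.1", "255.255.255.0"))
```

With the example values, the sketch prints the same command as the example command in the procedure. Commit it with `set /SP/network commitpending=true`, as described in the last step.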

2.2.3.5 Generating SSH Key on Oracle Servers with Oracle ILOM

This section describes how to generate a new SSH key on Oracle X8-2 and Oracle X9-2 servers using the Oracle Integrated Lights Out Manager (ILOM) web interface. The new SSH key is created at the service level, has a length of 3072 bits, and is managed automatically by the firmware.

- Oracle ILOM: Version 5.1.3.20, revision 153596

- BIOS (Basic Input/Output System): Version 51.11.02.00

The Oracle X9-2 server is compatible with firmware 5.1. For more details, contact My Oracle Support.

Before creating a new SSH key, ensure that you have the necessary access permissions to log in to the Oracle ILOM web interface.

- Open a web browser and access the Oracle ILOM user interface by entering the corresponding IP address in the address bar.

- Enter your login credentials for Oracle ILOM.

- Perform the following steps to generate the SSH key:

- Navigate to the SSH or security configuration section at the following path: ILOM Administration → Management Access → SSH Server

- Click Generate Key to generate a new SSH key.

The system generates a new SSH key of 3072 bits length.

- Run the following command on the CLI to validate the generated key:

-> show -d properties /SP/services/ssh/keys/rsa

Sample output:

/SP/services/ssh/keys/rsa
    Properties:
        fingerprint = 53:66:65:85:45:ba:4e:63:2d:aa:ab:8b:ef:fa:95:ac:9e:17:8e:92
        fingerprint_algorithm = SHA1
        length = 3072

Note:

- The length of the SSH key is managed by the firmware and set to 3072 bits. There are no options to configure it to 1024 or 2048 bits.

- Ensure that the client configuration is compatible with 3072-bit SSH keys.

- After changing SSH keys or the user configuration in the ILOM web interface, log out of Oracle ILOM and then log back in. This applies the changes without restarting the entire ILOM.

- [Optional]: You can also restart ILOM from the ILOM command line interface by running the following command. This command applies any configuration changes that you have made and restarts ILOM:

-> reset /SP
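Instead of reading the validation output by eye, you can parse its `name = value` lines. The following Python sketch checks that the reported RSA key length is 3072 bits. It makes these assumptions:

- The output of `show -d properties /SP/services/ssh/keys/rsa` has been copied from the SSH session into a string.
- Each property appears on its own `name = value` line, as in the sample output above.
- The indentation in `SAMPLE_OUTPUT` is reconstructed, because the parser ignores surrounding whitespace.
- The function names are hypothetical and not part of Oracle ILOM.

```python
from typing import Dict

# Sample output copied from the validation step of this procedure.
SAMPLE_OUTPUT = """\
/SP/services/ssh/keys/rsa
    Properties:
        fingerprint = 53:66:65:85:45:ba:4e:63:2d:aa:ab:8b:ef:fa:95:ac:9e:17:8e:92
        fingerprint_algorithm = SHA1
        length = 3072
"""


def parse_ilom_properties(output: str) -> Dict[str, str]:
    """Collect 'name = value' pairs from `show -d properties` output."""
    props: Dict[str, str] = {}
    for line in output.splitlines():
        # Split on the first "=" only; the fingerprint contains colons, not "=".
        name, sep, value = line.partition("=")
        if sep:  # skips the target path and the "Properties:" header
            props[name.strip()] = value.strip()
    return props


def rsa_key_is_expected_length(output: str, expected_bits: int = 3072) -> bool:
    """Return True if the reported RSA key length equals expected_bits.

    A missing 'length' property is treated as a failed check.
    """
    props = parse_ilom_properties(output)
    return int(props.get("length", "0")) == expected_bits


if __name__ == "__main__":
    props = parse_ilom_properties(SAMPLE_OUTPUT)
    print(props["fingerprint_algorithm"], props["length"])
    print(rsa_key_is_expected_length(SAMPLE_OUTPUT))
```

Because the key length is fixed by the firmware, a result other than 3072 most likely means the output came from a different target or was copied incompletely.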

Use the following properties and commands in the Oracle ILOM CLI to configure and manage SSH settings on the X9-2 server. For any updates or changes to these commands, refer to the Oracle ILOM 5.1 documentation for the X9-2 server (https://docs.oracle.com/en/servers/management/ilom/5.1/admin-guide/modifying-default-management-access-configuration-properties.html#GUID-073D4AA6-E5CC-45B5-9CF4-28D60B56B548).

- CLI path: /SP/services/ssh

- Web path: ILOM Administration > Management Access > SSH Server > SSH Server Settings

- User Role: admin (a). Required for all property modifications.

Table 2-3 SSH Configuration Properties

| Property | Description |
|---|---|
| State | Determines whether the SSH server is enabled or disabled. When enabled, the SSH server uses the server-side keys to allow remote clients to connect securely to the Oracle ILOM SP using a command-line interface. Disabling or restarting the SSH server automatically terminates all SP CLI sessions connected over SSH. Default value: Enabled. Note: In the web interface, changes to the SSH Server State take effect in Oracle ILOM only after you click Save. Restarting the SSH server is not required for this property. |
| Restart Button | Restarts the SSH server, terminating all connected SP CLI sessions and activating the newly generated server-side keys. Default value: NA |
| Generate RSA Key Button | Generates a new RSA SSH key, creating a new key pair for SSH authentication. Default value: NA |

Note:

- Keep Oracle ILOM firmware up to date. Regularly check the Firmware Downloads and Release History for Oracle Systems page for new features, improvements, and security enhancements.

- Verify that the clients connecting to the Oracle X8-2 server support 3072-bit SSH keys.

- For detailed information about SSH key generation and management in your specific environment, refer to the official Oracle ILOM documentation.

2.2.4 Installing Bastion Host

This section describes how to use the Installer Bootstrap Host to provision RMS2 with an operating system and configure it to fulfill the role of Kubernetes master. After the Bastion Host is created, it is used to complete the installation of CNE.

Provision Second Database Host (RMS2) from Installer Bootstrap Host (RMS1)

Table 2-4 Terminology used in Procedure

| Name | Description |
|---|---|
| bastion_full_name | The full name of the Bastion Host as defined in the hosts.ini file. Example: bastion-2.rainbow.lab.us.oracle.com |
| bastion_kvm_host_full_name | The full name of the KVM server (usually RMS2/db-2) that hosts the Bastion Host VM. Example: db-2.rainbow.lab.us.oracle.com |
| bastion_kvm_host_ip_address | The IPv4 ansible_host IP address of the server (usually RMS2/db-2) that hosts the Bastion Host VM. Example: 172.16.3.5 |
| bastion_short_name | The name of the Bastion Host, derived from bastion_full_name up to the first ".". Example: bastion-2 |
| bastion_external_ip_address | The external address of the Bastion Host. Example: 10.75.148.5 for bastion-2 |
| bastion_ip_address | The internal IPv4 "ansible_host" address of the Bastion Host as defined in the hosts.ini file. Example: 172.16.3.100 for bastion-2 |