3 Benchmarking Policy Call Models

This section describes the different Policy call models and the performance test scenarios that were run using these call models.

3.1 PCRF Call Model 1

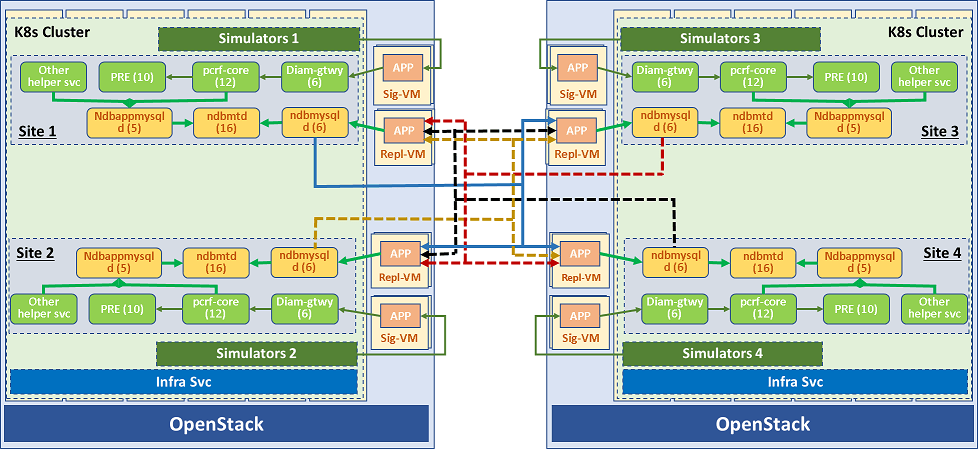

The following diagram describes the architecture for a multisite PCRF deployment.

Figure 3-1 PCRF 4 Site GR Deployment Architecture

To test this PCRF call model, the Policy application is deployed in converged mode on a four-site georedundant setup. The cnDBTier database and the PCRF application are replicated on all four sites. Database replication synchronizes data between the sites' databases over the replication channels.

3.1.1 Test Scenario 1: PCRF Data Call Model on Four-Site GeoRedundant setup, with 7.5K Transactions Per Second (TPS) on each site and ASM disabled

This test run benchmarks the performance and capacity of the PCRF data call model deployed in converged mode on a four-site georedundant setup. Each site in the setup handles incoming traffic of 7.5K TPS. Aspen Service Mesh (ASM) is disabled.

3.1.1.1 Test Case and Setup Details

Table 3-1 Test Case Parameters

| Parameters | Values |

|---|---|

| Call Rate | 30K TPS (7.5K TPS on each site) |

| Execution Time | 12 Hours |

| ASM | Disable |

Table 3-2 Call Model Data

| Messages | Total CPS Instance-1 | Sy Traffic | LDAP Traffic | Total TPS |

|---|---|---|---|---|

| CCR-I | 320 | 320 | 320 | 960 |

| CCR-U | 320 | 0 | 0 | 320 |

| CCR-T | 320 | 320 | 0 | 640 |

| Total Messages | 960 | 640 | 320 | 1920 |
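The totals in Table 3-2 can be checked mechanically. Each row's Total TPS is the sum of its three traffic columns, and the Total Messages row is the column-wise sum. The following Python sketch hard-codes the table values. The names `CALL_MODEL`, `row_totals`, and `column_totals` are illustrative only.

```python
# Message counts from Table 3-2: (Total CPS Instance-1, Sy traffic, LDAP traffic)
CALL_MODEL = {
    "CCR-I": (320, 320, 320),
    "CCR-U": (320, 0, 0),
    "CCR-T": (320, 320, 0),
}

def row_totals(model):
    """Total TPS per message type: sum across the three traffic columns."""
    return {msg: sum(counts) for msg, counts in model.items()}

def column_totals(model):
    """Per-column totals: the 'Total Messages' row of the table."""
    return tuple(sum(col) for col in zip(*model.values()))

rows = row_totals(CALL_MODEL)
cols = column_totals(CALL_MODEL)
print(rows)             # {'CCR-I': 960, 'CCR-U': 320, 'CCR-T': 640}
print(cols, sum(cols))  # (960, 640, 320) 1920
```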

Table 3-3 PCRF Configurations

| Service Name | Status |

|---|---|

| Binding Service | Disable |

| Policy Event Record (PER) | Disable |

| Subscriber Activity Log (SAL) | Enable |

| LDAP | Enable |

| Online Charging System (OCS) | Enable |

Table 3-4 PCF Interfaces

| Feature Name | Status |

|---|---|

| N36 UDR query (N7/N15-Nudr) | Disable |

| N36 UDR subscription (N7/N15-Nudr) | Disable |

| UDR on-demand nrf discovery | Disable |

| CHF (SM-Nchf) | Disable |

| BSF (N7-Nbsf) | Disable |

| AMF on demand nrf discovery | Disable |

| LDAP (Gx-LDAP) | Enable |

| Sy (PCF N7-Sy) | Enable |

Table 3-5 PCRF Interfaces

| Feature Name | Status |

|---|---|

| Sy (PCRF Gx-Sy) | Enable |

| Sd (Gx-Sd) | Disable |

| Gx UDR query (Gx-Nudr) | Disable |

| Gx UDR subscription (Gx-Nudr) | Disable |

| CHF enabled (AM) | Disable |

| Usage Monitoring (Gx) | Disable |

| Subscriber HTTP Notifier (Gx) | Disable |

Table 3-6 Configuring cnDBTier Helm Parameters

| Helm Parameter | New Value |

|---|---|

| ndb_batch_size | 2G |

| TimeBetweenEpochs | 100 |

| NoOfFragmentLogFiles | 50 |

| FragmentLogFileSize | 256M |

| RedoBuffer | 1024M |

| ndbappmysqld Pods Memory | 19/20 Gi |

| ndbmtd pods CPU | 8/8 |

| ndb_report_thresh_binlog_epoch_slip | 50 |

| ndb_eventbuffer_max_alloc | 19G |

| ndb_log_update_minimal | 1 |

| ndbmysqld Pods Memory | 25/25 Gi |

| replicationskiperrors | enable: true |

| replica_skip_errors | '1007,1008,1050,1051,1022' |

| numOfEmptyApiSlots | 4 |

Table 3-7 Policy Microservices Resource

| Service Name | CPU Request Per Pod | CPU Limit Per Pod | Memory Request Per Pod (Gi) | Memory Limit Per Pod (Gi) | Replica |

|---|---|---|---|---|---|

| ocpcf-appinfo | 1 | 1 | 0.5 | 1 | 1 |

| ocpcf-oc-binding | 5 | 6 | 1 | 8 | 15 |

| ocpcf-oc-diam-connector | 3 | 4 | 1 | 2 | 8 |

| ocpcf-oc-diam-gateway | 3 | 4 | 1 | 2 | 7 |

| ocpcf-occnp-config-server | 2 | 4 | 0.5 | 2 | 1 |

| ocpcf-occnp-egress-gateway | 3 | 4 | 4 | 6 | 2 |

| ocpcf-ocpm-ldap-gateway | 3 | 4 | 1 | 2 | 10 |

| ocpcf-occnp-ingress-gateway | 3 | 4 | 4 | 6 | 2 |

| ocpcf-occnp-nrf-client-nfdiscovery | 3 | 4 | 0.5 | 2 | 2 |

| ocpcf-occnp-nrf-client-nfmanagement | 1 | 1 | 1 | 1 | 2 |

| ocpcf-ocpm-audit-service | 1 | 2 | 1 | 1 | 1 |

| ocpcf-ocpm-cm-service | 2 | 4 | 0.5 | 2 | 1 |

| ocpcf-ocpm-policyds | 5 | 6 | 1 | 4 | 25 |

| ocpcf-ocpm-pre | 5 | 5 | 0.5 | 4 | 25 |

| ocpcf-ocpm-queryservice | 1 | 2 | 1 | 1 | 1 |

| ocpcf-pcf-smservice | 7 | 8 | 1 | 4 | 2 |

| ocpcf-pcrf-core | 7 | 8 | 8 | 8 | 30 |

| ocpcf-performance | 1 | 1 | 0.5 | 1 | 2 |

Note:

Min Replica = Max Replica

Table 3-8 cnDBTier Services Resources

| Service Name | CPU Request Per Pod | CPU Limit Per Pod | Memory Request Per Pod (Gi) | Memory Limit Per Pod (Gi) | Replica |

|---|---|---|---|---|---|

| ndbappmysqld | 8 | 8 | 19 | 20 | 5 |

| ndbmgmd | 2 | 2 | 9 | 11 | 2 |

| ndbmtd | 8 | 8 | 73 | 83 | 8 |

| ndbmysqld | 4 | 4 | 19 | 20 | 12 |

Note:

Min Replica = Max Replica

3.1.1.2 CPU Utilization

This section lists the CPU utilization for Policy and cnDBTier microservices. Each value is shown as X/Y. X is the observed CPU utilization as a percentage of the total CPU request, and Y is the target CPU utilization configured for the pod.
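The X/Y values look like the inputs of a Kubernetes HorizontalPodAutoscaler (HPA). An HPA computes a desired replica count of ceil(currentReplicas × X / Y). It skips scaling while X/Y stays within a tolerance of 1.0 (0.1 by default), and it clamps the result to the minimum and maximum replica counts. The source does not explicitly say that Y is the HPA target; the following Python sketch assumes it is and shows how a cell such as `13%/80%` would translate into a scaling decision. The helper names are hypothetical.

```python
import math

def parse_xy(cell: str) -> tuple[float, float]:
    """Split a table cell such as '35%/80%' into (X, Y) percentages."""
    x, y = cell.split("/")
    return float(x.strip().rstrip("%")), float(y.strip().rstrip("%"))

def utilization_pct(used_millicores: float, requested_millicores: float) -> float:
    """X: observed CPU usage as a percentage of the pods' total CPU request."""
    return 100.0 * used_millicores / requested_millicores

def hpa_desired_replicas(current: int, x: float, y: float,
                         min_replicas: int, max_replicas: int,
                         tolerance: float = 0.1) -> int:
    """HPA-style rule: ceil(current * X / Y), unchanged when X/Y is within
    the tolerance of 1.0, then clamped to [min_replicas, max_replicas]."""
    ratio = x / y
    if abs(ratio - 1.0) <= tolerance:
        desired = current
    else:
        desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))

x, y = parse_xy("13%/80%")  # ocpcf-ocpm-pre, Table 3-9
print(hpa_desired_replicas(25, x, y, min_replicas=1, max_replicas=100))
print(hpa_desired_replicas(25, x, y, min_replicas=25, max_replicas=25))
```

Under these assumptions, an unconstrained HPA would scale ocpcf-ocpm-pre at 13%/80% with 25 replicas down to 5 replicas. Because Min Replica = Max Replica in these tests, the clamp holds the deployment at 25.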

Table 3-9 Policy Microservices Resource Utilization

| Service | CPU (X/Y) - Site 1 | CPU (X/Y) - Site 2 | CPU (X/Y) - Site 3 | CPU (X/Y) - Site 4 |

|---|---|---|---|---|

| ocpcf-alternate-route | 0%/80% | 0%/80% | 0%/80% | 0%/80% |

| ocpcf-appinfo | 1%/80% | 2%/80% | 2%/80% | 3%/80% |

| ocpcf-occnp-config-server | 10%/80% | 11%/80% | 12%/80% | 12%/80% |

| ocpcf-oc-diam-connector | 10%/40% | 11%/40% | 10%/40% | 10%/40% |

| ocpcf-occnp-egress-gateway | 0%/80% | 0%/80% | 0%/80% | 0%/80% |

| ocpcf-occnp-ingress-gateway | 0%/80% | 0%/80% | 0%/80% | 0%/80% |

| ocpcf-ocpm-ldap-gateway | 4%/60% | 4%/60% | 5%/60% | 4%/60% |

| ocpcf-occnp-nrf-client-nfdiscovery | 0%/80% | 0%/80% | 0%/80% | 0%/80% |

| ocpcf-occnp-nrf-client-nfmanagement | 0%/80% | 0%/80% | 0%/80% | 0%/80% |

| ocpcf-oc-binding | 0%/60% | 0%/60% | 0%/60% | 0%/60% |

| ocpcf-occnp-chf-connector | 0%/50% | 0%/50% | 0%/50% | 0%/50% |

| ocpcf-occnp-udr-connector | 0%/50% | 0%/50% | 0%/50% | 0%/50% |

| ocpcf-ocpm-audit-service | 0%/60% | 0%/60% | 0%/60% | 0%/60% |

| ocpcf-ocpm-policyds | 11%/60% | 11%/60% | 11%/60% | 11%/60% |

| ocpcf-ocpm-soapconnector | 0%/60% | 0%/60% | 0%/60% | 0%/60% |

| ocpcf-ocpm-pre | 13%/80% | 13%/80% | 13%/80% | 13%/80% |

| ocpcf-pcf-smservice | 0%/50% | 0%/50% | 0%/50% | 0%/50% |

| ocpcf-pcrf-core | 7%/40% | 7%/40% | 7%/40% | 7%/40% |

| ocpcf-ocpm-queryservice | 0%/80% | 0%/80% | 0%/80% | 0%/80% |

Table 3-10 cnDBTier Services Resource Utilization

| Name | CPU (X/Y) - Site 1 | CPU (X/Y) - Site 2 | CPU (X/Y) - Site 3 | CPU (X/Y) - Site 4 |

|---|---|---|---|---|

| ndbappmysqld | 35%/80% | 36%/80% | 35%/80% | 35%/80% |

| ndbmgmd | 1%/80% | 1%/80% | 0%/80% | 0%/80% |

| ndbmtd | 15%/80% | 15%/80% | 18%/80% | 17%/80% |

| ndbmysqld | 5%/80% | 5%/80% | 5%/80% | 5%/80% |

3.1.1.3 Results

Table 3-11 Result and Observations

| Parameter | Values |

|---|---|

| Test Duration | 12 Hours |

| TPS Achieved | 30K TPS (7.5K TPS on each site) |

On the four-site GR setup, with each site handling incoming traffic of 7.5K TPS, the call model ran successfully with no replication delay and no traffic drop.

3.1.2 Test Scenario 2: PCRF Voice Call Model on Two Sites of a Four-Site GeoRedundant setup, with 15K Transactions Per Second (TPS) on each site and ASM disabled

This test run benchmarks the performance and capacity of the PCRF voice call model deployed in converged mode on two sites of a four-site georedundant setup. Each of the two sites handles incoming traffic of 15K TPS. Aspen Service Mesh (ASM) is disabled.

3.1.2.1 Test Case and Setup Details

Table 3-12 Test Case Parameters

| Parameters | Values |

|---|---|

| Call Rate | 30K TPS (15K TPS on each site) |

| Execution Time | 10 Hours |

| ASM | Disable |

Table 3-13 Call Model Data

| Command | Messages per call |

|---|---|

| CCRI (Single APN) | 9.08% |

| CCRU (Single APN) | 18.18% |

| CCRT (Single APN) | 9.09% |

| Gx RAR | 18.18% |

| AARI | 9.09% |

| AARU | 9.09% |

| Rx RAR | 18.18% |

| STR | 9.09% |
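Table 3-13 gives each message type's share of the messages in a call. The following Python sketch assumes that these shares apply directly to the 15K TPS handled by each site and converts them into per-message rates. The listed shares add up to 99.98% rather than 100%, presumably because of rounding, so the computed rates sum to slightly less than 15K TPS. The names used are illustrative.

```python
# Share of messages per call from Table 3-13, in percent.
MESSAGE_SHARE_PCT = {
    "CCRI": 9.08, "CCRU": 18.18, "CCRT": 9.09, "Gx RAR": 18.18,
    "AARI": 9.09, "AARU": 9.09, "Rx RAR": 18.18, "STR": 9.09,
}

def per_message_tps(site_tps: float, shares_pct: dict[str, float]) -> dict[str, float]:
    """Split a site's transaction rate across message types by percentage share."""
    return {msg: site_tps * pct / 100.0 for msg, pct in shares_pct.items()}

rates = per_message_tps(15_000, MESSAGE_SHARE_PCT)
for msg, tps in rates.items():
    print(f"{msg:7s} {tps:8.1f} TPS")
print(f"share total: {sum(MESSAGE_SHARE_PCT.values()):.2f}%")
```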

Table 3-14 PCRF Configurations

| Service Name | Status |

|---|---|

| Binding Service | Enable |

| Policy Event Record (PER) | Disable |

| Subscriber Activity Logging (SAL) | Enable |

| LDAP | Disable |

| Online Charging System (OCS) | Disable |

Table 3-15 PCF Interfaces

| Feature Name | Status |

|---|---|

| N36 UDR query (N7/N15-Nudr) | Disable |

| N36 UDR subscription (N7/N15-Nudr) | Disable |

| UDR on-demand nrf discovery | Disable |

| CHF (SM-Nchf) | Disable |

| BSF (N7-Nbsf) | Disable |

| AMF on demand nrf discovery | Disable |

| LDAP (Gx-LDAP) | Disable |

| Sy (PCF N7-Sy) | Disable |

Table 3-16 PCRF Interfaces

| Feature Name | Status |

|---|---|

| Sy (PCRF Gx-Sy) | Disable |

| Sd (Gx-Sd) | Disable |

| Gx UDR query (Gx-Nudr) | Disable |

| Gx UDR subscription (Gx-Nudr) | Disable |

| CHF enabled (AM) | Disable |

| Usage Monitoring (Gx) | Disable |

| Subscriber HTTP Notifier (Gx) | Disable |

Table 3-17 Configuring cnDBTier Helm Parameters

| Helm Parameter | Value |

|---|---|

| ndb_batch_size | 2G |

| TimeBetweenEpochs | 100 |

| NoOfFragmentLogFiles | 50 |

| FragmentLogFileSize | 256M |

| RedoBuffer | 1024M |

| ndbappmysqld Pods Memory | 19/20 Gi |

| ndbmtd pods CPU | 8/8 |

| ndb_report_thresh_binlog_epoch_slip | 50 |

| ndb_eventbuffer_max_alloc | 19G |

| ndb_log_update_minimal | 1 |

| ndbmysqld Pods Memory | 25/25 Gi |

| replicationskiperrors | enable: true |

| replica_skip_errors | '1007,1008,1050,1051,1022' |

| numOfEmptyApiSlots | 4 |

Table 3-18 Policy Microservices Resource

| Service Name | CPU Request Per Pod | CPU Limit Per Pod | Memory Request Per Pod (Gi) | Memory Limit Per Pod (Gi) | Replica |

|---|---|---|---|---|---|

| ocpcf-appinfo | 1 | 1 | 0.5 | 1 | 1 |

| ocpcf-oc-binding | 5 | 6 | 1 | 8 | 18 |

| ocpcf-oc-diam-connector | 3 | 4 | 1 | 2 | 8 |

| ocpcf-oc-diam-gateway | 3 | 4 | 1 | 2 | 9 |

| ocpcf-occnp-config-server | 2 | 4 | 0.5 | 2 | 2 |

| ocpcf-occnp-egress-gateway | 3 | 4 | 4 | 6 | 1 |

| ocpcf-ocpm-ldap-gateway | 3 | 4 | 1 | 2 | 0 |

| ocpcf-occnp-ingress-gateway | 3 | 4 | 4 | 6 | 2 |

| ocpcf-occnp-nrf-client-nfdiscovery | 3 | 4 | 0.5 | 2 | 1 |

| ocpcf-occnp-nrf-client-nfmanagement | 1 | 1 | 1 | 1 | 1 |

| ocpcf-ocpm-audit-service | 1 | 2 | 1 | 1 | 1 |

| ocpcf-ocpm-cm-service | 2 | 4 | 0.5 | 2 | 1 |

| ocpcf-ocpm-policyds | 5 | 6 | 1 | 4 | 2 |

| ocpcf-ocpm-pre | 5 | 5 | 0.5 | 4 | 15 |

| ocpcf-ocpm-queryservice | 1 | 2 | 1 | 1 | 1 |

| ocpcf-pcf-smservice | 7 | 8 | 1 | 4 | 2 |

| ocpcf-pcrf-core | 7 | 8 | 8 | 8 | 24 |

| ocpcf-performance | 1 | 1 | 0.5 | 1 | 2 |

Note:

Min Replica = Max Replica

Table 3-19 cnDBTier Microservices Resources

| Service Name | CPU Request Per Pod | CPU Limit Per Pod | Memory Request Per Pod (Gi) | Memory Limit Per Pod (Gi) | Replica |

|---|---|---|---|---|---|

| ndbappmysqld | 8 | 8 | 19 | 20 | 5 |

| ndbmgmd | 2 | 2 | 9 | 11 | 3 |

| ndbmtd | 8 | 8 | 73 | 83 | 8 |

| ndbmysqld | 4 | 4 | 19 | 20 | 6 |

Note:

Min Replica = Max Replica

3.1.2.2 CPU Utilization

This section lists the CPU utilization for Policy and cnDBTier microservices. Each value is shown as X/Y. X is the observed CPU utilization as a percentage of the total CPU request, and Y is the target CPU utilization configured for the pod.

Table 3-20 Policy Microservices Resource Utilization

| Service | CPU (X/Y) - Site 1 | CPU (X/Y) - Site 2 |

|---|---|---|

| ocpcf-appinfo | 2%/80% | 1%/80% |

| ocpcf-occnp-config-server | 8%/80% | 8%/80% |

| ocpcf-oc-diam-connector | 0%/40% | 0%/40% |

| ocpcf-occnp-egress-gateway | 0%/80% | 0%/80% |

| ocpcf-occnp-ingress-gateway | 0%/80% | 1%/80% |

| ocpcf-occnp-nrf-client-nfdiscovery | 0%/80% | 0%/80% |

| ocpcf-occnp-nrf-client-nfmanagement | 0%/80% | 0%/80% |

| ocpcf-oc-binding | 12%/60% | 0%/60% |

| ocpcf-ocpm-audit-service | 0%/60% | 0%/60% |

| ocpcf-ocpm-policyds | 0%/60% | 0%/60% |

| ocpcf-ocpm-pre | 13%/80% | 0%/80% |

| ocpcf-pcf-smservice | 0%/50% | 0%/50% |

| ocpcf-pcrf-core | 25%/40% | 0%/40% |

| ocpcf-ocpm-queryservice | 0%/80% | 0%/80% |

Table 3-21 cnDBTier Services Resource Utilization

| Name | CPU (X/Y) - Site 1 | CPU (X/Y) - Site 2 |

|---|---|---|

| ndbappmysqld | 75%/80% | 76%/80% |

| ndbmgmd | 0%/80% | 0%/80% |

| ndbmtd | 19%/80% | 6%/80% |

| ndbmysqld | 8%/80% | 3%/80% |

3.2 PCF Call Model 2

3.2.1 Test Scenario: PCF Call Model on Two-Site GeoRedundant setup, with 15K TPS each for AM/UE and ASM enabled

This test run benchmarks the performance and capacity of the Policy data call model deployed in PCF mode. The PCF application handles incoming traffic of 30K TPS, with 15K TPS each for the AM and UE services. For this setup, Aspen Service Mesh (ASM) was enabled.

3.2.1.1 Test Case and Setup Details

Table 3-22 Test Case Parameters

| Parameters | Values |

|---|---|

| Call Rate | 30K TPS on Single site |

| Execution Time | 17 Hours |

| ASM | Enable |

| Traffic Ratio | 1:0:1 (AM/UE Create : AM/UE Update : AM/UE Delete) |

| Active Subscribers | ~10000000 |

Table 3-23 Call Model

| Service Name | AM Ingress (MPS) | AM Egress (MPS) | AM Total (MPS) | UE Ingress (MPS) | UE Egress (MPS) | UE Total (MPS) | Total MPS | Total TPS |

|---|---|---|---|---|---|---|---|---|

| Ingress | 3600 | 3600 | 7200 | 3600 | 3600 | 7200 | 14400 | 7200 |

| PRE | 3600 | 0 | 3600 | 3600 | 0 | 3600 | 7200 | 3600 |

| PDS | 9000 | 9000 | 18000 | 8100 | 6300 | 14400 | 34200 | 17100 |

| Egress | 9900 | 9900 | 19800 | 13500 | 13500 | 27000 | 46800 | 23400 |

| Nrf Discovery | 1800 | 1800 | 3600 | 1800 | 1800 | 3600 | 7200 | 3600 |

| UDR Connector | 6300 | 8100 | 14400 | 6300 | 6300 | 12600 | 27000 | 13500 |

| CHF Connector | 3600 | 3600 | 7200 | 0 | 0 | 0 | 7200 | 3600 |

| AM | 3600 | 18900 | 22500 | 0 | 0 | 0 | 22500 | 11250 |

| UE | 0 | 0 | 0 | 3600 | 20700 | 24300 | 24300 | 12150 |

| Bulwark | 7200 | 0 | 7200 | 7200 | 0 | 7200 | 14400 | 7200 |

Table 3-24 PCF Configuration

| Service Name | Status |

|---|---|

| Bulwark Service | Enable |

| Binding Service | Disable |

| Subscriber State Variable (SSV) | Enable |

| Validate_user | Disable |

| Alternate Route Service | Disable |

| Audit Service | Enable |

| Binlog | Enable |

Table 3-25 PCF Interfaces

| Feature Name | Status |

|---|---|

| N36 UDR query (N7/N15-Nudr) | Enable |

| N36 UDR subscription (N7/N15-Nudr) | Enable |

| UDR on-demand nrf discovery | Disable |

| CHF (SM-Nchf) | Disable |

| BSF (N7-Nbsf) | Disable |

| AMF on demand nrf discovery | Disable |

| LDAP (Gx-LDAP) | Disable |

| Sy (PCF N7-Sy) | Enable |

Table 3-26 PCRF Interfaces

| Feature Name | Status |

|---|---|

| Sy (PCRF Gx-Sy) | Disable |

| Sd (Gx-Sd) | Disable |

| Gx UDR query (Gx-Nudr) | Disable |

| Gx UDR subscription (Gx-Nudr) | Disable |

| CHF enabled (AM) | Disable |

| Usage Monitoring (Gx) | Disable |

| Subscriber HTTP Notifier (Gx) | Disable |

Table 3-27 Configuring cnDBTier Helm Parameters

| Helm Parameter | Value |

|---|---|

| restartSQLNodesIfBinlogThreadStalled | true |

| binlog_cache_size | 65536 |

| ndbmysqld node memory | 54Gi |

| NoOfFragmentLogFiles | 96 |

| ndb_allow_copying_alter_table | 1 |

Table 3-28 Policy Microservices Resources

| Service Name | CPU Request Per Pod | CPU Limit Per Pod | Memory Request Per Pod (Gi) | Memory Limit Per Pod (Gi) | Replica |

|---|---|---|---|---|---|

| Appinfo | 1 | 1 | 0.5 | 1 | 2 |

| Audit Service | 1 | 2 | 1 | 1 | 2 |

| CM Service | 2 | 4 | 0.5 | 2 | 2 |

| Config Service | 2 | 4 | 0.5 | 2 | 2 |

| Egress Gateway | 4 | 4 | 4 | 6 | 13 |

| Ingress Gateway | 4 | 4 | 4 | 6 | 4 |

| Nrf Client Management | 1 | 1 | 1 | 1 | 2 |

| Diameter Gateway | 4 | 4 | 1 | 2 | 0 |

| Diameter Connector | 4 | 4 | 1 | 2 | 0 |

| AM Service | 8 | 8 | 1 | 4 | 9 |

| UE Service | 8 | 8 | 1 | 4 | 11 |

| Nrf Client Discovery | 4 | 4 | 0.5 | 2 | 4 |

| Query Service | 1 | 2 | 1 | 1 | 2 |

| PCRF Core Service | 8 | 8 | 8 | 8 | 0 |

| Performance | 1 | 1 | 0.5 | 1 | 2 |

| PRE Service | 4 | 4 | 0.5 | 2 | 6 |

| SM Service | 8 | 8 | 1 | 4 | 0 |

| PDS | 6 | 6 | 1 | 4 | 17 |

| UDR Connector | 6 | 6 | 1 | 4 | 7 |

| CHF Connector | 6 | 6 | 1 | 4 | 2 |

| LDAP Gateway Service | 3 | 4 | 1 | 2 | 0 |

| Binding Service | 5 | 6 | 1 | 8 | 0 |

| SOAP Connector | 2 | 4 | 4 | 4 | 0 |

| Alternate Route Service | 2 | 2 | 2 | 4 | 4 |

| Bulwark Service | 8 | 8 | 1 | 4 | 3 |

Note:

Min Replica = Max Replica

Table 3-29 cnDBTier Microservices Resources

| Service Name | CPU Request Per Pod | CPU Limit Per Pod | Memory Request Per Pod (Gi) | Memory Limit Per Pod (Gi) | Replica |

|---|---|---|---|---|---|

| ndbappmysqld | 15 | 15 | 18 | 18 | 6 |

| ndbmgmd | 3 | 3 | 10 | 10 | 2 |

| ndbmtd | 12 | 12 | 96 | 96 | 12 |

| ndbmysqld | 4 | 4 | 54 | 54 | 2 |

Note:

Min Replica = Max Replica

3.2.1.2 CPU Utilization

This section lists the CPU utilization for Policy and cnDBTier microservices. Each value is shown as X/Y. X is the observed CPU utilization as a percentage of the total CPU request, and Y is the target CPU utilization configured for the pod.

Table 3-30 Policy Microservices Resource Utilization

| Service | CPU (X/Y) - Site1 |

|---|---|

| ocpcf-alternate-route | 0%/80% |

| ocpcf-appinfo | 0%/80% |

| ocpcf-bulwark | 0%/60% |

| ocpcf-occnp-config-server | 9%/80% |

| ocpcf-occnp-egress-gateway | 46%/80% |

| ocpcf-occnp-ingress-gateway | 38%/80% |

| ocpcf-occnp-nrf-client-nfdiscovery | 38%/80% |

| ocpcf-occnp-nrf-client-nfmanagement | 15%/80% |

| ocpcf-oc-binding | 0%/60% |

| ocpcf-occnp-chf-connector | 0%/50% |

| ocpcf-occnp-udr-connector | 46%/50% |

| ocpcf-ocpm-audit-service | 0%/60% |

| ocpcf-ocpm-policyds | 32%/60% |

| ocpcf-ocpm-pre | 18%/80% |

| ocpcf-pcf-amservice | 21%/30% |

| ocpcf-pcf-ueservice | 33%/30% |

| ocpcf-ocpm-queryservice | 0%/80% |

Table 3-31 cnDBTier Services Resource Utilization

| Name | CPU (X/Y) - Site1 |

|---|---|

| ndbappmysqld | 31%/80% |

| ndbmgmd | 0%/80% |

| ndbmtd | 43%/80% |

| ndbmysqld | 9%/80% |

3.2.1.3 Results

Table 3-32 Latency Observations

| NF | Procedure | NF Processing Time - (Average/50%) ms | NF Processing Time - (99%) ms |

|---|---|---|---|

| AM-PCF | AM-Create (simulator) | 56.2 | 47.6 |

| AM-PCF | AM-Delete (simulator) | 50.2 | 44.6 |

| UE-PCF | AM-Create (simulator) | 78.6 | 63.3 |

| UE-PCF | AM-Delete (simulator) | 7.6 | 6.3 |

Table 3-33 Latency Observations for Policy Services:

| Services | Average Latency (ms) |

|---|---|

| Ingress | 45.6 |

| PDS | 26.9 |

| UDR | 7.60 |

| NrfClient Discovery - OnDemand | 6.39 |

| Egress | 0.914 |

- Achieved 30K TPS (15K TPS for AM and 15K TPS for UE) over a continuous run of approximately 17 hours.

- Latency remained constant throughout the call model run, at:

  - approximately 46 ms for Ingress, and

  - approximately 20 ms or less for the rest of the PCF services.

3.2.2 Test Scenario: PCF AM/UE Call Model on Two-Site GeoRedundant setup, with each site handling 25K TPS traffic and ASM enabled

This test run benchmarks the performance and capacity of the Policy AM/UE data call model deployed in PCF mode. The PCF application handles a total (Ingress + Egress) traffic of 50K TPS, with each site handling 25K TPS. For this setup, Aspen Service Mesh (ASM) was enabled.

In this test setup, georedundant (GR) mode was enabled in cnDBTier and configured for three-channel replication.

3.2.2.1 Test Case and Setup Details

Table 3-34 Test Case Parameters

| Parameters | Values |

|---|---|

| Call Rate (Ingress + Egress) | 50K TPS on Single site |

| Execution Time | 94 Hours |

| ASM | Enable |

| Traffic Ratio | 1:0:1 (AM/UE Create : AM/UE Update : AM/UE Delete) |

| Active Subscribers | 12591141 |

Table 3-35 TPS Distribution

| TPS Distribution | Site1 | Site2 |

|---|---|---|

| AM Ingress | 6.12K | 0 |

| AM Egress | 18.88K | 0 |

| UE Ingress | 6.12K | 0 |

| UE Egress | 18.88K | 0 |

| Total TPS | 50K | 0 |

Table 3-36 Call Model

| Service Name | AM Ingress (MPS) | AM Egress (MPS) | AM Total (MPS) | UE Ingress (MPS) | UE Egress (MPS) | UE Total (MPS) | Total MPS | Total TPS |

|---|---|---|---|---|---|---|---|---|

| Ingress | 6250 | 6250 | 12500 | 6250 | 6250 | 12500 | 25000 | 12500 |

| PRE | 6250 | 0 | 6250 | 6250 | 0 | 6250 | 12500 | 6250 |

| PDS | 9375 | 9375 | 18750 | 9375 | 9375 | 18750 | 37500 | 18750 |

| Egress | 12500 | 12500 | 25000 | 25000 | 25000 | 50000 | 75000 | 37500 |

| Nrf Discovery | 3125 | 3125 | 6250 | 6250 | 6250 | 12500 | 18750 | 9375 |

| UDR Connector | 9375 | 12500 | 21875 | 9375 | 12500 | 21875 | 43750 | 21875 |

| CHF Connector | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| AM | 6250 | 15625 | 21875 | 0 | 0 | 0 | 21875 | 10937.5 |

| UE | 0 | 0 | 0 | 6250 | 28125 | 34375 | 34375 | 17187.5 |

| Bulwark | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
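In Table 3-36, Total MPS for a service is the sum of its AM and UE ingress and egress message rates, and Total TPS is half of Total MPS. This fits counting each request/response pair as one transaction, although that interpretation is inferred from the numbers rather than stated in the source. The following Python sketch recomputes both columns for several rows of the table. `ServiceLoad`, `total_mps`, and `total_tps` are illustrative names.

```python
from typing import NamedTuple

class ServiceLoad(NamedTuple):
    """Per-service message rates (messages per second) from Table 3-36."""
    am_ingress: float
    am_egress: float
    ue_ingress: float
    ue_egress: float

def total_mps(load: ServiceLoad) -> float:
    """Total MPS: AM and UE ingress plus egress messages."""
    return load.am_ingress + load.am_egress + load.ue_ingress + load.ue_egress

def total_tps(load: ServiceLoad) -> float:
    # Assumption: one transaction = one request message + one response message.
    return total_mps(load) / 2

TABLE_3_36 = {
    "Ingress": ServiceLoad(6250, 6250, 6250, 6250),
    "PDS": ServiceLoad(9375, 9375, 9375, 9375),
    "Egress": ServiceLoad(12500, 12500, 25000, 25000),
    "UDR Connector": ServiceLoad(9375, 12500, 9375, 12500),
    "AM": ServiceLoad(6250, 15625, 0, 0),
    "UE": ServiceLoad(0, 0, 6250, 28125),
}

for name, load in TABLE_3_36.items():
    print(f"{name:14s} MPS={total_mps(load):>8.1f} TPS={total_tps(load):>8.1f}")
```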

Table 3-37 PCF Configuration

| Service Name | Status |

|---|---|

| Bulwark Service | Disable |

| Binding Service | NA |

| Subscriber State Variable (SSV) | Enable |

| Validate_user | Disable |

| Alternate Route Service | Disable |

| Audit Service | Enable |

| Binlog | Enable |

Table 3-38 PCF Interfaces

| Feature Name | Status |

|---|---|

| N36 UDR query (N7/N15-Nudr) | Enable |

| N36 UDR subscription (N7/N15-Nudr) | Enable |

| UDR on-demand nrf discovery | Enable |

| CHF (SM-Nchf) | Disable |

| BSF (N7-Nbsf) | NA |

| AMF on demand nrf discovery | Enable |

| LDAP (Gx-LDAP) | Disable |

| Sy (PCF N7-Sy) | Disable |

Table 3-39 PCRF Interfaces

| Feature Name | Status |

|---|---|

| Sy (PCRF Gx-Sy) | NA |

| Sd (Gx-Sd) | NA |

| Gx UDR query (Gx-Nudr) | NA |

| Gx UDR subscription (Gx-Nudr) | NA |

| CHF enabled (AM) | Enable |

| Usage Monitoring (Gx) | NA |

| Subscriber HTTP Notifier (Gx) | NA |

Table 3-40 Configuring cnDBTier Helm Parameters

| Helm Parameter | Value |

|---|---|

| restartSQLNodesIfBinlogThreadStalled | true |

| binlog_cache_size | 10485760 |

| ConnectCheckIntervalDelay | 500 |

| NoOfFragmentLogFiles | 32 |

| NoOfFragmentLogParts | 6 |

| MaxNoOfExecutionThreads | 14 |

| FragmentLogFileSize | 128M |

| binlogthreadstore.capacity | 5 |

| ndb_allow_copying_alter_table | ON |
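NoOfFragmentLogParts, NoOfFragmentLogFiles, and FragmentLogFileSize together size the NDB redo log on each data node. The following Python sketch estimates the redo log capacity for the values used in these tests. It assumes that the redo log space per data node is NoOfFragmentLogParts × NoOfFragmentLogFiles × FragmentLogFileSize, that the K/M/G suffixes are binary multipliers, and that NoOfFragmentLogParts takes the NDB default of 4 where a table does not list it. Verify these assumptions against the MySQL NDB Cluster documentation. The helper names are hypothetical.

```python
# Binary multipliers assumed for size suffixes in NDB configuration values.
SUFFIXES = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}

def parse_size(value: str) -> int:
    """Convert a size string such as '128M' or '2G' to bytes."""
    value = value.strip().upper()
    if value and value[-1] in SUFFIXES:
        return int(value[:-1]) * SUFFIXES[value[-1]]
    return int(value)

def redo_log_bytes(log_files: int, log_file_size: str, log_parts: int = 4) -> int:
    """Redo log space per data node: parts x files x file size (assumed formula)."""
    return log_parts * log_files * parse_size(log_file_size)

GIB = 1024 ** 3
print(redo_log_bytes(32, "128M", log_parts=6) / GIB)  # Table 3-40 values: 24.0
print(redo_log_bytes(32, "128M", log_parts=4) / GIB)  # Table 3-51 values: 16.0
```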

Note:

The customized cnDBTier parameter values are the same for Site1 and Site2.

Table 3-41 Policy Microservices Resources

| Service Name | CPU Request Per Pod | CPU Limit Per Pod | Memory Request Per Pod (Gi) | Memory Limit Per Pod (Gi) | Min Replicas | Max Replicas | Request/Limit Istio CPU | Request/Limit Istio Memory |

|---|---|---|---|---|---|---|---|---|

| Appinfo | 1 | 1 | 0.5 | 1 | 2 | 2 | 2 | 2 |

| Audit Service | 2 | 2 | 4 | 4 | 2 | 2 | 2 | 2 |

| CM Service | 2 | 4 | 0.5 | 2 | 2 | 2 | 2 | 2 |

| Config Service | 4 | 4 | 0.5 | 2 | 2 | 2 | 2 | 2 |

| Egress Gateway | 4 | 4 | 6 | 6 | 2 | 27 | 2 | 2 |

| Ingress Gateway | 5 | 5 | 6 | 6 | 2 | 8 | 2.5 | 2 |

| Nrf Client Management | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 2 |

| Diameter Gateway | 4 | 4 | 1 | 2 | 0 | 0 | 2 | 2 |

| Diameter Connector | 4 | 4 | 1 | 2 | 0 | 0 | 2 | 2 |

| AM Service | 8 | 8 | 1 | 4 | 2 | 6 | 2 | 2 |

| UE Service | 8 | 8 | 1 | 4 | 2 | 16 | 2 | 2 |

| Nrf Client Discovery | 4 | 4 | 0.5 | 2 | 2 | 7 | 2 | 2 |

| Query Service | 1 | 2 | 1 | 1 | 2 | 2 | 2 | 2 |

| PCRF Core Service | 8 | 8 | 8 | 8 | 0 | 0 | 2 | 2 |

| Performance | 1 | 1 | 0.5 | 1 | 2 | 2 | 2 | 2 |

| PRE Service | 4 | 4 | 4 | 4 | 2 | 4 | 1.5 | 2 |

| SM Service | 7 | 7 | 10 | 10 | 0 | 0 | 2.5 | 2 |

| PDS | 7 | 7 | 8 | 8 | 2 | 22 | 2.5 | 4 |

| UDR Connector | 6 | 6 | 4 | 4 | 2 | 14 | 2 | 2 |

| CHF Connector | 6 | 6 | 4 | 4 | 0 | 0 | 2 | 2 |

| LDAP Gateway Service | 3 | 4 | 1 | 2 | 0 | 0 | 2 | 2 |

| Binding Service | 6 | 6 | 8 | 8 | 2 | 0 | 2.5 | 2 |

| SOAP Connector | 2 | 4 | 4 | 4 | 0 | 0 | 2 | 2 |

| Alternate Route Service | 2 | 2 | 2 | 4 | 2 | 5 | 2 | 2 |

| Bulwark Service | 8 | 8 | 6 | 6 | 0 | 0 | 2.5 | 2 |
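To estimate how much CPU a site reserves for the Policy microservices, multiply each service's per-pod CPU request by its replica count. The following Python sketch does this at the minimum and maximum replica counts for a subset of the rows in Table 3-41. It can optionally add the Istio sidecar CPU, assuming that the "Request/Limit Istio CPU" column gives the per-pod sidecar CPU request in cores. `PodSpec` and `cpu_request_range` are hypothetical names.

```python
from typing import NamedTuple

class PodSpec(NamedTuple):
    """CPU request per pod and replica bounds, taken from Table 3-41."""
    cpu_request: float   # application container CPU request (cores)
    istio_cpu: float     # assumed: Istio sidecar CPU request per pod (cores)
    min_replicas: int
    max_replicas: int

def cpu_request_range(specs: dict[str, PodSpec],
                      with_sidecar: bool = True) -> tuple[float, float]:
    """Total CPU requested at min and max replicas, optionally including sidecars."""
    low = high = 0.0
    for spec in specs.values():
        per_pod = spec.cpu_request + (spec.istio_cpu if with_sidecar else 0.0)
        low += per_pod * spec.min_replicas
        high += per_pod * spec.max_replicas
    return low, high

SUBSET = {
    "Egress Gateway": PodSpec(4, 2, 2, 27),
    "Ingress Gateway": PodSpec(5, 2.5, 2, 8),
    "UE Service": PodSpec(8, 2, 2, 16),
    "PDS": PodSpec(7, 2.5, 2, 22),
    "UDR Connector": PodSpec(6, 2, 2, 14),
}

print(cpu_request_range(SUBSET, with_sidecar=False))  # (60.0, 514.0)
print(cpu_request_range(SUBSET))                      # (82.0, 703.0)
```

Under these assumptions, at their maximum replica counts the sidecars raise the CPU requested by these five services by about 37%.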

Table 3-42 cnDBTier Microservices Resources:

| Service Name | CPU Request Per Pod | CPU Limit Per Pod | Memory Request Per Pod (Gi) | Memory Limit Per Pod (Gi) | Replicas | Request/Limit Istio CPU | Request/Limit Istio Memory |

|---|---|---|---|---|---|---|---|

| ndbappmysqld | 12 | 20 | 12 | 5 | 5 | ||

| ndbmgmd | 3 | 10 | 2 | 2 | 2 | ||

| ndbmtd | 12 | 129 | 10 | 6 | 6 | ||

| ndbmysqld | 4 | 54 | 6 | 4 | 4 |

3.2.2.2 CPU Utilization

This section lists the average CPU utilization for Policy and cnDBTier microservices. Each value is shown as X/Y. X is the average observed CPU utilization as a percentage of the total CPU request, and Y is the target CPU utilization configured for the pod.

Table 3-43 Policy Microservices Resource Utilization

| Service | CPU (X/Y) - Site1 |

|---|---|

| ocpcf-alternate-route | 0%/80% |

| ocpcf-appinfo | 0%/80% |

| ocpcf-bulwark | 0%/60% |

| ocpcf-occnp-config-server | 16%/80% |

| ocpcf-occnp-egress-gateway | 60%/80% |

| ocpcf-occnp-ingress-gateway | 55%/80% |

| ocpcf-occnp-nrf-client-nfdiscovery | 43%/80% |

| ocpcf-occnp-nrf-client-nfmanagement | 0%/80% |

| ocpcf-oc-binding | 0%/60% |

| ocpcf-occnp-chf-connector | 0%/50% |

| ocpcf-occnp-udr-connector | 48%/50% |

| ocpcf-ocpm-audit-service | 0%/60% |

| ocpcf-ocpm-policyds | 49%/60% |

| ocpcf-ocpm-pre | 25%/80% |

| ocpcf-pcf-amservice | 32%/30% |

| ocpcf-pcf-ueservice | 54%/30% |

| ocpcf-ocpm-queryservice | 0%/80% |

Table 3-44 cnDBTier Services Resource Utilization

| Name | CPU (X/Y) - Site1 | CPU (X/Y) - Site2 |

|---|---|---|

| ndbappmysqld | 26%/80% | 20%/80% |

| ndbmgmd | 0%/80% | 0%/80% |

| ndbmtd | 63%/80% | 60%/80% |

| ndbmysqld | 6%/80% | 1%/80% |

3.2.3 Test Scenario: PCF SM Call Model on Two-Site GeoRedundant setup, with each site handling 21.5K TPS traffic and ASM Enabled

This test run benchmarks the performance and capacity of the Policy SM data call model deployed in PCF mode on a two-site georedundant setup. The PCF application handles a total (Ingress + Egress) traffic of 43K TPS, with each site handling 21.5K TPS. For this setup, Aspen Service Mesh (ASM) was enabled.

In this test setup, georedundant (GR) mode was enabled in cnDBTier and configured for three-channel replication.

3.2.3.1 Test Case and Setup Details

Table 3-46 Test Case Parameters

| Parameters | Values |

|---|---|

| Call Rate (Ingress + Egress) | 21.5K TPS on Site1, 21.5K TPS on Site2 |

| ASM | Enable |

| Traffic Ratio | Internet: 1 SM Create : 74 SM Updates : 1 SM Delete; IMS: 1 SM Create : 8 SM Updates : 1 SM Delete; APP: 1 SM Create : 0 SM Updates : 1 SM Delete; ADMIN: 1 SM Create : 0 SM Updates : 1 SM Delete; IMS Rx: 1 Create : 1 STR |

| Active Subscribers | 10000000 subscribers and 20000000 sessions |
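Each traffic ratio describes the requests in one session's lifecycle. For example, an Internet session sends 1 SM Create, 74 SM Updates, and 1 SM Delete, for 76 requests in total. The following Python sketch computes messages per session and the session rate that a given request rate sustains. It assumes that each listed operation is a single request and that sessions are created and deleted at the same steady rate. The names are hypothetical.

```python
# Per-session traffic ratios from Table 3-46: (creates, updates, deletes/terminations).
RATIOS: dict[str, tuple[int, int, int]] = {
    "Internet": (1, 74, 1),
    "IMS": (1, 8, 1),
    "APP": (1, 0, 1),
    "ADMIN": (1, 0, 1),
    "IMS Rx": (1, 0, 1),  # 1 Create : 1 STR
}

def messages_per_session(ratio: tuple[int, int, int]) -> int:
    """Requests sent over one session's lifecycle."""
    return sum(ratio)

def session_rate(request_rate: float, ratio: tuple[int, int, int]) -> float:
    """Sessions per second created (and deleted) at a steady request rate."""
    return request_rate / messages_per_session(ratio)

for profile, ratio in RATIOS.items():
    share = ratio[1] / messages_per_session(ratio)
    print(f"{profile:8s} {messages_per_session(ratio):3d} requests/session, {share:.1%} updates")
```

Under these assumptions, for example, 7,600 Internet-profile requests per second correspond to 100 new sessions per second.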

Policy Project Details:

The Policy design editor, which is based on the Blockly interface, was used to set up the Policy project for each of the Policy services. The complexity level of the Policy project configured for this run was High. The complexity levels are defined as follows:

- Low: No use of loops in Blockly logic, no JSON operations, and no complex JavaScript code in Object Expression/Statement Expression.

- Medium: Use of loops in Blockly logic, Policy Table wildcard match on <= 3 fields, MatchList < 3, 3 < RegEx match < 6.

- High: Custom JSON operations, complex JavaScript code in Object Expression/Statement Expression, Policy Table wildcard match on > 3 fields, MatchLists >= 3, RegEx match >= 6.
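The criteria above can be read as a simple classifier. The following Python sketch is one possible reading. It assumes that any High criterion takes precedence over the Medium ones and that a project with none of the listed features is Low. It is not taken from the Policy design editor, and `PolicyProjectStats` and `complexity_level` are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class PolicyProjectStats:
    """Hypothetical summary of the features used in a Blockly policy project."""
    uses_loops: bool = False
    uses_json_operations: bool = False
    complex_js_expressions: bool = False
    max_wildcard_match_fields: int = 0
    match_lists: int = 0
    regex_matches: int = 0

def complexity_level(p: PolicyProjectStats) -> str:
    """Apply the High criteria first, then Medium; otherwise Low."""
    if (p.uses_json_operations or p.complex_js_expressions
            or p.max_wildcard_match_fields > 3
            or p.match_lists >= 3 or p.regex_matches >= 6):
        return "High"
    if (p.uses_loops or p.max_wildcard_match_fields > 0
            or p.match_lists > 0 or p.regex_matches > 3):
        return "Medium"
    return "Low"

print(complexity_level(PolicyProjectStats(uses_loops=True, regex_matches=6)))
```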

Table 3-47 PCF Configuration

| Name | Status |

|---|---|

| Bulwark Service | Enable |

| Binding Service | Enable |

| Subscriber State Variable (SSV) | Enable |

| Validate_user | Disable |

| Alternate Route | Disable |

| Audit Service | Enable |

| Enable Custom JSON | Enable |

Table 3-48 PCF Interfaces

| Feature Name | Status |

|---|---|

| N36 UDR query (N7/N15-Nudr) | Enable |

| N36 UDR subscription (N7/N15-Nudr) | Enable |

| UDR on-demand nrf discovery | Disable |

| CHF (SM-Nchf) | Enable |

| BSF (N7-Nbsf) | Enable |

| AMF on demand nrf discovery | NA |

| LDAP (Gx-LDAP) | NA |

| Sy (PCF N7-Sy) | NA |

Table 3-49 PCRF Interfaces

| Feature Name | Status |

|---|---|

| Sy (PCRF Gx-Sy) | NA |

| Sd (Gx-Sd) | NA |

| Gx UDR query (Gx-Nudr) | NA |

| Gx UDR subscription (Gx-Nudr) | NA |

| CHF enabled (AM) | NA |

| Usage Monitoring (Gx) | NA |

| Subscriber HTTP Notifier (Gx) | NA |

Table 3-50 Configuring Policy Helm Parameters

| Service Name | Policy Helm Configuration |

|---|---|

| Ingress Gateway | |

| Egress Gateway | |

Note:

The customized Policy parameter values are the same for Site1 and Site2.

Table 3-51 Configuring cnDBTier Helm Parameters

| Helm Parameter | Value |

|---|---|

| binlog_cache_size | 10485760 |

| ConnectCheckIntervalDelay | 500 |

| NoOfFragmentLogFiles | 32 |

| NoOfFragmentLogParts | 4 |

| MaxNoOfExecutionThreads | 11 |

| FragmentLogFileSize | 128M |

| binlogthreadstore.capacity | 5 |

| ndb_allow_copying_alter_table | ON |

Note:

The customized cnDBTier parameter values are the same for Site1 and Site2.

Table 3-52 Policy Microservices Resources

| Service Name | CPU Request Per Pod | CPU Limit Per Pod | Memory Request Per Pod (Gi) | Memory Limit Per Pod (Gi) | Min Replicas | Max Replicas | Request/Limit Istio CPU | Request/Limit Istio Memory |

|---|---|---|---|---|---|---|---|---|

| Appinfo | 1 | 1 | 0.5 | 1 | 2 | 2 | 2 | 2 |

| Audit Service | 2 | 2 | 4 | 4 | 2 | 2 | 2 | 2 |

| CM Service | 2 | 4 | 0.5 | 2 | 2 | 2 | 2 | 2 |

| Config Service | 4 | 4 | 0.5 | 2 | 2 | 2 | 2 | 2 |

| Egress Gateway | 4 | 4 | 6 | 6 | 2 | 6 | 2 | 2 |

| Ingress Gateway | 5 | 5 | 6 | 6 | 2 | 27 | 2.5 | 2 |

| NRF Client Management | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 2 |

| Diameter Gateway | 4 | 4 | 1 | 2 | 2 | 2 | 2 | 2 |

| Diameter Connector | 4 | 4 | 1 | 2 | 2 | 2 | 2 | 2 |

| AM Service | 8 | 8 | 1 | 4 | 0 | 0 | 2 | 2 |

| UE Service | 8 | 8 | 1 | 4 | 0 | 0 | 2 | 2 |

| NRF Client Discovery | 4 | 4 | 2 | 2 | 2 | 2 | 2 | 2 |

| Query Service | 1 | 2 | 1 | 1 | 2 | 2 | 2 | 2 |

| PCRF Core Service | 8 | 8 | 8 | 8 | 0 | 0 | 2 | 2 |

| Performance | 1 | 1 | 0.5 | 1 | 2 | 2 | 2 | 2 |

| PRE Service | 4 | 4 | 4 | 4 | 2 | 55 | 1.5 | 2 |

| SM Service | 7 | 7 | 10 | 10 | 2 | 76 | 2 | 2 |

| PDS Service | 7 | 7 | 8 | 8 | 2 | 21 | 2.5 | 4 |

| UDR Connector | 6 | 6 | 4 | 2 | 2 | 2 | 2 | 2 |

| CHF Connector | 6 | 6 | 4 | 4 | 2 | 2 | 2 | 2 |

| LDAP Gateway Service | 3 | 4 | 1 | 2 | 0 | 0 | 2 | 2 |

| Binding Service | 6 | 6 | 8 | 8 | 2 | 3 | 2.5 | 2 |

| SOAP Connector | 2 | 4 | 4 | 4 | 0 | 0 | 2 | 2 |

| Alternate Route Service | 2 | 2 | 2 | 4 | 2 | 2 | 2 | 2 |

| Bulwark Service | 8 | 8 | 6 | 6 | 2 | 19 | 2.5 | 2 |

Table 3-53 cnDBTier Microservices Resources:

| Service Name | CPU Request Per Pod | CPU Limit Per Pod | Memory Request Per Pod (Gi) | Memory Limit Per Pod (Gi) | Replicas |

|---|---|---|---|---|---|

| ndbappmysqld | 12 | 12 | 18 | 18 | 18 |

| ndbmgmd | 3 | 3 | 8 | 8 | 2 |

| ndbmtd | 10 | 10 | 132 | 132 | 10 |

| ndbmysqld | 4 | 4 | 54 | 54 | 12 |

Note:

Min Replica = Max Replica

3.2.3.2 CPU Utilization

Table 3-54 Policy Microservices Resource Utilization

| Service | CPU (X/Y) - Site 1 | CPU (X/Y) - Site 2 |

|---|---|---|

| ocpcf-occnp-alternate route | 0.10%/9.56% | 0.10%/9.97% |

| ocpcf-appinfo | 4.40%/25.78% | 4.50%/25.34% |

| ocpcf-bulwark | 17.55%/17.13% | 0.04%/14.53% |

| ocpcf-occnp-config-server | 6.17%/42.65% | 3.70%/40.19% |

| ocpcf-occnp-egress-gateway | 19.48%/21.97% | 0.04%/20.34% |

| ocpcf-occnp-ingress-gateway | 16.50%/32.03% | 0.54%/25.63% |

| ocpcf-occnp-nrf-client-nfdiscovery | 7.94%/51.84% | 0.07%/38.38% |

| ocpcf-occnp-nrf-client-nfmanagement | 1.75%/50.29% | 0.35%/48.73% |

| ocpcf-oc-binding | 12.36%/17.44% | 0.05%/12.41% |

| ocpcf-occnp-chf-connector | 11.87%/22.10% | 0.05%/18.97% |

| ocpcf-occnp-udr-connector | 14.83%/23.34% | 0.06%/17.67% |

| ocpcf-ocpm-audit-service | 0.22%/16.35% | 0.10%/12.41% |

| ocpcf-ocpm-policyds | 21.13%/22.16% | 0.03%/18.47% |

| ocpcf-ocpm-pre | 21.64%/47.43% | 0.21%/12.82% |

| ocpcf-pcf-smservice | 22.38%/25.81% | 0.04%/18.15% |

| ocpcf-ocpm-queryservice | 0.05%/23.54% | 0.05%/24.12% |

Table 3-55 cnDBTier Services Resource Utilization

| Name | CPU (X/Y) - Site1 | CPU (X/Y) - Site2 |

|---|---|---|

| ndbappmysqld | 28.57%/41.04% | 0.31%/32.17% |

| ndbmgmd | 0.22%/25.38% | 0.22%/25.41% |

| ndbmtd | 55.88%/46.89% | 9.32%/46.90% |

3.2.4 Test Scenario: PCF SM Call Model on Two-Site GeoRedundant setup, with each site handling 30K TPS traffic and ASM Enabled

This test run benchmarks the performance and capacity of the Policy SM data call model deployed in PCF mode on a two-site georedundant setup. The PCF application handles a total (Ingress + Egress) traffic of 60K TPS, with each site handling 30K TPS. For this setup, Aspen Service Mesh (ASM) was enabled.

In this test setup, georedundant (GR) mode was enabled in cnDBTier and configured for three-channel replication.

3.2.4.1 Test Case and Setup Details

Table 3-57 Test Case Parameters

| Parameters | Values |

|---|---|

| Call Rate (Ingress + Egress) | 30K TPS on Site1, 30K TPS on Site2 |

| ASM | Enable |

| Traffic Ratio | Internet: 1 SM Create : 74 SM Updates : 1 SM Delete; IMS Rx: 1 Create : 1 Update : 1 STR |

| Active Subscribers | 393590 (Site1) + 393589 (Site2) = 787179 |

Policy Project Details:

The Policy design editor, based on the Blockly interface, was used to set up the Policy project for each of the Policy services. The complexity level of the Policy project configured for this run was High.

- Low - No usage of loops in Blockly logic, no JSON operations, and no complex JavaScript code in Object Expression/Statement Expression.

- Medium - Usage of loops in Blockly logic, Policy Table wildcard match <= 3 fields, MatchList < 3, and 3 < RegEx match < 6.

- High - Custom JSON operations, complex JavaScript code in Object Expression/Statement Expression, Policy Table wildcard match > 3 fields, MatchLists >= 3, and RegEx match >= 6.

Table 3-58 Call Model

| Service Name | DNN1 SM Service Inbound (MPS) | DNN1 SM Service Outbound (MPS) | DNN2 SM Service Inbound (MPS) | DNN2 SM Service Outbound (MPS) | Rx Interface Inbound (MPS) | Rx Interface Outbound (MPS) | Total MPS |

|---|---|---|---|---|---|---|---|

| Ingress Gateway | 49000 | 49000 | 1520 | 1520 | 0 | 0 | 101040 |

| SM Service | 49654 | 209036 | 1526 | 10739 | 2533 | 7094 | 280590 |

| PRE Service | 49000 | 0 | 1520 | 0 | 1520 | 0 | 52040 |

| PDS Service | 58114 | 3924 | 3623 | 525 | 3040 | 0 | 69230 |

| Egress Gateway | 4578 | 4578 | 1545 | 1545 | 1520 | 1520 | 15290 |

| NRF Discovery | 654 | 654 | 6 | 6 | 0 | 0 | 1320 |

| UDR Connector | 1962 | 2616 | 513 | 519 | 0 | 0 | 5610 |

| CHF Connector | 1308 | 1308 | 6 | 6 | 0 | 0 | 2630 |

| Binding Service | 1307 | 0 | 2027 | 1014 | 0 | 0 | 4350 |

| Diameter Connector | 0 | 0 | 507 | 507 | 1520 | 2533 | 5070 |

| Diameter Gateway | 0 | 0 | 507 | 507 | 1520 | 1520 | 4060 |

| Bulwark Service | 99308 | 0 | 3052 | 0 | 1013 | 0 | 103380 |
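The Total MPS column can be cross-checked against the six inbound/outbound columns. The sketch below transcribes Table 3-58 and recomputes each total, assuming the published value is the row sum rounded up to the next multiple of 10. That rounding rule is our inference from the numbers, not something the table states, and `total_mps`/`check_table` are hypothetical helpers.

```python
# Rows transcribed from Table 3-58: six inbound/outbound MPS values, then the published Total MPS.
TABLE_3_58 = {
    "Ingress Gateway": (49000, 49000, 1520, 1520, 0, 0, 101040),
    "SM Service": (49654, 209036, 1526, 10739, 2533, 7094, 280590),
    "PRE Service": (49000, 0, 1520, 0, 1520, 0, 52040),
    "PDS Service": (58114, 3924, 3623, 525, 3040, 0, 69230),
    "Egress Gateway": (4578, 4578, 1545, 1545, 1520, 1520, 15290),
    "NRF Discovery": (654, 654, 6, 6, 0, 0, 1320),
    "UDR Connector": (1962, 2616, 513, 519, 0, 0, 5610),
    "CHF Connector": (1308, 1308, 6, 6, 0, 0, 2630),
    "Binding Service": (1307, 0, 2027, 1014, 0, 0, 4350),
    "Diameter Connector": (0, 0, 507, 507, 1520, 2533, 5070),
    "Diameter Gateway": (0, 0, 507, 507, 1520, 1520, 4060),
    "Bulwark Service": (99308, 0, 3052, 0, 1013, 0, 103380),
}


def total_mps(counts, step=10):
    """Sum inbound and outbound MPS, rounded up to the next multiple of `step` (assumed rule)."""
    raw = sum(counts)
    return -(-raw // step) * step  # integer ceiling division, avoids floating point


def check_table(table):
    """Return {service: (raw sum, published total)} for rows that do not match the rule."""
    mismatches = {}
    for service, row in table.items():
        *counts, published = row
        if total_mps(counts) != published:
            mismatches[service] = (sum(counts), published)
    return mismatches


if __name__ == "__main__":
    for service, row in TABLE_3_58.items():
        *counts, published = row
        print(f"{service:<20} raw={sum(counts):>7} rounded={total_mps(counts):>7} published={published:>7}")
    print("mismatches:", check_table(TABLE_3_58) or "none")
```

Every row matches under this rule; for example, the SM Service raw sum of 280582 rounds up to the published 280590.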

Table 3-59 PCF Configuration

| Name | Status |

|---|---|

| Bulwark Service | Enable |

| Binding Service | Enable |

| Subscriber State Variable (SSV) | Enable |

| Validate_user | Disable |

| Alternate Route | Disable |

| Audit Service | Enable |

| Enable Custom JSON | Enable |

Table 3-60 PCF Interfaces

| Feature Name | Status |

|---|---|

| N36 UDR query (N7/N15-Nudr) | Enable |

| N36 UDR subscription (N7/N15-Nudr) | Enable |

| UDR on-demand nrf discovery | Disable |

| CHF (SM-Nchf) | Enable |

| BSF (N7-Nbsf) | Enable |

| AMF on demand nrf discovery | NA |

| LDAP (Gx-LDAP) | NA |

| Sy (PCF N7-Sy) | NA |

Table 3-61 PCRF Interfaces

| Feature Name | Status |

|---|---|

| Sy (PCRF Gx-Sy) | NA |

| Sd (Gx-Sd) | NA |

| Gx UDR query (Gx-Nudr) | NA |

| Gx UDR subscription (Gx-Nudr) | NA |

| CHF enabled (AM) | NA |

| Usage Monitoring (Gx) | NA |

| Subscriber HTTP Notifier (Gx) | NA |

Table 3-62 Configuring Policy Helm Parameters

| Service Name | Policy Helm Configuration |

|---|---|

| Ingress Gateway | |

| Egress Gateway | |

Note:

The customized Policy parameter values remain the same for both site1 and site2.

Table 3-63 Configuring cnDBTier Helm Parameters

| Helm Parameter | Value |

|---|---|

| binlog_cache_size | 10485760 |

| ConnectCheckIntervalDelay | 500 |

| NoOfFragmentLogFiles | 32 |

| NoOfFragmentLogParts | 4 |

| MaxNoOfExecutionThreads | 11 |

| FragmentLogFileSize | 128M |

| binlogthreadstore.capacity | 5 |

| ndb_allow_copying_alter_table | ON |
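The redo log parameters in Table 3-63 combine into a per-data-node redo log size. The sketch below assumes that total redo log space equals NoOfFragmentLogParts × NoOfFragmentLogFiles × FragmentLogFileSize, which is our reading of the MySQL NDB Cluster configuration reference; confirm it against the NDB version bundled with your cnDBTier release. The helpers are hypothetical and not part of any cnDBTier tooling.

```python
import re

MIB_PER_UNIT = {"M": 1, "G": 1024}  # only the size suffixes used in these tables


def parse_size_mib(value):
    """Convert an NDB size string such as '128M' or '1G' to MiB."""
    match = re.fullmatch(r"(\d+)([MG])", value.strip().upper())
    if match is None:
        raise ValueError(f"unsupported size string: {value!r}")
    number, unit = match.groups()
    return int(number) * MIB_PER_UNIT[unit]


def redo_log_mib(log_parts, log_files, log_file_size):
    """Assumed formula: log parts x files per part x size of each file, per data node."""
    return log_parts * log_files * parse_size_mib(log_file_size)


if __name__ == "__main__":
    # Table 3-63: NoOfFragmentLogParts=4, NoOfFragmentLogFiles=32, FragmentLogFileSize=128M
    size = redo_log_mib(4, 32, "128M")
    print(f"Table 3-63 redo log per data node: {size} MiB ({size // 1024} GiB)")
```

Applying the same helper to Table 3-74 (4 parts, 64 files, 32M) gives 8192 MiB, so that run used half the redo space, split into more, smaller files.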

Note:

The customized cnDBTier parameter values remain the same for both site1 and site2.

Table 3-64 Policy Microservices Resources

| Service Name | CPU Request Per Pod | CPU Limit Per Pod | Memory Request Per Pod (Gi) | Memory Limit Per Pod (Gi) | Min Replicas | Max Replicas | Request/Limit Istio CPU | Request/Limit Istio Memory |

|---|---|---|---|---|---|---|---|---|

| Appinfo | 1 | 1 | 0.5 | 1 | 2 | 2 | 2 | 2 |

| Audit Service | 2 | 2 | 4 | 4 | 2 | 2 | 2 | 2 |

| CM Service | 2 | 4 | 0.5 | 2 | 2 | 2 | 2 | 2 |

| Config Service | 4 | 4 | 0.5 | 2 | 2 | 2 | 2 | 2 |

| Egress Gateway | 4 | 4 | 6 | 6 | 2 | 6 | 2 | 2 |

| Ingress Gateway | 5 | 5 | 6 | 6 | 2 | 27 | 2.5 | 2 |

| NRF Client Management | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 2 |

| Diameter Gateway | 4 | 4 | 1 | 2 | 2 | 2 | 2 | 2 |

| Diameter Connector | 4 | 4 | 1 | 2 | 2 | 2 | 2 | 2 |

| AM Service | 8 | 8 | 1 | 4 | 0 | 0 | 2 | 2 |

| UE Service | 8 | 8 | 1 | 4 | 0 | 0 | 2 | 2 |

| NRF Client Discovery | 4 | 4 | 2 | 2 | 2 | 2 | 2 | 2 |

| Query Service | 1 | 2 | 1 | 1 | 2 | 2 | 2 | 2 |

| PCRF Core Service | 8 | 8 | 8 | 8 | 0 | 0 | 2 | 2 |

| Performance | 1 | 1 | 0.5 | 1 | 2 | 2 | 2 | 2 |

| PRE Service | 4 | 4 | 4 | 4 | 2 | 55 | 1.5 | 2 |

| SM Service | 7 | 7 | 10 | 10 | 2 | 76 | 2.5 | 2 |

| PDS Service | 7 | 7 | 8 | 8 | 2 | 21 | 2.5 | 4 |

| UDR Connector | 6 | 6 | 4 | 2 | 2 | 2 | 2 | 2 |

| CHF Connector | 6 | 6 | 4 | 4 | 2 | 2 | 2 | 2 |

| LDAP Gateway Service | 3 | 4 | 1 | 2 | 0 | 0 | 2 | 2 |

| Binding Service | 6 | 6 | 8 | 8 | 2 | 3 | 2.5 | 2 |

| SOAP Connector | 2 | 4 | 4 | 4 | 0 | 0 | 2 | 2 |

| Alternate Route Service | 2 | 2 | 2 | 4 | 2 | 2 | 2 | 2 |

| Bulwark Service | 8 | 8 | 6 | 6 | 2 | 19 | 2.5 | 2 |

Table 3-65 cnDBTier Microservices Resources:

| Service Name | CPU Request Per Pod | CPU Limit Per Pod | Memory Request Per Pod (Gi) | Memory Limit Per Pod (Gi) | Replicas |

|---|---|---|---|---|---|

| ndbappmysqld | 12 | 12 | 18 | 18 | 18 |

| ndbmgmd | 3 | 3 | 8 | 8 | 2 |

| ndbmtd | 10 | 10 | 132 | 132 | 10 |

| ndbmysqld | 4 | 4 | 54 | 54 | 12 |

Note:

Min Replica = Max Replica

3.2.4.2 CPU Utilization

Table 3-66 Policy Microservices Resource Utilization

| Service | CPU (X/Y) - Site1 | CPU (X/Y) - Site2 |

|---|---|---|

| ocpcf-alternate-route | 0%/80% | 0%/80% |

| ocpcf-appinfo | 1%/80% | 1%/80% |

| ocpcf-bulwark | 22%/60% | 23%/60% |

| ocpcf-occnp-config-server | 9%/80% | 10%/80% |

| ocpcf-oc-diam-connector | 8%/40% | 8%/40% |

| ocpcf-occnp-egress-gateway | 11%/80% | 10%/80% |

| ocpcf-occnp-ingress-gateway | 19%/80% | 24%/80% |

| ocpcf-occnp-nrf-client-nfdiscovery | 5%/80% | 5%/80% |

| ocpcf-occnp-nrf-client-nfmanagement | 0%/80% | 0%/80% |

| ocpcf-oc-binding | 17%/60% | 17%/60% |

| ocpcf-occnp-chf-connector | 7%/50% | 7%/50% |

| ocpcf-occnp-udr-connector | 15%/50% | 14%/50% |

| ocpcf-ocpm-audit-service | 0%/50% | 0%/50% |

| ocpcf-ocpm-policyds | 19%/60% | 19%/60% |

| ocpcf-ocpm-pre | 26%/80% | 27%/80% |

| ocpcf-pcf-amservice | 0%/30% | 0%/30% |

| ocpcf-pcf-ueservice | 0%/30% | 0%/30% |

| ocpcf-pcf-smservice | 25%/50% | 25%/50% |

| ocpcf-ocpm-queryservice | 0%/80% | 0%/80% |

Table 3-67 cnDBTier Services Resource Utilization

| Name | CPU (X/Y) - Site1 | CPU (X/Y) - Site2 |

|---|---|---|

| ndbappmysqld | 42%/80% | 37%/80% |

| ndbmgmd | 0%/80% | 0%/80% |

| ndbmtd | 32%/80% | 31%/80% |

| ndbmysqld | 4%/80% | 4%/80% |

3.2.5 Test Scenario: PCF SM Call Model on Two-Site GeoRedundant setup, with 41K TPS Traffic on Site-1 and ASM Enabled

This test run benchmarks the performance and capacity of the Policy SM data call model deployed in PCF mode on a two-site georedundant setup. The PCF application handles a total (Ingress + Egress + Diameter) traffic of 41K TPS on Site-1. For this setup, Aspen Service Mesh (ASM) was enabled.

3.2.5.1 Test Case and Setup Details

Table 3-69 Test Case Parameters

| Parameters | Values |

|---|---|

| Call Rate (Ingress + Egress + Diameter) | 41K TPS on Site-1 |

| ASM | Enable |

| Traffic Ratio | |

| Active Subscribers | 10000000 subscribers and 20000000 sessions |

Policy Project Details:

The Policy design editor, based on the Blockly interface, was used to set up the Policy project for each of the Policy services. The complexity level of the Policy project configured for this run was High.

- Low - No usage of loops in Blockly logic, no JSON operations, and no complex JavaScript code in Object Expression/Statement Expression.

- Medium - Usage of loops in Blockly logic, Policy Table wildcard match <= 3 fields, MatchList < 3, and 3 < RegEx match < 6.

- High - Custom JSON operations, complex JavaScript code in Object Expression/Statement Expression, Policy Table wildcard match > 3 fields, MatchLists >= 3, and RegEx match >= 6.

Table 3-70 PCF Configuration

| Name | Status |

|---|---|

| Bulwark Service | Enable |

| Binding Service | Enable |

| Subscriber State Variable (SSV) | Enable |

| Validate_user | Disable |

| Alternate Route | Enable |

| Audit Service | Enable |

| Enable Custom JSON | Enable |

Table 3-71 PCF Interfaces

| Feature Name | Status |

|---|---|

| N36 UDR query (N7/N15-Nudr) | Enable |

| N36 UDR subscription (N7/N15-Nudr) | Enable |

| UDR on-demand nrf discovery | Disable |

| CHF (SM-Nchf) | Enable |

| BSF (N7-Nbsf) | Enable |

| AMF on demand nrf discovery | NA |

| LDAP (Gx-LDAP) | NA |

| Sy (PCF N7-Sy) | NA |

Table 3-72 PCRF Interfaces

| Feature Name | Status |

|---|---|

| Sy (PCRF Gx-Sy) | NA |

| Sd (Gx-Sd) | NA |

| Gx UDR query (Gx-Nudr) | NA |

| Gx UDR subscription (Gx-Nudr) | NA |

| CHF enabled (AM) | NA |

| Usage Monitoring (Gx) | NA |

| Subscriber HTTP Notifier (Gx) | NA |

Table 3-73 Configuring Policy Helm Parameters

| Service Name | Policy Helm Configuration |

|---|---|

| Ingress Gateway | |

| Egress Gateway | |

Note:

The customized Policy parameter values remain the same for both site1 and site2.

Table 3-74 Configuring cnDBTier Helm Parameters

| Helm Parameter | Value |

|---|---|

| global.additionalndbconfigurations.mysqld.binlog_cache_size | 10485760 |

| global.additionalndbconfigurations.ndb.ConnectCheckIntervalDelay | 0 |

| global.additionalndbconfigurations.ndb.NoOfFragmentLogFiles | 64 |

| global.additionalndbconfigurations.ndb.NoOfFragmentLogParts | 4 |

| global.additionalndbconfigurations.ndb.MaxNoOfExecutionThreads | 11 |

| global.additionalndbconfigurations.ndb.FragmentLogFileSize | 32M |

| db-monitor-svc.binlogthreadstore.capacity | 5 |

| global.additionalndbconfigurations.mysqld.ndb_allow_copying_alter_table | ON |

| global.additionalndbconfigurations.ndb.HeartbeatIntervalDbDb | 1250 |
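Table 3-74 lists the cnDBTier overrides as dotted Helm paths. Helm's `--set` flag treats each dot as one level of nesting, so the same overrides can also be written as a nested values structure. The sketch below performs that expansion and prints JSON, which is also valid YAML. The helper `to_nested_values` is hypothetical, and whether the cnDBTier chart accepts exactly these keys should be confirmed against the custom values file shipped with your release.

```python
import json

# Dotted Helm parameter paths and values transcribed from Table 3-74.
TABLE_3_74 = {
    "global.additionalndbconfigurations.mysqld.binlog_cache_size": 10485760,
    "global.additionalndbconfigurations.ndb.ConnectCheckIntervalDelay": 0,
    "global.additionalndbconfigurations.ndb.NoOfFragmentLogFiles": 64,
    "global.additionalndbconfigurations.ndb.NoOfFragmentLogParts": 4,
    "global.additionalndbconfigurations.ndb.MaxNoOfExecutionThreads": 11,
    "global.additionalndbconfigurations.ndb.FragmentLogFileSize": "32M",
    "db-monitor-svc.binlogthreadstore.capacity": 5,
    "global.additionalndbconfigurations.mysqld.ndb_allow_copying_alter_table": "ON",
    "global.additionalndbconfigurations.ndb.HeartbeatIntervalDbDb": 1250,
}


def to_nested_values(flat):
    """Expand dotted keys into nested dictionaries, one level per dot."""
    nested = {}
    for dotted_key, value in flat.items():
        *parents, leaf = dotted_key.split(".")
        node = nested
        for part in parents:
            node = node.setdefault(part, {})  # create intermediate levels on first use
        node[leaf] = value
    return nested


if __name__ == "__main__":
    print(json.dumps(to_nested_values(TABLE_3_74), indent=2))
```

Keeping `ON` as a quoted string is deliberate: YAML 1.1 parsers can read a bare `ON` as the boolean `true`.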

Note:

The customized cnDBTier parameter values remain the same for both site1 and site2.

Table 3-75 Policy Microservices Resources

| Service Name | CPU Limit Per Pod | CPU Request Per Pod | Memory Limit Per Pod (Gi) | Memory Request Per Pod (Gi) | Min Replicas | Max Replicas |

|---|---|---|---|---|---|---|

| Appinfo | 2 | 2 | 1 | 0.5 | 2 | 2 |

| Appinfo-Istio | 2 | 2 | 2 | 2 | 2 | 2 |

| Audit Service | 2 | 2 | 4 | 4 | 2 | 2 |

| Audit Service-Istio | 2 | 2 | 2 | 2 | 2 | 2 |

| CM Service | 4 | 2 | 2 | 0.5 | 2 | 2 |

| CM Service-Istio | 2 | 2 | 2 | 2 | 2 | 2 |

| Config Service | 4 | 4 | 2 | 0.5 | 2 | 2 |

| Config Service-Istio | 2 | 2 | 2 | 2 | 2 | 2 |

| Egress Gateway | 8 | 8 | 6 | 6 | 9 | 9 |

| Egress Gateway-Istio | 4 | 4 | 2 | 2 | 9 | 9 |

| Ingress Gateway | 5 | 5 | 6 | 6 | 29 | 29 |

| Ingress Gateway-Istio | 2.5 | 2.5 | 2 | 2 | 29 | 29 |

| Nrf Client Management | 1 | 1 | 1 | 1 | 2 | 2 |

| Nrf Client Management-Istio | 2 | 2 | 2 | 2 | 2 | 2 |

| Diameter Gateway | 4 | 4 | 2 | 1 | 2 | 2 |

| Diameter Gateway-Istio | 2 | 2 | 2 | 2 | 2 | 2 |

| Diameter Connector | 4 | 4 | 2 | 1 | 2 | 2 |

| Diameter Connector-Istio | 2 | 2 | 2 | 2 | 2 | 2 |

| Nrf Client Discovery | 4 | 4 | 2 | 2 | 2 | 2 |

| Nrf Client Discovery-Istio | 2 | 2 | 2 | 2 | 2 | 2 |

| Query Service | 2 | 1 | 1 | 1 | 2 | 2 |

| Query Service-Istio | 2 | 2 | 2 | 2 | 2 | 2 |

| Performance | 1 | 1 | 1 | 0.5 | 2 | 2 |

| PRE Service | 4 | 4 | 4 | 4 | 39 | 39 |

| PRE Service-Istio | 1.5 | 1.5 | 2 | 2 | 39 | 39 |

| SM Service | 7 | 7 | 10 | 10 | 64 | 64 |

| SM Service-Istio | 2.5 | 2.5 | 2 | 2 | 64 | 64 |

| UDR Connector | 6 | 6 | 4 | 4 | 8 | 8 |

| UDR Connector-Istio | 2 | 2 | 2 | 2 | 8 | 8 |

| CHF Connector | 6 | 6 | 4 | 4 | 4 | 4 |

| CHF Connector-Istio | 2 | 2 | 2 | 2 | 4 | 4 |

| Binding Service | 6 | 6 | 8 | 8 | 11 | 11 |

| Binding Service-Istio | 2.5 | 2.5 | 2 | 2 | 11 | 11 |

| Alternate Route Service | 2 | 2 | 4 | 2 | 2 | 2 |

| Alternate Route Service-Istio | 2 | 2 | 2 | 2 | 2 | 2 |

| Bulwark Service | 8 | 8 | 6 | 6 | 15 | 15 |

| Bulwark Service-Istio | 2.5 | 2.5 | 2 | 2 | 15 | 15 |
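With ASM enabled, every pod in Table 3-75 carries an Istio sidecar whose CPU request counts toward scheduling alongside the application container. A rough way to size the cluster is to add the two per-pod requests and multiply by the replica count. The sketch below does this for the larger services only (the subset is our choice for brevity); the remaining rows can be added the same way.

```python
# (application CPU request, Istio sidecar CPU request, replicas), transcribed from Table 3-75.
TABLE_3_75_SUBSET = {
    "Ingress Gateway": (5, 2.5, 29),
    "Egress Gateway": (8, 4, 9),
    "SM Service": (7, 2.5, 64),
    "PRE Service": (4, 1.5, 39),
    "Binding Service": (6, 2.5, 11),
    "Bulwark Service": (8, 2.5, 15),
    "UDR Connector": (6, 2, 8),
    "CHF Connector": (6, 2, 4),
}


def cpu_by_container(services):
    """Return (application cores, sidecar cores) requested across all replicas."""
    app_total = sum(app * replicas for app, _, replicas in services.values())
    sidecar_total = sum(sidecar * replicas for _, sidecar, replicas in services.values())
    return app_total, sidecar_total


if __name__ == "__main__":
    app, sidecar = cpu_by_container(TABLE_3_75_SUBSET)
    total = app + sidecar
    print(f"application: {app} cores, Istio sidecars: {sidecar} cores, total: {total} cores")
    print(f"sidecar share of requested CPU: {sidecar / total:.1%}")
```

Because Min Replicas equals Max Replicas in this table, these figures are fixed reservations rather than autoscaling ceilings.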

Table 3-76 cnDBTier Microservices Resources:

| Service Name | CPU Request Per Pod | CPU Limit Per Pod | Memory Request Per Pod (Gi) | Memory Limit Per Pod (Gi) | Replicas |

|---|---|---|---|---|---|

| ndbappmysqld | 12 | 12 | 18 | 18 | 18 |

| ndbappmysqld-istio | 3 | 3 | 2 | 2 | 18 |

| ndbmgmd | 3 | 3 | 8 | 8 | 2 |

| ndbmgmd-istio | 1 | 1 | 2 | 2 | 2 |

| ndbmtd | 10 | 10 | 132 | 132 | 10 |

| ndbmtd-istio | 4 | 4 | 2 | 2 | 10 |

| ndbmysqld | 4 | 4 | 24 | 24 | 0 |

| ndbmysqld-istio | 5 | 5 | 4 | 4 | 12 |

Note:

Min Replica = Max Replica

3.2.5.2 CPU Utilization

Table 3-77 Policy Microservices Resource Utilization

| Service | CPU (X/Y) - Site 1 | CPU (X/Y) - Site 2 |

|---|---|---|

| ocpcf-occnp-alternate route | 32.90%/26.37% | None |

| ocpcf-appinfo | 2.45%/26.51% | None |

| ocpcf-bulwark | 25.43%/23.64% | None |

| ocpcf-occnp-config-server | 6.39%/45.14% | None |

| ocpcf-occnp-egress-gateway | 15.74%/34.63% | None |

| ocpcf-occnp-ingress-gateway | 19.09%/44.05% | None |

| ocpcf-occnp-nrf-client-nfdiscovery | 3.78%/67.32% | None |

| ocpcf-occnp-nrf-client-nfmanagement | 0.35%/49.46% | None |

| ocpcf-oc-binding | 14.30%/39.18% | None |

| ocpcf-occnp-chf-connector | 12.21%/22.02% | None |

| ocpcf-occnp-udr-connector | 13.64%/30.17% | None |

| ocpcf-ocpm-audit-service | 1.20%/22.68% | None |

| ocpcf-ocpm-policyds | 23.23%/51.93% | None |

| ocpcf-ocpm-pre | 23.32%/45.91% | None |

| ocpcf-pcf-smservice | 27.51%/56.20% | None |

| ocpcf-ocpm-queryservice | 0.05%/23.54% | None |

Table 3-78 cnDBTier Services Resource Utilization

| Name | CPU (X/Y) - Site1 | CPU (X/Y) - Site2 |

|---|---|---|

| ndbappmysqld | 33.69%/39.51% | 0.16%/24.91% |

| ndbmgmd | 0.23%/25.37% | 0.28%/25.40% |

| ndbmtd | 52.08%/66.69% | 8.63%/66.61% |

3.2.6 Test Scenario: PCF AM/UE Call Model on Two-Site Georedundant Setup, with Each Site Handling 30K TPS Traffic and ASM Enabled

This test run benchmarks the performance and capacity of the Policy AM/UE data call model deployed in PCF mode. The PCF application handles a total (Ingress + Egress) traffic of 60K TPS, with each site handling 30K TPS. For this setup, Aspen Service Mesh (ASM) was enabled between Policy services and disabled between Policy services and cnDBTier data services. Application data compression was enabled at the AM, UE, and PDS services. The Multithreaded Applier (MTA) feature, which improves peak replication throughput, was enabled in cnDBTier.

3.2.6.1 Test Case and Setup Details

Test Case Parameters

The following table describes the test case parameters and their values:

| Parameters | Values |

|---|---|

| Call Rate (Ingress + Egress) | 60K TPS (30K on site-1 and 30K on site-2) |

| ASM | Enable |

| Traffic Ratio | AM: 1 Create : 0 Update : 1 Delete; UE: 1 Create : 0 Update : 1 Delete |

| Active User Count | 12000000 |

Project Details

The Policy Design editor, based on the Blockly interface, was used to set up the Policy project for each of the Policy services. The complexity level of the Policy project configured for this run was High.

Complexity Level Definition:

- Low - No usage of loops in Blockly logic, no JSON operations, and no complex JavaScript code in object expression/statement expression.

- Medium - Usage of loops in Blockly logic, Policy table wildcard match <= 3 fields, MatchList < 3, and 3 < RegEx match < 6.

- High - Custom JSON operations, complex JavaScript code in object expression/statement expression, Policy table wildcard match > 3 fields, MatchLists >= 3, and RegEx match >= 6.

Call Model Data

Table 3-80 Traffic distribution

| Service | Site 1 Ingress Gateway | Site 1 Egress Gateway | Site 1 Total Ingress/Egress Traffic | Site 2 Ingress Gateway | Site 2 Egress Gateway | Site 2 Total Ingress/Egress Traffic |

|---|---|---|---|---|---|---|

| UE service | 3157 | 10953 | 14109 | 3036 | 10579 | 13615 |

| AM service | 3158 | 10953 | 14111 | 3078 | 10579 | 13657 |

| Total | | | 28220 | | | 27271 |

Policy Configurations

The following Policy configurations were enabled or disabled for this call flow:

Table 3-81 Policy microservices configuration

| Name | Status |

|---|---|

| Bulwark | Enabled |

| Binding | Disabled |

| Subscriber State Variable (SSV) | Enabled |

| Validate_user | Disabled |

| Alternate Route | Disabled |

| Audit | Enabled |

| Compression (Binding & SM Service) | Enabled |

| SYSTEM.COLLISION.DETECTION | Enabled |

The following Policy interfaces were enabled or disabled for this call flow:

Table 3-82 Policy interfaces

| Feature Name | Status |

|---|---|

| N36 UDR query (N7/N15-Nudr) | Enabled |

| N36 UDR subscription (N7/N15-Nudr) | Enabled |

| UDR on-demand nrf discovery | Disabled |

| CHF (Nchf) | Enabled |

| BSF (N7-Nbsf) | Enabled |

| AMF on demand nrf discovery | NA |

| LDAP (Gx-LDAP) | NA |

| Subscriber HTTP Notifier (Gx) | NA |

Table 3-83 PCRF interfaces

| Feature Name | Status |

|---|---|

| Sy (PCRF Gx-Sy) | NA |

| Sd (Gx-Sd) | NA |

| Gx UDR query (Gx-Nudr) | NA |

| Gx UDR subscription (Gx-Nudr) | NA |

| CHF enabled (AM) | NA |

| Usage Monitoring (Gx) | NA |

| Subscriber HTTP Notifier (Gx) | NA |

Configuring Policy Helm Parameters

The following Policy optimization parameters were configured for this run:

Table 3-84 Optimization parameters for Policy services

| Service | Policy Helm Configurations |

|---|---|

| policyds | |

| UE | |

| INGRESS | |

| EGRESS | |

Policy Microservices Resources

Table 3-85 Policy microservices Resource allocation for Site1

| Service Name | CPU Resource per Container (Limit) | CPU Resource per Container (Request) | Memory Resource per Container (Limit) | Memory Resource per Container (Request) | Replica Count | Request/Limit Istio CPU | Request/Limit Istio Memory (Gi) |

|---|---|---|---|---|---|---|---|

| Appinfo | 1 | 1 | 1 Gi | 512Mi | 2 | 2 | 2 Gi |

| Audit Service | 2 | 2 | 4 Gi | 4 Gi | 2 | 2 | 2 Gi |

| CM Service | 4 | 4 | 2 Gi | 2 Gi | 2 | 2 | 2 Gi |

| Config Service | 4 | 2 | 2Gi | 2Gi | 2 | 2 | 2 Gi |

| Egress Gateway | 2 | 2 | 6Gi | 6Gi | 27 | 4 | 2 Gi |

| Ingress Gateway | 5 | 5 | 6Gi | 6Gi | 8 | 2500m | 2Gi |

| NRF Client NF Discovery | 6 | 6 | 10Gi | 10Gi | 9 | 2 | 2Gi |

| NRF Client Management | 1 | 1 | 1Gi | 1Gi | 1 | 2 | 2Gi |

| AM Service | 6 | 6 | 10Gi | 10Gi | 12 | 3 | 2Gi |

| UE Service | 8 | 8 | 2Gi | 2Gi | 20 | 3 | 1Gi |

| Query Service | 2 | 1 | 1Gi | 1Gi | 2 | | |

| Performance | 1 | 1 | 1Gi | 512Mi | 2 | | |

| PRE | 4 | 4 | 4Gi | 4Gi | 7 | 1500m | 2Gi |

| SM Service | 1 | 1 | 1Gi | 1Gi | 1 | 3 | 2Gi |

| PDS | 7 | 7 | 8Gi | 8Gi | 24 | 3 | 4 Gi |

| UDR Connector | 4 | 4 | 4Gi | 4Gi | 20 | 2 | 2Gi |

| CHF Connector/ User Service | 6 | 6 | 4Gi | 4Gi | 8 | 2 | 2Gi |

| Alternate Route Service | 2 | 2 | 4Gi | 2Gi | 1 | 2 | 2Gi |

| Bulwark Service | 8 | 8 | 4Gi | 4Gi | 7 | 3 | 4Gi |
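The resource tables for this scenario mix several spellings of the same units: `2500m` and `2.5` CPU, `512Mi` and `0.5` Gi, `1 Gi` and `1Gi`. The sketch below normalizes them using the Kubernetes resource-quantity suffixes (`m` = 1/1000, `Ki`/`Mi`/`Gi` = powers of 1024). It handles only the suffixes that appear in these tables and is not a complete implementation of the Kubernetes quantity grammar; the space in `1 Gi` is typographic and would be rejected in a real manifest.

```python
from decimal import Decimal

# Suffix multipliers from Kubernetes resource-quantity notation (subset used in these tables).
SUFFIX_MULTIPLIERS = {
    "Ki": Decimal(2**10),
    "Mi": Decimal(2**20),
    "Gi": Decimal(2**30),
    "m": Decimal("0.001"),
}


def parse_quantity(text):
    """Parse '2500m', '512Mi', '1 Gi' or '2.5' into a Decimal in base units (cores or bytes)."""
    compact = text.replace(" ", "")
    for suffix, multiplier in SUFFIX_MULTIPLIERS.items():
        if compact.endswith(suffix):
            return Decimal(compact[: -len(suffix)]) * multiplier
    return Decimal(compact)  # plain number, no suffix


def to_gib(text):
    """Express a memory quantity in GiB."""
    return parse_quantity(text) / SUFFIX_MULTIPLIERS["Gi"]


if __name__ == "__main__":
    for cpu in ("2500m", "2.5", "1500m"):
        print(f"CPU {cpu!r} -> {parse_quantity(cpu)} cores")
    for memory in ("512Mi", "1 Gi", "10Gi"):
        print(f"memory {memory!r} -> {to_gib(memory)} GiB")
```

Under the same rules, a memory value written as `500m`, as in the Config Service row of Table 3-86, would mean half a byte, so that entry is worth checking against the deployed values file.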

Table 3-86 Policy microservices Resource allocation for site2

| Service Name | CPU Resource per Container (Limit) | CPU Resource per Container (Request) | Memory Resource per Container (Limit) | Memory Resource per Container (Request) | Replica Count | Request/Limit Istio CPU | Request/Limit Istio Memory (Gi) |

|---|---|---|---|---|---|---|---|

| Appinfo | 1 | 1 | 1 Gi | 512Mi | 2 | 2 | 2 Gi |

| Audit Service | 2 | 2 | 4 Gi | 4 Gi | 2 | 2 | 2 Gi |

| CM Service | 4 | 4 | 2 Gi | 2 Gi | 2 | 2 | 2 Gi |

| Config Service | 4 | 2 | 2Gi | 500m | 2 | 2 | 2 Gi |

| Egress Gateway | 4 | 4 | 6Gi | 6Gi | 20 | 2 | 2 Gi |

| Ingress Gateway | 5 | 5 | 6Gi | 6Gi | 8 | 2.5 | 2Gi |

| NRF Client NF Discovery | 6 | 6 | 10Gi | 10Gi | 9 | 2 | 2Gi |

| NRF Client Management | 1 | 1 | 1Gi | 1Gi | 1 | 2 | 2Gi |

| AM Service | 6 | 6 | 10Gi | 10Gi | 9 | 3 | 2Gi |

| UE Service | 8 | 8 | 4Gi | 4Gi | 18 | 2 | 2Gi |

| Query Service | 2 | 1 | 1Gi | 1Gi | 2 | | |

| Performance | 1 | 1 | 1Gi | 512Mi | 2 | | |

| PRE | 4 | 4 | 4Gi | 4Gi | 7 | 1.5 | 2Gi |

| SM Service | 1 | 1 | 1Gi | 1Gi | 1 | 0.5 | 2Gi |

| PDS | 7 | 7 | 8Gi | 8Gi | 22 | 2.5 | 4Gi |

| UDR Connector | 4 | 4 | 4Gi | 4Gi | 20 | 2 | 2Gi |

| CHF Connector/ User Service | 6 | 6 | 4Gi | 4Gi | 3 | 2 | 2Gi |

| Alternate Route Service | 0.5 | 0.5 | 4Gi | 2Gi | 1 | 0.5 | 2Gi |

| Bulwark Service | 8 | 8 | 4Gi | 4Gi | 5 | 2 | 4Gi |

Table 3-87 cnDBTier resource allocation for site1

| Service Name | CPU Resource per Container (Limit) | CPU Resource per Container (Request) | Memory Resource per Container (Limit) | Memory Resource per Container (Request) | Replica Count | Request/Limit Istio CPU | Request/Limit Istio Memory (Gi) |

|---|---|---|---|---|---|---|---|

| ndbappmysqld | 12 | 12 | 20Gi | 20Gi | 12 | 5 | 5Gi |

| ndbmgmd | 3 | 3 | 8Gi | 8Gi | 2 | 3 | 1Gi |

| ndbmtd | 12 | 12 | 129Gi | 129Gi | 10 | 6 | 6Gi |

| ndbmysqld | 4 | 4 | 16Gi | 16Gi | 6 | 5 | 5Gi |

Table 3-88 cnDBTier resource allocation for site2

| Service Name | CPU Resource per Container (Limit) | CPU Resource per Container (Request) | Memory Resource per Container (Limit) | Memory Resource per Container (Request) | Replica Count | Request/Limit Istio CPU | Request/Limit Istio Memory (Gi) |

|---|---|---|---|---|---|---|---|

| ndbappmysqld | 12 | 12 | 20Gi | 20Gi | 12 | 5 | 5Gi |

| ndbmgmd | 3 | 3 | 8Gi | 8Gi | 2 | 3 | 1Gi |

| ndbmtd | 12 | 12 | 129Gi | 129Gi | 10 | 6 | 6Gi |

| ndbmysqld | 4 | 4 | 16Gi | 16Gi | 6 | 5 | 5Gi |

3.2.6.2 CPU Utilization

This section lists the CPU utilization for Policy and cnDBTier microservices. CPU utilization is expressed as X/Y, where X is the total CPU used as a percentage of the total CPU requested, and Y is the target CPU utilization configured for the pod.

Policy Microservices Resource Utilization

The following table lists the benchmark values at maximum system capacity utilization for Policy microservices.

The average CPU utilization is the ratio of current resource usage to the resources requested by the pods, that is, the total CPU used by a service's pods divided by the total CPU requested for those pods.
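The average-utilization ratio can be written as a short function. The sketch below computes X for one service from per-pod usage and request values and formats it as the X/Y pair used in the earlier utilization tables. The usage samples are made-up numbers for illustration; the 4-core request per pod comes from the PRE row of Table 3-85, and the 80% target from the ocpcf-ocpm-pre row of Table 3-66. The function names are hypothetical.

```python
def average_cpu_utilization(used_cores, requested_cores):
    """Total CPU used by a service's pods divided by total CPU requested, as a percentage."""
    if not requested_cores or len(used_cores) != len(requested_cores):
        raise ValueError("expected one usage value and one request value per pod")
    return 100.0 * sum(used_cores) / sum(requested_cores)


def format_x_over_y(used_cores, requested_cores, target_percent):
    """Format measured utilization X against the configured target Y, for example '25%/80%'."""
    x = average_cpu_utilization(used_cores, requested_cores)
    return f"{x:.0f}%/{target_percent:.0f}%"


if __name__ == "__main__":
    # Hypothetical usage samples (cores) for three PRE pods, each requesting 4 cores.
    usage = [1.1, 0.9, 1.0]
    requests = [4, 4, 4]
    print(format_x_over_y(usage, requests, target_percent=80))
```

Summing before dividing weights each pod by its request, so a heavily loaded pod is not hidden by an average of per-pod percentages.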

Table 3-89 CPU/Memory Utilization by Policy Microservices

| Service | CPU (Site 1) | Memory (Site 1) | CPU (Site 2) | Memory (Site 2) |

|---|---|---|---|---|

| ocpcf-occnp-alternate route/istio | 0.10% | 4.88% | 0.60% | 4.44% |

| ocpcf-occnp-alternate route | 0.15% | 9.38% | 0.60% | 6.76% |

| ocpcf-appinfo/istio | 0.18% | 5.35% | 0.20% | 5.18% |

| ocpcf-appinfo | 2.65% | 23.78% | 4.40% | 23.58% |

| ocpcf-bulwark/istio | 25.27% | 2.30% | 59.09% | 2.88% |

| ocpcf-bulwark | 17.78% | 17.36% | 29.15% | 20.51% |

| ocpcf-occnp-config-server/istio | 11.30% | 5.42% | 14.03% | 6.42% |

| ocpcf-occnp-config-server | 7.51% | 29.98% | 9.46% | 30.44% |

| ocpcf-occnp-egress-gateway/istio | 5.90% | 5.18% | 13.11% | 5.89% |

| ocpcf-occnp-egress-gateway | 23.25% | 19.32% | 38.80% | 20.48% |

| ocpcf-occnp-ingress-gateway/istio | 21.98% | 6.99% | 18.80% | 7.64% |

| ocpcf-occnp-ingress-gateway | 19.87% | 24.11% | 23.62% | 23.45% |

| ocpcf-occnp-nrf-client-nfdiscovery/istio | 17.95% | 5.21% | 27.92% | 5.83% |

| ocpcf-occnp-nrf-client-nfdiscovery | 9.81% | 9.91% | 13.84% | 9.48% |

| ocpcf-occnp-nrf-client-nfmanagement/istio | 0.15% | 4.79% | 0.20% | 5.22% |

| ocpcf-occnp-nrf-client-nfmanagement | 0.40% | 44.92% | 0.40% | 47.17% |

| ocpcf-performance/perf-info | 1.90% | 11.82% | 1.00% | 12.40% |

| ocpcf-occnp-chf-connector/istio | 14.88% | 5.22% | 47.70% | 6.23% |

| ocpcf-occnp-chf-connector | 7.78% | 14.96% | 24.25% | 14.87% |

| ocpcf-occnp-udr-connector/istio | 20.30% | 5.52% | 29.43% | 6.24% |

| ocpcf-occnp-udr-connector | 18.32% | 15.26% | 23.51% | 15.08% |

| ocpcf-ocpm-audit-service/istio | 0.18% | 4.61% | 0.25% | 5.10% |

| ocpcf-ocpm-audit-service | 0.22% | 13.00% | 0.83% | 12.59% |

| ocpcf-ocpm-cm-service/istio | 0.80% | 4.96% | 0.92% | 5.20% |

| ocpcf-ocpm-cm-service/cm-service | 0.76% | 28.34% | 0.83% | 30.76% |

| ocpcf-ocpm-policyds/istio | 21.30% | 2.84% | 35.80% | 3.03% |

| ocpcf-ocpm-policyds | 24.84% | 30.74% | 33.41% | 31.08% |

| ocpcf-occnp-amservice/istio | 24.62% | 5.72% | 43.19% | 6.43% |

| ocpcf-occnp-amservice | 26.90% | 9.40% | 44.37% | 10.71% |

| ocpcf-ocpm-pre/istio | 24.99% | 5.81% | 45.51% | 5.82% |

| ocpcf-ocpm-pre | 18.59% | 32.53% | 30.70% | 30.35% |

| ocpcf-pcf-smservice/istio | 0.17% | 4.83% | 0.60% | 6.01% |

| ocpcf-pcf-smservice | 0.40% | 37.11% | 0.40% | 37.40% |

| ocpcf-pcf-ueservice/istio | 15.49% | 5.64% | 35.09% | 6.01% |

| ocpcf-pcf-ueservice | 22.16% | 34.16% | 29.61% | 38.23% |

| ocpcf-ocpm-queryservice | 0.05% | 23.39% | 0.50% | 23.68% |

Observed CPU utilization Values of cnDBTier Services

The following table provides information about observed values of cnDBTier services.

Table 3-90 CPU/Memory Utilization by cnDBTier services

| Service | CPU (Site 1) | Memory (Site 1) | CPU (Site 2) | Memory (Site 2) |

|---|---|---|---|---|

| ndbappmysqld/istio | 23.14% | 2.48% | 22.78% | 2.50% |

| ndbappmysqld/mysqlndbcluster | 21.31% | 50.17% | 26.48% | 35.47% |

| ndbappmysqld/init-sidecar | 2.25% | 0.39% | 3.00% | 0.39% |

| ndbmgmd/istio-proxy | 0.33% | 10.74% | 0.43% | 11.38% |

| ndbmgmd/mysqlndbcluster | 0.25% | 25.21% | 0.35% | 25.16% |

| ndbmtd/istio-proxy | 47.02% | 2.06% | 31.61% | 1.96% |

| ndbmtd/mysqlndbcluster | 44.95% | 81.17% | 42.45% | 79.71% |

| ndbmysqld/istio-proxy | 0.00% | 0.00% | 0.00% | 0.00% |

| ndbmysqld/mysqlndbcluster | 4.23% | 30.30% | 7.72% | 28.85% |

| ndbmysqld/init-sidecar | 2.00% | 0.39% | 2.83% | 0.59% |

3.2.7 Test Scenario: PCF AM/UE Call Model on Two-Site Georedundant Setup, with Single-Site Handling 60K TPS Traffic and ASM Enabled

This test run benchmarks the performance and capacity of the Policy AM/UE data call model deployed in PCF mode. The PCF application handles a total (Ingress + Egress) traffic of 60K TPS on one site, with no traffic on the other site. Application compression was enabled. The test ran for 1 hour. For this setup, Aspen Service Mesh (ASM) was enabled between Policy services and disabled between Policy service pods and DB data pods.

In this test setup, georedundant (GR) mode was enabled in cnDBTier and configured for two-channel replication, and application data compression was enabled at the AM, UE, and PDS services on Site 2.

3.2.7.1 Test Case and Setup Details

Test Case Parameters

The following table describes the test case parameters and their values:

| Parameters | Values |

|---|---|

| Call Rate (Ingress + Egress) | 60K TPS on site-1 and no traffic on site-2 |

| ASM | Enable |

| Traffic Ratio | AM: 1 Create : 0 Update : 1 Delete; UE: 1 Create : 0 Update : 1 Delete |

| Active User Count | 12000000 |

Project Details

The Policy Design editor, based on the Blockly interface, was used to set up the Policy project for each of the Policy services. The complexity level of the Policy project configured for this run was High.

Complexity Level Definition:

- Low - No usage of loops in Blockly logic, no JSON operations, and no complex JavaScript code in object expression/statement expression.

- Medium - Usage of loops in Blockly logic, Policy table wildcard match <= 3 fields, MatchList < 3, and 3 < RegEx match < 6.

- High - Custom JSON operations, complex JavaScript code in object expression/statement expression, Policy table wildcard match > 3 fields, MatchLists >= 3, and RegEx match >= 6.

Call Model Data

Table 3-91 Traffic distribution

| Service | Site 1 Ingress Gateway | Site 1 Egress Gateway | Site 1 Total Ingress/Egress Traffic | Site 2 Ingress Gateway | Site 2 Egress Gateway | Site 2 Total Ingress/Egress Traffic |

|---|---|---|---|---|---|---|

| UE service | 6672 | 30024 | 36696 | - | - | - |

| AM service | 6672 | 16680 | 23352 | - | - | - |

| Total | | | 60048 | | | - |
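The totals in Table 3-91 are plain sums of the Ingress Gateway and Egress Gateway values, and the same figures give a per-service fan-out (Egress Gateway messages per Ingress Gateway message). The sketch below transcribes the Site 1 rows and computes both; the fan-out ratio is a derived view of our own, not a metric the benchmark reports.

```python
# Site 1 rows from Table 3-91: (Ingress Gateway, Egress Gateway, published total).
SITE1_ROWS = {
    "UE service": (6672, 30024, 36696),
    "AM service": (6672, 16680, 23352),
}
PUBLISHED_SITE1_TOTAL = 60048


def summarize(rows):
    """Return {service: (recomputed total, egress/ingress ratio)} and the site-wide total."""
    per_service = {}
    for service, (ingress, egress, _published) in rows.items():
        per_service[service] = (ingress + egress, egress / ingress)
    site_total = sum(total for total, _ in per_service.values())
    return per_service, site_total


if __name__ == "__main__":
    per_service, site_total = summarize(SITE1_ROWS)
    for service, (total, fan_out) in per_service.items():
        published = SITE1_ROWS[service][2]
        print(f"{service}: total={total} (published {published}), egress per ingress={fan_out:.1f}")
    print(f"site total={site_total} (published {PUBLISHED_SITE1_TOTAL})")
```

For this run, each UE request at the Ingress Gateway corresponds to 4.5 Egress Gateway messages, and each AM request to 2.5.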

Policy Configurations

The following Policy microservices were enabled or disabled for this call flow:

Table 3-92 Policy microservices configuration

| Name | Status |

|---|---|

| Bulwark | Enabled |

| Binding | Disabled |

| Subscriber State Variable (SSV) | Enabled |

| Validate_user | Disabled |

| Alternate Route | Disabled |

| Audit | Enabled |

| Compression (Binding & SM Service) | Enabled |

| SYSTEM.COLLISION.DETECTION | Enabled |

The following Policy interfaces were enabled or disabled for this call flow:

Table 3-93 Policy interfaces

| Feature Name | Status |

|---|---|

| N36 UDR query (N7/N15-Nudr) | Enable |

| N36 UDR subscription (N7/N15-Nudr) | Enable |

| UDR on-demand nrf discovery | Disable |

| CHF (SM-Nchf) | Enable |

| BSF (N7-Nbsf) | Enable |

| AMF on demand nrf discovery | NA |

| LDAP (Gx-LDAP) | NA |

| Subscriber HTTP Notifier (Gx) | NA |

The following PCRF interfaces were enabled or disabled for this call flow:

Table 3-94 PCRF interfaces

| Feature Name | Status |

|---|---|

| Sy (PCRF Gx-Sy) | NA |

| Sd (Gx-Sd) | NA |

| Gx UDR query (Gx-Nudr) | NA |

| Gx UDR subscription (Gx-Nudr) | NA |

| CHF enabled (AM) | NA |

| Usage Monitoring (Gx) | NA |

| Subscriber HTTP Notifier (Gx) | NA |

Configuring Policy Helm Parameters

The following Policy optimization parameters were configured for this run:

Table 3-95 Optimization parameters for Policy services

| Service | Policy Helm Configurations |

|---|---|

| policyds | |

| UE | |

| INGRESS | |

| EGRESS | |

Policy Microservices Resources

Table 3-96 Policy microservices resource allocation for site1

| Service Name | CPU Resource per Container (Limit) | CPU Resource per Container (Request) | Memory Resource per Container (Limit) | Memory Resource per Container (Request) | Replica Count | Request/Limit Istio CPU | Request/Limit Istio Memory (Gi) |

|---|---|---|---|---|---|---|---|

| Appinfo | 1 | 1 | 1 Gi | 512Mi | 2 | 2 | 2 Gi |

| Audit Service | 2 | 2 | 4 Gi | 4 Gi | 2 | 2 | 2 Gi |

| CM Service | 4 | 4 | 2 Gi | 2 Gi | 2 | 2 | 2 Gi |

| Config Service | 4 | 2 | 2Gi | 2Gi | 2 | 2 | 2 Gi |

| Egress Gateway | 2 | 2 | 6Gi | 6Gi | 27 | 4 | 2 Gi |

| Ingress Gateway | 5 | 5 | 6Gi | 6Gi | 8 | 2.5 | 2 Gi |

| NRF Client NF Discovery | 6 | 6 | 10Gi | 10Gi | 9 | 2 | 2 Gi |

| NRF Client Management | 1 | 1 | 1Gi | 1Gi | 1 | 2 | 2 Gi |

| AM Service | 6 | 6 | 10Gi | 10Gi | 12 | 3 | 2 Gi |

| UE Service | 8 | 8 | 2Gi | 2Gi | 20 | 2 | 1 Gi |

| Query Service | 2 | 1 | 1Gi | 1Gi | 2 | | |

| Performance | 1 | 1 | 1Gi | 512Mi | 2 | 2 | 1 Gi |

| PRE | 4 | 4 | 4Gi | 4Gi | 7 | 1.5 | 2 Gi |

| SM Service | 1 | 1 | 1Gi | 1Gi | 1 | 3 | 2 Gi |

| PDS | 7 | 7 | 8Gi | 8Gi | 24 | 3 | 4 Gi |

| UDR Connector | 4 | 4 | 4Gi | 4Gi | 20 | 2 | 2 Gi |

| CHF Connector/ User Service | 6 | 6 | 4Gi | 4Gi | 8 | 2 | 2 Gi |

| Alternate Route Service | 2 | 2 | 4Gi | 2Gi | 1 | 2 | 2 Gi |

| Bulwark Service | 8 | 8 | 4Gi | 4Gi | 7 | 3 | 4 Gi |

Table 3-97 Policy microservices resource allocation for site2

| Service Name | CPU Resource per Container (Limit) | CPU Resource per Container (Request) | Memory Resource per Container (Limit) | Memory Resource per Container (Request) | Replica Count | Request/Limit Istio CPU | Request/Limit Istio Memory |

|---|---|---|---|---|---|---|---|

| Appinfo | 1 | 1 | 1 Gi | 512Mi | 2 | 2 | 2 Gi |

| Audit Service | 2 | 2 | 4 Gi | 4 Gi | 2 | 2 | 2 Gi |

| CM Service | 4 | 4 | 2 Gi | 2 Gi | 2 | 2 | 2 Gi |

| Config Service | 4 | 2 | 2Gi | 500m | 2 | 2 | 2 Gi |

| Egress Gateway | 4 | 4 | 6Gi | 6Gi | 20 | 2 | 2 Gi |

| Ingress Gateway | 5 | 5 | 6Gi | 6Gi | 8 | 2.5 | 2Gi |

| NRF Client NF Discovery | 6 | 6 | 10Gi | 10Gi | 9 | 2 | 2 Gi |

| NRF Client Management | 1 | 1 | 1Gi | 1Gi | 1 | 2 | 2 Gi |

| AM Service | 6 | 6 | 10Gi | 10Gi | 9 | 3 | 2 Gi |

| UE Service | 8 | 8 | 4Gi | 4Gi | 18 | 2 | 2 Gi |

| Query Service | 2 | 1 | 1Gi | 1Gi | 2 | | |

| Performance | 1 | 1 | 1Gi | 512Mi | 2 | | |

| PRE | 4 | 4 | 4Gi | 4Gi | 7 | 1.5 | 2 Gi |

| SM Service | 1 | 1 | 1Gi | 1Gi | 1 | 0.5 | 2 Gi |

| PDS | 7 | 7 | 8Gi | 8Gi | 22 | 2.5 | 4 Gi |

| UDR Connector | 4 | 4 | 4Gi | 4Gi | 20 | 2 | 2 Gi |

| CHF Connector/ User Service | 6 | 6 | 4Gi | 4Gi | 3 | 2 | 2 Gi |

| Alternate Route Service | 0.5 | 0.5 | 4Gi | 2Gi | 1 | 0.5 | 2 Gi |

| Bulwark Service | 8 | 8 | 4Gi | 4Gi | 5 | 2 | 4 Gi |

Table 3-98 CnDBTier resource allocation for site1

| Service Name | CPU Resource per Container (Limit) | CPU Resource per Container (Request) | Memory Resource per Container (Limit) | Memory Resource per Container (Request) | Replica Count | Request/Limit Istio CPU | Request/Limit Istio Memory |

|---|---|---|---|---|---|---|---|

| ndbappmysqld/mysqlndbcluster | 12 | 12 | 20Gi | 20Gi | 12 | 5 | 5Gi |

| ndbappmysqld/init-sidecar | 0.1 | 0.1 | 256Mi | 256Mi | 12 | | |

| ndbmgmd/mysqlndbcluster | 3 | 3 | 8Gi | 8Gi | 2 | 3 | 1Gi |

| ndbmtd/mysqlndbcluster | 12 | 12 | 129Gi | 129Gi | 10 | 6 | 6Gi |

| ndbmysqld/mysqlndbcluster | 4 | 4 | 16Gi | 16Gi | 6 | 5 | 5Gi |

| ndbmysqld/init-sidecar | 0.1 | 0.1 | 256Mi | 256Mi | 6 | | |

Table 3-99 CnDBTier resource allocation for site2

| Service Name | CPU Resource per Container (Limit) | CPU Resource per Container (Request) | Memory Resource per Container (Limit) | Memory Resource per Container (Request) | Replica Count | Request/Limit Istio CPU | Request/Limit Istio Memory |

|---|---|---|---|---|---|---|---|

| ndbappmysqld/mysqlndbcluster | 12 | 12 | 20Gi | 20Gi | 12 | 5 | 5Gi |

| ndbappmysqld/init-sidecar | 0.1 | 0.1 | 256Mi | 256Mi | 12 | | |

| ndbmgmd/mysqlndbcluster | 3 | 3 | 8Gi | 8Gi | 2 | 3 | 1Gi |

| ndbmtd/mysqlndbcluster | 12 | 12 | 129Gi | 129Gi | 10 | 6 | 6Gi |

| ndbmysqld/mysqlndbcluster | 4 | 4 | 16Gi | 16Gi | 6 | 5 | 5Gi |

| ndbmysqld/init-sidecar | 0.1 | 0.1 | 256Mi | 256Mi | 6 | | |

3.2.7.2 CPU Utilization

This section lists the CPU utilization for Policy and cnDBTier microservices. CPU utilization is expressed as the ratio of X to Y, where X is the total CPU used divided by the total CPU requested, and Y is the target CPU utilization configured for the pod.
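Read literally, this definition compares the measured usage-to-request ratio X against the target utilization Y configured for the pod. The following is a minimal sketch of that reading; the function name and the example figures are hypothetical and do not come from the test run.

```python
def utilization_against_target(cpu_used, cpu_requested, target):
    """Return (x, x / target).

    x is the usage-to-request ratio (X in the text); target is the
    configured target CPU utilization (Y), given as a fraction such as 0.8.
    """
    if cpu_requested <= 0 or target <= 0:
        raise ValueError("CPU request and target must be positive")
    x = cpu_used / cpu_requested
    return x, x / target


# Hypothetical example: 12 of 16 requested cores in use, 80% target.
x, ratio = utilization_against_target(12.0, 16.0, 0.8)
print(f"X = {x:.2%}, X/Y = {ratio:.2f}")
```

Under this reading, an X/Y value above 1.0 means the pods are running above their configured target.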

Policy Microservices Resource Utilization

The following table lists the benchmark values for Policy microservices at maximum system capacity utilization.

Average CPU utilization is the ratio of current resource usage to the resources requested by the pods, that is, the total CPU used by a service's pods divided by the total CPU requested for those pods.
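The per-service average described above is a ratio of sums taken across all pods of a service. A short sketch follows, assuming per-pod CPU usage and request values (in cores) are already available; the function name and sample values are hypothetical.

```python
from typing import Iterable, Tuple


def average_cpu_utilization(pods: Iterable[Tuple[float, float]]) -> float:
    """Average CPU utilization (%) for one service.

    pods: (cpu_used_cores, cpu_requested_cores) for each pod of the service.
    The result is sum(used) / sum(requested), not a mean of per-pod ratios.
    """
    pods = list(pods)
    total_used = sum(used for used, _ in pods)
    total_requested = sum(requested for _, requested in pods)
    if total_requested <= 0:
        raise ValueError("total CPU request must be positive")
    return 100.0 * total_used / total_requested


# Hypothetical samples: three pods, each requesting 8 cores.
print(average_cpu_utilization([(6.0, 8.0), (4.0, 8.0), (2.0, 8.0)]))  # 50.0
```

Summing before dividing weights each pod by its request. When all pods have the same request, as in this example, the result equals the mean of the per-pod percentages.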

Table 3-100 CPU/Memory Utilization by Policy Microservices

| Service | CPU (Site 1) | Memory (Site 1) | CPU (Site 2) | Memory (Site 2) |

|---|---|---|---|---|

| ocpcf-appinfo/istio | 0.25% | 7.18% | 0.22% | 5.59% |

| ocpcf-appinfo | 4.20% | 32.97% | 2.50% | 23.24% |

| ocpcf-bulwark/istio | 0.10% | 2.91% | 0.15% | 2.78% |

| ocpcf-bulwark | 0.04% | 37.21% | 0.05% | 12.23% |

| ocpcf-oc-binding/istio | 0.20% | 5.57% | 0.30% | 6.01% |

| ocpcf-oc-binding/binding | 0.03% | 7.73% | 0.03% | 7.46% |

| ocpcf-occnp-alternate-route/istio | 0.15% | 5.27% | 0.25% | 5.42% |

| ocpcf-occnp-alternate-route | 0.10% | 9.59% | 0.10% | 9.35% |

| ocpcf-occnp-chf-connector/istio | 11.60% | 5.03% | 0.50% | 5.76% |

| ocpcf-occnp-chf-connector | 12.10% | 10.72% | 0.08% | 10.94% |

| ocpcf-occnp-config-server/istio | 13.85% | 6.13% | 5.80% | 6.23% |

| ocpcf-occnp-config-server | 9.50% | 43.14% | 3.50% | 36.67% |

| ocpcf-occnp-egress-gateway/istio | 10.13% | 5.40% | 0.19% | 5.92% |

| ocpcf-occnp-egress-gateway | 49.76% | 19.64% | 0.07% | 9.69% |

| ocpcf-occnp-ingress-gateway/istio | 36.23% | 10.00% | 0.20% | 5.85% |

| ocpcf-occnp-ingress-gateway | 45.73% | 32.97% | 0.24% | 19.07% |

| ocpcf-occnp-nrf-client-nfdiscovery/istio | 59.12% | 8.17% | 0.26% | 5.82% |

| ocpcf-occnp-nrf-client-nfdiscovery | 51.44% | 59.33% | 0.08% | 33.86% |

| ocpcf-occnp-nrf-client-nfmanagement/istio | 0.70% | 5.42% | 0.20% | 5.57% |

| ocpcf-occnp-nrf-client-nfmanagement | 0.40% | 44.82% | 0.40% | 46.39% |

| ocpcf-occnp-udr-connector/istio | 69.88% | 8.00% | 0.47% | 5.69% |

| ocpcf-occnp-udr-connector | 35.60% | 32.06% | 0.08% | 11.15% |

| ocpcf-ocpm-audit-service/istio | 0.25% | 5.59% | 0.25% | 5.47% |

| ocpcf-ocpm-audit-service | 0.57% | 23.69% | 0.38% | 13.01% |

| ocpcf-ocpm-cm-service/istio | 0.85% | 5.27% | 0.55% | 6.05% |

| ocpcf-ocpm-cm-service/cm-service | 0.71% | 37.21% | 0.33% | 33.81% |

| ocpcf-ocpm-policyds/istio | 49.69% | 3.91% | 0.17% | 2.86% |

| ocpcf-ocpm-policyds | 40.46% | 32.78% | 0.03% | 14.43% |

| ocpcf-ocpm-pre/istio | 33.67% | 7.14% | 0.35% | 6.24% |

| ocpcf-ocpm-pre | 37.21% | 49.02% | 0.31% | 8.65% |

| ocpcf-ocpm-queryservice | 0.05% | 28.22% | 0.08% | 24.41% |

| ocpcf-occnp-amservice/istio | 32.87% | 8.59% | 0.39% | 5.86% |

| ocpcf-occnp-amservice | 29.83% | 23.16% | 0.04% | 12.90% |

| ocpcf-pcf-ueservice/istio | 56.27% | 9.83% | 0.35% | 5.65% |

| ocpcf-pcf-ueservice | 44.94% | 45.22% | 0.05% | 14.07% |

| ocpcf-performance/perf-info | 3.10% | 10.84% | 1.40% | 11.04% |

Observed CPU Utilization Values of cnDBTier Services

The following table provides information about observed values of cnDBTier services.

Table 3-101 CPU/Memory Utilization by CnDBTier services

| App/Container | CPU (Site1) | Memory (Site1) | CPU (Site2) | Memory (Site2) |

|---|---|---|---|---|

| ndbappmysqld/istio-proxy | 0.40% | 2.00% | 0.33% | 2.22% |

| ndbappmysqld/mysqlndbcluster | 0.19% | 20.91% | 0.20% | 20.88% |

| ndbappmysqld/init-sidecar | 2.08% | 0.39% | 2.17% | 0.39% |

| ndbmgmd/istio-proxy | 0.55% | 9.96% | 0.68% | 10.79% |

| ndbmgmd/mysqlndbcluster | 0.37% | 25.12% | 0.40% | 25.12% |

| ndbmtd/istio-proxy | 0.66% | 1.75% | 0.53% | 1.39% |

| ndbmtd/mysqlndbcluster | 0.69% | 81.13% | 5110.41% | 71.33% |

| ndbmysqld/istio-proxy | 0.00% | 0.00% | 0.00% | 0.00% |

| ndbmysqld/mysqlndbcluster | 0.52% | 26.07% | 0.57% | 26.07% |

| ndbmysqld/init-sidecar | 2.33% | 0.39% | 2.17% | 0.39% |

3.2.8 Test Scenario: PCF AM/UE Call Model on Two-Site Georedundant Setup, with Single-Site Handling 75K TPS Traffic and ASM Enabled

This test run benchmarks the performance and capacity of the Policy AM/UE call model deployed in PCF mode. The PCF application handles a total traffic (Ingress + Egress) of 75K TPS on one site, with no traffic on the other site. Application compression was enabled. For this setup, Aspen Service Mesh (ASM) was enabled between Policy services and disabled between Policy service pods and database data pods.

In this test setup, Georedundant (GR) mode was enabled in cnDBTier with three-channel replication, and application data compression was enabled for the AM, UE, and PDS services on Site 2.

3.2.8.1 Test Case and Setup Details

Table 3-102 Test Case Parameters

| Parameters | Values |

|---|---|

| Call Rate (Ingress + Egress) | 75K TPS on Site 1; no traffic on Site 2 |

| ASM | Enable |

| Traffic Ratio | AM: 1 Create, 0 Update, 1 Delete; UE: 1 Create, 0 Update, 1 Delete |

| Active User Count | 12000000 |

Project Details

The Policy Design editor, which is based on the Blockly interface, was used to set up the Policy project for each of the Policy services. The complexity level of the Policy project configured for this run was High.

Complexity Level Definition:

- Low – No loops in Blockly logic, no JSON operations, and no complex JavaScript code in object expressions or statement expressions.

- Medium – Loops in Blockly logic, Policy table wildcard match on <= 3 fields, MatchList < 3, and 3 < RegEx match < 6.

- High – Custom JSON operations, complex JavaScript code in object expressions or statement expressions, Policy table wildcard match on > 3 fields, MatchLists >= 3, and RegEx match >= 6.
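The definitions above leave some boundaries open, such as whether a single High criterion is enough and how a project with no loops but 4 RegEx matches should be classified. The sketch below encodes one plausible reading, purely for illustration. It assumes that any single High criterion makes a project High, and that loops or 4 to 5 RegEx matches make it Medium. The `ProjectMetrics` fields and the `complexity_level` function are hypothetical and do not belong to any Policy tool.

```python
from dataclasses import dataclass


@dataclass
class ProjectMetrics:
    """Hypothetical summary of the features used in a Blockly Policy project."""
    uses_loops: bool = False
    uses_custom_json_ops: bool = False
    uses_complex_js: bool = False
    wildcard_match_fields: int = 0
    match_lists: int = 0
    regex_matches: int = 0


def complexity_level(m: ProjectMetrics) -> str:
    # Assumption: meeting ANY High criterion makes the project High.
    if (m.uses_custom_json_ops or m.uses_complex_js
            or m.wildcard_match_fields > 3
            or m.match_lists >= 3
            or m.regex_matches >= 6):
        return "High"
    # Assumption: loops, or more than 3 (and, given the check above,
    # fewer than 6) RegEx matches, make the project Medium.
    if m.uses_loops or m.regex_matches > 3:
        return "Medium"
    # Low: no loops, no JSON operations, no complex JavaScript.
    return "Low"


print(complexity_level(ProjectMetrics(uses_loops=True, regex_matches=4)))
```

Because the High check runs first, a project that matches both Medium and High criteria is classified as High.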

Call Model Data

Table 3-103 Traffic distribution on Site1

| Services | Ingress Gateway (TPS) | Egress Gateway (TPS) | Total Ingress + Egress Traffic (TPS) |

|---|---|---|---|

| UE service | 8340 | 37530 | 45870 |

| AM service | 8340 | 20850 | 29190 |

| Total | | | 75060 |
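Each row total in Table 3-103 is the sum of Ingress Gateway and Egress Gateway TPS for that service, and the site total is the sum across services. The short check below reproduces that arithmetic from the table values. The egress-to-ingress ratio it also prints is simple division added for illustration and is not a figure reported by the test; the names used are hypothetical.

```python
# Ingress and Egress Gateway TPS per service, copied from Table 3-103 (Site 1).
SITE1_TRAFFIC = {
    "UE service": (8340, 37530),
    "AM service": (8340, 20850),
}


def summarize(traffic):
    """Return per-service totals, the site total, and egress/ingress ratios."""
    per_service = {svc: ing + egr for svc, (ing, egr) in traffic.items()}
    ratios = {svc: egr / ing for svc, (ing, egr) in traffic.items()}
    return per_service, sum(per_service.values()), ratios


per_service, site_total, ratios = summarize(SITE1_TRAFFIC)
print(per_service)  # {'UE service': 45870, 'AM service': 29190}
print(site_total)   # 75060
print(ratios)       # {'UE service': 4.5, 'AM service': 2.5}
```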

Policy Configurations