3 Benchmarking Model B

- Monitoring Event (ME)

- Quality of Service (QOS)

- Traffic Influence (TI)

- Device Trigger (DT)

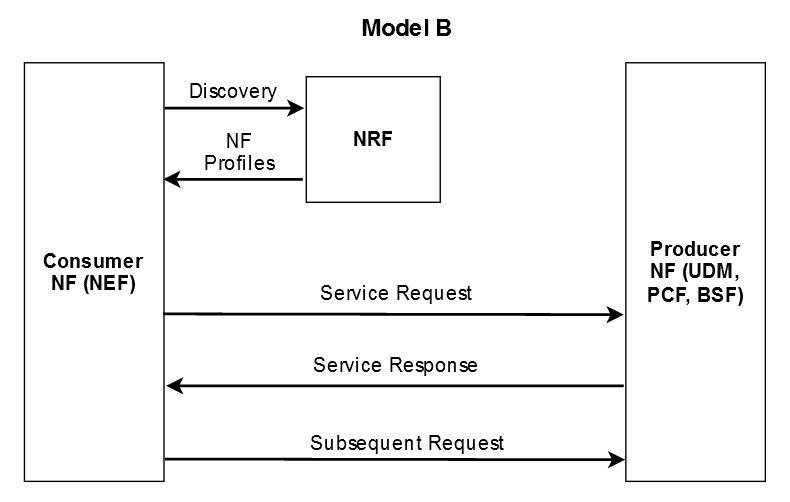

In the Model B deployment, the consumer NF performs NF discovery by querying the NRF. The consumer NF discovers the services available in the network based on the service name and target NF type by invoking Nnrf_NFDiscovery_Request on an appropriately configured NRF. Based on the discovery result, the consumer NF selects the target producer NF and then sends the service request to that producer NF.

- Service request address

- Discovery using NRF services, no SCP, and direct routing
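The discovery-then-direct-request flow described above can be sketched as follows. This is a minimal illustration, not NEF or NRF code: `NFProfile`, `StubNRF`, `send_service_request`, the example addresses, and the first-match selection policy are all assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NFProfile:
    """Hypothetical, simplified NF profile as an NRF might store it."""
    nf_instance_id: str
    nf_type: str
    services: tuple
    address: str


class StubNRF:
    """In-memory stand-in for an NRF; not a real Nnrf_NFDiscovery client."""

    def __init__(self, profiles):
        self._profiles = list(profiles)

    def discover(self, target_nf_type, service_name):
        # Return every registered profile matching NF type and service name.
        return [
            p for p in self._profiles
            if p.nf_type == target_nf_type and service_name in p.services
        ]


def send_service_request(nrf, target_nf_type, service_name):
    """Discover producers, select one, and address the request to it directly."""
    candidates = nrf.discover(target_nf_type, service_name)
    if not candidates:
        raise LookupError(f"no {target_nf_type} offers {service_name}")
    producer = candidates[0]  # assumed selection policy: first match
    # Model B: no SCP in the path, so the request carries the producer address.
    return {"to": producer.address, "service": service_name}


nrf = StubNRF([
    NFProfile("udm-1", "UDM", ("nudm-ee",), "udm-1.example:8080"),
    NFProfile("pcf-1", "PCF", ("npcf-policyauthorization",), "pcf-1.example:8080"),
])
request = send_service_request(nrf, "UDM", "nudm-ee")
print(request)
```

The point of the sketch is that the consumer, not an intermediary, resolves and addresses the producer; a real deployment would apply its own selection criteria to the discovery result.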

3.1 Test Topology for Model B Benchmarking

- Consumer NF (NEF)

- NRF

- Producer NFs

Figure 3-1 Model B test topology

- NEF sends a service request to a producer NF such as UDM, PCF, or BSF.

- The service request contains the address of the selected service producer NF.

- Producer NF responds to NEF with the service response.

Note:

- Performance numbers are calculated with a database pre-loaded with 1 million subscription records for Monitoring Event (ME), Quality of Service (QOS), and Traffic Influence (TI).

- The logging level in all the involved microservices and stubs is set to ERROR, which reduces execution time.

3.2 Model B Testcases

Note:

The setup on which performance was run for Model B testcases is Bulkhead (10.75.150.180).

3.2.1 5G Callflow Testcases

- Monitoring Event (ME)

- Quality of Service (QOS)

- Traffic Influence (TI)

- Device Trigger (DT)

- MSISDN-Less MO SMS

Note:

For the features listed above in the 5G callflow, the disk size for the SQL pod is 256Gi in testcase 3 and 100Gi in testcases 1 and 2.

3.2.1.1 Monitoring Event Testcases

In network deployments, operators must track the current or last known location of User Equipment (UE) to provide customized and enhanced network services. Any change in the UE location is considered an event, and NEF enables third-party applications or internal Application Functions (AFs) to monitor such events and receive reports about them.

- Location reporting

- PDU session status

- UE reachability

- Loss of connectivity

- Current location of UE

- Last known location of UE with time stamp

- PDU Session status

- Loss of Connectivity Event

- UE Reachability Event

- One-time reporting

- Maximum number of reports

- Maximum duration of reporting

- Monitoring type
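As a rough illustration of how the reporting options in the list above might bound a subscription, the sketch below stops reporting once any configured limit is reached. `ReportingOptions` and `reporting_finished` are hypothetical names, and treating the limits as independent stop conditions is an assumption, not documented NEF behavior.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ReportingOptions:
    """Hypothetical container for the reporting options listed above."""
    monitoring_type: str
    one_time: bool = False
    max_reports: Optional[int] = None
    max_duration_s: Optional[float] = None


def reporting_finished(opts, reports_sent, elapsed_s):
    """Return True once no further reports should be sent for a subscription."""
    if opts.one_time and reports_sent >= 1:
        return True
    if opts.max_reports is not None and reports_sent >= opts.max_reports:
        return True
    if opts.max_duration_s is not None and elapsed_s >= opts.max_duration_s:
        return True
    return False


opts = ReportingOptions("LOCATION_REPORTING", max_reports=3, max_duration_s=3600)
print(reporting_finished(opts, reports_sent=2, elapsed_s=10))
print(reporting_finished(opts, reports_sent=3, elapsed_s=10))
```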

The following subsections provide information about monitoring event test scenarios considered to perform benchmark tests.

3.2.1.1.1 Single Site

This section provides information about the configuration, parameters, and Monitoring Event Model B feature test scenarios for a single site.

Deployment Configuration

Table 3-1 Deployment Configuration

| Microservice | Replica | CPU | Memory |

|---|---|---|---|

| NEF Ingress Gateway | 4 | 4 | 4Gi |

| NEF Egress Gateway | 4 | 4 | 4Gi |

| API Router | 4 | 4 | 4Gi |

| ME | 2 | 4 | 4Gi |

| TI | 2 | 4 | 4Gi |

| QOS | 2 | 4 | 4Gi |

| Expiry Auditor | 2 | 4 | 4Gi |

| FiveGC Agent | 4 | 4 | 4Gi |

| FiveGC Egress | 4 | 4 | 4Gi |

| FiveGC Ingress | 4 | 4 | 4Gi |

| Apd Manager | 2 | 4 | 4Gi |

| nrf-client-nfmanagement | 1 | 1 | 1Gi |

| nrf-client-nfdiscovery | 1 | 1 | 1Gi |

| performance | 2 | 200m | 1Gi |

| Config-server | 1 | 1 | 1Gi |

| app-info | 1 | 200m | 1Gi |

Note:

The VAR value for microservice in each scenario is based on the corresponding Replica value.

Table 3-2 Stub Parameters

| Stub Parameter | Replica | CPU | Memory |

|---|---|---|---|

| GMLC | 3 | 1 | 1Gi |

| UDM | 3 | 1 | 1Gi |

| AF | 3 | 1 | 1Gi |

| PCF | 3 | 1 | 1Gi |

| BSF | 3 | 1 | 1Gi |

| NRF | 1 | 1 | 1Gi |

| UDR | 3 | 1 | 1Gi |
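To estimate the aggregate footprint implied by Table 3-1, the replica counts can be multiplied by the per-replica CPU and memory values and then summed. The sketch below assumes the CPU and Memory columns are per-replica values in Kubernetes-style units (`200m` meaning 200 millicores); the helper names are illustrative only.

```python
def cpu_to_millicores(value):
    """Convert a Kubernetes-style CPU quantity ('4' or '200m') to millicores."""
    value = value.strip()
    if value.endswith("m"):
        return int(value[:-1])
    return int(value) * 1000


def memory_to_gi(value):
    """Convert a memory quantity given in Gi (for example '4Gi') to an integer."""
    if not value.endswith("Gi"):
        raise ValueError(f"unsupported memory unit: {value}")
    return int(value[:-2])


# (microservice, replicas, CPU per replica, memory per replica) from Table 3-1.
DEPLOYMENT = [
    ("NEF Ingress Gateway", 4, "4", "4Gi"),
    ("NEF Egress Gateway", 4, "4", "4Gi"),
    ("API Router", 4, "4", "4Gi"),
    ("ME", 2, "4", "4Gi"),
    ("TI", 2, "4", "4Gi"),
    ("QOS", 2, "4", "4Gi"),
    ("Expiry Auditor", 2, "4", "4Gi"),
    ("FiveGC Agent", 4, "4", "4Gi"),
    ("FiveGC Egress", 4, "4", "4Gi"),
    ("FiveGC Ingress", 4, "4", "4Gi"),
    ("Apd Manager", 2, "4", "4Gi"),
    ("nrf-client-nfmanagement", 1, "1", "1Gi"),
    ("nrf-client-nfdiscovery", 1, "1", "1Gi"),
    ("performance", 2, "200m", "1Gi"),
    ("Config-server", 1, "1", "1Gi"),
    ("app-info", 1, "200m", "1Gi"),
]


def total_requests(rows):
    """Sum replica-weighted CPU (millicores) and memory (Gi) over all rows."""
    cpu = sum(replicas * cpu_to_millicores(c) for _, replicas, c, _ in rows)
    mem = sum(replicas * memory_to_gi(m) for _, replicas, _, m in rows)
    return cpu, mem


cpu_m, mem_gi = total_requests(DEPLOYMENT)
print(f"{cpu_m / 1000} vCPU, {mem_gi} Gi")
```

Working in integer millicores avoids floating-point drift when fractional CPU values such as `200m` are mixed with whole cores. The stub footprint in Table 3-2 could be added the same way.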

3.2.1.1.1.1 Monitoring Event Test Scenario 1

This test scenario describes transactions per second when CPU average utilization reaches around 70%.

Objective

To determine average TPS and average latency at 70% CPU utilization that Subscription, Unsubscription, and Notification endpoints can handle with two replicas of applicable services for both UDM and GMLC request types.

Table 3-3 Monitoring Event Testcase Parameters

| Input Parameter Details | Configuration Values |

|---|---|

| Cluster | For more information, see CNE Cluster Details. |

| Topology | For more information, see Test Topology for Model B Benchmarking. |

| Deployment Configuration | For more information, see Test Topology for Model B Benchmarking. |

| NEF Version Tag | 24.2.0 |

| Target CPU | 70% |

| Number of NEF Pods for ME | 2 |

| ME Pod Profile | 4 vCPU and 4 Gi Memory |

| Traffic Distribution | 10% Subscription, 10% Unsubscription, and 80% Notifications |

Result and Observation

- The server used is Bulkhead, which is a shared bare-metal server.

- The global.cache.evict.time parameter is set to 90 hrs, a high value that avoids frequent calls from apd-agent in the Fivegcagent microservice to the apd-manager microservice.

- apdManager was not tuned because it is contacted only when the apd-agent cache at fivegc evicts, and the eviction time is now set to a higher value.

- Tuning the nrf-client pods is not required because nrf-client is contacted separately through apdmanager, outside the real-time subscription, unsubscription, and notification flow.

- Scaling the nrf-client pods is not required.

Table 3-4 Result and Observation

| Parameter | Values |

|---|---|

| Test Duration | 90 hrs |

| Replica | 2 |

Table 3-5 NEF Microservices and their Utilization

| Resources | Subscription | Unsubscription | Notification | Total |

|---|---|---|---|---|

| Average CPU | NA | NA | NA | ~60% |

| Total TPS | 250 | 250 | 2000 | 2500 |

| Average Latency | 99.2 ms | 106.2 ms | 40.6 ms | NA |

| Average Memory | NA | NA | NA | 1.7 GB |

| Max Memory | NA | NA | NA | 2.0 GB |
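The per-endpoint TPS values in Table 3-5 follow from applying the traffic distribution in Table 3-3 to the total TPS. The sketch below shows that arithmetic; `split_tps` is an illustrative helper, and integer percentage shares are an assumption.

```python
def split_tps(total_tps, distribution):
    """Split a total TPS figure across endpoints by integer percentage shares."""
    if sum(distribution.values()) != 100:
        raise ValueError("percentages must sum to 100")
    return {name: total_tps * pct // 100 for name, pct in distribution.items()}


me_split = split_tps(
    2500,
    {"subscription": 10, "unsubscription": 10, "notification": 80},
)
print(me_split)
```

With a total of 2500 TPS and a 10/10/80 split, this yields 250, 250, and 2000 TPS, matching the Subscription, Unsubscription, and Notification columns of the table.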

3.2.1.1.1.2 Monitoring Event Test Scenario 2

This test scenario describes transactions per second when CPU average utilization reaches around 70%.

Objective

To determine average TPS and average latency at 70% CPU utilization that Subscription, Unsubscription, and Notification endpoints can handle with two replicas of applicable services for both UDM and GMLC request types.

Table 3-6 Monitoring Event Testcase Parameters

| Input Parameter Details | Configuration Values |

|---|---|

| Cluster | For more information, see CNE Cluster Details. |

| Topology | For more information, see Test Topology for Model B Benchmarking. |

| Deployment Configuration | For more information, see Test Topology for Model B Benchmarking. |

| NEF Version Tag | 24.2.0 |

| Target CPU | 70% |

| Number of NEF Pods for ME | 2 |

| ME Pod Profile | 4 vCPU and 4 Gi Memory |

| Traffic Distribution | 10% Subscription, 10% Unsubscription, and 80% Notifications |

Result and Observation

- The server used is Bulkhead, which is a shared bare-metal server.

- The global.cache.evict.time parameter is set to 19 hrs, a high value that avoids frequent calls from apd-agent in the Fivegcagent microservice to the apd-manager microservice.

- apdManager was not tuned because it is contacted only when the apd-agent cache at fivegc evicts, and the eviction time is now set to a higher value.

- Tuning the nrf-client pods is not required because nrf-client is contacted separately through apdmanager, outside the real-time subscription, unsubscription, and notification flow.

- Scaling the nrf-client pods is not required.

Table 3-7 Result and Observation

| Parameter | Values |

|---|---|

| Test Duration | 19 hrs |

| Replica | 2 |

Table 3-8 NEF Microservices and their Utilization

| Resources | Subscription | Unsubscription | Notification | Total |

|---|---|---|---|---|

| Average CPU | NA | NA | NA | ~65% |

| Total TPS | 250 | 250 | 2000 | 2500 |

| Average Latency | 100.5 ms | 1532 ms | 97.3 ms | NA |

| Average Memory | NA | NA | NA | 1.7 GB |

| Max Memory | NA | NA | NA | 1.9 GB |

3.2.1.2 Quality of Service Testcases

In network deployments, operators have the requirement to offer services of a certain quality. The quality of service depends on different parameters, such as the availability of a link, the number of bit errors, network latency, and jitter. There are scenarios when operators need to provide different quality of services to different types of subscribers or UEs.

NEF enables the operators to manage the QoS using a set of parameters related to the traffic performance on networks. It also provides the capability to set up different QoS standards for different UE sessions based on the service requirements and other specifications. To perform this functionality, the NEF QoS service communicates with Policy Control Function (PCF) to set up, modify, and revoke an AF session with the required QoS.

The AF session with the QoS service feature allows AF to request a data session for a UE with a specific QoS.

- Set up an AF session with the required QoS

- Get the QoS session details for an AF

- Delete an AF session with QoS

- Receive the QoS notifications, such as QoS Monitoring or Usage reports from the PCF and forward them to the subscribed AF

To set up AF sessions with QoS, OCNEF invokes the PCF that is responsible for controlling the privacy checking of the target subscriber. The invoked PCF authorizes the subscription or notification request, performs the required operation, and sends responses to NEF. NEF exposes the information to the AF as and when it is received from the PCF.
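The four AF session operations listed above (set up, get, delete, and forward notifications) can be pictured with a small in-memory model. This is only a sketch: `QosSessionStore`, its method names, the session ID format, and the example QoS reference are hypothetical and do not represent the NEF or PCF APIs.

```python
import itertools


class QosSessionStore:
    """Hypothetical in-memory model of AF sessions with QoS; not the NEF API."""

    def __init__(self):
        self._sessions = {}
        self._ids = itertools.count(1)

    def create(self, af_id, ue_id, qos_reference):
        # Set up an AF session with the required QoS.
        session_id = f"sess-{next(self._ids)}"
        self._sessions[session_id] = {
            "af_id": af_id,
            "ue_id": ue_id,
            "qos_reference": qos_reference,
            "notifications": [],
        }
        return session_id

    def get(self, session_id):
        # Get the QoS session details for an AF.
        return self._sessions[session_id]

    def delete(self, session_id):
        # Delete an AF session with QoS.
        del self._sessions[session_id]

    def notify(self, session_id, event):
        # Record a notification from the PCF and return the AF to forward it to.
        session = self._sessions[session_id]
        session["notifications"].append(event)
        return session["af_id"]


store = QosSessionStore()
sid = store.create("af-1", "ue-42", "qos-ref-gold")
target_af = store.notify(sid, "USAGE_REPORT")
print(sid, target_af, store.get(sid)["notifications"])
store.delete(sid)
```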

The following subsections provide information about quality of service test scenarios considered to perform benchmark tests.

3.2.1.2.1 Single Site

This section provides information about the configuration, parameters, and Quality of Service Model B feature test scenarios for a single site.

Deployment Configuration

Table 3-9 Deployment Configuration

| Microservice | Replica | CPU | Memory |

|---|---|---|---|

| NEF Ingress Gateway | 4 | 4 | 4Gi |

| NEF Egress Gateway | 4 | 4 | 4Gi |

| API Router | 4 | 4 | 4Gi |

| ME | 2 | 4 | 4Gi |

| TI | 2 | 4 | 4Gi |

| QOS | 2 | 4 | 4Gi |

| Expiry Auditor | 2 | 4 | 4Gi |

| FiveGC Agent | 4 | 4 | 4Gi |

| FiveGC Egress | 4 | 4 | 4Gi |

| FiveGC Ingress | 4 | 4 | 4Gi |

| Apd Manager | 2 | 4 | 4Gi |

| nrf-client-nfmanagement | 1 | 1 | 1Gi |

| nrf-client-nfdiscovery | 1 | 1 | 1Gi |

| performance | 2 | 200m | 1Gi |

| Config-server | 1 | 1 | 1Gi |

| app-info | 1 | 200m | 1Gi |

Note:

The VAR value for microservice in each scenario is based on the corresponding Replica value.

Table 3-10 Stub Parameters

| Stub Parameter | Replica | CPU | Memory |

|---|---|---|---|

| GMLC | 3 | 1 | 1Gi |

| UDM | 3 | 1 | 1Gi |

| AF | 3 | 1 | 1Gi |

| PCF | 3 | 1 | 1Gi |

| BSF | 3 | 1 | 1Gi |

| NRF | 1 | 1 | 1Gi |

| UDR | 3 | 1 | 1Gi |

3.2.1.2.1.1 Quality of Service Test Scenario 1

This test scenario describes transactions per second when CPU average utilization reaches around 70%.

Objective

To determine average TPS and average latency at 70% CPU utilization that Subscription, Unsubscription, and Notification endpoints can handle with two replicas of applicable services for PCF requests with BSF enabled.

Table 3-11 Quality of Service Testcase Parameters

| Input Parameter Details | Configuration Values |

|---|---|

| Cluster | For more information, see CNE Cluster Details. |

| Topology | For more information, see Test Topology for Model B Benchmarking. |

| Deployment Configuration | For more information, see Test Topology for Model B Benchmarking. |

| NEF Version Tag | 24.2.0 |

| Target CPU | 70% |

| Number of NEF Pods for QoS | 2 |

| QoS Pod Profile | 4 vCPU and 4 Gi Memory |

| Traffic Distribution | 25% Subscription, 25% Unsubscription, and 50% Notification |

Result and Observation

- The server used is Bulkhead, which is a shared bare-metal server.

- The global.cache.evict.time parameter is set to 90 hrs, a high value that avoids frequent calls from apd-agent in the Fivegcagent microservice to the apd-manager microservice.

- apdManager was not tuned because it is contacted only when the apd-agent cache at fivegc evicts, and the eviction time is now set to a higher value.

- Tuning the nrf-client pods is not required because nrf-client is contacted separately through apdmanager, outside the real-time subscription, unsubscription, and notification flow.

- Scaling the nrf-client pods is not required.

Table 3-12 Result and Observation

| Parameter | Values |

|---|---|

| Test Duration | 90 hrs |

| Replica | 2 |

Table 3-13 NEF Microservices and their Utilization

| Resources | Subscription | Unsubscription | Notification | Total |

|---|---|---|---|---|

| Average CPU | NA | NA | NA | ~65% |

| Total TPS | 625 | 625 | 1250 | 2500 |

| Average Latency | 454.1 ms | 353.6 ms | 31.6 ms | NA |

| Average Memory | NA | NA | NA | 1.7 GB |

| Max Memory | NA | NA | NA | 1.9 GB |
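Table 3-13 reports latency per endpoint and gives NA for the total. If a single figure is wanted for comparison, one option is to weight each endpoint's average latency by its TPS. The sketch below is an illustration of that arithmetic only; it is not a measured result, and `weighted_mean_latency` is an assumed helper name.

```python
def weighted_mean_latency(samples):
    """Return the TPS-weighted mean of per-endpoint average latencies (ms)."""
    total_tps = sum(tps for tps, _ in samples)
    if total_tps == 0:
        raise ValueError("total TPS must be positive")
    return sum(tps * latency for tps, latency in samples) / total_tps


# (TPS, average latency in ms) per endpoint, copied from Table 3-13.
qos_scenario_1 = [(625, 454.1), (625, 353.6), (1250, 31.6)]
overall_ms = weighted_mean_latency(qos_scenario_1)
print(f"{overall_ms:.1f} ms")
```

For these inputs the weighted mean is roughly 217.7 ms. It is dominated by the slower Subscription and Unsubscription paths even though Notifications make up half of the traffic.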

3.2.1.2.1.2 Quality of Service Test Scenario 2

This test scenario describes transactions per second when CPU average utilization reaches around 70%.

Objective

To determine average TPS and average latency at 70% CPU utilization that Subscription, Unsubscription, and Notification endpoints can handle with two replicas of applicable services for PCF requests with BSF enabled.

Table 3-14 Quality of Service Testcase Parameters

| Input Parameter Details | Configuration Values |

|---|---|

| Cluster | For more information, see CNE Cluster Details. |

| Topology | For more information, see Test Topology for Model B Benchmarking. |

| Deployment Configuration | For more information, see Test Topology for Model B Benchmarking. |

| NEF Version Tag | 24.2.0 |

| Target CPU | 70% |

| Number of NEF Pods for QoS | 2 |

| QoS Pod Profile | 4 vCPU and 4 Gi Memory |

| Traffic Distribution | 25% Subscription, 25% Unsubscription, and 50% Notification |

Result and Observation

- The server used is Bulkhead, which is a shared bare-metal server.

- The global.cache.evict.time parameter is set to 19 hrs, a high value that avoids frequent calls from apd-agent in the Fivegcagent microservice to the apd-manager microservice.

- apdManager was not tuned because it is contacted only when the apd-agent cache at fivegc evicts, and the eviction time is now set to a higher value.

- Tuning the nrf-client pods is not required because nrf-client is contacted separately through apdmanager, outside the real-time subscription, unsubscription, and notification flow.

- Scaling the nrf-client pods is not required.

Table 3-15 Result and Observation

| Parameter | Values |

|---|---|

| Test Duration | 19 hrs |

| Replica | 2 |

Table 3-16 NEF Microservices and their Utilization

| Resources | Subscription | Unsubscription | Notification | Total |

|---|---|---|---|---|

| Average CPU | NA | NA | NA | ~61% |

| Total TPS | 625 | 625 | 1250 | 2500 |

| Average Latency | 451.4 ms | 360.4 ms | 46.8 ms | NA |

| Average Memory | NA | NA | NA | 1.65 GB |

| Max Memory | NA | NA | NA | 2 GB |

3.2.1.3 Traffic Influence Testcases

Application Function (AF) influence on traffic routing in NEF is supported through the Traffic Influence service. It allows an AF to send requests to NEF through the Traffic Influence APIs. Based on the availability of UE information in the AF request, NEF determines call flow towards 5G core NFs.

Traffic Influence is used by AFs to influence the routing decisions on the user plane traffic. It allows AF to decide on the routing profile and the route for data plane from UE to the network in a particular PDU session.

The following subsections provide information about traffic influence test scenarios considered to perform benchmark tests.

3.2.1.3.1 Single Site

This section provides information about the configuration, parameters, and Traffic Influence Model B feature test scenarios for a single site.

Deployment Configuration

Table 3-17 Deployment Configuration

| Microservice | Replica | CPU | Memory |

|---|---|---|---|

| NEF Ingress Gateway | 4 | 4 | 4Gi |

| NEF Egress Gateway | 4 | 4 | 4Gi |

| API Router | 4 | 4 | 4Gi |

| ME | 2 | 4 | 4Gi |

| TI | 2 | 4 | 4Gi |

| QOS | 2 | 4 | 4Gi |

| Expiry Auditor | 2 | 4 | 4Gi |

| FiveGC Agent | 4 | 4 | 4Gi |

| FiveGC Egress | 4 | 4 | 4Gi |

| FiveGC Ingress | 4 | 4 | 4Gi |

| Apd Manager | 2 | 4 | 4Gi |

| nrf-client-nfmanagement | 1 | 1 | 1Gi |

| nrf-client-nfdiscovery | 1 | 1 | 1Gi |

| performance | 2 | 200m | 1Gi |

| Config-server | 1 | 1 | 1Gi |

| app-info | 1 | 200m | 1Gi |

Note:

The VAR value for microservice in each scenario is based on the corresponding Replica value.

Table 3-18 Stub Parameters

| Stub Parameter | Replica | CPU | Memory |

|---|---|---|---|

| GMLC | 3 | 1 | 1Gi |

| UDM | 3 | 1 | 1Gi |

| AF | 3 | 1 | 1Gi |

| PCF | 3 | 1 | 1Gi |

| BSF | 3 | 1 | 1Gi |

| NRF | 1 | 1 | 1Gi |

| UDR | 3 | 1 | 1Gi |

3.2.1.3.1.1 Traffic Influence Test Scenario 1

This test scenario describes transactions per second when CPU average utilization reaches around 70%.

Objective

To determine average TPS and average latency at 70% CPU utilization that PCF or UDR Subscription, Unsubscription, and SMF Notification endpoints can handle with two replicas of applicable services for PCF requests with BSF enabled.

Table 3-19 Traffic Influence Testcase Parameters

| Input Parameter Details | Configuration Values |

|---|---|

| Cluster | For more information, see CNE Cluster Details. |

| Topology | For more information, see Test Topology for Model B Benchmarking. |

| Deployment Configuration | For more information, see Test Topology for Model B Benchmarking. |

| NEF Version Tag | 24.2.0 |

| Target CPU | 70% |

| Number of NEF Pods for TI | 2 |

| TI Pod Profile | 4 vCPU and 4 Gi Memory |

| Traffic Distribution | 25% Subscription, 25% Unsubscription, and 50% Notifications |

Result and Observation

- The server used is Bulkhead, which is a shared bare-metal server.

- The global.cache.evict.time parameter is set to 90 hrs, a high value that avoids frequent calls from apd-agent in the Fivegcagent microservice to the apd-manager microservice.

- apdManager was not tuned because it is contacted only when the apd-agent cache at fivegc evicts, and the eviction time is now set to a higher value.

- Tuning the nrf-client pods is not required because nrf-client is contacted separately through apdmanager, outside the real-time subscription, unsubscription, and notification flow.

- Scaling the nrf-client pods is not required.

Table 3-20 Result and Observation

| Parameter | Values |

|---|---|

| Test Duration | 90 hrs |

| Replica | 2 |

Table 3-21 NEF Microservices and their Utilization

| Resources | Subscription | Unsubscription | Notification | Total |

|---|---|---|---|---|

| Average CPU | NA | NA | NA | ~69% |

| Total TPS | 650 | 650 | 1250 | 2550 |

| Average Latency | 108.1 ms | 107.9 ms | 30.0 ms | NA |

| Average Memory | NA | NA | NA | 1.8 GB |

| Max Memory | NA | NA | NA | 1.9 GB |

3.2.1.3.1.2 Traffic Influence Test Scenario 2

This test scenario describes transactions per second when CPU average utilization reaches around 70%.

Objective

To determine average TPS and average latency at 70% CPU utilization that PCF or UDR Subscription, Unsubscription, and SMF Notification endpoints can handle with two replicas of applicable services for PCF requests with BSF enabled.

Table 3-22 Traffic Influence Testcase Parameters

| Input Parameter Details | Configuration Values |

|---|---|

| Cluster | For more information, see CNE Cluster Details. |

| Topology | For more information, see Test Topology for Model B Benchmarking. |

| Deployment Configuration | For more information, see Test Topology for Model B Benchmarking. |

| NEF Version Tag | 24.2.0 |

| Target CPU | 70% |

| Number of NEF Pods for TI | 2 |

| TI Pod Profile | 4 vCPU and 4 Gi Memory |

| Traffic Distribution | 25% Subscription, 25% Unsubscription, and 50% Notifications |

Result and Observation

- The server used is Bulkhead, which is a shared bare-metal server.

- The global.cache.evict.time parameter is set to 19 hrs, a high value that avoids frequent calls from apd-agent in the Fivegcagent microservice to the apd-manager microservice.

- apdManager was not tuned because it is contacted only when the apd-agent cache at fivegc evicts, and the eviction time is now set to a higher value.

- Tuning the nrf-client pods is not required because nrf-client is contacted separately through apdmanager, outside the real-time subscription, unsubscription, and notification flow.

- Scaling the nrf-client pods is not required.

Table 3-23 Result and Observation

| Parameter | Values |

|---|---|

| Test Duration | 19 hrs |

| Replica | 2 |

Table 3-24 NEF Microservices and their Utilization

| Resources | Subscription | Unsubscription | Notification | Total |

|---|---|---|---|---|

| Average CPU | NA | NA | NA | ~64% |

| Total TPS | 650 | 650 | 1250 | 2500 |

| Average Latency | 84.5 ms | 560 ms | 90.6 ms | NA |

| Average Memory | NA | NA | NA | 1.78 GB |

| Max Memory | NA | NA | NA | 1.9 GB |

3.2.1.4 Device Trigger Testcases

Using Device Triggering, applications notify User Equipment (UE) to perform application-specific tasks such as establishing communication with applications, changing device settings, and so on. Device triggering is required when an IP address for the UE is unavailable or unreachable by an application.

This mechanism enhances NEF functionality by introducing new APIs that allow AFs to remotely trigger specific actions on devices within a 5G network.

The Device Trigger feature enables an Application Function (AF) to notify a particular User Equipment (UE) by sending a device trigger request through the 5G core (5GC) to perform application-specific tasks such as initiating communication with the AF. This is required when the AF does not have the IP address of the UE or when the UE is not reachable. The device trigger request includes the information required for an AF to send a message to the correct UE and to route the message to the required application. This information, which includes the message to the application and the UE information, is known as the Trigger payload.

The following subsections provide information about device trigger test scenarios considered to perform benchmark tests.

3.2.1.4.1 Single Site

This section provides information about the configuration, parameters, and Device Trigger Model B feature test scenarios for a single site.

Deployment Configuration

Table 3-25 Deployment Configuration

| Microservice | Replica | CPU | Memory |

|---|---|---|---|

| NEF Ingress Gateway | 4 | 4 | 4Gi |

| NEF Egress Gateway | 4 | 4 | 4Gi |

| Diameter Gateway | 4 | 4 | 4Gi |

| API Router | 4 | 4 | 4Gi |

| ME | 2 | 4 | 4Gi |

| DT | 2 | 4 | 4Gi |

| QOS | 2 | 4 | 4Gi |

| MSISDNLess Mo SMS | 2 | 4 | 4Gi |

| Expiry Auditor | 2 | 4 | 4Gi |

| FiveGC Agent | 4 | 4 | 4Gi |

| FiveGC Egress | 4 | 4 | 4Gi |

| FiveGC Ingress | 1 | 4 | 4Gi |

| Apd Manager | 2 | 4 | 4Gi |

| nrf-client-nfmanagement | 1 | 1 | 1Gi |

| nrf-client-nfdiscovery | 1 | 1 | 1Gi |

| performance | 2 | 200m | 1Gi |

| Config-server | 1 | 1 | 1Gi |

| app-info | 1 | 200m | 1Gi |

Note:

The VAR value for microservice in each scenario is based on the corresponding Replica value.

Table 3-26 Stub Parameters

| Stub Parameter | Replica | CPU | Memory |

|---|---|---|---|

| GMLC | 3 | 1 | 1Gi |

| UDM | 3 | 1 | 1Gi |

| AF | 3 | 1 | 1Gi |

| PCF | 3 | 1 | 1Gi |

| BSF | 3 | 1 | 1Gi |

| NRF | 1 | 1 | 1Gi |

| UDR | 3 | 1 | 1Gi |

| DIAM | 3 | 1 | 1Gi |

3.2.1.4.1.1 Device Trigger Test Scenario 1

This test scenario describes transactions per second when CPU average utilization reaches around 70%.

Objective

To determine average TPS and average latency at 70% CPU utilization that Subscription, Unsubscription, and Notification endpoints can handle with two replicas of applicable services for PCF requests with BSF enabled.

Table 3-27 Device Trigger Testcase Parameters

| Input Parameter Details | Configuration Values |

|---|---|

| Cluster | For more information, see CNE Cluster Details. |

| Topology | For more information, see Test Topology for Model B Benchmarking. |

| Deployment Configuration | For more information, see Test Topology for Model B Benchmarking. |

| NEF Version Tag | 24.2.0 |

| Target CPU | 70% |

| Number of NEF Pods for DT | 2 |

| DT Pod Profile | 4 vCPU and 4 Gi Memory |

| Traffic Distribution | 25% Subscription, 25% Unsubscription, and 50% Notification |

Result and Observation

- The server used is Bulkhead, which is a shared VMware server.

- The global.cache.evict.time parameter is set to 90 hrs, a high value that avoids frequent calls from apd-agent in the Fivegcagent microservice to the apd-manager microservice.

- apdManager was not tuned because it is contacted only when the apd-agent cache at fivegc evicts, and the eviction time is now set to a higher value.

- Tuning the nrf-client pods is not required because nrf-client is contacted separately through apdmanager, outside the real-time subscription, unsubscription, and notification flow.

- Scaling the nrf-client pods is not required.

Table 3-28 Result and Observation

| Parameter | Values |

|---|---|

| Test Duration | 90 hrs |

| Replica | 2 |

Table 3-29 NEF Microservices and their Utilization

| Resources | Subscription | Unsubscription | Notification | Total |

|---|---|---|---|---|

| Average CPU | NA | NA | NA | ~68% |

| Total TPS | 650 | 650 | 1300 | 2600 |

| Average Latency | 78.8 ms | 79.8 ms | 23.6 ms | NA |

| Average Memory | NA | NA | NA | 1.6 GB |

| Max Memory | NA | NA | NA | 1.9 GB |

3.2.1.4.1.2 Device Trigger Test Scenario 2

This test scenario describes transactions per second when CPU average utilization reaches around 70%.

Objective

To determine average TPS and average latency at 70% CPU utilization that Subscription, Unsubscription, and Notification endpoints can handle with two replicas of applicable services for PCF requests with BSF enabled.

Table 3-30 Device Trigger Testcase Parameters

| Input Parameter Details | Configuration Values |

|---|---|

| Cluster | For more information, see CNE Cluster Details. |

| Topology | For more information, see Test Topology for Model B Benchmarking. |

| Deployment Configuration | For more information, see Test Topology for Model B Benchmarking. |

| NEF Version Tag | 24.2.0 |

| Target CPU | 70% |

| Number of NEF Pods for DT | 2 |

| DT Pod Profile | 4 vCPU and 4 Gi Memory |

| Traffic Distribution | 25% Subscription, 25% Unsubscription, and 50% Notification |

Result and Observation

- The server used is Bulkhead, which is a shared VMware server.

- The global.cache.evict.time parameter is set to 19 hrs, a high value that avoids frequent calls from apd-agent in the Fivegcagent microservice to the apd-manager microservice.

- apdManager was not tuned because it is contacted only when the apd-agent cache at fivegc evicts, and the eviction time is now set to a higher value.

- Tuning the nrf-client pods is not required because nrf-client is contacted separately through apdmanager, outside the real-time subscription, unsubscription, and notification flow.

- Scaling the nrf-client pods is not required.

Table 3-31 Result and Observation

| Parameter | Values |

|---|---|

| Test Duration | 19 hrs |

| Replica | 2 |

Table 3-32 NEF Microservices and their Utilization

| Resources | Subscription | Unsubscription | Notification | Total |

|---|---|---|---|---|

| Average CPU | NA | NA | NA | ~67% |

| Total TPS | 650 | 650 | 1300 | 2600 |

| Average Latency | 215.7 ms | 210.3 ms | 24.8 ms | NA |

| Average Memory | NA | NA | NA | 1.65 GB |

| Max Memory | NA | NA | NA | 1.8 GB |

3.2.1.5 MSISDN-Less MO SMS Testcases

The MSISDN-Less MO SMS feature enables NEF to deliver the MSISDN-less MO-SMS notification message from the Short Message Service - Service Center (SMS-SC) to the Application Function (AF).

With this feature, User Equipment (UE) can send messages to the AF without using a Mobile Station International Subscriber Directory Number (MSISDN) through the T4 interface, which is an interface between SMS-SC and NEF. NEF uses the Nnef_MSISDN-Less-MO-SMS API to send UE messages to the AF.

The following subsections provide information about MSISDN-Less MO SMS test scenarios considered to perform benchmark tests.

3.2.1.5.1 Single Site

This section provides information about the configuration, parameters, and MSISDN-Less MO SMS Model B feature test scenarios for a single site.

Deployment Configuration

Table 3-33 Deployment Configuration

| Microservice | Replica | CPU | Memory |

|---|---|---|---|

| NEF Ingress Gateway | 4 | 4 | 4Gi |

| NEF Egress Gateway | 4 | 4 | 4Gi |

| Diameter Gateway | 4 | 4 | 4Gi |

| API Router | 4 | 4 | 4Gi |

| ME | 2 | 4 | 4Gi |

| DT | 2 | 4 | 4Gi |

| QOS | 2 | 4 | 4Gi |

| MSISDNLess Mo SMS | 2 | 4 | 4Gi |

| Expiry Auditor | 2 | 4 | 4Gi |

| FiveGC Agent | 4 | 4 | 4Gi |

| FiveGC Egress | 4 | 4 | 4Gi |

| FiveGC Ingress | 1 | 4 | 4Gi |

| Apd Manager | 2 | 4 | 4Gi |

| nrf-client-nfmanagement | 1 | 1 | 1Gi |

| nrf-client-nfdiscovery | 1 | 1 | 1Gi |

| performance | 2 | 200m | 1Gi |

| Config-server | 1 | 1 | 1Gi |

| app-info | 1 | 200m | 1Gi |

Note:

The VAR value for microservice in each scenario is based on the corresponding Replica value.

Table 3-34 Stub Parameters

| Stub Parameter | Replica | CPU | Memory |

|---|---|---|---|

| GMLC | 3 | 1 | 1Gi |

| UDM | 3 | 1 | 1Gi |

| AF | 3 | 1 | 1Gi |

| PCF | 3 | 1 | 1Gi |

| BSF | 3 | 1 | 1Gi |

| NRF | 1 | 1 | 1Gi |

| UDR | 3 | 1 | 1Gi |

| DIAM | 3 | 1 | 1Gi |

3.2.1.5.1.1 MSISDN-Less-MO-SMS Test Scenario 1

This test scenario describes transactions per second when CPU average utilization reaches around 70%.

Objective

To determine the average TPS and average latency that the Notification endpoint can handle at 70% CPU utilization.

Table 3-35 MSISDN-Less-MO-SMS Testcase Parameters

| Input Parameter Details | Configuration Values |

|---|---|

| Cluster | For more information, see CNE Cluster Details. |

| Topology | For more information, see Test Topology for Model B Benchmarking. |

| Deployment Configuration | For more information, see Test Topology for Model B Benchmarking. |

| NEF Version Tag | 24.2.0 |

| Target CPU | 70% |

| Number of NEF Pods for MSISDN-Less-MO-SMS | 2 |

| MSISDN-Less-MO-SMS Pod Profile | 4 vCPU and 4 Gi Memory |

| Traffic Distribution | 100% Notification |

Result and Observation

- The server used is Bulkhead, which is a shared VMware server.

- The global.cache.evict.time parameter is set to 90 hrs, a high value that avoids frequent calls from apd-agent in the Fivegcagent microservice to the apd-manager microservice.

- apdManager was not tuned because it is contacted only when the apd-agent cache at fivegc evicts, and the eviction time is now set to a higher value.

- Tuning the nrf-client pods is not required because nrf-client is contacted separately through apdmanager, outside the real-time notification flow.

- Scaling the nrf-client pods is not required.

Table 3-36 Result and Observation

| Parameter | Values |

|---|---|

| Test Duration | 90 hrs |

| Replica | 2 |

Table 3-37 NEF Microservices and their Utilization

| Resources | Notification | Total |

|---|---|---|

| Average CPU | NA | ~55% |

| Total TPS | 6000 | 6000 |

| Average Latency | 10 ms | 9.4 ms |

| Average Memory | NA | 1.5 GB |

| Max Memory | NA | 1.75 GB |
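Two derived figures are often useful when reading results such as Tables 3-36 and 3-37: throughput per replica and the total number of transactions over the run. The sketch below computes both. It assumes the reported TPS was sustained evenly across replicas and for the whole test duration, which the source does not state; the helper names are illustrative.

```python
def tps_per_replica(total_tps, replicas):
    """Average TPS handled by each replica, assuming an even spread."""
    if replicas <= 0:
        raise ValueError("replicas must be positive")
    return total_tps / replicas


def total_transactions(total_tps, duration_hours):
    """Transactions processed if total_tps is sustained for duration_hours."""
    return total_tps * duration_hours * 3600


per_pod = tps_per_replica(6000, 2)
volume = total_transactions(6000, 90)
print(per_pod, volume)
```

For 6000 TPS on 2 replicas over 90 hrs, this gives 3000 TPS per replica and 1,944,000,000 transactions under the stated assumptions.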

3.2.1.5.1.2 MSISDN-Less-MO-SMS Test Scenario 2

This test scenario describes transactions per second when CPU average utilization reaches around 70%.

Objective

To determine the average TPS and average latency that the Notification endpoint can handle at 70% CPU utilization.

Table 3-38 MSISDN-Less-MO-SMS Testcase Parameters

| Input Parameter Details | Configuration Values |

|---|---|

| Cluster | For more information, see CNE Cluster Details. |

| Topology | For more information, see Test Topology for Model B Benchmarking. |

| Deployment Configuration | For more information, see Test Topology for Model B Benchmarking. |

| NEF Version Tag | 24.2.0 |

| Target CPU | 70% |

| Number of NEF Pods for MSISDN-Less-MO-SMS | 2 |

| MSISDN-Less-MO-SMS Pod Profile | 4 vCPU and 4 Gi Memory |

| Traffic Distribution | 100% Notification |

Result and Observation

- The server used is Bulkhead, which is a shared VMware server.

- The global.cache.evict.time parameter is set to 19 hrs, a high value that avoids frequent calls from apd-agent in the Fivegcagent microservice to the apd-manager microservice.

- apdManager was not tuned because it is contacted only when the apd-agent cache at fivegc evicts, and the eviction time is now set to a higher value.

- Tuning the nrf-client pods is not required because nrf-client is contacted separately through apdmanager, outside the real-time notification flow.

- Scaling the nrf-client pods is not required.

Table 3-39 Result and Observation

| Parameter | Values |

|---|---|

| Test Duration | 19 hrs |

| Replica | 2 |

Table 3-40 NEF Microservices and their Utilization

| Resources | Notification | Total |

|---|---|---|

| Average CPU | NA | ~68% |

| Total TPS | 6000 | 6000 |

| Average Latency | 9.1 ms | 12.9 ms |

| Average Memory | NA | 1.64 GB |

| Max Memory | NA | 1.66 GB |