7 Fault Recovery

Note:

The fault recovery procedures can be used by Oracle customers as long as Oracle Customer Service personnel are involved or consulted.

7.1 Restoring Single Node Failure

This section provides fault recovery procedures for restoring a single MySQL Network Database (NDB) cluster node failure (Management node, Data node, appSQL (non-georeplication) node, or SQL (georeplication) node) in a Cloud Native Database Tier (cnDBTier) environment.

Prerequisites

- The fault recovery procedures described in this section can be applied to any of the following scenarios. For example, consider a MySQL NDB cluster with 3 management nodes, 4 data nodes (2 data node groups, each with 2 data nodes), and 2 SQL nodes:

- One of the management nodes fails, while others are all in a running state.

- Two management nodes fail, while others are all in a running state.

- One of the data nodes fails, while others are all in a running state.

- One of the SQL (georeplication) or appSQL (non-georeplication) nodes fails, while others are all in a running state.

- One management node, one data node, one SQL (georeplication) node, and one appSQL (non-georeplication) node fail, while others are all in a running state.

- One management node, one data node in each data node group, and one SQL (georeplication) or appSQL (non-georeplication) node fail, while others are all in a running state.

- Ensure that the Oracle Communications Cloud Native Environment (OCCNE) cluster has a running Bastion Host for MySQL NDB cluster restoration.

7.1.1 Restoring Single Database Node Failure

Following is the fault recovery procedure for restoring a single Cloud Native Database Tier (cnDBTier) cluster node failure (Management node, Data node, SQL (georeplication) node, or appSQL (non-georeplication) node) in the cnDBTier environment.

- Log in to the ndbmgmd-0 pod in Cluster1:

$ kubectl -n cluster1 exec -ti pod/ndbmgmd-0 -c mysqlndbcluster -- /bin/bash

- Log in to the NDB cluster management client:

[mysql@ndbmgmd-0 ~]$ ndb_mgm

- Run the following command to verify that all nodes are listed without any error message:

-- NDB Cluster -- Management Client --
ndb_mgm> show

Sample output:

-- NDB Cluster -- Management Client --
ndb_mgm> show
Connected to Management Server at: localhost:1186
Cluster Configuration
---------------------
[ndbd(NDB)] 4 node(s)
id=1 @10.233.116.101 (mysql-8.4.2 ndb-8.4.2, Nodegroup: 0, *)
id=2 @10.233.70.106 (mysql-8.4.2 ndb-8.4.2, Nodegroup: 0)
id=3 @10.233.96.102 (mysql-8.4.2 ndb-8.4.2, Nodegroup: 1)
id=4 @10.233.119.205 (mysql-8.4.2 ndb-8.4.2, Nodegroup: 1)

[ndb_mgmd(MGM)] 2 node(s)
id=49 @10.233.77.54 (mysql-8.4.2 ndb-8.4.2)
id=50 @10.233.112.126 (mysql-8.4.2 ndb-8.4.2)

[mysqld(API)] 8 node(s)
id=70 @10.233.90.189 (mysql-8.4.2 ndb-8.4.2)
id=71 @10.233.71.30 (mysql-8.4.2 ndb-8.4.2)
id=72 @10.233.118.58 (mysql-8.4.2 ndb-8.4.2)
id=73 @10.233.100.65 (mysql-8.4.2 ndb-8.4.2)
id=222 (not connected, accepting connect from any host)
id=223 (not connected, accepting connect from any host)
id=224 (not connected, accepting connect from any host)
id=225 (not connected, accepting connect from any host)
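The check above can also be scripted. The following is a minimal Bash sketch, not a cnDBTier tool. The function name find_disconnected_nodes is hypothetical. The sketch assumes that ndb_mgm reports a missing node as id=<n> (not connected, ...), as in the sample output. It prints the ID of every node that is not connected, except the empty georeplication recovery slots 222 to 225.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not shipped with cnDBTier).
# Reads `ndb_mgm -e show` output on stdin and prints, one per line, the IDs of
# nodes reported as "not connected", skipping the empty georeplication
# recovery slots 222-225 that can be ignored.
find_disconnected_nodes() {
  # grep -o prints every match separately, so output that has been flattened
  # onto one line is handled too. "|| true" keeps a clean exit status when
  # no node is disconnected.
  { grep -oE 'id=[0-9]+[[:space:]]+\(not connected' || true; } |
    sed -E 's/^id=([0-9]+).*/\1/' |
    awk '$1 < 222 || $1 > 225'
}
```

For example, after defining the function in a shell on the Bastion Host:

$ kubectl -n cluster1 exec ndbmgmd-0 -c mysqlndbcluster -- ndb_mgm -e show | find_disconnected_nodes

Empty output means that every node outside the reserved slots is connected.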

Note:

Node IDs 222 to 225 in the sample output are shown as "not connected" because they are added as empty slot IDs that are used for georeplication recovery. You can ignore these node IDs.

Note:

For appSQL nodes that are not part of georeplication, follow the procedure only up to step 3.

The following recovery scenario is an example of a data node pod failure with a corrupted PVC:

- Delete the corrupted PVC of the node that must be restored. In this example, the corrupted PVC belongs to the second data node pod:

Note:

Because the pod is not yet deleted, deleting the PVC with the following command freezes the session. To avoid this, open another terminal and continue with the following steps.

$ kubectl -n <namespace> delete pvc pvc-ndbmtd-ndbmtd-<id>

where <namespace> is the namespace of the failed data node and <id> is the index of the failed data node pod (0, 1, 2, or 3 in this example).

For example:

$ kubectl -n cluster1 delete pvc pvc-ndbmtd-ndbmtd-1

Sample output:

persistentvolumeclaim "pvc-ndbmtd-ndbmtd-1" deleted

- Delete the corrupted pod of the node that must be restored, for example, the second data node pod:

$ kubectl -n <namespace> delete pod/ndbmtd-<id>

For example:

$ kubectl -n cluster1 delete pod/ndbmtd-1

Sample output:

pod "ndbmtd-1" deleted

This step may take some time to complete. After the command exits without errors, the pod is in the Pending state and its PVC is not created automatically. This is because the HPA tries to create the pod without recreating the PVC.

$ kubectl get pod -n cluster1

Sample output:

NAME READY STATUS RESTARTS AGE
pod/mysql-cluster-cluster1-cluster2-replication-svc-7b5cb67c9fqd7b4 1/1 Running 0 36m
pod/mysql-cluster-cluster1-cluster3-replication-svc-86445d8cb42pz5x 1/1 Running 0 2m9s
pod/mysql-cluster-db-monitor-svc-57d688947-bbqp7 1/1 Running 1 69m
pod/ndbmgmd-0 2/2 Running 0 69m
pod/ndbmgmd-1 2/2 Running 0 68m
pod/ndbmtd-0 3/3 Running 0 69m
pod/ndbmtd-1 3/3 Running 0 68m
pod/ndbmysqld-0 3/3 Running 0 69m
pod/ndbmysqld-1 3/3 Running 0 2m9s
pod/ndbmysqld-2 3/3 Running 0 68m
pod/ndbmysqld-3 3/3 Running 0 68m
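The two deletions above can also be performed from a single terminal. The following Bash sketch is an alternative to opening a second terminal; it is not the documented procedure. It passes --wait=false to kubectl delete pvc, so the command returns immediately. Kubernetes then keeps the PVC in the Terminating state until the pod that uses it is deleted.

The following parts of the sketch are assumptions:

- The function name replace_data_node_pvc is hypothetical.
- The KUBECTL override exists only to substitute a stub when testing.
- The PVC and pod names follow the pvc-ndbmtd-ndbmtd-<id> and ndbmtd-<id> patterns used above.

The sketch covers only the PVC deletion and the first pod deletion. You must still delete the pod again, as described in the next step.

```shell
#!/usr/bin/env bash
# Hypothetical helper: delete the corrupted PVC of data node ndbmtd-<id>,
# then delete the pod, without blocking on the PVC deletion.
replace_data_node_pvc() {
  local ns="$1" id="$2"
  local kubectl="${KUBECTL:-kubectl}"   # overridden only for testing

  # The pod index must be a non-negative integer (0 to 3 in the example).
  case "$id" in
    ''|*[!0-9]*) echo "invalid data node id: $id" >&2; return 1 ;;
  esac

  # --wait=false returns at once; Kubernetes keeps the PVC in the
  # Terminating state while a pod still uses it.
  "$kubectl" -n "$ns" delete pvc "pvc-ndbmtd-ndbmtd-${id}" --wait=false || return 1

  # Deleting the pod releases the PVC so that its deletion can complete.
  "$kubectl" -n "$ns" delete pod "ndbmtd-${id}"
}
```

For example:

$ replace_data_node_pvc cluster1 1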

- Delete the pod that you want to restore. After you delete the pod, Kubernetes brings it up successfully:

$ kubectl -n <namespace> delete pod/ndbmtd-<id>

For example:

$ kubectl -n cluster1 delete pod/ndbmtd-1

Sample output:

pod "ndbmtd-1" deleted

- Wait until the pod is up and running, and verify the status of the pod:

$ kubectl get pod -n cluster1

Sample output:

NAME READY STATUS RESTARTS AGE
pod/mysql-cluster-cluster1-cluster2-replication-svc-7b5cb67c9fqd7b4 1/1 Running 0 36m
pod/mysql-cluster-cluster1-cluster3-replication-svc-86445d8cb42pz5x 1/1 Running 0 2m9s
pod/mysql-cluster-db-monitor-svc-57d688947-bbqp7 1/1 Running 1 69m
pod/ndbmgmd-0 2/2 Running 0 69m
pod/ndbmgmd-1 2/2 Running 0 68m
pod/ndbmtd-0 3/3 Running 0 69m
pod/ndbmtd-1 3/3 Running 0 68m
pod/ndbmysqld-0 3/3 Running 0 69m
pod/ndbmysqld-1 3/3 Running 0 2m9s
pod/ndbmysqld-2 3/3 Running 0 68m
pod/ndbmysqld-3 3/3 Running 0 68m
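Instead of rerunning kubectl get pod manually, you can script the wait. In the following Bash sketch, the function names pod_is_ready and wait_for_pod are hypothetical. pod_is_ready assumes the tabular layout shown in the sample output: NAME, READY, and STATUS are the first three columns, with or without a pod/ prefix. wait_for_pod polls every 10 seconds, up to an assumed default of 60 attempts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `kubectl get pod` output on stdin and succeeds
# only if the named pod shows all containers ready (for example, 3/3) and
# the Running status.
pod_is_ready() {
  awk -v pod="$1" '
    { name = $1; sub(/^pod\//, "", name) }   # accept "ndbmtd-1" or "pod/ndbmtd-1"
    name == pod {
      found = 1
      split($2, ready, "/")                 # READY column, for example "3/3"
      ok = (ready[1] == ready[2] && $3 == "Running")
    }
    END { exit !(found && ok) }
  '
}

# Hypothetical wrapper: poll until the pod is ready or the retry limit
# (default 60 attempts, 10 seconds apart) is reached.
wait_for_pod() {
  local ns="$1" pod="$2" tries="${3:-60}" i=0
  while [ "$i" -lt "$tries" ]; do
    if kubectl get pod -n "$ns" | pod_is_ready "$pod"; then
      return 0
    fi
    i=$((i + 1))
    sleep 10
  done
  echo "pod $pod is not ready after $tries attempts" >&2
  return 1
}
```

For example:

$ wait_for_pod cluster1 ndbmtd-1

The standard command kubectl wait --for=condition=Ready pod/ndbmtd-1 -n cluster1 --timeout=10m performs a similar check based on the pod's Ready condition.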

- Perform the following steps to restore an SQL node after a failure:

If the restored SQL node was involved in active replication, try switching the active replication channel from the original standby SQL node to the newly restored SQL node, which is now on standby. Then verify that the replication channel is established successfully.

- Stop replication on the original standby SQL node so that the active replication channel switches over to the newly restored SQL node:

$ kubectl -n cluster1 exec -it ndbmysqld-1 -- mysql -h 127.0.0.1 -u<username> -p<password> -e "STOP REPLICA;"

For example:

$ kubectl -n cluster1 exec -it ndbmysqld-1 -- mysql -h 127.0.0.1 -uroot -pNextGenCne -e "STOP REPLICA;"

- Verify active replication on the newly restored SQL node:

$ kubectl -n cluster1 exec -it ndbmysqld-0 -- mysql -h127.0.0.1 -u<username> -p<password> -e "SHOW REPLICA STATUS\G"

Example:

$ kubectl -n cluster1 exec -it ndbmysqld-0 -- mysql -h127.0.0.1 -uroot -pNextGenCne -e "SHOW REPLICA STATUS\G"

Sample output:

Defaulted container "mysqlndbcluster" out of: mysqlndbcluster, init-sidecar
mysql: [Warning] Using a password on the command line interface can be insecure.
*************************** 1. row ***************************
Replica_IO_State: Waiting for source to send event
Source_Host: 10.75.180.77
Source_User: occnerepluser
Source_Port: 3306
Connect_Retry: 60
Source_Log_File: mysql-bin.000005
Read_Source_Log_Pos: 62625
Relay_Log_File: mysql-relay-bin.000002
Relay_Log_Pos: 2191
Relay_Source_Log_File: mysql-bin.000005
Replica_IO_Running: Yes
Replica_SQL_Running: Yes
Replicate_Do_DB:
Replicate_Ignore_DB:
Replicate_Do_Table:
Replicate_Ignore_Table:
Replicate_Wild_Do_Table:
Replicate_Wild_Ignore_Table:
Last_Errno: 0
Last_Error:
Skip_Counter: 0
Exec_Source_Log_Pos: 62625
Relay_Log_Space: 2401
Until_Condition: None
Until_Log_File:
Until_Log_Pos: 0
Source_SSL_Allowed: No
Source_SSL_CA_File:
Source_SSL_CA_Path:
Source_SSL_Cert:
Source_SSL_Cipher:
Source_SSL_Key:
Seconds_Behind_Source: 0
Source_SSL_Verify_Server_Cert: No
Last_IO_Errno: 0
Last_IO_Error:
Last_SQL_Errno: 0
Last_SQL_Error:
Replicate_Ignore_Server_Ids:
Source_Server_Id: 2000
Source_UUID: 89219509-8fce-11ec-89b7-56d8e6d44947
Source_Info_File: mysql.slave_master_info
SQL_Delay: 0
SQL_Remaining_Delay: NULL
Replica_SQL_Running_State: Replica has read all relay log; waiting for more updates
Source_Retry_Count: 86400
Source_Bind:
Last_IO_Error_Timestamp:
Last_SQL_Error_Timestamp:
Source_SSL_Crl:
Source_SSL_Crlpath:
Retrieved_Gtid_Set:
Executed_Gtid_Set:
Auto_Position: 0
Replicate_Rewrite_DB:
Channel_Name:
Source_TLS_Version:
Source_public_key_path:
Get_Source_public_key: 0
Network_Namespace:
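You can narrow the replication check to the two fields that show whether both replication threads are running. The following Bash sketch uses a hypothetical function, replica_is_healthy. It reads SHOW REPLICA STATUS\G output on standard input and succeeds only when Replica_IO_Running and Replica_SQL_Running are both Yes. It is an illustration, not a cnDBTier tool. It does not replace reviewing fields such as Last_IO_Error, Last_SQL_Error, and Seconds_Behind_Source.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `SHOW REPLICA STATUS\G` output on stdin and
# succeeds only if both replication threads report "Yes".
replica_is_healthy() {
  awk -F':' '
    {
      key = $1; val = $2
      gsub(/^[ \t]+|[ \t\r]+$/, "", key)   # \G output right-aligns field names
      gsub(/^[ \t]+|[ \t\r]+$/, "", val)   # also drops a trailing CR from a TTY
    }
    key == "Replica_IO_Running"  { io = val }
    key == "Replica_SQL_Running" { sql = val }
    END { exit !(io == "Yes" && sql == "Yes") }
  '
}
```

For example:

$ kubectl -n cluster1 exec ndbmysqld-0 -- mysql -h127.0.0.1 -u<username> -p<password> -e "SHOW REPLICA STATUS\G" | replica_is_healthy && echo "replication is running"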

Note:

For management nodes or SQL nodes, follow this procedure by replacing every occurrence of 'ndbmtd' in the commands with 'ndbmgmd' or 'ndbmysqld', respectively.

7.1.2 Recovering a Single Node Replication Service

This section provides the procedure to recover a single node replication service in case of PVC corruption.

- Perform the following steps to scale down the replication service pod:

- Run the following command to get the list of deployments in cluster1:

$ kubectl get deployment --namespace=cluster1

Sample output:

NAME READY UP-TO-DATE AVAILABLE AGE
mysql-cluster-cluster1-cluster2-replication-svc 1/1 1 1 11m
mysql-cluster-db-backup-manager-svc 1/1 1 1 11m
mysql-cluster-db-monitor-svc 1/1 1 1 11m

- Scale down the replication service of cluster1 with respect to cluster2:

$ kubectl scale deployment mysql-cluster-cluster1-cluster2-replication-svc --namespace=cluster1 --replicas=0

Sample output:

deployment.apps/mysql-cluster-cluster1-cluster2-replication-svc scaled
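You can combine the lookup and scale-down above into one step. In the following Bash sketch, the function name scale_down_deployments is hypothetical. It scales every deployment whose name matches an extended regular expression to zero replicas. It relies on kubectl get deployments -o name, which prints one deployment.apps/<name> entry per line without a header. The KUBECTL override exists only so that a stub can replace kubectl when testing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: scale every deployment in a namespace whose name
# matches an extended regular expression down to zero replicas.
scale_down_deployments() {
  local ns="$1" pattern="$2"
  local kubectl="${KUBECTL:-kubectl}"   # overridden only for testing
  local names d

  # "-o name" prints "deployment.apps/<name>" lines without a header row.
  names="$("$kubectl" -n "$ns" get deployments -o name)" || return 1

  for d in $(printf '%s\n' "$names" | grep -E -- "$pattern" || true); do
    "$kubectl" -n "$ns" scale "$d" --replicas=0 || return 1
  done
}
```

For example:

$ scale_down_deployments cluster1 'cluster1-cluster2-replication-svc'

A broader pattern such as 'replication-svc' matches the replication services toward every remote site. 'db-backup-manager-svc' matches the backup manager service that is scaled down before a restore.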


- Perform the following steps to delete the corrupted PVC of the db-replication-svc pod:

- Run the following command to get the corrupted PVC:

$ kubectl get pvc -n cluster1

Sample output:

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
pvc-cluster1-cluster2-replication-svc Bound pvc-4f1e7afa-724d-470a-b1a5-dbe1257e7a48 8Gi RWO occne-dbtier-sc 12m
pvc-ndbappmysqld-ndbappmysqld-0 Bound pvc-307f3d29-01d8-48a8-b580-15db8edc1121 2Gi RWO occne-dbtier-sc 12m
pvc-ndbappmysqld-ndbappmysqld-1 Bound pvc-358b8d50-b53c-427f-87a3-ac9c139581b6 2Gi RWO occne-dbtier-sc 10m
pvc-ndbmgmd-ndbmgmd-0 Bound pvc-965061dd-3d88-40fe-bf25-4260a61d0fa4 1Gi RWO occne-dbtier-sc 11m
pvc-ndbmgmd-ndbmgmd-1 Bound pvc-8601a9b3-769c-41ca-aeb8-c5b5725693e3 1Gi RWO occne-dbtier-sc 11m
pvc-ndbmtd-ndbmtd-0 Bound pvc-8bfab5e0-4a24-432f-98a4-a64080ccb33c 3Gi RWO occne-dbtier-sc 11m
pvc-ndbmtd-ndbmtd-1 Bound pvc-196f7e87-824c-46d0-b0c9-8aad56806a34 3Gi RWO occne-dbtier-sc 11m
pvc-ndbmysqld-ndbmysqld-0 Bound pvc-6ea3cd11-8cbe-4d2a-864d-baf7d22f4295 2Gi RWO occne-dbtier-sc 11m
pvc-ndbmysqld-ndbmysqld-1 Bound pvc-c978f6de-0477-4a68-8ebe-3bcb899d27bb 2Gi RWO occne-dbtier-sc 10m

- Run the following command to delete the corrupted PVC:

$ kubectl delete pvc pvc-cluster1-cluster2-replication-svc -n cluster1

Sample output:

persistentvolumeclaim "pvc-cluster1-cluster2-replication-svc" deleted


- Perform the following steps to check if the corrupted PVC is deleted:

- Get the list of PVCs in cluster1 and verify that the deleted PVC is not present in the output:

$ kubectl get pvc -n cluster1

Sample output:

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
pvc-ndbappmysqld-ndbappmysqld-0 Bound pvc-307f3d29-01d8-48a8-b580-15db8edc1121 2Gi RWO occne-dbtier-sc 12m
pvc-ndbappmysqld-ndbappmysqld-1 Bound pvc-358b8d50-b53c-427f-87a3-ac9c139581b6 2Gi RWO occne-dbtier-sc 11m
pvc-ndbmgmd-ndbmgmd-0 Bound pvc-965061dd-3d88-40fe-bf25-4260a61d0fa4 1Gi RWO occne-dbtier-sc 12m
pvc-ndbmgmd-ndbmgmd-1 Bound pvc-8601a9b3-769c-41ca-aeb8-c5b5725693e3 1Gi RWO occne-dbtier-sc 12m
pvc-ndbmtd-ndbmtd-0 Bound pvc-8bfab5e0-4a24-432f-98a4-a64080ccb33c 3Gi RWO occne-dbtier-sc 12m
pvc-ndbmtd-ndbmtd-1 Bound pvc-196f7e87-824c-46d0-b0c9-8aad56806a34 3Gi RWO occne-dbtier-sc 12m
pvc-ndbmysqld-ndbmysqld-0 Bound pvc-6ea3cd11-8cbe-4d2a-864d-baf7d22f4295 2Gi RWO occne-dbtier-sc 12m
pvc-ndbmysqld-ndbmysqld-1 Bound pvc-c978f6de-0477-4a68-8ebe-3bcb899d27bb 2Gi RWO occne-dbtier-sc 10m

- Run the following command to get the PV details and verify that the PV associated with the corrupted PVC is not present in the output:

$ kubectl get pv | grep cluster1 | grep repl

Sample output:

pvc-ecc6d691-ca31-41c3-930c-c092d73452e8 8Gi RWO Delete Bound cluster2/pvc-cluster2-cluster1-replication-svc occne-dbtier-sc 10m

The only PV in this output is claimed by the cluster2 replication service PVC (cluster2/pvc-cluster2-cluster1-replication-svc). No PV is claimed by cluster1/pvc-cluster1-cluster2-replication-svc.
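You can express both checks as small filters over the command output. In the following Bash sketch, the function names are hypothetical:

- pvc_exists assumes that the PVC name is the first column of kubectl get pvc output.
- pv_claimed_by assumes that the CLAIM column of kubectl get pv uses the <namespace>/<pvc name> form shown in the sample output. It scans every column rather than relying on a fixed column position.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a PVC with the given name appears in the
# NAME column of `kubectl get pvc` output read from stdin.
pvc_exists() {
  awk -v pvc="$1" '$1 == pvc { found = 1 } END { exit !found }'
}

# Hypothetical helper: succeed if any PV in `kubectl get pv` output read
# from stdin is still claimed by <namespace>/<pvc>.
pv_claimed_by() {
  awk -v claim="$1/$2" '
    { for (i = 1; i <= NF; i++) if ($i == claim) found = 1 }
    END { exit !found }
  '
}
```

For example:

$ kubectl get pvc -n cluster1 | pvc_exists pvc-cluster1-cluster2-replication-svc || echo "PVC deleted"
$ kubectl get pv | pv_claimed_by cluster1 pvc-cluster1-cluster2-replication-svc || echo "PV released"

Assuming that the recreated PVC keeps the same name, pvc_exists can also confirm that the PVC is created again after the Helm upgrade.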


- Run the following command to upgrade cnDBTier with the modified custom_values.yaml file:

$ helm upgrade mysql-cluster occndbtier -f occndbtier/custom_values.yaml -n cluster1

When the upgrade is complete, the new db-replication-svc pod comes up with the new PVC.

- Perform a Helm test to ensure that all the cnDBTier services are running smoothly:

$ helm test mysql-cluster -n ${OCCNE_NAMESPACE}

Sample output:

NAME: mysql-cluster
LAST DEPLOYED: Mon May 20 09:07:56 2024
NAMESPACE: cluster1
STATUS: deployed
REVISION: 2
TEST SUITE: mysql-cluster-node-connection-test
Last Started: Mon May 20 09:15:39 2024
Last Completed: Mon May 20 09:16:16 2024
Phase: Succeeded

Check the status of cnDBTier Cluster1 by running the following command:

$ kubectl -n ${OCCNE_NAMESPACE} exec -it ndbmgmd-0 -- ndb_mgm -e show

Sample output:

Defaulted container "mysqlndbcluster" out of: mysqlndbcluster, db-infra-monitor-svc
Connected to Management Server at: localhost:1186
Cluster Configuration
---------------------
[ndbd(NDB)] 2 node(s)
id=1 @10.233.74.160 (mysql-8.4.2 ndb-8.4.2, Nodegroup: 0, *)
id=2 @10.233.79.154 (mysql-8.4.2 ndb-8.4.2, Nodegroup: 0)

[ndb_mgmd(MGM)] 2 node(s)
id=49 @10.233.78.169 (mysql-8.4.2 ndb-8.4.2)
id=50 @10.233.102.220 (mysql-8.4.2 ndb-8.4.2)

[mysqld(API)] 8 node(s)
id=56 @10.233.72.151 (mysql-8.4.2 ndb-8.4.2)
id=57 @10.233.84.206 (mysql-8.4.2 ndb-8.4.2)
id=70 @10.233.79.153 (mysql-8.4.2 ndb-8.4.2)
id=71 @10.233.73.138 (mysql-8.4.2 ndb-8.4.2)
id=222 (not connected, accepting connect from any host)
id=223 (not connected, accepting connect from any host)
id=224 (not connected, accepting connect from any host)
id=225 (not connected, accepting connect from any host)

7.2 Restoring Database From Backup

This section provides the procedure to restore the database from a backup by using the ndb_restore utility.

7.2.1 Restoring Database from Backup with ndb_restore

This procedure restores the database nodes of a cnDBTier cluster from backup with MySQL ndb_restore utility.

Note:

- To restore a single-site cnDBTier cluster deployment, download the NDB backup to the Bastion Host. If the backup is already downloaded to the Bastion Host, you can use the same backup to restore the cnDBTier cluster in case of fatal errors.

- The cnDBTier backup that is used for the restore must be taken using the same cnDBTier version as that of the cnDBTier site that is being restored.

- To restore failed cnDBTier clusters in two-site, three-site, and four-site cnDBTier deployment models, perform the procedures described in the Restoring Georeplication (GR) Failure section.

- db-backup-manager-svc is designed to restart automatically in case of errors. Therefore, when db-backup-manager-svc encounters a temporary error during the georeplication recovery process, it may restart several times. When cnDBTier reaches a stable state, the db-backup-manager-svc pod operates normally without any further restarts.

- You can locate the scripts used in this section in the following location:

<path where CSAR package of cnDBTier is extracted>/Artifacts/Scripts/dr-procedure.

Downloading the Latest DB Backup Before Restoration

Create a database backup of cnDBTier Cluster1. If cnDBTier is up, copy the backup directories and files to a local directory on your Bastion Host. For more information on how to create a backup, see Creating On-demand Database Backup.

- Check whether the backup that must be downloaded to the Bastion Host already exists in the cnDBTier cluster:

$ kubectl -n <namespace of cnDBTier cluster> exec -it ndbmtd-0 -- ls -lrt /var/ndbbackup/dbback/BACKUP

Example:

$ kubectl -n cluster1 exec -it ndbmtd-0 -- ls -lrt /var/ndbbackup/dbback/BACKUP

Sample output:

Defaulting container name to mysqlndbcluster.
Use 'kubectl describe pod/ndbmtd-0 -n cluster1' to see all of the containers in this pod.
total 8
drwxr-sr-x. 6 mysql mysql 4096 Feb 19 17:50 BACKUP-217221233
drwxr-s---. 6 mysql mysql 4096 Feb 19 18:33 BACKUP-217221332

- Download the backup from the cnDBTier cluster data nodes to the Bastion Host.

- Run the following command to download the backup files to the Bastion Host and compress them into a single tarball. Select the backup ID from step 1 that must be downloaded.

$ SKIP_NDB_APPLY_STATUS=1 CNDBTIER_NAMESPACE=<namespace of cndbtier cluster on which backup is created> BACKUP_DIR=<path where backup files are copied> DATA_NODE_COUNT=<ndb data node count> BACKUP_ID=<backup id> BACKUP_ENCRYPTION_ENABLE=false ./download_backup.sh <backup tar ball path>

Example:

$ SKIP_NDB_APPLY_STATUS=1 CNDBTIER_NAMESPACE=cluster1 BACKUP_DIR=/var/ndbbackup DATA_NODE_COUNT=4 BACKUP_ID=217221233 BACKUP_ENCRYPTION_ENABLE=false ./download_backup.sh backup_217221233.tar.gz

Sample output:

Defaulting container name to mysqlndbcluster.
tar: Removing leading `/' from member names
Defaulting container name to mysqlndbcluster.
tar: Removing leading `/' from member names
Defaulting container name to mysqlndbcluster.
tar: Removing leading `/' from member names
Defaulting container name to mysqlndbcluster.
tar: Removing leading `/' from member names
./ ./ndbmtd-0/ ./ndbmtd-0/BACKUP-217221233/ ./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/ ./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233.1.log ./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233-0.1.Data ./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233.1.ctl ./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/ ./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233.1.log ./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233-0.1.Data ./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233.1.ctl ./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/ ./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233.1.log ./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233-0.1.Data ./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233.1.ctl ./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/ ./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233.1.log 
./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233-0.1.Data ./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233.1.ctl ./ndbmtd-1/ ./ndbmtd-1/BACKUP-217221233/ ./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/ ./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233.2.log ./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233-0.2.Data ./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233.2.ctl ./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/ ./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233.2.log ./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233-0.2.Data ./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233.2.ctl ./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/ ./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233.2.log ./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233-0.2.Data ./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233.2.ctl ./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/ ./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233.2.log ./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233-0.2.Data ./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233.2.ctl ./ndbmtd-2/ ./ndbmtd-2/BACKUP-217221233/ ./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/ ./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233.3.ctl ./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233.3.log ./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233-0.3.Data ./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/ ./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233.3.ctl ./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233.3.log 
./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233-0.3.Data ./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/ ./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233.3.ctl ./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233.3.log ./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233-0.3.Data ./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/ ./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233.3.ctl ./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233.3.log ./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233-0.3.Data ./ndbmtd-3/ ./ndbmtd-3/BACKUP-217221233/ ./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/ ./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233-0.4.Data ./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233.4.log ./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233.4.ctl ./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/ ./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233-0.4.Data ./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233.4.log ./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233.4.ctl ./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/ ./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233-0.4.Data ./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233.4.log ./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233.4.ctl ./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/ ./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233-0.4.Data ./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233.4.log ./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233.4.ctl Cluster backup 217221233 downloaded and compressed to 
backup_217221233.tar.gz successfully.


- Note down the location of the backup tar file and backup ID. This path is required later for database restoration.
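You can script two checks around the download. In the following Bash sketch, both function names are hypothetical:

- latest_backup_id reads the ls -lrt listing from step 1, which lists the oldest entry first, and prints the ID of the last BACKUP-<id> entry.
- verify_backup_tarball assumes the archive layout shown in the sample output, with one ndbmtd-<n>/BACKUP-<id>/ directory per data node. It reports each data node directory that is missing from the tarball.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the newest backup ID from `ls -lrt` output of
# /var/ndbbackup/dbback/BACKUP read on stdin (ls -lrt lists oldest first).
latest_backup_id() {
  { grep -oE 'BACKUP-[0-9]+' || true; } | tail -n 1 | sed 's/^BACKUP-//'
}

# Hypothetical helper: check that a backup tarball contains an
# ndbmtd-<n>/BACKUP-<id>/ directory for each of <count> data nodes.
verify_backup_tarball() {
  local tarball="$1" id="$2" count="$3" listing n=0 missing=0
  listing="$(tar -tzf "$tarball")" || return 1
  while [ "$n" -lt "$count" ]; do
    # Entries may start with "./", as in the sample output.
    if ! printf '%s\n' "$listing" | grep -E "^(\./)?ndbmtd-${n}/BACKUP-${id}/" >/dev/null; then
      echo "missing: ndbmtd-${n}/BACKUP-${id}/" >&2
      missing=1
    fi
    n=$((n + 1))
  done
  return "$missing"
}
```

For example:

$ kubectl -n cluster1 exec ndbmtd-0 -- ls -lrt /var/ndbbackup/dbback/BACKUP | latest_backup_id
$ verify_backup_tarball backup_217221233.tar.gz 217221233 4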

Restoring Database Schema and Tables

Note:

- Ensure that the cnDBTier backup that is used for the restore is taken using the same cnDBTier version as that of the cnDBTier site that is being restored.

- You can ignore the temporary errors observed in the NDB database that is restored if the restore completes successfully.

- Log in to the Bastion Host of the cnDBTier cluster to restore the database.

Check that the backup tar file downloaded in the previous section is available. The backup file must be available before you restore the database.

- If the backup is fetched from a remote server, perform the following steps to convert the backup file from zip format to the format required by the restore script:

- Copy the backup zip file to the folder containing the restore script.

- Run the following command to unzip the backup file:

$ unzip backup_<backup_id>_<Encrypted/Unencrypted>.zip

For example:

$ unzip backup_708241812_Encrypted.zip

Sample output:

Archive: backup_708241812_Encrypted.zip
inflating: backup_708241812_dn_1.tar.gz
inflating: backup_708241812_dn_1.tar.gz.sha256
inflating: backup_708241812_dn_2.tar.gz
inflating: backup_708241812_dn_2.tar.gz.sha256

- Untar the tar file that belongs to the first data node (the file name containing dn_1 is the file for the first data node):

$ tar -xzvf backup_<backup_id>_dn_1.tar.gz -C ./

For example:

$ tar -xzvf backup_708241812_dn_1.tar.gz -C ./

Sample output:

BACKUP-708241812/
BACKUP-708241812/BACKUP-708241812-PART-1-OF-4/
BACKUP-708241812/BACKUP-708241812-PART-1-OF-4/BACKUP-708241812.1.ctl
BACKUP-708241812/BACKUP-708241812-PART-1-OF-4/BACKUP-708241812-0.1.Data
BACKUP-708241812/BACKUP-708241812-PART-1-OF-4/BACKUP-708241812.1.log
BACKUP-708241812/BACKUP-708241812-PART-3-OF-4/
BACKUP-708241812/BACKUP-708241812-PART-3-OF-4/BACKUP-708241812.1.ctl
BACKUP-708241812/BACKUP-708241812-PART-3-OF-4/BACKUP-708241812-0.1.Data
BACKUP-708241812/BACKUP-708241812-PART-3-OF-4/BACKUP-708241812.1.log
BACKUP-708241812/BACKUP-708241812-PART-4-OF-4/
BACKUP-708241812/BACKUP-708241812-PART-4-OF-4/BACKUP-708241812.1.ctl
BACKUP-708241812/BACKUP-708241812-PART-4-OF-4/BACKUP-708241812-0.1.Data
BACKUP-708241812/BACKUP-708241812-PART-4-OF-4/BACKUP-708241812.1.log
BACKUP-708241812/BACKUP-708241812-PART-2-OF-4/
BACKUP-708241812/BACKUP-708241812-PART-2-OF-4/BACKUP-708241812.1.ctl
BACKUP-708241812/BACKUP-708241812-PART-2-OF-4/BACKUP-708241812-0.1.Data
BACKUP-708241812/BACKUP-708241812-PART-2-OF-4/BACKUP-708241812.1.log

- Create a directory named ndbmtd-0 and move the folder extracted in the previous step to the ndbmtd-0 folder:

$ mkdir ndbmtd-0
$ mv BACKUP-<backup_id> ./ndbmtd-0/

For example:

$ mkdir ndbmtd-0
$ mv BACKUP-708241812 ./ndbmtd-0/

- Untar the tar file that belongs to the second data node (the file name containing dn_2 is the file for the second data node):

$ tar -xzvf backup_<backup_id>_dn_2.tar.gz -C ./

For example:

$ tar -xzvf backup_708241812_dn_2.tar.gz -C ./

Sample output:

BACKUP-708241812/
BACKUP-708241812/BACKUP-708241812-PART-1-OF-4/
BACKUP-708241812/BACKUP-708241812-PART-1-OF-4/BACKUP-708241812.2.log
BACKUP-708241812/BACKUP-708241812-PART-1-OF-4/BACKUP-708241812.2.ctl
BACKUP-708241812/BACKUP-708241812-PART-1-OF-4/BACKUP-708241812-0.2.Data
BACKUP-708241812/BACKUP-708241812-PART-4-OF-4/
BACKUP-708241812/BACKUP-708241812-PART-4-OF-4/BACKUP-708241812.2.log
BACKUP-708241812/BACKUP-708241812-PART-4-OF-4/BACKUP-708241812.2.ctl
BACKUP-708241812/BACKUP-708241812-PART-4-OF-4/BACKUP-708241812-0.2.Data
BACKUP-708241812/BACKUP-708241812-PART-2-OF-4/
BACKUP-708241812/BACKUP-708241812-PART-2-OF-4/BACKUP-708241812.2.log
BACKUP-708241812/BACKUP-708241812-PART-2-OF-4/BACKUP-708241812.2.ctl
BACKUP-708241812/BACKUP-708241812-PART-2-OF-4/BACKUP-708241812-0.2.Data
BACKUP-708241812/BACKUP-708241812-PART-3-OF-4/
BACKUP-708241812/BACKUP-708241812-PART-3-OF-4/BACKUP-708241812.2.log
BACKUP-708241812/BACKUP-708241812-PART-3-OF-4/BACKUP-708241812.2.ctl
BACKUP-708241812/BACKUP-708241812-PART-3-OF-4/BACKUP-708241812-0.2.Data

- Create a directory named ndbmtd-1 and move the folder extracted in the previous step to the ndbmtd-1 folder:

$ mkdir ndbmtd-1
$ mv BACKUP-<backup_id> ./ndbmtd-1/

For example:

$ mkdir ndbmtd-1
$ mv BACKUP-708241812 ./ndbmtd-1/

- Repeat the previous two steps for the remaining tar files of the respective data nodes. For example, extract the dn_3 file and move its folder to ndbmtd-2.

- Create a tar file that contains all the folders created in the previous steps:

$ tar -czvf backup_<backup_id>.tar.gz ndbmtd-*

For example:

$ tar -czvf backup_708241812.tar.gz ndbmtd-*

Sample output:

ndbmtd-0/
ndbmtd-0/BACKUP-708241812/
ndbmtd-0/BACKUP-708241812/BACKUP-708241812-PART-1-OF-4/
ndbmtd-0/BACKUP-708241812/BACKUP-708241812-PART-1-OF-4/BACKUP-708241812.1.ctl
ndbmtd-0/BACKUP-708241812/BACKUP-708241812-PART-1-OF-4/BACKUP-708241812-0.1.Data
ndbmtd-0/BACKUP-708241812/BACKUP-708241812-PART-1-OF-4/BACKUP-708241812.1.log
ndbmtd-0/BACKUP-708241812/BACKUP-708241812-PART-3-OF-4/
ndbmtd-0/BACKUP-708241812/BACKUP-708241812-PART-3-OF-4/BACKUP-708241812.1.ctl
ndbmtd-0/BACKUP-708241812/BACKUP-708241812-PART-3-OF-4/BACKUP-708241812-0.1.Data
ndbmtd-0/BACKUP-708241812/BACKUP-708241812-PART-3-OF-4/BACKUP-708241812.1.log
ndbmtd-0/BACKUP-708241812/BACKUP-708241812-PART-4-OF-4/
ndbmtd-0/BACKUP-708241812/BACKUP-708241812-PART-4-OF-4/BACKUP-708241812.1.ctl
ndbmtd-0/BACKUP-708241812/BACKUP-708241812-PART-4-OF-4/BACKUP-708241812-0.1.Data
ndbmtd-0/BACKUP-708241812/BACKUP-708241812-PART-4-OF-4/BACKUP-708241812.1.log
ndbmtd-0/BACKUP-708241812/BACKUP-708241812-PART-2-OF-4/
ndbmtd-0/BACKUP-708241812/BACKUP-708241812-PART-2-OF-4/BACKUP-708241812.1.ctl
ndbmtd-0/BACKUP-708241812/BACKUP-708241812-PART-2-OF-4/BACKUP-708241812-0.1.Data
ndbmtd-0/BACKUP-708241812/BACKUP-708241812-PART-2-OF-4/BACKUP-708241812.1.log
ndbmtd-1/
ndbmtd-1/BACKUP-708241812/
ndbmtd-1/BACKUP-708241812/BACKUP-708241812-PART-1-OF-4/
ndbmtd-1/BACKUP-708241812/BACKUP-708241812-PART-1-OF-4/BACKUP-708241812.2.log
ndbmtd-1/BACKUP-708241812/BACKUP-708241812-PART-1-OF-4/BACKUP-708241812.2.ctl
ndbmtd-1/BACKUP-708241812/BACKUP-708241812-PART-1-OF-4/BACKUP-708241812-0.2.Data
ndbmtd-1/BACKUP-708241812/BACKUP-708241812-PART-4-OF-4/
ndbmtd-1/BACKUP-708241812/BACKUP-708241812-PART-4-OF-4/BACKUP-708241812.2.log
ndbmtd-1/BACKUP-708241812/BACKUP-708241812-PART-4-OF-4/BACKUP-708241812.2.ctl
ndbmtd-1/BACKUP-708241812/BACKUP-708241812-PART-4-OF-4/BACKUP-708241812-0.2.Data
ndbmtd-1/BACKUP-708241812/BACKUP-708241812-PART-2-OF-4/
ndbmtd-1/BACKUP-708241812/BACKUP-708241812-PART-2-OF-4/BACKUP-708241812.2.log
ndbmtd-1/BACKUP-708241812/BACKUP-708241812-PART-2-OF-4/BACKUP-708241812.2.ctl
ndbmtd-1/BACKUP-708241812/BACKUP-708241812-PART-2-OF-4/BACKUP-708241812-0.2.Data
ndbmtd-1/BACKUP-708241812/BACKUP-708241812-PART-3-OF-4/
ndbmtd-1/BACKUP-708241812/BACKUP-708241812-PART-3-OF-4/BACKUP-708241812.2.log
ndbmtd-1/BACKUP-708241812/BACKUP-708241812-PART-3-OF-4/BACKUP-708241812.2.ctl
ndbmtd-1/BACKUP-708241812/BACKUP-708241812-PART-3-OF-4/BACKUP-708241812-0.2.Data

- Use the created file (backup_<backup_id>.tar.gz) in the following steps to perform a restore.
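The conversion steps above follow a fixed pattern: the contents of the archive whose name contains dn_<n> are moved into the ndbmtd-<n-1> directory. The following Bash sketch automates that pattern under these assumptions:

- The function name convert_zip_backup is hypothetical.
- The zip file has already been extracted into the current directory.
- Each backup_<backup_id>_dn_<n>.tar.gz archive contains a single BACKUP-<backup_id>/ directory.
- The .sha256 files are not verified.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rebuild backup_<id>.tar.gz from the per-data-node
# archives backup_<id>_dn_<n>.tar.gz in the current directory.
convert_zip_backup() {
  local id="$1" archive n found=0

  for archive in backup_"${id}"_dn_*.tar.gz; do
    [ -e "$archive" ] || break                 # the glob matched nothing
    n="${archive##*_dn_}"                      # "<n>.tar.gz"
    n="${n%.tar.gz}"                           # "<n>"
    case "$n" in
      ''|*[!0-9]*) echo "unexpected archive name: $archive" >&2; return 1 ;;
    esac
    if [ "$n" -lt 1 ]; then
      echo "data node numbers start at 1: $archive" >&2
      return 1
    fi
    found=1
    tar -xzf "$archive" -C ./ || return 1      # creates ./BACKUP-<id>/
    mkdir -p "ndbmtd-$((n - 1))" || return 1   # dn_1 -> ndbmtd-0, dn_2 -> ndbmtd-1
    mv "BACKUP-${id}" "ndbmtd-$((n - 1))/" || return 1
  done

  if [ "$found" -eq 0 ]; then
    echo "no backup_${id}_dn_*.tar.gz files found" >&2
    return 1
  fi

  # Same layout as the manual "tar -czvf backup_<backup_id>.tar.gz ndbmtd-*" step.
  tar -czf "backup_${id}.tar.gz" ndbmtd-*
}
```

For example:

$ convert_zip_backup 708241812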

- Reinstall the cnDBTier cluster if the cluster fails due to fatal errors. For more information about installing the cnDBTier cluster, see Oracle Communications Cloud Native Core, cnDBTier Installation, Upgrade, and Fault Recovery Guide.

- Disable cnDBTier replication services on cnDBTier cluster if exists.

For single site deployment, this service is disabled.

Log in to the Bastion Host of cnDBTier Cluster and scale down the DB replication service deployment.

$ kubectl -n <namespace of cnDBTier cluster> get deployments | egrep 'replication' | awk '{print $1}' | xargs -L1 -r kubectl -n <namespace of cnDBTier cluster> scale deployment --replicas=0Example:$ kubectl -n cluster1 get deployments | egrep 'replication' | awk '{print $1}' | xargs -L1 -r kubectl -n cluster1 scale deployment --replicas=0Sample output:deployment.apps/mysql-cluster-cluster1-cluster2-replication-svc scaled - Run the following command to disable DB backup manager service in cnDBTier

cluster:

Log in to the Bastion Host of cnDBTier Cluster and scale down the DB backup manager service deployment.

$ kubectl -n <namespace of cnDBTier cluster> get deployments | egrep 'db-backup-manager-svc' | awk '{print $1}' | xargs -L1 -r kubectl -n cluster1 scale deployment --replicas=0Example:$ kubectl -n cluster1 get deployments | egrep 'db-backup-manager-svc' | awk '{print $1}' | xargs -L1 -r kubectl -n cluster1 scale deployment --replicas=0Sample output:deployment.apps/mysql-cluster-db-backup-manager-svc scaled - Wait until cnDBTier cluster is up and running. Check the status of the

cnDBTier cluster by running the following

commands:

$ kubectl -n <namespace of cnDBTier Cluster> get pods $ kubectl -n <namespace of cnDBTier Cluster> exec -it ndbmgmd-0 -- ndb_mgm -e showExample: Checking the cluster status by accessing the pods running in the cluster$ kubectl -n cluster1 get podsSample output:NAME READY STATUS RESTARTS AGE mysql-cluster-db-monitor-svc-777dc5d7d7-pmzxz 1/1 Running 0 6h19m ndbappmysqld-0 2/2 Running 0 6h19m ndbappmysqld-1 2/2 Running 0 6h14m ndbmgmd-0 2/2 Running 0 6h19m ndbmgmd-1 2/2 Running 0 6h18m ndbmtd-0 3/3 Running 0 6h19m ndbmtd-1 3/3 Running 0 6h17m ndbmtd-2 3/3 Running 0 6h17m ndbmtd-3 3/3 Running 0 6h16m ndbmysqld-0 3/3 Running 0 6h19m ndbmysqld-1 3/3 Running 0 6h13mExample: Checking the status of cluster from the management pod$ kubectl -n cluster1 exec -it ndbmgmd-0 -- ndb_mgm -e showSample output:Connected to Management Server at: localhost:1186 Cluster Configuration --------------------- [ndbd(NDB)] 4 node(s) id=1 @10.233.116.101 (mysql-8.4.2 ndb-8.4.2, Nodegroup: 0, *) id=2 @10.233.70.106 (mysql-8.4.2 ndb-8.4.2, Nodegroup: 0) id=3 @10.233.96.102 (mysql-8.4.2 ndb-8.4.2, Nodegroup: 1) id=4 @10.233.119.205 (mysql-8.4.2 ndb-8.4.2, Nodegroup: 1) [ndb_mgmd(MGM)] 2 node(s) id=49 @10.233.77.54 (mysql-8.4.2 ndb-8.4.2) id=50 @10.233.112.126 (mysql-8.4.2 ndb-8.4.2) [mysqld(API)] 8 node(s) id=56 @10.233.90.189 (mysql-8.4.2 ndb-8.4.2) id=57 @10.233.71.30 (mysql-8.4.2 ndb-8.4.2) id=71 @10.233.118.58 (mysql-8.4.2 ndb-8.4.2) id=72 @10.233.100.65 (mysql-8.4.2 ndb-8.4.2) id=222 (not connected, accepting connect from any host) id=223 (not connected, accepting connect from any host) id=224 (not connected, accepting connect from any host) id=225 (not connected, accepting connect from any host)Note:

Node IDs 222 to 225 in the sample output are shown as "not connected" because they are empty slot IDs reserved for georeplication recovery. You can ignore these node IDs.

- Perform the following steps to restore the NDB database automatically:

- Run the following command to pick a backup to restore the NDB cluster:

$ SKIP_NDB_APPLY_STATUS=1 CNDBTIER_NAMESPACE=<namespace> BACKUP_DIR=<backup_DIR> BACKUP_ID=<backup_ID> BACKUP_ENCRYPTION_ENABLE=<true/false> BACKUP_ENCRYPTION_PASSWORD=<backup encryption password> ./cndbtier_restore.sh <backup_tar_path>

where:

- <namespace>: is the namespace of the cnDBTier cluster to restore
- <backup_DIR>: is the path where the backup files are copied
- <backup_ID>: is the backup ID obtained in Downloading Latest DB Backup Before Restoration
- <true/false>: set BACKUP_ENCRYPTION_ENABLE to true if the backup is encrypted; otherwise, set it to false
- <backup encryption password>: if BACKUP_ENCRYPTION_ENABLE is set to true, this variable specifies the backup encryption password
- <backup_tar_path>: is the path of the backup tarball generated in Downloading Latest DB Backup Before Restoration

For example:

$ SKIP_NDB_APPLY_STATUS=1 CNDBTIER_NAMESPACE=cluster1 BACKUP_DIR="/var/ndbbackup" BACKUP_ID=217221233 BACKUP_ENCRYPTION_ENABLE=true BACKUP_ENCRYPTION_PASSWORD="NextGenCne" ./cndbtier_restore.sh backup_217221233.tar.gz

Sample output:

Extracting backup part files to /tmp/.cndbtier-backup-MVkgJJperv ...
./
./ndbmtd-0/
./ndbmtd-0/BACKUP-217221233/
./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/
./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233.1.log
./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233-0.1.Data
./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233.1.ctl
./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/
./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233.1.log
./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233-0.1.Data
./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233.1.ctl
./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/
./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233.1.log
./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233-0.1.Data
./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233.1.ctl
./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/
./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233.1.log
./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233-0.1.Data
./ndbmtd-0/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233.1.ctl
./ndbmtd-1/
./ndbmtd-1/BACKUP-217221233/
./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/
./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233.2.log
./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233-0.2.Data
./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233.2.ctl
./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/
./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233.2.log
./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233-0.2.Data
./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233.2.ctl
./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/
./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233.2.log
./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233-0.2.Data
./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233.2.ctl
./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/
./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233.2.log
./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233-0.2.Data
./ndbmtd-1/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233.2.ctl
./ndbmtd-2/
./ndbmtd-2/BACKUP-217221233/
./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/
./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233.3.ctl
./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233.3.log
./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233-0.3.Data
./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/
./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233.3.ctl
./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233.3.log
./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233-0.3.Data
./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/
./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233.3.ctl
./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233.3.log
./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233-0.3.Data
./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/
./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233.3.ctl
./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233.3.log
./ndbmtd-2/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233-0.3.Data
./ndbmtd-3/
./ndbmtd-3/BACKUP-217221233/
./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/
./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233-0.4.Data
./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233.4.log
./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-4-OF-4/BACKUP-217221233.4.ctl
./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/
./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233-0.4.Data
./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233.4.log
./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-1-OF-4/BACKUP-217221233.4.ctl
./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/
./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233-0.4.Data
./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233.4.log
./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-3-OF-4/BACKUP-217221233.4.ctl
./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/
./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233-0.4.Data
./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233.4.log
./ndbmtd-3/BACKUP-217221233/BACKUP-217221233-PART-2-OF-4/BACKUP-217221233.4.ctl
Defaulting container name to mysqlndbcluster.
Defaulting container name to mysqlndbcluster.
Defaulting container name to mysqlndbcluster.
Defaulting container name to mysqlndbcluster.
mysql: [Warning] Using a password on the command line interface can be insecure.
mysql: [Warning] Using a password on the command line interface can be insecure.
Nodeid = 1
Backup Id = 217221233
backup path = /var/ndbbackup/dbback/BACKUP/BACKUP-217221233
Found backup 217221233 with 4 backup parts
................
2023-11-07 18:04:06 [rebuild_indexes] Rebuilding indexes
2023-11-07 18:04:06 [rebuild_indexes] Rebuilding indexes
2023-11-07 18:04:06 [rebuild_indexes] Rebuilding indexes
2023-11-07 18:04:06 [rebuild_indexes] Rebuilding indexes
Rebuilding index `PRIMARY` on table `DBTIER_REPLICATION_CHANNEL_INFO` ... OK (0 s)
Rebuilding index `PRIMARY` on table `DBTIER_BACKUP_COMMAND_QUEUE` ... OK (0 s)
Rebuilding index `PRIMARY` on table `DBTIER_BACKUP_INFO` ... OK (0 s)
Rebuilding index `PRIMARY` on table `DBTIER_REPL_SITE_INFO` ... OK (0 s)
Rebuilding index `PRIMARY` on table `DBTIER_SITE_INFO` ... OK (0 s)
Rebuilding index `PRIMARY` on table `DBTIER_INITIAL_BINLOG_POSTION` ... OK (0 s)
Rebuilding index `PRIMARY` on table `DBTIER_BACKUP_TRANSFER_INFO` ... OK (0 s)
Rebuilding index `PRIMARY` on table `City` ... OK (0 s)
Create foreign keys
Create foreign keys done
[INFO]: Value of ndbmysqldcount: 2.
statefulset.apps/ndbmysqld scaled
[INFO]: Wait till all ndbmysqld pods are up.
[INFO]: Wait till all ndbmysqld pods are up.
[INFO]: Wait till all ndbmysqld pods are up.
[INFO]: Wait till all ndbmysqld pods are up.
[INFO]: Wait till all ndbmysqld pods are up.
[INFO]: Wait till all ndbmysqld pods are up.
[INFO]: Wait till all ndbmysqld pods are up.
[INFO]: Wait till all ndbmysqld pods are up.
[INFO]: Wait till all ndbmysqld pods are up.
[INFO]: Wait till all ndbmysqld pods are up.
[INFO]: Wait till all ndb pods are up.
[INFO]: Scaled up all replication ndbmysqld pods.
pod "ndbappmysqld-0" deleted
pod "ndbappmysqld-1" deleted
Cluster restore completed.
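`cndbtier_restore.sh` takes its parameters from environment variables, so a mistyped value may only surface after the restore has started. The following sketch adds a preflight check that validates the variables described above before the script runs. `check_restore_params` is a hypothetical helper and not part of cnDBTier. The rule that backup IDs are numeric is an assumption based on the examples in this guide.

```shell
#!/bin/sh
# Hypothetical preflight check (not part of cnDBTier) for the variables
# that cndbtier_restore.sh is run with in this step. It only enforces the
# constraints described in this procedure. On the first problem it prints
# a message to stderr and returns non-zero.
check_restore_params() {
  tar_path="$1"   # <backup_tar_path>, for example backup_217221233.tar.gz

  # Required variables must be set and non-empty.
  for var in CNDBTIER_NAMESPACE BACKUP_DIR BACKUP_ID; do
    eval "val=\${$var:-}"
    if [ -z "$val" ]; then
      echo "$var is not set" >&2
      return 1
    fi
  done

  # Assumption: backup IDs are numeric, as in every example in this guide.
  case "$BACKUP_ID" in
    *[!0-9]*) echo "BACKUP_ID must be numeric: $BACKUP_ID" >&2; return 1 ;;
  esac

  # BACKUP_ENCRYPTION_ENABLE is true or false. A password is needed when true.
  case "${BACKUP_ENCRYPTION_ENABLE:-}" in
    true)
      if [ -z "${BACKUP_ENCRYPTION_PASSWORD:-}" ]; then
        echo "BACKUP_ENCRYPTION_PASSWORD is required when encryption is enabled" >&2
        return 1
      fi
      ;;
    false) ;;
    *) echo "BACKUP_ENCRYPTION_ENABLE must be true or false" >&2; return 1 ;;
  esac

  # The backup tarball must exist locally.
  if [ ! -f "$tar_path" ]; then
    echo "backup tarball not found: $tar_path" >&2
    return 1
  fi
  return 0
}

# Assumed workflow, with the variables exported in the current shell:
#   export SKIP_NDB_APPLY_STATUS=1 CNDBTIER_NAMESPACE=cluster1 \
#          BACKUP_DIR=/var/ndbbackup BACKUP_ID=217221233 \
#          BACKUP_ENCRYPTION_ENABLE=true BACKUP_ENCRYPTION_PASSWORD='<password>'
#   check_restore_params backup_217221233.tar.gz && ./cndbtier_restore.sh backup_217221233.tar.gz
```

Exported variables reach `cndbtier_restore.sh` the same way as the inline assignments in the command above. The check therefore inspects exactly the values the script will receive.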


- If user accounts do not exist, create the required NF-specific user accounts and grants in the reinstalled cnDBTier to match the NF users and grants of the good site. For a sample procedure, see Creating NF Users.

Note:

For more details about creating NF-specific user accounts and grants, refer to the NF-specific fault recovery guide.

- Clean up the backup_info database tables and the mysql.ndb_apply_status table once the restore is complete:

$ kubectl -n <namespace of cnDBTier Cluster> exec -it ndbmysqld-0 -- mysql -h 127.0.0.1 -uroot -p

Sample output:

Enter Password:

After entering the password, run the following statements:

mysql> DELETE FROM backup_info.DBTIER_BACKUP_COMMAND_QUEUE;
mysql> DELETE FROM backup_info.DBTIER_BACKUP_INFO;
mysql> DELETE FROM backup_info.DBTIER_BACKUP_TRANSFER_INFO;
mysql> DELETE FROM mysql.ndb_apply_status;
mysql> DELETE FROM replication_info.DBTIER_INITIAL_BINLOG_POSTION;

When georeplication failure occurs on all sites, also clean up the replication_info database tables. This is needed because the backup is taken from another cluster and can be either a multi-channel or a single-channel backup:

mysql> DELETE FROM replication_info.DBTIER_REPLICATION_CHANNEL_INFO;
mysql> DELETE FROM replication_info.DBTIER_REPL_ERROR_SKIP_INFO;
mysql> DELETE FROM replication_info.DBTIER_REPL_EVENT_INFO;
mysql> DELETE FROM replication_info.DBTIER_REPL_SITE_INFO;
mysql> DELETE FROM replication_info.DBTIER_REPL_SVC_INFO;
mysql> DELETE FROM replication_info.DBTIER_SITE_INFO;

Example of removing all the entries from the backup_info tables and the mysql.ndb_apply_status table:

$ kubectl -n cluster1 exec -it ndbmysqld-0 -- mysql -h 127.0.0.1 -uroot -p
Enter Password:
mysql> DELETE FROM backup_info.DBTIER_BACKUP_COMMAND_QUEUE;
mysql> DELETE FROM backup_info.DBTIER_BACKUP_INFO;
mysql> DELETE FROM backup_info.DBTIER_BACKUP_TRANSFER_INFO;
mysql> DELETE FROM mysql.ndb_apply_status;

Example of cleaning up the replication_info database tables:

mysql> DELETE FROM replication_info.DBTIER_REPLICATION_CHANNEL_INFO;
mysql> DELETE FROM replication_info.DBTIER_REPL_ERROR_SKIP_INFO;
mysql> DELETE FROM replication_info.DBTIER_REPL_EVENT_INFO;
mysql> DELETE FROM replication_info.DBTIER_REPL_SITE_INFO;
mysql> DELETE FROM replication_info.DBTIER_REPL_SVC_INFO;
mysql> DELETE FROM replication_info.DBTIER_SITE_INFO;

- Re-enable the cnDBTier replication service in the cnDBTier cluster:

- Log in to the Bastion Host of the cnDBTier cluster and scale up the DB replication service deployment, if the deployment exists:

$ kubectl -n <namespace of cnDBTier cluster> get deployments | egrep 'replication' | awk '{print $1}' | xargs -L1 -r kubectl -n <namespace of cnDBTier cluster> scale deployment --replicas=1

Example:

$ kubectl -n cluster1 get deployments | egrep 'replication' | awk '{print $1}' | xargs -L1 -r kubectl -n cluster1 scale deployment --replicas=1

Sample output:

deployment.apps/mysql-cluster-cluster1-cluster2-replication-svc scaled

- Log in to the Bastion Host of the cnDBTier cluster and scale up the DB backup manager service deployment:

$ kubectl -n <namespace of cnDBTier cluster> get deployments | egrep 'db-backup-manager-svc' | awk '{print $1}' | xargs -L1 -r kubectl -n <namespace of cnDBTier cluster> scale deployment --replicas=1

For example:

$ kubectl -n cluster1 get deployments | egrep 'db-backup-manager-svc' | awk '{print $1}' | xargs -L1 -r kubectl -n cluster1 scale deployment --replicas=1

Sample output:

deployment.apps/mysql-cluster-db-backup-manager-svc scaled


7.3 Creating On-demand Database Backup

This section provides the procedure to create on-demand database backup using cnDBTier backup service.

Prerequisites

The cnDBTier cluster must be in a healthy state, that is, every database node must be in the Running state.

- Log in to the Bastion Host of the cnDBTier cluster.

- Perform the following to create the on-demand backup:

- Run the following command to get the db-backup-manager-svc pod name from the cnDBTier cluster:

$ kubectl -n <namespace of cnDBTier cluster> get pods | grep "db-backup-manager-svc" | awk '{print $1}'

For example:

$ kubectl -n cluster1 get pods | grep "db-backup-manager-svc" | awk '{print $1}'

Sample output:

mysql-cluster-db-backup-manager-svc-b49488f8f-lbpbb

- Run the following command to get the db-backup-manager-svc service name from the cnDBTier cluster:

$ kubectl -n <namespace of cnDBTier cluster> get svc | grep "db-backup-manager-svc" | awk '{print $1}'

For example:

$ kubectl -n cluster1 get svc | grep "db-backup-manager-svc" | awk '{print $1}'

Sample output:

mysql-cluster-db-backup-manager-svc

- Log in to the db-backup-manager-svc pod of the cnDBTier cluster:

$ kubectl -n <namespace of cnDBTier cluster> exec -it <db-backup-manager-svc pod> -- bash

For example:

$ kubectl -n cluster1 exec -it mysql-cluster-db-backup-manager-svc-b49488f8f-lbpbb -- bash

- Run the following REST API cURL command to create the on-demand backup:

$ curl -X POST http://<db-backup-manager-svc svc>:8080/db-tier/on-demand/backup/initiate

For example:

$ curl -X POST http://mysql-cluster-db-backup-manager-svc:8080/db-tier/on-demand/backup/initiate

Sample output:

{"backup_encryption_flag":"True","backup_id":"410231044","status":"BACKUP_INITIATED"}

Note down the latest backup_id in the output (410231044 in this example). You need it later for Network Database (NDB) cluster restoration.
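To avoid copying the backup ID by hand, the initiate response can be filtered for the `backup_id` field. The sketch below is a hypothetical helper, `extract_backup_id`. It uses `sed` rather than a JSON parser, on the assumption (true of the sample output above) that the value is a quoted string, and that `sed` is available in the pod alongside `curl`.

```shell
#!/bin/sh
# Hypothetical helper: prints the backup_id value from the JSON returned by
# the on-demand backup initiate API, for example:
#   {"backup_encryption_flag":"True","backup_id":"410231044","status":"BACKUP_INITIATED"}
# Prints nothing if the field is absent.
extract_backup_id() {
  sed -n 's/.*"backup_id"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Assumed usage from inside the db-backup-manager-svc pod:
#   BACKUP_ID=$(curl -s -X POST \
#     http://mysql-cluster-db-backup-manager-svc:8080/db-tier/on-demand/backup/initiate \
#     | extract_backup_id)
#   echo "$BACKUP_ID"
```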

- Perform the following steps to verify the status of the on-demand backup:

- Run the following command to get the db-backup-manager-svc pod name from the cnDBTier cluster:

$ kubectl -n <namespace of cnDBTier cluster> get pods | grep "db-backup-manager-svc" | awk '{print $1}'

For example:

$ kubectl -n cluster1 get pods | grep "db-backup-manager-svc" | awk '{print $1}'

Sample output:

mysql-cluster-db-backup-manager-svc-b49488f8f-lbpbb

- Run the following command to get the db-backup-manager-svc service name from the cnDBTier cluster:

$ kubectl -n <namespace of cnDBTier cluster> get svc | grep "db-backup-manager-svc" | awk '{print $1}'

For example:

$ kubectl -n cluster1 get svc | grep "db-backup-manager-svc" | awk '{print $1}'

Sample output:

mysql-cluster-db-backup-manager-svc

- Log in to the db-backup-manager-svc pod:

$ kubectl -n <namespace of cnDBTier cluster> exec -it <db-backup-manager-svc pod> -- bash

For example:

$ kubectl -n cluster1 exec -it mysql-cluster-db-backup-manager-svc-b49488f8f-lbpbb -- bash

- Run the following cURL command to verify the status of the on-demand backup:

Replace BACKUP_ID_INITIATED in the following command with the backup ID retrieved in step 2d.

$ curl -X GET http://<db-backup-manager-svc svc>:8080/db-tier/on-demand/backup/<BACKUP_ID_INITIATED>/status

For example:

$ curl -X GET http://mysql-cluster-db-backup-manager-svc:8080/db-tier/on-demand/backup/216220937/status

Sample output:

{"backup_status":"BACKUP_COMPLETED", "transfer_status":"BACKUP_TRANSFER_REQUESTED"}

Note:

The"transfer_status"parameter in the response payload indicates the backup transfer status fromdb-backup-manager-svctodb-replication-svcand not to the remote server.


7.4 Restoring Georeplication (GR) Failure

This section provides the procedures to restore georeplication failures in cnDBTier clusters using cnDBTier fault recovery APIs or CNC Console. These procedures address the following failure scenarios:

- Georeplication failure between healthy clusters, when binlog entries (database commits) are not replicated between clusters because of a network outage or latency.

- Georeplication failure between clusters, when one or more clusters have a fatal error and need to be reinstalled.

Note:

- All the georeplication cnDBTier clusters must be on the same cnDBTier version to restore from georeplication failures. However, you can perform georeplication recovery in a cnDBTier cluster with a higher version, using the backup that is from a cnDBTier cluster with a lower version.

- You cannot restore empty databases.

The following terms are used in the procedures in this section:

- cnDBTier Cluster1, the first cluster in a two-cluster, three-cluster, or four-cluster georeplication setup.

- cnDBTier Cluster2, the second cluster in a two-cluster, three-cluster, or four-cluster georeplication setup.

- cnDBTier Cluster3, the third cluster in a three-cluster or four-cluster georeplication setup.

- cnDBTier Cluster4, the fourth cluster in a four-cluster georeplication setup.

- Bastion Host, the host used to install the cnDBTier clusters, on which kubectl and Helm are installed and configured to access the Kubernetes cluster and the Helm repository.

Georeplication Channels Between cnDBTier Clusters

The following table shows the sample cnDBTier cluster names and the corresponding namespaces and login credentials. Before starting the fault recovery procedures, update the table with the actual values for all the cnDBTier clusters so that you can refer to it while recovering the failed cnDBTier clusters.

Table 7-1 Cluster Details

| cnDBTier Cluster | Cluster Name | Namespace | MySQL Root Password |
|---|---|---|---|
| cnDBTier cluster1 | Cluster1 | Cluster1 | SamplePassword |
| cnDBTier cluster2 | Cluster2 | Cluster2 | SamplePassword |
| cnDBTier cluster3 | Cluster3 | Cluster3 | SamplePassword |
| cnDBTier cluster4 | Cluster4 | Cluster4 | SamplePassword |

7.4.1 Restoring GR Failures Using cnDBTier GRR APIs

This section describes the procedures to restore Georeplication (GR) failures using cnDBTier Georeplication Recovery (GRR) APIs. For more information about the GRR APIs, see Fault Recovery APIs.

7.4.1.1 Two-Cluster Georeplication Failure

This section provides the procedures to recover georeplication failure in two-cluster georeplication deployments.

7.4.1.1.1 Resolving GR Failure Between cnDBTier Clusters in a Two-Cluster Replication

This section describes the procedure to resolve a georeplication failure between cnDBTier clusters in a two-cluster replication using cnDBTier georeplication recovery APIs.

- The failed cnDBTier cluster either has a replication delay relative to the other cnDBTier cluster that impacts NF functionality, or has fatal errors that require it to be reinstalled.

- All cnDBTier clusters (cnDBTier cluster1 and cnDBTier cluster2) are in a healthy state, that is, all database nodes (including management nodes, data nodes, and API nodes) are in the Running state, if there is only a replication delay and no fatal errors exist in the cnDBTier cluster that needs to be restored.

- NF or application traffic is diverted from the failed cnDBTier cluster to the working cnDBTier cluster.

- cURL is installed in the environment from which the commands are run.

Note:

All the georeplication cnDBTier clusters must be on the same cnDBTier version to restore from georeplication failures. However, you can perform georeplication recovery in a cnDBTier cluster with a higher version, using the backup that is from a cnDBTier cluster with a lower version.

To resolve this georeplication failure, restore the failed cnDBTier cluster to the latest DB backup using automated backup and restore, and then re-establish the replication channels.

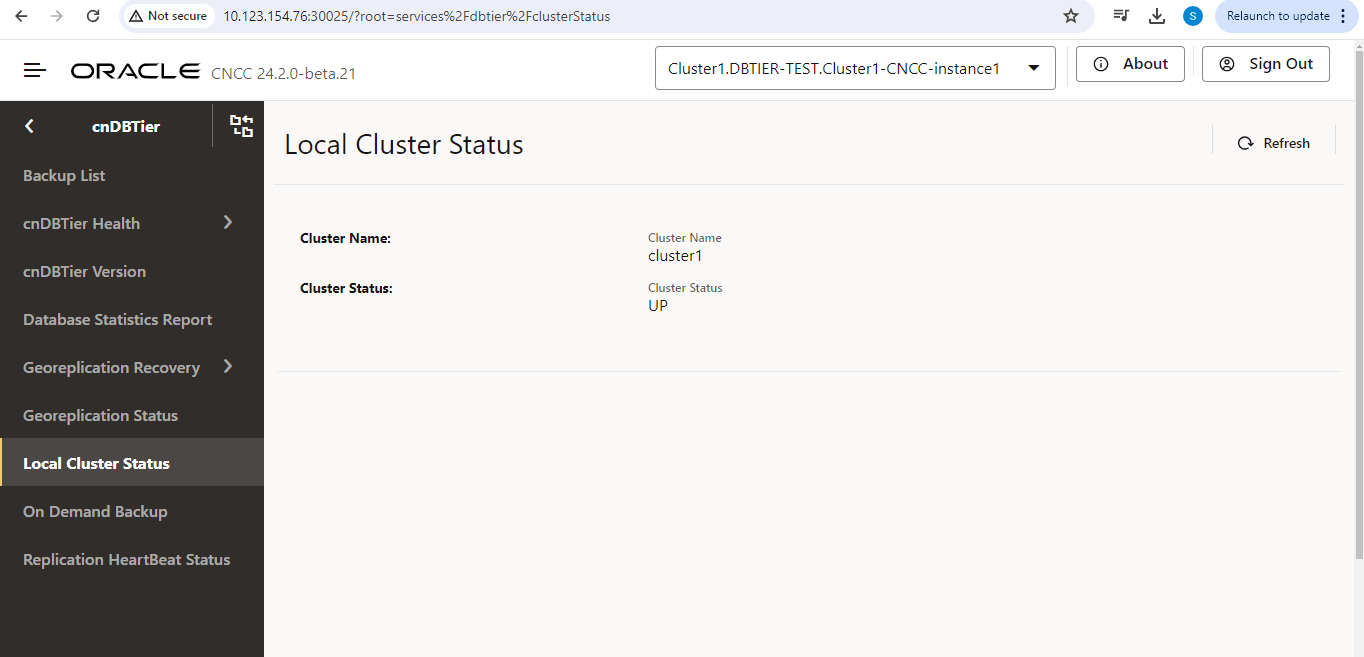

- Check the status of both cnDBTier clusters. Follow Verifying Cluster Status Using cnDBTier to check the status of cnDBTier cluster1 and cnDBTier cluster2.

- Run the following commands to mark the gr_state of the failed cnDBTier cluster as FAILED in the failed cnDBTier cluster (if it is accessible) and in one of the healthy cnDBTier clusters:

- Run the following command to get the replication service LoadBalancer IP of the cluster that must be marked as failed:

$ export IP=$(kubectl get svc -n <namespace of failed cluster> | grep repl | awk '{print $4}' | head -n 1 )

- Run the following command to get the replication service LoadBalancer port of the cluster that must be marked as failed:

$ export PORT=$(kubectl get svc -n <namespace of failed cluster> | grep repl | awk '{print $5}' | cut -d '/' -f 1 | cut -d ':' -f 1 | head -n 1)

- Mark the cnDBTier cluster as failed in the failed cnDBTier cluster, if it is accessible. HTTP response code 200 indicates that the cluster's gr_state is successfully marked as failed.

$ curl -i -X POST http://$IP:$PORT/db-tier/gr-recovery/site/<name of failed cluster>/failed

- Mark the cnDBTier cluster as failed in one of the other healthy remote cnDBTier clusters:

- Get the replication service LoadBalancer IP of the healthy cluster:

$ export IP=$(kubectl get svc -n <namespace of healthy cluster> | grep repl | awk '{print $4}' | head -n 1 )

- Get the replication service LoadBalancer port of the healthy cluster:

$ export PORT=$(kubectl get svc -n <namespace of healthy cluster> | grep repl | awk '{print $5}' | cut -d '/' -f 1 | cut -d ':' -f 1 | head -n 1)

- Mark the cnDBTier cluster as failed in the healthy cnDBTier cluster. HTTP response code 200 indicates that the cluster's gr_state is successfully marked as failed.

$ curl -i -X POST http://$IP:$PORT/db-tier/gr-recovery/remotesite/<name of the failed cluster>/failed

Note:

For more information about georeplication recovery API responses, error codes, and curl commands for an HTTPS-enabled replication service, see Fault Recovery APIs.
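The IP and port lookups above take the first `kubectl get svc` line that contains "repl". From that line they keep column 4 (EXTERNAL-IP) and the part of column 5 (PORT(S), for example `80:31234/TCP`) before the colon. The sketch below applies the same logic to a captured listing, so the values can be checked before they are used in the curl calls. `repl_lb_ip` and `repl_lb_port` are hypothetical helper names, and the sample listing in the comments is illustrative.

```shell
#!/bin/sh
# Hypothetical helpers that apply the same column logic as the awk
# pipelines above to `kubectl get svc` output read from stdin.
# Like the original commands, they use the first line containing "repl".

# Column 4 of `kubectl get svc` is EXTERNAL-IP.
repl_lb_ip() {
  awk '/repl/ {print $4; exit}'
}

# Column 5 is PORT(S), for example 80:31234/TCP. Keep the part before "/",
# then the part before ":", which is the service port (80 here).
repl_lb_port() {
  awk '/repl/ {split($5, p, "/"); split(p[1], q, ":"); print q[1]; exit}'
}

# Assumed usage on the Bastion Host:
#   svc_list=$(kubectl get svc -n cluster2)
#   IP=$(printf '%s\n' "$svc_list" | repl_lb_ip)
#   PORT=$(printf '%s\n' "$svc_list" | repl_lb_port)
#   echo "replication service endpoint: $IP:$PORT"
```

If `IP` comes back as `<pending>` or `<none>`, the replication service has no external LoadBalancer address yet, and the curl calls above would fail.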


For example:

- If cnDBTier cluster1 has failed, mark it as failed in the unhealthy cnDBTier cluster, cluster1:

- Get the replication service LoadBalancer IP of cluster1:

$ export IP=$(kubectl get svc -n cluster1 | grep repl | awk '{print $4}' | head -n 1 )

- Get the replication service LoadBalancer port of cluster1:

$ export PORT=$(kubectl get svc -n cluster1 | grep repl | awk '{print $5}' | cut -d '/' -f 1 | cut -d ':' -f 1 | head -n 1)

- Run the following command to mark cnDBTier cluster1 as failed in the unhealthy cnDBTier cluster1, if it is accessible:

$ curl -i -X POST http://$IP:$PORT/db-tier/gr-recovery/site/cluster1/failed

- Mark cnDBTier cluster1 as failed in the other, healthy remote cnDBTier cluster, cluster2:

- Get the replication service LoadBalancer IP of the healthy cluster:

$ export IP=$(kubectl get svc -n cluster2 | grep repl | awk '{print $4}' | head -n 1 )

- Get the replication service LoadBalancer port of the healthy cluster:

$ export PORT=$(kubectl get svc -n cluster2 | grep repl | awk '{print $5}' | cut -d '/' -f 1 | cut -d ':' -f 1 | head -n 1)

- Run the following command to mark cluster1 as failed in the healthy cnDBTier cluster, cluster2. HTTP response code 200 indicates that the cluster's gr_state is successfully marked as failed.

$ curl -i -X POST http://$IP:$PORT/db-tier/gr-recovery/remotesite/cluster1/failed


- If cnDBTier cluster2 has failed, mark it as failed in the unhealthy cnDBTier cluster, cluster2:

- Get the replication service LoadBalancer IP of cluster2:

$ export IP=$(kubectl get svc -n cluster2 | grep repl | awk '{print $4}' | head -n 1 )

- Get the replication service LoadBalancer port of cluster2:

$ export PORT=$(kubectl get svc -n cluster2 | grep repl | awk '{print $5}' | cut -d '/' -f 1 | cut -d ':' -f 1 | head -n 1)

- Run the following command to mark cnDBTier cluster2 as failed in the unhealthy cnDBTier cluster2, if it is accessible:

$ curl -i -X POST http://$IP:$PORT/db-tier/gr-recovery/site/cluster2/failed

- Mark cnDBTier cluster2 as failed in the other, healthy remote cnDBTier cluster, cluster1:

- Get the replication service LoadBalancer IP of the healthy cluster:

$ export IP=$(kubectl get svc -n cluster1 | grep repl | awk '{print $4}' | head -n 1 )

- Get the replication service LoadBalancer port of the healthy cluster:

$ export PORT=$(kubectl get svc -n cluster1 | grep repl | awk '{print $5}' | cut -d '/' -f 1 | cut -d ':' -f 1 | head -n 1)

- Run the following command to mark cluster2 as failed in the healthy cnDBTier cluster, cluster1. HTTP response code 200 indicates that the cluster's gr_state is successfully marked as failed.

$ curl -i -X POST http://$IP:$PORT/db-tier/gr-recovery/remotesite/cluster2/failed
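In the two-cluster case, this step makes two calls. The first goes to the failed cluster (best effort, because it may be unreachable) and the second goes to the healthy cluster. The sketch below wraps both calls and checks for HTTP 200 explicitly instead of reading the `curl -i` headers. `gr_post`, `mark_cluster_failed`, and the `DRY_RUN` switch are hypothetical names. Each endpoint is passed as an `IP:PORT` value obtained with the commands above. The sketch uses the standard curl options `-s`, `-o /dev/null`, and `-w '%{http_code}'`.

```shell
#!/bin/sh
# Hypothetical wrapper: POSTs to a GR recovery URL and succeeds only on
# HTTP 200. With DRY_RUN=1 it prints the request instead of sending it.
gr_post() {
  url="$1"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "POST $url"
    return 0
  fi
  code=$(curl -s -o /dev/null -w '%{http_code}' -X POST "$url")
  echo "POST $url -> HTTP $code"
  [ "$code" = "200" ]
}

# Hypothetical helper for the two-cluster case.
#   $1: name of the failed cluster, for example cluster1
#   $2: IP:PORT of the failed cluster's replication service ("" if unreachable)
#   $3: IP:PORT of the healthy cluster's replication service
mark_cluster_failed() {
  failed="$1"
  failed_ep="$2"
  healthy_ep="$3"
  if [ -n "$failed_ep" ]; then
    # Best effort: the failed cluster may not respond.
    gr_post "http://${failed_ep}/db-tier/gr-recovery/site/${failed}/failed" ||
      echo "warning: could not mark ${failed} as failed on the failed cluster" >&2
  fi
  # The call to the healthy cluster decides the overall result.
  gr_post "http://${healthy_ep}/db-tier/gr-recovery/remotesite/${failed}/failed"
}

# Assumed usage, with IP:PORT values from the lookups above:
#   DRY_RUN=1 mark_cluster_failed cluster1 "$IP1:$PORT1" "$IP2:$PORT2"
#   mark_cluster_failed cluster1 "$IP1:$PORT1" "$IP2:$PORT2"
```

Running with `DRY_RUN=1` first shows the exact URLs, which makes it easy to confirm that the `site` and `remotesite` calls target the intended clusters.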


- Run the following commands to restore the cnDBTier cluster, depending on the status of the cnDBTier cluster:

Follow step a if the cnDBTier cluster has fatal errors, such as PVC corruption, an inaccessible PVC, or other fatal errors.

Follow steps b and c if the cnDBTier cluster does not have any fatal errors and a database restore is needed because of the georeplication failure.

- If the cnDBTier cluster that needs to be restored has fatal errors, or if its status is DOWN, reinstall the cnDBTier cluster to restore the database from the remote cnDBTier cluster.

Follow Reinstalling cnDBTier Cluster to install the cnDBTier cluster. Configure the IP address of the remote cnDBTier cluster's replication service so that the database is restored from that cluster.

For example:

- If cnDBTier cluster1 needs to be restored, uninstall and reinstall cnDBTier cluster1 using the above procedure, configuring the replication service IP address of cnDBTier cluster2 as the remote cluster in cnDBTier cluster1. The database is then restored from the remote cnDBTier cluster2.

- If cnDBTier cluster2 needs to be restored, uninstall and reinstall cnDBTier cluster2 using the above procedure, configuring the replication service IP address of cnDBTier cluster1 as the remote cluster in cnDBTier cluster2. The database is then restored from the remote cnDBTier cluster1.

- Create the required NF-specific user accounts and grants in the reinstalled cnDBTier to match the NF users and grants of the good cluster. For a sample procedure, see Creating NF Users.

Note:

For more details about creating NF-specific user accounts and grants, refer to the NF-specific fault recovery guide.

- If the cnDBTier cluster that needs to be restored is UP and does not have any fatal errors, but georeplication has failed or a large replication delay exists, run the following commands to restore the database from the remote cnDBTier cluster:

- Get the replication service LoadBalancer IP of the cluster where georeplication needs to be restored:

$ export IP=$(kubectl get svc -n <namespace of failed cluster> | grep repl | awk '{print $4}' | head -n 1 )

- Get the replication service LoadBalancer port of the cluster where georeplication needs to be restored:

$ export PORT=$(kubectl get svc -n <namespace of failed cluster> | grep repl | awk '{print $5}' | cut -d '/' -f 1 | cut -d ':' -f 1 | head -n 1)

- Run the following command to start the georeplication recovery procedure on the failed cluster, which restores it from the remote cnDBTier cluster:

$ curl -i -X POST http://$IP:$PORT/db-tier/gr-recovery/site/<name of the failed cluster>/start

Note:

For more information about georeplication recovery API responses, error codes, and curl commands for an HTTPS-enabled replication service, see Fault Recovery APIs.

For example:

- If cnDBTier cluster1 needs to be restored, run the following commands to start the georeplication recovery procedure on the failed cluster (cluster1) and restore it from the remote cnDBTier cluster:

- Get the replication service LoadBalancer IP of cluster1:

$ export IP=$(kubectl get svc -n cluster1 | grep repl | awk '{print $4}' | head -n 1 )

- Get the replication service LoadBalancer port of cluster1:

$ export PORT=$(kubectl get svc -n cluster1 | grep repl | awk '{print $5}' | cut -d '/' -f 1 | cut -d ':' -f 1 | head -n 1)

- Run the following command to start the georeplication recovery procedure on the failed cluster (cluster1) and restore it from the remote cnDBTier cluster:

$ curl -i -X POST http://$IP:$PORT/db-tier/gr-recovery/site/cluster1/start


- If cnDBTier cluster2 needs to be restored, run the following commands to start the georeplication recovery procedure on the failed cluster (cluster2) and restore it from the remote cnDBTier cluster:

- Get the replication service LoadBalancer IP of cluster2:

$ export IP=$(kubectl get svc -n cluster2 | grep repl | awk '{print $4}' | head -n 1 )

- Get the replication service LoadBalancer port of cluster2:

$ export PORT=$(kubectl get svc -n cluster2 | grep repl | awk '{print $5}' | cut -d '/' -f 1 | cut -d ':' -f 1 | head -n 1)

- Run the following command to start the georeplication recovery procedure on the failed cluster (cluster2) and restore it from the remote cnDBTier cluster:

$ curl -i -X POST http://$IP:$PORT/db-tier/gr-recovery/site/cluster2/start


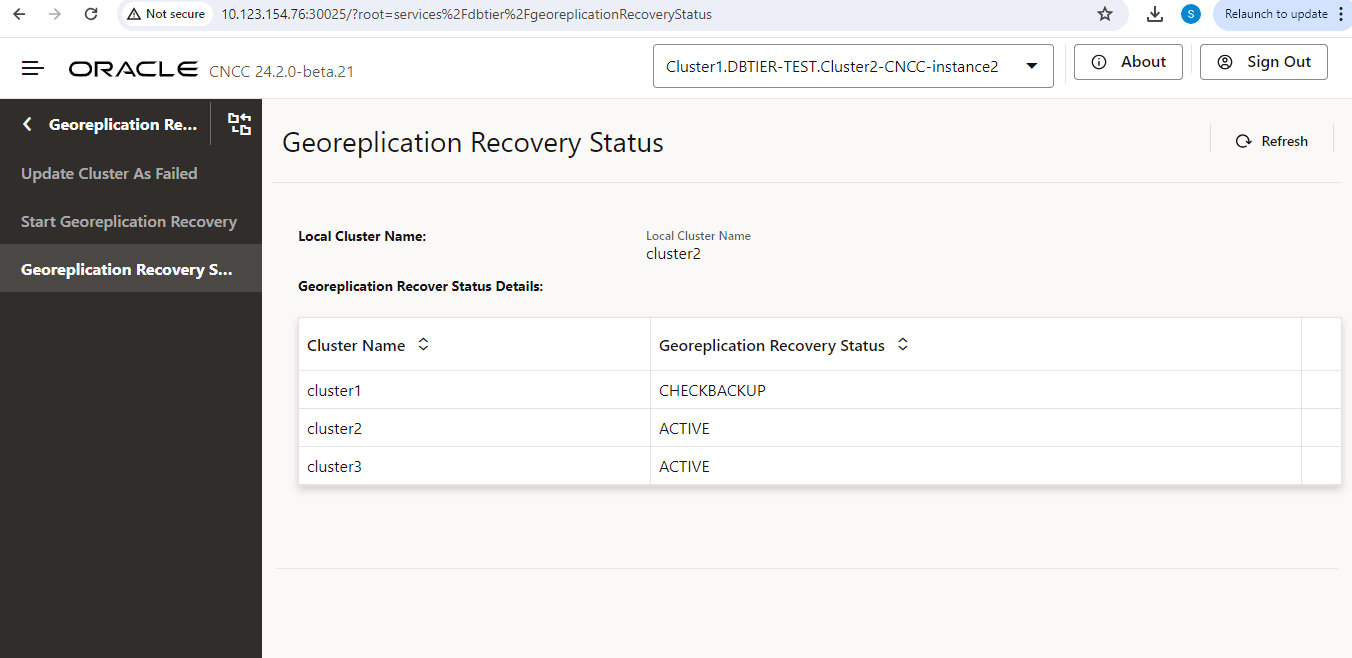

- Wait until the georeplication recovery is complete. You can check the cluster status by using the Verifying Cluster Status Using cnDBTier procedure. When the cnDBTier cluster is UP, continue monitoring the georeplication recovery status by following the Monitoring Georeplication Recovery Status Using cnDBTier APIs procedure.

Note:

The backup-manager-service pod may restart a couple of times during the georeplication recovery. You can ignore these restarts; however, ensure that the backup-manager-service pod is up at the end of the georeplication recovery.
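Part of the check that a cnDBTier cluster is UP, whether during georeplication recovery or in the single-node restore procedures, can be scripted against the `kubectl get pods` and `ndb_mgm -e show` output formats shown in this chapter. The sketch below assumes the following:

- A pod is ready when STATUS is `Running` and both numbers in READY match.
- Node IDs 222 to 225 are the empty georeplication recovery slots that can be ignored.

`pods_ready` and `ndb_nodes_connected` are hypothetical helper names. They complement the Verifying Cluster Status Using cnDBTier procedure and do not replace it.

```shell
#!/bin/sh
# Hypothetical helper: reads `kubectl get pods` output on stdin and fails
# if any pod is not Running or has fewer ready containers than total
# (READY column x/y). Prints the offending pod names.
pods_ready() {
  awk 'NR > 1 {
         split($2, r, "/")
         if ($3 != "Running" || r[1] != r[2]) { print "not ready: " $1; bad = 1 }
       }
       END { exit bad }'
}

# Hypothetical helper: reads `ndb_mgm -e show` output on stdin and fails if
# any node other than the empty georeplication recovery slots 222-225 is
# reported as "not connected". Prints the offending node IDs.
ndb_nodes_connected() {
  awk '/not connected/ {
         id = $1
         sub(/^id=/, "", id)
         id += 0                      # force a numeric comparison
         if (id < 222 || id > 225) { print "not connected: node " id; bad = 1 }
       }
       END { exit bad }'
}

# Assumed usage on the Bastion Host:
#   kubectl -n cluster1 get pods | pods_ready
#   kubectl -n cluster1 exec ndbmgmd-0 -- ndb_mgm -e show | ndb_nodes_connected
```

Pods in other states, such as completed jobs, are reported as not ready by `pods_ready`. Adjust the filter if such pods are expected in your namespace.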

7.4.1.2 Three-Cluster Georeplication Failure

This section provides the procedures to recover georeplication failure in three-cluster georeplication deployments.

7.4.1.2.1 Resolving GR Failure Between cnDBTier Clusters in a Three-Cluster Replication

This section describes the procedure to resolve a georeplication failure between cnDBTier clusters in a three-cluster replication using cnDBTier georeplication recovery APIs.

- The failed cnDBTier cluster either has a replication delay relative to the other cnDBTier clusters that impacts NF functionality, or has fatal errors that require it to be reinstalled.

- All cnDBTier clusters are in a healthy state, that is, all database nodes (including management nodes, data nodes, and API nodes) are in the Running state, if there is only a replication delay and no fatal errors exist in the cnDBTier cluster that needs to be restored.

- The other two cnDBTier clusters (that is, the first and second working cnDBTier clusters) are in a healthy state, that is, all database nodes (including management nodes, data nodes, and API nodes) are in the Running state, and the replication channels between them are running correctly.

- NF or application traffic is diverted from the failed cnDBTier cluster to one of the working cnDBTier clusters.

- cURL is installed in the environment from which the commands are run.

Note:

All the georeplication cnDBTier clusters must be on the same cnDBTier version to restore from georeplication failures. However, you can perform georeplication recovery in a cnDBTier cluster with a higher version using a backup taken from a cnDBTier cluster with a lower version.

To resolve this georeplication failure in one cluster, restore the failed cnDBTier cluster to the latest database backup using automated backup and restore, and then re-establish the replication channels between cnDBTier cluster1, cnDBTier cluster2, and cnDBTier cluster3.

Procedure:

- Check the status of the three cnDBTier clusters. Follow the Verifying Cluster Status Using cnDBTier procedure to check the status of cnDBTier cluster1, cnDBTier cluster2, and cnDBTier cluster3.

- Run the following commands to mark the gr_state of the failed cnDBTier cluster as FAILED in the failed cnDBTier cluster (if it is accessible) and in one of the healthy cnDBTier clusters:

- Run the following command to get the replication service LoadBalancer IP for the cluster that must be marked as failed:

$ export IP=$(kubectl get svc -n <namespace of failed cluster> | grep repl | awk '{print $4}' | head -n 1 )

- Run the following command to get the replication service LoadBalancer port for the cluster that must be marked as failed:

$ export PORT=$(kubectl get svc -n <namespace of failed cluster> | grep repl | awk '{print $5}' | cut -d '/' -f 1 | cut -d ':' -f 1 | head -n 1)

- Mark the cnDBTier cluster as failed in the failed cnDBTier cluster, if it is accessible. HTTP response code 200 indicates that the cluster's gr_state is marked as failed successfully.

$ curl -i -X POST http://$IP:$PORT/db-tier/gr-recovery/site/<name of failed cluster>/failed

- Mark the cnDBTier cluster as failed in one of the other healthy remote cnDBTier clusters:

- Get the replication service LoadBalancer IP of the healthy cluster:

$ export IP=$(kubectl get svc -n <namespace of healthy cluster> | grep repl | awk '{print $4}' | head -n 1 )

- Get the replication service LoadBalancer port of the healthy cluster:

$ export PORT=$(kubectl get svc -n <namespace of healthy cluster> | grep repl | awk '{print $5}' | cut -d '/' -f 1 | cut -d ':' -f 1 | head -n 1)

- Mark the cnDBTier cluster as failed in one of the healthy cnDBTier clusters. HTTP response code 200 indicates that the cluster's gr_state is marked as failed successfully.

$ curl -i -X POST http://$IP:$PORT/db-tier/gr-recovery/remotesite/<name of failed cluster>/failed

Note:

For more information about georeplication recovery API responses, error codes, and curl commands for an HTTPS-enabled replication service, see Fault Recovery APIs.
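The marking step above can be sketched as a few shell functions. This is an illustration under stated assumptions, not a cnDBTier tool. The helper names repl_lb_ip_port, gr_failed_url, and mark_failed are hypothetical. repl_lb_ip_port assumes the default kubectl get svc columns (NAME, TYPE, CLUSTER-IP, EXTERNAL-IP, PORT(S), AGE), as the documented awk and cut pipeline does. The sketch handles only plain HTTP; for an HTTPS-enabled replication service, see Fault Recovery APIs.

```shell
#!/usr/bin/env bash
# Sketch of the "mark gr_state as failed" step. All helper names are hypothetical.

# Mirrors the documented grep/awk/cut pipeline: from `kubectl get svc` output
# on stdin, take the first line containing "repl" and print its EXTERNAL-IP
# (column 4) and service port (column 5, formatted as "port:nodePort/proto").
repl_lb_ip_port() {
  awk '/repl/ {
    split($5, p, "/")        # "80:31234/TCP" -> "80:31234"
    split(p[1], q, ":")      # "80:31234"     -> "80"
    print $4, q[1]
    exit
  }'
}

# Builds the URL that marks <failed-site> as failed.
#   scope "site"       -> call made on the failed cluster itself
#   scope "remotesite" -> call made on a healthy remote cluster
gr_failed_url() {
  local ip=$1 port=$2 scope=$3 failed_site=$4
  case "$scope" in
    site|remotesite) ;;
    *) echo "scope must be site or remotesite" >&2; return 2 ;;
  esac
  printf 'http://%s:%s/db-tier/gr-recovery/%s/%s/failed\n' "$ip" "$port" "$scope" "$failed_site"
}

# POSTs to the URL and succeeds only on HTTP 200, which the procedure
# treats as "gr_state marked as failed successfully".
mark_failed() {
  local url code
  url=$(gr_failed_url "$@") || return
  code=$(curl -s -o /dev/null -w '%{http_code}' -X POST "$url")
  [ "$code" = "200" ]
}

# Example usage (bash), marking cluster1 as failed on itself and on cluster2:
#   read -r IP PORT < <(kubectl get svc -n cluster1 | repl_lb_ip_port)
#   mark_failed "$IP" "$PORT" site cluster1 && echo "marked on cluster1"
#   read -r IP PORT < <(kubectl get svc -n cluster2 | repl_lb_ip_port)
#   mark_failed "$IP" "$PORT" remotesite cluster1 && echo "marked on cluster2"
```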


For example:

- If cnDBTier cluster1 has failed, perform the following:

- Mark the cnDBTier cluster as failed in cnDBTier cluster1:

- Get the replication service LoadBalancer IP of cluster1:

$ export IP=$(kubectl get svc -n cluster1 | grep repl | awk '{print $4}' | head -n 1 )

- Get the replication service LoadBalancer port of cluster1:

$ export PORT=$(kubectl get svc -n cluster1 | grep repl | awk '{print $5}' | cut -d '/' -f 1 | cut -d ':' -f 1 | head -n 1)

- Run the following command to mark cnDBTier cluster1 as failed, if it is accessible. HTTP response code 200 indicates that the cluster's gr_state is marked as failed successfully:

$ curl -i -X POST http://$IP:$PORT/db-tier/gr-recovery/site/cluster1/failed


- Mark the unhealthy cnDBTier cluster as failed in one of the healthy cnDBTier clusters:

- Get the replication service LoadBalancer IP of the healthy cnDBTier cluster2:

$ export IP=$(kubectl get svc -n cluster2 | grep repl | awk '{print $4}' | head -n 1 )

- Get the replication service LoadBalancer port of cluster2:

$ export PORT=$(kubectl get svc -n cluster2 | grep repl | awk '{print $5}' | cut -d '/' -f 1 | cut -d ':' -f 1 | head -n 1)

- Run the following command to mark cluster1 as failed:

$ curl -i -X POST http://$IP:$PORT/db-tier/gr-recovery/remotesite/cluster1/failed


- If cnDBTier cluster2 has failed, perform the following:

- Mark the cnDBTier cluster as failed in cnDBTier cluster2:

- Get the replication service LoadBalancer IP of cluster2:

$ export IP=$(kubectl get svc -n cluster2 | grep repl | awk '{print $4}' | head -n 1 )

- Get the replication service LoadBalancer port of cluster2:

$ export PORT=$(kubectl get svc -n cluster2 | grep repl | awk '{print $5}' | cut -d '/' -f 1 | cut -d ':' -f 1 | head -n 1)

- Run the following command to mark cnDBTier cluster2 as failed, if it is accessible. HTTP response code 200 indicates that the cluster's gr_state is marked as failed successfully:

$ curl -i -X POST http://$IP:$PORT/db-tier/gr-recovery/site/cluster2/failed


- Mark the unhealthy cnDBTier cluster as failed in one of the healthy cnDBTier clusters:

- Get the replication service LoadBalancer IP of the healthy cnDBTier cluster1:

$ export IP=$(kubectl get svc -n cluster1 | grep repl | awk '{print $4}' | head -n 1 )

- Get the replication service LoadBalancer port of cluster1:

$ export PORT=$(kubectl get svc -n cluster1 | grep repl | awk '{print $5}' | cut -d '/' -f 1 | cut -d ':' -f 1 | head -n 1)

- Run the following command to mark cluster2 as failed:

$ curl -i -X POST http://$IP:$PORT/db-tier/gr-recovery/remotesite/cluster2/failed


- If cnDBTier cluster3 has failed, perform the following:

- Mark the cnDBTier cluster as failed in the unhealthy cnDBTier cluster, if it is accessible:

- Get the replication service LoadBalancer IP of cluster3:

$ export IP=$(kubectl get svc -n cluster3 | grep repl | awk '{print $4}' | head -n 1 )

- Get the replication service LoadBalancer port of cluster3:

$ export PORT=$(kubectl get svc -n cluster3 | grep repl | awk '{print $5}' | cut -d '/' -f 1 | cut -d ':' -f 1 | head -n 1)

- Run the following command to mark cnDBTier cluster3 as failed in the unhealthy cnDBTier cluster, if it is accessible. HTTP response code 200 indicates that the cluster's gr_state is marked as failed successfully:

$ curl -i -X POST http://$IP:$PORT/db-tier/gr-recovery/site/cluster3/failed


- Mark the unhealthy cnDBTier cluster3 as failed in one of the healthy cnDBTier clusters:

- Get the replication service LoadBalancer IP of the healthy cnDBTier cluster1:

$ export IP=$(kubectl get svc -n cluster1 | grep repl | awk '{print $4}' | head -n 1 )

- Get the replication service LoadBalancer port of cluster1:

$ export PORT=$(kubectl get svc -n cluster1 | grep repl | awk '{print $5}' | cut -d '/' -f 1 | cut -d ':' -f 1 | head -n 1)

- Run the following command to mark cluster3 as failed:

$ curl -i -X POST http://$IP:$PORT/db-tier/gr-recovery/remotesite/cluster3/failed
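The three examples above follow one pattern: the failed cluster is marked with the site endpoint on itself (if it is accessible) and with the remotesite endpoint on a healthy peer. The examples choose cluster2 as the peer when cluster1 fails and cluster1 otherwise, although the general step accepts any healthy cluster. The following sketch prints the namespace to query and the endpoint path for both calls. The function names are hypothetical, and the namespaces are assumed to match the cluster names, as they do in the examples.

```shell
#!/usr/bin/env bash
# Hypothetical helpers that encode the three examples above.

# Healthy cluster used for the "remotesite" call, as chosen in the examples.
healthy_peer_for() {
  case "$1" in
    cluster1) echo cluster2 ;;
    cluster2|cluster3) echo cluster1 ;;
    *) echo "unknown cluster: $1" >&2; return 1 ;;
  esac
}

# Prints "<namespace to query> <endpoint path>" for both calls. The namespace
# is the one used to look up the replication service LoadBalancer IP and port.
mark_failed_plan() {
  local failed=$1 peer
  peer=$(healthy_peer_for "$failed") || return
  echo "$failed /db-tier/gr-recovery/site/$failed/failed"
  echo "$peer /db-tier/gr-recovery/remotesite/$failed/failed"
}

# Example:
#   mark_failed_plan cluster3
#   cluster3 /db-tier/gr-recovery/site/cluster3/failed
#   cluster1 /db-tier/gr-recovery/remotesite/cluster3/failed
```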


- Run the following commands to restore the cnDBTier cluster, depending on the status of the cnDBTier cluster.

Follow step a if the cnDBTier cluster has fatal errors, such as PVC corruption, an inaccessible PVC, or other fatal errors.

Follow steps b and c if the cnDBTier cluster does not have any fatal errors and the database must be restored because of the georeplication failure.
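The decision in this step can be expressed as a small function. This is only a sketch. It assumes that you have already determined the cluster status (UP or DOWN) and whether fatal errors such as PVC corruption exist. The function name choose_restore_path and its output labels are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: maps the observed state of the cluster to be restored
# onto the restore path described in this step.
#   $1: cluster status, "UP" or "DOWN"
#   $2: "yes" if fatal errors (for example, PVC corruption) exist, else "no"
choose_restore_path() {
  local status=$1 fatal=$2
  if [ "$fatal" = "yes" ] || [ "$status" = "DOWN" ]; then
    echo "reinstall"          # step a: reinstall and restore from the remote clusters
  elif [ "$status" = "UP" ] && [ "$fatal" = "no" ]; then
    echo "gr-recovery-api"    # steps b and c: start GR recovery through the API
  else
    echo "unknown input: status=$status fatal=$fatal" >&2
    return 1
  fi
}
```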

- If the cnDBTier cluster that needs to be restored has fatal errors, or if the cnDBTier cluster status is DOWN, reinstall the cnDBTier cluster to restore the database from the remote cnDBTier clusters.

Follow the Reinstalling cnDBTier Cluster procedure to install the cnDBTier cluster, configuring the replication service IP addresses of the remote cnDBTier clusters so that the database is restored from them.

For example:

- If cnDBTier cluster1 needs to be restored, uninstall and reinstall cnDBTier cluster1 using the above procedure. This restores the database from the remote cnDBTier clusters when the replication service IP addresses of cnDBTier cluster2 and cnDBTier cluster3 are configured in cnDBTier cluster1.

- If cnDBTier cluster2 needs to be restored, uninstall and reinstall cnDBTier cluster2 using the above procedure. This restores the database from the remote cnDBTier clusters when the replication service IP addresses of cnDBTier cluster1 and cnDBTier cluster3 are configured in cnDBTier cluster2.

- If cnDBTier cluster3 needs to be restored, uninstall and reinstall cnDBTier cluster3 using the above procedure. This restores the database from the remote cnDBTier clusters when the replication service IP addresses of cnDBTier cluster1 and cnDBTier cluster2 are configured in cnDBTier cluster3.

- Create the required NF-specific user accounts and grants in the reinstalled cnDBTier to match the NF users and grants of the healthy cluster. For a sample procedure, see Creating NF Users.

Note:

For more details about creating NF-specific user accounts and grants, refer to the NF-specific fault recovery guide.

- If the cnDBTier cluster that needs to be restored is UP and does not have any fatal errors, run the following commands to restore the database from the remote cnDBTier clusters:

- Get the replication service LoadBalancer IP of the cluster where georeplication needs to be restored:

$ export IP=$(kubectl get svc -n <namespace of failed cluster> | grep repl | awk '{print $4}' | head -n 1 )

- Get the replication service LoadBalancer port of the cluster where georeplication needs to be restored:

$ export PORT=$(kubectl get svc -n <namespace of failed cluster> | grep repl | awk '{print $5}' | cut -d '/' -f 1 | cut -d ':' -f 1 | head -n 1)

- Run the following command to start the GR procedure on the failed cluster to restore the cluster from the remote cnDBTier clusters:

$ curl -i -X POST http://$IP:$PORT/db-tier/gr-recovery/site/<name of failed cluster>/start

Or, run the following command to start the GR procedure on the failed cluster by selecting the name of the cluster where the backup is taken:

$ curl -i -X POST http://$IP:$PORT/db-tier/gr-recovery/site/<name of failed cluster>/grbackupsite/<cluster name where backup is initiated>/start

Note:

For more information about georeplication recovery API responses, error codes, and curl commands for an HTTPS-enabled replication service, see Fault Recovery APIs.

For example:

- If cnDBTier cluster1 needs to be restored, run the following commands to start the georeplication procedure on the failed cluster (cluster1) to restore the cluster from the remote cnDBTier clusters:

- Get the replication service

LoadBalancer IP for

cluster1:

$ export IP=$(kubectl get svc -n cluster1 | grep repl | awk '{print $4}' | head -n 1 ) - Get the replication service

LoadBalancer port for

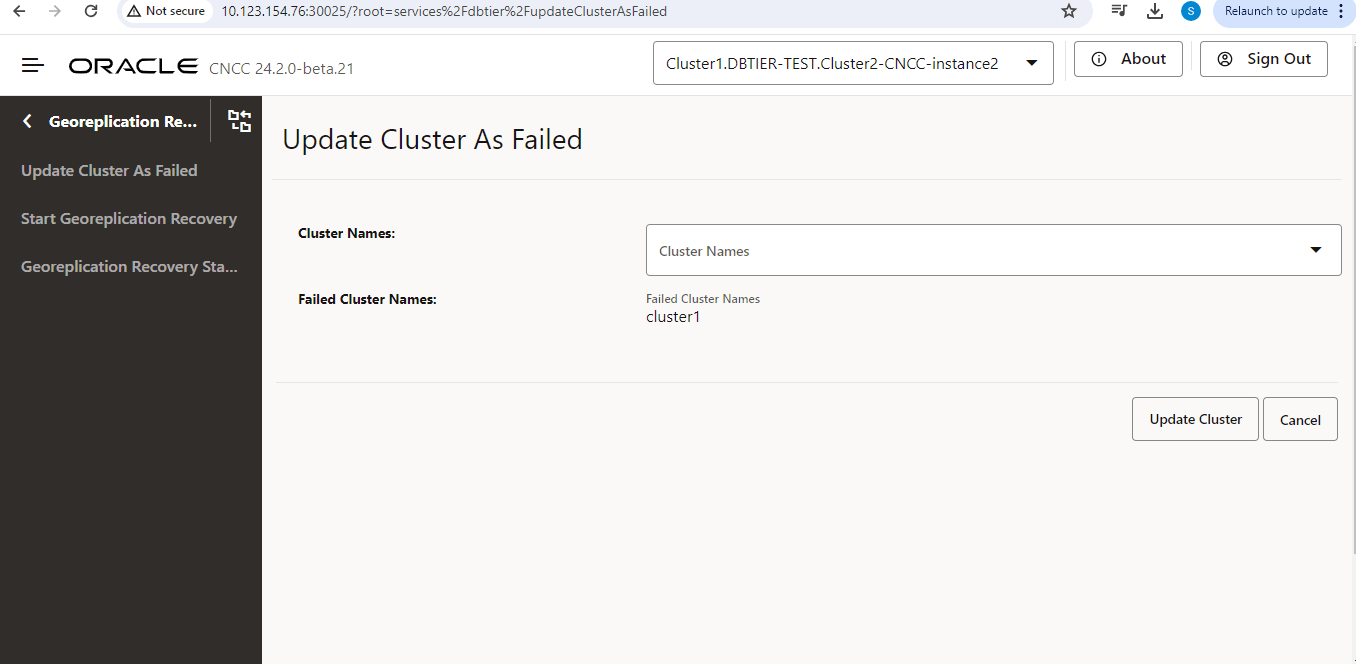

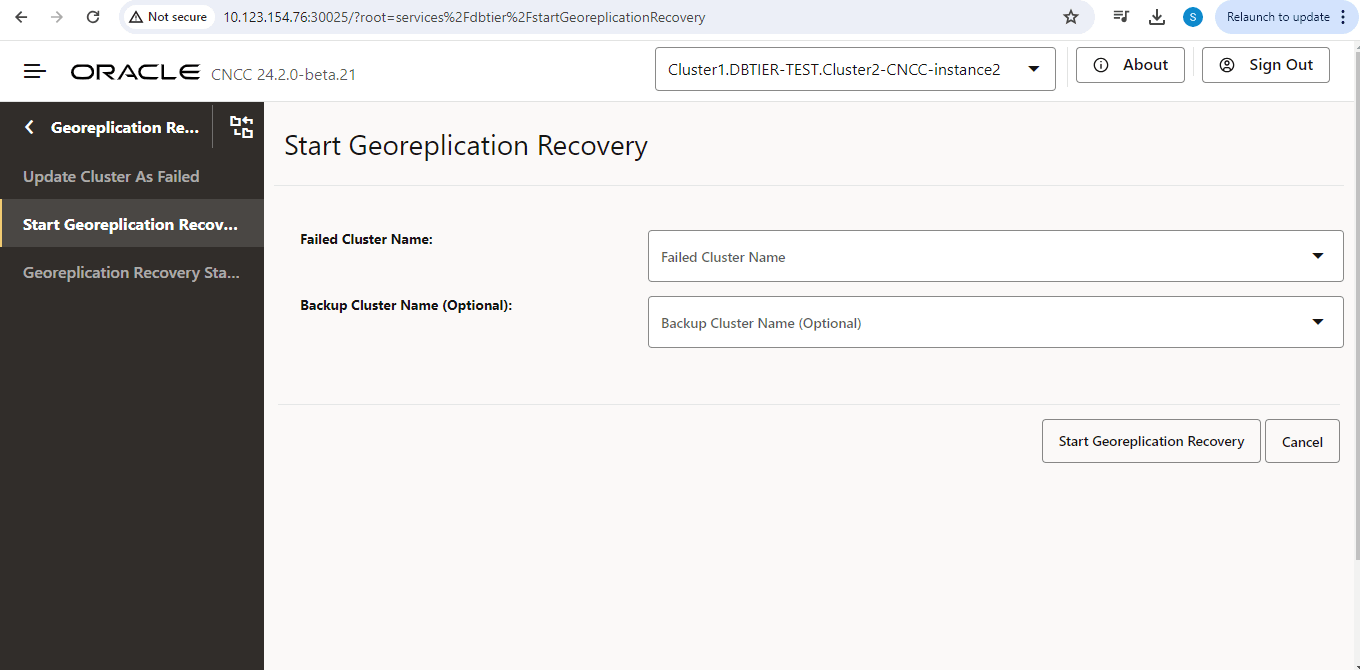

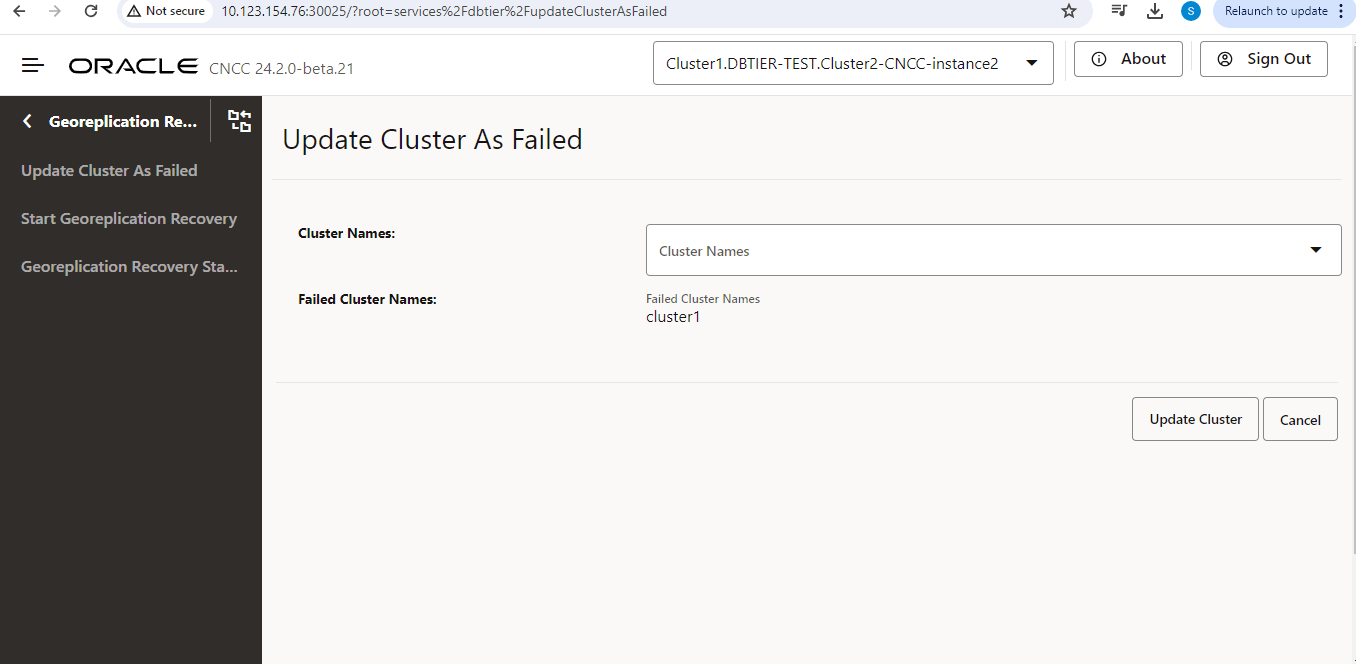

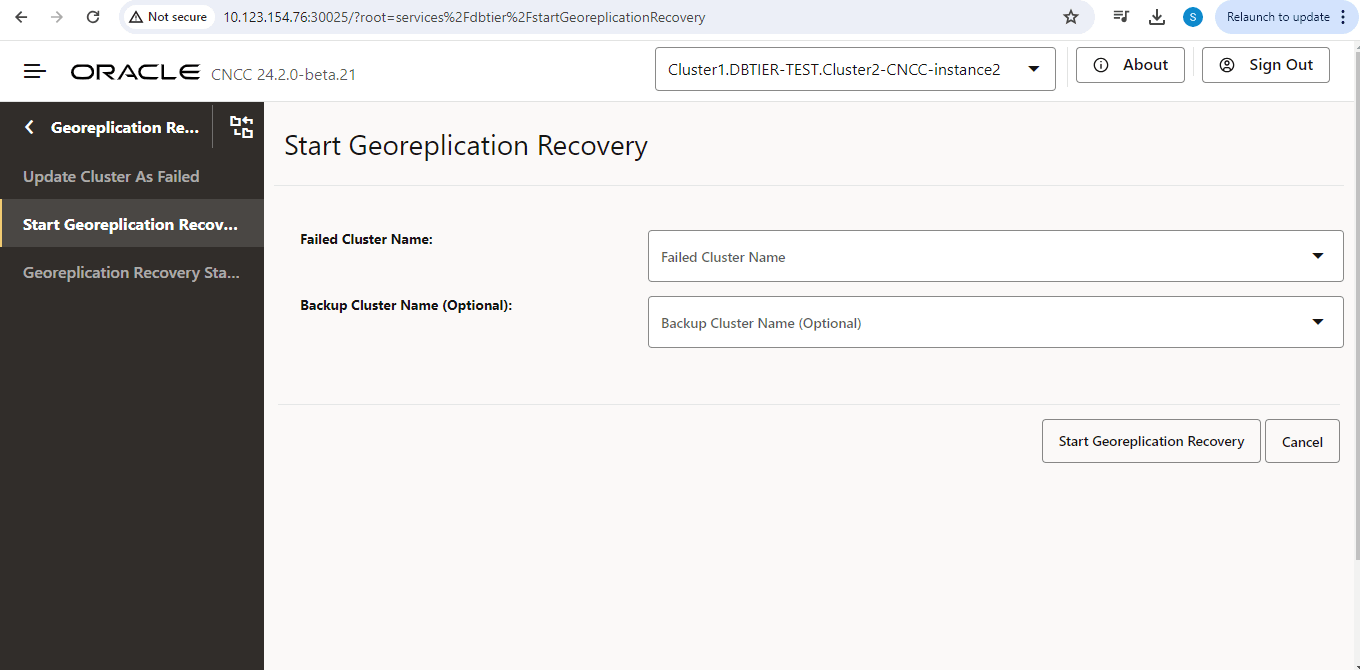

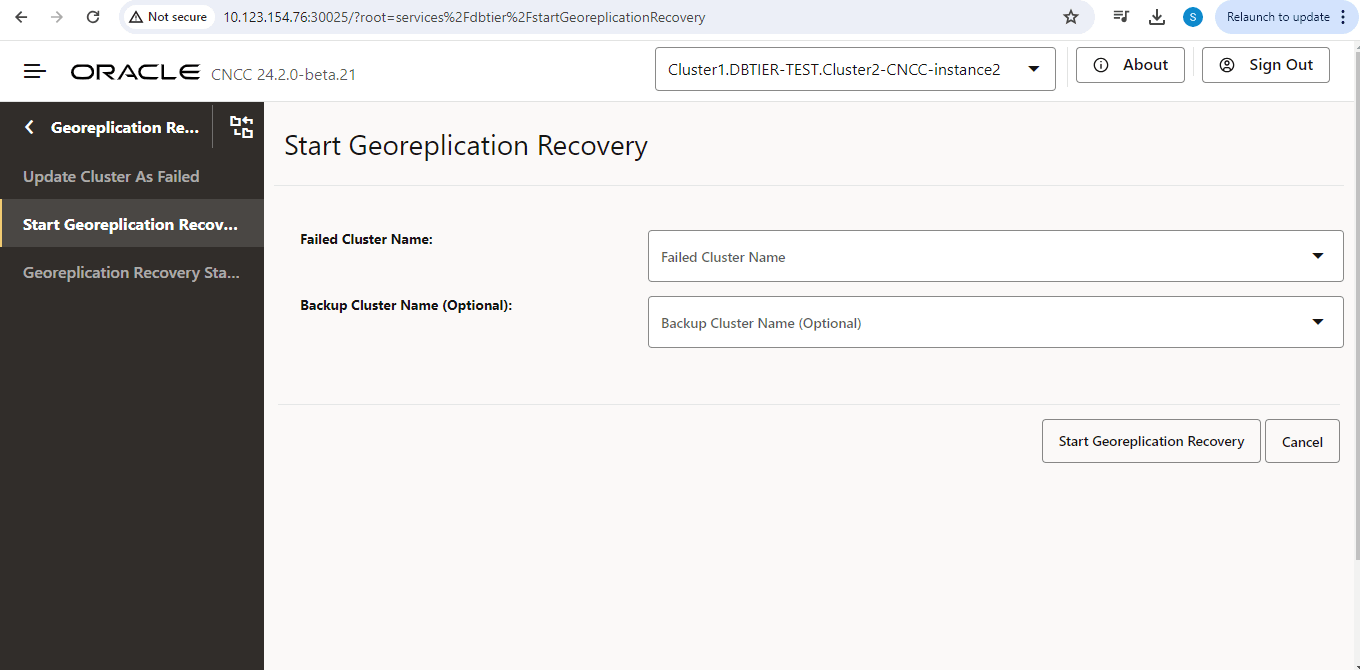

cluster1: