3 Finding Error and Status Information

Effective troubleshooting relies on the availability of useful and detailed information. Oracle Communications Cloud Native Core, Converged Policy (Policy) provides several sources of information that can help in the troubleshooting process.

3.1 Logs

Log files record system events together with the date and time at which they occurred, which makes them valuable troubleshooting tools. Logs not only indicate that specific events occurred, they also provide important clues about the chain of events that led to an error or problem.

Collecting Logs

- Run the following command to get the pod details:

$ kubectl -n <namespace_name> get pods

- Collect the logs from a specific pod or container:

$ kubectl logs <podname> -n <namespace> -c <containername>

- Store the log in a file using the following command:

$ kubectl logs <podname> -n <namespace> > <filename>

- (Optional) Stream the log to a file, starting with the last <number of lines> lines of the log (for example, --tail 100):

$ kubectl logs <podname> -n <namespace> -f --tail <number of lines> > <filename>

For more information on kubectl commands, see the Kubernetes website.
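To collect logs from every pod in a namespace in one pass, the commands above can be scripted. The following is a minimal Python sketch, not an Oracle-provided tool. It assumes kubectl is on the PATH and already configured for the cluster, and the function names (build_logs_command, list_pods, collect_namespace_logs) are hypothetical. It relies only on standard kubectl options: `--all-containers=true`, `--tail`, and `-o jsonpath`.

```python
import subprocess
from pathlib import Path
from typing import List, Optional


def build_logs_command(pod: str, namespace: str, container: Optional[str] = None,
                       tail: Optional[int] = None) -> List[str]:
    """Build a `kubectl logs` argument list equivalent to the commands above."""
    cmd = ["kubectl", "logs", pod, "-n", namespace]
    if container:
        cmd += ["-c", container]
    else:
        # No container given: ask kubectl for the logs of every container in the pod.
        cmd.append("--all-containers=true")
    if tail is not None:
        cmd += ["--tail", str(tail)]
    return cmd


def list_pods(namespace: str) -> List[str]:
    """Return the pod names in a namespace (same data as `kubectl get pods`)."""
    out = subprocess.run(
        ["kubectl", "get", "pods", "-n", namespace,
         "-o", "jsonpath={.items[*].metadata.name}"],
        check=True, capture_output=True, text=True,
    ).stdout
    return out.split()


def collect_namespace_logs(namespace: str, outdir: str, tail: Optional[int] = None) -> None:
    """Write one <pod>.log file per pod into outdir."""
    target = Path(outdir)
    target.mkdir(parents=True, exist_ok=True)
    for pod in list_pods(namespace):
        with open(target / f"{pod}.log", "w") as fh:
            # check=False: a failing pod (for example, one still starting) must not stop the loop;
            # its error text is written to its own file instead.
            subprocess.run(build_logs_command(pod, namespace, tail=tail),
                           stdout=fh, stderr=subprocess.STDOUT, check=False)
```

For example, `collect_namespace_logs("occnp", "./occnp-logs", tail=1000)` would write one file per pod in the occnp namespace under ./occnp-logs.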

3.1.1 Log Levels

This section provides information on log levels supported by Policy.

A log level defines the severity of a log message, so that logs can be filtered according to system requirements. For instance, to separate critical information about your system from informational log messages, set a filter in Kibana to view only messages with the WARN log level.

As shown in the following image, after the filter is added, only log messages with the level WARN are displayed:

Supported Log Levels

- TRACE: Describes events that show the step-by-step execution of the code. These events can be ignored during standard operation but may be useful during extended debugging sessions.

- DEBUG: Used for events that are useful during software debugging, when more granular information is needed.

- INFO: The standard log level, indicating that something happened, for example, that the application entered a certain state.

- WARN: Indicates that something unexpected happened in the application, such as a problem or a situation that might disturb one of the processes, but that the application has not failed. Use the WARN level for situations that are unexpected but from which the code can continue working.

- ERROR: Used when the application encounters an issue that prevents one or more functions from working properly.
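Kibana applies the level filter for you. When working with a log file collected with kubectl logs, the same filtering can be sketched in Python. This sketch assumes, as in the sample messages in this chapter, that each line is one JSON object with a top-level `level` field; `filter_by_level` is a hypothetical helper, and the severity order TRACE < DEBUG < INFO < WARN < ERROR follows the list above.

```python
import json
from typing import Dict, Iterable, List

# Supported levels, from least to most severe.
LEVELS = ["TRACE", "DEBUG", "INFO", "WARN", "ERROR"]
RANK = {name: i for i, name in enumerate(LEVELS)}


def filter_by_level(lines: Iterable[str], minimum: str = "WARN",
                    exact: bool = False) -> List[Dict]:
    """Return parsed records at or above `minimum` (or exactly at it if `exact`)."""
    threshold = RANK[minimum]
    selected = []
    for line in lines:
        line = line.strip()
        if not line.startswith("{"):
            continue  # skip non-JSON lines such as startup banners
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip truncated or malformed records
        rank = RANK.get(record.get("level", ""))
        if rank is None:
            continue  # unknown or missing level
        matches = (rank == threshold) if exact else (rank >= threshold)
        if matches:
            selected.append(record)
    return selected
```

For example, inside `with open("<filename>") as fh:`, calling `filter_by_level(fh, "WARN", exact=True)` keeps only WARN records, matching the Kibana filter described above, while the default (`exact=False`) also keeps ERROR records.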

Configuring Log Levels

To view logging configurations and update logging levels, use the Logging Level page under Logging Configurations on the CNC Console. For more information, see the section "Log Level" in Oracle Communications Cloud Native Core, Converged Policy User's Guide.

Log Message Examples with Different Level Values

The following is a sample log message with level ERROR:

{

"_index": "logstash-2021.08.15",

"_type": "_doc",

"_id": "DiuOSHsBX9U84vckBYSO",

"_version": 1,

"_score": null,

"_source": {

"stream": "stdout",

"docker": {

"container_id": "fc7c3e68ba775ddca4e7f5d0603c8ba1bc414703e7d28f6177012893ca342a3b"

},

"kubernetes": {

"container_name": "user-service",

"namespace_name": "mdc3",

"pod_name": "mdc3-cnpolicy-occnp-udr-connector-697f7f5b8b-9l2jz",

"container_image": "titans-1-bastion-1:5000/occnp/oc-pcf-user:1.14.0-nb-20210804",

"container_image_id": "titans-1-bastion-1:5000/occnp/oc-pcf-user@sha256:d66b1017fd8b1946744a2115bc088349c95f93db17626a20fbb11e25ff543f83",

"pod_id": "f0b233bb-10a1-4b4c-9b77-f864659b9c3e",

"host": "titans-1-k8s-node-2",

"labels": {

"application": "occnp",

"engVersion": "1.14.0-nb-20210804",

"microservice": "occnp_pcf_user",

"mktgVersion": "1.0.0",

"pod-template-hash": "697f7f5b8b",

"vendor": "Oracle",

"app_kubernetes_io/instance": "mdc3-cnpolicy",

"app_kubernetes_io/managed-by": "Helm",

"app_kubernetes_io/name": "user-service",

"app_kubernetes_io/part-of": "occnp",

"app_kubernetes_io/version": "1.0.0",

"helm_sh/chart": "user-service-1.14.0-nb-20210804",

"io_kompose_service": "mdc3-cnpolicy-occnp-udr-connector"

},

"master_url": "https://10.233.0.1:443/api",

"namespace_id": "aadab0ec-ce08-4f81-b70c-2ffda2f39055",

"namespace_labels": {

"istio-injection": ""

}

},

"instant": {

"epochSecond": 1629009871,

"nanoOfSecond": 244837074

},

"thread": "CmAgentTask1",

"level": "ERROR",

"loggerName": "ocpm.cne.common.cmclient.CmRestClient",

"message": "Error performing GET operation for URI /pcf/nf-common-component/v1/nrf-client-nfmanagement/nfProfileList",

"thrown": {

"commonElementCount": 0,

"localizedMessage": "I/O error on GET request for \"http://mdc3-cnpolicy-occnp-config-mgmt:8000/pcf/nf-common-component/v1/nrf-client-nfmanagement/nfProfileList\": Connect to mdc3-cnpolicy-occnp-config-mgmt:8000 [mdc3-cnpolicy-occnp-config-mgmt/10.233.53.78] failed: Connect timed out; nested exception is org.apache.http.conn.ConnectTimeoutException: Connect to mdc3-cnpolicy-occnp-config-mgmt:8000 [mdc3-cnpolicy-occnp-config-mgmt/10.233.53.78] failed: Connect timed out",

"message": "I/O error on GET request for \"http://mdc3-cnpolicy-occnp-config-mgmt:8000/pcf/nf-common-component/v1/nrf-client-nfmanagement/nfProfileList\": Connect to mdc3-cnpolicy-occnp-config-mgmt:8000 [mdc3-cnpolicy-occnp-config-mgmt/10.233.53.78] failed: Connect timed out; nested exception is org.apache.http.conn.ConnectTimeoutException: Connect to mdc3-cnpolicy-occnp-config-mgmt:8000 [mdc3-cnpolicy-occnp-config-mgmt/10.233.53.78] failed: Connect timed out",

"name": "org.springframework.web.client.ResourceAccessException",

"cause": {

"commonElementCount": 14,

"localizedMessage": "Connect to mdc3-cnpolicy-occnp-config-mgmt:8000 [mdc3-cnpolicy-occnp-config-mgmt/10.233.53.78] failed: Connect timed out",

"message": "Connect to mdc3-cnpolicy-occnp-config-mgmt:8000 [mdc3-cnpolicy-occnp-config-mgmt/10.233.53.78] failed: Connect timed out",

"name": "org.apache.http.conn.ConnectTimeoutException",

"cause": {

"commonElementCount": 14,

"localizedMessage": "Connect timed out",

"message": "Connect timed out",

"name": "java.net.SocketTimeoutException",

"extendedStackTrace": "java.net.SocketTimeoutException: Connect timed out\n\tat sun.nio.ch.NioSocketImpl.timedFinishConnect(NioSocketImpl.java:546) ~[?:?]\n\tat sun.nio.ch.NioSocketImpl.connect(NioSocketImpl.java:597) ~[?:?]\n\tat java.net.SocksSocketImpl.connect(SocksSocketImpl.java:333) ~[?:?]\n\tat java.net.Socket.connect(Socket.java:645) ~[?:?]\n\tat org.apache.http.conn.socket.PlainConnectionSocketFactory.connectSocket(PlainConnectionSocketFactory.java:75) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.conn.DefaultHttpClientConnectionOperator.connect(DefaultHttpClientConnectionOperator.java:142) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.conn.PoolingHttpClientConnectionManager.connect(PoolingHttpClientConnectionManager.java:376) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.MainClientExec.establishRoute(MainClientExec.java:393) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.MainClientExec.execute(MainClientExec.java:236) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.ProtocolExec.execute(ProtocolExec.java:186) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.RetryExec.execute(RetryExec.java:89) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.RedirectExec.execute(RedirectExec.java:110) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.client.InternalHttpClient.doExecute(InternalHttpClient.java:185) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:83) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:56) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.springframework.http.client.HttpComponentsClientHttpRequest.executeInternal(HttpComponentsClientHttpRequest.java:87) ~[spring-web-5.3.4.jar!/:5.3.4]\n\tat 
org.springframework.http.client.AbstractBufferingClientHttpRequest.executeInternal(AbstractBufferingClientHttpRequest.java:48) ~[spring-web-5.3.4.jar!/:5.3.4]\n\tat org.springframework.http.client.AbstractClientHttpRequest.execute(AbstractClientHttpRequest.java:66) ~[spring-web-5.3.4.jar!/:5.3.4]\n\tat org.springframework.web.client.RestTemplate.doExecute(RestTemplate.java:776) ~[spring-web-5.3.4.jar!/:5.3.4]\n"

},

"extendedStackTrace": "org.apache.http.conn.ConnectTimeoutException: Connect to mdc3-cnpolicy-occnp-config-mgmt:8000 [mdc3-cnpolicy-occnp-config-mgmt/10.233.53.78] failed: Connect timed out\n\tat org.apache.http.impl.conn.DefaultHttpClientConnectionOperator.connect(DefaultHttpClientConnectionOperator.java:151) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.conn.PoolingHttpClientConnectionManager.connect(PoolingHttpClientConnectionManager.java:376) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.MainClientExec.establishRoute(MainClientExec.java:393) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.MainClientExec.execute(MainClientExec.java:236) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.ProtocolExec.execute(ProtocolExec.java:186) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.RetryExec.execute(RetryExec.java:89) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.RedirectExec.execute(RedirectExec.java:110) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.client.InternalHttpClient.doExecute(InternalHttpClient.java:185) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:83) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:56) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.springframework.http.client.HttpComponentsClientHttpRequest.executeInternal(HttpComponentsClientHttpRequest.java:87) ~[spring-web-5.3.4.jar!/:5.3.4]\n\tat org.springframework.http.client.AbstractBufferingClientHttpRequest.executeInternal(AbstractBufferingClientHttpRequest.java:48) ~[spring-web-5.3.4.jar!/:5.3.4]\n\tat org.springframework.http.client.AbstractClientHttpRequest.execute(AbstractClientHttpRequest.java:66) ~[spring-web-5.3.4.jar!/:5.3.4]\n\tat 
org.springframework.web.client.RestTemplate.doExecute(RestTemplate.java:776) ~[spring-web-5.3.4.jar!/:5.3.4]\nCaused by: java.net.SocketTimeoutException: Connect timed out\n\tat sun.nio.ch.NioSocketImpl.timedFinishConnect(NioSocketImpl.java:546) ~[?:?]\n\tat sun.nio.ch.NioSocketImpl.connect(NioSocketImpl.java:597) ~[?:?]\n\tat java.net.SocksSocketImpl.connect(SocksSocketImpl.java:333) ~[?:?]\n\tat java.net.Socket.connect(Socket.java:645) ~[?:?]\n\tat org.apache.http.conn.socket.PlainConnectionSocketFactory.connectSocket(PlainConnectionSocketFactory.java:75) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.conn.DefaultHttpClientConnectionOperator.connect(DefaultHttpClientConnectionOperator.java:142) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.conn.PoolingHttpClientConnectionManager.connect(PoolingHttpClientConnectionManager.java:376) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.MainClientExec.establishRoute(MainClientExec.java:393) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.MainClientExec.execute(MainClientExec.java:236) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.ProtocolExec.execute(ProtocolExec.java:186) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.RetryExec.execute(RetryExec.java:89) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.RedirectExec.execute(RedirectExec.java:110) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.client.InternalHttpClient.doExecute(InternalHttpClient.java:185) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:83) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:56) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.springframework.http.client.HttpComponentsClientHttpRequest.executeInternal(HttpComponentsClientHttpRequest.java:87) 
~[spring-web-5.3.4.jar!/:5.3.4]\n\tat org.springframework.http.client.AbstractBufferingClientHttpRequest.executeInternal(AbstractBufferingClientHttpRequest.java:48) ~[spring-web-5.3.4.jar!/:5.3.4]\n\tat org.springframework.http.client.AbstractClientHttpRequest.execute(AbstractClientHttpRequest.java:66) ~[spring-web-5.3.4.jar!/:5.3.4]\n\tat org.springframework.web.client.RestTemplate.doExecute(RestTemplate.java:776) ~[spring-web-5.3.4.jar!/:5.3.4]\n\t... 14 more\n"

},

"extendedStackTrace": "org.springframework.web.client.ResourceAccessException: I/O error on GET request for \"http://mdc3-cnpolicy-occnp-config-mgmt:8000/pcf/nf-common-component/v1/nrf-client-nfmanagement/nfProfileList\": Connect to mdc3-cnpolicy-occnp-config-mgmt:8000 [mdc3-cnpolicy-occnp-config-mgmt/10.233.53.78] failed: Connect timed out; nested exception is org.apache.http.conn.ConnectTimeoutException: Connect to mdc3-cnpolicy-occnp-config-mgmt:8000 [mdc3-cnpolicy-occnp-config-mgmt/10.233.53.78] failed: Connect timed out\n\tat org.springframework.web.client.RestTemplate.doExecute(RestTemplate.java:785) ~[spring-web-5.3.4.jar!/:5.3.4]\n\tat org.springframework.web.client.RestTemplate.execute(RestTemplate.java:751) ~[spring-web-5.3.4.jar!/:5.3.4]\n\tat org.springframework.web.client.RestTemplate.getForEntity(RestTemplate.java:377) ~[spring-web-5.3.4.jar!/:5.3.4]\n\tat ocpm.cne.common.cmclient.CmRestClient.lambda$get$0(CmRestClient.java:54) ~[cne-common-0.0.8-SNAPSHOT-dev.jar!/:?]\n\tat org.springframework.retry.support.RetryTemplate.doExecute(RetryTemplate.java:329) ~[spring-retry-1.3.1.jar!/:?]\n\tat org.springframework.retry.support.RetryTemplate.execute(RetryTemplate.java:209) ~[spring-retry-1.3.1.jar!/:?]\n\tat ocpm.cne.common.cmclient.CmRestClient.get(CmRestClient.java:53) [cne-common-0.0.8-SNAPSHOT-dev.jar!/:?]\n\tat ocpm.cne.common.cmclient.CmRestClientTask.run(CmRestClientTask.java:32) [cne-common-0.0.8-SNAPSHOT-dev.jar!/:?]\n\tat org.springframework.scheduling.support.DelegatingErrorHandlingRunnable.run(DelegatingErrorHandlingRunnable.java:54) [spring-context-5.3.4.jar!/:5.3.4]\n\tat java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:515) [?:?]\n\tat java.util.concurrent.FutureTask.runAndReset(FutureTask.java:305) [?:?]\n\tat java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:305) [?:?]\n\tat java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1130) [?:?]\n\tat 
java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:630) [?:?]\n\tat java.lang.Thread.run(Thread.java:831) [?:?]\nCaused by: org.apache.http.conn.ConnectTimeoutException: Connect to mdc3-cnpolicy-occnp-config-mgmt:8000 [mdc3-cnpolicy-occnp-config-mgmt/10.233.53.78] failed: Connect timed out\n\tat org.apache.http.impl.conn.DefaultHttpClientConnectionOperator.connect(DefaultHttpClientConnectionOperator.java:151) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.conn.PoolingHttpClientConnectionManager.connect(PoolingHttpClientConnectionManager.java:376) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.MainClientExec.establishRoute(MainClientExec.java:393) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.MainClientExec.execute(MainClientExec.java:236) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.ProtocolExec.execute(ProtocolExec.java:186) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.RetryExec.execute(RetryExec.java:89) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.RedirectExec.execute(RedirectExec.java:110) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.client.InternalHttpClient.doExecute(InternalHttpClient.java:185) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:83) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:56) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.springframework.http.client.HttpComponentsClientHttpRequest.executeInternal(HttpComponentsClientHttpRequest.java:87) ~[spring-web-5.3.4.jar!/:5.3.4]\n\tat org.springframework.http.client.AbstractBufferingClientHttpRequest.executeInternal(AbstractBufferingClientHttpRequest.java:48) ~[spring-web-5.3.4.jar!/:5.3.4]\n\tat 
org.springframework.http.client.AbstractClientHttpRequest.execute(AbstractClientHttpRequest.java:66) ~[spring-web-5.3.4.jar!/:5.3.4]\n\tat org.springframework.web.client.RestTemplate.doExecute(RestTemplate.java:776) ~[spring-web-5.3.4.jar!/:5.3.4]\n\t... 14 more\nCaused by: java.net.SocketTimeoutException: Connect timed out\n\tat sun.nio.ch.NioSocketImpl.timedFinishConnect(NioSocketImpl.java:546) ~[?:?]\n\tat sun.nio.ch.NioSocketImpl.connect(NioSocketImpl.java:597) ~[?:?]\n\tat java.net.SocksSocketImpl.connect(SocksSocketImpl.java:333) ~[?:?]\n\tat java.net.Socket.connect(Socket.java:645) ~[?:?]\n\tat org.apache.http.conn.socket.PlainConnectionSocketFactory.connectSocket(PlainConnectionSocketFactory.java:75) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.conn.DefaultHttpClientConnectionOperator.connect(DefaultHttpClientConnectionOperator.java:142) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.conn.PoolingHttpClientConnectionManager.connect(PoolingHttpClientConnectionManager.java:376) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.MainClientExec.establishRoute(MainClientExec.java:393) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.MainClientExec.execute(MainClientExec.java:236) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.ProtocolExec.execute(ProtocolExec.java:186) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.RetryExec.execute(RetryExec.java:89) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.execchain.RedirectExec.execute(RedirectExec.java:110) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.client.InternalHttpClient.doExecute(InternalHttpClient.java:185) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:83) ~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:56) 
~[httpclient-4.5.13.jar!/:4.5.13]\n\tat org.springframework.http.client.HttpComponentsClientHttpRequest.executeInternal(HttpComponentsClientHttpRequest.java:87) ~[spring-web-5.3.4.jar!/:5.3.4]\n\tat org.springframework.http.client.AbstractBufferingClientHttpRequest.executeInternal(AbstractBufferingClientHttpRequest.java:48) ~[spring-web-5.3.4.jar!/:5.3.4]\n\tat org.springframework.http.client.AbstractClientHttpRequest.execute(AbstractClientHttpRequest.java:66) ~[spring-web-5.3.4.jar!/:5.3.4]\n\tat org.springframework.web.client.RestTemplate.doExecute(RestTemplate.java:776) ~[spring-web-5.3.4.jar!/:5.3.4]\n\t... 14 more\n"

},

"endOfBatch": false,

"loggerFqcn": "org.apache.logging.slf4j.Log4jLogger",

"threadId": 21,

"threadPriority": 5,

"messageTimestamp": "2021-08-15T06:44:31.244+0000",

"@timestamp": "2021-08-15T06:44:31.245670273+00:00",

"tag": "kubernetes.var.log.containers.mdc3-cnpolicy-occnp-udr-connector-697f7f5b8b-9l2jz_mdc3_user-service-fc7c3e68ba775ddca4e7f5d0603c8ba1bc414703e7d28f6177012893ca342a3b.log"

},

"fields": {

"messageTimestamp": [

"2021-08-15T06:44:31.244Z"

],

"@timestamp": [

"2021-08-15T06:44:31.245Z"

]

},

"sort": [

1629009871245

]

}

The following is a sample log message with level INFO:

{

"_index": "logstash-2021.08.15",

"_type": "_doc",

"_id": "pYKOSHsBgXqNeaK8Blhv",

"_version": 1,

"_score": null,

"_source": {

"stream": "stdout",

"docker": {

"container_id": "d373ee8717f2c21ba1c06d7b78ba1d74b15239e044db24a98d8cbd7e0e0c70b6"

},

"kubernetes": {

"container_name": "perf-info",

"namespace_name": "mdc2",

"pod_name": "mdc2-cnpolicy-performance-b9587f5cc-mxvp4",

"container_image": "titans-1-bastion-1:5000/occnp/oc-perf-info:1.14.0-rc.1",

"container_image_id": "titans-1-bastion-1:5000/occnp/oc-perf-info@sha256:c7b04350374a238aa4b05f1e5de50feeb65a45c09b48260b0639fb0771094975",

"pod_id": "13f40f5f-dcea-4a1f-88bf-396520d360df",

"host": "titans-1-k8s-node-11",

"labels": {

"application": "occnp",

"engVersion": "1.14.0-rc.1",

"microservice": "perf_info",

"mktgVersion": "1.0.0",

"pod-template-hash": "b9587f5cc",

"vendor": "Oracle",

"app_kubernetes_io/instance": "mdc2-cnpolicy",

"app_kubernetes_io/managed-by": "Helm",

"app_kubernetes_io/name": "perf-info",

"app_kubernetes_io/part-of": "occnp",

"app_kubernetes_io/version": "1.0.0",

"helm_sh/chart": "perf-info-1.14.0-rc.1",

"io_kompose_service": "mdc2-cnpolicy-performance"

},

"master_url": "https://10.233.0.1:443/api",

"namespace_id": "df5cee99-9b95-4bce-a3cc-d0453c214283",

"namespace_labels": {

"istio-injection": ""

}

},

"name": "stat_helper",

"message": "Probing prometheus URL http://occne-prometheus-server.occne-infra/prometheus",

"level": "INFO",

"filename": "stat_helper.py",

"lineno": 36,

"module": "stat_helper",

"func": "probe_prometheus_url",

"thread": "MainThread",

"messageTimestamp": "2021-08-15T06:44:22.715+0000",

"@timestamp": "2021-08-15T06:44:22.715709480+00:00",

"tag": "kubernetes.var.log.containers.mdc2-cnpolicy-performance-b9587f5cc-mxvp4_mdc2_perf-info-d373ee8717f2c21ba1c06d7b78ba1d74b15239e044db24a98d8cbd7e0e0c70b6.log"

},

"fields": {

"messageTimestamp": [

"2021-08-15T06:44:22.715Z"

],

"@timestamp": [

"2021-08-15T06:44:22.715Z"

]

},

"sort": [

1629009862715

]

}

3.1.2 Understanding Logs

This section provides information on how to read logs for various services of Policy in Kibana.

The following is a sample log message:

{

"instant": {

"epochSecond": 1627016656,

"nanoOfSecond": 137175036

},

"thread": "Thread-2",

"level": "INFO",

"loggerName": "ocpm.pcf.framework.domain.orchestration.AbstractProcess",

"marker": {

"name": "ALWAYS"

},

"message": "Received RECONFIGURE request",

"endOfBatch": false,

"loggerFqcn": "org.apache.logging.slf4j.Log4jLogger",

"threadId": 34,

"threadPriority": 5,

"messageTimestamp": "2021-07-23T05:04:16.137+0000"

}

The log message format is the same for all Policy services.

Table 3-1 Log Attributes

| Attribute | Description |
|---|---|
| level | Log level of the printed log |
| loggerName | Class or module that printed the log |
| message | Message providing brief details about the logged event |
| loggerFqcn | Log4j2 internal: fully qualified class name of the logger module |
| thread | Thread name |
| threadId | Thread ID generated internally by Log4j2 |
| threadPriority | Thread priority generated internally by Log4j2 |
| messageTimestamp | Timestamp of the log from the application container |
| kubernetes.labels.application | NF application name |
| kubernetes.labels.engVersion | Engineering version of the software |
| kubernetes.labels.mktgVersion | Marketing version of the software |
| kubernetes.labels.microservice | Name of the microservice |
| kubernetes.namespace_name | Namespace of the OCPCF deployment |
| kubernetes.host | Name of the worker node on which the container is running |
| kubernetes.pod_name | Pod name |
| kubernetes.container_name | Container name |
| docker.container_id | ID of the container, assigned internally by the container runtime |
| kubernetes.labels.vendor | Vendor of the product |
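To read records outside Kibana, the attributes in Table 3-1 can be extracted programmatically. The following Python sketch is an illustration, not a Policy utility. It converts the Log4j2 `instant` object (epochSecond plus nanoOfSecond) into a UTC timestamp, and follows the nested `thrown` and `cause` objects seen in the ERROR sample in section 3.1.1 down to the innermost exception. It assumes `record` is one parsed log object; for documents exported from Kibana, pass the `_source` object. The `kubernetes` fields are treated as optional because the sample in this section does not contain them. All function names are hypothetical.

```python
from datetime import datetime, timezone
from typing import Any, Dict, List


def instant_to_iso(instant: Dict[str, int]) -> str:
    """Render the Log4j2 'instant' object as an ISO-8601 UTC string with nanoseconds."""
    seconds = instant["epochSecond"]
    nanos = instant.get("nanoOfSecond", 0)
    base = datetime.fromtimestamp(seconds, tz=timezone.utc)
    # datetime stores only microseconds, so append the full nanosecond fraction as text.
    return base.strftime("%Y-%m-%dT%H:%M:%S") + f".{nanos:09d}Z"


def exception_chain(thrown: Dict[str, Any]) -> List[str]:
    """Follow the nested 'cause' objects of a 'thrown' field, outermost first."""
    chain = []
    node = thrown
    while node:
        chain.append(node.get("name", "?"))
        node = node.get("cause")
    return chain


def summarize(record: Dict[str, Any]) -> Dict[str, Any]:
    """Collect the Table 3-1 attributes that matter most when scanning a record."""
    k8s = record.get("kubernetes", {})  # may be absent in raw container output
    summary: Dict[str, Any] = {
        "level": record.get("level", ""),
        "logger": record.get("loggerName", ""),
        "thread": record.get("thread", ""),
        "message": record.get("message", ""),
        "pod": k8s.get("pod_name", ""),
        "namespace": k8s.get("namespace_name", ""),
    }
    if "instant" in record:
        summary["time"] = instant_to_iso(record["instant"])
    if "thrown" in record:
        chain = exception_chain(record["thrown"])
        summary["exceptions"] = chain
        summary["root_cause"] = chain[-1]  # innermost exception
    return summary
```

Applied to the ERROR sample in section 3.1.1, the chain would run from org.springframework.web.client.ResourceAccessException to java.net.SocketTimeoutException, which points at the connection timeout toward the config-mgmt service as the underlying problem.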

3.2 Subscriber Activity Logging

Subscriber Activity Logging allows you to define a list of subscribers (by identifier) and to trace all the logs related to those subscribers separately while troubleshooting certain issues. Use this functionality to troubleshoot problematic subscribers without enabling logs or traces that can affect all subscribers.

To enable subscriber activity logging, set the value of the Enable Subscriber Activity Logging parameter to true on the Subscriber Activity Logging page of the CNC Console. By default, this functionality is disabled.

For more information on how to enable this feature, see the section "Subscriber Activity Logging" in Oracle Communications Cloud Native Core, Converged Policy User's Guide.

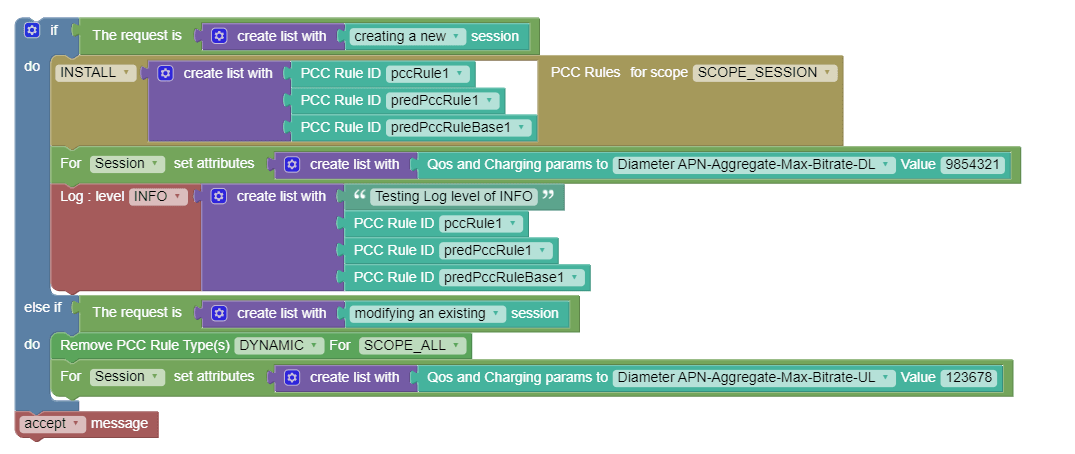

3.3 Log Block

While managing Policy Projects, users can use the Log block to log a message or the value of a policy variable in the logging system.

The logged message can then be viewed in Kibana or another logging GUI. The following is a sample logged message:

{
  "messageTimestamp":"2021-07-08T17:54:51.425Z",
  "marker": {"name":"SUBSCRIBER"},
  "level":"INFO",
  "message":"{
    "type":"POLICY_EXECUTION",
    "reqeustId":"supi;imsi-60000000001",
    "policyStartTime":"2021-07-08T17:54:51.423Z",
    "policyEndTime":"2021-07-08T17:54:51.425Z",
    "body":[
      " Start evaluating policy main",
      "request.request.operationType == 'CREATE' evaluates to be true",
      " get row data from table '[Policy Table name]' for service pcf-sm with conditions column '[Column name]' 'equal to:###eq###' request.request.smPolicyContextData.dnn",
      " INSTALL PCC Rules [utils.getColumnData((typeof row == "undefined")? {rowtableId: "",rowData: null}: row , "[Table name]", "[Column name]")]",
      " Execute mandatory action accept message",
      " End evaluating policy main"
    ]
  }"
}

For more information on how to use this block, see Oracle Communications Cloud Native Core, Converged Policy Design Guide.
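In the sample above, the `message` value is itself a JSON document and appears unescaped. When processing such records programmatically, the embedded document can be decoded and summarized. The following Python sketch assumes that in the raw record `message` is a properly escaped JSON string containing the keys shown in the sample; `parse_policy_execution` is a hypothetical helper, not part of Policy.

```python
import json
from datetime import datetime, timedelta
from typing import Any, Dict


def _parse_utc(ts: str) -> datetime:
    # datetime.fromisoformat() before Python 3.11 does not accept a trailing "Z".
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def parse_policy_execution(record: Dict[str, Any]) -> Dict[str, Any]:
    """Decode the JSON document embedded in the `message` field of a Log block record."""
    inner = json.loads(record["message"])
    start = _parse_utc(inner["policyStartTime"])
    end = _parse_utc(inner["policyEndTime"])
    return {
        "type": inner.get("type"),
        # The sample spells this key "reqeustId"; accept either spelling.
        "request_id": inner.get("requestId", inner.get("reqeustId")),
        "steps": [step.strip() for step in inner.get("body", [])],
        # Dividing two timedeltas yields a float number of milliseconds.
        "duration_ms": (end - start) / timedelta(milliseconds=1),
    }
```

For the sample record, the policy evaluation would take 2 ms (from 17:54:51.423 to 17:54:51.425), and `steps` would list the evaluation trace line by line.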

3.4 Using Debug Tool

Overview

The Debug Tool provides the following third-party troubleshooting tools for debugging runtime issues in a lab environment:

- tcpdump

- ip

- netstat

- curl

- ping

- nmap

- dig

Prerequisites

This section explains the prerequisites for using debug tool.


Configuration in CNE

The following configurations must be performed on the Bastion Host.

- When Policy is installed on CNE version 23.2.0 or above:

Note:

- In CNE 23.2.0 or later, the default Kyverno policy disallow-capabilities does not allow the NET_ADMIN and NET_RAW capabilities that the debug tool requires.

- To run the debug tool on CNE 23.2.0 or later, modify the existing disallow-capabilities Kyverno policy as follows.

Adding a Namespace to an Empty Resource

Run the following command to verify whether the current disallow-capabilities cluster policy has any namespaces in it.

Example:

$ kubectl get clusterpolicies disallow-capabilities -oyaml

Sample output:

apiVersion: kyverno.io/v1
kind: ClusterPolicy
...
spec:
  rules:
  - exclude:
      any:
      - resources: {}
...

If there are no namespaces, patch the policy using the following command to add <namespace> under resources:

$ kubectl patch clusterpolicy disallow-capabilities --type=json \
  -p='[{"op": "add", "path": "/spec/rules/0/exclude/any/0/resources", "value": {"namespaces":["<namespace>"]} }]'

Example:

$ kubectl patch clusterpolicy disallow-capabilities --type=json \
  -p='[{"op": "add", "path": "/spec/rules/0/exclude/any/0/resources", "value": {"namespaces":["occnp"]} }]'

Sample output:

apiVersion: kyverno.io/v1
kind: ClusterPolicy
...
spec:
  rules:
  - exclude:
      any:
      - resources:
          namespaces:
          - occnp
...

To remove the namespace added in the previous step, use the following command:

$ kubectl patch clusterpolicy disallow-capabilities --type=json \
  -p='[{"op": "replace", "path": "/spec/rules/0/exclude/any/0/resources", "value": {} }]'

Sample output:

apiVersion: kyverno.io/v1
kind: ClusterPolicy
...
spec:
  rules:
  - exclude:
      any:
      - resources: {}
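Which "add" patch to use depends on the current state of the policy: an empty resource needs the form above, while an existing namespace list needs the append form described in the next subsection. The following Python sketch chooses the patch automatically. It assumes the policy has the spec.rules[0].exclude.any[0] layout shown in the sample output and that it is given the parsed output of `kubectl get clusterpolicies disallow-capabilities -o json`. `build_add_patch` is a hypothetical helper, not part of Kyverno or kubectl.

```python
import json
from typing import Any, Dict, Optional

# JSON pointer used by the kubectl patch commands in this section.
RESOURCES_PATH = "/spec/rules/0/exclude/any/0/resources"


def build_add_patch(policy: Dict[str, Any], namespace: str) -> Optional[str]:
    """Return the -p argument for `kubectl patch --type=json`, or None if nothing to do."""
    resources = policy["spec"]["rules"][0]["exclude"]["any"][0].get("resources") or {}
    current = resources.get("namespaces", [])
    if namespace in current:
        return None  # already excluded from the policy
    if not resources:
        # Empty resource ({}): create it with a one-element namespace list.
        ops = [{"op": "add", "path": RESOURCES_PATH,
                "value": {"namespaces": [namespace]}}]
    elif "namespaces" not in resources:
        # Resource has other selectors but no namespace list yet; keep those selectors.
        ops = [{"op": "add", "path": RESOURCES_PATH + "/namespaces",
                "value": [namespace]}]
    else:
        # Existing list: the "-" index appends to the end of the array (RFC 6902).
        ops = [{"op": "add", "path": RESOURCES_PATH + "/namespaces/-",
                "value": namespace}]
    return json.dumps(ops)
```

The returned string is passed unchanged as the `-p` value of `kubectl patch clusterpolicy disallow-capabilities --type=json`. Returning None for an already-listed namespace avoids adding a duplicate entry.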

Adding a Namespace to an Existing Namespace List

Run the following command to verify whether the current disallow-capabilities cluster policy has any namespaces in it.

Example:

$ kubectl get clusterpolicies disallow-capabilities -oyaml

Sample output:

apiVersion: kyverno.io/v1
kind: ClusterPolicy
...
spec:
  rules:
  - exclude:
      any:
      - resources:
          namespaces:
          - namespace1
          - namespace2
          - namespace3
...

If namespaces are already listed, patch the policy using the following command to add <namespace> to the existing list:

$ kubectl patch clusterpolicy disallow-capabilities --type=json \
  -p='[{"op": "add", "path": "/spec/rules/0/exclude/any/0/resources/namespaces/-", "value": "<namespace>" }]'

Example:

$ kubectl patch clusterpolicy disallow-capabilities --type=json \
  -p='[{"op": "add", "path": "/spec/rules/0/exclude/any/0/resources/namespaces/-", "value": "occnp" }]'

Sample output:

apiVersion: kyverno.io/v1
kind: ClusterPolicy
...
spec:
  rules:
  - exclude:
      any:
      - resources:
          namespaces:
          - namespace1
          - namespace2
          - namespace3
          - occnp
...

To remove the namespace added in the previous step, use the following command:

$ kubectl patch clusterpolicy disallow-capabilities --type=json \
  -p='[{"op": "remove", "path": "/spec/rules/0/exclude/any/0/resources/namespaces/<index>"}]'

Example:

$ kubectl patch clusterpolicy disallow-capabilities --type=json \
  -p='[{"op": "remove", "path": "/spec/rules/0/exclude/any/0/resources/namespaces/3"}]'

Sample output:

apiVersion: kyverno.io/v1
kind: ClusterPolicy
...
spec:
  rules:
  - exclude:
      any:
      - resources:
          namespaces:
          - namespace1
          - namespace2
          - namespace3

Note:

When removing a namespace, provide the index of the namespace within the array. The index starts from 0.
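Looking up the index by hand is error-prone when the list is long. The following Python sketch derives it from the namespace name. It assumes the same spec.rules[0].exclude.any[0] layout as the sample output and takes the parsed output of `kubectl get clusterpolicies disallow-capabilities -o json`. As a design choice, it prepends an RFC 6902 "test" operation so that the patch is rejected if the entry at that index is no longer the expected namespace (for example, if the list changed in the meantime). `build_remove_patch` is a hypothetical helper.

```python
import json
from typing import Any, Dict

NAMESPACES_PATH = "/spec/rules/0/exclude/any/0/resources/namespaces"


def build_remove_patch(policy: Dict[str, Any], namespace: str) -> str:
    """Return a JSON patch that removes `namespace` from the exclude list by its index."""
    resources = policy["spec"]["rules"][0]["exclude"]["any"][0].get("resources") or {}
    namespaces = resources.get("namespaces", [])
    if namespace not in namespaces:
        raise ValueError(f"{namespace!r} is not in the exclude list {namespaces}")
    path = f"{NAMESPACES_PATH}/{namespaces.index(namespace)}"  # zero-based index
    return json.dumps([
        # Guard: fail instead of removing the wrong entry if the list has shifted.
        {"op": "test", "path": path, "value": namespace},
        {"op": "remove", "path": path},
    ])
```

For the sample list (namespace1, namespace2, namespace3, occnp), removing occnp resolves to index 3, matching the example command above.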

- When Policy is installed on CNE version prior to 23.2.0:

PodSecurityPolicy (PSP) Creation

- Log in to the Bastion Host.

- Create a new PSP by running the following command from the Bastion Host. The parameters readOnlyRootFilesystem, allowPrivilegeEscalation, and allowedCapabilities are required by the debug container.

Note:

Other parameters are mandatory for PSP creation and can be customized according to the CNE environment. Default values are recommended.

$ kubectl apply -f - <<EOF
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: debug-tool-psp
spec:
  readOnlyRootFilesystem: false
  allowPrivilegeEscalation: true
  allowedCapabilities:
  - NET_ADMIN
  - NET_RAW
  fsGroup:
    ranges:
    - max: 65535
      min: 1
    rule: MustRunAs
  runAsUser:
    rule: MustRunAsNonRoot
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  volumes:
  - configMap
  - downwardAPI
  - emptyDir
  - persistentVolumeClaim
  - projected
  - secret
EOF

Role Creation

Run the following command to create a role for the PSP. Replace <namespace> with the namespace in which Policy is deployed.

$ kubectl apply -f - <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: debug-tool-role
  namespace: <namespace>
rules:
- apiGroups:
  - policy
  resources:
  - podsecuritypolicies
  verbs:
  - use
  resourceNames:
  - debug-tool-psp
EOF

RoleBinding Creation

Run the following command to associate the service accounts in the Policy namespace with the role created for the PSP:

$ kubectl apply -f - <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: debug-tool-rolebinding
  namespace: <namespace>
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: debug-tool-role
subjects:
- kind: Group
  apiGroup: rbac.authorization.k8s.io
  name: system:serviceaccounts
EOF

Refer to Debug Tool Configuration Parameters for parameter details.


Configuration in NF-Specific Helm

The following updates must be performed in the custom_values.yaml file:

- Log in to the Policy server.

- Open the custom_values.yaml file:

$ vim <custom_values file>

- Under the global configuration, add the following:

global:
  extraContainers: ENABLED

Note:

- The Debug Tool container starts with the default user ID 7000. To override this default value, use the `runAsUser` field; otherwise, the field can be omitted.

Default value: uid=7000(debugtool) gid=7000(debugtool) groups=7000(debugtool)

  - To customize the container name, replace the `name` field in the above values.yaml with the following:

    ```
    name: {{ printf "%s-tools-%s" (include "getprefix" .) (include "getsuffix" .) | trunc 63 | trimPrefix "-" | trimSuffix "-" }}
    ```

    This ensures that the container name is prefixed and suffixed with the necessary values.

For more information on how to customize parameters in the custom yaml value files, see Oracle Communications Cloud Native Core, Converged Policy Installation, Upgrade and Fault Recovery Guide.

- Under service specific configurations for which debugging is required, add the following:

  ```
  am-service:
    #extraContainers: DISABLED
    envMysqlDatabase: occnp_pcf_am
    resources:
      limits:
        cpu: 1
        memory: 1Gi
      requests:
        cpu: 0.5
        memory: 1Gi
    minReplicas: 1
  ```

  Note:

  - At the global level, the extraContainers flag enables or disables the injection of extra containers globally. All services that use this global value therefore have extra containers enabled or disabled through a single flag.
  - At the service level, the extraContainers flag determines whether the service uses the extra container configuration from the global level, or enables or disables the injection of extra containers for that specific service.
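Instead of editing custom_values.yaml in place, you can keep the same flags in a small overlay file and pass it to Helm after the main values file. Helm merges values files in order, and later files take precedence. The bash sketch below assumes that the debug container template is already defined in custom_values.yaml. The overlay file name, the `write_debug_overlay` function, and the choice of `am-service` as the opted-out service are illustrative only.

```shell
#!/usr/bin/env bash
# Hypothetical helper: writes a Helm values overlay that enables extra
# containers globally and opts am-service out.
# Usage: write_debug_overlay [output file]
write_debug_overlay() {
  local out="${1:-debug-tool-overlay.yaml}"
  # Quoted 'EOF' keeps the YAML literal (no shell expansion).
  cat > "$out" <<'EOF'
global:
  extraContainers: ENABLED
am-service:
  extraContainers: DISABLED
EOF
}
```

The overlay could then be applied with a command such as `helm upgrade <release_name> <helm_chart> -n <namespace> -f <custom_values file> -f debug-tool-overlay.yaml`. Any other flags required by your installation procedure still apply.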

Running Debug Tool

- Run the following command to retrieve the POD details:

  $ kubectl get pods -n <namespace>

  Example:

  $ kubectl get pods -n occnp

- Run the following command to enter the Debug Tool container:

  $ kubectl exec -it <pod name> -c <debug_container name> -n <namespace> -- bash

- Run the debug tools:

  bash-4.2$ <debug_tools>

  Example:

  bash-4.2$ tcpdump

- Copy the output files from the container to the host:

  $ kubectl cp -c <debug_container name> <pod name>:<file location in container> -n <namespace> <destination location>
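You can also run the steps above non-interactively from the host, which is convenient for short captures. The following bash sketch makes several assumptions: the debug container has tcpdump, the pod's interface is eth0, and /tmp is writable in the container. `capture_and_copy` and the `DRY_RUN` switch are hypothetical helpers. With `DRY_RUN=1`, the function only prints the kubectl commands.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: capture packets inside the debug container, then
# copy the pcap to the host. Set DRY_RUN=1 to print commands only.

# Runs its arguments, or only prints them when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: capture_and_copy <pod> <debug container> <namespace> [packet count]
capture_and_copy() {
  local pod="$1" container="$2" ns="$3" count="${4:-100}"
  local remote="/tmp/capture.pcap"   # assumes /tmp is writable
  # -c makes tcpdump exit after <count> packets, so no TTY is needed.
  run kubectl exec "$pod" -c "$container" -n "$ns" -- \
    tcpdump -i eth0 -c "$count" -w "$remote" || return
  # Copy the capture into the current directory on the host.
  run kubectl cp -c "$container" "$pod:$remote" -n "$ns" "./${pod}-capture.pcap"
}
```

For example, `capture_and_copy <pod name> <debug_container name> occnp 200` captures 200 packets and copies them to `./<pod name>-capture.pcap`. Note that `kubectl cp` requires the `tar` binary to be present in the container.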

Tools Tested in Debug Container

The following debug tools have been tested.

Table 3-2 tcpdump

| Options Tested | Description | Output | Capabilities |
|---|---|---|---|
| -D | Print the list of the network interfaces available on the system and on which tcpdump can capture packets. | `tcpdump -D` | NET_ADMIN, NET_RAW |
| -i | Listen on interface. | `tcpdump -i eth0` (see sample output below) | NET_ADMIN, NET_RAW |
| -w | Write the raw packets to file rather than parsing and printing them. | `tcpdump -w capture.pcap -i eth0` | NET_ADMIN, NET_RAW |
| -r | Read packets from file (which was created with the -w option). | `tcpdump -r capture.pcap` (see sample output below) | NET_ADMIN, NET_RAW |

Sample output of `tcpdump -i eth0`:

```
tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes
12:10:37.381199 IP cncc-core-ingress-gateway-7ffc49bb7f-2kkhc.46519 > kubernetes.default.svc.cluster.local.https: Flags [P.], seq 1986927241:1986927276, ack 1334332290, win 626, options [nop,nop,TS val 849591834 ecr 849561833], length 35
12:10:37.381952 IP cncc-core-ingress-gateway-7ffc49bb7f-2kkhc.45868 > kube-dns.kube-system.svc.cluster.local.domain: 62870+ PTR? 1.0.96.10.in-addr.arpa. (40)
```

Sample output of `tcpdump -r capture.pcap`:

```
reading from file /tmp/capture.pcap, link-type EN10MB (Ethernet)
12:13:07.381019 IP cncc-core-ingress-gateway-7ffc49bb7f-2kkhc.46519 > kubernetes.default.svc.cluster.local.https: Flags [P.], seq 1986927416:1986927451, ack 1334332445, win 626, options [nop,nop,TS val 849741834 ecr 849711834], length 35
12:13:07.381194 IP kubernetes.default.svc.cluster.local.https > cncc-core-ingress-gateway-7ffc49bb7f-2kkhc.46519: Flags [P.], seq 1:32, ack 35, win 247, options [nop,nop,TS val 849741834 ecr 849741834], length 31
12:13:07.381207 IP cncc-core-ingress-gateway-7ffc49bb7f-2kkhc.46519 > kubernetes.default.svc.cluster.local.https: Flags [.], ack 32, win 626, options [nop,nop,TS val 849741834 ecr 849741834], length 0
```
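The debug container's ephemeral storage is limited (see resources.limits.ephemeral-storage in Debug Tool Configuration Parameters), so long captures written with -w can fill it. The standard tcpdump options -C and -W can prevent this. -C sets the file size in millions of bytes, and -W sets the file count. Together, they write a rotating set of files, and tcpdump appends a number to each file name. These options are not in the tested list above, so treat this bash sketch as an untested suggestion. `ring_capture` is a hypothetical wrapper.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: rotating capture so disk use stays near
# size_mb * files million bytes. DRY_RUN=1 prints the command only.
# Usage: ring_capture [iface] [file] [size_mb] [files]
ring_capture() {
  local iface="${1:-eth0}" file="${2:-/tmp/ring.pcap}"
  local size_mb="${3:-10}" files="${4:-5}"
  # -C: start a new file after size_mb million bytes.
  # -W: keep at most <files> files, then overwrite from the first one.
  local cmd=(tcpdump -i "$iface" -w "$file" -C "$size_mb" -W "$files")
  if [ -n "${DRY_RUN:-}" ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

With the defaults, disk use stays at roughly 50 million bytes regardless of how long the capture runs.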

Table 3-3 ip

| Options Tested | Description | Output | Capabilities |
|---|---|---|---|
| addr show | Look at protocol addresses. | `ip addr show` (see sample output below) | -- |
| route show | List routes. | `ip route show` (see sample output below) | -- |
| addrlabel list | List address labels. | `ip addrlabel list` (see sample output below) | -- |

Sample output of `ip addr show`:

```
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default
    link/ipip 0.0.0.0 brd 0.0.0.0
4: eth0@if190: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1440 qdisc noqueue state UP group default
    link/ether aa:5a:27:8d:74:6f brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 192.168.219.112/32 scope global eth0
       valid_lft forever preferred_lft forever
```

Sample output of `ip route show`:

```
default via 169.254.1.1 dev eth0
169.254.1.1 dev eth0 scope link
```

Sample output of `ip addrlabel list`:

```
prefix ::1/128 label 0
prefix ::/96 label 3
prefix ::ffff:0.0.0.0/96 label 4
prefix 2001::/32 label 6
prefix 2001:10::/28 label 7
prefix 3ffe::/16 label 12
prefix 2002::/16 label 2
prefix fec0::/10 label 11
prefix fc00::/7 label 5
prefix ::/0 label 1
```
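When you need only the pod's IPv4 address, you can filter the `ip addr show` output instead of reading it by eye. The bash sketch below uses awk and assumes the output layout shown in the sample above. `iface_ipv4` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `ip addr show` output on stdin and prints the
# IPv4 address (with prefix length) of the interface given as $1.
iface_ipv4() {
  local iface="$1"
  awk -v ifc="$iface" '
    # Interface header lines look like "4: eth0@if190: <...>".
    /^[0-9]+: / {
      name = $2
      sub(/:$/, "", name)      # drop trailing colon
      sub(/@.*/, "", name)     # drop "@if190" peer suffix
      current = name
      next
    }
    # Print "inet" (IPv4) addresses belonging to the requested interface.
    current == ifc && $1 == "inet" { print $2 }
  '
}
```

For the sample output above, `ip addr show | iface_ipv4 eth0` prints `192.168.219.112/32`.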

Table 3-4 netstat

| Options Tested | Description | Output | Capabilities |
|---|---|---|---|
| -a | Show both listening and non-listening (for TCP this means established connections) sockets. | `netstat -a` (see sample output below) | -- |
| -l | Show only listening sockets. | `netstat -l` (see sample output below) | -- |
| -s | Display summary statistics for each protocol. | `netstat -s` (see sample output below) | -- |
| -i | Display a table of all network interfaces. | `netstat -i` (see sample output below) | -- |

Sample output of `netstat -a`:

```
Active Internet connections (servers and established)
Proto Recv-Q Send-Q Local Address Foreign Address State
tcp 0 0 0.0.0.0:tproxy 0.0.0.0:* LISTEN
tcp 0 0 0.0.0.0:websm 0.0.0.0:* LISTEN
tcp 0 0 cncc-core-ingress:websm 10-178-254-194.ku:47292 TIME_WAIT
tcp 0 0 cncc-core-ingress:46519 kubernetes.defaul:https ESTABLISHED
tcp 0 0 cncc-core-ingress:websm 10-178-254-194.ku:47240 TIME_WAIT
tcp 0 0 cncc-core-ingress:websm 10-178-254-194.ku:47347 TIME_WAIT
udp 0 0 localhost:59351 localhost:ambit-lm ESTABLISHED
Active UNIX domain sockets (servers and established)
Proto RefCnt Flags Type State I-Node Path
unix 2 [ ] STREAM CONNECTED 576064861
```

Sample output of `netstat -l`:

```
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address Foreign Address State
tcp 0 0 0.0.0.0:tproxy 0.0.0.0:* LISTEN
tcp 0 0 0.0.0.0:websm 0.0.0.0:* LISTEN
Active UNIX domain sockets (only servers)
Proto RefCnt Flags Type State I-Node Path
```

Sample output of `netstat -s`:

```
Ip:
    4070 total packets received
    0 forwarded
    0 incoming packets discarded
    4070 incoming packets delivered
    4315 requests sent out
Icmp:
    0 ICMP messages received
    0 input ICMP message failed.
    ICMP input histogram:
    2 ICMP messages sent
    0 ICMP messages failed
    ICMP output histogram:
        destination unreachable: 2
```

Sample output of `netstat -i`:

```
Kernel Interface table
Iface MTU RX-OK RX-ERR RX-DRP RX-OVR TX-OK TX-ERR TX-DRP TX-OVR Flg
eth0 1440 4131 0 0 0 4355 0 0 0 BMRU
lo 65536 0 0 0 0 0 0 0 0 LRU
```
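For a quick health check, you can summarize the netstat output rather than scanning it line by line. Two useful summaries are a count of TCP sockets per state and a list of interfaces with non-zero error or drop counters. For example, an unusually large number of CLOSE_WAIT sockets can indicate that an application is not closing its connections. The bash sketch assumes the column layout shown in the samples above. Some net-tools versions print an extra Met column in `netstat -i`, which would shift the field numbers. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for summarizing netstat output read on stdin.

# From `netstat -a` (or `netstat -ant`): prints "<state> <count>" for TCP
# sockets, sorted by state name. Header and udp/unix lines are ignored.
tcp_state_counts() {
  awk '$1 == "tcp" || $1 == "tcp6" { count[$6]++ }
       END { for (s in count) print s, count[s] }' | sort
}

# From `netstat -i`: prints interfaces whose RX-ERR, RX-DRP, TX-ERR, or
# TX-DRP counters are non-zero. Skips the two header lines.
iface_errors() {
  awk 'NR > 2 && ($4 + $5 + $8 + $9) > 0 { print $1 }'
}
```

For the `netstat -a` sample above, `netstat -a | tcp_state_counts` prints `ESTABLISHED 1`, `LISTEN 2`, and `TIME_WAIT 3` on separate lines. For the `netstat -i` sample, `iface_errors` prints nothing.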

Table 3-5 jq

| Options Tested | Description | Output | Capabilities |
|---|---|---|---|
| `<jq filter> [file...]` | Use it to slice, filter, map, and transform structured data. | Sample JSON file: | -- |
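A typical troubleshooting use of jq is filtering Kubernetes JSON output. The following bash sketch lists pods whose phase is not Running. It assumes that jq is available wherever the sketch runs (for example, on a host with kubectl access) and that the input is the JSON produced by `kubectl get pods -o json`. `non_running_pods` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `kubectl get pods -o json` on stdin and
# prints "<name> <phase>" for every pod whose phase is not Running.
non_running_pods() {
  jq -r '.items[] | select(.status.phase != "Running")
         | "\(.metadata.name) \(.status.phase)"'
}
```

For example, `kubectl get pods -n occnp -o json | non_running_pods`. Pod phase alone does not reflect container restarts, so a crash-looping pod can still report Running.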

Table 3-6 curl

| Options Tested | Description | Output | Capabilities |
|---|---|---|---|
| -o | Write output to `<file>` instead of stdout. | `curl -o file.txt http://abc.com/file.txt` | -- |
| -x | Use the specified HTTP proxy. | `curl -x proxy.com:8080 -o file.txt http://abc.com/file.txt` | -- |
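When you check whether an HTTP endpoint is reachable from inside the pod, often only the status code matters. The bash sketch below uses the standard curl options -o, -w, and --max-time. `http_status` is a hypothetical helper, and any URL passed to it is a placeholder. A result of 000 means that no HTTP response was received at all, for example because the connection was refused or timed out.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints only the HTTP status code for a URL.
# Usage: http_status <url> [timeout_seconds]
http_status() {
  # -s silences progress output, -o discards the body, -w prints the
  # status code, and --max-time bounds the whole request.
  curl -s -o /dev/null -w '%{http_code}' --max-time "${2:-5}" "$1"
}
```

For example, `http_status http://<service name>:<port>/` prints a code such as 200 or 503. As an alternative to -x, curl also honors the `http_proxy` and `https_proxy` environment variables.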

Table 3-7 ping

| Options Tested | Description | Output | Capabilities |
|---|---|---|---|
| `<ip>` | Run a ping test to see whether the target host is reachable. | `ping 10.178.254.194` | NET_ADMIN, NET_RAW |
| -c | Stop after sending `count` ECHO_REQUEST packets. | `ping -c 5 10.178.254.194` | NET_ADMIN, NET_RAW |
| -f (with non-zero interval) | Flood ping. For every ECHO_REQUEST sent, a period (.) is printed, while for every ECHO_REPLY received, a backspace is printed. | `ping -f -i 2 10.178.254.194` | NET_ADMIN, NET_RAW |
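In scripted checks, the value of interest is usually the packet-loss figure from the ping summary line. The bash sketch assumes the summary format printed by common Linux ping implementations, for example `5 packets transmitted, 5 received, 0% packet loss, time 4005ms`. `packet_loss` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads ping output on stdin and prints the
# packet-loss percentage (without the % sign) from the summary line.
# Prints nothing if no summary line is present.
packet_loss() {
  sed -n 's/.* \([0-9.]*\)% packet loss.*/\1/p'
}
```

For example, `ping -c 5 10.178.254.194 | packet_loss` prints `0` when all five replies arrive.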

Table 3-8 nmap

| Options Tested | Description | Output | Capabilities |
|---|---|---|---|
| `<ip>` | Scan for live hosts, operating systems, packet filters, and open ports running on remote hosts. | `nmap 10.178.254.194` | -- |
| -v | Increase verbosity level. | `nmap -v 10.178.254.194` | -- |
| -iL | Scan all the IP addresses listed in a file. | `nmap -iL sample.txt` | -- |

Table 3-9 dig

| Options Tested | Description | Output | Capabilities |
|---|---|---|---|
| `<ip>` | Performs DNS lookups and displays the answers that are returned from the name server(s) that were queried. | `dig 10.178.254.194` Note: The IP should be reachable from inside the container. | -- |
| -x | Perform a reverse DNS lookup. | `dig -x 10.178.254.194` | -- |

3.4.1 Debug Tool Configuration Parameters

The following parameters are used to configure the debug tool.

CNE Parameters

Table 3-10 CNE Parameters

| Parameter | Description |
|---|---|
| apiVersion | APIVersion defines the versioned schema of this representation of an object. |
| kind | Kind is a string value representing the REST resource this object represents. |
| metadata | Standard object's metadata. |
| metadata.name | Name must be unique within a namespace. |
| spec | spec defines the policy enforced. |
| spec.readOnlyRootFilesystem | Controls whether the containers run with a read-only root filesystem (i.e. no writable layer). |
| spec.allowPrivilegeEscalation | Gates whether or not a user is allowed to set the security context of a container to allowPrivilegeEscalation=true. |
| spec.allowedCapabilities | Provides a list of capabilities that are allowed to be added to a container. |
| spec.fsGroup | Controls the supplemental group applied to some volumes. MustRunAs requires the fsGroup ID to fall within the specified ranges; RunAsAny allows any fsGroup ID to be specified. |
| spec.runAsUser | Controls which user ID the containers are run with. MustRunAsNonRoot requires the containers to run with a non-zero user ID; RunAsAny allows any runAsUser to be specified. |
| spec.seLinux | Controls the SELinux context of the containers. RunAsAny allows any seLinuxOptions to be specified. |
| spec.supplementalGroups | Controls which group IDs containers add. RunAsAny allows any supplementalGroups to be specified. |
| spec.volumes | Provides a list of allowed volume types. The allowable values correspond to the volume sources that are defined when creating a volume. |

Role Creation Parameters

Table 3-11 Role Creation

| Parameter | Description |
|---|---|
| apiVersion | APIVersion defines the versioned schema of this representation of an object. |
| kind | Kind is a string value representing the REST resource this object represents. |
| metadata | Standard object's metadata. |
| metadata.name | Name must be unique within a namespace. |
| metadata.namespace | Namespace defines the space within which each name must be unique. |
| rules | Rules holds all the PolicyRules for this Role. |
| rules.apiGroups | APIGroups is the name of the APIGroup that contains the resources. |
| rules.resources | Resources is a list of resources this rule applies to. |
| rules.verbs | Verbs is a list of Verbs that apply to ALL the ResourceKinds and AttributeRestrictions contained in this rule. |
| rules.resourceNames | ResourceNames is an optional white list of names that the rule applies to. |

Table 3-12 Role Binding Creation

| Parameter | Description |
|---|---|
| apiVersion | APIVersion defines the versioned schema of this representation of an object. |
| kind | Kind is a string value representing the REST resource this object represents. |
| metadata | Standard object's metadata. |
| metadata.name | Name must be unique within a namespace. |
| metadata.namespace | Namespace defines the space within which each name must be unique. |
| roleRef | RoleRef can reference a Role in the current namespace or a ClusterRole in the global namespace. |
| roleRef.apiGroup | APIGroup is the group for the resource being referenced. |
| roleRef.kind | Kind is the type of resource being referenced. |
| roleRef.name | Name is the name of resource being referenced. |
| subjects | Subjects holds references to the objects the role applies to. |
| subjects.kind | Kind of object being referenced. Values defined by this API group are "User", "Group", and "ServiceAccount". |
| subjects.apiGroup | APIGroup holds the API group of the referenced subject. |
| subjects.name | Name of the object being referenced. |

Debug Tool Configuration Parameters

Table 3-13 Debug Tool Configuration Parameters

| Parameter | Description |
|---|---|
| command | String array used for the container command. |
| image | Docker image name. |
| imagePullPolicy | Image pull policy. |
| name | Name of the container. |
| resources | Compute resources required by this container. |
| resources.limits | Limits describes the maximum amount of compute resources allowed. |
| resources.requests | Requests describes the minimum amount of compute resources required. |
| resources.limits.cpu | CPU limits |
| resources.limits.memory | Memory limits |
| resources.limits.ephemeral-storage | Ephemeral storage limits |
| resources.requests.cpu | CPU requests |
| resources.requests.memory | Memory requests |
| resources.requests.ephemeral-storage | Ephemeral storage requests |
| securityContext | Security options the container should run with. |
| securityContext.allowPrivilegeEscalation | AllowPrivilegeEscalation controls whether a process can gain more privileges than its parent process. This directly controls whether the no_new_privs flag is set on the container process. |
| securityContext.readOnlyRootFilesystem | Whether this container has a read-only root filesystem. Default is false. |
| securityContext.capabilities | The capabilities to add or drop when running containers. Defaults to the default set of capabilities granted by the container runtime. |
| securityContext.capabilities.drop | Removed capabilities |
| securityContext.capabilities.add | Added capabilities |
| securityContext.runAsUser | The UID to run the entrypoint of the container process. |
| debugToolContainerMemoryLimit | Indicates the memory assigned for the debug tool container. |
| extraContainersVolumesTpl | Specifies the extra container template for the debug tool volume. |
| extraContainersVolumesTpl.name | Indicates the name of the volume for debug tool logs storage. |
| extraContainersVolumesTpl.emptyDir.medium | Indicates the location where the emptyDir volume is stored. |
| extraContainersVolumesTpl.emptyDir.sizeLimit | Indicates the emptyDir volume size. |
| volumeMounts.mountPath | Indicates the path for the volume mount. |
| volumeMounts.name | Indicates the name of the directory for debug tool logs storage. |
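To show how the parameters in Table 3-13 fit together, the following bash sketch prints an illustrative debug container entry and its volume. It is not the template shipped with Policy. The container name, command, mount path, volume name, and all resource values are hypothetical placeholders. Only the default user ID 7000 and the NET_ADMIN and NET_RAW capabilities come from this guide. Compare the output against the template in custom_values.yaml before using it.

```shell
#!/usr/bin/env bash
# Illustrative only: every name, path, and size below is a hypothetical
# placeholder, not the template shipped with Policy.

# Usage: render_debug_container <image> [uid]
render_debug_container() {
  local image="$1" uid="${2:-7000}"   # 7000 is the documented default UID
  # The sleep command only keeps the container alive for kubectl exec.
  cat <<EOF
- name: debug-tools
  image: ${image}
  imagePullPolicy: IfNotPresent
  command:
  - /bin/sleep
  - infinity
  resources:
    requests:
      cpu: "0.5"
      memory: 1Gi
      ephemeral-storage: 1Gi
    limits:
      cpu: "1"
      memory: 1Gi
      ephemeral-storage: 2Gi
  securityContext:
    allowPrivilegeEscalation: true
    readOnlyRootFilesystem: false
    runAsUser: ${uid}
    capabilities:
      drop:
      - ALL
      add:
      - NET_ADMIN
      - NET_RAW
  volumeMounts:
  - name: debug-tools-dir
    mountPath: /tmp/tools
EOF
}

# Usage: render_debug_volume [medium] [sizeLimit]
# An empty medium uses node storage; "Memory" uses a tmpfs.
render_debug_volume() {
  local medium="${1:-}" size="${2:-1Gi}"
  cat <<EOF
- name: debug-tools-dir
  emptyDir:
    medium: "${medium}"
    sizeLimit: ${size}
EOF
}
```

Setting medium to Memory backs the volume with tmpfs, and files written to it count against the container's memory limit. This is one reason to size the debug tool memory limit with the volume in mind.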