6 NSSF Metrics, KPIs, and Alerts

Note:

The performance and capacity of the NSSF system may vary based on the call model, feature or interface configuration, and underlying CNE and hardware environment.

6.1 NSSF Metrics

This section includes information about dimensions, common attributes, and metrics for NSSF.

Metric Types

The following table describes the NSSF metric types used to measure the health and performance of NSSF and its core functionalities:

Table 6-1 Metric Type

| Metric Type | Description |

|---|---|

| Counter | Represents the total number of occurrences of an event or traffic, such as the total amount of traffic received and transmitted by NSSF. |

| Gauge | Represents a single numerical value that can go up or down arbitrarily. This metric type is used to measure parameters such as load values and memory usage. |

| Histogram | Represents samples of observations (such as request durations or response sizes) and counts them in configurable buckets. It also provides a sum of all observed values. |
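The behavior of the three metric types can be sketched in plain Python. The class names and bucket bounds below are illustrative assumptions and are not part of NSSF or of any client library; the sketch only shows how a counter, a gauge, and a histogram accumulate values.

```python
from bisect import bisect_left


class Counter:
    """Running total that can only increase."""

    def __init__(self):
        self.value = 0.0

    def inc(self, amount=1.0):
        if amount < 0:
            raise ValueError("a counter can only increase")
        self.value += amount


class Gauge:
    """Single value that can go up or down."""

    def __init__(self):
        self.value = 0.0

    def set(self, value):
        self.value = value


class Histogram:
    """Counts observations per bucket and keeps the sum of all observations."""

    def __init__(self, upper_bounds):
        self.upper_bounds = sorted(upper_bounds)
        # One extra slot for observations above the largest bound.
        self.bucket_counts = [0] * (len(self.upper_bounds) + 1)
        self.sum = 0.0
        self.count = 0

    def observe(self, value):
        # Index of the first bound that is >= value.
        index = bisect_left(self.upper_bounds, value)
        self.bucket_counts[index] += 1
        self.sum += value
        self.count += 1


# Hypothetical usage: request durations in seconds.
durations = Histogram([0.01, 0.02, 0.04])
for seconds in (0.005, 0.02, 0.03, 1.0):
    durations.observe(seconds)
```

A counter is suited to totals such as received requests, a gauge to values such as active connections, and a histogram to distributions such as request durations.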

Dimensions

The following table describes different types of metric dimensions:

Table 6-2 Dimensions

| Dimension | Description | Values |

|---|---|---|

| retryCount | The attempt number to send a notification. | Depends on the helm parameter httpMaxRetries (1, 2...) |

| ResponseCode | HTTP response code. | Bad Request, Internal Server Error etc. (HttpStatus.*) |

| CauseCode | Specifies the cause code of an error response. | Cause code of the error response. For example, "SUBSCRIPTION_NOT_FOUND" |

| Message Type | This specifies the type of NS-Selection query message. | INITIAL_REGISTRATION/PDU_SESSION/UE_CONFIG_UPDATE |

| NFType | Specifies the name of the NF type. | For example, if the path is /nxxx-yyy/vz/......., XXX (upper case) is the NFType. UNKNOWN if the NFType cannot be extracted from the path. |

| NFServiceType | Name of the service within the NF. | For example, if the path is /nxxx-yyy/vz/......., nxxx-yyy is the NFServiceType. UNKNOWN if the NFServiceType cannot be extracted from the path. |

| Host | Specifies the IP address or FQDN, and the port, of the Ingress gateway. | NA |

| HttpVersion | Specifies Http protocol version. | HTTP/1.1, HTTP/2.0 |

| Scheme | Specifies the Http protocol scheme. | HTTP, HTTPS, UNKNOWN |

| ClientCertIdentity | Certificate identity of the client. | SAN=127.0.0.1,localhost CN=localhost, N/A if data is not available |

| Route_Path | Path predicate or Header predicate that matches the current request. | NA |

| InstanceIdentifier | Prefix of the pod configured in helm when there are multiple instances in same deployment. | Prefix configured in helm, UNKNOWN |

| ErrorOriginator | Captures the ErrorOriginator. | ServiceProducer, Nrf, IngresGW, None |

| quantile | Captures the latency values with ranges as 10ms, 20ms, 40ms, 80ms, 100ms, 200ms, 500ms, 1000ms and 5000ms. | Integer values |

| releaseVersion | Indicates the current release version of Ingress or Egress gateway. | Picked from helm chart {{ .Chart.Version }} |

| configVersion | Indicates the configuration version that the Ingress or Egress gateway is currently maintaining. | Value received from config server (1, 2...) |

| updated | Indicates whether the configuration is updated or not. | True, False |

| Direction | Indicates the direction of connection established, that is, whether it is incoming or outgoing. | ingress, egressOut |

| AMF Instance Id | NF-Id of AMF | NA |

| Subscription-Id | Subscription ID | NA |

| Operation | NSAvailability Operation | UPDATE/DELETE/SUBSCRIBE/UNSUBSCRIBE |

| Method | HTTP method | POST/PUT/PATCH/DELETE/GET/OPTIONS |

| Status | HTTP response code | NA |

| query_type | Type of DB read query | applypolicy_reg/applypolicy_pdu/evaluate_amfset/evaluate_resolution |

| ConsumerNFInstanceId | NF instance id of the NF service consumer. | NA |

| ConsumerNFType | The NF type of the NF service consumer. | NRF, UDM, AMF, SMF, AUSF, NEF, PCF, SMSF, NSSF, UDR, LMF, GMLC, 5G_EIR, SEPP, UPF, N3IWF, AF, UDSF, BSF, CHF, NWDAF |

| TargetNFType | The NF type of the NF service producer. | NRF, UDM, AMF, SMF, AUSF, NEF, PCF, SMSF, NSSF, UDR, LMF, GMLC, 5G_EIR, SEPP, UPF, N3IWF, AF, UDSF, BSF, CHF, NWDAF |

| TargetNFInstanceId | NF instance ID of the NF service producer | NA |

| scope | NF service name(s) of the NF service producer(s), separated by whitespaces. | NA |

| StatusCode | Status code of NRF access token request. | Bad Request, Internal Server Error etc. (HttpStatus.*) |

| issuer | NF instance ID of NRF | NA |

| subject | NF instance ID of service consumer | NA |

| reason | The reason contains the human readable message for oauth validation failure. | NA |

| ConfigurationType | Determines the type of configuration in place for OAuth Client in Egress Gateway. If nrfClientQueryEnabled Helm parameter in oauthClient Helm configurations at Egress Gateway is false then the ConfigurationType is STATIC, else DYNAMIC. | STATIC, DYNAMIC |

| id | Determines the keyid or instance id that is configured via persistent configuration when oauth is enabled. | NA |

| certificateName | Determines the certificate name inside a specific secret that is configured via persistent configuration when oauth is enabled. | NA |

| secretName | Determines the secret name that is configured via persistent configuration when oauth is enabled | NA |

| Source | Determines if the configuration is done by the operator or fetched from AMF. | OperatorConfig/LearnedConfigAMF |

| ERRORTYPE | Determines the type of error. | DB_ERROR/MISSING_CONFIGURATION/UNKNOWN |

| NegotiatedTLSVersion | This denotes the TLS version used for communication between the server and the client. | TLSv1.2, TLSv1.3. |

| serialNumber | Indicates the type of the certificate. | serialNumber=4661 is used for RSA and serialNumber=4662 is used for ECDSA |

| NrfUri | URI of the Network Repository Function Instance. | For example: nrf-stubserver.ocnssf-site:8080 |

| Subscription_removed | Indicates the status of a subscription upon receiving a 404 response from the AMF after a notification is sent. | "false": The subscription was not deleted. This value applies if the feature is disabled, indicating no deletion attempt was made. "true": The subscription was successfully deleted. This value applies if the feature is enabled and the deletion process completed successfully. "error": The subscription was not deleted due to internal issues, such as a database error, despite the feature being enabled and a deletion attempt being made. |

| VirtualFqdn | FQDN that shall be used by the alternate service for the DNS lookup | Valid FQDN |
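The NFType and NFServiceType rules in the table can be illustrated with a short Python sketch. The regular expression, the function name, and the sample path are assumptions made for illustration; the gateway's actual parsing logic is not described in this document.

```python
import re

# Assumed path shape: /n<nftype>-<service>/v<version>/...
_PATH_RE = re.compile(r"^/(n([a-z0-9]+)-[a-z0-9-]+)/v\d+(?:/|$)")


def extract_dimensions(path):
    """Return NFType and NFServiceType for a request path, or UNKNOWN."""
    match = _PATH_RE.match(path)
    if match is None:
        return {"NFType": "UNKNOWN", "NFServiceType": "UNKNOWN"}
    return {
        "NFType": match.group(2).upper(),   # the part after the leading "n", upper case
        "NFServiceType": match.group(1),    # the complete first path segment
    }


# Hypothetical request path used only as an example.
dimensions = extract_dimensions("/nnssf-nsselection/v2/network-slice-information")
```

Both dimensions fall back to UNKNOWN together here because they are derived from the same path segment.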

Common Attributes

The following table includes information about common attributes for NSSF.

Table 6-3 Common Attributes

| Attribute | Description |

|---|---|

| application | The name of the application that the microservice is a part of. |

| eng_version | The engineering version of the application. |

| microservice | The name of the microservice. |

| namespace | The namespace in which microservice is running. |

| node | The name of the worker node that the microservice is running on. |

6.1.1 NSSF Success Metrics

This section provides details about the NSSF success metrics.

Table 6-4 ocnssf_nsselection_rx_total

| Field | Details |

|---|---|

| Description | Count of request messages received by NSSF for the Nnssf_NSSelection service. |

| Type | Counter |

| Service Operation | NSSelection |

| Dimension |

|

Table 6-5 ocnssf_nsselection_success_tx_total

| Field | Details |

|---|---|

| Description | Count of success response messages sent by NSSF for requests for the Nnssf_NSSelection service. |

| Type | Counter |

| Service Operation | NSSelection |

| Dimension |

|
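As a hedged illustration of how ocnssf_nsselection_rx_total and ocnssf_nsselection_success_tx_total can be combined, the following Python sketch derives a success ratio from two samples of each counter. The function names and sample values are hypothetical, and treating a drop in a counter as a restart is an assumption of this sketch.

```python
def counter_delta(earlier, later):
    """Increase of a counter between two samples.

    A lower later value is treated as a restart, so the later value
    itself is taken as the increase.
    """
    return later - earlier if later >= earlier else later


def success_ratio(rx_before, rx_after, ok_before, ok_after):
    """Fraction of received requests answered with a success response."""
    received = counter_delta(rx_before, rx_after)
    if received == 0:
        return None  # no traffic in the interval
    return counter_delta(ok_before, ok_after) / received


# Hypothetical samples taken at the start and end of an interval.
ratio = success_ratio(rx_before=1000, rx_after=1500, ok_before=990, ok_after=1480)
```

Because requests can still be in flight when a sample is taken, the ratio for a short interval may deviate slightly from the true value.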

Table 6-6 ocnssf_nsselection_policy_match_total

| Field | Details |

|---|---|

| Description | Count of policy matches found during processing of request messages for the Nnssf_NSSelection service. |

| Type | Counter |

| Service Operation | NSSelection |

| Dimension |

|

Table 6-7 ocnssf_nsselection_time_match_total

| Field | Details |

|---|---|

| Description | Count of time profile matches found during processing of request messages for the Nnssf_NSSelection service. |

| Type | Counter |

| Service Operation | NSSelection |

| Dimension |

|

Table 6-8 ocnssf_nsselection_nsi_selected_total

| Field | Details |

|---|---|

| Description | Count of NRF discoveries performed during processing of request messages for the Nnssf_NSSelection service. |

| Type | Counter |

| Service Operation | NSSelection |

| Dimension |

|

Table 6-9 ocnssf_nsavailability_notification_trigger_tx

| Field | Details |

|---|---|

| Description | Count of notification triggers sent to NsSubscription. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension | Method |

Table 6-10 ocnssf_nsavailability_notification_trigger_response_rx

| Field | Details |

|---|---|

| Description | Count of success response for notification trigger by NSSubscription. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension | Method |

Table 6-11 ocnssf_nsselection_nrf_disc

| Field | Details |

|---|---|

| Description | Count of NRF discoveries performed during processing of request messages for the Nnssf_NSSelection service. |

| Type | Counter |

| Service Operation | NSSelection |

| Dimension | None |

Table 6-12 ocnssf_nsselection_nrf_disc_success

| Field | Details |

|---|---|

| Description | Count of successful discovery results received from NRF during processing of request messages for the Nnssf_NSSelection service. |

| Type | Counter |

| Service Operation | NSSelection |

| Dimension | None |

Table 6-13 ocnssf_nssaiavailability_rx_total

| Field | Details |

|---|---|

| Description | Count of request messages received by NSSF for the Nnssf_NSSAIAvailability service. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension |

|

Table 6-14 ocnssf_nssaiavailability_success_tx_total

| Field | Details |

|---|---|

| Description | Count of success response messages sent by NSSF for requests for the Nnssf_NSSAIAvailability service. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension |

|

Table 6-15 ocnssf_nssaiavailability_options_rx

| Field | Details |

|---|---|

| Description | Count of HTTP options received at NSAvailability service. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension |

|

Table 6-16 ocnssf_nssaiavailability_options_tx_status_ok

| Field | Details |

|---|---|

| Description | Count of HTTP options response with status 200 OK. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension |

|

Table 6-17 ocnssf_nssaiavailability_notification_indirect_communication_rx_total

| Field | Details |

|---|---|

| Description | Count of request notification messages sent by NSSF using indirect communication. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension |

|

Table 6-18 ocnssf_nssaiavailability_notification_indirect_communication_tx_total

| Field | Details |

|---|---|

| Description | Count of notification response messages received by NSSF using indirect communication. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension |

|

Table 6-19 ocnssf_nssaiavailability_indirect_communication_rx_total

| Field | Details |

|---|---|

| Description | Count of subscription request messages received by NSSF using indirect communication. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension |

|

Table 6-20 ocnssf_nssaiavailability_indirect_communication_tx_total

| Field | Details |

|---|---|

| Description | Count of subscription response messages sent by NSSF using indirect communication. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension |

|

Table 6-21 ocnssf_nsselection_requests_duration_seconds_sum

| Field | Details |

|---|---|

| Description | Time duration in seconds taken by NSSF to process requests to NSSelection. |

| Type | Counter |

| Service Operation | NSSelection |

| Dimension | None |

Table 6-22 ocnssf_nsselection_requests_duration_seconds_count

| Field | Details |

|---|---|

| Description | Number of requests processed by NSSelection. |

| Type | Counter |

| Service Operation | NSSelection |

| Dimension | None |

Table 6-23 ocnssf_nsselection_requests_duration_seconds_max

| Field | Details |

|---|---|

| Description | Maximum time duration in seconds taken by NSSF to process requests to NSSelection. |

| Type | Counter |

| Service Operation | NSSelection |

| Dimension | None |
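The _sum and _count metrics are typically read together. The following Python sketch, with a hypothetical function name and sample values, shows how a mean processing time over an interval can be derived from two samples of each.

```python
def mean_duration_seconds(sum_before, sum_after, count_before, count_after):
    """Mean request duration over the interval between two samples."""
    requests = count_after - count_before
    if requests <= 0:
        return None  # no requests, or the metric was reset in between
    return (sum_after - sum_before) / requests


# Hypothetical samples: 100 requests took 3 seconds in total.
mean = mean_duration_seconds(sum_before=12.0, sum_after=15.0,
                             count_before=400, count_after=500)
```

The _max metric complements this mean by exposing the worst case.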

Table 6-24 ocnssf_db_query_duration_seconds_sum

| Field | Details |

|---|---|

| Description | Time duration in seconds to process dbQuery. |

| Type | Counter |

| Service Operation | NA |

| Dimension | query_type |

Table 6-25 ocnssf_db_query_duration_seconds_count

| Field | Details |

|---|---|

| Description | Number of dbQuery operations processed. |

| Type | Counter |

| Service Operation | NA |

| Dimension | query_type |

Table 6-26 ocnssf_db_query_duration_seconds_max

| Field | Details |

|---|---|

| Description | Maximum time duration in seconds taken to process dbQuery. |

| Type | Counter |

| Service Operation | NA |

| Dimension | query_type |
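Because the DB query metrics carry the query_type dimension, they can be broken down per query type. The sketch below assumes the per-interval increases of the _sum and _count metrics have already been collected into dictionaries keyed by query_type; the function name and the numbers are illustrative only.

```python
def mean_by_query_type(sum_deltas, count_deltas):
    """Mean query duration in seconds for each query_type with traffic."""
    means = {}
    for query_type, count in count_deltas.items():
        if count > 0:
            means[query_type] = sum_deltas.get(query_type, 0.0) / count
    return means


# Hypothetical increases observed over one interval.
means = mean_by_query_type(
    sum_deltas={"applypolicy_reg": 2.0, "evaluate_amfset": 0.5},
    count_deltas={"applypolicy_reg": 100, "evaluate_amfset": 10, "applypolicy_pdu": 0},
)
slowest = max(means, key=means.get)
```

Query types without traffic in the interval are omitted so that they do not appear as zero-latency entries.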

Table 6-27 ocnssf_nssaiavailability_submod_rx_total

| Field | Details |

|---|---|

| Description | Count of HTTP patch for subscription (SUBMOD) request messages received by NSSF for ocnssf_NSSAIAvailability service. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension |

|

Table 6-28 ocnssf_nssaiavailability_submod_success_response_tx_total

| Field | Details |

|---|---|

| Description | Count of success response messages sent by NSSF for HTTP patch for subscription (SUBMOD) requests for ocnssf_NSSAIAvailability service. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension |

|

Table 6-29 ocnssf_nssaiavailability_notification_success_response_rx_total

| Field | Details |

|---|---|

| Description | Count of success notification response messages received by NSSF for requests for the Nnssf_NSSAIAvailability service. |

| Type | Counter |

| Service Operation | NSSubscription |

| Dimension |

|

Table 6-30 ocnssf_nssaiavailability_notification_tx_total

| Field | Details |

|---|---|

| Description | Count of notification messages sent by NSSF as part of Nnssf_NSSAIAvailability service. |

| Type | Counter |

| Service Operation | NSSubscription |

| Dimension |

|

Table 6-31 ocnssf_notification_trigger_rx_total

| Field | Details |

|---|---|

| Description | Count of notification triggers received by NSSF. |

| Type | Counter |

| Service Operation | NSSubscription |

| Dimension |

|

Table 6-32 ocnssf_nsconfig_notification_trigger_tx_total

| Field | Details |

|---|---|

| Description | Count of notification triggers sent to NsSubscription. |

| Type | Counter |

| Service Operation | NSConfig |

| Dimension |

|

Table 6-33 ocnssf_nsconfig_notification_trigger_response_rx_total

| Field | Details |

|---|---|

| Description | Count of success response for notification trigger by NsSubscription. |

| Type | Counter |

| Service Operation | NSConfig |

| Dimension | Method |

Table 6-34 ocnssf_nsconfig_nrf_disc_success_total

| Field | Details |

|---|---|

| Description | Count of successful discovery results received from NRF during processing of configuration of amf_set in Nnssf_NSConfig service. |

| Type | Counter |

| Service Operation | NSConfig |

| Dimension | None |

Table 6-35 ocnssf_subscription_nrf_tx_total

| Field | Details |

|---|---|

| Description | Count of successful subscription results received from NRF during processing of configuration of amf_set in Nnssf_NSConfig service. |

| Type | Counter |

| Service Operation | NSConfig |

| Dimension | None |

6.1.2 NSSF Error Metrics

This section provides details about the NSSF error metrics.

Table 6-36 ocnssf_configuration_database_read_error

| Field | Details |

|---|---|

| Description | Count of errors encountered when trying to read the configuration database. |

| Type | Counter |

| Service Operation | NSSelection |

| Dimension | None |

Table 6-37 ocnssf_configuration_database_write_error

| Field | Details |

|---|---|

| Description | Count of errors encountered when trying to write to the configuration database. |

| Type | Counter |

| Service Operation | NSConfig |

| Dimension | None |

Table 6-38 ocnssf_nsconfig_notification_trigger_failure_response_rx_total

| Field | Details |

|---|---|

| Description | Count of failure response for notification trigger by NSSubscription. |

| Type | Counter |

| Service Operation | NSConfig |

| Dimension |

|

Table 6-39 ocnssf_nsconfig_notification_trigger_retry_tx_total

| Field | Details |

|---|---|

| Description | Count of retry notification triggers sent to NSSubscription. |

| Type | Counter |

| Service Operation | NSConfig |

| Dimension |

|

Table 6-40 ocnssf_nsconfig_notification_trigger_failed_tx_total

| Field | Details |

|---|---|

| Description | Count of failed notification triggers (all retries failed) to NSSubscription. |

| Type | Counter |

| Service Operation | NSConfig |

| Dimension |

|

Table 6-41 ocnssf_nsconfig_nrf_disc_error_total

| Field | Details |

|---|---|

| Description | Count of failed discovery results received from NRF during processing of configuration of amf_set in Nnssf_NSConfig service. |

| Type | Counter |

| Service Operation | NSConfig |

| Dimension | None |

Table 6-42 ocnssf_discovery_nrf_tx_failed_total

| Field | Details |

|---|---|

| Description | Count of failed discovery requests sent by NSSF to NRF during configuration of amf_set in Nnssf_NSConfig service. |

| Type | Counter |

| Service Operation | NSConfig |

| Dimension | None |

Table 6-43 ocnssf_subscription_nrf_tx_failed_total

| Field | Details |

|---|---|

| Description | Count of failed subscription results received from NRF during processing of configuration of amf_set in Nnssf_NSConfig service. |

| Type | Counter |

| Service Operation | NSConfig |

| Dimension | None |

Table 6-44 ocnssf_state_data_read_error

| Field | Details |

|---|---|

| Description | Count of errors encountered when trying to read the state database. |

| Type | Counter |

| Service Operation | NSSelection |

| Dimension | None |

Table 6-45 ocnssf_state_data_write_error

| Field | Details |

|---|---|

| Description | Count of errors encountered when trying to write to the state database. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension | None |

Table 6-46 ocnssf_nsselection_nrf_disc_failure_total

| Field | Details |

|---|---|

| Description | Count of errors encountered when trying to reach the NRF's discovery service. |

| Type | Counter |

| Service Operation | NSSelection |

| Dimension | None |

Table 6-47 ocnssf_nsselection_policy_not_found_total

| Field | Details |

|---|---|

| Description | Count of request messages that did not find a configured policy. |

| Type | Counter |

| Service Operation | NSSelection |

| Dimension |

|

Table 6-48 ocnssf_nsselection_unsupported_plmn_total

| Field | Details |

|---|---|

| Description | Count of request messages that did not find mcc and mnc in the PLMN list. |

| Type | Counter |

| Service Operation | NSSelection |

| Dimension | None |

Table 6-49 ocnssf_nssaiavailability_subscription_failure_total

| Field | Details |

|---|---|

| Description | Count of subscribe requests rejected by NSSF. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension | None |

Table 6-50 ocnssf_nssaiavailability_notification_error_response_rx_total

| Field | Details |

|---|---|

| Description | Count of failure notification response messages received by NSSF for requests by the Nnssf_NSSAIAvailability service. |

| Type | Counter |

| Service Operation | NSSubscription |

| Dimension |

|

Table 6-51 ocnssf_nssaiavailability_notification_failure

| Field | Details |

|---|---|

| Description | Count of failure notification response messages received by NSSF for requests by the Nnssf_NSSAIAvailability service. |

| Type | Counter |

| Service Operation | NSSubscription |

| Dimension |

|

Table 6-52 ocnssf_nssaiavailability_options_tx_status_unsupportedmediatype

| Field | Details |

|---|---|

| Description | Count of HTTP OPTIONS responses with status 415 Unsupported Media Type. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension |

|

Table 6-53 ocnssf_nsavailability_unsupported_plmn_total

| Field | Details |

|---|---|

| Description | Count of request messages with unsupported PLMN received by NSSF for the ocnssf_NSAvailability service. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension |

|

Table 6-54 ocnssf_nsavailability_invalid_location_url_total

| Field | Details |

|---|---|

| Description | Count of invalid location header. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension |

|

Table 6-55 ocnssf_nssaiavailability_submod_error_response_tx_total

| Field | Details |

|---|---|

| Description | Count of error response messages sent by NSSF for HTTP patch for subscription (SUBMOD) requests for ocnssf_NSSAIAvailability service. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension |

|

Table 6-56 ocnssf_nssaiavailability_submod_unimplemented_op_total

| Field | Details |

|---|---|

| Description | Count of HTTP patch request messages received by NSSF for ocnssf_NSSAIAvailability service for which PATCH operation (op) is not implemented. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension |

|

Table 6-57 ocnssf_nssaiavailability_submod_patch_apply_error_total

| Field | Details |

|---|---|

| Description | Count of HTTP PATCH request messages received by OCNSSF for ocnssf_NSSAIAvailability service for which applying the PATCH returned an error. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension |

|

Table 6-58 ocnssf_nsavailability_notification_trigger_failure_response_rx

| Field | Details |

|---|---|

| Description | Count of failure response for notification trigger by NSSubscription. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension | Method |

Table 6-59 ocnssf_nsavailability_notification_trigger_retry_tx

| Field | Details |

|---|---|

| Description | Count of retry notification triggers sent to NSSubscription. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension | Method |

Table 6-60 ocnssf_nsavailability_notification_trigger_failed_tx

| Field | Details |

|---|---|

| Description | Count of failed notification triggers (all retries failed) to NSSubscription. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension | Method |
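The relationship between the notification trigger metrics can be sketched as a retry loop. This is only an interpretation of the descriptions above: the function, the short counter keys, and the exact points at which each counter is pegged are assumptions, not the NSSF implementation.

```python
from collections import Counter


def send_with_retries(send, max_retries, metrics):
    """Send a trigger, retrying up to max_retries times, and peg counters.

    send is a callable that returns True on a success response.
    """
    metrics["trigger_tx"] += 1
    if send():
        metrics["trigger_response_rx"] += 1
        return True
    metrics["trigger_failure_response_rx"] += 1

    for _ in range(max_retries):
        metrics["trigger_retry_tx"] += 1
        if send():
            metrics["trigger_response_rx"] += 1
            return True
        metrics["trigger_failure_response_rx"] += 1

    # Every attempt failed, so the trigger as a whole is counted as failed.
    metrics["trigger_failed_tx"] += 1
    return False


# Hypothetical run: two failures followed by a success.
responses = iter([False, False, True])
metrics = Counter()
delivered = send_with_retries(lambda: next(responses), max_retries=2, metrics=metrics)
```

Under these assumptions, a rising retry count with a flat failed count indicates transient failures that retries recover from.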

Table 6-61 ocnssf_nssaiavailability_notification_delete_on_subscription_not_found_total

| Field | Details |

|---|---|

| Description | Pegged when a 404 response with SUBSCRIPTION_NOT_FOUND is received from the AMF. |

| Type | Counter |

| Service Operation | NsSubscription |

| Dimension | Subscription_Removed |

Table 6-62 ocnssf_nssaiavailability_notification_db_error

| Field | Details |

|---|---|

| Description | Triggered when DB error or exception occurs when trying to delete NssaiSubscription. |

| Type | Counter |

| Service Operation | NsSubscription |

| Dimension | None |

Table 6-63 ocnssf_nssaiavailability_indirect_communication_subscription_failure_total

| Field | Details |

|---|---|

| Description | Count of failures when subscription messages are sent by NSSF using indirect communication. |

| Type | Counter |

| Service Operation | NSAvailability |

| Dimension |

|

Table 6-64 ocnssf_nssaiavailability_indirect_communication_notification_failure_total

| Field | Details |

|---|---|

| Description | Count of failures when notification messages are sent by NSSF using indirect communication. |

| Type | Counter |

| Service Operation | NSSubscription |

| Dimension |

|

6.1.3 NSSF Common Metrics

This section provides details about the NSSF common metrics.

Table 6-65 security_cert_x509_expiration_seconds

| Field | Details |

|---|---|

| Description | Indicates the time to certificate expiry in epoch seconds. |

| Type | Histogram |

| Dimension | serialNumber |

Table 6-66 http_requests_total

| Field | Details |

|---|---|

| Description | This is pegged as soon as the request reaches the Ingress or Egress gateway in the first custom filter of the application. |

| Type | Counter |

| Dimension |

|

Table 6-67 http_responses_total

| Field | Details |

|---|---|

| Description | Responses received or sent from the microservice. |

| Type | Counter |

| Dimension |

|

Table 6-68 http_request_bytes

| Field | Details |

|---|---|

| Description | Size of requests, including header and body. Grouped in 100 byte buckets. |

| Type | Histogram |

| Dimension |

|

Table 6-69 http_response_bytes

| Field | Details |

|---|---|

| Description | Size of responses, including header and body. Grouped in 100 byte buckets. |

| Type | Histogram |

| Dimension |

|

Table 6-70 bandwidth_bytes

| Field | Details |

|---|---|

| Description | Amount of ingress and egress traffic sent and received by the microservice. |

| Type | Counter |

| Dimension | direction |

Table 6-71 request_latency_seconds

| Field | Details |

|---|---|

| Description | This metric is pegged in the last custom filter of the Ingress or Egress gateway while the response is being sent back to the consumer NF. It tracks the amount of time taken for processing the request. It starts as soon as the request reaches the first custom filter of the application and lasts until the response is sent back to the consumer NF from the last custom filter of the application. |

| Type | Histogram |

| Dimension |

|

Table 6-72 connection_failure_total

| Field | Details |

|---|---|

| Description | This metric is pegged by the Jetty client when the destination is not reachable by the Ingress or Egress gateway. For the Ingress gateway, the destination is a back-end microservice of the NF. TLS connection failures when connecting to the Ingress gateway are also pegged, with the direction set to ingress. For the Egress gateway, the destination is the producer NF. |

| Type | Counter |

| Dimension |

|

Table 6-73 request_processing_latency_seconds

| Field | Details |

|---|---|

| Description | This metric is pegged in the last custom filter of the Ingress or Egress gateway while the response is being sent back to the consumer NF. This metric captures the amount of time taken for processing the request only within the Ingress or Egress gateway. It starts as soon as the request reaches the first custom filter of the application and lasts until the request is forwarded to the destination. |

| Type | Timer |

| Dimension |

|

Table 6-74 jetty_request_stat_metrics_total

| Field | Details |

|---|---|

| Description | This metric is pegged for every event that occurs when a request is sent to the Ingress or Egress gateway. |

| Type | Counter |

| Dimension |

|

Table 6-75 jetty_response_stat_metrics_total

| Field | Details |

|---|---|

| Description | This metric is pegged for every event that occurs when a response is received by the Ingress or Egress gateway. |

| Type | Counter |

| Dimension |

|

Table 6-76 server_latency_seconds

| Field | Details |

|---|---|

| Description | This metric is pegged in the Jetty response listener and captures the amount of time taken by the Jetty client to process the request. |

| Type | Timer |

| Dimension |

|

Table 6-77 roundtrip_latency_seconds

| Field | Details |

|---|---|

| Description | This metric is pegged in Netty outbound handler that captures the amount of time taken for processing of the request by netty server. |

| Type | Timer |

| Dimension |

|

Table 6-78 oc_configclient_request_total

| Field | Details |

|---|---|

| Description | This metric is pegged whenever config client is polling for configuration update from common configuration server. |

| Type | Counter |

| Dimension |

|

Table 6-79 oc_configclient_response_total

| Field | Details |

|---|---|

| Description | This metric is pegged whenever the config client receives a response from the common configuration server. |

| Type | Counter |

| Dimension |

|

Table 6-80 incoming_connections

| Field | Details |

|---|---|

| Description | This metric pegs active incoming connections from client to Ingress or Egress gateway. |

| Type | Gauge |

| Dimension |

|

Table 6-81 outgoing_connections

| Field | Details |

|---|---|

| Description | This metric pegs active outgoing connections from the Ingress or Egress gateway to the destination. |

| Type | Gauge |

| Dimension |

|

Table 6-82 sbitimer_timezone_mismatch

| Field | Details |

|---|---|

| Description | This metric is pegged in the Ingress and Egress gateways when sbiTimerTimezone is set to ANY and the time zone is not specified in the header. |

| Type | Gauge |

| Dimension |

|

Table 6-83 nrfclient_nrf_operative_status

| Field | Details |

|---|---|

| Description | The current operative status of the NRF instance. Note: The HealthCheck mechanism is an important component that allows monitoring and managing the health of NRF services. When enabled, it makes periodic HTTP requests to NRF services to check their availability and updates their status accordingly. When disabled, for each NRF route, it is checked whether the retry time has expired. If so, the health state is reset. |

| Type | Gauge |

| Dimension | NrfUri - URI of the NRF Instance |

Table 6-84 nrfclient_dns_lookup_request_total

| Field | Details |

|---|---|

| Description | Total number of times a DNS lookup request is sent to the alternate route service. |

| Type | Counter |

| Dimension |

|

6.1.4 NSSF OAuth Metrics

This section provides details about the NSSF OAuth metrics.

Table 6-85 oc_oauth_nrf_request_total

| Field | Details |

|---|---|

| Description | This is pegged in the OAuth client implementation if the request is sent to NRF for requesting the OAuth token. OAuth client implementation is used in Egress gateway. |

| Type | Counter |

| Dimension |

|

Table 6-86 oc_oauth_nrf_response_success_total

| Field | Details |

|---|---|

| Description | This is pegged in the OAuth client implementation if an OAuth token is successfully received from the NRF. OAuth client implementation is used in Egress gateway. |

| Type | Counter |

| Dimension |

|

Table 6-87 oc_oauth_nrf_response_failure_total

| Field | Details |

|---|---|

| Description | This is pegged in the OAuthClientFilter in Egress gateway whenever GetAccessTokenFailedException is captured. |

| Type | Counter |

| Dimension |

|

Table 6-88 oc_oauth_nrf_response_failure_total

| Field | Details |

|---|---|

| Description | This is pegged in the OAuthClientFilter in Egress gateway whenever GetAccessTokenFailedException is captured. |

| Type | Counter |

| Dimension |

|

Table 6-89 oc_oauth_request_failed_internal_total

| Field | Details |

|---|---|

| Description | This is pegged in the OAuthClientFilter in Egress gateway whenever InternalServerErrorException is captured. |

| Type | Counter |

| Dimension |

|

Table 6-90 oc_oauth_token_cache_total

| Field | Details |

|---|---|

| Description | This is pegged in the OAuth Client Implementation if the OAuth token is found in the cache. |

| Type | Counter |

| Dimension |

|

Table 6-91 oc_oauth_request_invalid_total

| Field | Details |

|---|---|

| Description | This is pegged in the OAuthClientFilter in Egress gateway whenever a BadAccessTokenRequestException/JsonProcessingException is captured. |

| Type | Counter |

| Dimension |

|

Table 6-92 oc_oauth_validation_successful_total

| Field | Details |

|---|---|

| Description | This is pegged in OAuth validator implementation if the received OAuth token is validated successfully. OAuth validator implementation is used in Ingress gateway. |

| Type | Counter |

| Dimension | |

Table 6-93 oc_oauth_validation_failure_total

| Field | Details |

|---|---|

| Description | This is pegged in OAuth validator implementation if the validation of the received OAuth token fails. OAuth validator implementation is used in Ingress gateway. |

| Type | Counter |

| Dimension | |

Table 6-94 oc_oauth_cert_expiryStatus

| Field | Details |

|---|---|

| Description | Metric used to peg expiry date of the certificate. This metric is further used for raising alarms if certificate expires within 30 days or 7 days. |

| Type | Gauge |

| Dimension | |
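
As an illustration of the 30-day and 7-day thresholds mentioned above, the following Python sketch classifies a certificate by its remaining validity. It assumes the expiry date is available as a timezone-aware timestamp. The function name and the returned labels are hypothetical and are not part of NSSF.

```python
from datetime import datetime, timedelta, timezone


def expiry_window(expiry: datetime, now: datetime) -> str:
    """Classify a certificate by how soon it expires (illustrative only)."""
    remaining = expiry - now
    if remaining <= timedelta(days=7):
        return "within_7_days"
    if remaining <= timedelta(days=30):
        return "within_30_days"
    return "ok"


# Example: a certificate that expires 20 days from the reference time.
reference = datetime(2025, 1, 1, tzinfo=timezone.utc)
print(expiry_window(reference + timedelta(days=20), reference))
```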

Table 6-95 oc_oauth_cert_loadStatus

| Field | Details |

|---|---|

| Description | Metric used to peg whether given certificate can be loaded from secret or not. If it is loadable then "0" is pegged otherwise "1" is pegged. This metric is further used for raising alarms when certificate is not loadable. |

| Type | Gauge |

| Dimension | |

Table 6-96 oc_oauth_request_failed_cert_expiry

| Field | Details |

|---|---|

| Description | Metric used to keep track of number of requests with keyId in token that failed due to certificate expiry. Pegged whenever oAuth Validator module throws oauth custom exception due to certificate expiry for an incoming request. |

| Type | Metric |

| Dimension | |

Table 6-97 oc_oauth_keyid_count

| Field | Details |

|---|---|

| Description | Metric used to keep track of number of requests received with keyId in token. Pegged whenever a request with an access token containing kid in header comes to oAuth Validator. This is independent of whether the validation failed or was successful. |

| Type | Metric |

| Dimension | |
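
The condition described for oc_oauth_keyid_count (an access token that carries kid in its header) can be sketched as follows. This is a minimal illustration based on the standard JWT compact format, in which the header is the first dot-separated part, encoded as unpadded base64url JSON. It is not the OAuth Validator code, and the helper names are hypothetical.

```python
import base64
import json


def token_has_kid(token: str) -> bool:
    """Return True if the JWT header (first dot-separated part) contains "kid"."""
    header_b64 = token.split(".")[0]
    # JWTs use unpadded base64url; restore the padding before decoding.
    padded = header_b64 + "=" * (-len(header_b64) % 4)
    header = json.loads(base64.urlsafe_b64decode(padded))
    return "kid" in header


def make_token(header: dict) -> str:
    """Build an unsigned dummy token for demonstration purposes only."""
    encoded = base64.urlsafe_b64encode(json.dumps(header).encode()).rstrip(b"=")
    return encoded.decode() + ".e30.signature"


print(token_has_kid(make_token({"alg": "RS256", "kid": "key-1"})))
```

The check looks only at the header and does not validate the token, which matches the statement that the metric is independent of the validation result.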

6.1.5 Managed Objects Metrics

This section provides details about the NSSF Managed Object (MO) metrics.

Table 6-98 ocnssf_nssaiauth_req_rx

| Field | Details |

|---|---|

| Description | Count of nssaiauth requests received by NSConfig. Trigger Condition: Operator configuration of the Managed Object. This is pegged when an HTTP GET, POST, DELETE, or PUT request is received by NSSF. |

| Type | Counter |

| Service Operation | nssaiauth |

| Dimension | Method |

Table 6-99 ocnssf_nssaiauth_res_tx

| Field | Details |

|---|---|

| Description | Count of successful responses sent by NSConfig for an nssaiauth request. Trigger Condition: Operator configuration of the Managed Object. This is pegged when a 2xx response for an HTTP GET, POST, DELETE, or PUT request is sent by NSSF. |

| Type | Counter |

| Service Operation | nssaiauth |

| Dimension | Method |

Table 6-100 ocnssf_nssaiauth_error_res_tx

| Field | Details |

|---|---|

| Description | Count of error responses sent by NSConfig for an nssaiauth request. Trigger Condition: Operator configuration of the Managed Object. This is pegged when a non-2xx response for an HTTP GET, POST, DELETE, or PUT request is sent by NSSF. |

| Type | Counter |

| Service Operation | nssaiauth |

| Dimension | Method, Status |
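
The three nssaiauth counters above (requests received, successful responses, error responses) can be combined into a simple health summary. The following Python sketch assumes the counter values have already been read from the monitoring system for the same time window. The function and key names are hypothetical.

```python
def mo_response_summary(req_rx: int, res_tx: int, error_res_tx: int) -> dict:
    """Summarize Managed Object request handling from three counter values."""
    answered = res_tx + error_res_tx
    # Avoid dividing by zero when no response has been sent yet.
    success_pct = 100.0 * res_tx / answered if answered else None
    return {"success_pct": success_pct, "unanswered": req_rx - answered}


# Example with illustrative values: 200 requests, 150 successes, 50 errors.
print(mo_response_summary(req_rx=200, res_tx=150, error_res_tx=50))
```

The same calculation applies to the other Managed Objects in this section, because they expose counters with the same suffixes.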

Table 6-101 ocnssf_nssaiauth_created

| Field | Details |

|---|---|

| Description | Count of nssaiauth created in the database. Trigger Condition: Operator configuration of the Managed Object leading to storage of the Managed Object in the database, and autoconfiguration by learning from the AMF. This is pegged with source OperatorConfig when operator configuration is the source, and with LearnedConfigAMF when NsAvailabilityUpdate leads to storage of nssaiauth. |

| Type | Counter |

| Service Operation | nssaiauth |

| Dimension | Source |

Table 6-102 ocnssf_nssaiauth_deleted

| Field | Details |

|---|---|

| Description | Count of nssaiauth deleted in the database. Trigger Condition: Operator configuration of the Managed Object leading to deletion of the Managed Object in the database, and autoconfiguration by learning from the AMF. This is pegged with source OperatorConfig when operator configuration is the source, and with LearnedConfigAMF when NsAvailabilityUpdate leads to deletion of nssaiauth. |

| Type | Counter |

| Service Operation | nssaiauth |

| Dimension | Source |

Table 6-103 ocnssf_nssaiauth_updated

| Field | Details |

|---|---|

| Description | Count of nssaiauth updated in the database. Trigger Condition: Operator configuration of the Managed Object leading to an update of the Managed Object in the database, and autoconfiguration by learning from the AMF. This is pegged with source OperatorConfig when operator configuration is the source, and with LearnedConfigAMF when NsAvailabilityUpdate leads to an update of nssaiauth. Note: In the current scenario, autoconfiguration does not update the Managed Object in the database; it only deletes and creates Managed Objects. |

| Type | Counter |

| Service Operation | nssaiauth |

| Dimension | Source |
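
Because the created and deleted counters share the Source dimension, they can be compared per source. The following Python sketch is an illustration only. It assumes the per-source counter values have been collected into dictionaries, and the function name is hypothetical. Counter resets, for example after a pod restart, are not handled, so the result is an approximation.

```python
def net_objects_by_source(created: dict, deleted: dict) -> dict:
    """Return created minus deleted for every Source value seen in either input."""
    sources = set(created) | set(deleted)
    return {s: created.get(s, 0) - deleted.get(s, 0) for s in sorted(sources)}


# Illustrative values keyed by the Source values named in the tables above.
created = {"OperatorConfig": 10, "LearnedConfigAMF": 4}
deleted = {"OperatorConfig": 3}
print(net_objects_by_source(created, deleted))
```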

Table 6-104 ocnssf_nssaiauth_error

| Field | Details |

|---|---|

| Description | Count of failures on Managed Object processing. Trigger Condition: Error while creating, deleting, or updating a Managed Object. This is pegged when an error occurs while handling a Managed Object. Note: This must be pegged when ocnssf_nssaiauth_error_res_tx is pegged. |

| Type | Counter |

| Service Operation | nssaiauth |

| Dimension | |

Table 6-105 ocnssf_nsiprofile_req_rx

| Field | Details |

|---|---|

| Description | Count of nsiprofile requests received by NSConfig. Trigger Condition: Operator configuration of the Managed Object. This is pegged when an HTTP GET, POST, DELETE, or PUT request is received by NSSF. |

| Type | Counter |

| Service Operation | nsiprofile |

| Dimension | Method |

Table 6-106 ocnssf_amfset_req_rx

| Field | Details |

|---|---|

| Description | Count of amfset requests received by NSConfig. Trigger Condition: Operator configuration of the Managed Object. This is pegged when an HTTP GET, POST, DELETE, or PUT request is received by NSSF. |

| Type | Counter |

| Service Operation | amfset |

| Dimension | Method |

Table 6-107 ocnssf_amfset_res_tx

| Field | Details |

|---|---|

| Description | Count of successful responses sent by NSConfig for an amfset request. Trigger Condition: Operator configuration of the Managed Object. This is pegged when a 2xx response for an HTTP GET, POST, DELETE, or PUT request is sent by NSSF. |

| Type | Counter |

| Service Operation | amfset |

| Dimension | Method |

Table 6-108 ocnssf_amfset_error_res_tx

| Field | Details |

|---|---|

| Description | Count of error responses sent by NSConfig for an amfset request. Trigger Condition: Operator configuration of the Managed Object. This is pegged when a non-2xx response for an HTTP GET, POST, DELETE, or PUT request is sent by NSSF. |

| Type | Counter |

| Service Operation | amfset |

| Dimension | Method, Status |

Table 6-109 ocnssf_amfset_created

| Field | Details |

|---|---|

| Description | Count of amfset created in the database. Trigger Condition: Operator configuration of the Managed Object leading to storage of the Managed Object in the database, and autoconfiguration by learning from the AMF. This is pegged with source OperatorConfig when operator configuration is the source. |

| Type | Counter |

| Service Operation | amfset |

| Dimension | Source |

Table 6-110 ocnssf_amfset_deleted

| Field | Details |

|---|---|

| Description | Count of amfset deleted in the database. Trigger Condition: Operator configuration of the Managed Object leading to deletion of the Managed Object in the database, and autoconfiguration by learning from the AMF. This is pegged with source OperatorConfig when operator configuration is the source. |

| Type | Counter |

| Service Operation | amfset |

| Dimension | Source |

Table 6-111 ocnssf_amfset_updated

| Field | Details |

|---|---|

| Description | Count of amfset updated in the database. Trigger Condition: Operator configuration of the Managed Object leading to an update of the Managed Object in the database, and autoconfiguration by learning from the AMF. This is pegged with source OperatorConfig when operator configuration is the source. |

| Type | Counter |

| Service Operation | amfset |

| Dimension | Source |

Table 6-112 ocnssf_amfset_error

| Field | Details |

|---|---|

| Description | Count of failures on Managed Object processing. Trigger Condition: Error while creating, deleting, or updating a Managed Object. This is pegged when an error occurs while handling a Managed Object. |

| Type | Counter |

| Service Operation | amfset |

| Dimension | |

Table 6-113 ocnssf_amfresolution_req_rx

| Field | Details |

|---|---|

| Description | Count of amfresolution requests received by NSConfig. Trigger Condition: Operator configuration of the Managed Object. This is pegged when an HTTP GET, POST, DELETE, or PUT request is received by NSSF. |

| Type | Counter |

| Service Operation | amfresolution |

| Dimension | Method |

Table 6-114 ocnssf_amfresolution_res_tx

| Field | Details |

|---|---|

| Description | Count of successful responses sent by NSConfig for an amfresolution request. Trigger Condition: Operator configuration of the Managed Object. This is pegged when a 2xx response for an HTTP GET, POST, DELETE, or PUT request is sent by NSSF. |

| Type | Counter |

| Service Operation | amfresolution |

| Dimension | Method |

Table 6-115 ocnssf_amfresolution_error_res_tx

| Field | Details |

|---|---|

| Description | Count of error responses sent by NSConfig for an amfresolution request. Trigger Condition: Operator configuration of the Managed Object. This is pegged when a non-2xx response for an HTTP GET, POST, DELETE, or PUT request is sent by NSSF. |

| Type | Counter |

| Service Operation | amfresolution |

| Dimension | Method, Status |

Table 6-116 ocnssf_amfresolution_created

| Field | Details |

|---|---|

| Description | Count of amfresolution created in the database. Trigger Condition: Operator configuration of the Managed Object leading to storage of the Managed Object in the database, and autoconfiguration by learning from the AMF. This is pegged with source OperatorConfig when operator configuration is the source. |

| Type | Counter |

| Service Operation | amfresolution |

| Dimension | Source |

Table 6-117 ocnssf_amfresolution_deleted

| Field | Details |

|---|---|

| Description | Count of amfresolution deleted in the database. Trigger Condition: Operator configuration of the Managed Object leading to deletion of the Managed Object in the database, and autoconfiguration by learning from the AMF. This is pegged with source OperatorConfig when operator configuration is the source. |

| Type | Counter |

| Service Operation | amfresolution |

| Dimension | Source |

Table 6-118 ocnssf_amfresolution_updated

| Field | Details |

|---|---|

| Description | Count of amfresolution updated in the database. Trigger Condition: Operator configuration of the Managed Object leading to an update of the Managed Object in the database, and autoconfiguration by learning from the AMF. This is pegged with source OperatorConfig when operator configuration is the source. |

| Type | Counter |

| Service Operation | amfresolution |

| Dimension | Source |

Table 6-119 ocnssf_amfresolution_error

| Field | Details |

|---|---|

| Description | Count of failures on Managed Object processing. Trigger Condition: Error while creating, deleting, or updating a Managed Object. This is pegged when an error occurs while handling a Managed Object. |

| Type | Counter |

| Service Operation | amfresolution |

| Dimension | |

Table 6-120 ocnssf_timeprofile_req_rx

| Field | Details |

|---|---|

| Description | Count of timeprofile requests received by NSConfig. Trigger Condition: Operator configuration of the Managed Object. This is pegged when an HTTP GET, POST, DELETE, or PUT request is received by NSSF. |

| Type | Counter |

| Service Operation | timeprofile |

| Dimension | Method |

Table 6-121 ocnssf_timeprofile_res_tx

| Field | Details |

|---|---|

| Description | Count of successful responses sent by NSConfig for a timeprofile request. Trigger Condition: Operator configuration of the Managed Object. This is pegged when a 2xx response for an HTTP GET, POST, DELETE, or PUT request is sent by NSSF. |

| Type | Counter |

| Service Operation | timeprofile |

| Dimension | Method |

Table 6-122 ocnssf_timeprofile_error_res_tx

| Field | Details |

|---|---|

| Description | Count of error responses sent by NSConfig for a timeprofile request. Trigger Condition: Operator configuration of the Managed Object. This is pegged when a non-2xx response for an HTTP GET, POST, DELETE, or PUT request is sent by NSSF. |

| Type | Counter |

| Service Operation | timeprofile |

| Dimension | Method, Status |

Table 6-123 ocnssf_timeprofile_created

| Field | Details |

|---|---|

| Description | Count of timeprofile created in the database. Trigger Condition: Operator configuration of the Managed Object leading to storage of the Managed Object in the database, and autoconfiguration by learning from the AMF. This is pegged with source OperatorConfig when operator configuration is the source. |

| Type | Counter |

| Service Operation | timeprofile |

| Dimension | Source |

Table 6-124 ocnssf_timeprofile_deleted

| Field | Details |

|---|---|

| Description | Count of timeprofile deleted in the database. Trigger Condition: Operator configuration of the Managed Object leading to deletion of the Managed Object in the database, and autoconfiguration by learning from the AMF. This is pegged with source OperatorConfig when operator configuration is the source. |

| Type | Counter |

| Service Operation | timeprofile |

| Dimension | Source |

Table 6-125 ocnssf_timeprofile_updated

| Field | Details |

|---|---|

| Description | Count of timeprofile updated in the database. Trigger Condition: Operator configuration of the Managed Object leading to an update of the Managed Object in the database, and autoconfiguration by learning from the AMF. This is pegged with source OperatorConfig when operator configuration is the source. |

| Type | Counter |

| Service Operation | timeprofile |

| Dimension | Source |

Table 6-126 ocnssf_timeprofile_error

| Field | Details |

|---|---|

| Description | Count of failures on Managed Object processing. Trigger Condition: Error while creating, deleting, or updating a Managed Object. This is pegged when an error occurs while handling a Managed Object. |

| Type | Counter |

| Service Operation | timeprofile |

| Dimension | |

Table 6-127 ocnssf_defaultsnssai_req_rx

| Field | Details |

|---|---|

| Description | Count of defaultsnssai requests received by NSConfig. Trigger Condition: Operator configuration of the Managed Object. This is pegged when an HTTP GET, POST, DELETE, or PUT request is received by NSSF. |

| Type | Counter |

| Service Operation | defaultsnssai |

| Dimension | Method |

Table 6-128 ocnssf_defaultsnssai_res_tx

| Field | Details |

|---|---|

| Description | Count of successful responses sent by NSConfig for a defaultsnssai request. Trigger Condition: Operator configuration of the Managed Object. This is pegged when a 2xx response for an HTTP GET, POST, DELETE, or PUT request is sent by NSSF. |

| Type | Counter |

| Service Operation | defaultsnssai |

| Dimension | Method |

Table 6-129 ocnssf_defaultsnssai_error_res_tx

| Field | Details |

|---|---|

| Description | Count of error responses sent by NSConfig for a defaultsnssai request. Trigger Condition: Operator configuration of the Managed Object. This is pegged when a non-2xx response for an HTTP GET, POST, DELETE, or PUT request is sent by NSSF. |

| Type | Counter |

| Service Operation | defaultsnssai |

| Dimension | Method, Status |

Table 6-130 ocnssf_defaultsnssai_created

| Field | Details |

|---|---|

| Description | Count of defaultsnssai created in the database. Trigger Condition: Operator configuration of the Managed Object leading to storage of the Managed Object in the database, and autoconfiguration by learning from the AMF. This is pegged with source OperatorConfig when operator configuration is the source. |

| Type | Counter |

| Service Operation | defaultsnssai |

| Dimension | Source |

Table 6-131 ocnssf_defaultsnssai_deleted

| Field | Details |

|---|---|

| Description | Count of defaultsnssai deleted in the database. Trigger Condition: Operator configuration of the Managed Object leading to deletion of the Managed Object in the database, and autoconfiguration by learning from the AMF. This is pegged with source OperatorConfig when operator configuration is the source. |

| Type | Counter |

| Service Operation | defaultsnssai |

| Dimension | Source |

Table 6-132 ocnssf_defaultsnssai_updated

| Field | Details |

|---|---|

| Description | Count of defaultsnssai updated in the database. Trigger Condition: Operator configuration of the Managed Object leading to an update of the Managed Object in the database, and autoconfiguration by learning from the AMF. This is pegged with source OperatorConfig when operator configuration is the source. |

| Type | Counter |

| Service Operation | defaultsnssai |

| Dimension | Source |

Table 6-133 ocnssf_mappingofnssai_req_rx

| Field | Details |

|---|---|

| Description | Count of mappingofnssai requests received by NSConfig. Trigger Condition: Operator configuration of the Managed Object. This is pegged when an HTTP GET, POST, DELETE, or PUT request is received by NSSF. |

| Type | Counter |

| Service Operation | mappingofnssai |

| Dimension | Method |

Table 6-134 ocnssf_mappingofnssai_res_tx

| Field | Details |

|---|---|

| Description | Count of successful responses sent by NSConfig for a mappingofnssai request. Trigger Condition: Operator configuration of the Managed Object. This is pegged when a 2xx response for an HTTP GET, POST, DELETE, or PUT request is sent by NSSF. |

| Type | Counter |

| Service Operation | mappingofnssai |

| Dimension | Method |

Table 6-135 ocnssf_mappingofnssai_error_res_tx

| Field | Details |

|---|---|

| Description | Count of error responses sent by NSConfig for a mappingofnssai request. Trigger Condition: Operator configuration of the Managed Object. This is pegged when a non-2xx response for an HTTP GET, POST, DELETE, or PUT request is sent by NSSF. |

| Type | Counter |

| Service Operation | mappingofnssai |

| Dimension | Method, Status |

Table 6-136 ocnssf_mappingofnssai_created

| Field | Details |

|---|---|

| Description | Count of mappingofnssai created in the database. Trigger Condition: Operator configuration of the Managed Object leading to storage of the Managed Object in the database, and autoconfiguration by learning from the AMF. This is pegged with source OperatorConfig when operator configuration is the source. |

| Type | Counter |

| Service Operation | mappingofnssai |

| Dimension | Source |

Table 6-137 ocnssf_mappingofnssai_deleted

| Field | Details |

|---|---|

| Description | Count of mappingofnssai deleted in the database. Trigger Condition: Operator configuration of the Managed Object leading to deletion of the Managed Object in the database, and autoconfiguration by learning from the AMF. This is pegged with source OperatorConfig when operator configuration is the source. |

| Type | Counter |

| Service Operation | mappingofnssai |

| Dimension | Source |

Table 6-138 ocnssf_mappingofnssai_updated

| Field | Details |

|---|---|

| Description | Count of mappingofnssai updated in the database. Trigger Condition: Operator configuration of the Managed Object leading to an update of the Managed Object in the database, and autoconfiguration by learning from the AMF. This is pegged with source OperatorConfig when operator configuration is the source. |

| Type | Counter |

| Service Operation | mappingofnssai |

| Dimension | Source |

6.1.6 Perf-info metrics for Overload Control

This section provides details about Perf-info metrics for overload control.

Table 6-139 cgroup_cpu_nanoseconds

| Field | Details |

|---|---|

| Description | Reports the total CPU time (in nanoseconds) on each CPU core for all the tasks in the cgroup. |

| Type | Gauge |

| Dimension | NA |
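
Because cgroup_cpu_nanoseconds reports accumulated CPU time, a utilization figure needs two samples and the time between them. The following Python sketch shows that arithmetic. The sampling interval, the CPU limit, and the function name are assumptions made for the example and are not NSSF parameters.

```python
def cpu_utilization_pct(ns_start: int, ns_end: int,
                        interval_seconds: float,
                        cpu_limit_cores: float = 1.0) -> float:
    """Convert two accumulated CPU-time samples (nanoseconds) to a percentage."""
    used_seconds = (ns_end - ns_start) / 1e9  # nanoseconds to seconds
    return 100.0 * used_seconds / (interval_seconds * cpu_limit_cores)


# Example: 15 seconds of CPU time consumed in a 30-second window on 1 core.
print(cpu_utilization_pct(0, 15_000_000_000, interval_seconds=30))
```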

Table 6-140 cgroup_memory_bytes

| Field | Details |

|---|---|

| Description | Reports the memory usage. |

| Type | Gauge |

| Dimension | NA |

Table 6-141 load_level

| Field | Details |

|---|---|

| Description | Provides information about the overload manager load level. |

| Type | Gauge |

| Dimension | |

6.1.7 Egress Gateway Metrics

This section provides details about Egress Gateway metrics.

Table 6-142 oc_egressgateway_outgoing_tls_connections

| Field | Details |

|---|---|

| Description | Number of TLS connections received on the Egress Gateway and their negotiated TLS versions. The versions can be TLSv1.3 or TLSv1.2. |

| Type | Gauge |

| Service Operation | Egress Gateway |

| Dimension | |

Table 6-143 oc_fqdn_alternate_route_total

| Field | Details |

|---|---|

| Description | Tracks the number of registration, deregistration, and GET calls received for a given scheme and FQDN. Note: Registration does not reflect the number of active registrations. It captures the number of registration requests received. |

| Type | Counter |

| Service Operation | Egress Gateway |

| Dimension | |

Table 6-144 oc_dns_srv_lookup_total

| Field | Details |

|---|---|

| Description | Tracks the number of times a DNS SRV lookup was done for a given scheme and FQDN. |

| Type | Counter |

| Service Operation | Egress Gateway |

| Dimension | binding_value: <scheme>+<FQDN> |

Table 6-145 oc_alternate_route_resultset

| Field | Details |

|---|---|

| Description | Provides the number of alternate routes known for a given scheme and FQDN. Whenever a DNS SRV lookup or static configuration is done, this metric provides the number of known alternate routes for the given pair. For example, <"http", "abc.oracle.com">: 2. |

| Type | Gauge |

| Service Operation | Egress Gateway |

| Dimension | binding_value: <scheme>+<FQDN> |

Table 6-146 oc_configclient_request_total

| Field | Details |

|---|---|

| Description | This metric is pegged whenever a polling request is made from config client to the server for configuration updates. |

| Type | Counter |

| Service Operation | Egress Gateway |

| Dimension | Tags: releaseVersion, configVersion. |

Table 6-147 oc_configclient_response_total

| Field | Details |

|---|---|

| Description | This metric is pegged whenever a response is received from the server to client. |

| Type | Counter |

| Service Operation | Egress Gateway |

| Dimension | Tags: releaseVersion, configVersion, updated. |

Table 6-148 oc_egressgateway_peer_health_status

| Field | Details |

|---|---|

| Description | It defines Egress Gateway peer health status. This metric is set to 1 if a peer is unhealthy, and is reset to 0 when the peer becomes healthy again. |

| Type | Gauge |

| Service Operation | Egress Gateway |

| Dimension | |

Table 6-149 oc_egressgateway_peer_health_ping_request_total

| Field | Details |

|---|---|

| Description | It defines Egress Gateway peer health ping request. This metric is incremented every time Egress Gateway sends a health ping towards a peer. |

| Type | Counter |

| Service Operation | Egress Gateway |

| Dimension | |

Table 6-150 oc_egressgateway_peer_health_ping_response_total

| Field | Details |

|---|---|

| Description | Egress Gateway peer health ping response. This metric is incremented every time an Egress Gateway receives a health ping response (irrespective of success or failure) from a peer. |

| Type | Counter |

| Service Operation | Egress Gateway |

| Dimension | |

Table 6-151 oc_egressgateway_peer_health_status_transitions_total

| Field | Details |

|---|---|

| Description | It defines Egress Gateway peer health status transitions. Egress Gateway increments this metric every time a peer transitions from available to unavailable or unavailable to available. |

| Type | Counter |

| Service Operation | Egress Gateway |

| Dimension | |
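
The relationship between the peer health status gauge (1 for unhealthy, 0 for healthy) and the status transitions counter can be sketched as follows. This is a simplified model for illustration, not the Egress Gateway implementation. The class name is hypothetical, and the sketch assumes that a peer is treated as healthy until the first observation says otherwise.

```python
class PeerHealth:
    """Toy model of a per-peer status gauge and a shared transitions counter."""

    def __init__(self):
        self.status = {}            # peer name -> 0 (healthy) or 1 (unhealthy)
        self.transitions_total = 0  # incremented on every status change

    def observe(self, peer: str, healthy: bool) -> None:
        new_status = 0 if healthy else 1
        old_status = self.status.get(peer, 0)  # assumption: peers start healthy
        if new_status != old_status:
            self.transitions_total += 1
        self.status[peer] = new_status


tracker = PeerHealth()
for result in (True, False, False, True):
    tracker.observe("peer-1", result)
print(tracker.status["peer-1"], tracker.transitions_total)
```

In this model, repeated failures do not increase the transitions counter. Only the change from available to unavailable, or the reverse, does.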

Table 6-152 oc_egressgateway_peer_count

| Field | Details |

|---|---|

| Description | It defines Egress Gateway peer count. This metric is incremented every time for the peer count. |

| Type | Gauge |

| Service Operation | Egress Gateway |

| Dimension | peerset |

Table 6-153 oc_egressgateway_peer_available_count

| Field | Details |

|---|---|

| Description | It defines Egress Gateway available peer count. This metric is incremented every time for the available peer count. |

| Type | Gauge |

| Service Operation | Egress Gateway |

| Dimension | peerset |

Table 6-154 oc_egressgateway_user_agent_consumer

| Field | Details |

|---|---|

| Description | This metric is pegged whenever the feature is enabled and the User-Agent header is generated. |

| Type | Counter |

| Service Operation | Egress Gateway |

| Dimension | ConsumerNfInstanceId: ID of consumer NF (NSSF) as configured in Egress Gateway. |

6.1.8 Ingress Gateway Metrics

This section provides details about Ingress Gateway metrics.

Table 6-155 oc_ingressgateway_incoming_tls_connections

| Field | Details |

|---|---|

| Description | Number of TLS connections received on the Ingress Gateway and their negotiated TLS versions. The versions can be TLSv1.3 or TLSv1.2. |

| Type | Gauge |

| Service Operation | Ingress Gateway |

| Dimension | |

Table 6-156 oc_ingressgateway_pod_congestion_state

| Field | Details |

|---|---|

| Description | It is used to track congestion state of a pod. |

| Type | Gauge |

| Service Operation | Ingress Gateway |

| Dimension | level = 0,1,2 |

Table 6-157 oc_ingressgateway_pod_resource_stress

| Field | Details |

|---|---|

| Description | It tracks CPU, memory, and queue usage (as percentages) to determine the congestion state of the POD that is performing the calculations. |

| Type | Gauge |

| Service Operation | Ingress Gateway |

| Dimension | type = "PendingRequest","CPU","Memory" |

Table 6-158 oc_ingressgateway_pod_resource_state

| Field | Details |

|---|---|

| Description | It tracks the congestion state of individual resources, which is calculated based on their usage and the configured threshold. |

| Type | Gauge |

| Service Operation | Ingress Gateway |

| Dimension | type = "PendingRequest","CPU","Memory"; level = 0,1,2 |
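
The idea of deriving a per-resource level from usage and configured thresholds can be sketched as follows. This is a minimal illustration under stated assumptions: two thresholds per resource, levels 0, 1, and 2 ordered by increasing severity, and a pod state equal to the highest resource level. The threshold values and function names are hypothetical, and the actual Ingress Gateway algorithm may differ.

```python
def resource_level(usage_pct: float, lower: float, upper: float) -> int:
    """Map a usage percentage to level 0, 1, or 2 using two thresholds."""
    if usage_pct >= upper:
        return 2
    if usage_pct >= lower:
        return 1
    return 0


def pod_level(usage: dict, thresholds: dict) -> int:
    """Assumed rule: the pod level is the highest level of any resource."""
    return max(resource_level(usage[r], *thresholds[r]) for r in usage)


# Illustrative usage percentages and (lower, upper) thresholds per resource.
usage = {"CPU": 65, "Memory": 40, "PendingRequest": 10}
thresholds = {"CPU": (60, 80), "Memory": (70, 90), "PendingRequest": (50, 75)}
print(pod_level(usage, thresholds))
```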

Table 6-159 oc_ingressgateway_incoming_pod_connections_rejected_total

| Field | Details |

|---|---|

| Description | It tracks the number of connections dropped in the congested or Danger Of Congestion (DOC) state. |

| Type | Counter |

| Service Operation | Ingress Gateway |

| Dimension | NA |

6.2 NSSF KPIs

This section includes information about KPIs for Oracle Communications Cloud Native Core, Network Slice Selection Function.

The following are the NSSF KPIs:

6.2.1 NSSelection KPIs

Table 6-160 NSSF NSSelection Initial Registration Success Rate

| Field | Details |

|---|---|

| Description | Percentage of NSSelection Initial registration messages with success response |

| Expression | (sum(ocnssf_nsselection_success_tx_total{message_type="registration"}) / sum(ocnssf_nsselection_rx_total{message_type="registration"})) * 100 |

Table 6-161 NSSF NSSelection PDU establishment success rate

| Field | Details |

|---|---|

| Description | Percentage of NSSelection PDU establishment messages with success response |

| Expression | (sum(ocnssf_nsselection_success_tx_total{message_type="pdu_session"}) / sum(ocnssf_nsselection_rx_total{message_type="pdu_session"})) * 100 |

Table 6-162 NSSF NSSelection UE-Config Update success rate

| Field | Details |

|---|---|

| Description | Percentage of NSSelection UE-Config Update messages with success response |

| Expression | (sum(ocnssf_nsselection_success_tx_total{message_type="ue_config_update"}) / sum(ocnssf_nsselection_rx_total{message_type="ue_config_update"})) * 100 |
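
The three NSSelection success-rate KPIs share one pattern: the sum of success responses divided by the sum of received messages, multiplied by 100, for a given message_type. The following Python sketch builds that expression as a string and computes the same percentage from raw counts. The function names are hypothetical, and the guard that returns None when nothing was received is an assumption added for the example.

```python
def success_rate_expr(message_type: str) -> str:
    """Build the success-rate expression for one NSSelection message type."""
    selector = f'{{message_type="{message_type}"}}'
    return (
        f"(sum(ocnssf_nsselection_success_tx_total{selector})"
        f" / sum(ocnssf_nsselection_rx_total{selector})) * 100"
    )


def success_rate_pct(success_tx: float, rx: float):
    """Compute the same percentage from already-summed counter values."""
    return None if rx == 0 else 100.0 * success_tx / rx


print(success_rate_expr("pdu_session"))
print(success_rate_pct(45, 50))
```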

Table 6-163 4xx Responses (NSSelection)

| Field | Details |

|---|---|

| Description | Rate of 4xx response for NSSelection |

| Expression | sum(increase(oc_ingressgateway_http_responses{Status=~"4.*",Uri=~".*nnssf-nsselection.*",Method="GET"}[5m])) |

Table 6-164 5xx Responses (NSSelection)

| Field | Details |

|---|---|

| Description | Rate of 5xx response for NSSelection |

| Expression | sum(increase(oc_ingressgateway_http_responses{Status=~"5.*",Uri=~".*nnssf-nsselection.*",Method="GET"}[5m])) |

6.2.2 NSAvailability KPIs

Table 6-165 NSSF NSAvailability PUT success rate

| Field | Details |

|---|---|

| Description | Percentage of NSAvailability UPDATE PUT messages with success response |

| Expression | (sum(ocnssf_nssaiavailability_success_tx_total{message_type="availability_update",method="PUT"}) / sum(ocnssf_nssaiavailability_rx_total{message_type="availability_update",method="PUT"})) * 100 |

Table 6-166 NSSF NSAvailability PATCH success rate

| Field | Details |

|---|---|

| Description | Percentage of NSAvailability UPDATE PATCH messages with success response |

| Expression | (sum(ocnssf_nssaiavailability_success_tx_total{message_type="availability_update",method="PATCH"}) / sum(ocnssf_nssaiavailability_rx_total{message_type="availability_update",method="PATCH"})) * 100 |

Table 6-167 NSSF NSAvailability Delete success rate

| Field | Details |

|---|---|

| Description | Percentage of NSAvailability Delete messages with success response |

| Expression | (sum(ocnssf_nssaiavailability_success_tx_total{message_type="availability_update",method="DELETE"}) / sum(ocnssf_nssaiavailability_rx_total{message_type="availability_update",method="DELETE"})) * 100 |

Table 6-168 NSSF NSAvailability Subscribe success rate

| Field | Details |

|---|---|

| Description | Percentage of NSAvailability Subscribe messages with success response |

| Expression | (sum(ocnssf_nssaiavailability_success_tx_total{message_type="availability_subscribe",method="POST"}) / sum(ocnssf_nssaiavailability_rx_total{message_type="availability_subscribe",method="POST"})) * 100 |

Table 6-169 NSSF NSAvailability Unsubscribe success rate

| Field | Details |

|---|---|

| Description | Percentage of NSAvailability Unsubscribe messages with success response |

| Expression | (sum(ocnssf_nssaiavailability_success_tx_total{message_type="availability_subscribe",method="DELETE"}) / sum(ocnssf_nssaiavailability_rx_total{message_type="availability_subscribe",method="DELETE"})) * 100 |

Table 6-170 4xx Responses (NSAvailability)

| Field | Details |

|---|---|

| Description | Rate of 4xx response for NSAvailability |

| Expression | sum(increase(oc_ingressgateway_http_responses{Status=~"4.*",Uri=~".*nnssf-nsavailability.*",Method="GET"}[5m])) |

Table 6-171 5xx Responses (NSAvailability)

| Field | Details |

|---|---|

| Description | Rate of 5xx response for NSAvailability |

| Expression | sum(increase(oc_ingressgateway_http_responses{Status=~"5.*",Uri=~".*nnssf-nsavailability.*",Method="GET"}[5m])) |

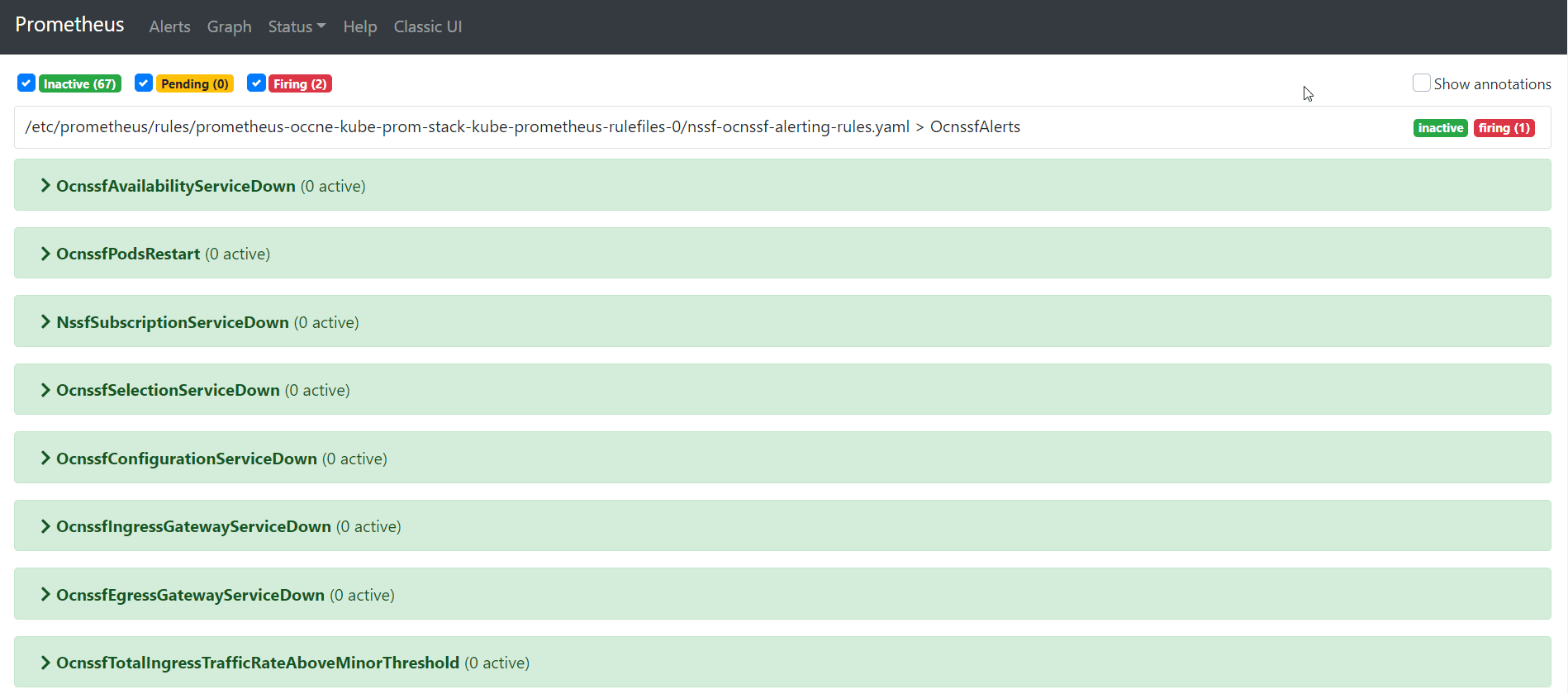

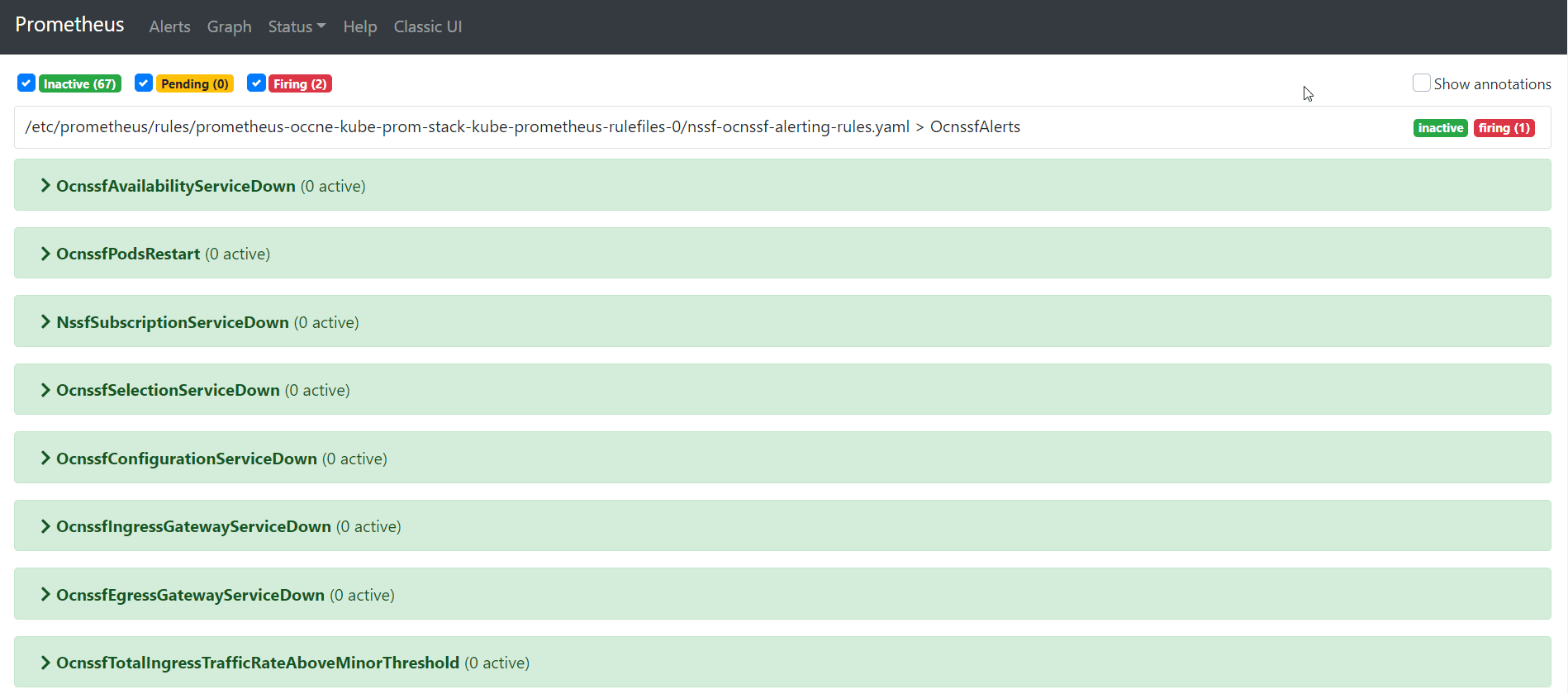

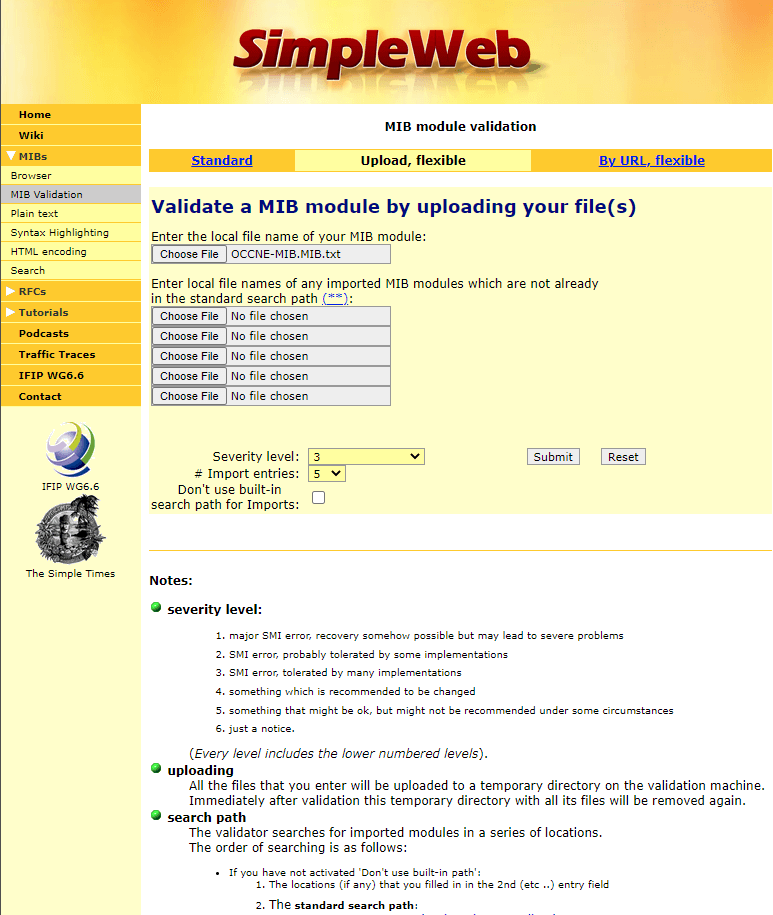

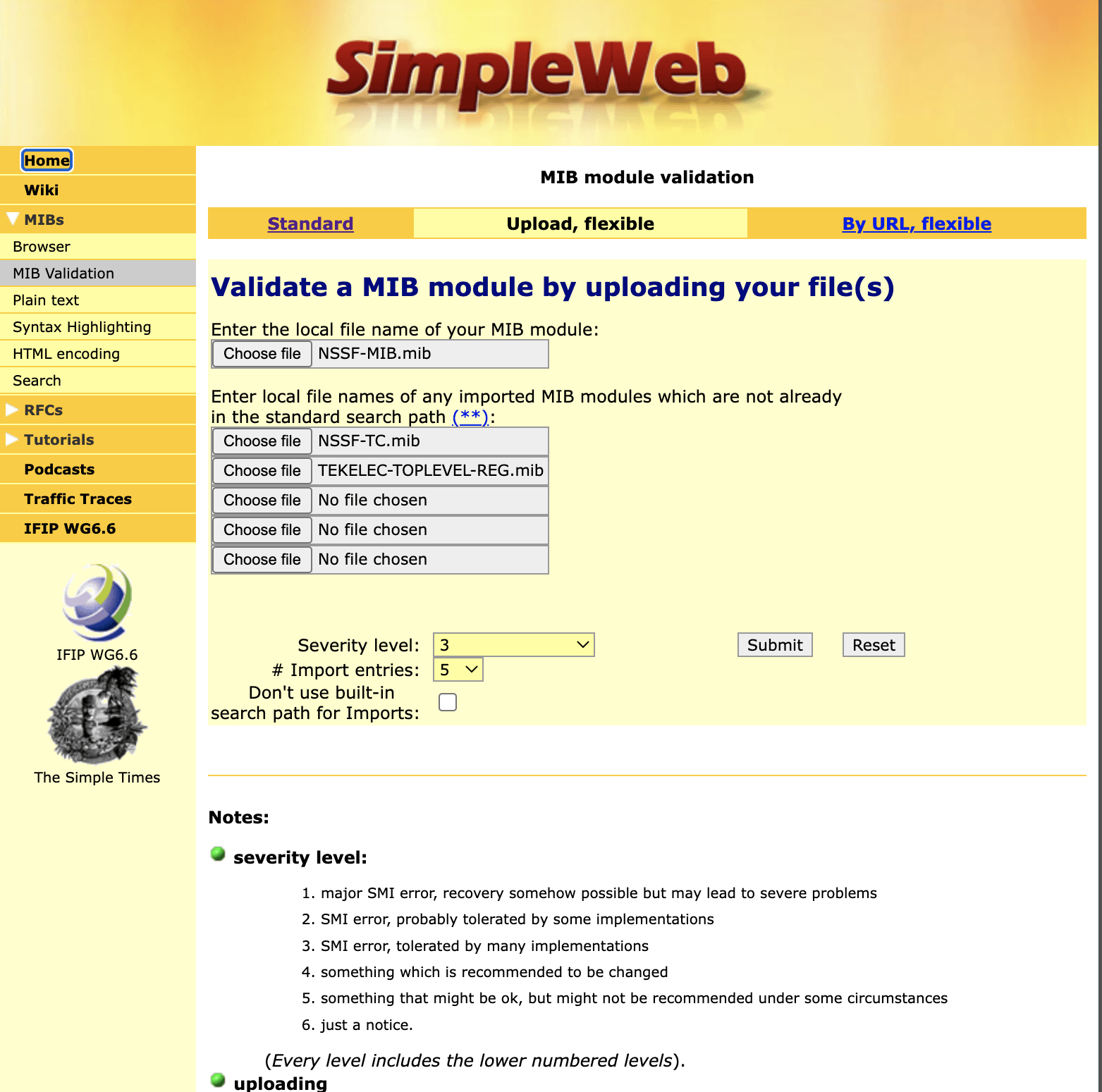

6.3 NSSF Alerts

This section includes information about alerts for Oracle Communications Network Slice Selection Function.

Note:

The performance and capacity of the NSSF system may vary based on the call model, feature or interface configuration, network conditions, and underlying CNE and hardware environment.

You can configure alerts in Prometheus and the ocnssf_alert_rules_25.1.100.yaml file.

The following table describes the various severity types of alerts generated by NSSF:

Table 6-173 Alerts Levels or Severity Types

| Alerts Levels / Severity Types | Definition |

|---|---|

| Critical | Indicates a severe issue that poses a significant risk to safety, security, or operational integrity. It requires immediate response to address the situation and prevent serious consequences. Raised for conditions that may affect the service of NSSF. |

| Major | Indicates a more significant issue that has an impact on operations or poses a moderate risk. It requires prompt attention and action to mitigate potential escalation. Raised for conditions that may affect the service of NSSF. |

| Minor | Indicates a situation that is low in severity and does not pose an immediate risk to safety, security, or operations. It requires attention but does not demand urgent action. Raised for conditions that may affect the service of NSSF. |

| Info or Warn (Informational) | Provides general information or updates that are not related to immediate risks or actions. These alerts are for awareness and do not typically require any specific response. WARN and INFO alerts may not impact the service of NSSF. |

Caution:

User, computer, application, and character encoding settings may cause an issue when copy-pasting commands or any content from PDF. The PDF reader version also affects the copy-pasting functionality. It is recommended to verify the pasted content when hyphens or any special characters are part of the copied content.

Note:

- kubectl commands might vary based on the platform deployment. Replace kubectl with the Kubernetes environment-specific command line tool to configure Kubernetes resources through the kube-api server. The instructions provided in this document are as per the Oracle Communications Cloud Native Environment (OCCNE) version of kube-api server.
- The alert file can be customized as required by the deployment environment. For example, namespace can be added as a filter criterion to the alert expression to filter alerts only for a specific namespace.
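As an illustration of the namespace customization, the sketch below contrasts an unfiltered expression with one scoped to a single namespace. It is not taken from the shipped alert file: the group and alert names are hypothetical, the namespace value `ocnssf` is a placeholder, and the label name `kubernetes_namespace` is assumed from the label referenced in the alert summaries. Your monitoring setup may expose the namespace under a different label.

```yaml
groups:
  - name: namespace-filter-example                 # hypothetical group name
    rules:
      # Without a namespace matcher, 'up' series from every scraped namespace are evaluated.
      - alert: ExampleServiceDownAnyNamespace      # hypothetical alert name
        expr: sum(up) == 0
      # With a namespace matcher, only series from the named namespace are evaluated.
      - alert: ExampleServiceDownOneNamespace      # hypothetical alert name
        expr: sum(up{kubernetes_namespace="ocnssf"}) == 0
```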

6.3.1 System Level Alerts

This section lists the system level alerts.

6.3.1.1 OcnssfNfStatusUnavailable

Table 6-174 OcnssfNfStatusUnavailable

| Field | Details |

|---|---|

| Description | 'OCNSSF services unavailable' |

| Summary | 'kubernetes_namespace: {{$labels.kubernetes_namespace}}, timestamp: {{ with query "time()" }}{{ . | first | value | humanizeTimestamp }}{{ end }} : All OCNSSF services are unavailable.' |

| Severity | Critical |

| Condition | All the NSSF services are unavailable, either because the NSSF is being deployed or purged. The NSSF services considered are nssfselection, nssfsubscription, nssfavailability, nssfconfiguration, appinfo, ingressgateway, and egressgateway. |

| OID | 1.3.6.1.4.1.323.5.3.40.1.2.9001 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use the similar metric as exposed by the monitoring system. |

| Recommended Actions | The alert is cleared automatically when the NSSF services start becoming available. Steps: |
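The fields in the table above map onto a Prometheus alerting rule roughly as sketched below. This is an approximation for orientation, not the rule shipped in the NSSF alert file: the group name, the namespace value `ocnssf`, and the `expr` are assumptions, and the real rule may restrict the selector to the seven listed services. The description, summary, severity, and OID are copied from the table.

```yaml
groups:
  - name: OcnssfAlerts                              # hypothetical group name
    rules:
      - alert: OcnssfNfStatusUnavailable
        # Assumed expression: fires when no 'up' series exists for the namespace,
        # or when every matching target reports 0.
        expr: |
          absent(up{kubernetes_namespace="ocnssf"})
          or
          (sum by (kubernetes_namespace) (up{kubernetes_namespace="ocnssf"}) == 0)
        labels:
          severity: critical
          oid: "1.3.6.1.4.1.323.5.3.40.1.2.9001"
        annotations:
          description: 'OCNSSF services unavailable'
          summary: 'kubernetes_namespace: {{$labels.kubernetes_namespace}}, timestamp: {{ with query "time()" }}{{ . | first | value | humanizeTimestamp }}{{ end }} : All OCNSSF services are unavailable.'
```

Grouping the sum by `kubernetes_namespace` keeps that label on the resulting series, so the `{{$labels.kubernetes_namespace}}` reference in the summary resolves in both branches of the expression.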

6.3.1.2 OcnssfPodsRestart

Table 6-175 OcnssfPodsRestart

| Field | Details |

|---|---|

| Description | 'Pod <Pod Name> has restarted.' |

| Summary | 'kubernetes_namespace: {{$labels.namespace}}, podname: {{$labels.pod}}, timestamp: {{ with query "time()" }}{{ . | first | value | humanizeTimestamp }}{{ end }} : A Pod has restarted' |

| Severity | Major |

| Condition | A pod belonging to any of the NSSF services has restarted. |

| OID | 1.3.6.1.4.1.323.5.3.40.1.2.9002 |

| Metric Used | 'kube_pod_container_status_restarts_total' Note: This is a Kubernetes metric. If this metric is not available, use the similar metric as exposed by the monitoring system. |

| Recommended Actions | The alert is cleared automatically if the specific pod is up. Steps: |
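A restart alert on this counter is commonly expressed as a positive increase over a recent window. The sketch below assumes a 5-minute window, a placeholder namespace `ocnssf`, a hypothetical group name, and the use of `{{$labels.pod}}` for the pod name in the description. The expression in the shipped alert file may differ.

```yaml
groups:
  - name: OcnssfAlerts                              # hypothetical group name
    rules:
      - alert: OcnssfPodsRestart
        # Assumed expression: the restart counter of a container grew within the last 5 minutes.
        expr: increase(kube_pod_container_status_restarts_total{namespace="ocnssf"}[5m]) > 0
        labels:
          severity: major
          oid: "1.3.6.1.4.1.323.5.3.40.1.2.9002"
        annotations:
          description: 'Pod {{$labels.pod}} has restarted.'
          summary: 'kubernetes_namespace: {{$labels.namespace}}, podname: {{$labels.pod}}, timestamp: {{ with query "time()" }}{{ . | first | value | humanizeTimestamp }}{{ end }} : A Pod has restarted'
```

With this form, the alert stops firing once the restart falls outside the window, which is consistent with the alert clearing automatically when the pod is up again.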

6.3.1.3 OcnssfSubscriptionServiceDown

Table 6-176 OcnssfSubscriptionServiceDown

| Field | Details |

|---|---|

| Description | 'OCNSSF Subscription service <ocnssf-nssubscription> is down' |

| Summary | 'kubernetes_namespace: {{$labels.kubernetes_namespace}}, timestamp: {{ with query "time()" }}{{ . | first | value | humanizeTimestamp }}{{ end }} : NssfSubscriptionServiceDown service down' |

| Severity | Critical |

| Condition | The NssfSubscription service is unavailable. |

| OID | 1.3.6.1.4.1.323.5.3.40.1.2.9003 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use the similar metric as exposed by the monitoring system. |

| Recommended Actions | The alert is cleared when the NssfSubscription service is available. Steps: |

6.3.1.4 OcnssfSelectionServiceDown

Table 6-177 OcnssfSelectionServiceDown

| Field | Details |

|---|---|

| Description | 'OCNSSF Selection service <ocnssf-nsselection> is down'. |

| Summary | 'kubernetes_namespace: {{$labels.kubernetes_namespace}}, timestamp: {{ with query "time()" }}{{ . | first | value | humanizeTimestamp }}{{ end }} : OcnssfSelectionServiceDown service down' |

| Severity | Critical |

| Condition | None of the pods of the NSSFSelection microservice is available. |

| OID | 1.3.6.1.4.1.323.5.3.40.1.2.9004 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use the similar metric as exposed by the monitoring system. |

| Recommended Actions | The alert is cleared when the ocnssf-nsselection service is available. Steps: |

6.3.1.5 OcnssfAvailabilityServiceDown

Table 6-178 OcnssfAvailabilityServiceDown

| Field | Details |

|---|---|

| Description | 'Ocnssf Availability service ocnssf-nsavailability is down' |

| Summary | 'kubernetes_namespace: {{$labels.kubernetes_namespace}}, timestamp: {{ with query "time()" }}{{ . | first | value | humanizeTimestamp }}{{ end }} : NssfAvailability service down' |

| Severity | Critical |

| Condition | None of the pods of the ocnssf-nsavailability microservice is available. |

| OID | 1.3.6.1.4.1.323.5.3.40.1.2.9005 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use the similar metric as exposed by the monitoring system. |

| Recommended Actions | The alert is cleared when the ocnssf-nsavailability service is available. Steps: |

6.3.1.6 OcnssfConfigurationServiceDown

Table 6-179 OcnssfConfigurationServiceDown

| Field | Details |

|---|---|

| Description | 'OCNSSF Config service nssfconfiguration is down' |

| Summary | 'kubernetes_namespace: {{$labels.kubernetes_namespace}}, timestamp: {{ with query "time()" }}{{ . | first | value | humanizeTimestamp }}{{ end }} : OcnssfConfigServiceDown service down' |

| Severity | Critical |

| Condition | None of the pods of the NssfConfiguration microservice is available. |

| OID | 1.3.6.1.4.1.323.5.3.40.1.2.9006 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use the similar metric as exposed by the monitoring system. |

| Recommended Actions | The alert is cleared when the nssfconfiguration service is available. Steps: |

6.3.1.7 OcnssfAppInfoServiceDown

Table 6-180 OcnssfAppInfoServiceDown

| Field | Details |

|---|---|

| Description | 'OCNSSF Appinfo service appinfo is down' |

| Summary | 'kubernetes_namespace: {{$labels.kubernetes_namespace}}, timestamp: {{ with query "time()" }}{{ . | first | value | humanizeTimestamp }}{{ end }} : Appinfo service down' |

| Severity | Critical |

| Condition | None of the pods of the App Info microservice is available. |

| OID | 1.3.6.1.4.1.323.5.3.40.1.2.9007 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use the similar metric as exposed by the monitoring system. |

| Recommended Actions | The alert is cleared when the app-info service is available. Steps: |

6.3.1.8 OcnssfIngressGatewayServiceDown

Table 6-181 OcnssfIngressGatewayServiceDown

| Field | Details |

|---|---|

| Description | 'Ocnssf Ingress-Gateway service ingressgateway is down' |

| Summary | 'kubernetes_namespace: {{$labels.kubernetes_namespace}}, timestamp: {{ with query "time()" }}{{ . | first | value | humanizeTimestamp }}{{ end }} : OcnssfIngressGwServiceDown service down' |

| Severity | Critical |